From Wikipedia, the free encyclopedia

Loop quantum gravity (LQG) is a theory that attempts to describe the quantum properties of the universe and gravity. It is also a theory of quantum space and quantum time because, according to general relativity, the geometry of spacetime is a manifestation of gravity. LQG is an attempt to merge and adapt standard quantum mechanics and standard general relativity. The main output of the theory is a physical picture of space where space is granular. The granularity is a direct consequence of the quantization. It has the same nature as the granularity of the photons in the quantum theory of electromagnetism or the discrete levels of the energy of the atoms. Here, it is space itself that is discrete. In other words, there is a minimum distance possible to travel through it.

More precisely, space can be viewed as an extremely fine fabric or network "woven" of finite loops. These networks of loops are called spin networks. The evolution of a spin network over time is called a spin foam. The predicted size of this structure is the Planck length, which is approximately 10⁻³⁵ meters. According to the theory, there is no meaning to distance at scales smaller than the Planck scale. Therefore, LQG predicts that not just matter, but also space itself has an atomic structure.
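The order of magnitude quoted for the Planck length can be checked with a short calculation. The sketch below assumes the standard definition of the Planck length as the square root of ħG/c³, which is not spelled out in the text above, and uses CODATA 2018 values typed in by hand; the function name is purely illustrative.

```python
import math

# CODATA 2018 values in SI units (entered by hand, an assumption of this sketch).
HBAR = 1.054571817e-34  # reduced Planck constant, J*s
G = 6.67430e-11         # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 299792458.0         # speed of light in vacuum, m/s


def planck_length(hbar=HBAR, g=G, c=C):
    """Return sqrt(hbar * G / c^3) in meters."""
    return math.sqrt(hbar * g / c**3)


print(f"Planck length ~ {planck_length():.3e} m")
```

The result is of order 10⁻³⁵ m, in line with the figure given above.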

Today LQG is a vast area of research, developing in several directions, which involves about 30 research groups worldwide.[1] They all share the basic physical assumptions and the mathematical description of quantum space. The full development of the theory is being pursued in two directions: the more traditional canonical loop quantum gravity, and the newer covariant loop quantum gravity, more commonly called spin foam theory.

Research into the physical consequences of the theory is proceeding in several directions. Among these, the most well-developed is the application of LQG to cosmology, called loop quantum cosmology (LQC). LQC applies LQG ideas to the study of the early universe and the physics of the Big Bang. Its most spectacular consequence is that the evolution of the universe can be continued beyond the Big Bang. The Big Bang appears thus to be replaced by a sort of cosmic Big Bounce.

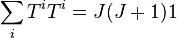

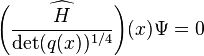

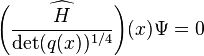

History

In 1986, Abhay Ashtekar reformulated Einstein's general relativity in a language closer to that of the rest of fundamental physics. Shortly after, Ted Jacobson and Lee Smolin realized that the formal equation of quantum gravity, called the Wheeler–DeWitt equation, admitted solutions labelled by loops when rewritten in the new Ashtekar variables, and Carlo Rovelli and Lee Smolin defined a nonperturbative and background-independent quantum theory of gravity in terms of these loop solutions. Jorge Pullin and Jerzy Lewandowski understood that the intersections of the loops are essential for the consistency of the theory, and that the theory should be formulated in terms of intersecting loops, or graphs.

In 1994, Rovelli and Smolin showed that the quantum operators of the theory associated with area and volume have a discrete spectrum. That is, geometry is quantized. This result defines an explicit basis of states of quantum geometry, which turned out to be labelled by Roger Penrose's spin networks, which are graphs labelled by spins.

The canonical version of the dynamics was put on firm ground by Thomas Thiemann, who defined an anomaly-free Hamiltonian operator, showing the existence of a mathematically consistent background-independent theory. The covariant, or spinfoam, version of the dynamics developed over several decades and crystallized in 2008 from the joint work of research groups in France, Canada, the UK, Poland, and Germany, leading to the definition of a family of transition amplitudes, which in the classical limit can be shown to be related to a family of truncations of general relativity.[2] The finiteness of these amplitudes was proven in 2011.[3][4] It requires the existence of a positive cosmological constant, and this is consistent with the observed acceleration in the expansion of the Universe.

General covariance and background independence

In theoretical physics, general covariance is the invariance of the form of physical laws under arbitrary differentiable coordinate transformations. The essential idea is that coordinates are only artifices used in describing nature, and hence should play no role in the formulation of fundamental physical laws. A more significant requirement is the principle of general relativity, which states that the laws of physics take the same form in all reference systems. This is a generalization of the principle of special relativity, which states that the laws of physics take the same form in all inertial frames.

In mathematics, a diffeomorphism is an isomorphism in the category of smooth manifolds. It is an invertible function that maps one differentiable manifold to another, such that both the function and its inverse are smooth. These are the defining symmetry transformations of general relativity, since the theory is formulated only in terms of a differentiable manifold.

In general relativity, general covariance is intimately related to "diffeomorphism invariance". This symmetry is one of the defining features of the theory. However, it is a common misunderstanding that "diffeomorphism invariance" refers to the invariance of the physical predictions of a theory under arbitrary coordinate transformations; this is untrue, and in fact every physical theory is invariant under coordinate transformations in this way. Diffeomorphisms, as mathematicians define them, correspond to something much more radical; intuitively, they can be envisaged as simultaneously dragging all the physical fields (including the gravitational field) over the bare differentiable manifold while staying in the same coordinate system.

Diffeomorphisms are the true symmetry transformations of general relativity, and come about from the assertion that the formulation of the theory is based on a bare differentiable manifold, but not on any prior geometry: the theory is background-independent (this is a profound shift, as all physical theories before general relativity had a prior geometry as part of their formulation). What is preserved under such transformations are the coincidences between the values the gravitational field takes at such and such a "place" and the values the matter fields take there. From these relationships one can form a notion of matter being located with respect to the gravitational field, or vice versa. This is what Einstein discovered: that physical entities are located with respect to one another only and not with respect to the spacetime manifold. As Carlo Rovelli puts it: "No more fields on spacetime: just fields on fields."[5] This is the true meaning of the saying "The stage disappears and becomes one of the actors"; space-time as a "container" over which physics takes place has no objective physical meaning and instead the gravitational interaction is represented as just one of the fields forming the world. This is known as the relationalist interpretation of space-time. The realization by Einstein that general relativity should be interpreted this way is the origin of his remark "Beyond my wildest expectations".

In LQG this aspect of general relativity is taken seriously and this symmetry is preserved by requiring that the physical states remain invariant under the generators of diffeomorphisms. The interpretation of this condition is well understood for purely spatial diffeomorphisms. However, the understanding of diffeomorphisms involving time (the Hamiltonian constraint) is more subtle because it is related to dynamics and the so-called "problem of time" in general relativity.[6] A generally accepted calculational framework to account for this constraint has yet to be found.[7][8] A plausible candidate for the quantum Hamiltonian constraint is the operator introduced by Thiemann.[9]

LQG is formally background independent. The equations of LQG are not embedded in, or dependent on, space and time (except for its invariant topology). Instead, they are expected to give rise to space and time at distances which are large compared to the Planck length. The issue of background independence in LQG still has some unresolved subtleties. For example, some derivations require a fixed choice of the topology, while any consistent quantum theory of gravity should include topology change as a dynamical process.

Constraints and their Poisson Bracket Algebra

The constraints of classical canonical general relativity

In the Hamiltonian formulation of ordinary classical mechanics the Poisson bracket is an important concept. A "canonical coordinate system" consists of canonical position and momentum variables that satisfy canonical Poisson-bracket relations,

where the Poisson bracket is given by

and

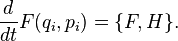

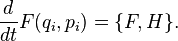

and  . With the use of Poisson brackets, the Hamilton's equations can be rewritten as,

. With the use of Poisson brackets, the Hamilton's equations can be rewritten as,

,

,

.

.

These equations describe a ``flow" or orbit in phase space generated by the Hamiltonian . Given any phase space function

. Given any phase space function  , we have

, we have
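The role of the Poisson bracket as the generator of a flow can be illustrated numerically. The following is a minimal sketch for a single degree of freedom, assuming the standard definition {f, g} = ∂f/∂q ∂g/∂p − ∂f/∂p ∂g/∂q and approximating the partial derivatives by central differences; the harmonic-oscillator Hamiltonian and all function names are hypothetical choices made only for this illustration.

```python
def poisson_bracket(f, g, q, p, h=1e-5):
    """Central-difference estimate of {f, g} = df/dq*dg/dp - df/dp*dg/dq at (q, p)."""
    df_dq = (f(q + h, p) - f(q - h, p)) / (2 * h)
    df_dp = (f(q, p + h) - f(q, p - h)) / (2 * h)
    dg_dq = (g(q + h, p) - g(q - h, p)) / (2 * h)
    dg_dp = (g(q, p + h) - g(q, p - h)) / (2 * h)
    return df_dq * dg_dp - df_dp * dg_dq


def position(q, p):
    return q


def momentum(q, p):
    return p


def hamiltonian(q, p):
    # Hypothetical example: unit-mass, unit-frequency harmonic oscillator.
    return 0.5 * p * p + 0.5 * q * q


def observable(q, p):
    # An arbitrary phase space function F(q, p) = q * p.
    return q * p


q0, p0 = 0.3, -1.2  # arbitrary phase space point

canonical = poisson_bracket(position, momentum, q0, p0)  # canonical relation {q, p} = 1
q_dot = poisson_bracket(position, hamiltonian, q0, p0)   # Hamilton's equation: dq/dt = p
p_dot = poisson_bracket(momentum, hamiltonian, q0, p0)   # Hamilton's equation: dp/dt = -q
f_dot = poisson_bracket(observable, hamiltonian, q0, p0) # dF/dt = {F, H}

print(canonical, q_dot, p_dot, f_dot)
```

For this Hamiltonian the chain rule gives dF/dt = q̇p + qṗ = p² − q², which the bracket {F, H} reproduces up to rounding error. Replacing the Hamiltonian by a constraint function gives, in the same way, the flow generated by that constraint.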

Let us consider constrained systems, of which General relativity is an example. In a similar way the Poisson bracket between a constraint and the phase space variables generates a flow along an orbit in (the unconstrained) phase space generated by the constraint. There are three types of constraints in Ashtekar's reformulation of classical general relativity:

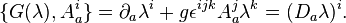

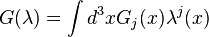

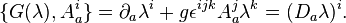

The Gauss constraints

.

.

This represents an infinite number of constraints one for each value of . These come about from re-expressing General relativity as an

. These come about from re-expressing General relativity as an  Yang–Mills type gauge theory (Yang–Mills is a generalization of Maxwell's theory where the gauge field transforms as a vector under Gauss transformations, that is, the Gauge field is of the form

Yang–Mills type gauge theory (Yang–Mills is a generalization of Maxwell's theory where the gauge field transforms as a vector under Gauss transformations, that is, the Gauge field is of the form  where

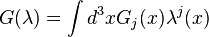

where  is an internal index. See Ashtekar variables). These infinite number of Gauss gauge constraints can be smeared with test fields with internal indices,

is an internal index. See Ashtekar variables). These infinite number of Gauss gauge constraints can be smeared with test fields with internal indices,  ,

,

.

.

which we demand vanish for any such function. These smeared constraints defined with respect to a suitable space of smearing functions give an equivalent description to the original constraints.

In fact Ashtekar's formulation may be thought of as ordinary Yang–Mills theory together with the following special constraints, resulting from diffeomorphism invariance, and a Hamiltonian that vanishes. The dynamics of such a theory are thus very different from that of ordinary Yang–Mills theory.

Yang–Mills theory together with the following special constraints, resulting from diffeomorphism invariance, and a Hamiltonian that vanishes. The dynamics of such a theory are thus very different from that of ordinary Yang–Mills theory.

can be smeared by the so-called shift functions to give an equivalent set of smeared spatial diffeomorphism constraints,

to give an equivalent set of smeared spatial diffeomorphism constraints,

.

.

These generate spatial diffeomorphisms along orbits defined by the shift function .

.

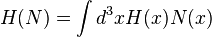

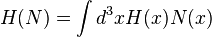

can be smeared by the so-called lapse functions to give an equivalent set of smeared Hamiltonian constraints,

to give an equivalent set of smeared Hamiltonian constraints,

.

.

These generate time diffeomorphisms along orbits defined by the lapse function .

.

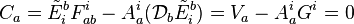

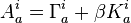

In the Ashtekar formulation the gauge field $A_a^i(x)$ is the configuration variable (the configuration variable being analogous to $q$ in ordinary mechanics) and its conjugate momentum is the (densitized) triad (electric field) $\tilde{E}_i^a(x)$. The constraints are certain functions of these phase space variables.

We consider the action of the constraints on arbitrary phase space functions. An important notion here is the Lie derivative, $\mathcal{L}_V$, which is basically a derivative operation that infinitesimally "shifts" functions along some orbit with tangent vector $V$.

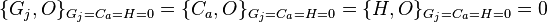

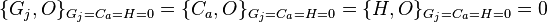

![\{ G (\lambda) , G (\mu) \} = G ([\lambda , \mu])](//upload.wikimedia.org/math/a/3/e/a3e4371d1cb39d73a064dd0e7bf19ad7.png)

where![[\lambda , \mu]^k = \lambda_i \mu_j \epsilon^{ijk}](//upload.wikimedia.org/math/1/b/9/1b9d3419ef766cad634cc57e44c81100.png) . And so we see that the Poisson bracket of two Gauss' law is equivalent to a single Gauss' law evaluated on the commutator of the smearings. The Poisson bracket amongst spatial diffeomorphisms constraints reads

. And so we see that the Poisson bracket of two Gauss' law is equivalent to a single Gauss' law evaluated on the commutator of the smearings. The Poisson bracket amongst spatial diffeomorphisms constraints reads

and we see that its effect is to "shift the smearing". The reason for this is that the smearing functions are not functions of the canonical variables and so the spatial diffeomorphism does not generate diffeomorphims on them. They do however generate diffeomorphims on everything else. This is equivalent to leaving everything else fixed while shifting the smearing .The action of the spatial diffeomorphism on the Gauss law is

,

,

again, it shifts the test field . The Gauss law has vanishing Poisson bracket with the Hamiltonian constraint. The spatial diffeomorphism constraint with a Hamiltonian gives a Hamiltonian with its smearing shifted,

. The Gauss law has vanishing Poisson bracket with the Hamiltonian constraint. The spatial diffeomorphism constraint with a Hamiltonian gives a Hamiltonian with its smearing shifted,

.

.

Finally, the poisson bracket of two Hamiltonians is a spatial diffeomorphism,

where is some phase space function. That is, it is a sum over infinitesimal spatial diffeomorphisms constraints where the coefficients of proportionality are not constants but have non-trivial phase space dependence.

is some phase space function. That is, it is a sum over infinitesimal spatial diffeomorphisms constraints where the coefficients of proportionality are not constants but have non-trivial phase space dependence.

A (Poisson bracket) Lie algebra, with constraints $C_I$, is of the form

$$\{ C_I , C_J \} = f_{IJ}^{\;\;K} C_K$$

where $f_{IJ}^{\;\;K}$ are constants (the so-called structure constants). The above Poisson bracket algebra for general relativity does not form a true Lie algebra, as we have structure functions rather than structure constants for the Poisson bracket between two Hamiltonians. This leads to difficulties.
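To make the notion of structure constants concrete, the sketch below takes the commutator of smearings quoted above, $[\lambda , \mu]^k = \lambda_i \mu_j \epsilon^{ijk}$, evaluated at a single point, and checks the two properties a genuine Lie bracket must have: antisymmetry and the Jacobi identity. The helper names and the integer test vectors are illustrative assumptions; nothing here models the Hamiltonian constraint, whose bracket involves structure functions instead.

```python
from itertools import product


def epsilon(i, j, k):
    """Levi-Civita symbol for indices in {0, 1, 2}."""
    return (i - j) * (j - k) * (k - i) // 2


def bracket(lam, mu):
    """Pointwise commutator [lam, mu]^k = lam_i mu_j eps^{ijk}."""
    return [
        sum(epsilon(i, j, k) * lam[i] * mu[j] for i, j in product(range(3), repeat=2))
        for k in range(3)
    ]


def add(*vectors):
    """Componentwise sum of several 3-vectors."""
    return [sum(components) for components in zip(*vectors)]


lam, mu, nu = [1, 2, 3], [-4, 0, 5], [2, -1, 7]  # arbitrary integer test values

antisymmetric = bracket(lam, mu) == [-c for c in bracket(mu, lam)]
jacobi = add(
    bracket(lam, bracket(mu, nu)),
    bracket(mu, bracket(nu, lam)),
    bracket(nu, bracket(lam, mu)),
) == [0, 0, 0]

print(antisymmetric, jacobi)
```

Because the $\epsilon^{ijk}$ are fixed numbers, both checks hold for any choice of test vectors. With structure functions that depend on the phase space point, this purely algebraic guarantee is no longer available, which is one way to see why the Hamiltonian constraint is harder to handle.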

Dirac observables are phase space functions, $O$, that Poisson commute with all the constraints when the constraint equations are imposed,

$$\{ G_j , O \}_{G_j = C_a = H = 0} = \{ C_a , O \}_{G_j = C_a = H = 0} = \{ H , O \}_{G_j = C_a = H = 0} = 0,$$

that is, they are quantities defined on the constraint surface that are invariant under the gauge transformations of the theory.

Then, solving only the constraint $G_j = 0$ and determining the Dirac observables with respect to it leads us back to the ADM phase space with constraints $H, C_a$. The dynamics of general relativity is generated by the constraints: it can be shown that six Einstein equations describing time evolution (really a gauge transformation) can be obtained by calculating the Poisson brackets of the three-metric and its conjugate momentum with a linear combination of the spatial diffeomorphism and Hamiltonian constraints. The vanishing of the constraints, giving the physical phase space, are the four other Einstein equations.[10]

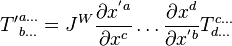

(a triad

(a triad  is simply three orthogonal vector fields labeled by

is simply three orthogonal vector fields labeled by  and the densitized triad is defined by

and the densitized triad is defined by  ) to encode information about the spatial metric,

) to encode information about the spatial metric, .

.

(where is the flat space metric, and the above equation expresses that

is the flat space metric, and the above equation expresses that  , when written in terms of the basis

, when written in terms of the basis  , is locally flat). (Formulating general relativity with triads instead of metrics was not new.) The densitized triads are not unique, and in fact one can perform a local in space rotation with respect to the internal indices

, is locally flat). (Formulating general relativity with triads instead of metrics was not new.) The densitized triads are not unique, and in fact one can perform a local in space rotation with respect to the internal indices  . The canonically conjugate variable is related to the extrinsic curvature by

. The canonically conjugate variable is related to the extrinsic curvature by  . But problems similar to using the metric formulation arise when one tries to quantize the theory. Ashtekar's new insight was to introduce a new configuration variable,

. But problems similar to using the metric formulation arise when one tries to quantize the theory. Ashtekar's new insight was to introduce a new configuration variable,

that behaves as a complex connection where

connection where  is related to the so-called spin connection via

is related to the so-called spin connection via  . Here

. Here  is called the chiral spin connection. It defines a covariant derivative

is called the chiral spin connection. It defines a covariant derivative  . It turns out that

. It turns out that  is the conjugate momentum of

is the conjugate momentum of  , and together these form Ashtekar's new variables.

, and together these form Ashtekar's new variables.

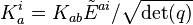

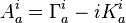

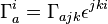

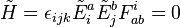

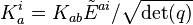

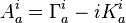

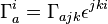

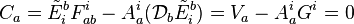

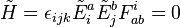

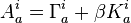

The expressions for the constraints in Ashtekar variables; the Gauss's law, the spatial diffeomorphism constraint and the (densitized) Hamiltonian constraint then read:

,

,

respectively, where is the field strength tensor of the connection

is the field strength tensor of the connection  and where

and where  is referred to as the vector constraint. The above-mentioned local in space rotational invariance is the original of the

is referred to as the vector constraint. The above-mentioned local in space rotational invariance is the original of the  gauge invariance here expressed by the Gauss law. Note that these constraints are polynomial in the fundamental variables, unlike as with the constraints in the metric formulation.

gauge invariance here expressed by the Gauss law. Note that these constraints are polynomial in the fundamental variables, unlike as with the constraints in the metric formulation.

This dramatic simplification seemed to open up the way to quantizing the constraints. (See the article Self-dual Palatini action for a derivation of Ashtekar's formulism).

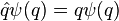

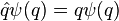

With Ashtekar's new variables, given the configuration variable $A_a^i$, it is natural to consider wavefunctions $\Psi(A_a^i)$. This is the connection representation. It is analogous to ordinary quantum mechanics with configuration variable $q$ and wavefunctions $\psi(q)$. The configuration variable gets promoted to a quantum operator via

$$\hat{A}_a^i \Psi(A) = A_a^i \Psi(A)$$

(analogous to $\hat{q} \psi(q) = q \psi(q)$) and the triads are (functional) derivatives,

$$\hat{\tilde{E}}_i^a \Psi(A) = -i \frac{\delta \Psi(A)}{\delta A_a^i}$$

(analogous to $\hat{p} \psi(q) = -i \hbar \, d\psi(q)/dq$). In passing over to the quantum theory the constraints become operators on a kinematic Hilbert space (the unconstrained $\mathrm{SU}(2)$ Yang–Mills Hilbert space). Note that different orderings of the $A$'s and $\tilde{E}$'s when replacing the $\tilde{E}$'s with derivatives give rise to different operators; the choice made is called the factor ordering and should be chosen via physical reasoning. Formally they read

$$\hat{G}_j \vert \psi \rangle = 0, \qquad \hat{C}_a \vert \psi \rangle = 0, \qquad \hat{\tilde{H}} \vert \psi \rangle = 0.$$

There are still problems in properly defining all these equations and solving them. For example, the Hamiltonian constraint Ashtekar worked with was the densitized version instead of the original Hamiltonian, that is, he worked with $\tilde{H} = \sqrt{\det(q)} H$. There were serious difficulties in promoting this quantity to a quantum operator. Moreover, although Ashtekar variables had the virtue of simplifying the Hamiltonian, they are complex. When one quantizes the theory, it is difficult to ensure that one recovers real general relativity as opposed to complex general relativity.

The classical Poisson bracket of the smeared Gauss law $G(\lambda)$ with the connections is

$$\{ G(\lambda) , A_a^i \} = (D_a \lambda)^i.$$

The quantum Gauss law reads

$$\hat{G}_j \Psi(A) = -i D_a \frac{\delta \Psi[A]}{\delta A_a^j} = 0.$$

If one smears the quantum Gauss law and studies its action on the quantum state, one finds that the action of the constraint on the quantum state is equivalent to shifting the argument of $\Psi$ by an infinitesimal (in the sense of the parameter $\lambda$ small) gauge transformation,

$$\Big[ 1 + \int d^3x \, \lambda^j(x) \hat{G}_j \Big] \Psi(A) = \Psi[A + D\lambda] = \Psi[A],$$

and the last identity comes from the fact that the constraint annihilates the state. So the constraint, as a quantum operator, is imposing the same symmetry that its vanishing imposed classically: it is telling us that the functions $\Psi[A]$ have to be gauge invariant functions of the connection. The same idea is true for the other constraints.

Therefore the two-step process in the classical theory of solving the constraints $C_I = 0$ (equivalent to solving the admissibility conditions for the initial data) and looking for the gauge orbits (solving the "evolution" equations) is replaced by a one-step process in the quantum theory, namely looking for solutions $\Psi$ of the quantum equations $\hat{C}_I \Psi = 0$. This is because it obviously solves the constraint at the quantum level and it simultaneously looks for states that are gauge invariant, because $\hat{C}_I$ is the quantum generator of gauge transformations (gauge invariant functions are constant along the gauge orbits and thus characterize them).[11] Recall that, at the classical level, solving the admissibility conditions and evolution equations was equivalent to solving all of Einstein's field equations; this underlines the central role of the quantum constraint equations in canonical quantum gravity.

We need the notion of a holonomy. A holonomy is a measure of how much the initial and final values of a spinor or vector differ after parallel transport around a closed loop; it is denoted

![h_\gamma [A]](//upload.wikimedia.org/math/8/c/4/8c455ecc38dceb85eec603fdffa9fb02.png) .

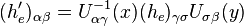

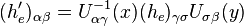

Knowledge of the holonomies is equivalent to knowledge of the connection, up to gauge equivalence. Holonomies can also be associated with an edge $e$ running from $x$ to $y$; under a Gauss law transformation these transform as

$$(h'_e)_{\alpha \beta} = U_{\alpha \gamma}^{-1} (x) (h_e)_{\gamma \sigma} U_{\sigma \beta} (y).$$

For a closed loop $x = y$. If we take the trace of this, that is, putting $\alpha = \beta$ and summing, we obtain

![(h'_e)_{\alpha \alpha} = U_{\alpha \gamma}^{-1} (x) (h_e)_{\gamma \sigma} U_{\sigma \alpha} (x) = [U_{\sigma \alpha} (x) U_{\alpha \gamma}^{-1} (x)] (h_e)_{\gamma \sigma} = \delta_{\sigma \gamma} (h_e)_{\gamma \sigma} = (h_e)_{\gamma \gamma}](//upload.wikimedia.org/math/7/3/f/73fa4a42427a01f547683510f9ff9a5b.png)

or

$$\operatorname{Tr} h'_\gamma = \operatorname{Tr} h_\gamma .$$
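The invariance of the trace can be checked numerically. The following is a minimal sketch, assuming the gauge group SU(2) and using a random matrix in place of an actual holonomy; the helper `random_su2` is a hypothetical name introduced only for this illustration.

```python
import numpy as np

def random_su2(rng):
    """Return a random SU(2) matrix built from a unit quaternion (a, b, c, d)."""
    a, b, c, d = rng.normal(size=4)
    norm = np.sqrt(a * a + b * b + c * c + d * d)
    a, b, c, d = a / norm, b / norm, c / norm, d / norm
    # General SU(2) element [[alpha, beta], [-conj(beta), conj(alpha)]]
    return np.array([[a + 1j * b, c + 1j * d],
                     [-c + 1j * d, a - 1j * b]])

rng = np.random.default_rng(0)
h = random_su2(rng)  # stands in for the holonomy around a closed loop
U = random_su2(rng)  # gauge transformation at the base point x

# For a closed loop both ends sit at x, so h' = U^{-1}(x) h U(x)
h_prime = np.linalg.inv(U) @ h @ U

trace_before = np.trace(h)
trace_after = np.trace(h_prime)
```

The two traces agree up to floating point error, which is the cyclic-trace argument above in numerical form.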

The trace of a holonomy around a closed loop is written

![W_\gamma [A]](//upload.wikimedia.org/math/2/6/0/260de4ee7f31312a45164ead4ad5067f.png)

and is called a Wilson loop. Thus Wilson loops are gauge invariant. The explicit form of the holonomy is

![h_\gamma [A] = \mathcal{P} \exp \Big\{-\int_{\gamma_0}^{\gamma_1} ds \dot{\gamma}^a A_a^i (\gamma (s)) T_i \Big\}](//upload.wikimedia.org/math/8/4/b/84b7a8d8576f2464937c0a0e8e76be57.png)

where $\gamma$ is the curve along which the holonomy is evaluated, $s$ is a parameter along the curve, $\mathcal{P}$ denotes path ordering, meaning that factors for smaller values of $s$ appear to the left, and $T_i$ are matrices that satisfy the $\mathfrak{su}(2)$ algebra

![[T^i ,T^j] = 2i \epsilon^{ijk} T^k](//upload.wikimedia.org/math/1/f/d/1fda1c2f92ac5bca8542433cbe18df11.png) .

The Pauli matrices satisfy the above relation. It turns out that there are infinitely many more examples of sets of matrices that satisfy these relations, where each set comprises $N \times N$ matrices with $N = 2, 3, 4, \dots$, and where none of these can be thought to 'decompose' into two or more examples of lower dimension. They are called different irreducible representations of the $\mathfrak{su}(2)$ algebra. The most fundamental representation is given by the Pauli matrices. The holonomy is labelled by a half integer $J = (N-1)/2$ according to the irreducible representation used.
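As a quick check of the algebra quoted above, the sketch below verifies that the Pauli matrices satisfy $[T^i, T^j] = 2i \epsilon^{ijk} T^k$ and computes $\sum_i T^i T^i$. The function name `levi_civita` and the variable names are illustrative choices, not part of the original text.

```python
import itertools
import numpy as np

# Pauli matrices, the 2x2 (spin 1/2) representation
sigma = [
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
]

def levi_civita(a, b, c):
    """Totally antisymmetric symbol for indices in {0, 1, 2}."""
    return (a - b) * (b - c) * (c - a) / 2

def satisfies_algebra(T):
    """Check [T^a, T^b] = 2i eps^{abc} T^c for all index pairs."""
    for a, b in itertools.product(range(3), repeat=2):
        lhs = T[a] @ T[b] - T[b] @ T[a]
        rhs = sum(2 * 1j * levi_civita(a, b, c) * T[c] for c in range(3))
        if not np.allclose(lhs, rhs):
            return False
    return True

algebra_ok = satisfies_algebra(sigma)

# Quadratic Casimir: with this normalization it equals 4 J (J + 1) times the identity
casimir = sum(s @ s for s in sigma)
```

For the Pauli matrices ($J = 1/2$) the Casimir comes out as $3 \cdot \mathbf{1}$, that is, $4J(J+1)$ in the normalization fixed by the factor $2i$ in the commutator.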

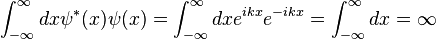

The use of Wilson loops explicitly solves the Gauss gauge constraint. To handle the spatial diffeomorphism constraint we need to go over to the loop representation. As Wilson loops form a basis we can formally expand any Gauss gauge invariant function as

![\Psi [A] = \sum_\gamma \Psi [\gamma] W_\gamma [A]](//upload.wikimedia.org/math/6/2/3/623d5ab0c7b3fa7bcd65272e87725574.png) .

This is called the loop transform. We can see the analogy with going to the momentum representation in quantum mechanics (see Position and momentum space). There one has a basis of states $\exp (ikx)$ labelled by a number $k$ and one expands

![\psi [x] = \int dk \psi (k) \exp (ikx)](//upload.wikimedia.org/math/e/3/f/e3f1bb436ad2a28765ea29676f582fd3.png)

and works with the coefficients of the expansion $\psi (k)$.

The inverse loop transform is defined by

![\Psi [\gamma] = \int [dA] \Psi [A] W_\gamma [A]](//upload.wikimedia.org/math/7/e/c/7ec857bcf774b3034a1116675e5aebf8.png) .

This defines the loop representation. Given an operator $\hat{O}$ in the connection representation,

![\Phi [A] = \hat{O} \Psi [A] \qquad Eq \; 1](//upload.wikimedia.org/math/7/3/1/73108ee00d119572456ab602cb7231d9.png) ,

one should define the corresponding operator $\hat{O}'$ on ![\Psi [\gamma]](//upload.wikimedia.org/math/1/8/1/181eaf0d09b9f4e57e737133901576bc.png) in the loop representation via

![\Phi [\gamma] = \hat{O}' \Psi [\gamma] \qquad Eq \; 2](//upload.wikimedia.org/math/b/0/f/b0fb2036bda953c1c609364216b108d4.png) ,

where ![\Phi [\gamma]](//upload.wikimedia.org/math/e/4/2/e4281a54d537dd7550fc2c87776f84cf.png) is defined by the usual inverse loop transform,

![\Phi [\gamma] = \int [dA] \Phi [A] W_\gamma [A] \qquad Eq \; 3.](//upload.wikimedia.org/math/e/2/2/e228cecd0419fe5b8852ff8158093273.png)

A transformation formula giving the action of the operator $\hat{O}'$ on ![\Psi [\gamma]](//upload.wikimedia.org/math/1/8/1/181eaf0d09b9f4e57e737133901576bc.png) in terms of the action of the operator $\hat{O}$ on ![\Psi [A]](//upload.wikimedia.org/math/4/1/a/41ad023d676a0ddb997cda57332f738f.png) is then obtained by equating the R.H.S. of Eq 2 with the R.H.S. of Eq 3 with Eq 1 substituted into Eq 3, namely

![\hat{O}' \Psi [\gamma] = \int [dA] W_\gamma [A] \hat{O} \Psi [A]](//upload.wikimedia.org/math/e/1/c/e1c47d6373d0ee5fb362c35f3b3cbe78.png) ,

or

![\hat{O}' \Psi [\gamma] = \int [dA] (\hat{O}^\dagger W_\gamma [A]) \Psi [A]](//upload.wikimedia.org/math/c/3/e/c3e8eb31a611891b010b5e138d374e56.png) ,

where by $\hat{O}^\dagger$ we mean the operator $\hat{O}$ but with the reverse factor ordering (remember from simple quantum mechanics that the product of operators is reversed under conjugation). We evaluate the action of this operator on the Wilson loop as a calculation in the connection representation and rearrange the result as a manipulation purely in terms of loops (one should remember that when considering the action on the Wilson loop one should choose the operator one wishes to transform with the opposite factor ordering to the one chosen for its action on wavefunctions ![\Psi [A]](//upload.wikimedia.org/math/4/1/a/41ad023d676a0ddb997cda57332f738f.png) ). This gives the physical meaning of the operator $\hat{O}'$. For example, if $\hat{O}^\dagger$ corresponded to a spatial diffeomorphism, then this can be thought of as keeping the connection field $A$ of ![W_\gamma [A]](//upload.wikimedia.org/math/2/6/0/260de4ee7f31312a45164ead4ad5067f.png) where it is while performing a spatial diffeomorphism on $\gamma$ instead. Therefore the meaning of $\hat{O}'$ is a spatial diffeomorphism on $\gamma$, the argument of ![\Psi [\gamma]](//upload.wikimedia.org/math/1/8/1/181eaf0d09b9f4e57e737133901576bc.png) .

In the loop representation we can then solve the spatial diffeomorphism constraint by considering functions of loops ![\Psi [\gamma]](//upload.wikimedia.org/math/1/8/1/181eaf0d09b9f4e57e737133901576bc.png) that are invariant under spatial diffeomorphisms of the loop $\gamma$. That is, we construct what mathematicians call knot invariants. This opened up an unexpected connection between knot theory and quantum gravity.

What about the Hamiltonian constraint? Let us go back to the connection representation. Any collection of non-intersecting Wilson loops satisfies Ashtekar's quantum Hamiltonian constraint. This can be seen from the following. With a particular ordering of terms and replacing $\tilde{E}^a_i$ by a derivative, the action of the quantum Hamiltonian constraint on a Wilson loop is

![\hat{\tilde{H}}^\dagger W_\gamma [A] = - \epsilon_{ijk} \hat{F}^k_{ab} {\delta \over \delta A_a^i} \; {\delta \over \delta A_b^j} W_\gamma [A]](//upload.wikimedia.org/math/7/6/4/764ad620b063f93e149c74fce6c3fe61.png) .

When a derivative is taken it brings down the tangent vector, $\dot{\gamma}^a$, of the loop, $\gamma$. So we have something like

$$\hat{F}^k_{ab} \, \dot{\gamma}^a \dot{\gamma}^b .$$

However, as $F^k_{ab}$ is anti-symmetric in the indices $a$ and $b$ this vanishes (this assumes that $\gamma$ is not discontinuous anywhere and so the tangent vector is unique). Now let us go back to the loop representation.
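The vanishing used above is purely algebraic: an anti-symmetric object contracted twice with the same vector gives zero. A minimal numerical sketch, with a random anti-symmetric matrix standing in for one internal component of $F_{ab}$ and a random vector standing in for the tangent $\dot{\gamma}^a$ (both are illustrative stand-ins, not actual field values):

```python
import numpy as np

rng = np.random.default_rng(1)

# Random anti-symmetric 3x3 matrix playing the role of F_{ab} for fixed internal index
M = rng.normal(size=(3, 3))
F = M - M.T

# Random vector playing the role of the unique tangent vector of a smooth loop
tangent = rng.normal(size=3)

# F_{ab} * tangent^a * tangent^b
contraction = tangent @ F @ tangent
```

If the loop had a kink or an intersection, two different tangent vectors would appear and the contraction `u @ F @ v` would in general be non-zero, which is why the argument needs a unique tangent.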

We consider wavefunctions ![\Psi [\gamma]](//upload.wikimedia.org/math/1/8/1/181eaf0d09b9f4e57e737133901576bc.png) that vanish if the loop has discontinuities and that are knot invariants. Such functions solve the Gauss law, the spatial diffeomorphism constraint and (formally) the Hamiltonian constraint. Thus we have identified an infinite set of exact (if only formal) solutions to all the equations of quantum general relativity![12] This generated a lot of interest in the approach and eventually led to LQG.

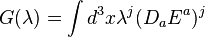

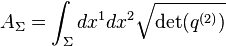

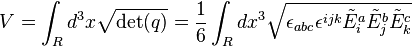

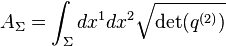

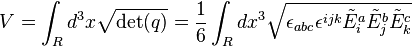

Choose coordinates so that the surface $\Sigma$ is characterized by $x^3 = 0$. The area of a small parallelogram of the surface $\Sigma$ is the product of the lengths of its sides times $\sin \theta$, where $\theta$ is the angle between the sides. Say one edge is given by the vector $\vec{u}$ and the other by $\vec{v}$; then,

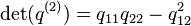

From this we get the area of the surface $\Sigma$ to be given by

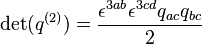

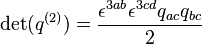

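where $\det (q^{(2)}) = q_{11} q_{22} - q_{12}^2$ is the determinant of the metric induced on $\Sigma$. This can be rewritten as

$$\det (q^{(2)}) = \frac{\epsilon^{3ab} \epsilon^{3cd} q_{ac} q_{bd}}{2} .$$

The standard formula for an inverse matrix is

$$q^{ab} = \frac{\epsilon^{acd} \epsilon^{bef} q_{ce} q_{df}}{2! \, \det (q)} .$$

Note the similarity between this and the expression for $\det (q^{(2)})$: setting $a = b = 3$ gives $\det (q^{(2)}) = \det (q) \, q^{33}$. But in Ashtekar variables we have $\tilde{E}^a_i \tilde{E}^{bi} = \det (q) \, q^{ab}$. Therefore

$$A_\Sigma = \int_\Sigma dx^1 dx^2 \sqrt{\tilde{E}^3_i \tilde{E}^{3i}} .$$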
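The step linking the induced two-metric to the inverse three-metric, $\det (q^{(2)}) = \det (q) \, q^{33}$, can be checked numerically. The sketch below assumes a randomly generated positive-definite 3×3 matrix as the spatial metric and takes the surface to be $x^3 = 0$; all variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)

# Random symmetric positive-definite matrix standing in for the 3-metric q_{ab}
B = rng.normal(size=(3, 3))
q = B @ B.T + 3 * np.eye(3)
q_inv = np.linalg.inv(q)

# Metric induced on the surface x^3 = 0: the upper-left 2x2 block
det_induced = np.linalg.det(q[:2, :2])

# Right-hand side: det(q) * q^{33} (index 3 is array index 2)
rhs = np.linalg.det(q) * q_inv[2, 2]
```

The two numbers coincide because $q^{33}$ is the cofactor of $q_{33}$ divided by $\det (q)$, and that cofactor is exactly the determinant of the induced two-metric.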

According to the rules of canonical quantization we should promote the triads $\tilde{E}^3_i$ to quantum operators,

$$\hat{\tilde{E}}^3_i \sim \frac{\delta}{\delta A^i_3} .$$

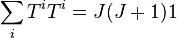

It turns out that the area $A_\Sigma$ can be promoted to a well defined quantum operator despite the fact that we are dealing with a product of two functional derivatives and, worse, we have a square root to contend with as well.[13] Putting $N = 2J + 1$, we talk of being in the $J$-th representation. We note that $\sum_i T^i T^i$ is proportional to $J (J+1) \mathbf{1}$. This quantity is important in the final formula for the area spectrum. We simply state the result below,

![\hat{A}_\Sigma W_\gamma [A] = 8 \pi \ell_{\text{Planck}}^2 \beta \sum_I \sqrt{j_I (j_I + 1)} W_\gamma [A]](//upload.wikimedia.org/math/7/e/2/7e22ed7d14e5f2cc91692b718665073f.png)

where the sum is over all edges $I$ of the Wilson loop that pierce the surface $\Sigma$.
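To make the area spectrum concrete, here is a minimal sketch that evaluates $8 \pi \ell_{\text{Planck}}^2 \beta \sum_I \sqrt{j_I (j_I + 1)}$ for a given list of spins. The function name `area_eigenvalue`, its parameters and the default values $\beta = 1$ and $\ell_{\text{Planck}} = 1$ are assumptions made for illustration; the text above does not fix a value of $\beta$.

```python
import math

def area_eigenvalue(spins, beta=1.0, planck_length=1.0):
    """Area eigenvalue for edges with the given spins piercing the surface.

    spins: iterable of non-negative half integers j_I (0, 0.5, 1, ...).
    beta and planck_length default to 1, so the result is in units of
    beta * planck_length**2 (an illustrative choice, not a physical value).
    """
    total = 0.0
    for j in spins:
        if j < 0 or (2 * j) != int(2 * j):
            raise ValueError(f"spin must be a non-negative half integer, got {j}")
        total += math.sqrt(j * (j + 1))
    return 8 * math.pi * planck_length ** 2 * beta * total

# Smallest non-zero contribution: a single spin-1/2 edge piercing the surface
single_edge = area_eigenvalue([0.5])

# Two edges, with spins 1/2 and 1, piercing the same surface
two_edges = area_eigenvalue([0.5, 1])
```

Since each piercing contributes a fixed positive amount, the spectrum is discrete and has a smallest non-zero value, set by a single spin-1/2 edge.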

The formula for the volume of a region $R$ is given by

$$V = \int_R d^3 x \sqrt{\det (q)} = \int_R d^3 x \sqrt{\frac{1}{3!} \left| \epsilon_{abc} \epsilon^{ijk} \tilde{E}^a_i \tilde{E}^b_j \tilde{E}^c_k \right|} .$$

The quantization of the volume proceeds the same way as with the area. Each time we take a derivative we bring down the tangent vector $\dot{\gamma}^a$, so when the volume operator acts on non-intersecting Wilson loops the result vanishes. Quantum states with non-zero volume must therefore involve intersections. Given that an anti-symmetric summation over three indices appears in the formula for the volume, we would need at least intersections with three non-coplanar lines. Actually it turns out that one needs at least four-valent vertices for the volume operator to be non-vanishing.

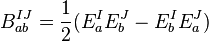

We now consider Wilson loops with intersections. We assume the real representation where the gauge group is $SU(2)$. Wilson loops are an over-complete basis as there are identities relating different Wilson loops. These come about from the fact that Wilson loops are based on matrices (the holonomy) and these matrices satisfy identities. Given any two $SU(2)$ matrices $\mathbb{A}$ and $\mathbb{B}$ it is easy to check that

$$\operatorname{Tr} (\mathbb{A}) \operatorname{Tr} (\mathbb{B}) = \operatorname{Tr} (\mathbb{A} \mathbb{B}) + \operatorname{Tr} (\mathbb{A} \mathbb{B}^{-1}) .$$

This implies that given two loops $\gamma$ and $\eta$ that intersect, we will have

![W_\gamma [A] W_\eta [A] = W_{\gamma \circ \eta} [A] + W_{\gamma \circ \eta^{-1}} [A]](//upload.wikimedia.org/math/0/7/d/07d9bf6730eb5272357a3281004b6db7.png)

where by $\eta^{-1}$ we mean the loop $\eta$ traversed in the opposite direction and $\gamma \circ \eta$ means the loop obtained by going around the loop $\gamma$ and then along $\eta$. See figure below. Given that the matrices are unitary one has that ![W_\gamma [A] = W_{\gamma^{-1}} [A]](//upload.wikimedia.org/math/f/1/2/f129434ef54907d369d7733d8156474a.png) . Also, given the cyclic property of the matrix traces (i.e. $\operatorname{Tr} (\mathbb{A} \mathbb{B}) = \operatorname{Tr} (\mathbb{B} \mathbb{A})$) one has that ![W_{\gamma \circ \eta} [A] = W_{\eta \circ \gamma} [A]](//upload.wikimedia.org/math/c/0/9/c09529622b4fad31a4e5517d0fbdc723.png) . These identities can be combined with each other into further identities of increasing complexity, adding more loops. These identities are the so-called Mandelstam identities. Spin networks are certain linear combinations of intersecting Wilson loops designed to address the over-completeness introduced by the Mandelstam identities (for trivalent intersections they eliminate the over-completeness entirely) and actually constitute a basis for all gauge invariant functions.
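The basic trace identity behind the Mandelstam identities, $\operatorname{Tr}(\mathbb{A}) \operatorname{Tr}(\mathbb{B}) = \operatorname{Tr}(\mathbb{A}\mathbb{B}) + \operatorname{Tr}(\mathbb{A}\mathbb{B}^{-1})$, is easy to test on random $SU(2)$ matrices. This is a minimal sketch; `random_su2` is a hypothetical helper defined here, and the random matrices stand in for the holonomies of two intersecting loops.

```python
import numpy as np

def random_su2(rng):
    """Return a random SU(2) matrix built from a unit quaternion (a, b, c, d)."""
    a, b, c, d = rng.normal(size=4)
    norm = np.sqrt(a * a + b * b + c * c + d * d)
    a, b, c, d = a / norm, b / norm, c / norm, d / norm
    return np.array([[a + 1j * b, c + 1j * d],
                     [-c + 1j * d, a - 1j * b]])

rng = np.random.default_rng(3)
A = random_su2(rng)  # stands in for the holonomy around loop gamma
B = random_su2(rng)  # stands in for the holonomy around loop eta

# W_gamma * W_eta
lhs = np.trace(A) * np.trace(B)

# W_{gamma o eta} + W_{gamma o eta^{-1}}
rhs = np.trace(A @ B) + np.trace(A @ np.linalg.inv(B))

# Unitarity with unit determinant also gives W_gamma = W_{gamma^{-1}}
trace_inverse_matches = np.isclose(np.trace(A), np.trace(np.linalg.inv(A)))
```

The identity follows from $\mathbb{B} + \mathbb{B}^{-1} = \operatorname{Tr}(\mathbb{B}) \, \mathbf{1}$, which holds for any 2×2 matrix of unit determinant.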

As mentioned above the holonomy tells you how to propagate test spin half particles. A spin network state assigns an amplitude to a set of spin half particles tracing out a path in space, merging and splitting. These are described by spin networks $\gamma$: the edges are labelled by spins, together with 'intertwiners' at the vertices, which are prescriptions for how to sum over the different ways the spins are rerouted. The sum over reroutings is chosen so as to make the form of the intertwiner invariant under Gauss gauge transformations.

With Ashtekar's variables one uses a complex connection, and so the relevant gauge group is actually $SL(2, \mathbb{C})$ and not $SU(2)$. As $SL(2, \mathbb{C})$ is non-compact it creates serious problems for the rigorous construction of the necessary mathematical machinery. The group $SU(2)$, on the other hand, is compact and the relevant constructions needed have been developed.

As mentioned above, because Ashtekar's variables are complex the result is complex general relativity. To recover the real theory one has to impose what are known as the reality conditions. These require that the densitized triad be real and that the real part of the Ashtekar connection equals the compatible spin connection (the compatibility condition being $\nabla_a e^I_b = 0$) determined by the densitized triad. The expression for the compatible connection $\Gamma^i_a$ is rather complicated, and as such a non-polynomial formula enters through the back door.

Before we state the next difficulty we should give a definition: a tensor density of weight $W$ transforms like an ordinary tensor, except that in addition the $W$-th power of the Jacobian,

$$J = \left| \frac{\partial x^a}{\partial x'^b} \right| ,$$

appears as a factor, i.e.

$$T'^{a \dots}_{b \dots} = J^W \frac{\partial x'^a}{\partial x^c} \dots \frac{\partial x^d}{\partial x'^b} \dots T^{c \dots}_{d \dots} .$$

It turns out that it is impossible, on general grounds, to construct a UV-finite, diffeomorphism non-violating operator corresponding to $\sqrt{\det (q)} \, H$. The reason is that the rescaled Hamiltonian constraint is a scalar density of weight two, while it can be shown that only scalar densities of weight one have a chance to result in a well defined operator. Thus, one is forced to work with the original unrescaled, density one-valued, Hamiltonian constraint. However, this is non-polynomial and the whole virtue of the complex variables is questioned. In fact, all the solutions constructed for Ashtekar's Hamiltonian constraint vanished only for finite regularization; this, however, violates spatial diffeomorphism invariance.

Without the implementation and solution of the Hamiltonian constraint no progress can be made and no reliable predictions are possible!

To overcome the first problem one works with the configuration variable

$$A^i_a = \Gamma^i_a + \beta K^i_a$$

where $\beta$ is real (as pointed out by Barbero, who introduced real variables some time after Ashtekar's variables[14][15]). The Gauss law and the spatial diffeomorphism constraints are the same. In real Ashtekar variables the Hamiltonian is

$$H = \frac{\epsilon_{ijk} F^k_{ab} \tilde{E}^a_i \tilde{E}^b_j}{\sqrt{\det (q)}} + 2 \frac{\beta^2 + 1}{\beta^2} \frac{(\tilde{E}^a_i \tilde{E}^b_j - \tilde{E}^a_j \tilde{E}^b_i)}{\sqrt{\det (q)}} (A^i_a - \Gamma^i_a) (A^j_b - \Gamma^j_b) = H_E + H' .$$

The complicated relationship between $\Gamma^i_a$ and the densitized triads causes serious problems upon quantization. It is with the choice $\beta = \pm i$ that the second, more complicated term is made to vanish. However, as mentioned above, $\Gamma^i_a$ reappears in the reality conditions. Also we still have the problem of the $1 / \sqrt{\det (q)}$ factor.

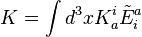

Thiemann was able to make it work for real $\beta$. First he could simplify the troublesome $1 / \sqrt{\det (q)}$ by using the identity (up to convention-dependent numerical factors)

$$\{ A^k_c , V \} = \frac{\epsilon_{abc} \epsilon^{ijk} \tilde{E}^a_i \tilde{E}^b_j}{\sqrt{\det (q)}}$$

where $V$ is the volume. The $A^k_c$ and $V$ can be promoted to well defined operators in the loop representation and the Poisson bracket is replaced by a commutator upon quantization; this takes care of the first term. It turns out that a similar trick can be used to treat the second term. One introduces the quantity

$$K = \int d^3 x \, K^i_a \tilde{E}^a_i$$

and notes that

$$K^i_a = \{ A^i_a , K \} .$$

We are then able to write

$$A^i_a - \Gamma^i_a = \beta K^i_a = \beta \{ A^i_a , K \} .$$

The reason the quantity $K$ is easier to work with at the time of quantization is that it can be written as

$$K = - \left\{ V , \int d^3 x \, H_E \right\}$$

where we have used that the integrated densitized trace of the extrinsic curvature, $K$, is the "time derivative of the volume".

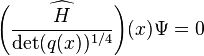

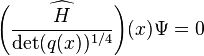

In the long history of canonical quantum gravity, formulating the Hamiltonian constraint as a quantum operator (Wheeler–DeWitt equation) in a mathematically rigorous manner has been a formidable problem. It was in the loop representation that a mathematically well defined Hamiltonian constraint was finally formulated in 1996.[9] We leave more details of its construction to the article Hamiltonian constraint of LQG. This, together with the quantum versions of the Gauss law and spatial diffeomorphism constraints written in the loop representation, are the central equations of LQG (modern canonical quantum general relativity).

Finding the states that are annihilated by these constraints (the physical states), and finding the corresponding physical inner product and observables, is the main goal of the technical side of LQG.

A very important aspect of the Hamiltonian operator is that it only acts at vertices (a consequence of this is that Thiemann's Hamiltonian operator, like Ashtekar's operator, annihilates non-intersecting loops, except that now this is not just formal and has rigorous mathematical meaning). More precisely, its action is non-zero only on vertices of valence three and greater, and results in a linear combination of new spin networks where the original graph has been modified by the addition of lines at each vertex together with a change in the labels of the adjacent links of the vertex.

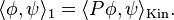

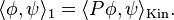

Before we move on to the constraints of LQG, let us consider certain cases. We start with a kinematic Hilbert space $\mathcal{H}_{\text{Kin}}$, which as such is equipped with an inner product, the kinematic inner product $\langle \phi , \psi \rangle_{\text{Kin}}$.

i) Say we have constraints $\hat{C}_I$ whose zero eigenvalues lie in their discrete spectrum. Solutions of the first constraint, $\hat{C}_1$, correspond to a subspace of the kinematic Hilbert space, $\mathcal{H}_1 \subset \mathcal{H}_{\text{Kin}}$. There will be a projection operator $P_1$ mapping $\mathcal{H}_{\text{Kin}}$ onto $\mathcal{H}_1$. The kinematic inner product structure is easily employed to provide the inner product structure after solving this first constraint; the new inner product $\langle \phi , \psi \rangle_1$ is simply

$$\langle \phi , \psi \rangle_1 = \langle P_1 \phi , P_1 \psi \rangle_{\text{Kin}} .$$

They are based on the same inner product and are states normalizable with respect to it.
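Case i) can be illustrated in finite dimensions. The sketch below assumes a 4-dimensional "kinematic" space with the standard inner product and a Hermitian constraint matrix whose spectrum is $\{0, 0, 1, 2\}$; the matrix, the tolerance and the function name `inner_1` are all illustrative choices rather than anything specific to LQG.

```python
import numpy as np

rng = np.random.default_rng(4)

# Random unitary basis change, so the constraint is not simply diagonal
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4)))

# Hermitian constraint with a two-dimensional zero eigenspace
C = Q @ np.diag([0.0, 0.0, 1.0, 2.0]) @ Q.conj().T

# Projector P_1 onto the solution space {psi : C psi = 0}
evals, evecs = np.linalg.eigh(C)
kernel = evecs[:, np.abs(evals) < 1e-10]
P = kernel @ kernel.conj().T

def inner_1(phi, psi):
    """New inner product <phi, psi>_1 = <P phi, P psi>_Kin."""
    return np.vdot(P @ phi, P @ psi)

phi = rng.normal(size=4) + 1j * rng.normal(size=4)
psi = rng.normal(size=4) + 1j * rng.normal(size=4)
value = inner_1(phi, psi)
```

Because `P` is an orthogonal projector, `inner_1(phi, psi)` equals `np.vdot(phi, P @ psi)`; this equivalent form is the one that survives when zero lies in the continuous spectrum and the first form diverges.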

ii) The zero point is not contained in the point spectrum of all the $\hat{C}_I$; there is then no non-trivial solution $\Psi \in \mathcal{H}_{\text{Kin}}$ to the system of quantum constraint equations $\hat{C}_I \Psi = 0$ for all $I$.

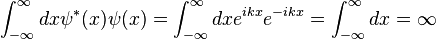

For example the zero eigenvalue of the operator

$$\hat{C} = \left( i \frac{d}{dx} - k \right)$$

on $L_2 (\mathbb{R} , dx)$ lies in the continuous spectrum $\operatorname{Spec} (\hat{C}) = \mathbb{R}$, but the formal "eigenstate" $\exp (-ikx)$ is not normalizable in the kinematic inner product,

$$\int_{-\infty}^{\infty} dx \, \psi^* (x) \psi (x) = \int_{-\infty}^{\infty} dx \, e^{ikx} e^{-ikx} = \int_{-\infty}^{\infty} dx = \infty ,$$

and so does not belong to the kinematic Hilbert space $\mathcal{H}_{\text{Kin}}$. In these cases we take a dense subset $\mathcal{S}$ of $\mathcal{H}_{\text{Kin}}$ (intuitively this means any point in $\mathcal{H}_{\text{Kin}}$ is either in $\mathcal{S}$ or arbitrarily close to a point in $\mathcal{S}$) with very good convergence properties and consider its dual space $\mathcal{S}'$ (intuitively these map elements of $\mathcal{S}$ onto finite complex numbers in a linear manner); then $\mathcal{S} \subset \mathcal{H}_{\text{Kin}} \subset \mathcal{S}'$ (as $\mathcal{S}'$ contains distributional functions). The constraint operator is then implemented on this larger dual space, which contains distributional functions, under the adjoint action on the operator. One looks for solutions on this larger space. This comes at the price that the solutions must be given a new Hilbert space inner product with respect to which they are normalizable (see article on rigged Hilbert space).

In this case we have a generalized projection operator on the new space of states. We cannot use the above formula for the new inner product as it diverges; instead the new inner product is given by a simple modification of the above,

$$\langle \phi , \psi \rangle_1 = \langle P \phi , \psi \rangle_{\text{Kin}} .$$

The generalized projector $P$ is known as a rigging map.

Let us move to LQG. Additional complications will arise from the fact that the constraint algebra is not a Lie algebra, due to the bracket between two Hamiltonian constraints.

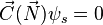

The Gauss law is solved by the use of spin network states. They provide a basis for the kinematic Hilbert space $\mathcal{H}_{\text{Kin}}$. The spatial diffeomorphism constraint has been solved. The induced inner product on $\mathcal{H}_{\text{Diff}}$ (we do not pursue the details) has a very simple description in terms of spin network states; given two spin networks $s$ and $s'$, with associated spin network states $\psi_s$ and $\psi_{s'}$, the inner product is 1 if $s$ and $s'$ are related to each other by a spatial diffeomorphism and zero otherwise.

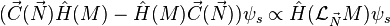

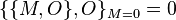

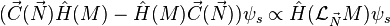

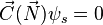

The Hamiltonian constraint maps diffeomorphism invariant states onto non-diffeomorphism invariant states and so does not preserve the diffeomorphism Hilbert space $\mathcal{H}_{\text{Diff}}$. This is an unavoidable consequence of the operator algebra, in particular the commutator:

![[ \hat{C} (\vec{N}) , \hat{H} (M) ] \propto \hat{H} (\mathcal{L}_\vec{N} M)](//upload.wikimedia.org/math/f/4/1/f412f91684221ebaa554edb7172feccb.png)

as can be seen by applying this to $\psi_s \in \mathcal{H}_{\text{Diff}}$,

$$\left( \hat{C} (\vec{N}) \hat{H} (M) - \hat{H} (M) \hat{C} (\vec{N}) \right) \psi_s \propto \hat{H} (\mathcal{L}_{\vec{N}} M) \psi_s ,$$

and using $\hat{C} (\vec{N}) \psi_s = 0$ to obtain

![\vec{C} (\vec{N}) [\hat{H} (M) \psi_s] \propto \hat{H} (\mathcal{L}_\vec{N} M) \psi_s \not= 0](//upload.wikimedia.org/math/c/c/2/cc21e995ed9cd9404312c3f9d7432374.png)

and so $\hat{H} (M) \psi_s$ is not in $\mathcal{H}_{\text{Diff}}$.

This means that you can't just solve the diffeomorphism constraint and then the Hamiltonian constraint. This problem can be circumvented by the introduction of the Master constraint; with its trivial operator algebra, one is then able in principle to construct the physical inner product from $\mathcal{H}_{\text{Diff}}$.

In physics, a spin foam is a topological structure made out of two-dimensional faces that represents one of the configurations that must be summed over to obtain a Feynman path integral (functional integration) description of quantum gravity. It is closely related to loop quantum gravity.

The Dirac delta function is a rather singular function on the real line, denoted $\delta (x)$, that is zero everywhere except at $x = 0$ but whose integral is finite and nonzero. It can be represented as a Fourier integral,

$$\delta (x) = \frac{1}{2 \pi} \int e^{ikx} \, dk .$$

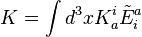

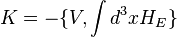

One can employ the idea of the delta function to impose the condition that the Hamiltonian constraint should vanish. It is obvious that

$$\prod_{x \in \Sigma} \delta (\hat{H} (x))$$

is non-zero only when $\hat{H} (x) = 0$ for all $x$ in $\Sigma$. Using this we can 'project' out solutions to the Hamiltonian constraint. In analogy with the Fourier integral given above, this (generalized) projector can formally be written as

![\int [d N] e^{i \int d^3 x N (x) \hat{H} (x)}](//upload.wikimedia.org/math/4/d/6/4d61d0aba3544b8c70473053064199dd.png) .

Interestingly, this is formally spatially diffeomorphism-invariant. As such it can be applied at the spatially diffeomorphism-invariant level. Using this the physical inner product is formally given by

![\biggl\langle \int [d N] e^{i \int d^3 x N (x) \hat{H} (x)} s_{\text{int}} s_{\text{fin}} \biggr\rangle_{\text{Diff}}](//upload.wikimedia.org/math/b/1/4/b14724b0ebb090211d4b45d970880822.png)

where $s_{\text{int}}$ is the initial spin network and $s_{\text{fin}}$ is the final spin network.
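The idea of projecting onto solutions by integrating $e^{i N \hat{H}}$ over $N$ can be illustrated with a finite-dimensional toy model. The sketch below is not the LQG Hamiltonian constraint: it assumes a single Hermitian matrix with integer eigenvalues, so that the integral over $N$ can be restricted to one period $[0, 2\pi)$ and evaluated exactly as a finite sum. All names (`spectrum`, `exp_iNC`, `num_samples`) are illustrative.

```python
import numpy as np

rng = np.random.default_rng(5)

# Toy constraint: Hermitian matrix with integer spectrum and a 2-dimensional kernel
spectrum = np.array([0, 1, 2, 0])
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4)))

def exp_iNC(N):
    """exp(i N C) for C = Q diag(spectrum) Q^dagger, via the spectral decomposition."""
    return Q @ np.diag(np.exp(1j * N * spectrum)) @ Q.conj().T

# Average exp(i N C) over equally spaced N in [0, 2*pi); for integer eigenvalues
# smaller than num_samples this reproduces (1 / 2 pi) * integral dN exp(i N C)
num_samples = 8
P_avg = sum(exp_iNC(2 * np.pi * k / num_samples) for k in range(num_samples)) / num_samples

# Exact projector onto the kernel of C, for comparison
P_exact = Q @ np.diag((spectrum == 0).astype(float)) @ Q.conj().T

# 'Physical' inner product of two toy states: <P phi, psi>
phi = rng.normal(size=4) + 1j * rng.normal(size=4)
psi = rng.normal(size=4) + 1j * rng.normal(size=4)
physical_inner = np.vdot(P_avg @ phi, psi)
```

Each non-zero eigenvalue contributes a phase that averages to zero, while the zero eigenvalue contributes 1, so only the solutions of the constraint survive. In the field-theoretic setting the sum over `k` becomes the functional integral over $N(x)$ and the statement is only formal.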

The exponential can be expanded

![\biggl\langle \int [d N] (1 + i \int d^3 x N (x) \hat{H} (x) + {i^2 \over 2!} [\int d^3 x N (x) \hat{H} (x)] [\int d^3 x' N (x') \hat{H} (x')] + \dots) s_{\text{int}}, s_{\text{fin}} \biggr\rangle_{\text{Diff}}](//upload.wikimedia.org/math/2/c/f/2cf177d032d9355f7a92af0d2def3d4d.png)

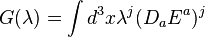

and each time a Hamiltonian operator acts it does so by adding a new edge at the vertex. The summation over different sequences of actions of $\hat{H}$ can be visualized as a summation over different histories of 'interaction vertices' in the 'time' evolution sending the initial spin network to the final spin network. This then naturally gives rise to the two-complex (a combinatorial set of faces that join along edges, which in turn join on vertices) underlying the spin foam description: as we evolve an initial spin network forward it sweeps out a surface, and the action of the Hamiltonian constraint operator is to produce a new planar surface starting at the vertex. We are able to use the action of the Hamiltonian constraint on the vertex of a spin network state to associate an amplitude to each 'interaction' (in analogy to Feynman diagrams). See figure below. This opens up a way of trying to directly link canonical LQG to a path integral description. Just as spin networks describe quantum space, each configuration contributing to these path integrals, or sums over histories, describes 'quantum space-time'. Because of their resemblance to soap foams and the way they are labeled, John Baez gave these 'quantum space-times' the name 'spin foams'.

There are, however, severe difficulties with this particular approach. For example, the Hamiltonian operator is not self-adjoint; in fact it is not even a normal operator (i.e. the operator does not commute with its adjoint), and so the spectral theorem cannot be used to define the exponential in general. The most serious problem is that the $\hat{H}(x)$'s are not mutually commuting; it can then be shown that the formal quantity ![\int [d N] e^{i \int d^3 x N (x) \hat{H} (x)}](//upload.wikimedia.org/math/4/d/6/4d61d0aba3544b8c70473053064199dd.png) cannot even define a (generalized) projector. The Master constraint (see below) does not suffer from these problems and as such offers a way of connecting the canonical theory to the path integral formulation.

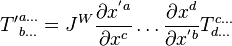

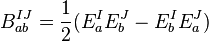

Surprisingly, it turns out that general relativity can be obtained from BF theory by imposing a constraint.[16] BF theory involves a field $B_{ab}^{IJ}$, and if one chooses the field $B$ to be the (anti-symmetric) product of two tetrads,

$$B_{ab}^{IJ} = \frac{1}{2} \left( E_a^I E_b^J - E_b^I E_a^J \right)$$

(tetrads are like triads but in four spacetime dimensions), one recovers general relativity. The condition that the $B$ field be given by the product of two tetrads is called the simplicity constraint. The spin foam dynamics of the topological field theory is well understood. Given the spin foam 'interaction' amplitudes for this simple theory, one then tries to implement the simplicity conditions to obtain a path integral for general relativity. The non-trivial task of constructing a spin foam model is then reduced to the question of how this simplicity constraint should be imposed in the quantum theory.

The first attempt at this was the famous Barrett–Crane model.[17] However, this model was shown to be problematic; for example, there did not seem to be enough degrees of freedom to ensure the correct classical limit.[18] It has been argued that the simplicity constraint was imposed too strongly at the quantum level and should only be imposed in the sense of expectation values, just as with the Lorenz gauge condition $\partial_\mu \hat{A}^\mu$ in the Gupta–Bleuler formalism of quantum electrodynamics. New models have now been put forward, sometimes motivated by imposing the simplicity conditions in a weaker sense.

Another difficulty here is that spin foams are defined on a discretization of spacetime. While this presents no problems for a topological field theory, as it has no local degrees of freedom, it presents problems for GR. This is known as the problem of triangulation dependence.

Much progress has been made with regard to this issue by Engle, Pereira, and Rovelli[19] and Freidel and Krasnov[20] in defining spin foam interaction amplitudes with much better behaviour.

An attempt to make contact between EPRL-FK spin foam and the canonical formulation of LQG has been made.[21]

In physics, the correspondence principle states that the behavior of systems described by the theory of quantum mechanics (or by the old quantum theory) reproduces classical physics in the limit of large quantum numbers. In other words, it says that for large orbits and for large energies, quantum calculations must agree with classical calculations.[23]

The principle was formulated by Niels Bohr in 1920,[24] though he had previously made use of it as early as 1913 in developing his model of the atom.[25]

There are two basic requirements in establishing the semi-classical limit of any quantum theory:

i) reproduction of the Poisson brackets (of the diffeomorphism constraints in the case of general relativity). This is extremely important because, as noted above, the Poisson bracket algebra formed between the (smeared) constraints themselves completely determines the classical theory. This is analogous to establishing Ehrenfest's theorem;

ii) the specification of a complete set of classical observables whose corresponding operators (see complete set of commuting observables for the quantum mechanical definition of a complete set of observables) when acted on by appropriate semi-classical states reproduce the same classical variables with small quantum corrections (a subtle point is that states that are semi-classical for one class of observables may not be semi-classical for a different class of observables[26]).

This may be easily done, for example, in ordinary quantum mechanics for a particle but in general relativity this becomes a highly non-trivial problem as we will see below.

Theorems establishing the uniqueness of the loop representation as defined by Ashtekar et al. (i.e. a certain concrete realization of a Hilbert space and associated operators reproducing the correct loop algebra - the realization that everybody was using) have been given by two groups (Lewandowski, Okolow, Sahlmann and Thiemann)[27] and (Christian Fleischhack).[28] Before this result was established it was not known whether there could be other examples of Hilbert spaces with operators invoking the same loop algebra, other realizations, not equivalent to the one that had been used so far. These uniqueness theorems imply no others exist and so if LQG does not have the correct semiclassical limit then this would mean the end of the loop representation of quantum gravity altogether.

Concerning requirement ii) above, one can consider so-called weave states. Ordinary measurements of geometric quantities are macroscopic, and Planckian discreteness is smoothed out. The fabric of a T-shirt is analogous. At a distance it is a smooth, curved two-dimensional surface. But on closer inspection we see that it is actually composed of thousands of one-dimensional linked threads. The image of space given in LQG is similar. Consider a very large spin network formed by a very large number of nodes and links, each of Planck scale. Probed at a macroscopic scale, it appears as a three-dimensional continuous metric geometry.

As far as is known, problem 4, that of having semi-classical machinery for non-graph-changing operators, is at the moment still out of reach.

To make contact with familiar low energy physics it is mandatory to develop approximation schemes both for the physical inner product and for Dirac observables.

The spin foam models, which have been intensively studied, can be viewed as avenues toward approximation schemes for the physical inner product.

Markopoulou et al. adopted the idea of noiseless subsystems in an attempt to solve the problem of the low energy limit in background independent quantum gravity theories.[32][33][34] The idea has even led to the intriguing possibility of matter of the standard model being identified with emergent degrees of freedom from some versions of LQG (see section below: LQG and related research programs).

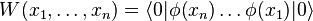

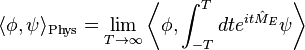

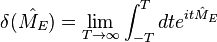

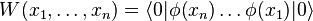

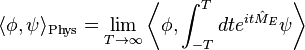

As Wightman emphasized in the 1950s, in Minkowski QFTs the $n$-point functions

$$W(x_1, \dots, x_n) = \langle 0 | \phi(x_n) \dots \phi(x_1) | 0 \rangle$$

completely determine the theory. In particular, one can calculate the scattering amplitudes from these quantities. As explained below in the section on the Background independent scattering amplitudes, in the background-independent context, the $n$-point functions refer to a state and in gravity that state can naturally encode information about a specific geometry which can then appear in the expressions of these quantities. To leading order, LQG calculations have been shown to agree in an appropriate sense with the $n$-point functions calculated in the effective low energy quantum general relativity.

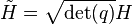

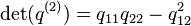

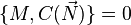

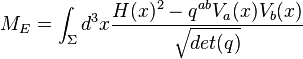

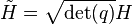

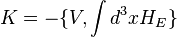

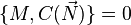

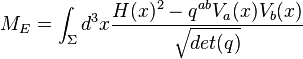

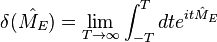

The Master constraint programme recasts the infinite number of Hamiltonian constraint equations $H(x) = 0$ ($x$ being a continuous index) in terms of a single Master constraint,

![M = \int d^3x {[H (x)]^2 \over \sqrt{\operatorname{det}(q(x))}}](//upload.wikimedia.org/math/e/2/9/e29a43ce890390922bdbd90b3ec6e797.png) ,

which involves the square of the constraints in question. Note that the $H(x)$ were infinitely many whereas the Master constraint is only one. It is clear that if $M$ vanishes then so do the infinitely many $H(x)$'s. Conversely, if all the $H(x)$'s vanish then so does $M$; therefore they are equivalent. The Master constraint $M$ involves an appropriate averaging over all space and so is invariant under spatial diffeomorphisms (it is invariant under spatial "shifts" as it is a summation over all such spatial "shifts" of a quantity that transforms as a scalar). Hence its Poisson bracket with the (smeared) spatial diffeomorphism constraint, $C(\vec{N})$, is simple:

$$\{ M, C(\vec{N}) \} = 0$$

(it is $su(2)$ invariant as well). Also, obviously as any quantity Poisson commutes with itself, and the Master constraint being a single constraint, it satisfies

$$\{ M, M \} = 0 .$$

We also have the usual algebra between spatial diffeomorphisms. This represents a dramatic simplification of the Poisson bracket structure, and raises new hope in understanding the dynamics and establishing the semi-classical limit.[35]
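The equivalence between the single condition $M = 0$ and the infinitely many conditions $H(x) = 0$ rests on the integrand of the Master constraint being non-negative. A short sketch of the argument, assuming a real, continuous $H(x)$ and a positive-definite spatial metric (so that $\sqrt{\det q} > 0$), with $\Sigma$ used here as a label for the spatial slice:

```latex
\begin{align}
M &= \int_{\Sigma} d^3x \, \frac{[H(x)]^2}{\sqrt{\det(q(x))}} \;\ge\; 0 , \\
H(x) = 0 \ \ \forall x \in \Sigma
  \quad &\Longrightarrow \quad M = 0 , \\
M = 0
  \quad &\Longrightarrow \quad \frac{[H(x)]^2}{\sqrt{\det(q(x))}} = 0 \ \ \forall x \in \Sigma
  \quad \Longrightarrow \quad H(x) = 0 \ \ \forall x \in \Sigma .
\end{align}
```

The last implication uses the fact that a continuous, non-negative integrand with vanishing integral must vanish at every point.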

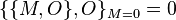

An initial objection to the use of the Master constraint was that at first sight it did not seem to encode information about the observables: because the Master constraint is quadratic in the constraint, when one computes its Poisson bracket with any quantity, the result is proportional to the constraint. It therefore always vanishes when the constraints are imposed and as such does not select out particular phase space functions. However, it was realized that the condition

$$\{ O, \{ O, M \} \}_{M = 0} = 0$$

is equivalent to $O$ being a Dirac observable. So the Master constraint does capture information about the observables. Because of its significance this is known as the Master equation.[35]
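Both halves of this argument can be made explicit with the product rule for Poisson brackets. The following is a sketch under the assumption that $O$ is a smooth phase space function; it shows why the single bracket carries no information on the constraint surface, while the double bracket $\{ O, \{ O, M \} \}$ does:

```latex
\begin{align}
\{ O, M \}
  &= \int d^3x \left( \frac{2 H(x) \, \{ O, H(x) \}}{\sqrt{\det(q(x))}}
     + [H(x)]^2 \left\{ O, \frac{1}{\sqrt{\det(q(x))}} \right\} \right) , \\
\{ O, M \} \big|_{H = 0} &= 0 , \\
\{ O, \{ O, M \} \} \big|_{H = 0}
  &= 2 \int d^3x \, \frac{\{ O, H(x) \}^2}{\sqrt{\det(q(x))}} \bigg|_{H = 0} .
\end{align}
```

Every term of the single bracket is proportional to $H(x)$, which is the objection described above. In the double bracket, the only term that survives at $H = 0$ is a non-negative integral, so it vanishes exactly when $\{ O, H(x) \}$ vanishes on the constraint surface for all $x$, which is the statement that $O$ is a (weak) Dirac observable.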

That the Master constraint Poisson algebra is an honest Lie algebra opens up the possibility of using a certain method, known as group averaging, in order to construct solutions of the infinite number of Hamiltonian constraints, a physical inner product thereon, and Dirac observables via what is known as refined algebraic quantization (RAQ).[36]

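Define the quantum Master constraint (regularisation issues aside) as

$$\hat{M} := \int d^3x \, \widehat{\left( \frac{H}{\det(q(x))^{1/4}} \right)}^{\dagger}(x) \, \widehat{\left( \frac{H}{\det(q(x))^{1/4}} \right)}(x) .$$

Obviously,

$$\widehat{\left( \frac{H}{\det(q(x))^{1/4}} \right)}(x) \, \Psi = 0$$

for all $x$ implies $\hat{M} \Psi = 0$. Conversely, if $\hat{M} \Psi = 0$ then

$$0 = \langle \Psi, \hat{M} \Psi \rangle = \int d^3x \, \left\| \widehat{\left( \frac{H}{\det(q(x))^{1/4}} \right)}(x) \, \Psi \right\|^2$$

implies

$$\widehat{\left( \frac{H}{\det(q(x))^{1/4}} \right)}(x) \, \Psi = 0 .$$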