From Wikipedia, the free encyclopedia

In particle physics, quantum electrodynamics (QED) is the relativistic quantum field theory of electrodynamics.

In essence, it describes how light and matter interact and is the first theory where full agreement between quantum mechanics and special relativity is achieved. QED mathematically describes all phenomena involving electrically charged particles interacting by means of exchange of photons and represents the quantum counterpart of classical electromagnetism giving a complete account of matter and light interaction.

In technical terms, QED can be described as a perturbation theory of the electromagnetic quantum vacuum. Richard Feynman called it "the jewel of physics" for its extremely accurate predictions of quantities like the anomalous magnetic moment of the electron and the Lamb shift of the energy levels of hydrogen.[1]:Ch1

History

The first formulation of a quantum theory describing radiation and matter interaction is attributed to British scientist Paul Dirac, who (during the 1920s) was first able to compute the coefficient of spontaneous emission of an atom.[2]

Dirac described the quantization of the electromagnetic field as an ensemble of harmonic oscillators with the introduction of the concept of creation and annihilation operators of particles. In the following years, with contributions from Wolfgang Pauli, Eugene Wigner, Pascual Jordan, Werner Heisenberg and an elegant formulation of quantum electrodynamics due to Enrico Fermi,[3] physicists came to believe that, in principle, it would be possible to perform any computation for any physical process involving photons and charged particles. However, further studies by Felix Bloch with Arnold Nordsieck,[4] and Victor Weisskopf,[5] in 1937 and 1939, revealed that such computations were reliable only at a first order of perturbation theory, a problem already pointed out by Robert Oppenheimer.[6] At higher orders in the series infinities emerged, making such computations meaningless and casting serious doubts on the internal consistency of the theory itself. With no solution for this problem known at the time, it appeared that a fundamental incompatibility existed between special relativity and quantum mechanics.

Difficulties with the theory increased through the end of the 1940s. Improvements in microwave technology made it possible to take more precise measurements of the shift of the levels of a hydrogen atom,[7] now known as the Lamb shift, and of the magnetic moment of the electron.[8] These experiments unequivocally exposed discrepancies which the theory was unable to explain.

A first indication of a possible way out was given by Hans Bethe. In 1947, while he was traveling by train to reach Schenectady from New York,[9] after giving a talk at the conference at Shelter Island on the subject, Bethe completed the first non-relativistic computation of the shift of the lines of the hydrogen atom as measured by Lamb and Retherford.[10] Despite the limitations of the computation, agreement was excellent. The idea was simply to attach infinities to corrections of mass and charge that were actually fixed to a finite value by experiments. In this way, the infinities get absorbed in those constants and yield a finite result in good agreement with experiments. This procedure was named renormalization.

Based on Bethe's intuition and fundamental papers on the subject by Sin-Itiro Tomonaga,[11] Julian Schwinger,[12][13] Richard Feynman[14][15][16] and Freeman Dyson,[17][18] it was finally possible to get fully covariant formulations that were finite at any order in a perturbation series of quantum electrodynamics. Sin-Itiro Tomonaga, Julian Schwinger and Richard Feynman were jointly awarded the Nobel Prize in Physics in 1965 for their work in this area.[19] Their contributions, and those of Freeman Dyson, were about covariant and gauge invariant formulations of quantum electrodynamics that allow computations of observables at any order of perturbation theory. Feynman's mathematical technique, based on his diagrams, initially seemed very different from the field-theoretic, operator-based approach of Schwinger and Tomonaga, but Freeman Dyson later showed that the two approaches were equivalent.[17] Renormalization, the need to attach a physical meaning to certain divergences appearing in the theory through integrals, has subsequently become one of the fundamental aspects of quantum field theory and has come to be seen as a criterion for a theory's general acceptability. Even though renormalization works very well in practice, Feynman was never entirely comfortable with its mathematical validity, even referring to renormalization as a "shell game" and "hocus pocus".[1]:128

QED has served as the model and template for all subsequent quantum field theories. One such subsequent theory is quantum chromodynamics, which began in the early 1960s and attained its present form in the 1975 work by H. David Politzer, Sidney Coleman, David Gross and Frank Wilczek. Building on the pioneering work of Schwinger, Gerald Guralnik, Dick Hagen, and Tom Kibble,[20][21] Peter Higgs, Jeffrey Goldstone, and others, Sheldon Glashow, Steven Weinberg and Abdus Salam independently showed how the weak nuclear force and quantum electrodynamics could be merged into a single electroweak force.

Feynman's view of quantum electrodynamics

Introduction

Near the end of his life, Richard P. Feynman gave a series of lectures on QED intended for the lay public. These lectures were transcribed and published as Feynman (1985), QED: The strange theory of light and matter,[1] a classic non-mathematical exposition of QED from the point of view articulated below.

The key components of Feynman's presentation of QED are three basic actions.[1]:85

- A photon goes from one place and time to another place and time.

- An electron goes from one place and time to another place and time.

- An electron emits or absorbs a photon at a certain place and time.

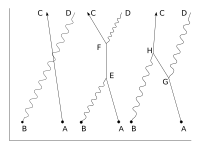

It is important not to over-interpret these diagrams. Nothing is implied about how a particle gets from one point to another. The diagrams do not imply that the particles are moving in straight or curved lines. They do not imply that the particles are moving with fixed speeds. The fact that the photon is often represented, by convention, by a wavy line and not a straight one does not imply that it is thought that it is more wavelike than is an electron. The images are just symbols to represent the actions above: photons and electrons do, somehow, move from point to point and electrons, somehow, emit and absorb photons. We do not know how these things happen, but the theory tells us about the probabilities of these things happening.

As well as the visual shorthand for the actions, Feynman introduces another kind of shorthand for the numerical quantities called probability amplitudes. The probability is the square of the absolute value of the total probability amplitude. If a photon moves from one place and time (in shorthand, A) to another place and time (in shorthand, B), the associated quantity is written in Feynman's shorthand as P(A to B). The similar quantity for an electron moving from C to D is written E(C to D). The quantity which tells us about the probability amplitude for the emission or absorption of a photon he calls 'j'. This is related to, but not the same as, the measured electron charge 'e'.[1]:91

QED is based on the assumption that complex interactions of many electrons and photons can be represented by fitting together a suitable collection of the above three building blocks, and then using the probability amplitudes to calculate the probability of any such complex interaction. It turns out that the basic idea of QED can be communicated while making the assumption that the square of the total of the probability amplitudes mentioned above (P(A to B), E(C to D) and 'j') acts just like our everyday probability. (A simplification made in Feynman's book.) Later on, this will be corrected to include specifically quantum-style mathematics, following Feynman.

The basic rules of probability amplitudes that will be used are that a) if an event can happen in a variety of different ways then its probability amplitude is the sum of the probability amplitudes of the possible ways and b) if a process involves a number of independent sub-processes then its probability amplitude is the product of the component probability amplitudes.[1]:93

Basic constructions

Suppose we start with one electron at a certain place and time (this place and time being given the arbitrary label A) and a photon at another place and time (given the label B). A typical question from a physical standpoint is: 'What is the probability of finding an electron at C (another place and a later time) and a photon at D (yet another place and time)?' The simplest process to achieve this end is for the electron to move from A to C (an elementary action) and for the photon to move from B to D (another elementary action). Knowing the probability amplitudes of each of these sub-processes, E(A to C) and P(B to D), we would expect to calculate the probability amplitude of both happening together by multiplying them, using rule b) above. This gives a simple estimated overall probability amplitude, which is squared to give an estimated probability.

But there are other ways in which the end result could come about. The electron might move to a place and time E where it absorbs the photon; then move on before emitting another photon at F; then move on to C where it is detected, while the new photon moves on to D. The probability of this complex process can again be calculated by knowing the probability amplitudes of each of the individual actions: three electron actions, two photon actions and two vertices (one emission and one absorption). We would expect to find the total probability amplitude by multiplying the probability amplitudes of each of the actions, for any chosen positions of E and F. We then, using rule a) above, have to add up all these probability amplitudes for all the alternatives for E and F. (This is not elementary in practice, and involves integration.)

But there is another possibility, which is that the electron first moves to G where it emits a photon which goes on to D, while the electron moves on to H, where it absorbs the first photon, before moving on to C. Again we can calculate the probability amplitude of these possibilities (for all points G and H). We then have a better estimate of the total probability amplitude by adding the probability amplitudes of these two possibilities to our original simple estimate. Incidentally, the name given to this process of a photon interacting with an electron in this way is Compton scattering.
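The bookkeeping in this construction can be sketched in a few lines of Python. This is only a toy illustration: the functions `E` and `P`, the integer "points" and the value passed for `j` are hypothetical stand-ins chosen for the example, not the real QED propagators or coupling, and the sum over a handful of points replaces what is in practice an integral over all of space-time.

```python
import cmath
import itertools

# Toy stand-ins (assumptions for illustration only): a "point" is an integer
# label, and each amplitude is a complex number that shrinks with separation.
def E(a, b):
    """Hypothetical electron amplitude to go from point a to point b."""
    d = abs(b - a)
    return cmath.exp(0.7j * d) / (1 + d)

def P(a, b):
    """Hypothetical photon amplitude to go from point a to point b."""
    d = abs(b - a)
    return cmath.exp(1.0j * d) / (1 + d)

def lowest_order(A, B, C, D):
    # Rule b): independent sub-processes multiply.
    return E(A, C) * P(B, D)

def absorb_then_emit(A, B, C, D, points, j):
    # Electron goes A -> e and absorbs the photon from B, travels e -> f,
    # emits a new photon that goes to D, then travels f -> C.
    total = 0j
    for e, f in itertools.product(points, repeat=2):
        # Rule a): alternatives (all choices of e and f) are added.
        total += E(A, e) * P(B, e) * j * E(e, f) * j * P(f, D) * E(f, C)
    return total

def emit_then_absorb(A, B, C, D, points, j):
    # Electron goes A -> g and emits a photon that goes to D, travels g -> h,
    # absorbs the original photon from B, then travels h -> C.
    total = 0j
    for g, h in itertools.product(points, repeat=2):
        total += E(A, g) * j * P(g, D) * E(g, h) * P(B, h) * j * E(h, C)
    return total

def total_amplitude(A, B, C, D, points, j):
    return (lowest_order(A, B, C, D)
            + absorb_then_emit(A, B, C, D, points, j)
            + emit_then_absorb(A, B, C, D, points, j))

def probability(amplitude):
    return abs(amplitude) ** 2

points = range(6)
estimate = probability(lowest_order(0, 1, 4, 3))
improved = probability(total_amplitude(0, 1, 4, 3, points, j=0.1))
print(estimate, improved)
```

Each correction carries two factors of `j` (one per vertex), which is why, for a small vertex amplitude, diagrams with more vertices contribute less.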

There are an infinite number of other intermediate processes in which more and more photons are absorbed and/or emitted. For each of these possibilities there is a Feynman diagram describing it. This implies a complex computation for the resulting probability amplitudes, but provided it is the case that the more complicated the diagram the less it contributes to the result, it is only a matter of time and effort to find as accurate an answer as one wants to the original question. This is the basic approach of QED. To calculate the probability of any interactive process between electrons and photons it is a matter of first noting, with Feynman diagrams, all the possible ways in which the process can be constructed from the three basic elements. Each diagram involves some calculation involving definite rules to find the associated probability amplitude.

That basic scaffolding remains when one moves to a quantum description but some conceptual changes are needed. One is that whereas we might expect in our everyday life that there would be some constraints on the points to which a particle can move, that is not true in full quantum electrodynamics. There is a possibility of an electron at A, or a photon at B, moving as a basic action to any other place and time in the universe. That includes places that could only be reached at speeds greater than that of light and also earlier times. (An electron moving backwards in time can be viewed as a positron moving forward in time.)[1]:89, 98–99

Probability amplitudes

Feynman replaces complex numbers with spinning arrows, which start at emission and end at detection of a particle. The sum of all resulting arrows gives a final arrow whose squared length is the total probability of the event. In this diagram, light emitted by the source S bounces off a few segments of the mirror (in blue) before reaching the detector at P. The sum over all paths must be taken into account. The graph below depicts the total time spent to traverse each of the paths above.

Quantum mechanics introduces an important change in the way probabilities are computed. Probabilities are still represented by the usual real numbers we use for probabilities in our everyday world, but they are computed as the squared absolute value of probability amplitudes, which are complex numbers.

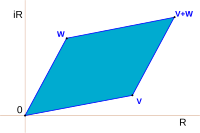
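The mirror picture described in the caption above can be imitated numerically. The following is a minimal sketch under assumed values: the positions of the source and detector, the mirror extent and the wavelength are all made up for the example, and each path is given a unit-length arrow whose angle is proportional to the path length (equivalently, the travel time).

```python
import cmath
import math

# Assumed geometry and wavelength (illustrative values, arbitrary length units).
WAVELENGTH = 0.05
SOURCE = (-1.0, 1.0)
DETECTOR = (1.0, 1.0)

def path_length(x):
    """Length of the path source -> mirror point (x, 0) -> detector."""
    return math.dist(SOURCE, (x, 0.0)) + math.dist((x, 0.0), DETECTOR)

def arrow(x):
    """Unit arrow that has turned once per wavelength travelled."""
    turns = path_length(x) / WAVELENGTH
    return cmath.exp(2j * math.pi * turns)

def summed_arrow(x_start, x_stop, n=1000):
    """Add the arrows head to tail for n mirror points in [x_start, x_stop]."""
    dx = (x_stop - x_start) / n
    return sum(arrow(x_start + (i + 0.5) * dx) for i in range(n))

left = summed_arrow(-3.0, -1.0)    # one edge of the mirror
centre = summed_arrow(-1.0, 1.0)   # region around the shortest path
right = summed_arrow(1.0, 3.0)     # the other edge

print(abs(left), abs(centre), abs(right))
```

Near the middle of the mirror the path length changes slowly, so neighbouring arrows point in nearly the same direction and add up; near the edges the arrows turn quickly from one point to the next and largely cancel.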

Feynman avoids exposing the reader to the mathematics of complex numbers by using a simple but accurate representation of them as arrows on a piece of paper or screen. (These must not be confused with the arrows of Feynman diagrams which are actually simplified representations in two dimensions of a relationship between points in three dimensions of space and one of time.) The amplitude arrows are fundamental to the description of the world given by quantum theory. No satisfactory reason has been given for why they are needed. But pragmatically we have to accept that they are an essential part of our description of all quantum phenomena. They are related to our everyday ideas of probability by the simple rule that the probability of an event is the square of the length of the corresponding amplitude arrow. So, for a given process, if two probability amplitudes, v and w, are involved, the probability of the process will be given either by

$$P = |v + w|^2$$

or by

$$P = |v\,w|^2,$$

depending on whether the amplitudes are to be added (rule a) or multiplied (rule b).

Addition and multiplication are familiar operations in the theory of complex numbers and are given in the figures. The sum is found as follows. Let the start of the second arrow be at the end of the first. The sum is then a third arrow that goes directly from the start of the first to the end of the second. The product of two arrows is an arrow whose length is the product of the two lengths. The direction of the product is found by adding the angles that each of the two have been turned through relative to a reference direction: that gives the angle that the product is turned relative to the reference direction.
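The arrow rules just described are ordinary complex-number arithmetic, which can be checked with Python's standard `cmath` module. The helper names `arrow` and `probability` and the particular lengths and angles are chosen for this sketch only.

```python
import cmath
import math

def arrow(length, angle_deg):
    """Complex number for an arrow of given length and angle from the reference direction."""
    return cmath.rect(length, math.radians(angle_deg))

def probability(amplitude):
    """Square of the length of the amplitude arrow."""
    return abs(amplitude) ** 2

v = arrow(0.5, 30)
w = arrow(0.4, 60)

total = v + w      # head-to-tail addition of the two arrows
product = v * w    # lengths multiply, angles add

print(abs(product), math.degrees(cmath.phase(product)))
print(probability(total), probability(product))

# Two equal arrows pointing in opposite directions cancel completely.
cancelled = arrow(1.0, 0) + arrow(1.0, 180)
print(abs(cancelled))
```

The last lines show why amplitudes differ from everyday probabilities: two alternatives can add up to less than either one alone.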

That change, from probabilities to probability amplitudes, complicates the mathematics without changing the basic approach. But that change is still not quite enough because it fails to take into account the fact that both photons and electrons can be polarized, which is to say that their orientations in space and time have to be taken into account. Therefore P(A to B) actually consists of 16 complex numbers, or probability amplitude arrows.[1]:120–121 There are also some minor changes to do with the quantity "j", which may have to be rotated by a multiple of 90° for some polarizations, which is only of interest for the detailed bookkeeping.

Associated with the fact that the electron can be polarized is another small necessary detail which is connected with the fact that an electron is a fermion and obeys Fermi–Dirac statistics. The basic rule is that if we have the probability amplitude for a given complex process involving more than one electron, then when we include (as we always must) the complementary Feynman diagram in which we just exchange two electron events, the resulting amplitude is the reverse – the negative – of the first. The simplest case would be two electrons starting at A and B ending at C and D. The amplitude would be calculated as the "difference", E(A to D) × E(B to C) − E(A to C) × E(B to D), where we would expect, from our everyday idea of probabilities, that it would be a sum.[1]:112–113
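The sign rule for exchanging two electrons can be illustrated with a toy amplitude. The function `E` below is a hypothetical stand-in (not the real electron propagator), and points are simple integer labels.

```python
import cmath

def E(a, b):
    """Hypothetical electron amplitude to go from point a to point b."""
    d = abs(b - a)
    return cmath.exp(0.7j * d) / (1 + d)

def two_electron_amplitude(A, B, C, D):
    """Difference of the two ways of pairing start points with end points."""
    return E(A, D) * E(B, C) - E(A, C) * E(B, D)

amp = two_electron_amplitude(0, 1, 3, 5)
swapped = two_electron_amplitude(0, 1, 5, 3)   # exchange the two end points
same_end = two_electron_amplitude(0, 1, 4, 4)  # both electrons end at one point

print(amp, swapped, same_end)
```

Exchanging the two end points reverses the sign of the amplitude, and the amplitude for both electrons to arrive at the same point is zero whatever form `E` takes, because the two terms are then identical.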

Propagators

Finally, one has to compute P(A to B) and E(C to D), corresponding to the probability amplitudes for the photon and the electron respectively. These are essentially the solutions of the Dirac equation, which describes the behavior of the electron's probability amplitude, and the Klein–Gordon equation, which describes the behavior of the photon's probability amplitude. These are called Feynman propagators. The translation to a notation commonly used in the standard literature is as follows:

$$P(A \text{ to } B) \rightarrow D_F(x_B - x_A), \qquad E(C \text{ to } D) \rightarrow S_F(x_D - x_C),$$

where a shorthand symbol such as $x_A$ stands for the four real numbers which give the time and position in three dimensions of the point labeled A.

Mass renormalization

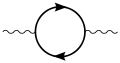

Electron self-energy loop

A problem arose historically which held up progress for twenty years: although we start with the assumption of three basic "simple" actions, the rules of the game say that if we want to calculate the probability amplitude for an electron to get from A to B we must take into account all the possible ways: all possible Feynman diagrams with those end points. Thus there will be a way in which the electron travels to C, emits a photon there and then absorbs it again at D before moving on to B. Or it could do this kind of thing twice, or more. In short we have a fractal-like situation in which if we look closely at a line it breaks up into a collection of "simple" lines, each of which, if looked at closely, is in turn composed of "simple" lines, and so on ad infinitum. This is a very difficult situation to handle. If adding that detail only altered things slightly then it would not have been too bad, but disaster struck when it was found that the simple correction mentioned above led to infinite probability amplitudes. In time this problem was "fixed" by the technique of renormalization. However, Feynman himself remained unhappy about it, calling it a "dippy process".[1]:128

Conclusions

Within the above framework physicists were then able to calculate to a high degree of accuracy some of the properties of electrons, such as the anomalous magnetic dipole moment. However, as Feynman points out, it fails totally to explain why particles such as the electron have the masses they do. "There is no theory that adequately explains these numbers. We use the numbers in all our theories, but we don't understand them – what they are, or where they come from. I believe that from a fundamental point of view, this is a very interesting and serious problem."[1]:152

Mathematics

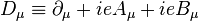

Mathematically, QED is an abelian gauge theory with the symmetry group U(1). The gauge field, which mediates the interaction between the charged spin-1/2 fields, is the electromagnetic field. The QED Lagrangian for a spin-1/2 field interacting with the electromagnetic field is given by the real part of[22]:78

$$\mathcal{L} = \bar\psi\,(i\gamma^\mu D_\mu - m)\,\psi - \frac{1}{4}F_{\mu\nu}F^{\mu\nu}$$

where

- $\gamma^\mu$ are Dirac matrices;

- $\psi$ is a bispinor field of spin-1/2 particles (e.g. electron–positron field);

- $\bar\psi \equiv \psi^\dagger\gamma^0$, called "psi-bar", is sometimes referred to as the Dirac adjoint;

- $m$ is the mass of the spin-1/2 particle;

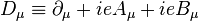

- $D_\mu \equiv \partial_\mu + ieA_\mu + ieB_\mu$ is the gauge covariant derivative;

- $e$ is the coupling constant, equal to the electric charge of the bispinor field;

- $A_\mu$ is the covariant four-potential of the electromagnetic field generated by the electron itself;

- $B_\mu$ is the external field imposed by an external source;

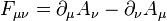

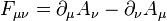

- $F_{\mu\nu} = \partial_\mu A_\nu - \partial_\nu A_\mu$ is the electromagnetic field tensor.

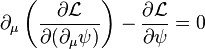

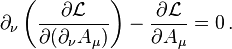

Equations of motion

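To begin, substituting the definition of $D_\mu$ into the Lagrangian gives

$$\mathcal{L} = i\bar\psi\gamma^\mu\partial_\mu\psi - e\bar\psi\gamma^\mu(A_\mu + B_\mu)\psi - m\bar\psi\psi - \frac{1}{4}F_{\mu\nu}F^{\mu\nu}.$$

Substituting this Lagrangian into the Euler–Lagrange equation of motion for the field $\psi$,

$$\partial_\mu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\mu\psi)}\right) - \frac{\partial\mathcal{L}}{\partial\psi} = 0, \qquad (2)$$

gives the field equations for QED.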

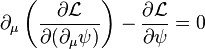

The two terms from this Lagrangian are then

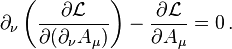
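As a worked sketch of this step, assume the standard form of the QED Lagrangian, $\mathcal{L} = i\bar\psi\gamma^\mu\partial_\mu\psi - e\bar\psi\gamma^\mu(A_\mu + B_\mu)\psi - m\bar\psi\psi - \frac{1}{4}F_{\mu\nu}F^{\mu\nu}$ (sign and charge conventions vary between textbooks). The two terms of the Euler–Lagrange equation for $\psi$ are then

$$\partial_\mu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\mu\psi)}\right) = \partial_\mu\left(i\bar\psi\gamma^\mu\right), \qquad \frac{\partial\mathcal{L}}{\partial\psi} = -e\bar\psi\gamma^\mu(A_\mu + B_\mu) - m\bar\psi.$$

Substituting both into the Euler–Lagrange equation gives

$$i\,\partial_\mu\bar\psi\,\gamma^\mu + e\bar\psi\gamma^\mu(A_\mu + B_\mu) + m\bar\psi = 0,$$

and the corresponding equation for $\psi$ itself (obtained by varying with respect to $\bar\psi$ instead) is

$$i\gamma^\mu\partial_\mu\psi - e\gamma^\mu(A_\mu + B_\mu)\psi - m\psi = 0.$$

Moving the interaction term to the right-hand side,

$$i\gamma^\mu\partial_\mu\psi - m\psi = e\gamma^\mu(A_\mu + B_\mu)\psi,$$

the left-hand side has the form of the free Dirac equation and the right-hand side describes the interaction with the electromagnetic field.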

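One further important equation can be found by substituting the above Lagrangian into another Euler–Lagrange equation, this time for the field $A_\mu$:

$$\partial_\nu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\nu A_\mu)}\right) - \frac{\partial\mathcal{L}}{\partial A_\mu} = 0. \qquad (3)$$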
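A sketch of how this equation works out, again assuming the standard Lagrangian $\mathcal{L} = i\bar\psi\gamma^\mu\partial_\mu\psi - e\bar\psi\gamma^\mu(A_\mu + B_\mu)\psi - m\bar\psi\psi - \frac{1}{4}F_{\mu\nu}F^{\mu\nu}$ with $F_{\mu\nu} = \partial_\mu A_\nu - \partial_\nu A_\mu$: the two terms of the Euler–Lagrange equation for $A_\mu$ are

$$\partial_\nu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\nu A_\mu)}\right) = \partial_\nu\left(\partial^\mu A^\nu - \partial^\nu A^\mu\right), \qquad \frac{\partial\mathcal{L}}{\partial A_\mu} = -e\bar\psi\gamma^\mu\psi,$$

so that the equation of motion becomes

$$\partial_\nu F^{\nu\mu} = e\bar\psi\gamma^\mu\psi.$$

If the Lorenz gauge condition $\partial_\mu A^\mu = 0$ is imposed, the left-hand side reduces to $\partial_\nu\partial^\nu A^\mu$ and the result is a wave equation for the four-potential with the electron current as its source:

$$\Box A^\mu = e\bar\psi\gamma^\mu\psi.$$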

Interaction picture

This theory can be straightforwardly quantized by treating the bosonic and fermionic sectors as free. This permits us to build a set of asymptotic states which can be used to start a computation of the probability amplitudes for different processes. In order to do so, we have to compute an evolution operator $U$ that, for a given initial state $|i\rangle$, will give a final state $\langle f|$ in such a way as to have[22]:5

$$M_{fi} = \langle f|U|i\rangle.$$

Feynman diagrams

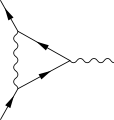

Despite the conceptual clarity of this Feynman approach to QED, almost no early textbooks follow him in their presentation. When performing calculations, it is much easier to work with the Fourier transforms of the propagators. Quantum physics considers particles' momenta rather than their positions, and it is convenient to think of particles as being created or annihilated when they interact. Feynman diagrams then look the same, but the lines have different interpretations. The electron line represents an electron with a given energy and momentum, with a similar interpretation of the photon line. A vertex diagram represents the annihilation of one electron and the creation of another together with the absorption or creation of a photon, each having specified energies and momenta.

Using Wick's theorem on the terms of the Dyson series, all the terms of the S-matrix for quantum electrodynamics can be computed through the technique of Feynman diagrams.

To these rules we must add a further one for closed loops that implies an integration on momenta $\int d^4p/(2\pi)^4$, since these internal ("virtual") particles are not constrained to any specific energy–momentum, even that usually required by special relativity. From them, computations of probability amplitudes are straightforwardly given. An example is Compton scattering, with an electron and a photon undergoing elastic scattering.

Renormalizability

Higher order terms can be straightforwardly computed for the evolution operator, but these terms display diagrams containing the following simpler ones:[22]:ch 10

- One-loop contribution to the vacuum polarization function

- One-loop contribution to the electron self-energy function

- One-loop contribution to the vertex function

Renormalizability has become an essential criterion for a quantum field theory to be considered as a viable one. All the theories describing fundamental interactions, except gravitation whose quantum counterpart is presently under very active research, are renormalizable theories.

Nonconvergence of series

An argument by Freeman Dyson shows that the radius of convergence of the perturbation series in QED is zero.[23] The basic argument goes as follows: if the coupling constant were negative, this would be equivalent to the Coulomb force constant being negative. This would "reverse" the electromagnetic interaction so that like charges would attract and unlike charges would repel. This would render the vacuum unstable against decay into a cluster of electrons on one side of the universe and a cluster of positrons on the other side of the universe. A power series with a nonzero radius of convergence would converge for small negative values of the coupling constant as well as for small positive ones; because the theory is 'sick' for any negative value of the coupling constant, the series does not converge and is at best an asymptotic series.

From a modern perspective, we say that QED is not well defined as a quantum field theory to arbitrarily high energy.[24] The coupling constant runs to infinity at finite energy, signalling a Landau pole. The problem is essentially that QED is not asymptotically free. This is one of the motivations for embedding QED within a Grand Unified Theory.
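What an asymptotic series with zero radius of convergence looks like in practice can be seen in a simple toy example. The function below is not the QED perturbation series; it is a textbook-style integral, chosen for this sketch, whose formal expansion in a "coupling" g is the sum of (-1)^n n! g^n. Like the situation in Dyson's argument, the function is ill-defined for negative g (the integrand then has a pole on the integration path), and the series diverges for every nonzero g.

```python
import math

def integrand(t, g):
    return math.exp(-t) / (1.0 + g * t)

def simpson(f, a, b, n):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(a + i * h)
    return s * h / 3

def exact_value(g):
    """Numerical value of the integral of exp(-t) / (1 + g t) over t >= 0."""
    # The tail beyond t = 60 is smaller than exp(-60) and is ignored.
    return simpson(lambda t: integrand(t, g), 0.0, 60.0, 6000)

def partial_sums(g, n_max):
    """Partial sums of the formal series sum_n (-1)^n n! g^n."""
    sums, total, term = [], 0.0, 1.0
    for n in range(n_max + 1):
        total += term
        sums.append(total)
        term *= -(n + 1) * g   # next term: (-1)^(n+1) (n+1)! g^(n+1)
    return sums

G = 0.1
exact = exact_value(G)
sums = partial_sums(G, 30)
errors = [abs(s - exact) for s in sums]

for n in (0, 2, 5, 9, 15, 20, 30):
    print(n, sums[n], errors[n])
```

For g = 0.1 the first ten or so terms approach the true value closely, after which the factorial growth of the coefficients takes over and the partial sums move away from it without limit. A smaller g pushes the turning point to higher order, which is one way to understand how a divergent series can still give very accurate predictions at low orders.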


![U=T\exp\left[-\frac{i}{\hbar}\int_{t_0}^tdt'V(t')\right]](http://upload.wikimedia.org/math/f/f/c/ffce1a89c334d137bda73d0ccba5aa1a.png)