Agent-based models have many applications in biology, primarily due to the characteristics of the modeling method. Agent-based modeling is a rule-based, computational modeling methodology that focuses on rules and interactions among the individual components or the agents of the system.

The goal of this modeling method is to generate populations of the system components of interest and simulate their interactions in a virtual world. Agent-based models start with rules for behavior and seek to reconstruct, through computational instantiation of those behavioral rules, the observed patterns of behavior. Characteristics of agent-based models that are important to biological studies include:

- Modular structure: The behavior of an agent-based model is defined by the rules of its agents. Existing agent rules can be modified or new agents can be added without having to modify the entire model.

- Emergent properties: Because individual agents interact locally according to rules of behavior, agent-based models produce a synergy that yields a higher-level whole with far more intricate behavior than that of any individual agent.

- Abstraction: Agent-based models can be constructed in the absence of complete knowledge of the system under study, either by deliberately excluding non-essential details or because details are not available. This allows the model to be as simple and verifiable as possible.

- Stochasticity: Biological systems exhibit behavior that appears to be random. The probability of a particular behavior can be determined for a system as a whole and then be translated into rules for the individual agents.
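
The characteristics listed above can be illustrated with a minimal sketch. It is not taken from any of the studies discussed here: the ring layout, the function names `step` and `run`, and the parameter `p_spread` are all assumptions made for illustration. Each agent follows one local, probabilistic rule, and the population-level spread emerges from repeated application of that rule.

```python
import random

def step(state, p_spread, rng):
    """Apply one local rule to every agent on a ring and return the new state."""
    n = len(state)
    new_state = list(state)
    for i in range(n):
        if not state[i]:
            # Local interaction: only the two immediate neighbours matter.
            neighbours = (state[(i - 1) % n], state[(i + 1) % n])
            # Stochastic rule: an affected neighbour spreads with probability p_spread.
            if any(neighbours) and rng.random() < p_spread:
                new_state[i] = True
    return new_state

def run(n_agents=50, p_spread=0.5, steps=10, seed=1):
    """Return the number of affected agents before and after each step."""
    rng = random.Random(seed)
    state = [False] * n_agents
    state[0] = True  # a single initially affected agent
    counts = [sum(state)]
    for _ in range(steps):
        state = step(state, p_spread, rng)
        counts.append(sum(state))
    return counts

if __name__ == "__main__":
    print(run())
```

Because the rule lives in a single function, it can be replaced or extended without touching the simulation loop, which is the modularity described in the first bullet.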

Forest insect infestations

In the paper titled "Exploring Forest Management Practices Using an Agent-Based Model of Forest Insect Infestations", an agent-based model was developed to simulate attack behavior of the mountain pine beetle (MPB), Dendroctonus ponderosae, in order to evaluate how different harvesting policies influence the spatial characteristics of the forest and the spatial propagation of the MPB infestation over time. About two-thirds of the land in British Columbia, Canada is covered by forests that are constantly being modified by natural disturbances such as fire, disease, and insect infestation. Forest resources make up approximately 15% of the province’s economy, so infestations caused by insects such as the MPB can have significant impacts on the economy. MPB outbreaks are considered a major natural disturbance that can result in widespread mortality of the lodgepole pine, one of the most abundant commercial tree species in British Columbia. Insect outbreaks have resulted in the death of trees over areas of several thousand square kilometers.

The agent-based model developed for this study was designed to simulate MPB attack behavior in order to evaluate how management practices influence the spatial distribution and patterns of the insect population and its preferences for attacked and killed trees. Three management strategies were considered by the model: 1) no management, 2) sanitation harvest, and 3) salvage harvest. In the model, the Beetle Agent represented the MPB behavior; the Pine Agent represented the forest environment and tree health evolution; the Forest Management Agent represented the different management strategies.

The Beetle Agent follows a series of rules to decide where to fly within the forest and to select a healthy tree to attack, feed on, and breed in. The MPB typically kills host trees in its natural environment in order to successfully reproduce. The beetle larvae feed on the inner bark of mature host trees, eventually killing them. In order for the beetles to reproduce, the host tree must be sufficiently large and have thick inner bark. MPB outbreaks end when the food supply decreases to the point that there is not enough to sustain the population or when climatic conditions become unfavorable for the beetle.

The Pine Agent simulates the resistance of the host tree, specifically the lodgepole pine, and monitors the state and attributes of each stand of trees. At some point in the MPB attack, the number of beetles per tree reaches the host tree capacity. When this point is reached, the beetles release a chemical to direct beetles to attack other trees. The Pine Agent models this behavior by calculating the beetle population density per stand and passing the information to the Beetle Agents.

The Forest Management Agent was used, at the stand level, to simulate two common silviculture practices (sanitation and salvage) as well as the strategy where no management practice was employed. With the sanitation harvest strategy, if a stand has an infestation rate greater than a set threshold, the stand is removed, as is any healthy neighboring stand in which the average size of the trees exceeds a set threshold. For the salvage harvest strategy, a stand is removed even if it is not under an MPB attack when a predetermined number of neighboring stands are under an MPB attack.
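
The two harvest rules described above can be sketched in code. This is an illustrative reading of the prose, not the study's implementation: the `Stand` fields, the eight-cell neighbourhood, the definition of a healthy stand as one with zero infestation, and all threshold values are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Stand:
    infestation_rate: float  # fraction of trees attacked, 0.0 to 1.0 (assumed measure)
    mean_tree_size: float    # hypothetical size measure, e.g. diameter in cm

def neighbours(grid, r, c):
    """Yield coordinates of the up-to-eight stands adjacent to (r, c)."""
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if (dr, dc) != (0, 0) and 0 <= rr < len(grid) and 0 <= cc < len(grid[0]):
                yield rr, cc

def sanitation_harvest(grid, infest_threshold, size_threshold):
    """Remove heavily infested stands plus healthy neighbours with large trees."""
    removed = set()
    for r, row in enumerate(grid):
        for c, stand in enumerate(row):
            if stand.infestation_rate > infest_threshold:
                removed.add((r, c))
                for rr, cc in neighbours(grid, r, c):
                    nb = grid[rr][cc]
                    # Assumption: "healthy" means no infestation at all.
                    if nb.infestation_rate == 0 and nb.mean_tree_size > size_threshold:
                        removed.add((rr, cc))
    return removed

def salvage_harvest(grid, min_attacked_neighbours):
    """Remove any stand with enough attacked neighbours, attacked or not."""
    removed = set()
    for r, row in enumerate(grid):
        for c, _ in enumerate(row):
            attacked = sum(
                grid[rr][cc].infestation_rate > 0 for rr, cc in neighbours(grid, r, c)
            )
            if attacked >= min_attacked_neighbours:
                removed.add((r, c))
    return removed
```

Both functions return the set of grid coordinates to harvest rather than modifying the grid, so the same forest state can be evaluated under either strategy.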

The study considered a forested area of approximately 560 hectares in the North-Central Interior of British Columbia. The area consisted primarily of lodgepole pine with smaller proportions of Douglas fir and white spruce. The model was executed for five time steps, each step representing a single year. Thirty simulation runs were conducted for each forest management strategy considered. The results of the simulation showed that the highest overall MPB infestation occurred when no management strategy was employed. The results also showed that the salvage forest management technique resulted in a 25% reduction in the number of forest stands killed by the MPB, as opposed to a 19% reduction by the salvage forest management strategy. In summary, the results show that the model can be used as a tool to build forest management policies.

Invasive species

Invasive species

refers to "non-native" plants and animals that adversely affect the

environments they invade. The introduction of invasive species may have

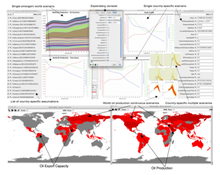

environmental, economic, and ecological implications. In the paper

titled "An Agent-Based Model of Border Enforcement for Invasive Species

Management", an agent-based model is presented that was developed to

evaluate the impacts of port-specific and importer-specific enforcement regimes for a given agricultural commodity

that presents invasive species risk. Ultimately, the goal of the study

was to improve the allocation of enforcement resources and to provide a

tool to policy makers to answer further questions concerning border

enforcement and invasive species risk.

The agent-based model developed for the study considered three types of agents: invasive species, importers, and border enforcement agents. In the model, the invasive species can only react to their surroundings, while the importers and border enforcement agents are able to make their own decisions based on their own goals and objectives. The invasive species has the ability to determine if it has been released in an area containing the target crop, and to spread to adjacent plots of the target crop. The model incorporates spatial probability maps that are used to determine if an invasive species becomes established.

The study focused on shipments of broccoli from Mexico into California through the ports of entry Calexico, California and Otay Mesa, California. The selected invasive species of concern was the crucifer flea beetle (Phyllotreta cruciferae). California is by far the largest producer of broccoli in the United States, so the potential impact of an invasive species introduction through the chosen ports of entry is significant. The model also incorporated a spatially explicit damage function that was used to model invasive species damage in a realistic manner.

Agent-based modeling provides the ability to analyze the behavior of heterogeneous actors, so three different types of importers were considered that differed in terms of commodity infection rates (high, medium, and low), pretreatment choice, and cost of transportation to the ports. The model gave predictions on inspection rates for each port of entry and importer and determined the success rate of border agent inspection, not only for each port and importer but also for each potential level of pretreatment (no pretreatment, level one, level two, and level three).

The model was implemented and run in NetLogo, version 3.1.5. Spatial information on the location of the ports of entry, major highways, and transportation routes was included in the analysis, as well as a map of California broccoli crops layered with invasive species establishment probability maps. BehaviorSpace, a software tool integrated with NetLogo, was used to test the effects of different parameters (e.g. shipment value, pretreatment cost) in the model. On average, 100 iterations were calculated at each level of the parameter being used, where an iteration represented a one-year run.

The results of the model showed that as inspection efforts increase, importers increase due care, or the pretreatment of shipments, and the total monetary loss of California crops decreases. The model showed that importers respond to an increase in inspection effort in different ways. Some importers responded to an increased inspection rate by increasing pretreatment effort, while others chose to avoid shipping to a specific port, or shopped for another port. An important result is that the model can indicate to policy makers the point at which importers may start to shop for ports, such as the inspection rate at which port shopping begins and which importers, in terms of pest risk or transportation cost, are likely to make these changes.

Another interesting outcome of the model is that when inspectors were not able to learn to respond to an importer with previously infested shipments, damage to California broccoli crops was estimated to be $150 million. However, when inspectors were able to increase inspection rates of importers with previous violations, damage to the California broccoli crops was reduced by approximately 12%. The model provides a mechanism to predict the introduction of invasive species from agricultural imports and their likely damage. Equally important, the model provides policy makers and border control agencies with a tool that can be used to determine the best allocation of inspection resources.
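
The contrast between inspectors who learn from previous violations and those who do not can be sketched as follows. The linear update rule, the parameter names, and every numeric value are assumptions made for illustration; the study's actual decision rules are not described here and are not reproduced.

```python
import random

def inspection_rate(base_rate, prior_violations, increment=0.1):
    """Hypothetical learning rule: inspect repeat offenders more often."""
    return min(1.0, base_rate + increment * prior_violations)

def simulate_importer(infection_rate, base_rate, shipments, learn, seed=0):
    """Return (infested shipments caught, infested shipments missed) for one importer."""
    rng = random.Random(seed)
    caught = missed = 0
    for _ in range(shipments):
        infested = rng.random() < infection_rate
        # With learning, every violation caught so far raises the inspection rate.
        rate = inspection_rate(base_rate, caught) if learn else base_rate
        inspected = rng.random() < rate
        if infested and inspected:
            caught += 1
        elif infested:
            missed += 1
    return caught, missed

if __name__ == "__main__":
    print("no learning:", simulate_importer(0.3, 0.2, 500, learn=False))
    print("learning:   ", simulate_importer(0.3, 0.2, 500, learn=True))
```

In this sketch, missed infested shipments stand in for pest introductions; translating them into crop damage would require the spatially explicit damage function mentioned earlier, which is outside the scope of the example.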

Aphid population dynamics

In the article titled "Aphid Population Dynamics in Agricultural Landscapes: An Agent-based Simulation Model", an agent-based model is presented to study the population dynamics of the bird cherry-oat aphid, Rhopalosiphum padi (L.). The study was conducted in a five-square-kilometer region of North Yorkshire, a county located in the Yorkshire and the Humber region of England. The agent-based modeling method was chosen because of its focus on the behavior of the individual agents rather than the population as a whole. The authors propose that traditional models that focus on populations as a whole do not take into account the complexity of the concurrent interactions in ecosystems, such as reproduction and competition for resources, which may have significant impacts on population trends. The agent-based modeling approach also allows modelers to create more generic and modular models that are more flexible and easier to maintain than modeling approaches that focus on the population as a whole. Other proposed advantages of agent-based models include realistic representation of a phenomenon of interest due to the interactions of a group of autonomous agents, and the capability to integrate quantitative variables, differential equations, and rule-based behavior into the same model.

The model was implemented in the modeling toolkit Repast using the Java programming language. The model was run in daily time steps and focused on the autumn and winter seasons. Input data for the model included habitat data, daily minimum, maximum, and mean temperatures, and wind speed and direction. For the Aphid agents, age, position, and morphology (alate or apterous) were considered. Age ranged from 0.00 to 2.00, with 1.00 being the point at which the agent becomes an adult. Reproduction by the Aphid agents is dependent on age, morphology, and daily minimum, maximum, and mean temperatures. Once nymphs hatch, they remain in the same location as their parents. The morphology of the nymphs is related to population density and the nutrient quality of the aphid’s food source. The model also considered mortality among the Aphid agents, which is dependent on age, temperatures, and quality of habitat. The speed at which an Aphid agent ages is determined by the daily minimum, maximum, and mean temperatures. The model considered movement of the Aphid agents to occur in two separate phases, a migratory phase and a foraging phase, both of which affect the overall population distribution.
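
The Aphid agent's state and temperature-driven ageing might be represented along the following lines. Only the age range (0.00 to 2.00), the adult threshold (1.00), and the alate/apterous distinction come from the description above; the development function, its threshold `t_base`, and its rate are placeholders, since the study's actual temperature response is not given here.

```python
from dataclasses import dataclass

ADULT_AGE = 1.0  # from the text: age at which an agent becomes an adult
MAX_AGE = 2.0    # from the text: upper end of the age range

def daily_development(t_min, t_max, t_mean, t_base=5.0, rate_per_degree=0.01):
    """Hypothetical ageing increment from daily temperatures in degrees Celsius."""
    # Assumption: blend the three inputs into one effective temperature.
    effective = (t_min + t_max + 2.0 * t_mean) / 4.0
    # Assumption: no development below the threshold t_base, linear above it.
    return rate_per_degree * max(0.0, effective - t_base)

@dataclass
class Aphid:
    age: float = 0.0
    alate: bool = False  # True for winged (alate), False for wingless (apterous)

    @property
    def is_adult(self):
        return self.age >= ADULT_AGE

    def grow(self, t_min, t_max, t_mean):
        """Advance age by one daily time step, capped at MAX_AGE."""
        self.age = min(MAX_AGE, self.age + daily_development(t_min, t_max, t_mean))

if __name__ == "__main__":
    aphid = Aphid()
    for day in range(12):
        aphid.grow(10.0, 20.0, 15.0)
    print(aphid.age, aphid.is_adult)
```

Reproduction, mortality, and the two movement phases would be further methods on the same class, each reading the same daily temperature inputs.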

The study started the simulation run with an initial population of 10,000 alate aphids distributed across a grid of 25-meter cells. The simulation results showed that there were two major population peaks, the first in early autumn due to an influx of alate immigrants and the second due to lower temperatures later in the year and a lack of immigrants. Ultimately, it is the goal of the researchers to adapt this model to simulate broader ecosystems and animal types.

Aquatic population dynamics

In the article titled "Exploring Multi-Agent Systems In Aquatic Population Dynamics Modeling", a model is proposed to study the population dynamics of two species of macrophytes. Aquatic plants play a vital role in the ecosystems in which they live, as they may provide shelter and food for other aquatic organisms. However, they may also have harmful impacts, such as the excessive growth of non-native plants or eutrophication of the lakes in which they live, leading to anoxic conditions. Given these possibilities, it is important to understand how the environment and other organisms affect the growth of these aquatic plants to allow mitigation or prevention of these harmful impacts.

Potamogeton pectinatus is one of the aquatic plant agents in the model. It is an annual growth plant that absorbs nutrients from the soil and reproduces through root tubers and rhizomes. Reproduction of the plant is not impacted by water flow, but can be influenced by animals, other plants, and humans. The plant can grow up to two meters tall, which is a limiting condition because it can only grow in certain water depths, and most of its biomass is found at the top of the plant in order to capture the most sunlight possible.

The second plant agent in the model is Chara aspera, also a rooted aquatic plant. One major difference between the two plants is that the latter reproduces through very small seeds called oospores and through bulbills, which are spread via the flow of water. Chara aspera only grows up to 20 cm and requires very good light conditions as well as good water quality, all of which are limiting factors on the growth of the plant. Chara aspera has a higher growth rate than Potamogeton pectinatus but has a much shorter life span.

The model also considered environmental and animal agents. Environmental agents considered included water flow, light penetration, and water depth. Flow conditions, although not of high importance to Potamogeton pectinatus, directly impact the seed dispersal of Chara aspera. Flow conditions affect the direction as well as the distance the seeds will be distributed. Light penetration strongly influences Chara aspera, as it requires high water quality. Extinction coefficient (EC) is a measure of light penetration in water. As EC increases, the growth rate of Chara aspera decreases. Finally, depth is important to both species of plants. As water depth increases, the light penetration decreases, making it difficult for either species to survive beyond certain depths.
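
The relationship between extinction coefficient, depth, and growth can be made concrete with a short sketch. It assumes exponential (Beer-Lambert) attenuation of light with depth and a saturating light response for growth; neither form is stated in the description above, and the function names, units, and `half_saturation` parameter are illustrative only.

```python
import math

def light_at_depth(surface_light, extinction_coefficient, depth_m):
    """Light remaining at depth_m, assuming exponential attenuation with EC in 1/m."""
    return surface_light * math.exp(-extinction_coefficient * depth_m)

def light_limited_growth(max_rate, light, half_saturation):
    """Hypothetical saturating growth response: half of max_rate at half_saturation."""
    return max_rate * light / (light + half_saturation)

if __name__ == "__main__":
    for ec in (0.5, 1.0, 2.0):
        light = light_at_depth(100.0, ec, 1.5)
        print(ec, round(light, 2), round(light_limited_growth(1.0, light, 20.0), 3))
```

Under these assumptions, raising either the extinction coefficient or the depth lowers the available light and therefore the growth rate, which matches the qualitative behavior described for Chara aspera.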

The area of interest in the model was a lake in the Netherlands named Lake Veluwe. It is a relatively shallow lake with an average depth of 1.55 meters and covers about 30 square kilometers. The lake is under eutrophication stress, which means that nutrients are not a limiting factor for either of the plant agents in the model. The initial position of the plant agents in the model was randomly determined. The model was implemented using the Repast software package and was executed to simulate the growth and decay of the two different plant agents, taking into account the environmental agents previously discussed as well as interactions with other plant agents. The results of the model execution show that the population distribution of Chara aspera has a spatial pattern very similar to the GIS maps of observed distributions. The authors of the study conclude that the agent rules developed in the study are reasonable to simulate the spatial pattern of macrophyte growth in this particular lake.

Bacteria aggregation leading to biofilm formation

In the article titled "iDynoMiCS: next-generation individual-based modelling of biofilms", an agent-based model is presented that models the colonisation of bacteria onto a surface, leading to the formation of biofilms. The purpose of iDynoMiCS (standing for individual-based Dynamics of Microbial Communities Simulator) is to simulate the growth of populations and communities of individual microbes (small unicellular organisms such as bacteria, archaea and protists) that compete for space and resources in biofilms immersed in aquatic environments. iDynoMiCS can be used to understand how individual microbial dynamics lead to emergent population- or biofilm-level properties and behaviours. Examining such formations is important in soil and river studies, dental hygiene studies, infectious disease and medical implant related infection research, and for understanding biocorrosion.

An agent-based modelling paradigm was employed to make it possible to explore how each individual bacterium, of a particular species, contributes to the development of the biofilm. The initial illustration of iDynoMiCS considered how environmentally fluctuating oxygen availability affects the diversity and composition of a community of denitrifying bacteria that induce the denitrification pathway under anoxic or low oxygen conditions. The study explores the hypothesis that the existence of diverse strategies of denitrification in an environment can be explained solely by assuming that faster response incurs a higher cost. The agent-based model suggests that if metabolic pathways can be switched without cost, the faster the switching the better. However, where faster switching incurs a higher cost, there is a strategy with optimal response time for any frequency of environmental fluctuations. This suggests that different types of denitrifying strategies win in different biological environments.

Since this introduction, the applications of iDynoMiCS have continued to increase, a recent exploration of plasmid invasion in biofilms being one example. This study explored the hypothesis that poor plasmid spread in biofilms is caused by a dependence of conjugation on the growth rate of the plasmid donor agent. Through simulation, the paper suggests that plasmid invasion into a resident biofilm is only limited when plasmid transfer depends on growth. Sensitivity analysis techniques were employed, and these suggest that parameters relating to timing (lag before plasmid transfer between agents) and spatial reach are more important for plasmid invasion into a biofilm than the receiving agent's growth rate or probability of segregational loss. Further examples that use iDynoMiCS continue to be published, including use of iDynoMiCS in modelling of a Pseudomonas aeruginosa biofilm with glucose substrate.

iDynoMiCS has been developed by an international team of researchers in order to provide a common platform for further development of all individual-based models of microbial biofilms and the like. The model was originally the result of years of work by Laurent Lardon, Brian Merkey, and Jan-Ulrich Kreft, with code contributions from Joao Xavier. With additional funding from the National Centre for Replacement, Refinement, and Reduction of Animals in Research (NC3Rs) in 2013, the development of iDynoMiCS as a tool for biological exploration continues apace, with new features being added when appropriate. From its inception, the team have committed to releasing iDynoMiCS as an open source platform, encouraging collaborators to develop additional functionality that can then be merged into the next stable release. iDynoMiCS has been implemented in the Java programming language, with MATLAB and R scripts provided to analyse results. Biofilm structures that are formed in simulation can be viewed as a movie using POV-Ray files that are generated as the simulation is run.

Mammary stem cell enrichment following irradiation during puberty

Experiments have shown that exposure of pubertal mammary glands to ionizing radiation results in an increase in the ratio of mammary stem cells in the gland. This is important because stem cells are thought to be key targets for cancer initiation by ionizing radiation: they have the greatest long-term proliferative potential, and mutagenic events persist in multiple daughter cells. Additionally, epidemiology data show that children exposed to ionizing radiation have a substantially greater breast cancer risk than adults. These experiments thus prompted questions about the underlying mechanism for the increase in mammary stem cells following radiation. In the research article titled "Irradiation of Juvenile, but not Adult, Mammary Gland Increases Stem Cell Self-Renewal and Estrogen Receptor Negative Tumors", two agent-based models were developed and used in parallel with in vivo and in vitro experiments to evaluate cell inactivation, dedifferentiation via epithelial-mesenchymal transition (EMT), and self-renewal (symmetric division) as mechanisms by which radiation could increase stem cells.

The first agent-based model is a multiscale model of mammary gland development, starting with a rudimentary mammary ductal tree at the onset of puberty (during active proliferation) and continuing to a full mammary gland at adulthood (when there is little proliferation). The model consists of millions of agents, with each agent representing a mammary stem cell, a progenitor cell, or a differentiated cell in the breast. Simulations were first run on the Lawrence Berkeley National Laboratory Lawrencium supercomputer to parameterize and benchmark the model against a variety of in vivo mammary gland measurements. The model was then used to test the three different mechanisms to determine which one led to simulation results that best matched the in vivo experiments. Surprisingly, radiation-induced cell inactivation by death did not contribute to increased stem cell frequency in the model, regardless of the dose delivered. Instead, the model revealed that the combination of increased self-renewal and cell proliferation during puberty led to stem cell enrichment. In contrast, epithelial-mesenchymal transition in the model was shown to increase stem cell frequency not only in pubertal mammary glands but also in adult glands. This latter prediction, however, contradicted the in vivo data; irradiation of adult mammary glands did not lead to increased stem cell frequency. These simulations therefore suggested self-renewal as the primary mechanism behind the pubertal stem cell increase.

To further evaluate self-renewal as the mechanism, a second agent-based model was created to simulate the growth dynamics of human mammary epithelial cells (containing stem/progenitor and differentiated cell subpopulations) in vitro after irradiation. By comparing the simulation results with data from the in vitro experiments, the second agent-based model further confirmed that cells must extensively proliferate to observe a self-renewal dependent increase in stem/progenitor cell numbers after irradiation.
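
Why a self-renewal dependent increase requires proliferation can be seen in a deliberately simplified expected-value calculation. It is not either of the agent-based models described above: it tracks only average cell counts, ignores cell death and division of non-stem cells, and the function name and all parameter values are assumptions.

```python
def stem_fraction(n_stem, n_other, p_symmetric, n_divisions):
    """Expected stem cell fraction after every stem cell divides n_divisions times."""
    stem, other = float(n_stem), float(n_other)
    for _ in range(n_divisions):
        # Symmetric self-renewal: one stem cell becomes two stem cells.
        new_stem = stem * p_symmetric
        # Asymmetric division: one stem cell plus one non-stem daughter.
        new_other = stem * (1.0 - p_symmetric)
        stem += new_stem
        other += new_other
    return stem / (stem + other)

if __name__ == "__main__":
    for divisions in (0, 5, 10):
        baseline = stem_fraction(10, 90, 0.2, divisions)
        raised = stem_fraction(10, 90, 0.4, divisions)
        print(divisions, round(baseline, 3), round(raised, 3))
```

With no divisions, a higher probability of symmetric division changes nothing; the gap between the two settings opens only as the number of divisions grows, which is consistent with enrichment appearing in proliferating pubertal tissue but not in largely quiescent adult tissue.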

The combination of the two agent-based models and the in vitro/in vivo experiments provides insight into why children exposed to ionizing radiation have a substantially greater breast cancer risk than adults. Together, they support the hypothesis that the breast is susceptible to a transient increase in stem cell self-renewal when exposed to radiation during puberty, which primes the adult tissue to develop cancer decades later.