From Wikipedia, the free encyclopedia

Scholarly and popular discussion about nature and nurture relates to the relative importance of an individual's innate qualities ("nature" in the sense of nativism or innatism) as compared to an individual's personal experiences ("nurture" in the sense of empiricism or behaviorism) in causing individual differences in physical and behavioral traits.

Heritability refers to the origins of differences between people. Individual development, even of highly heritable traits such as eye color, depends on a range of environmental factors, from the other genes in the organism to physical variables such as temperature and oxygen levels during its development, or ontogenesis.

Heritability measures always refer to the degree of variation between individuals in a population. These statistics cannot be applied at the level of the individual: it would be incorrect to conclude from a heritability index of about 0.6 for personality that 60% of one's personality is obtained from one's parents and 40% from the environment. To see why, imagine that all humans were genetic clones. The heritability index for all traits would then be zero, because all variability between clonal individuals must be due to environmental factors. And, contrary to common misinterpretations of the heritability index, as societies become more egalitarian (everyone has more similar experiences), the heritability index goes up: as environments become more similar, more of the remaining variability between individuals is due to genetic factors.
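The clone and egalitarian-society thought experiments can be sketched numerically. The example below is a minimal illustration, assuming a purely additive model in which each phenotype is a genetic value plus an independent environmental deviate (no gene–environment interaction or correlation), so that heritability is simply Var(G)/Var(P). The function names and the simulated distributions are hypothetical and are not data from any study.

```python
import random
import statistics


def simulate_population(n, genetic_sd, environment_sd, seed=0):
    """Return (genetic values, phenotypes) under an additive model P = G + E.

    Hypothetical illustration: G and E are independent normal deviates,
    with no gene-environment interaction or correlation."""
    rng = random.Random(seed)
    genes = [rng.gauss(0.0, genetic_sd) for _ in range(n)]
    phenotypes = [g + rng.gauss(0.0, environment_sd) for g in genes]
    return genes, phenotypes


def heritability(genes, phenotypes):
    """Share of phenotypic variance accounted for by genetic variance."""
    return statistics.pvariance(genes) / statistics.pvariance(phenotypes)


scenarios = {
    "genes and environments both vary": (1.0, 1.0),  # expect about 0.5
    "a population of clones": (0.0, 1.0),            # no genetic variance: 0
    "more similar environments": (1.0, 0.5),         # expect about 0.8
}
for label, (genetic_sd, environment_sd) in scenarios.items():
    g, p = simulate_population(10_000, genetic_sd, environment_sd)
    print(f"{label}: h2 = {heritability(g, p):.2f}")
```

Nothing about any individual changes between the first and third scenarios except how much environments differ, yet the population statistic rises; this is the sense in which heritability describes a population rather than a person.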

One should also take into account that heritability and environmentality are not fixed quantities: they vary within a chosen population and across cultures. It is more accurate to say that the degree of heritability and environmentality is measured with reference to a particular phenotype in a chosen group of a population in a given period of time. The accuracy of the calculations is further limited by the number of variables taken into consideration, age being one such variable. The apparent influence of heritability and environmentality differs drastically across age groups: the older the subjects studied, the more noticeable the heritability factor becomes, and the younger the subjects, the more likely the results are to show a strong influence of environmental factors.

Some have pointed out that environmental inputs affect the expression of genes (see the article on epigenetics). This is one explanation of how environment can influence the extent to which a genetic disposition will actually manifest.[citation needed] The interactions of genes with environment, called gene–environment interactions, are another component of the nature–nurture debate. A classic example of gene–environment interaction is the ability of a diet low in the amino acid phenylalanine to partially suppress the genetic disease phenylketonuria. Yet another complication to the nature–nurture debate is the existence of gene-environment correlations. These correlations indicate that individuals with certain genotypes are more likely to find themselves in certain environments. Thus, it appears that genes can shape (the selection or creation of) environments. Even using experiments like those described above, it can be very difficult to determine convincingly the relative contribution of genes and environment.

A study by T. J. Bouchard Jr. of middle-aged twins reared together and reared apart produced significant evidence for the importance of genes, and evidence against a large role for the environment in determining traits such as happiness. In the Minnesota Study of Twins Reared Apart, the correlation was actually higher for monozygotic twins reared apart (.52) than for monozygotic twins reared together (.44). Also highlighting the importance of genes, these correlations were much higher than those for dizygotic twins, which were .08 when reared together and -.02 when reared apart (Lykken & Tellegen, 1996).

Another advanced technique, multivariate genetic analysis, examines the genetic contribution to several traits that vary together. For example, multivariate genetic analysis has demonstrated that the genetic determinants of all specific cognitive abilities (e.g., memory, spatial reasoning, processing speed) overlap greatly, such that the genes associated with any specific cognitive ability will affect all others. Similarly, multivariate genetic analysis has found that genes that affect scholastic achievement completely overlap with the genes that affect cognitive ability.

Extremes analysis examines the link between normal and pathological traits. For example, it is hypothesized that a given behavioral disorder may represent an extreme of a continuous distribution of a normal behavior, and hence an extreme of a continuous distribution of genetic and environmental variation. Depression, phobias, and reading disabilities have been examined in this context.
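The continuous-distribution hypothesis can be pictured with a liability-threshold sketch. It assumes a hypothetical additive liability (a genetic plus an environmental normal deviate, total variance 1, heritability 0.5) and a threshold that marks roughly the top 5% as "affected". It is only an illustration of the idea, not a reproduction of any published extremes-analysis method.

```python
import random
import statistics


def simulate_liability(n=50_000, heritability=0.5, threshold=1.645, seed=2):
    """Return (genetic deviate, affected?) pairs under a liability model.

    Hypothetical assumptions: liability = genetic + environmental deviate,
    both normal, total variance 1; people whose liability exceeds
    `threshold` (about the top 5% of a standard normal) are affected."""
    rng = random.Random(seed)
    genetic_sd = heritability ** 0.5
    environmental_sd = (1 - heritability) ** 0.5
    people = []
    for _ in range(n):
        g = rng.gauss(0.0, genetic_sd)
        liability = g + rng.gauss(0.0, environmental_sd)
        people.append((g, liability > threshold))
    return people


people = simulate_liability()
affected = [g for g, is_case in people if is_case]
unaffected = [g for g, is_case in people if not is_case]
print(f"prevalence: {len(affected) / len(people):.3f}")
print(f"mean genetic deviate, affected:   {statistics.fmean(affected):+.2f}")
print(f"mean genetic deviate, unaffected: {statistics.fmean(unaffected):+.2f}")
```

The affected group's genetic values are shifted toward the upper tail but still overlap the rest of the population, which is what treating a disorder as the extreme of a continuum implies.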

For a few highly heritable traits, some studies have identified loci associated with variance in that trait in some individuals. For example, research groups have identified loci that are associated with schizophrenia in subsets of patients with that diagnosis.[30]

The nature side of this debate emphasizes how much of an organism reflects biological factors. Genes are activated at appropriate times during development and are the basis for protein production. Proteins include a wide range of molecules, such as hormones and enzymes, that act in the body as signaling and structural molecules to direct development. When looking at the influence of genes in the nature versus nurture debate, researchers have found variation in the promoter region of the serotonin transporter gene (5-HTTLPR). The discovery of this inherited "happiness gene" is promising evidence for the nature side of the debate when measuring life satisfaction (Jan-Emmanuel De Neve, 2010). The nurture side, on the other hand, emphasizes how much of an organism reflects environmental factors. In reality, it is most likely an interaction of both genes and environment, nature and nurture, that affects the development of a person. Even in the womb, genes interact with hormones in the environment to signal the start of a new developmental phase. The hormonal environment, likewise, does not act independently of the genes, and it cannot correct lethal errors in the genetic makeup of a fetus. The genes and the environment must be in sync for normal development. Similarly, even if a person has inherited genes for taller than average height, the person may not grow as tall as is genetically possible if proper nutrition is not provided. Here too, the boundary between genetic and environmental influences is blurred. It has been suggested that the key to understanding complex human behaviour and diseases is to study genes, the environment, and the interactions between the two equally.[31]

Moreover, adoption studies indicate that, by adulthood, adoptive siblings are no more similar in IQ than strangers (IQ correlation near zero), while full siblings show an IQ correlation of 0.6. Twin studies reinforce this pattern: monozygotic (identical) twins raised separately are highly similar in IQ (0.74), more so than dizygotic (fraternal) twins raised together (0.6) and much more than adoptive siblings (~0.0).[32] Recent adoption studies also found that supportive parents can have a positive effect on the development of their children.[33]
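Under the simplifying assumptions of the classical additive model (no selective placement of adoptees, no gene–environment correlation), the correlations quoted above can be read almost directly as variance components: identical twins reared apart share only genes, so their correlation estimates heritability (h²), and adoptive siblings share only the family environment, so their correlation estimates shared-environment variance (c²). The sketch below does that arithmetic; the function name is illustrative.

```python
def components_from_correlations(r_mz_apart, r_adoptive_siblings):
    """Rough variance components read off two kinship correlations.

    Assumes the classical additive model with no selective placement and no
    gene-environment correlation: identical twins reared apart share only
    genes (so r estimates h2), adoptive siblings share only the family
    environment (so r estimates c2), and the remainder is nonshared
    environment plus measurement error (e2)."""
    h2 = r_mz_apart
    c2 = r_adoptive_siblings
    e2 = 1.0 - h2 - c2
    return {"h2": h2, "c2": c2, "e2": e2}


# Adult IQ correlations quoted in the paragraph above.
components = components_from_correlations(r_mz_apart=0.74, r_adoptive_siblings=0.0)
print({name: round(value, 2) for name, value in components.items()})
```

This gives h² ≈ 0.74, c² ≈ 0 and e² ≈ 0.26. Published analyses fit all kinship correlations jointly rather than reading off single values, because each shortcut depends on its own assumptions.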

With the advent of genomic sequencing, it has become possible to search for and identify specific gene polymorphisms that affect traits such as IQ and personality. These techniques work by tracking the association of differences in a trait of interest with differences in specific molecular markers or functional variants. An example of a visible human trait for which the precise genetic basis of differences is relatively well known is eye color. For traits with many genes affecting the outcome, a smaller portion of the variance is currently understood: for instance, known gene variants currently account for only around 5-10% of the variance in height.[citation needed] With regard to happiness, studies have found that 44% to 52% of the variance in well-being is associated with genetic variation. Based on retests of smaller samples of twins after 4.5 and 10 years, it is estimated that the heritability of the stable component of subjective well-being approaches 80% (Lykken & Tellegen, 1996). Other studies have found that genes account for roughly 35-50% of the variance in happiness measures (Roysamb et al., 2002; Stubbe et al., 2005; Nes et al., 2005, 2006).

Association studies, on the other hand, are more hypothesis-driven and seek to verify whether a particular genetic variant really influences the phenotype of interest. Association studies commonly use a case-control approach, comparing how often the candidate variant occurs in subjects who show the trait or disorder (cases) with how often it occurs in subjects who do not (controls).
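The case-control comparison can be sketched with made-up allele counts (not data from any study): tabulate the candidate allele among case and control chromosomes, then summarise the difference with an allelic odds ratio and a Pearson chi-square statistic on the resulting 2×2 table. The function name is hypothetical.

```python
def allelic_association(case_risk, case_other, control_risk, control_other):
    """Odds ratio and Pearson chi-square (1 df) for a 2x2 allele-count table.

    Rows: cases / controls. Columns: candidate allele / other allele."""
    table = [[case_risk, case_other], [control_risk, control_other]]
    total = sum(map(sum, table))
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]

    # Compare each observed count with the count expected if the allele
    # were equally common in cases and controls.
    chi_square = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi_square += (table[i][j] - expected) ** 2 / expected

    odds_ratio = (case_risk * control_other) / (case_other * control_risk)
    return odds_ratio, chi_square


# Hypothetical counts: 1,000 case chromosomes and 1,000 control chromosomes.
odds_ratio, chi_square = allelic_association(
    case_risk=300, case_other=700, control_risk=240, control_other=760
)
print(f"odds ratio = {odds_ratio:.2f}, chi-square = {chi_square:.2f}")
# odds ratio = 1.36, chi-square = 9.13
```

With one degree of freedom, 3.84 is the conventional 5% critical value for a single pre-specified test; studies that test many variants need far stricter thresholds.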

As well as asking whether a trait such as IQ is heritable, one can ask what it is about "intelligence" that is being inherited. Similarly, if in a broad set of environments genes account for almost all observed variation in a trait, this raises the notion of genetic and/or biological determinism, and the question of which level of analysis is appropriate for the trait. Finally, as early as 1951, Calvin Hall[35] suggested that discussion opposing nature and nurture was fruitless. Environments may be varied in ways that affect development, which would also change the heritability of the character. Conversely, if the genetic composition of a population changes, then heritability may also change.

The example of phenylketonuria (PKU) is informative. Untreated, this is a completely penetrant genetic disorder causing brain damage and progressive intellectual disability. PKU can be treated by eliminating phenylalanine from the diet. Hence a character (PKU) that used to have a virtually perfect heritability is no longer heritable where modern medicine is available: the allele causing PKU is still inherited, but the PKU phenotype is no longer expressed. It is useful, then, to think of what is inherited as a mechanism for breaking down phenylalanine. Separately from this, we can consider whether the organism has other mechanisms (for instance, a drug that breaks down this amino acid) or does not need the mechanism (due to dietary exclusion).
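The PKU point can be made concrete with a toy gene–environment interaction, using entirely hypothetical numbers: a cognitive score equals a baseline plus environmental noise, minus a large deficit only for individuals homozygous for the PKU allele who eat an ordinary diet. The share of score variance explained by genotype is then computed with and without universal dietary treatment, using the same simulated genotypes in both runs.

```python
import random
import statistics


def variance_explained_by_genotype(scores, genotypes):
    """Between-genotype sum of squares over total sum of squares (R^2)."""
    grand_mean = statistics.fmean(scores)
    total_ss = sum((s - grand_mean) ** 2 for s in scores)
    between_ss = 0.0
    for genotype in set(genotypes):
        group = [s for s, g in zip(scores, genotypes) if g == genotype]
        between_ss += len(group) * (statistics.fmean(group) - grand_mean) ** 2
    return between_ss / total_ss


def simulate_pku_scores(treated, n=20_000, homozygous_rate=0.1, seed=3):
    """Hypothetical cognitive scores; `treated` = phenylalanine-restricted diet.

    The 10% homozygous rate and the 50-point deficit are illustrative
    values chosen so the effect is visible, not real PKU figures. The fixed
    seed gives identical genotypes in the treated and untreated runs."""
    rng = random.Random(seed)
    scores, genotypes = [], []
    for _ in range(n):
        homozygous = rng.random() < homozygous_rate
        score = 100 + rng.gauss(0, 15)  # baseline plus environmental noise
        if homozygous and not treated:
            score -= 50  # deficit expressed only on an ordinary diet
        scores.append(score)
        genotypes.append(homozygous)
    return scores, genotypes


for treated in (False, True):
    r2 = variance_explained_by_genotype(*simulate_pku_scores(treated))
    print(f"dietary treatment={treated}: variance explained by genotype = {r2:.2f}")
```

The allele is inherited identically in both runs; only the environment differs, yet genotype explains a large share of the variance in one population and essentially none in the other.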

Similarly, within, say, an inbred strain of mice, no genetic variation is present and every character will have a zero heritability. If the complications of gene–environment interactions and correlations (see above) are added, then it appears to many that heritability, the epitome of the nature–nurture opposition, is "a station passed."[36]

A related concept is the view that the idea that either nature or nurture explains a creature's behavior is an example of the single cause fallacy.

Critics of this ethical view point out that whether or not a behavioral trait is inherited does not affect whether it can be changed by one's culture or independent choice,[38] and that evolutionary inclinations could be discarded in ethical and political discussions regardless of whether they exist or not.[39]

Leda Cosmides and John Tooby noted that William James (1842–1910) argued that humans have more "instincts" than animals, and that greater freedom of action is the result of having more psychological instincts, not fewer.[40] Daniel C. Dennett explores this idea in his 2003 book Freedom Evolves.

Evidence cited in support of the genetic defense includes H. G. Brunner's 1993 discovery of what is now known as Brunner syndrome, as well as a series of studies on mice. Proponents of the defense suggest that individuals cannot be held accountable for their genes and, as a result, should not be held responsible for their dispositions and resulting actions.[42] If a single gene mutation is the reason for aggressive behaviour, aggressive offenders might have a genetic excuse for their crimes.[42] The plausibility of genetic determinism as an argument in the judicial system has been debated for some time, yet "the use of a 'genetic defense' in the courtroom is a fairly new phenomenon."[42] In the United States, genetic evidence has been used in court defenses and has succeeded in reducing sentences for violent offenders, since "certain predispositions may reduce the blameworthiness of the offender."[42]

Canadian Justice McLachlin wrote: "I can find no support in criminal theory for the conclusion that protection of the morally innocent requires a general consideration of individual excusing conditions. The principle comes into play only at the point where the person is shown to lack the capacity to appreciate the nature and quality or consequences of his or her acts."[43]

Several mitigating factors, such as impairment of judgment and a disadvantaged background, already shape the purposes and principles of sentencing. A 'genetic predisposition to violence' could similarly fall under statute 718c in the USA as a mitigating factor in crime if the science behind genetic determinants is found to be conclusive.[42] However, Duke University researcher Laura Baker disagrees: "although genes may increase the propensity for criminality, for example, they do not determine it."[43]

The phrase "nature and nurture" in its modern sense was coined[1][2][3] by the English Victorian polymath Francis Galton in discussion of the influence of heredity and environment on social advancement, although the terms had been contrasted previously, for example by Shakespeare (in his play The Tempest: 4.1). Galton was influenced[4] by the book On the Origin of Species written by his half-cousin, Charles Darwin. The concept embodied in the phrase has been criticized[3][4] for its binary simplification of two tightly interwoven parameters: for example, an environment of wealth, education, and social privilege is often passed on to genetic offspring, even though wealth, education, and social privilege are not part of the human biological system and so cannot be directly attributed to genetics.

The view that humans acquire all or almost all their behavioral traits from "nurture" was termed tabula rasa ("blank slate") by philosopher John Locke. The blank slate view proposes that humans develop only from environmental influences. This question was once considered to be an appropriate division of developmental influences, but since both types of factors are known to play interacting roles in development, most modern psychologists and other scholars of human development consider the question naive—representing an outdated state of knowledge.[5][6][7][8]

One may also refer to the concepts of innatism and empiricism as genetic determinism and environmentalism respectively. These two conflicting approaches have influenced research agendas for a century. While genetic determinism holds that development is primarily influenced by a person's genetic code, environmentalism emphasises the influence of experiences and social factors. In the twenty-first century, a consensus is developing that both genetic and environmental agents influence development interactively.[9]:85 In the social and political sciences, the nature versus nurture debate may be contrasted with the structure versus agency debate (that is, socialization versus individual autonomy). For a discussion of nature versus nurture in language and other human universals, see also psychological nativism.

In a 2014 survey of scientists, many respondents wrote that the familiar distinction between nature and nurture has outlived its usefulness and should be retired. One reason is the explosion of work in the field of epigenetics. Scientists believe that there is a long and circuitous route, with many feedback loops, from a particular set of genes to a feature of the adult organism. Culture is a biological phenomenon: a set of abilities and practices that allow members of one generation to learn and change and to pass the results of that learning on to the next generation.[10][11]

Scientific approach

To disentangle the effects of genes and environment, behavioral geneticists perform adoption and twin studies. These provide ways to decompose the variance in a population into genetic and environmental components. This move from individuals to populations makes a critical difference in the way people think about nature and nurture. The difference is perhaps highlighted by a quote attributed to psychologist Donald Hebb, who is said to have once answered a journalist's question of "which, nature or nurture, contributes more to personality?" by asking in response, "Which contributes more to the area of a rectangle, its length or its width?"[12] For a particular rectangle, the area is the product of its length and width, so asking which contributes more is meaningless. Moving to a population, however, this analogy masks the fact that there are many individuals, and that it is meaningful to talk about their differences.[13]

Scientific approaches also seek to break down variance beyond these two categories of nature and nurture. Thus rather than "nurture", behavior geneticists distinguish shared family factors (i.e., those shared by siblings, making them more similar) and nonshared factors (i.e., those that uniquely affect individuals, making siblings different). To express the portion of the variance due to the "nature" component, behavioral geneticists generally refer to the heritability of a trait.

With regard to personality traits and adult IQ in the general U.S. population, the portion of the overall variance that can be attributed to shared family effects is often negligible.[14]

In her Pulitzer Prize-nominated book The Nurture Assumption, author Judith Harris argues that "nurture," as traditionally defined in terms of family upbringing, does not effectively explain the variance for most traits (such as adult IQ and the Big Five personality traits) in the general population of the United States. Instead, Harris suggests that either peer groups or random environmental factors (i.e., those that are independent of family upbringing) are more important than family environmental effects.[15][16]

Although "nurture" has historically referred to the care given to children by their parents, with the mother playing a role of particular importance, some now use the term for any environmental factor in the contemporary nature versus nurture debate. The definition of "nurture" has thus expanded to include influences on development arising from prenatal, parental, extended family, and peer experiences, and extends to influences such as media, marketing, and socio-economic status. Indeed, a substantial source of environmental input to human nature may arise from stochastic variations in prenatal development.[17][18]

Heritability estimates

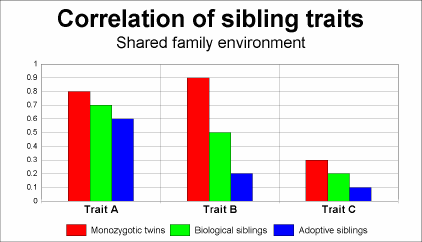

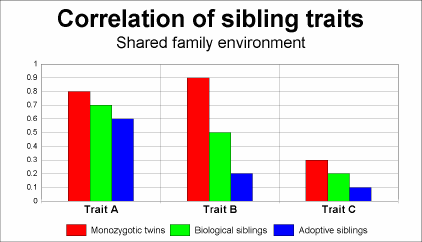

This chart illustrates three patterns one might see when studying the influence of genes and environment on traits in individuals. Trait A shows a high sibling correlation but little heritability (i.e., high shared environmental variance c²; low heritability h²). Trait B shows high heritability, since the trait correlation rises sharply with the degree of genetic similarity. Trait C shows low heritability but also low correlations generally; this means Trait C has a high nonshared environmental variance e². In other words, the degree to which individuals display Trait C has little to do with either genes or broadly predictable environmental factors; roughly, the outcome approaches random for an individual. Notice also that even identical twins raised in a common family rarely show 100% trait correlation.
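The three patterns follow from the standard ACE decomposition, in which phenotypic variance is split into additive genetic (h²), shared environmental (c²) and nonshared environmental (e²) parts that sum to 1. Under that model's usual assumptions, the expected correlation for a pair of relatives is their genetic relatedness times h², plus c² if they are reared in the same family. The sketch below computes these expected correlations for three hypothetical traits shaped like A, B and C; the component values are illustrative, not read from the chart.

```python
# Hypothetical variance components (h2 + c2 + e2 = 1) shaped like the chart.
TRAITS = {
    "A": {"h2": 0.1, "c2": 0.7, "e2": 0.2},  # mostly shared environment
    "B": {"h2": 0.8, "c2": 0.1, "e2": 0.1},  # mostly genetic
    "C": {"h2": 0.1, "c2": 0.1, "e2": 0.8},  # mostly nonshared environment
}

# (genetic relatedness, reared in the same family?) for each kind of pair.
PAIRS = {
    "adoptive siblings": (0.0, True),
    "fraternal twins": (0.5, True),
    "identical twins": (1.0, True),
    "identical twins apart": (1.0, False),
}


def expected_correlation(components, relatedness, reared_together):
    """Expected trait correlation for a pair under the additive ACE model."""
    shared = components["c2"] if reared_together else 0.0
    return relatedness * components["h2"] + shared


for trait, components in TRAITS.items():
    cells = ", ".join(
        f"{pair} {expected_correlation(components, r, together):.2f}"
        for pair, (r, together) in PAIRS.items()
    )
    print(f"Trait {trait}: {cells}")
```

Trait B's correlations climb steeply with relatedness, A's stay high and flat, and C's stay low and flat; in every case identical twins reared together reach only 1 − e², never 1 unless e² is zero.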

Note that heritability refers only to the proportion of variation between people on a trait that is attributable to genetic differences between them. It does not refer to the degree to which a trait of a particular individual is due to environmental or genetic factors. The traits of an individual are always a complex interweaving of both.[19] For an individual, even strongly genetically influenced, or "obligate" traits, such as eye color, assume the inputs of a typical environment during ontogenetic development (e.g., certain ranges of temperatures, oxygen levels, etc.).

In contrast, the "heritability index" statistically quantifies the extent to which variation between individuals on a trait is due to variation in the genes those individuals carry. In animals where breeding and environments can be controlled experimentally, heritability can be determined relatively easily. Such experiments would be unethical for human research. This problem can be overcome by finding existing populations of humans that reflect the experimental setting the researcher wishes to create.

One way to determine the contribution of genes and environment to a trait is to study twins. In one kind of study, identical twins reared apart are compared to randomly selected pairs of people. The twins share identical genes but different family environments. In another kind of twin study, identical twins reared together (who share family environment and genes) are compared to fraternal twins reared together (who also share family environment but, on average, only half of their genes). Another condition that permits the dissociation of genes and environment is adoption. In one kind of adoption study, biological siblings reared together (who share the same family environment and, on average, half of their genes) are compared to adoptive siblings (who share their family environment but none of their genes).
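The comparison of identical (MZ) and fraternal (DZ) twins reared together is classically summarised with Falconer's formula, which assumes purely additive genetic effects and equally similar shared environments for both twin types: h² ≈ 2(r_MZ − r_DZ), c² ≈ r_MZ − h², and e² ≈ 1 − r_MZ. A minimal sketch with hypothetical correlations, not taken from any specific study:

```python
def falconer(r_mz, r_dz):
    """Falconer's estimates from MZ and DZ twins reared together.

    Assumes additive genetic effects and that identical and fraternal
    twins experience equally similar shared environments."""
    h2 = 2 * (r_mz - r_dz)  # MZ twins share all segregating genes, DZ half
    c2 = r_mz - h2          # MZ similarity not explained by genes
    e2 = 1 - r_mz           # what makes even MZ twins differ
    return h2, c2, e2


# Hypothetical twin correlations.
h2, c2, e2 = falconer(r_mz=0.80, r_dz=0.50)
print(f"h2 = {h2:.2f}, c2 = {c2:.2f}, e2 = {e2:.2f}")  # h2 = 0.60, c2 = 0.20, e2 = 0.20
```

Because its assumptions can fail (non-additive genetic effects, for example, can push the c² estimate below zero), modern studies usually fit structural-equation twin models instead of relying on this shortcut.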

In many cases, genes have been found to make a substantial contribution, including to psychological traits such as intelligence and personality.[20] Yet heritability may differ in other circumstances, for instance under environmental deprivation. Examples of low, medium, and high heritability traits include:

| Low heritability | Medium heritability | High heritability |
|---|---|---|
| Specific language | Weight | Blood type |
| Specific religion | Religiosity | Eye color |

Twin and adoption studies have their methodological limits. For example, both are limited to the range of environments and genes which they sample. Almost all of these studies are conducted in Western, first-world countries, and therefore cannot be extrapolated globally to include poorer, non-western populations. Additionally, both types of studies depend on particular assumptions, such as the equal environments assumption in the case of twin studies, and the lack of pre-adoptive effects in the case of adoption studies.

Interaction of genes and environment

“Many properties of the brain are genetically organized, and don't depend on information coming in from the senses.”

The variability of a trait can be meaningfully spoken of as being due in certain proportions to genetic differences ("nature") or to environments ("nurture"). For highly penetrant Mendelian genetic disorders such as Huntington's disease, virtually all the incidence of the disease is due to genetic differences. Even so, animal models of Huntington's disease live much longer or shorter lives depending on how they are cared for.[citation needed]

At the other extreme, traits such as native language are environmentally determined: linguists have found that any child (if capable of learning a language at all) can learn any human language with equal facility.[21] With virtually all biological and psychological traits, however, genes and environment work in concert, communicating back and forth to create the individual.

At a molecular level, genes interact with signals from other genes and from the environment. While there are many thousands of single-gene-locus traits, so-called complex traits are due to the additive effects of many (often hundreds) of small gene effects. A good example of this is height, where variance appears to be spread across many hundreds of loci.[22]
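The additive model of a complex trait can be illustrated with a small simulation. This is a sketch under stated assumptions, not a model of height: the number of loci, the effect sizes and the allele frequencies are all hypothetical, loci are independent and in Hardy–Weinberg equilibrium, and environment is ignored. Under those assumptions a biallelic locus with effect β and allele frequency p contributes 2p(1 − p)β² to the trait variance, so with hundreds of small effects no single locus accounts for much of it.

```python
import random
import statistics


def simulate_polygenic(n_people: int, n_loci: int, seed: int = 0):
    """Simulate an additive trait: each person's score is the sum over loci of
    (effect size) x (number of effect alleles carried, 0 to 2)."""
    rng = random.Random(seed)
    # Hypothetical small effect sizes and allele frequencies
    effects = [rng.gauss(0.0, 1.0) / n_loci ** 0.5 for _ in range(n_loci)]
    freqs = [rng.uniform(0.1, 0.9) for _ in range(n_loci)]
    scores = []
    for _ in range(n_people):
        score = 0.0
        for beta, p in zip(effects, freqs):
            allele_count = (rng.random() < p) + (rng.random() < p)  # two alleles
            score += beta * allele_count
        scores.append(score)
    # Expected additive variance contributed by each locus: 2p(1-p) * beta^2
    per_locus_var = [2 * p * (1 - p) * beta ** 2 for beta, p in zip(effects, freqs)]
    return scores, per_locus_var


scores, per_locus_var = simulate_polygenic(n_people=2000, n_loci=400)
expected_var = sum(per_locus_var)
print(f"simulated variance: {statistics.pvariance(scores):.3f}")
print(f"expected variance:  {expected_var:.3f}")
print(f"largest single-locus share: {max(per_locus_var) / expected_var:.1%}")
```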

Extreme genetic or environmental conditions can predominate in rare circumstances. If a child is born mute due to a genetic mutation, it will not learn to speak any language regardless of the environment; similarly, someone who is practically certain to eventually develop Huntington's disease according to their genotype may die in an unrelated accident (an environmental event) long before the disease would manifest itself.

Steven Pinker likewise described several examples:[23]

- concrete behavioral traits that patently depend on content provided by the home or culture—which language one speaks, which religion one practices, which political party one supports—are not heritable at all. But traits that reflect the underlying talents and temperaments—how proficient with language a person is, how religious, how liberal or conservative—are partially heritable.

Heritability measures always refer to the degree of variation between individuals in a population. Because these statistics cannot be applied at the level of the individual, it would be incorrect to conclude from a heritability index of about 0.6 for personality that 60% of one's personality is obtained from one's parents and 40% from the environment. To see why, imagine that all humans were genetic clones. The heritability index for all traits would be zero, because all variability between clonal individuals must be due to environmental factors. And, contrary to erroneous interpretations of the heritability index, as societies become more egalitarian (everyone has more similar experiences), the heritability index goes up: as environments become more similar, more of the variability between individuals is due to genetic factors.
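Because heritability is a ratio of variances across a population, its behaviour in the two thought experiments above can be checked directly. A minimal sketch, assuming the simple decomposition V_P = V_G + V_E with no gene–environment correlation or interaction; the variance values are hypothetical:

```python
def heritability(var_genetic: float, var_environment: float) -> float:
    """Broad-sense heritability H^2 = V_G / (V_G + V_E), assuming no
    gene-environment covariance or interaction term."""
    total = var_genetic + var_environment
    if total <= 0:
        raise ValueError("total phenotypic variance must be positive")
    return var_genetic / total


# Hypothetical variance components (arbitrary units)
print(heritability(var_genetic=6.0, var_environment=4.0))  # baseline -> 0.6
print(heritability(var_genetic=0.0, var_environment=4.0))  # population of clones -> 0.0
print(heritability(var_genetic=6.0, var_environment=1.0))  # more similar environments -> about 0.86
```

None of these numbers says how much of a single person's trait "comes from" genes; the ratio changes here only because the spread of genotypes or of environments in the population changes.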

One should also take into account that heritability and environmentality are not precise quantities and vary within a chosen population and across cultures. It would be more accurate to state that the degree of heritability and environmentality is measured with reference to a particular phenotype in a chosen group of a population at a given period of time. The accuracy of the calculations is further limited by the number of variables taken into consideration, age being one such variable. The apparent influence of heritability and environmentality differs drastically across age groups: the older the subjects studied, the more noticeable the heritability factor becomes, and the younger the subjects, the more likely the results are to show a strong influence of environmental factors.

Some have pointed out that environmental inputs affect the expression of genes (see the article on epigenetics). This is one explanation of how environment can influence the extent to which a genetic disposition will actually manifest.[citation needed] The interactions of genes with environment, called gene–environment interactions, are another component of the nature–nurture debate. A classic example of gene–environment interaction is the ability of a diet low in the amino acid phenylalanine to partially suppress the genetic disease phenylketonuria. Yet another complication to the nature–nurture debate is the existence of gene–environment correlations. These correlations indicate that individuals with certain genotypes are more likely to find themselves in certain environments. Thus, it appears that genes can shape environments, through the selection or creation of those environments. Even using experiments like those described above, it can be very difficult to determine convincingly the relative contribution of genes and environment.
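A gene–environment interaction means that the effect of a genotype depends on the environment. One common way to express this is a difference-in-differences contrast over a 2 × 2 table of group means. The sketch below uses invented scores loosely inspired by the phenylketonuria example; the genotype labels, diet labels and numbers are all hypothetical.

```python
# Hypothetical mean impairment scores (arbitrary units) for each
# genotype x diet combination; the values are invented for illustration.
MEAN_SCORE = {
    ("typical", "standard_diet"): 0.0,
    ("typical", "low_phenylalanine"): 0.0,
    ("pku", "standard_diet"): 10.0,
    ("pku", "low_phenylalanine"): 1.0,
}


def genotype_effect(diet: str) -> float:
    """Difference between the PKU and typical genotypes within one diet."""
    return MEAN_SCORE[("pku", diet)] - MEAN_SCORE[("typical", diet)]


def interaction_contrast() -> float:
    """Difference-in-differences: zero would mean the genotype effect is the
    same on both diets, i.e. no interaction."""
    return genotype_effect("standard_diet") - genotype_effect("low_phenylalanine")


for diet in ("standard_diet", "low_phenylalanine"):
    print(diet, genotype_effect(diet))
print("interaction contrast:", interaction_contrast())
```

With an interaction like this, the question "how much of the impairment is due to genes?" has no single answer: the genotype's effect is large on one diet and small on the other.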

Studies of middle-aged twins reared together and reared apart, conducted by T. J. Bouchard Jr. and colleagues, have provided significant evidence for the importance of genes, and against a large role for the environment, in traits such as happiness. In the Minnesota Study of Twins Reared Apart, the correlation in well-being was actually higher for monozygotic twins reared apart (0.52) than for monozygotic twins reared together (0.44). Also highlighting the importance of genes, the monozygotic correlations were much higher than the dizygotic correlations, which were 0.08 for pairs reared together and −0.02 for pairs reared apart (Lykken & Tellegen, 1996).

Obligate vs. facultative adaptations

Traits may be considered to be adaptations (such as the umbilical cord), byproducts of adaptations (the belly button) or due to random variation (convex or concave belly button shape).[24] An alternative to contrasting nature and nurture focuses on "obligate vs. facultative" adaptations.[24] Adaptations may be generally more obligate (robust in the face of typical environmental variation) or more facultative (sensitive to typical environmental variation). For example, the rewarding sweet taste of sugar and the pain of bodily injury are obligate psychological adaptations: typical environmental variability during development does not much affect their operation.[25] On the other hand, facultative adaptations are somewhat like "if-then" statements.[26] An example of a facultative psychological adaptation may be adult attachment style. The attachment style of adults (for example, a "secure attachment style", the propensity to develop close, trusting bonds with others) is proposed to be conditional on whether an individual's early childhood caregivers could be trusted to provide reliable assistance and attention. An example of a facultative physiological adaptation is tanning of skin on exposure to sunlight (to prevent skin damage).

Advanced techniques

The power of quantitative studies of heritable traits has been expanded by the development of new techniques. Developmental genetic analysis examines the effects of genes over the course of a human lifespan. For example, early studies of intelligence, which mostly examined young children, measured heritability at 40–50%. Subsequent developmental genetic analyses found that variance attributable to additive environmental effects is less apparent in older individuals,[27][28][29] with the estimated heritability of IQ being higher in adulthood than in childhood.

Another advanced technique, multivariate genetic analysis, examines the genetic contribution to several traits that vary together. For example, multivariate genetic analysis has demonstrated that the genetic determinants of all specific cognitive abilities (e.g., memory, spatial reasoning, processing speed) overlap greatly, such that the genes associated with any specific cognitive ability will also affect the others. Similarly, multivariate genetic analysis has found that genes that affect scholastic achievement completely overlap with the genes that affect cognitive ability.

Extremes analysis examines the link between normal and pathological traits. For example, it is hypothesized that a given behavioral disorder may represent an extreme of a continuous distribution of a normal behavior, and hence an extreme of a continuous distribution of genetic and environmental variation. Depression, phobias, and reading disabilities have been examined in this context.
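One standard way to formalize the idea that a disorder is the extreme of a continuous distribution is the liability-threshold model, offered here as an illustration rather than as the method of the studies mentioned. Each person has an unobserved, normally distributed liability made of genetic and environmental parts and is counted as affected when that liability exceeds a threshold set by the disorder's prevalence. In the sketch the heritability of liability (0.5) and the prevalence (5%) are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def simulate_liability(n: int, h2: float, prevalence: float, seed: int = 1):
    """Return the genetic component of liability for affected and unaffected people."""
    rng = random.Random(seed)
    # Threshold on a standard-normal liability that yields the chosen prevalence
    threshold = NormalDist().inv_cdf(1.0 - prevalence)
    affected, unaffected = [], []
    for _ in range(n):
        g = rng.gauss(0.0, 1.0)  # standardized genetic value
        e = rng.gauss(0.0, 1.0)  # standardized environmental value
        liability = h2 ** 0.5 * g + (1.0 - h2) ** 0.5 * e  # variance 1
        (affected if liability > threshold else unaffected).append(g)
    return affected, unaffected


affected, unaffected = simulate_liability(n=100_000, h2=0.5, prevalence=0.05)
print(f"observed prevalence: {len(affected) / 100_000:.3f}")
print(f"mean genetic value, affected:   {fmean(affected):.2f}")
print(f"mean genetic value, unaffected: {fmean(unaffected):.2f}")
```

Affected people sit, on average, well above the population mean of the genetic value, yet the genetic values of the two groups overlap: the disorder appears as the tail of one continuous distribution rather than as a separate category.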

For a few highly heritable traits, some studies have identified loci associated with variance in that trait in some individuals. For example, research groups have identified loci that are associated with schizophrenia in subsets of patients with that diagnosis.[30]

Nature and nurture

"Give me a dozen healthy infants, well-formed, and my own specified world to bring them up in and I'll guarantee to take any one at random and train him to become any type of specialist I might select – doctor, lawyer, artist, merchant-chief and, yes, even beggar-man and thief, regardless of his talents, penchants, tendencies, abilities, vocations, and race of his ancestors." – John B. Watson

The nature side of this debate emphasizes how much of an organism reflects biological factors. Genes are activated at appropriate times during development and are the basis for protein production. Proteins include a wide range of molecules, such as hormones and enzymes, that act in the body as signaling and structural molecules to direct development. Regarding the influence of specific genes, variation in the promoter region of the serotonin transporter gene (5-HTTLPR) has been associated with life satisfaction, and this inherited so-called "happiness gene" has been described as promising evidence for the nature side of the debate (Jan-Emmanuel De Neve, 2010). The nurture side, on the other hand, emphasizes how much of an organism reflects environmental factors. In reality, it is most likely an interaction of both genes and environment, nature and nurture, that affects the development of a person. Even in the womb, genes interact with hormones in the environment to signal the start of a new developmental phase. The hormonal environment, likewise, does not act independently of the genes, and it cannot correct lethal errors in the genetic makeup of a fetus. The genes and the environment must be in sync for normal development. Similarly, even if a person has inherited genes for taller than average height, the person may not grow to be as tall as is genetically possible if proper nutrition is not provided. Here, too, the line between genetic and environmental influence is blurred.
It has been suggested that the key to understanding complex human behaviour and diseases is to study genes, the environment, and the interactions between the two equally.[31]

IQ debate

Evidence suggests that family environmental factors may have an effect upon childhood IQ, accounting for up to a quarter of the variance. The American Psychological Association's report "Intelligence: Knowns and Unknowns" (1995) states that there is no doubt that normal child development requires a certain minimum level of responsible care. Here the environment plays a role in a trait (intelligence) sometimes assumed to be purely genetic: severely deprived, neglectful, or abusive environments have been found to have highly negative effects on many aspects of children's intellectual development. Beyond that minimum, however, the role of family experience is in serious dispute. Moreover, the effect of shared family environment seen in childhood disappears by late adolescence, such that adoptive siblings no longer have similar IQ scores.[14] Adoption studies indicate that, by adulthood, adoptive siblings are no more similar in IQ than strangers (IQ correlation near zero), while full siblings show an IQ correlation of 0.6. Twin studies reinforce this pattern: monozygotic (identical) twins raised separately are highly similar in IQ (0.74), more so than dizygotic (fraternal) twins raised together (0.6) and much more than adoptive siblings (~0.0).[32] Recent adoption studies also found that supportive parents can have a positive effect on the development of their children.[33]

Personality traits

Personality is a frequently cited example of a heritable trait that has been studied in twins and adoptions. The best-known categorical organization of heritable personality traits was created by Goldberg (1990), who had college students rate their personalities on 1,400 dimensions and then narrowed these down to "the Big Five" factors of personality: openness, conscientiousness, extraversion, agreeableness, and neuroticism. The close genetic relationship between positive personality traits and happiness has been described as the mirror image of comorbidity in psychopathology (Kendler et al., 2006, 2007). These personality factors were consistent across cultures, and many experiments have also tested the heritability of these traits. Identical twins reared apart are far more similar in personality than randomly selected pairs of people. Likewise, identical twins are more similar than fraternal twins. Also, biological siblings are more similar in personality than adoptive siblings. Each observation suggests that personality is heritable to a certain extent. One study of the heritability of subjective well-being (estimated at around 50%) used a representative sample of 973 twin pairs and found that heritable differences in subjective well-being were fully accounted for by the genetic model of the Five-Factor Model's personality domains.[34] However, these same study designs allow for the examination of environment as well as genes. Adoption studies also directly measure the strength of shared family effects. Adopted siblings share only family environment. Most adoption studies indicate that by adulthood the personalities of adopted siblings are little or no more similar than random pairs of strangers. This suggests that shared family effects on personality are close to zero by adulthood.
As is the case with personality, non-shared environmental effects are often found to outweigh shared environmental effects. That is, environmental effects that are typically thought to be life-shaping (such as family life) may have less of an impact than non-shared effects, which are harder to identify. One possible source of non-shared effects is the environment of prenatal development. Random variations in the genetic program of development may be a substantial source of non-shared environment. These results suggest that "nurture" may not be the predominant factor in "environment". Environment and circumstances do affect our lives, but they do not determine the way in which we typically react to them. We come with preset personality traits that form the basis for how we react to situations. For example, extraverted prisoners become less happy than introverted prisoners and react to their incarceration more negatively because of their preset extraverted personality.[19]:Ch 19

Genetics

Genomics

The relationship between personality and people's own well-being is influenced and mediated by genes (Weiss, Bates, & Luciano, 2008). Researchers have found a stable set point for happiness that is characteristic of the individual and largely determined by the individual's genes. Happiness fluctuates around that set point (again, genetically determined) depending on whether good or bad things are happening to us ("nurture"), but in a normal human it fluctuates only by a small magnitude. The midpoint of these fluctuations is determined by the "great genetic lottery" that people are born with, which has led some researchers to conclude that how happy people feel at the moment or over time is simply due to the luck of the genetic draw. Nor were these differences explained by educational attainment, which accounted for less than 2% of the variance in well-being for women and less than 1% for men (Lykken & Tellegen, 1996).

With the advent of genomic sequencing, it has become possible to search for and identify specific gene polymorphisms that affect traits such as IQ and personality. These techniques work by tracking the association of differences in a trait of interest with differences in specific molecular markers or functional variants. An example of a visible human trait for which the precise genetic basis of differences is relatively well known is eye color. For traits with many genes affecting the outcome, a smaller portion of the variance is currently understood: for instance, known gene variants account for around 5–10% of the variance in height at present.[citation needed] Regarding the role of genetic heritability in one's level of happiness, it has been found that 44% to 52% of the variance in well-being is associated with genetic variation.
Based on retests of smaller samples of twins after 4.5 and 10 years, the heritability of the stable component of subjective well-being is estimated to approach 80% (Lykken & Tellegen, 1996). Other studies have found that genes account for a large share of the variance in happiness measures, around 35–50% (Roysamb et al., 2002; Stubbe et al., 2005; Nes et al., 2005, 2006).

Linkage and association studies

In their attempts to locate the genes responsible for configuring certain phenotypes, researchers use two different techniques. A linkage study helps determine the approximate location in the genome of a gene of interest. This methodology is applied only among individuals who are related and does not serve to pinpoint specific genes. It does, however, narrow down the search area, making it easier to locate the one or several genes in the genome that underlie a specific trait.

Association studies, on the other hand, are more hypothesis-driven and seek to verify whether a particular genetic variant really influences the phenotype of interest. Association studies commonly use a case-control approach, comparing how often the variant occurs in subjects who have the phenotype of interest (cases) with how often it occurs in subjects who do not (controls).
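For a case-control association study of a single biallelic variant, a common first analysis compares allele counts in cases and controls with a 2 × 2 Pearson chi-square test and an odds ratio. The sketch below is illustrative only: the counts are hypothetical, the function name is our own, and real studies typically also adjust for covariates such as ancestry and correct for the large number of variants tested.

```python
import math


def allelic_association(case_alt: int, case_ref: int, ctrl_alt: int, ctrl_ref: int):
    """Pearson chi-square (1 df, no continuity correction), its p-value and the
    allelic odds ratio for a 2x2 table of allele counts."""
    if min(case_alt, case_ref, ctrl_alt, ctrl_ref) <= 0:
        raise ValueError("all four allele counts must be positive")
    table = [[case_alt, case_ref], [ctrl_alt, ctrl_ref]]
    n = sum(map(sum, table))
    row_totals = [sum(r) for r in table]
    col_totals = [case_alt + ctrl_alt, case_ref + ctrl_ref]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n  # count expected under no association
            chi2 += (table[i][j] - expected) ** 2 / expected
    # Upper tail of a chi-square with 1 degree of freedom
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    odds_ratio = (case_alt * ctrl_ref) / (case_ref * ctrl_alt)
    return chi2, p_value, odds_ratio


# Hypothetical counts: 1,000 cases and 1,000 controls (2,000 alleles each)
chi2, p, odds = allelic_association(case_alt=700, case_ref=1300, ctrl_alt=560, ctrl_ref=1440)
print(f"chi-square = {chi2:.2f}, p = {p:.1e}, odds ratio = {odds:.2f}")
```

An odds ratio above 1 means the variant is more common among cases; on its own it says nothing about how much of the trait's variance the variant explains.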

Philosophical difficulties

Philosophical questions regarding nature and nurture include the question of the nature of the trait itself, questions of determinism, and whether the question is well posed. As well as asking whether a trait such as IQ is heritable, one can ask what it is about "intelligence" that is being inherited. Similarly, if in a broad set of environments genes account for almost all observed variation in a trait, then this raises the notion of genetic and/or biological determinism, and the question of which level of analysis is appropriate for the trait. Finally, as early as 1951, Calvin Hall[35] suggested that discussion opposing nature and nurture was fruitless. Environments may be varied in ways that affect development, and when they are, the heritability of the character changes too. Conversely, if the genetic composition of a population changes, then heritability may also change.

The example of phenylketonuria (PKU) is informative. Untreated, this is a completely penetrant genetic disorder causing brain damage and progressive mental retardation. PKU can be treated by the elimination of phenylalanine from the diet. Hence, a character (PKU) that used to have a virtually perfect heritability is not heritable any more if modern medicine is available (the actual allele causing PKU would still be inherited, but the phenotype PKU would no longer be expressed). It is useful then to think of what is inherited as a mechanism for breaking down phenylalanine. Separately from this, we can consider whether the organism has other mechanisms (for instance, a drug that breaks down this amino acid) or does not need the mechanism (due to dietary exclusion).

Similarly, within, say, an inbred strain of mice, no genetic variation is present and every character will have a zero heritability. If the complications of gene–environment interactions and correlations (see above) are added, then it appears to many that heritability, the epitome of the nature–nurture opposition, is "a station passed."[36]

A related concept is the view that the idea that either nature or nurture explains a creature's behavior is an example of the single cause fallacy.

Free will

Some believe that evolutionary explanations describe factors that limit our free will, in that they can be seen to imply that we behave in ways in which we are ‘naturally inclined’. J. Mizzoni wrote “There are some moral philosophers (such as Thomas Nagel) who believe that evolutionary considerations are irrelevant to a full understanding of the foundations of ethics. Other moral philosophers (such as J. L. Mackie) tell quite a different story. They hold that the admission of the evolutionary origins of human beings compels us to concede that there are no foundations for ethics.”[37] Critics of this ethical view point out that whether or not a behavioral trait is inherited does not affect whether it can be changed by one's culture or independent choice,[38] and that evolutionary inclinations could be discarded in ethical and political discussions regardless of whether they exist or not.[39]

Leda Cosmides and John Tooby noted that William James (1842–1910) argued that humans have more "instincts" than animals, and that greater freedom of action is the result of having more psychological instincts, not fewer.[40] Daniel C. Dennett explores this idea in his 2003 book Freedom Evolves.

In popular culture

The nature vs. nurture debate has come up in fiction in various ways. An obvious example of the debate can be seen in science-fiction stories involving cloning, where genetically identical people experience different lives growing up and are consequently shaped into different people despite beginning with the same potential. Examples include The Boys from Brazil, in which multiple clones of Hitler are created as part of a Nazi scientist's experiments to recreate the Führer by arranging for the clones to experience the same defining moments that Hitler did, and Star Trek: Nemesis, in which the film's villain is a clone of protagonist Captain Jean-Luc Picard who regularly 'defends' his actions by arguing that Picard would do the same thing if he had lived the clone's life (although Picard's crew argue that Picard has shown a desire to better himself from the beginning that the clone has never displayed). Other, more complex examples include the film Trading Places, in which two wealthy brothers make a bet over the debate by ruining the life of one of their employees and promoting a street bum to replace him to see what happens; the series Orphan Black, featuring multiple clones of one woman shaped in various ways by their upbringing (ranging from a cop to a con artist to a religious assassin); and the differences between the Marvel Comics characters Nate Grey and Cable, two people who are essentially alternate versions of each other from two different timelines, beginning with the same power potential but developing very differently afterwards because of Cable's military training, in contrast with Nate's reliance on his powers.

Implications in law

Recently, the nature versus nurture debate has entered the realm of law and criminal defense. In some cases, lawyers for violent offenders have begun to argue that an individual’s genes, rather than their rational decision-making processes, can cause criminal activity.[41] Early attempts to employ a genetic defense were concerned with XYY syndrome, a genetic abnormality in which men have a second Y chromosome. However, several critiques argue that XYY individuals are not predisposed to aggression or violence, discrediting the theory as a plausible criminal defense.[42] Evidence cited in support of the genetic defense includes H. G. Brunner's 1993 discovery of what is now known as Brunner syndrome, and a series of studies on mice. Proponents of the defense suggest that individuals cannot be held accountable for their genes and, as a result, should not be held responsible for their dispositions and resulting actions.[42] If a single gene mutation is the reason for aggressive behaviour, there is a possibility that aggressive offenders would have a genetic excuse for their crimes.[42] Genetic determinism has been debated for its plausibility of use in the judicial system for some time, yet "the use of a 'genetic defense' in the courtroom is a fairly new phenomenon."[42] In the United States, genetic evidence has been used in court defense and has succeeded in reducing sentences for violent offenders, because "certain predispositions may reduce the blameworthiness of the offender."[42]

Canadian judge Justice McLachlin wrote, "I can find no support in criminal theory for the conclusion that protection of the morally innocent requires a general consideration of individual excusing conditions. The principle comes into play only at the point where the person is shown to lack the capacity to appreciate the nature and quality or consequences of his or her acts."[43]

Several mitigating factors relevant to genetics, such as impairment of judgment and a disadvantaged background, already shape the purposes and principles of sentencing. A 'genetic predisposition to violence' could similarly fall under statute 718c in the USA as a mitigating factor in crime if the science behind genetic determinants is found to be conclusive.[42] However, Duke University researcher Laura Baker disagrees, as "although genes may increase the propensity for criminality, for example, they do not determine it."[43]