Metadata (or metainformation) is "data that provides information about other data", but not the content of the data, such as the text of a message or the image itself. There are many distinct types of metadata, including:

- Descriptive metadata – the descriptive information about a resource. It is used for discovery and identification. It includes elements such as title, abstract, author, and keywords.

- Structural metadata – metadata about containers of data that indicates how compound objects are put together, for example, how pages are ordered to form chapters. It describes the types, versions, relationships, and other characteristics of digital materials.

- Administrative metadata – the information to help manage a resource, like resource type, permissions, and when and how it was created.

- Reference metadata – the information about the contents and quality of statistical data.

- Statistical metadata – also called process data; it may describe processes that collect, process, or produce statistical data.

- Legal metadata – provides information about the creator, copyright holder, and public licensing, if provided.

Metadata is not strictly bound to one of these categories, as it can describe a piece of data in many other ways.

History

Metadata has various purposes. It can help users find relevant information and discover resources. It can also help organize electronic resources, provide digital identification, and archive and preserve resources. Metadata allows users to access resources by "allowing resources to be found by relevant criteria, identifying resources, bringing similar resources together, distinguishing dissimilar resources, and giving location information". Metadata of telecommunication activities including Internet traffic is very widely collected by various national governmental organizations. This data is used for the purposes of traffic analysis and can be used for mass surveillance.

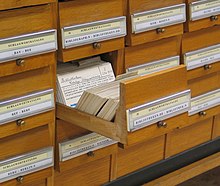

Metadata was traditionally used in the card catalogs of libraries until the 1980s when libraries converted their catalog data to digital databases. In the 2000s, as data and information were increasingly stored digitally, this digital data was described using metadata standards.

The first description of "meta data" for computer systems is purportedly noted by MIT's Center for International Studies experts David Griffel and Stuart McIntosh in 1967: "In summary then, we have statements in an object language about subject descriptions of data and token codes for the data. We also have statements in a meta language describing the data relationships and transformations, and ought/is relations between norm and data."

Unique metadata standards exist for different disciplines (e.g., museum collections, digital audio files, websites, etc.). Describing the contents and context of data or data files increases its usefulness. For example, a web page may include metadata specifying what software language the page is written in (e.g., HTML), what tools were used to create it, what subjects the page is about, and where to find more information about the subject. This metadata can automatically improve the reader's experience and make it easier for users to find the web page online. A CD may include metadata providing information about the musicians, singers, and songwriters whose work appears on the disc.

In many countries, government organizations routinely store metadata about emails, telephone calls, web pages, video traffic, IP connections, and cell phone locations.

Definition

Metadata means "data about data". Metadata is defined as the data providing information about one or more aspects of the data; it is used to summarize basic information about data that can make tracking and working with specific data easier. Some examples include:

- Means of creation of the data

- Purpose of the data

- Time and date of creation

- Creator or author of the data

- Location on a computer network where the data was created

- Standards used

- File size

- Data quality

- Source of the data

- Process used to create the data

For example, a digital image may include metadata that describes the size of the image, its color depth, resolution, when it was created, the shutter speed, and other data. A text document's metadata may contain information about how long the document is, who the author is, when the document was written, and a short summary of the document. Metadata within web pages can also contain descriptions of page content, as well as keywords linked to the content. These links are often called "metatags", and they were the primary factor in determining the order of web search results until the late 1990s. Search engines reduced their reliance on metatags in the late 1990s because of "keyword stuffing", in which metatags were misused to trick search engines into treating some websites as more relevant to a search than they really were.
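As a minimal sketch of how the metatags described above can be read from a web page, the following Python example uses the standard library's `html.parser` module. The sample page, the class name `MetaTagParser`, and the function name `extract_meta_tags` are illustrative assumptions and do not come from any particular website or tool.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collect name/content pairs from <meta> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        # Only <meta> elements that carry both a name and a content
        # attribute are treated as descriptive metatags here.
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        content = attributes.get("content")
        if name and content is not None:
            self.meta[name.lower()] = content


def extract_meta_tags(html_text):
    """Return a dict mapping metatag names to their content strings."""
    parser = MetaTagParser()
    parser.feed(html_text)
    return parser.meta


# Hypothetical page used only for illustration.
SAMPLE_PAGE = """
<html>
  <head>
    <title>Topology for beginners</title>
    <meta name="description" content="An introduction to topology.">
    <meta name="keywords" content="topology, mathematics, geometry">
    <meta charset="utf-8">
  </head>
  <body><p>Page content goes here.</p></body>
</html>
"""

if __name__ == "__main__":
    for name, content in extract_meta_tags(SAMPLE_PAGE).items():
        print(f"{name}: {content}")
```

The parser records only the metadata in the page head; the body text, which is the content itself, is ignored.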

Metadata can be stored and managed in a database, often called a metadata registry or metadata repository. However, without context and a point of reference, it might be impossible to identify metadata just by looking at it. For example, a database containing several numbers, all 13 digits long, could by itself be the results of calculations or a list of numbers to plug into an equation; without any other context, the numbers themselves can be perceived as the data. But given the context that this database is a log of a book collection, those 13-digit numbers may now be identified as ISBNs: information that refers to the book, but is not itself the information within the book.

The term "metadata" was coined in 1968 by Philip Bagley in his book "Extension of Programming Language Concepts", where it is clear that he uses the term in the ISO 11179 "traditional" sense, which is "structural metadata", i.e. "data about the containers of data", rather than the alternative sense "content about individual instances of data content" or metacontent, the type of data usually found in library catalogs. Since then the fields of information management, information science, information technology, librarianship, and GIS have widely adopted the term. In these fields, the word metadata is defined as "data about data". While this is the generally accepted definition, various disciplines have adopted their own more specific explanations and uses of the term.
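The ISBN example above can be sketched in Python. The check below applies the ISBN-13 check-digit rule (digits weighted alternately by 1 and 3, with the total divisible by 10). The function names and the `context` parameter are hypothetical, and passing the check only suggests that a number could be an ISBN; it is the surrounding context that justifies reading it as one.

```python
def is_valid_isbn13(number):
    """Return True if a 13-character digit string passes the ISBN-13 check."""
    if len(number) != 13 or not (number.isascii() and number.isdigit()):
        return False
    # Weights alternate 1, 3, 1, 3, ... across all 13 digits.
    total = sum(int(d) * (1 if i % 2 == 0 else 3) for i, d in enumerate(number))
    return total % 10 == 0


def describe(number, context=None):
    """Interpret a 13-digit number, using context when it is available."""
    # Without context the value is just a number; with the (hypothetical)
    # "book catalog" context it can be read as metadata about a book.
    if context == "book catalog" and is_valid_isbn13(number):
        return f"{number} is an ISBN identifying a book"
    return f"{number} is an uninterpreted 13-digit number"


if __name__ == "__main__":
    value = "9780306406157"
    print(describe(value))
    print(describe(value, context="book catalog"))
```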

Slate reported in 2013 that the United States government's interpretation of "metadata" could be broad, and might include message content such as the subject lines of emails.

Types

While the metadata application is manifold, covering a large variety of fields, there are specialized and well-accepted models to specify types of metadata. Bretherton & Singley (1994) distinguish between two distinct classes: structural/control metadata and guide metadata. Structural metadata describes the structure of database objects such as tables, columns, keys and indexes. Guide metadata helps humans find specific items and is usually expressed as a set of keywords in a natural language. According to Ralph Kimball, metadata can be divided into three categories: technical metadata (or internal metadata), business metadata (or external metadata), and process metadata.
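Structural metadata in the database sense described above (tables, columns, keys, and indexes) can be inspected directly in many database systems. The following is a minimal sketch using Python's built-in `sqlite3` module and SQLite's `PRAGMA table_info` and `PRAGMA index_list` statements. The `books` table, the index name, and the helper function names are assumptions made for illustration.

```python
import sqlite3


def build_example_db():
    """Create an in-memory database with one hypothetical table and index."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE books (isbn TEXT PRIMARY KEY, title TEXT NOT NULL, year INTEGER)"
    )
    conn.execute("CREATE INDEX idx_books_year ON books (year)")
    return conn


def describe_table(conn, table_name):
    """Return (column name, declared type, is primary key) for each column.

    table_name is interpolated into the statement, so it must come from a
    trusted source.
    """
    # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
    rows = conn.execute(f"PRAGMA table_info({table_name})").fetchall()
    return [(row[1], row[2], bool(row[5])) for row in rows]


def list_indexes(conn, table_name):
    """Return the names of the indexes defined on a table."""
    # The second field of each PRAGMA index_list row is the index name.
    rows = conn.execute(f"PRAGMA index_list({table_name})").fetchall()
    return [row[1] for row in rows]


if __name__ == "__main__":
    connection = build_example_db()
    for column in describe_table(connection, "books"):
        print(column)
    print(list_indexes(connection, "books"))
```

None of this output describes any particular book; it describes only the container in which book records would be stored.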

NISO distinguishes three types of metadata: descriptive, structural, and administrative. Descriptive metadata is typically used for discovery and identification, as information to search and locate an object, such as title, authors, subjects, keywords, and publisher. Structural metadata describes how the components of an object are organized. An example of structural metadata would be how pages are ordered to form chapters of a book. Finally, administrative metadata gives information to help manage the source. Administrative metadata refers to the technical information, such as file type, or when and how the file was created. Two sub-types of administrative metadata are rights management metadata and preservation metadata. Rights management metadata explains intellectual property rights, while preservation metadata contains information to preserve and save a resource.

Statistical data repositories have their own requirements for metadata in order to describe not only the source and quality of the data but also what statistical processes were used to create the data, which is of particular importance to the statistical community in order to both validate and improve the process of statistical data production.

An additional type of metadata beginning to be more developed is accessibility metadata. Accessibility metadata is not a new concept to libraries; however, advances in universal design have raised its profile. Projects like Cloud4All and GPII identified the lack of common terminologies and models to describe the needs and preferences of users and information that fits those needs as a major gap in providing universal access solutions. Those types of information are accessibility metadata. Schema.org has incorporated several accessibility properties based on IMS Global Access for All Information Model Data Element Specification. The Wiki page WebSchemas/Accessibility lists several properties and their values. While the efforts to describe and standardize the varied accessibility needs of information seekers are beginning to become more robust, their adoption into established metadata schemas has not been as developed. For example, while Dublin Core (DC)'s "audience" and MARC 21's "reading level" could be used to identify resources suitable for users with dyslexia and DC's "format" could be used to identify resources available in braille, audio, or large print formats, there is more work to be done.

Structures

Metadata (metacontent) or, more correctly, the vocabularies used to assemble metadata (metacontent) statements, is typically structured according to a standardized concept using a well-defined metadata scheme, including metadata standards and metadata models. Tools such as controlled vocabularies, taxonomies, thesauri, data dictionaries, and metadata registries can be used to apply further standardization to the metadata. Structural metadata commonality is also of paramount importance in data model development and in database design.

Syntax

Metadata (metacontent) syntax refers to the rules created to structure the fields or elements of metadata (metacontent). A single metadata scheme may be expressed in a number of different markup or programming languages, each of which requires a different syntax. For example, Dublin Core may be expressed in plain text, HTML, XML, and RDF.
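To illustrate how one scheme can be expressed in more than one syntax, the sketch below serializes the same small record both as HTML `meta` elements and as XML. The record values and function names are hypothetical. The `DC.` name prefix is a common convention for embedding Dublin Core in HTML, and the namespace URI is the one used for the Dublin Core element set; neither output is claimed to be a complete or validated encoding.

```python
import xml.etree.ElementTree as ET
from html import escape

DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC_NS)

# Hypothetical record using three Dublin Core element names.
RECORD = {
    "title": "Introduction to Topology",
    "creator": "A. Author",
    "subject": "Topology",
}


def to_html_meta(record):
    """Express the record as HTML <meta> elements, one per line."""
    return "\n".join(
        f'<meta name="DC.{element}" content="{escape(value, quote=True)}">'
        for element, value in record.items()
    )


def to_xml(record):
    """Express the same record as namespaced XML elements."""
    root = ET.Element("metadata")
    for element, value in record.items():
        child = ET.SubElement(root, f"{{{DC_NS}}}{element}")
        child.text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(to_html_meta(RECORD))
    print(to_xml(RECORD))
```

The element names and values are identical in both outputs; only the rules for writing them down differ.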

A common example of (guide) metacontent is the bibliographic classification, the subject, the Dewey Decimal class number. There is always an implied statement in any "classification" of some object. To classify an object as, for example, Dewey class number 514 (Topology) (i.e. books having the number 514 on their spine) the implied statement is: "<book><subject heading><514>". This is a subject-predicate-object triple, or more importantly, a class-attribute-value triple. The first 2 elements of the triple (class, attribute) are pieces of some structural metadata having a defined semantic. The third element is a value, preferably from some controlled vocabulary, some reference (master) data. The combination of the metadata and master data elements results in a statement which is a metacontent statement i.e. "metacontent = metadata + master data". All of these elements can be thought of as "vocabulary". Both metadata and master data are vocabularies that can be assembled into metacontent statements. There are many sources of these vocabularies, both meta and master data: UML, EDIFACT, XSD, Dewey/UDC/LoC, SKOS, ISO-25964, Pantone, Linnaean Binomial Nomenclature, etc. Using controlled vocabularies for the components of metacontent statements, whether for indexing or finding, is endorsed by ISO 25964: "If both the indexer and the searcher are guided to choose the same term for the same concept, then relevant documents will be retrieved." This is particularly relevant when considering search engines of the internet, such as Google. The process indexes pages and then matches text strings using its complex algorithm; there is no intelligence or "inferencing" occurring, just the illusion thereof.
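The class-attribute-value triple described above can be sketched as a small data structure. In this Python example the `SCHEMA` dictionary stands in for structural metadata and `DEWEY_CLASSES` for master data. Both are tiny illustrative excerpts, and all names are assumptions rather than part of any published schema.

```python
from typing import NamedTuple

# Structural metadata: the classes and attributes that statements may use.
SCHEMA = {"book": {"subject heading", "title"}}

# Master (reference) data: a small excerpt of Dewey Decimal class numbers.
DEWEY_CLASSES = {"510": "Mathematics", "514": "Topology", "516": "Geometry"}


class Statement(NamedTuple):
    resource_class: str  # structural metadata, e.g. "book"
    attribute: str       # structural metadata, e.g. "subject heading"
    value: str           # master data, e.g. "514"


def make_subject_statement(resource_class, dewey_number):
    """Build a class-attribute-value statement, rejecting unknown terms."""
    attribute = "subject heading"
    if attribute not in SCHEMA.get(resource_class, set()):
        raise ValueError(f"{resource_class!r} has no attribute {attribute!r}")
    if dewey_number not in DEWEY_CLASSES:
        raise ValueError(f"{dewey_number!r} is not in the controlled vocabulary")
    return Statement(resource_class, attribute, dewey_number)


def render(statement):
    """Format a statement in the angle-bracket notation used in the text."""
    return f"<{statement.resource_class}><{statement.attribute}><{statement.value}>"


if __name__ == "__main__":
    stmt = make_subject_statement("book", "514")
    print(render(stmt), "means", DEWEY_CLASSES[stmt.value])
```

Restricting the third element to values from the controlled vocabulary is what lets an indexer and a searcher arrive at the same term for the same concept.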

Hierarchical, linear, and planar schemata

Metadata schemata can be hierarchical in nature, where relationships exist between metadata elements and elements are nested so that parent-child relationships exist between them. An example of a hierarchical metadata schema is the IEEE LOM schema, in which metadata elements may belong to a parent metadata element. Metadata schemata can also be one-dimensional, or linear, where each element is completely discrete from other elements and classified according to one dimension only. An example of a linear metadata schema is the Dublin Core schema, which is one-dimensional. Metadata schemata are often two-dimensional, or planar, where each element is completely discrete from other elements but classified according to two orthogonal dimensions.
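The difference between a linear and a hierarchical schema can be sketched with plain Python dictionaries. The records below are hypothetical: the flat one uses Dublin Core style element names, and the nested one is only loosely modeled on the idea of grouping elements under parent categories, not on the actual IEEE LOM element set.

```python
# Linear: every element sits at the same level.
LINEAR_RECORD = {
    "title": "Intro to Topology",
    "creator": "A. Author",
    "format": "text/html",
}

# Hierarchical: elements are nested under parent elements.
HIERARCHICAL_RECORD = {
    "general": {"title": "Intro to Topology", "language": "en"},
    "technical": {"format": "text/html", "size": 20480},
}


def flatten(nested, prefix=""):
    """Turn nested elements into dotted paths that show parent-child links."""
    flat = {}
    for key, value in nested.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


if __name__ == "__main__":
    print(flatten(LINEAR_RECORD))
    print(flatten(HIERARCHICAL_RECORD))
```

Flattening leaves the linear record unchanged, while each element of the hierarchical record gains a path that names its parent.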

Granularity

The degree to which the data or metadata is structured is referred to as "granularity", that is, how much detail is provided. Metadata with a high granularity allows for deeper, more detailed, and more structured information and enables a greater level of technical manipulation. A lower level of granularity means that metadata can be created for considerably lower costs but will not provide as detailed information. Granularity affects not only the cost of creation and capture but, even more, the cost of maintenance. As soon as the metadata structures become outdated, so too does the access to the referred data. Hence granularity must take into account the effort to create the metadata as well as the effort to maintain it.

Hypermapping

In all cases where the metadata schemata exceed the planar depiction, some type of hypermapping is required to enable display and view of metadata according to chosen aspect and to serve special views. Hypermapping frequently applies to layering of geographical and geological information overlays.

Standards

International standards apply to metadata. Much work is being accomplished in the national and international standards communities, especially ANSI (American National Standards Institute) and ISO (International Organization for Standardization), to reach a consensus on standardizing metadata and registries. The core metadata registry standard is ISO/IEC 11179 Metadata Registries (MDR); the framework for the standard is described in ISO/IEC 11179-1:2004. A new edition of Part 1 is in its final stage for publication in 2015 or early 2016. It has been revised to align with the current edition of Part 3, ISO/IEC 11179-3:2013, which extends the MDR to support the registration of Concept Systems. This standard specifies a schema for recording both the meaning and technical structure of the data for unambiguous usage by humans and computers.

The ISO/IEC 11179 standard refers to metadata as information objects about data, or "data about data". In ISO/IEC 11179 Part 3, the information objects are data about Data Elements, Value Domains, and other reusable semantic and representational information objects that describe the meaning and technical details of a data item. This standard also prescribes the details for a metadata registry, and for registering and administering the information objects within a Metadata Registry. ISO/IEC 11179 Part 3 also has provisions for describing compound structures that are derivations of other data elements, for example through calculations, collections of one or more data elements, or other forms of derived data.

While this standard originally described itself as a "data element" registry, its purpose is to support describing and registering metadata content independently of any particular application, so that the descriptions can be discovered and reused by humans or computers in developing new applications or databases, or in analyzing data collected in accordance with the registered metadata content. This standard has become the general basis for other kinds of metadata registries, which reuse and extend the registration and administration portion of the standard.

The Geospatial community has a tradition of specialized geospatial metadata standards, particularly building on traditions of map- and image-libraries and catalogs. Formal metadata is usually essential for geospatial data, as common text-processing approaches are not applicable.

The Dublin Core metadata terms are a set of vocabulary terms that can be used to describe resources for the purposes of discovery. The original set of 15 classic metadata terms, known as the Dublin Core Metadata Element Set, is endorsed in the following standards documents:

- IETF RFC 5013

- ISO Standard 15836:2009

- NISO Standard Z39.85.

The W3C Data Catalog Vocabulary (DCAT) is an RDF vocabulary that supplements Dublin Core with classes for Dataset, Data Service, Catalog, and Catalog Record. DCAT also uses elements from FOAF, PROV-O, and OWL-Time. DCAT provides an RDF model to support the typical structure of a catalog that contains records, each describing a dataset or service.

Microformat (also mentioned in the section metadata on the internet below) is a web-based approach to semantic markup which seeks to re-use existing HTML/XHTML tags to convey metadata. Microformat follows XHTML and HTML standards but is not a standard in itself. One advocate of microformats, Tantek Çelik, characterized a problem with alternative approaches:

"Here's a new language we want you to learn, and now you need to output these additional files on your server. It's a hassle. (Microformats) lower the barrier to entry."

Use

Photographs

Metadata may be written into a digital photo file to identify who owns it, copyright and contact information, and what brand or model of camera created the file, along with exposure information (shutter speed, f-stop, etc.) and descriptive information, such as keywords about the photo, making the file or image searchable on a computer or the Internet. Some metadata is created by the camera, such as color space, color channels, exposure time, and aperture (EXIF), while some is input by the photographer or by software after downloading to a computer. Most digital cameras write metadata about the model number, shutter speed, etc., and some allow it to be edited; this functionality has been available on most Nikon DSLRs since the Nikon D3, on most new Canon cameras since the Canon EOS 7D, and on most Pentax DSLRs since the Pentax K-3. Metadata can be used to make organizing in post-production easier with the use of keywording. Filters can be used to analyze a specific set of photographs and create selections on criteria like rating or capture time. On devices with geolocation capabilities like GPS (smartphones in particular), the location the photo was taken from may also be included.
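Filtering photographs by rating, capture time, or keyword can be sketched as follows. The `PhotoMetadata` fields, the sample library, and the camera names are hypothetical; real applications would typically read these values from the Exif, IPTC, or XMP data embedded in the files rather than define them by hand.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class PhotoMetadata:
    filename: str
    camera_model: str
    captured_at: datetime
    rating: int = 0
    keywords: list = field(default_factory=list)


def select_photos(photos, min_rating=0, captured_after=None, keyword=None):
    """Return the photos whose metadata satisfies every given criterion."""
    selected = []
    for photo in photos:
        if photo.rating < min_rating:
            continue
        if captured_after is not None and photo.captured_at <= captured_after:
            continue
        if keyword is not None and keyword not in photo.keywords:
            continue
        selected.append(photo)
    return selected


# Hypothetical library used only for illustration.
LIBRARY = [
    PhotoMetadata("img_001.jpg", "Example Cam A", datetime(2023, 5, 1, 9, 30), 4, ["harbor", "sunrise"]),
    PhotoMetadata("img_002.jpg", "Example Cam A", datetime(2023, 5, 1, 18, 5), 2, ["harbor"]),
    PhotoMetadata("img_003.jpg", "Example Cam B", datetime(2023, 6, 12, 14, 0), 5, ["portrait"]),
]

if __name__ == "__main__":
    for photo in select_photos(LIBRARY, min_rating=4):
        print(photo.filename, photo.rating)
```

The selection never opens the image data itself; it works entirely on the metadata.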

Photographic metadata standards are developed and maintained by a number of organizations. They include, but are not limited to:

- IPTC Information Interchange Model IIM (International Press Telecommunications Council)

- IPTC Core Schema for XMP

- XMP – Extensible Metadata Platform (an ISO standard)

- Exif – Exchangeable image file format, maintained by CIPA (Camera & Imaging Products Association) and published by JEITA (Japan Electronics and Information Technology Industries Association)

- Dublin Core (Dublin Core Metadata Initiative – DCMI)

- PLUS (Picture Licensing Universal System)

- VRA Core (Visual Resource Association)

Telecommunications

Information on the times, origins and destinations of phone calls, electronic messages, instant messages, and other modes of telecommunication, as opposed to message content, is another form of metadata. Bulk collection of this call detail record metadata by intelligence agencies has proven controversial after disclosures by Edward Snowden that certain intelligence agencies such as the NSA had been (and perhaps still are) keeping online metadata on millions of internet users for up to a year, regardless of whether or not they were ever persons of interest to the agency.

Video

Metadata is particularly useful in video, where information about its contents (such as transcripts of conversations and text descriptions of its scenes) is not directly understandable by a computer, but where an efficient search of the content is desirable. It is especially valuable in video applications such as Automatic Number Plate Recognition and Vehicle Recognition Identification software, in which license plate data is saved and used to create reports and alerts. Video metadata is derived from two sources: (1) operationally gathered metadata, that is, information about the content produced, such as the type of equipment, software, date, and location; and (2) human-authored metadata, which improves search engine visibility, discoverability, and audience engagement, and provides advertising opportunities to video publishers. Today most professional video editing software has access to metadata. Avid's MetaSync and Adobe's Bridge are two prime examples of this.

Geospatial metadata

Geospatial metadata relates to Geographic Information Systems (GIS) files, maps, images, and other data that is location-based. Metadata is used in GIS to document the characteristics and attributes of geographic data, such as database files and data that is developed within a GIS. It includes details like who developed the data, when it was collected, how it was processed, and what formats it is available in, and so provides the context needed for the data to be used effectively.

Creation

Metadata can be created either by automated information processing or by manual work. Elementary metadata captured by computers can include information about when an object was created, who created it, when it was last updated, file size, and file extension. In this context an object refers to any of the following:

- A physical item such as a book, CD, DVD, a paper map, chair, table, flower pot, etc.

- An electronic file such as a digital image, digital photo, electronic document, program file, database table, etc.
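For an electronic file, the elementary metadata that a computer records automatically can be read with Python's standard library, as in the minimal sketch below. The function name and the returned field names are assumptions. Creation time and owner are omitted because how they are exposed varies between operating systems.

```python
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def elementary_metadata(path):
    """Return basic metadata the file system keeps about a file."""
    path = Path(path)
    info = path.stat()
    return {
        "name": path.name,
        "extension": path.suffix,
        "size_bytes": info.st_size,
        # st_mtime is the time of the last modification of the contents.
        "last_modified": datetime.fromtimestamp(info.st_mtime, tz=timezone.utc),
    }


if __name__ == "__main__":
    # Create a throwaway file so the example runs anywhere.
    with tempfile.TemporaryDirectory() as directory:
        sample = Path(directory) / "note.txt"
        sample.write_text("The content of the file is not metadata.")
        for key, value in elementary_metadata(sample).items():
            print(f"{key}: {value}")
```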

A metadata engine collects, stores and analyzes information about data and metadata (data about data) in use within a domain.

Data virtualization

Data virtualization emerged in the 2000s as a new software technology to complete the virtualization "stack" in the enterprise. Metadata is used in data virtualization servers, which are enterprise infrastructure components alongside database and application servers. Metadata in these servers is saved in a persistent repository and describes business objects in various enterprise systems and applications. Structural metadata commonality is also important to support data virtualization.

Statistics and census services

Standardization and harmonization work has brought advantages to industry efforts to build metadata systems in the statistical community. Several metadata guidelines and standards such as the European Statistics Code of Practice and ISO 17369:2013 (Statistical Data and Metadata Exchange or SDMX) provide key principles for how businesses, government bodies, and other entities should manage statistical data and metadata. Entities such as Eurostat, European System of Central Banks, and the U.S. Environmental Protection Agency have implemented these and other such standards and guidelines with the goal of improving "efficiency when managing statistical business processes".

Library and information science

Metadata has been used in various ways as a means of cataloging items in libraries in both digital and analog formats. Such data helps classify, aggregate, identify, and locate a particular book, DVD, magazine, or any object a library might hold in its collection. Until the 1980s, many library catalogs used 3x5 inch cards in file drawers to display a book's title, author, subject matter, and an abbreviated alphanumeric string (call number) which indicated the physical location of the book within the library's shelves. The Dewey Decimal System employed by libraries for the classification of library materials by subject is an early example of metadata usage. The early paper catalog card carried information about the item it described: title, author, subject, and a number indicating where to find the item. Beginning in the 1980s and 1990s, many libraries replaced these paper file cards with computer databases. These computer databases make it much easier and faster for users to do keyword searches.

Another form of older metadata collection is the use by the US Census Bureau of what is known as the "Long Form". The Long Form asks questions that are used to create demographic data to find patterns of distribution.

Libraries employ metadata in library catalogues, most commonly as part of an Integrated Library Management System (ILMS). Metadata is obtained by cataloging resources such as books, periodicals, DVDs, web pages or digital images. This data is stored in the ILMS using the MARC metadata standard. The purpose is to direct patrons to the physical or electronic location of the items or areas they seek, as well as to provide a description of the items in question.

More recent and specialized instances of library metadata include the establishment of digital libraries including e-print repositories and digital image libraries. While often based on library principles, the focus on non-librarian use, especially in providing metadata, means they do not follow traditional or common cataloging approaches. Given the custom nature of included materials, metadata fields are often specially created e.g. taxonomic classification fields, location fields, keywords, or copyright statement. Standard file information such as file size and format are usually automatically included. Library operation has for decades been a key topic in efforts toward international standardization. Standards for metadata in digital libraries include Dublin Core, METS, MODS, DDI, DOI, URN, PREMIS schema, EML, and OAI-PMH. Leading libraries in the world give hints on their metadata standards strategies. The use and creation of metadata in library and information science also include scientific publications:

Science

Metadata for scientific publications is often created by journal publishers and citation databases such as PubMed and Web of Science. The data contained within manuscripts or accompanying them as supplementary material is less often subject to metadata creation, though it may be submitted to, for example, biomedical databases after publication. The original authors and database curators then become responsible for metadata creation, with the assistance of automated processes. Comprehensive metadata for all experimental data is the foundation of the FAIR Guiding Principles, or the standards for ensuring research data are findable, accessible, interoperable, and reusable.

Such metadata can then be utilized, complemented, and made accessible in useful ways. OpenAlex is a free online index of over 200 million scientific documents that integrates and provides metadata such as sources, citations, author information, scientific fields, and research topics. Its API and open source website can be used for metascience, scientometrics, and novel tools that query this semantic web of papers. Another project under development, Scholia, uses the metadata of scientific publications for various visualizations and aggregation features such as providing a simple user interface summarizing literature about a specific feature of the SARS-CoV-2 virus using Wikidata's "main subject" property.

In research labor, transparent metadata about authors' contributions to works has been proposed, e.g. the role played in the production of the paper, the level of contribution, and the responsibilities.

Moreover, various metadata about scientific outputs can be created or complemented – for instance, scite.ai attempts to track and link citations of papers as 'Supporting', 'Mentioning' or 'Contrasting' the study. Other examples include developments of alternative metrics – which, beyond providing help for assessment and findability, also aggregate many of the public discussions about a scientific paper on social media such as Reddit, citations on Wikipedia, and reports about the study in the news media – and a call for showing whether or not the original findings are confirmed or could get reproduced.

Museums

Metadata in a museum context is the information that trained cultural documentation specialists, such as archivists, librarians, museum registrars and curators, create to index, structure, describe, identify, or otherwise specify works of art, architecture, cultural objects and their images. Descriptive metadata is most commonly used in museum contexts for object identification and resource recovery purposes.

Usage

Metadata is developed and applied within collecting institutions and museums in order to:

- Facilitate resource discovery and execute search queries.

- Create digital archives that store information relating to various aspects of museum collections and cultural objects, and serve archival and managerial purposes.

- Provide public audiences access to cultural objects through publishing digital content online.

Standards

Many museums and cultural heritage centers recognize that given the diversity of artworks and cultural objects, no single model or standard suffices to describe and catalog cultural works. For example, a sculpted Indigenous artifact could be classified as an artwork, an archaeological artifact, or an Indigenous heritage item. The early stages of standardization in archiving, description and cataloging within the museum community began in the late 1990s with the development of standards such as Categories for the Description of Works of Art (CDWA), Spectrum, CIDOC Conceptual Reference Model (CRM), Cataloging Cultural Objects (CCO) and the CDWA Lite XML schema. These standards use HTML and XML markup languages for machine processing, publication and implementation. The Anglo-American Cataloguing Rules (AACR), originally developed for characterizing books, have also been applied to cultural objects, works of art and architecture. Standards, such as the CCO, are integrated within a Museum's Collections Management System (CMS), a database through which museums are able to manage their collections, acquisitions, loans and conservation. Scholars and professionals in the field note that the "quickly evolving landscape of standards and technologies" creates challenges for cultural documentarians, specifically non-technically trained professionals. Most collecting institutions and museums use a relational database to categorize cultural works and their images. Relational databases and metadata work to document and describe the complex relationships amongst cultural objects and multi-faceted works of art, as well as between objects and places, people, and artistic movements. Relational database structures are also beneficial within collecting institutions and museums because they allow for archivists to make a clear distinction between cultural objects and their images; an unclear distinction could lead to confusing and inaccurate searches.

Cultural objects

An object's materiality, function, and purpose, as well as the size (e.g., measurements, such as height, width, weight), storage requirements (e.g., climate-controlled environment), and focus of the museum and collection, influence the descriptive depth of the data attributed to the object by cultural documentarians. The established institutional cataloging practices, goals, and expertise of cultural documentarians and database structure also influence the information ascribed to cultural objects and the ways in which cultural objects are categorized. Additionally, museums often employ standardized commercial collection management software that prescribes and limits the ways in which archivists can describe artworks and cultural objects. Collecting institutions and museums also use controlled vocabularies to describe cultural objects and artworks in their collections. Getty Vocabularies and the Library of Congress Controlled Vocabularies are reputable within the museum community and are recommended by CCO standards. Museums are encouraged to use controlled vocabularies that are contextual and relevant to their collections and enhance the functionality of their digital information systems. Controlled vocabularies are beneficial within databases because they provide a high level of consistency, improving resource retrieval. Metadata structures, including controlled vocabularies, reflect the ontologies of the systems from which they were created. Often the processes through which cultural objects are described and categorized through metadata in museums do not reflect the perspectives of the maker communities.
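The consistency that a controlled vocabulary brings to retrieval can be sketched as follows. The vocabulary, the object records, and the function names are entirely hypothetical and are not drawn from the Getty or Library of Congress vocabularies. The sketch only shows the general idea of mapping variant terms to one preferred term before searching.

```python
# Hypothetical vocabulary: preferred term -> accepted variant terms.
VOCABULARY = {
    "ceramics": ["ceramic", "pottery", "earthenware"],
    "textiles": ["textile", "cloth", "fabric"],
}

# Hypothetical catalog records with inconsistently entered materials.
RECORDS = [
    {"id": "obj-1", "material": "Pottery"},
    {"id": "obj-2", "material": "fabric"},
    {"id": "obj-3", "material": "earthenware"},
]


def build_lookup(vocabulary):
    """Map every preferred and variant term (lowercased) to its preferred term."""
    lookup = {}
    for preferred, variants in vocabulary.items():
        lookup[preferred.lower()] = preferred
        for variant in variants:
            lookup[variant.lower()] = preferred
    return lookup


def normalize(term, lookup):
    """Return the preferred term for a given term, or None if it is unknown."""
    return lookup.get(term.strip().lower())


def search(records, query, lookup):
    """Return ids of records whose material matches the query's preferred term."""
    wanted = normalize(query, lookup)
    if wanted is None:
        return []
    return [r["id"] for r in records if normalize(r["material"], lookup) == wanted]


if __name__ == "__main__":
    terms = build_lookup(VOCABULARY)
    print(search(RECORDS, "ceramic", terms))
```

A search for any variant retrieves every record filed under the same preferred term, which a plain text match on the stored strings would not do.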

Online content

Metadata has been instrumental in the creation of digital information systems and archives within museums and has made it easier for museums to publish digital content online. This has given audiences who might otherwise be kept from cultural objects by geographic or economic barriers a way to access them. In the 2000s, as more museums adopted archival standards and created intricate databases, discussions about Linked Data between museum databases arose in the museum, archival, and library science communities. Collection Management Systems (CMS) and Digital Asset Management tools can be local or shared systems. Digital Humanities scholars note many benefits of interoperability between museum databases and collections, while also acknowledging the difficulties of achieving such interoperability.

Law

United States

Problems involving metadata in litigation in the United States are becoming widespread. Courts have looked at various questions involving metadata, including the discoverability of metadata by parties. The Federal Rules of Civil Procedure have specific rules for discovery of electronically stored information, and subsequent case law applying those rules has clarified the litigant's duty to produce metadata when litigating in federal court. In October 2009, the Arizona Supreme Court ruled that metadata records are public record. Document metadata has proven particularly important in legal environments in which litigants request metadata, which can include sensitive information detrimental to a party in court. Using metadata removal tools to "clean" or redact documents can mitigate the risk of unwittingly sending sensitive data. This process partially (see data remanence) protects law firms from potentially damaging leaks of sensitive data through electronic discovery.

Opinion polls have shown that 45% of Americans are "not at all confident" in the ability of social media sites to ensure their personal data is secure and 40% say that social media sites should not be able to store any information on individuals. 76% of Americans say that they are not confident that the information advertising agencies collect on them is secure and 50% say that online advertising agencies should not be allowed to record any of their information at all.

Australia

In Australia, the need to strengthen national security has resulted in the introduction of a new metadata storage law. This new law means that both security and policing agencies will be allowed to access up to 2 years of an individual's metadata, with the aim of making it easier to stop any terrorist attacks and serious crimes from happening.

Legislation

Legislative metadata has been the subject of some discussion in law.gov forums such as workshops held by the Legal Information Institute at the Cornell Law School on 22 and 23 March 2010. The documentation for these forums is titled, "Suggested metadata practices for legislation and regulations".

A handful of key points have been outlined by these discussions, section headings of which are listed as follows:

- General Considerations

- Document Structure

- Document Contents

- Metadata (elements of)

- Layering

- Point-in-time versus post-hoc

Healthcare

Australian medical research pioneered the definition of metadata for applications in health care. That approach offers the first recognized attempt to adhere to international standards in medical sciences instead of defining a proprietary standard under the World Health Organization (WHO) umbrella. The medical community has not yet accepted the need to follow metadata standards, despite research that supported these standards.

Biomedical research

Research studies in the fields of biomedicine and molecular biology frequently yield large quantities of data, including results of genome or meta-genome sequencing, proteomics data, and even notes or plans created during the course of research itself. Each data type involves its own variety of metadata and the processes necessary to produce these metadata. General metadata standards, such as ISA-Tab, allow researchers to create and exchange experimental metadata in consistent formats. Specific experimental approaches frequently have their own metadata standards and systems: metadata standards for mass spectrometry include mzML and SPLASH, while XML-based standards such as PDBML and SRA XML serve as standards for macromolecular structure and sequencing data, respectively.

The products of biomedical research are generally realized as peer-reviewed manuscripts, and these publications are yet another source of data.

Data warehousing

A data warehouse (DW) is a repository of an organization's electronically stored data. Data warehouses are designed to manage and store the data. They differ from business intelligence (BI) systems, which are designed to use data to create reports and analyze information in order to provide strategic guidance to management. Metadata is an important tool in how data is stored in data warehouses. The purpose of a data warehouse is to house standardized, structured, consistent, integrated, correct, "cleaned" and timely data, extracted from various operational systems in an organization. The extracted data are integrated in the data warehouse environment to provide an enterprise-wide perspective. Data are structured in a way that serves the reporting and analytic requirements. The design of structural metadata commonality using a data modeling method such as entity-relationship model diagramming is important in any data warehouse development effort. Such models detail metadata on each piece of data in the data warehouse. An essential component of a data warehouse/business intelligence system is the metadata and the tools to manage and retrieve the metadata. Ralph Kimball describes metadata as the DNA of the data warehouse, as metadata defines the elements of the data warehouse and how they work together.

Kimball et al. refer to three main categories of metadata: technical metadata, business metadata and process metadata. Technical metadata is primarily definitional, while business metadata and process metadata are primarily descriptive. The categories sometimes overlap.

- Technical metadata defines the objects and processes in a DW/BI system, as seen from a technical point of view. The technical metadata includes the system metadata, which defines the data structures such as tables, fields, data types, indexes, and partitions in the relational engine, as well as databases, dimensions, measures, and data mining models. Technical metadata defines the data model and the way it is displayed for the users, with the reports, schedules, distribution lists, and user security rights.

- Business metadata is content from the data warehouse described in more user-friendly terms. The business metadata tells you what data you have, where they come from, what they mean and what their relationship is to other data in the data warehouse. Business metadata may also serve as documentation for the DW/BI system. Users who browse the data warehouse are primarily viewing the business metadata.

- Process metadata is used to describe the results of various operations in the data warehouse. Within the ETL process, all key data from tasks is logged on execution. This includes start time, end time, CPU seconds used, disk reads, disk writes, and rows processed. When troubleshooting the ETL or query process, this sort of data becomes valuable. Process metadata is the fact measurement when building and using a DW/BI system. Some organizations make a living out of collecting and selling this sort of data to companies – in that case, the process metadata becomes the business metadata for the fact and dimension tables. Collecting process metadata is in the interest of business people who can use the data to identify the users of their products, which products they are using, and what level of service they are receiving.
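The process metadata described above can be sketched as a small wrapper that records execution details around an ETL task. This is a minimal illustration, not a description of any particular DW/BI product: the `ProcessMetadata` class, the `run_with_process_metadata` function, and the choice of fields are assumptions made for the example, and disk reads and writes are omitted because measuring them portably requires platform-specific tools.

```python
import time
from dataclasses import asdict, dataclass


@dataclass
class ProcessMetadata:
    """Hypothetical record of one ETL task execution."""
    task: str
    start_time: float      # wall-clock start, seconds since the epoch
    end_time: float        # wall-clock end, seconds since the epoch
    cpu_seconds: float     # CPU time consumed by this process during the task
    rows_processed: int


def run_with_process_metadata(task_name, transform, rows):
    """Apply `transform` to every row and log process metadata for the run."""
    start_wall = time.time()
    start_cpu = time.process_time()
    result = [transform(row) for row in rows]
    record = ProcessMetadata(
        task=task_name,
        start_time=start_wall,
        end_time=time.time(),
        cpu_seconds=time.process_time() - start_cpu,
        rows_processed=len(result),
    )
    return result, record


# Example run: a trivial "transform" step that upper-cases each value.
cleaned, run_log = run_with_process_metadata("uppercase", str.upper, ["a", "b", "c"])
print(asdict(run_log))
```

In a real warehouse such records would typically be written to a log table, so that slow or failing ETL steps can be identified later by querying the metadata rather than the data itself.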

Internet

The HTML format used to define web pages allows for the inclusion of a variety of types of metadata, from basic descriptive text, dates and keywords to further advanced metadata schemes such as the Dublin Core, e-GMS, and AGLS standards. Pages and files can also be geotagged with coordinates, categorized or tagged, including collaboratively such as with folksonomies.

When media has identifiers set or when such can be generated, information such as file tags and descriptions can be pulled or scraped from the Internet – for example about movies. Various online databases are aggregated and provide metadata for various data. The collaboratively built Wikidata has identifiers not just for media but also abstract concepts, various objects, and other entities, that can be looked up by humans and machines to retrieve useful information and to link knowledge in other knowledge bases and databases.

Metadata may be included in the page's header or in a separate file. Microformats allow metadata to be added to on-page data in a way that regular web users do not see, but computers, web crawlers and search engines can readily access. Many search engines are cautious about using metadata in their ranking algorithms because metadata has been exploited through search engine optimization (SEO) to improve rankings. See the Meta element article for further discussion. This cautious attitude may be justified because, according to Doctorow, people do not exercise care and diligence when creating their own metadata, and because metadata is part of a competitive environment in which it is used to promote the metadata creators' own purposes. Studies show that search engines respond to web pages with metadata implementations, and Google has an announcement on its site showing the meta tags that its search engine understands. Enterprise search startup Swiftype recognizes metadata as a relevance signal that webmasters can implement for their website-specific search engine, even releasing their own extension, known as Meta Tags 2.
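As a minimal sketch of how a crawler might read header metadata, the following uses Python's standard `html.parser` module to collect `<meta name="..." content="...">` pairs. The sample page, the `MetaTagParser` class, and the `extract_meta` function are illustrative assumptions; real crawlers handle many more cases (for example `property` attributes and malformed markup).

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collects name/content pairs from <meta> elements."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        content = attributes.get("content")
        if name and content is not None:
            # Metadata names are treated as case-insensitive in this sketch.
            self.meta[name.lower()] = content


def extract_meta(html):
    """Return a dict of the named meta tags found in an HTML document."""
    parser = MetaTagParser()
    parser.feed(html)
    return parser.meta


# Made-up page carrying basic descriptive metadata and one Dublin Core element.
SAMPLE_PAGE = """<html><head>
<title>Example</title>
<meta name="description" content="A short page summary">
<meta name="keywords" content="metadata, example">
<meta name="DC.creator" content="Jane Doe">
</head><body><p>Visible text</p></body></html>"""

print(extract_meta(SAMPLE_PAGE))
```

None of the extracted values appear in the rendered page body, which is why such metadata is invisible to ordinary readers but readily available to software.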

Broadcast industry

In the broadcast industry, metadata is linked to audio and video broadcast media to:

- identify the media: clip or playlist names, duration, timecode, etc.

- describe the content: notes regarding the quality of video content, rating, description (for example, during a sporting event, keywords like goal or red card will be associated with some clips)

- classify media: metadata allows producers to sort the media or to find video content easily and quickly (a TV news program could urgently need some archive content for a subject). For example, the BBC has a large subject classification system, Lonclass, a customized version of the more general-purpose Universal Decimal Classification.

This metadata can be linked to the video media by the video servers. Most major broadcast sporting events, like the FIFA World Cup or the Olympic Games, use this metadata to distribute their video content to TV stations through keywords. It is often the host broadcaster who is in charge of organizing metadata through its International Broadcast Centre and its video servers. This metadata is recorded with the images and entered by metadata operators (loggers), who associate metadata from metadata grids with the footage live, through software such as Multicam (LSM) or IPDirector, used during the FIFA World Cup or Olympic Games.

Geography

Metadata that describes geographic objects in electronic storage or format (such as datasets, maps, features, or documents with a geospatial component) has a history dating back to at least 1994. This class of metadata is described more fully in the geospatial metadata article.

Ecology and environment

Ecological and environmental metadata is intended to document the "who, what, when, where, why, and how" of data collection for a particular study. This typically means which organization or institution collected the data, what type of data, which date(s) the data was collected, the rationale for the data collection, and the methodology used for the data collection. Metadata should be generated in a format commonly used by the most relevant science community, such as Darwin Core, Ecological Metadata Language, or Dublin Core. Metadata editing tools exist to facilitate metadata generation (e.g. Metavist, Mercury, Morpho). Metadata should describe the provenance of the data (where they originated, as well as any transformations the data underwent) and how to give credit for (cite) the data products.

Digital music

When first released in 1982, Compact Discs contained only a Table Of Contents (TOC) with the number of tracks on the disc and their length in samples. Fourteen years later, in 1996, a revision of the CD Red Book standard added CD-Text to carry additional metadata, but CD-Text was not widely adopted. Shortly thereafter, it became common for personal computers to retrieve metadata from external sources (e.g. CDDB, Gracenote) based on the TOC.

Digital audio files superseded physical music formats such as cassette tapes and CDs in the 2000s. Digital audio files could be labeled with more information than could be contained in just the file name. That descriptive information is called the audio tag or, more generally, audio metadata. Computer programs specializing in adding or modifying this information are called tag editors. Metadata can be used to name, describe, catalog, and indicate ownership or copyright for a digital audio file, and its presence makes it much easier to locate a specific audio file within a group, typically through use of a search engine that accesses the metadata. As different digital audio formats were developed, attempts were made to standardize a specific location within the digital files where this information could be stored.

As a result, almost all digital audio formats, including MP3, Broadcast WAV, and AIFF files, have similar standardized locations that can be populated with metadata. The metadata for compressed and uncompressed digital music is often encoded in the ID3 tag. Common tagging libraries such as TagLib support MP3, Ogg Vorbis, FLAC, MPC, Speex, WavPack, TrueAudio, WAV, AIFF, MP4, and ASF file formats.
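To make the idea of a "standardized location" concrete, the sketch below reads the older ID3v1 form of the tag, which occupies the final 128 bytes of a file and begins with the marker `TAG`. It is a simplified illustration only: the newer ID3v2 tag is stored at the start of the file with a different, variable-length structure, and the `read_id3v1` function name and the fabricated test bytes are assumptions made for this example.

```python
import struct

# ID3v1 layout: "TAG" marker, title, artist, album, year, comment, genre byte.
# 3 + 30 + 30 + 30 + 4 + 30 + 1 = 128 bytes.
ID3V1_LAYOUT = struct.Struct("<3s30s30s30s4s30sB")


def read_id3v1(data):
    """Return ID3v1 fields from the raw bytes of an audio file, or None."""
    if len(data) < ID3V1_LAYOUT.size:
        return None
    tag, title, artist, album, year, comment, genre = ID3V1_LAYOUT.unpack(
        data[-ID3V1_LAYOUT.size:]
    )
    if tag != b"TAG":
        return None

    def text(field):
        # Fields are fixed-width and padded with NUL bytes (or spaces).
        return field.split(b"\x00", 1)[0].decode("latin-1").strip()

    return {
        "title": text(title),
        "artist": text(artist),
        "album": text(album),
        "year": text(year),
        "comment": text(comment),
        "genre": genre,  # numeric index into the ID3v1 genre list
    }


# Fabricated bytes standing in for an audio file: fake audio data plus a tag.
FAKE_FILE = b"\x00" * 10 + ID3V1_LAYOUT.pack(
    b"TAG", b"Song", b"Artist", b"Album", b"1999", b"", 17
)
print(read_id3v1(FAKE_FILE))
```

Because the tag sits at a fixed offset with fixed-width fields, a tag editor can read or rewrite it without decoding any audio data.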

Cloud applications

With the availability of cloud applications, which include those to add metadata to content, metadata is increasingly available over the Internet.

Administration and management

Storage

Metadata can be stored either internally, in the same file or structure as the data (this is also called embedded metadata), or externally, in a separate file or field from the described data. A data repository typically stores the metadata detached from the data but can be designed to support embedded metadata approaches. Each option has advantages and disadvantages:

- Internal storage means metadata always travels as part of the data they describe; thus, metadata is always available with the data, and can be manipulated locally. This method creates redundancy (precluding normalization), and does not allow managing all of a system's metadata in one place. It arguably increases consistency, since the metadata is readily changed whenever the data is changed.

- External storage allows collocating metadata for all the contents, for example in a database, for more efficient searching and management. Redundancy can be avoided by normalizing the metadata's organization. In this approach, metadata can be united with the content when information is transferred, for example in streaming media, or can be referenced (for example, as a web link) from the transferred content. On the downside, separating the metadata from the data content, especially in standalone files that refer to their source metadata elsewhere, increases the opportunities for misalignment between the two, as changes to either may not be reflected in the other.

Metadata can be stored in either human-readable or binary form. Storing metadata in a human-readable format such as XML can be useful because users can understand and edit it without specialized tools. However, text-based formats are rarely optimized for storage capacity, communication time, or processing speed. A binary metadata format enables efficiency in all these respects, but requires special software to convert the binary information into human-readable content.
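The trade-off between the two forms can be sketched by serializing the same record both ways. The record, its field names, and the fixed binary layout below are all hypothetical; the point is only that the XML version is readable and editable as text, while the binary version is far smaller but meaningless without code that knows the layout.

```python
import struct
import xml.etree.ElementTree as ET

# Hypothetical image metadata record used for both encodings.
RECORD = {"width": 1920, "height": 1080, "created": 1700000000}

# Hypothetical fixed binary layout: two unsigned 16-bit integers followed by
# one unsigned 32-bit integer, little-endian (8 bytes in total).
BINARY_LAYOUT = struct.Struct("<HHI")


def to_xml(record):
    """Serialize the record as human-readable XML (returned as bytes)."""
    root = ET.Element("metadata")
    for key, value in record.items():
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root)


def to_binary(record):
    """Serialize the record using the fixed binary layout."""
    return BINARY_LAYOUT.pack(record["width"], record["height"], record["created"])


def from_binary(blob):
    """Decode the binary form; this requires knowing the layout in advance."""
    width, height, created = BINARY_LAYOUT.unpack(blob)
    return {"width": width, "height": height, "created": created}


print(to_xml(RECORD).decode("ascii"))
print(len(to_xml(RECORD)), "bytes as XML;", len(to_binary(RECORD)), "bytes as binary")
```

The binary form here carries no field names at all, which is where the size saving comes from and also why special software is needed to interpret it.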

Database management

Each relational database system has its own mechanisms for storing metadata. Examples of relational-database metadata include:

- Tables of all tables in a database, their names, sizes, and number of rows in each table.

- Tables of columns in each database, what tables they are used in, and the type of data stored in each column.

In database terminology, this set of metadata is referred to as the catalog. The SQL standard specifies a uniform means to access the catalog, called the information schema, but not all databases implement it, even if they implement other aspects of the SQL standard. For an example of database-specific metadata access methods, see Oracle metadata. Programmatic access to metadata is possible using APIs such as JDBC or SchemaCrawler.
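As one example of a database-specific access method, SQLite does not provide the information schema; it exposes its catalog through the `sqlite_master` table and the `PRAGMA table_info` statement instead. The sketch below uses Python's standard `sqlite3` module against an in-memory database; the `artwork` table and the helper function names are made up for illustration.

```python
import sqlite3

# In-memory database with one illustrative table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE artwork (id INTEGER PRIMARY KEY, title TEXT, year INTEGER)"
)


def list_tables(connection):
    """Return the names of all tables recorded in SQLite's catalog."""
    rows = connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
    return [row[0] for row in rows]


def describe_columns(connection, table):
    """Return (column name, declared type) pairs for a table.

    PRAGMA table_info yields rows of
    (cid, name, type, notnull, dflt_value, pk).
    The table name is interpolated directly, so it must come from a
    trusted source such as list_tables().
    """
    rows = connection.execute(f'PRAGMA table_info("{table}")')
    return [(row[1], row[2]) for row in rows]


for table_name in list_tables(conn):
    print(table_name, describe_columns(conn, table_name))
```

Other database systems expose equivalent information through their own catalog views, so code like this is generally not portable between systems unless it targets the information schema where that is available.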