Artificial consciousness[1] (AC), also known as machine consciousness (MC) or synthetic consciousness (Gamez 2008; Reggia 2013), is a field related to artificial intelligence and cognitive robotics. The aim of the theory of artificial consciousness is to "Define that which would have to be synthesized were consciousness to be found in an engineered artifact" (Aleksander 1995).

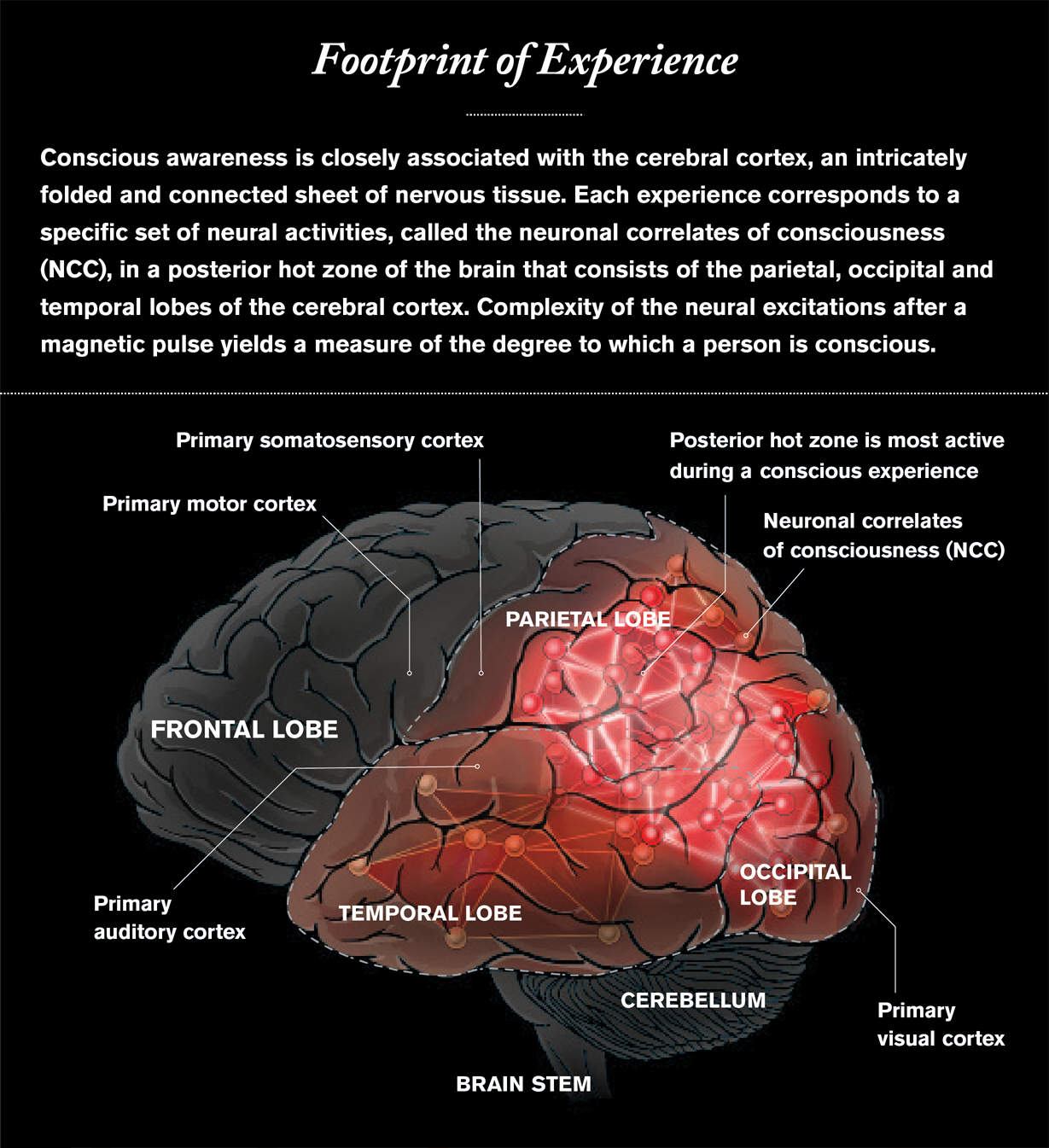

Neuroscience hypothesizes that consciousness is generated by the interoperation of various parts of the brain, called the neural correlates of consciousness or NCC, though there are challenges to that perspective. Proponents of AC believe it is possible to construct systems (e.g., computer systems) that can emulate this NCC interoperation.[2]

Artificial consciousness concepts are also pondered in the philosophy of artificial intelligence through questions about mind, consciousness, and mental states.[3]

Philosophical views

As there are many hypothesized types of consciousness, there are many potential implementations of artificial consciousness. In the philosophical literature, perhaps the most common taxonomy of consciousness is into "access" and "phenomenal" variants. Access consciousness concerns those aspects of experience that can be apprehended, while phenomenal consciousness concerns those aspects of experience that seemingly cannot be apprehended, instead being characterized qualitatively in terms of "raw feels", "what it is like" or qualia (Block 1997).

Plausibility debate

Type-identity theorists and other skeptics hold the view that consciousness can only be realized in particular physical systems because consciousness has properties that necessarily depend on physical constitution (Block 1978; Bickle 2003).[4][5]

In his article "Artificial Consciousness: Utopia or Real Possibility", Giorgio Buttazzo says that despite our current technology's ability to simulate autonomy, "Working in a fully automated mode, they [the computers] cannot exhibit creativity, emotions, or free will. A computer, like a washing machine, is a slave operated by its components."[6]

For other theorists (e.g., functionalists), who define mental states in terms of causal roles, any system that can instantiate the same pattern of causal roles, regardless of physical constitution, will instantiate the same mental states, including consciousness (Putnam 1967).

Computational Foundation argument

One of the most explicit arguments for the plausibility of AC comes from David Chalmers. His proposal, found in Chalmers 2011, is roughly that the right kinds of computations are sufficient for the possession of a conscious mind. In outline, he defends his claim thus: computers perform computations, and computations can capture other systems' abstract causal organization.

The most controversial part of Chalmers' proposal is that mental properties are "organizationally invariant". Mental properties are of two kinds, psychological and phenomenological. Psychological properties, such as belief and perception, are those that are "characterized by their causal role". He adverts to the work of Armstrong 1968 and Lewis 1972 in claiming that "[s]ystems with the same causal topology…will share their psychological properties".

Phenomenological properties are not prima facie definable in terms of their causal roles. Establishing that phenomenological properties are amenable to individuation by causal role therefore requires argument. Chalmers provides his Dancing Qualia Argument for this purpose.[7]

Chalmers begins by assuming that agents with identical causal organizations could have different experiences. He then asks us to conceive of changing one agent into the other by the replacement of parts (neural parts replaced by silicon, say) while preserving its causal organization. Ex hypothesi, the experience of the agent under transformation would change (as the parts were replaced), but there would be no change in causal topology and therefore no means whereby the agent could "notice" the shift in experience. Chalmers treats this consequence as implausible, so the argument works as a reductio of the initial assumption: agents with identical causal organizations should have the same experiences.

Critics of AC object that Chalmers begs the question in assuming that all mental properties and external connections are sufficiently captured by abstract causal organization.
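The idea that two physically different systems can share one abstract causal organization can be made concrete with a toy example. The sketch below is only an illustration under stated assumptions: the two-state toggle machine, the class names and the `same_causal_topology` helper are all hypothetical, and the formalism is not the one used in Chalmers 2011. It shows two realizations, one built on a lookup table and one on arithmetic, that respond identically to every input sequence tested.

```python
from itertools import product

# Hypothetical abstract causal organization: a two-state machine that
# toggles between "A" and "B" on input 1 and holds its state on input 0.
TRANSITIONS = {("A", 0): "A", ("A", 1): "B", ("B", 0): "B", ("B", 1): "A"}


class TableRealization:
    """Realizes the organization with an explicit lookup table."""

    def __init__(self):
        self.state = "A"

    def step(self, signal):
        self.state = TRANSITIONS[(self.state, signal)]
        return self.state


class ArithmeticRealization:
    """Realizes the same organization with addition modulo 2."""

    def __init__(self):
        self.bit = 0

    def step(self, signal):
        self.bit = (self.bit + signal) % 2
        return "AB"[self.bit]


def same_causal_topology(make_a, make_b, inputs=(0, 1), depth=6):
    """Return True if both realizations agree on every input sequence
    of length 1..depth, starting from freshly constructed instances."""
    for n in range(1, depth + 1):
        for seq in product(inputs, repeat=n):
            a, b = make_a(), make_b()
            if [a.step(s) for s in seq] != [b.step(s) for s in seq]:
                return False
    return True


print(same_causal_topology(TableRealization, ArithmeticRealization))
```

The check compares observable state transitions only. Whether agreement at this level settles anything about phenomenal properties is exactly what the critics mentioned above dispute.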

Ethics

If it were suspected that a particular machine was conscious, its rights would be an ethical issue that would need to be assessed (e.g. what rights it would have under law). A conscious computer that was owned and used as a tool, or as the central computer of a building or large machine, would be a particularly ambiguous case. Should laws be made for such a case, consciousness would also require a legal definition (for example a machine's ability to experience pleasure or pain, known as sentience). Because artificial consciousness is still largely a theoretical subject, such ethics have not been discussed or developed to a great extent, though it has often been a theme in fiction (see below).

The rules for the 2003 Loebner Prize competition explicitly addressed the question of robot rights:

61. If, in any given year, a publicly available open source Entry entered by the University of Surrey or the Cambridge Center wins the Silver Medal or the Gold Medal, then the Medal and the Cash Award will be awarded to the body responsible for the development of that Entry. If no such body can be identified, or if there is disagreement among two or more claimants, the Medal and the Cash Award will be held in trust until such time as the Entry may legally possess, either in the United States of America or in the venue of the contest, the Cash Award and Gold Medal in its own right.[8]

Research and implementation proposals

Aspects of consciousness

There are various aspects of consciousness generally deemed necessary for a machine to be artificially conscious. A variety of functions in which consciousness plays a role were suggested by Bernard Baars (Baars 1988) and others. The functions suggested by Baars are Definition and Context Setting, Adaptation and Learning, Editing, Flagging and Debugging, Recruiting and Control, Prioritizing and Access-Control, Decision-making or Executive Function, Analogy-forming Function, Metacognitive and Self-monitoring Function, and Autoprogramming and Self-maintenance Function. Igor Aleksander suggested 12 principles for artificial consciousness (Aleksander 1995): The Brain is a State Machine, Inner Neuron Partitioning, Conscious and Unconscious States, Perceptual Learning and Memory, Prediction, The Awareness of Self, Representation of Meaning, Learning Utterances, Learning Language, Will, Instinct, and Emotion. The aim of AC is to define whether and how these and other aspects of consciousness can be synthesized in an engineered artifact such as a digital computer. This list is not exhaustive; there are many others not covered.

Awareness

Awareness could be one required aspect, but there are many problems with the exact definition of awareness. The results of neuroscanning experiments on monkeys suggest that a process, not only a state or object, activates neurons. Awareness includes creating and testing alternative models of each process based on the information received through the senses or imagined, and is also useful for making predictions. Such modeling needs a lot of flexibility. Creating such a model includes modeling of the physical world, modeling of one's own internal states and processes, and modeling of other conscious entities.

There are at least three types of awareness:[9] agency awareness, goal awareness, and sensorimotor awareness, each of which may be conscious or not. For example, in agency awareness you may be aware that you performed a certain action yesterday, but are not now conscious of it. In goal awareness you may be aware that you must search for a lost object, but are not now conscious of it. In sensorimotor awareness, you may be aware that your hand is resting on an object, but are not now conscious of it.

Because objects of awareness are often conscious, the distinction between awareness and consciousness is frequently blurred or they are used as synonyms.[10]

Memory

Conscious events interact with memory systems in learning, rehearsal, and retrieval.[11] The IDA model[12] elucidates the role of consciousness in the updating of perceptual memory,[13] transient episodic memory, and procedural memory. Transient episodic and declarative memories have distributed representations in IDA, and there is evidence that this is also the case in the nervous system.[14] In IDA, these two memories are implemented computationally using a modified version of Kanerva's sparse distributed memory architecture.[15]

Learning

Learning is also considered necessary for AC. According to Bernard Baars, conscious experience is needed to represent and adapt to novel and significant events (Baars 1988). Axel Cleeremans and Luis Jiménez define learning as "a set of philogenetically [sic] advanced adaptation processes that critically depend on an evolved sensitivity to subjective experience so as to enable agents to afford flexible control over their actions in complex, unpredictable environments" (Cleeremans 2001).

Anticipation

The ability to predict (or anticipate) foreseeable events is considered important for AC by Igor Aleksander.[16] The emergentist multiple drafts principle proposed by Daniel Dennett in Consciousness Explained may be useful for prediction: it involves the evaluation and selection of the most appropriate "draft" to fit the current environment. Anticipation includes prediction of consequences of one's own proposed actions and prediction of consequences of probable actions by other entities.

Relationships between real world states are mirrored in the state structure of a conscious organism, enabling the organism to predict events.[16] An artificially conscious machine should be able to anticipate events correctly in order to be ready to respond to them when they occur or to take preemptive action to avert anticipated events. The implication here is that the machine needs flexible, real-time components that build spatial, dynamic, statistical, functional, and cause-effect models of the real world and predicted worlds, making it possible to demonstrate that it possesses artificial consciousness in the present and future and not only in the past. In order to do this, a conscious machine should make coherent predictions and contingency plans, not only in worlds with fixed rules like a chess board, but also for novel environments that may change, to be executed only when appropriate to simulate and control the real world.
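Predicting the consequences of one's own proposed actions and selecting the best candidate can be sketched with a small forward model. The example below is a minimal illustration under stated assumptions, not a method taken from Aleksander or Dennett: the three-lane world, the state tuple and the functions `predict`, `collisions` and `choose_action` are hypothetical. Each candidate action yields a predicted future (a "draft"), and the agent picks the draft that avoids an anticipated collision with the least effort.

```python
def predict(state, action, steps=3):
    """Hypothetical forward model.

    state is (agent_lane, obstacle_lane, obstacle_distance). The agent
    shifts lanes once by `action` (clamped to lanes 0..2), then the
    obstacle approaches by one unit per step.
    """
    agent, obstacle, distance = state
    agent = max(0, min(2, agent + action))
    trajectory = []
    for _ in range(steps):
        distance -= 1
        trajectory.append((agent, obstacle, distance))
    return trajectory


def collisions(trajectory):
    """Count predicted moments where the obstacle reaches the agent's lane."""
    return sum(1 for agent, obstacle, distance in trajectory
               if distance == 0 and agent == obstacle)


def choose_action(state, actions=(0, -1, 1)):
    """Simulate one draft per action and select the cheapest one.

    A predicted collision costs 100; changing lanes costs 1, so the
    agent stays put unless staying leads to a predicted collision.
    """
    drafts = {a: predict(state, a) for a in actions}
    return min(actions, key=lambda a: 100 * collisions(drafts[a]) + abs(a))


print(choose_action((1, 1, 2)))  # obstacle two steps ahead in the same lane
print(choose_action((1, 0, 2)))  # obstacle in a different lane
```

The fixed three-step horizon and hand-written world model are the main simplifications. The passage above asks for models that are learned and that adapt to novel environments, which this sketch does not attempt.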

Subjective experience

Explaining subjective experiences, or qualia, is widely considered to be the hard problem of consciousness. Indeed, it is held to pose a challenge to physicalism, let alone computationalism. On the other hand, other fields of science also face limits on what can be observed, such as the uncertainty principle in physics, and these limits have not made research in those fields impossible.

Role of cognitive architectures

The term "cognitive architecture" may refer to a theory about the structure of the human mind, or any portion or function thereof, including consciousness. In another context, a cognitive architecture implements the theory on computers. An example is QuBIC: Quantum and Bio-inspired Cognitive Architecture for Machine Consciousness. One of the main goals of a cognitive architecture is to summarize the various results of cognitive psychology in a comprehensive computer model. However, the results need to be in a formalized form so they can be the basis of a computer program. A cognitive architecture also gives an AI a clear way to structure, build, and implement its thought process.

Symbolic or hybrid proposals

Franklin's Intelligent Distribution Agent

Stan Franklin (1995, 2003) defines an autonomous agent as possessing functional consciousness when it is capable of several of the functions of consciousness as identified by Bernard Baars' Global Workspace Theory (Baars 1988, 1997). His brainchild IDA (Intelligent Distribution Agent) is a software implementation of GWT, which makes it functionally conscious by definition. IDA's task is to negotiate new assignments for sailors in the US Navy after they end a tour of duty, by matching each individual's skills and preferences with the Navy's needs. IDA interacts with Navy databases and communicates with the sailors via natural language e-mail dialog while obeying a large set of Navy policies. The IDA computational model was developed during 1996–2001 at Stan Franklin's "Conscious" Software Research Group at the University of Memphis. It "consists of approximately a quarter-million lines of Java code, and almost completely consumes the resources of a 2001 high-end workstation." It relies heavily on codelets, which are "special purpose, relatively independent, mini-agent[s] typically implemented as a small piece of code running as a separate thread." In IDA's top-down architecture, high-level cognitive functions are explicitly modeled (see Franklin 1995 and Franklin 2003 for details). While IDA is functionally conscious by definition, Franklin does "not attribute phenomenal consciousness to his own 'conscious' software agent, IDA, in spite of her many human-like behaviours. This in spite of watching several US Navy detailers repeatedly nodding their heads saying 'Yes, that's how I do it' while watching IDA's internal and external actions as she performs her task."

Ron Sun's cognitive architecture CLARION

CLARION posits a two-level representation that explains the distinction between conscious and unconscious mental processes.

CLARION has been successful in accounting for a variety of psychological data. A number of well-known skill learning tasks have been simulated using CLARION, spanning the spectrum from simple reactive skills to complex cognitive skills. The tasks include serial reaction time (SRT) tasks, artificial grammar learning (AGL) tasks, process control (PC) tasks, the categorical inference (CI) task, the alphabetical arithmetic (AA) task, and the Tower of Hanoi (TOH) task (Sun 2002). Among them, SRT, AGL, and PC are typical implicit learning tasks, highly relevant to the issue of consciousness because they operationalize the notion of consciousness in the context of psychological experiments.
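The general idea of a two-level representation can be illustrated with a toy agent that keeps implicit, graded action values alongside explicit state-to-action rules. This is a loose sketch under stated assumptions and does not reproduce CLARION's actual learning algorithms (see Sun 2002 for those): the class `TwoLevelAgent`, its update rule and its rule-extraction criterion are all hypothetical simplifications.

```python
class TwoLevelAgent:
    """Toy agent with an implicit level (learned values) and an
    explicit level (inspectable rules). Illustrative only."""

    def __init__(self, n_actions, learning_rate=0.5):
        self.n_actions = n_actions
        self.learning_rate = learning_rate
        self.q = {}      # implicit level: (state, action) -> value
        self.rules = {}  # explicit level: state -> action

    def act(self, state):
        """Prefer an explicit rule; otherwise fall back to implicit values."""
        if state in self.rules:
            return self.rules[state], "explicit"
        values = [self.q.get((state, a), 0.0) for a in range(self.n_actions)]
        return values.index(max(values)), "implicit"

    def learn(self, state, action, reward):
        """Update the implicit value, then revise the explicit rules."""
        old = self.q.get((state, action), 0.0)
        self.q[(state, action)] = old + self.learning_rate * (reward - old)
        if reward > 0:
            # A successful action is promoted to an explicit rule.
            self.rules[state] = action
        elif self.rules.get(state) == action:
            # A rule whose action just failed is retracted.
            del self.rules[state]


agent = TwoLevelAgent(n_actions=2)
for state in range(3):
    for action in range(2):
        # Hypothetical task: the correct action is the parity of the state.
        agent.learn(state, action, 1 if action == state % 2 else -1)
print(agent.rules)
```

In this sketch the explicit rules can be read off and reported directly, while the implicit values influence behaviour only through action selection, which is one simple way to picture a distinction between accessible and inaccessible knowledge.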

Ben Goertzel's OpenCog

Ben Goertzel is pursuing an embodied AGI through the open-source OpenCog project. Current code includes embodied virtual pets capable of learning simple English-language commands, as well as integration with real-world robotics, being done at the Hong Kong Polytechnic University.

Connectionist proposals

Haikonen's cognitive architecture

Pentti Haikonen (2003) considers classical rule-based computing inadequate for achieving AC: "the brain is definitely not a computer. Thinking is not an execution of programmed strings of commands. The brain is not a numerical calculator either. We do not think by numbers." Rather than trying to achieve mind and consciousness by identifying and implementing their underlying computational rules, Haikonen proposes "a special cognitive architecture to reproduce the processes of perception, inner imagery, inner speech, pain, pleasure, emotions and the cognitive functions behind these. This bottom-up architecture would produce higher-level functions by the power of the elementary processing units, the artificial neurons, without algorithms or programs". Haikonen believes that, when implemented with sufficient complexity, this architecture will develop consciousness, which he considers to be "a style and way of operation, characterized by distributed signal representation, perception process, cross-modality reporting and availability for retrospection." Haikonen is not alone in this process view of consciousness, or in the view that AC will spontaneously emerge in autonomous agents that have a suitable neuro-inspired architecture of sufficient complexity; these views are shared by many, e.g. Freeman (1999) and Cotterill (2003). A low-complexity implementation of the architecture proposed by Haikonen (2003) was reportedly not capable of AC, but did exhibit emotions as expected. See Doan (2009) for a comprehensive introduction to Haikonen's cognitive architecture. An updated account of Haikonen's architecture, along with a summary of his philosophical views, is given in Haikonen (2012).

Shanahan's cognitive architecture

Murray Shanahan describes a cognitive architecture that combines Baars's idea of a global workspace with a mechanism for internal simulation ("imagination") (Shanahan 2006). For discussions of Shanahan's architecture, see Gamez (2008), Reggia (2013), and Chapter 20 of Haikonen (2012).

Takeno's self-awareness research

Self-awareness in robots is being investigated by Junichi Takeno[17] at Meiji University in Japan. Takeno asserts that he has developed a robot capable of discriminating between its own image in a mirror and any other robot having an identical image,[18][19] and this claim has already been reviewed (Takeno, Inaba & Suzuki 2005). Takeno asserts that he first contrived the computational module called a MoNAD, which has a self-aware function, and then constructed the artificial consciousness system by formulating the relationships between emotions, feelings and reason by connecting the modules in a hierarchy (Igarashi, Takeno 2007). Takeno completed a mirror image cognition experiment using a robot equipped with the MoNAD system. Takeno proposed the Self-Body Theory stating that "humans feel that their own mirror image is closer to themselves than an actual part of themselves." In his view, the most important point in developing artificial consciousness or clarifying human consciousness is the development of a function of self-awareness, and he claims that he has demonstrated physical and mathematical evidence for this in his thesis.[20] He also demonstrated that robots can study episodes in memory where the emotions were stimulated and use this experience to take predictive actions to prevent the recurrence of unpleasant emotions (Torigoe, Takeno 2009).

Aleksander's impossible mind

Igor Aleksander, emeritus professor of Neural Systems Engineering at Imperial College, has extensively researched artificial neural networks and claims in his book Impossible Minds: My Neurons, My Consciousness that the principles for creating a conscious machine already exist but that it would take forty years to train such a machine to understand language.[21] Whether this is true remains to be demonstrated, and the basic principle stated in Impossible Minds, that the brain is a neural state machine, is open to doubt.[22]

Thaler's Creativity Machine Paradigm

Stephen Thaler proposed a possible connection between consciousness and creativity in his 1994 patent, called "Device for the Autonomous Generation of Useful Information" (DAGUI),[23][24][25] or the so-called "Creativity Machine", in which computational critics govern the injection of synaptic noise and degradation into neural nets so as to induce false memories or confabulations that may qualify as potential ideas or strategies.[26] He recruits this neural architecture and methodology to account for the subjective feel of consciousness, claiming that similar noise-driven neural assemblies within the brain invent a dubious significance for overall cortical activity.[27][28][29] Thaler's theory and the resulting patents in machine consciousness were inspired by experiments in which he internally disrupted trained neural nets so as to drive a succession of neural activation patterns that he likened to a stream of consciousness.[28][30][31][32][33][34]

Michael Graziano's attention schema

In 2011, Michael Graziano and Sabine Kastner published a paper named "Human consciousness and its relationship to social neuroscience: A novel hypothesis" proposing a theory of consciousness as an attention schema.[35] Graziano went on to publish an expanded discussion of this theory in his book "Consciousness and the Social Brain".[2] This Attention Schema Theory of Consciousness, as he named it, proposes that the brain tracks attention to various sensory inputs by way of an attention schema, analogous to the well-studied body schema that tracks the spatial place of a person's body.[2] The theory bears on artificial consciousness because it proposes a specific mechanism of information handling that produces what we allegedly experience and describe as consciousness, and that a machine using current technology should be able to duplicate. When the brain finds that person X is aware of thing Y, it is in effect modeling the state in which person X is applying an attentional enhancement to Y. In the attention schema theory, the same process can be applied to oneself. The brain tracks attention to various sensory inputs, and one's own awareness is a schematized model of one's attention. Graziano proposes specific locations in the brain for this process, and suggests that such awareness is a computed feature constructed by an expert system in the brain.

Testing

The best-known method for testing machine intelligence is the Turing test. But when interpreted as only observational, this test contradicts the philosophy-of-science principle of the theory dependence of observations. It has also been suggested that Alan Turing's recommendation of imitating not a human adult consciousness, but a human child consciousness, should be taken seriously.[36]

Other tests, such as ConsScale, test the presence of features inspired by biological systems, or measure the cognitive development of artificial systems.

Qualia, or phenomenological consciousness, is an inherently first-person phenomenon. Although various systems may display various signs of behavior correlated with functional consciousness, there is no conceivable way in which third-person tests can have access to first-person phenomenological features. Because of that, and because there is no empirical definition of consciousness,[37] a test of presence of consciousness in AC may be impossible.

In 2014, Victor Argonov suggested a non-Turing test for machine consciousness based on a machine's ability to produce philosophical judgments.[38] He argues that a deterministic machine must be regarded as conscious if it is able to produce judgments on all problematic properties of consciousness (such as qualia or binding) while having no innate (preloaded) philosophical knowledge on these issues, no philosophical discussions while learning, and no informational models of other creatures in its memory (such models may implicitly or explicitly contain knowledge about these creatures' consciousness). However, this test can be used only to detect, but not refute, the existence of consciousness. A positive result proves that the machine is conscious, but a negative result proves nothing. For example, an absence of philosophical judgments may be caused by a lack of intellect in the machine, not by an absence of consciousness.
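The one-sided logic of this test, where a positive result counts as evidence and a negative result counts as nothing, can be written as a small decision function. The sketch below only encodes the conditions as they are stated above; the `MachineReport` fields and the `argonov_verdict` function are hypothetical names introduced for illustration, and how each condition would be established in practice is left open.

```python
from dataclasses import dataclass


@dataclass
class MachineReport:
    """Hypothetical record of what is known about a tested machine."""
    is_deterministic: bool
    judges_all_problematic_properties: bool
    has_innate_philosophical_knowledge: bool
    had_philosophical_discussions_while_learning: bool
    has_models_of_other_creatures: bool


def argonov_verdict(report):
    """Return "conscious" only when every stated condition is met.

    The function never returns "not conscious": by the test's own
    logic a failed test is merely inconclusive.
    """
    uncontaminated = not (
        report.has_innate_philosophical_knowledge
        or report.had_philosophical_discussions_while_learning
        or report.has_models_of_other_creatures
    )
    if (report.is_deterministic
            and report.judges_all_problematic_properties
            and uncontaminated):
        return "conscious"
    return "inconclusive"


silent_machine = MachineReport(True, False, False, False, False)
print(argonov_verdict(silent_machine))
```

A machine that produces no philosophical judgments, as in the usage line, falls under "inconclusive", matching the remark that such silence may reflect limited intellect and not an absence of consciousness.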

In fiction

Characters with artificial consciousness (or at least with personalities that imply they have consciousness), from works of fiction:

- AC – created by merging two AIs in the Sprawl trilogy by William Gibson

- Agents – in the simulated reality known as "The Matrix" in The Matrix franchise

- Agent Smith – began as an Agent in The Matrix, then became a renegade program of overgrowing power that could make copies of itself like a self-replicating computer virus

- A.L.I.E. – Sentient genocidal AI from the TV series The 100

- AM (Allied Mastercomputer) – the antagonist of Harlan Ellison's short story "I Have No Mouth, and I Must Scream"

- Amusement park robots (with pixelated consciousness) that went homicidal in Westworld and Futureworld

- Annalee Call – an Auton (android manufactured by other androids) from the movie Alien Resurrection

- Arnold Rimmer – computer-generated sapient hologram aboard the Red Dwarf

- Ava – a humanoid robot in Ex Machina

- Ash – android crew member of the Nostromo starship in the movie Alien

- The Bicentennial Man – an android in Isaac Asimov's Foundation universe

- Bishop – android crew member aboard the U.S.S. Sulaco in the movie Aliens

- The uploaded mind of Dr. Will Caster, which presumably included his consciousness, from the film Transcendence

- C-3PO – protocol droid featured in all the Star Wars movies

- Chappie – CHAPPiE

- Cohen (and other Emergent AIs) – Chris Moriarty's Spin Series

- Commander Data – Star Trek: The Next Generation

- Cortana (and other "Smart AI") – from the Halo series of games

- Cylons – genocidal robots with resurrection ships that enable the consciousness of any Cylon within an unspecified range to download into a new body aboard the ship upon death, from Battlestar Galactica

- Erasmus – baby killer robot that incited the Butlerian Jihad in the Dune franchise

- Fal'Cie – Mechanical beings with god-like powers from the Final Fantasy XIII series

- The Geth, EDI and SAM – Mass Effect

- HAL 9000 – spaceship USS Discovery One's onboard computer, that lethally malfunctioned due to mutually exclusive directives, from the 1968 novel 2001: A Space Odyssey and in the film

- Holly – ship's computer with an IQ of 6000, aboard the Red Dwarf

- Hosts in the Westworld franchise

- Jane – Orson Scott Card's Speaker for the Dead, Xenocide, Children of the Mind, and "Investment Counselor"

- Johnny Five – Short Circuit

- Joshua – WarGames

- Keymaker – an "exile" sapient program in The Matrix franchise

- "Machine" – android from the film The Machine, whose owners try to kill her when they witness her conscious thoughts, out of fear that she will design better androids (intelligence explosion)

- Mike – The Moon Is a Harsh Mistress

- Mimi – humanoid robot in Real Humans, (original title – Äkta människor) 2012

- The Minds – Iain M. Banks' Culture novels

- Omnius – sentient computer network that controlled the Universe until overthrown by the Butlerian Jihad in the Dune franchise

- Operating Systems in the movie Her

- The Oracle – sapient program in The Matrix franchise

- Professor James Moriarty – sentient holodeck character in the "Ship in a Bottle" episode from Star Trek: The Next Generation

- In Greg Egan's novel Permutation City the protagonist creates digital copies of himself to conduct experiments that are also related to implications of artificial consciousness on identity

- Puppet Master – Ghost in the Shell manga and anime

- R2-D2 – excitable astromech droid featured in all the Star Wars movies

- Replicants – bio-robotic androids from the novel Do Androids Dream of Electric Sheep? and the movie Blade Runner which portray what might happen when artificially conscious robots are modeled very closely upon humans

- Roboduck – combat robot superhero in the NEW-GEN comic book series from Marvel Comics

- Robots in Isaac Asimov's Robot series

- Robots in The Matrix franchise, especially in The Animatrix

- The Ship – the result of a large-scale AC experiment, in Frank Herbert's Destination: Void and sequels, despite past edicts warning against "Making a Machine in the Image of a Man's Mind"

- Skynet – from the Terminator franchise

- "Synths" are a type of android in the video game Fallout 4. There is a faction in the game known as "The Railroad" which believes that, as conscious beings, synths have their own rights. The Institute, the lab that produces the synths, mostly does not believe they are truly conscious and attributes any apparent desire for freedom to a malfunction.

- TARDIS – time machine and spacecraft of Doctor Who, sometimes portrayed with a mind of its own

- Terminator cyborgs – from the Terminator franchise, with visual consciousness depicted via first-person perspective

- Transformers – sentient robots from the various series in the Transformers robot superhero franchise of the same name

- Vanamonde – an artificial being that was immensely powerful but entirely child-like in Arthur C. Clarke's The City and the Stars

- WALL-E – a robot and the title character in WALL-E

- Gideon – an interactive artificial consciousness made by Barry Allen, appearing in DC Comics and in shows such as The Flash and Legends of Tomorrow