From Wikipedia, the free encyclopedia

In astrophysics and physical cosmology, Olbers' paradox, named after the German astronomer Heinrich Wilhelm Olbers (1758–1840) and also called the "dark night sky paradox", is the argument that the darkness of the night sky conflicts with the assumption of an infinite and eternal static universe. The darkness of the night sky is one of the pieces of evidence for a non-static universe such as the Big Bang model. If the universe is static, homogeneous at a large scale, and populated by an infinite number of stars, any sight line from Earth must end at the (very bright) surface of a star, so the night sky should be completely bright. This contradicts the observed darkness of the night.

History

Edward Robert Harrison's Darkness at Night: A Riddle of the Universe (1987) gives an account of the dark night sky paradox as a problem in the history of science. According to Harrison, the first to conceive of anything like the paradox was Thomas Digges, who was also the first to expound the Copernican system in English and who postulated an infinite universe with infinitely many stars.[1] Kepler also posed the problem in 1610, and the paradox took its mature form in the 18th-century work of Halley and Cheseaux.[2] The paradox is commonly attributed to the German amateur astronomer Heinrich Wilhelm Olbers, who described it in 1823, but Harrison shows convincingly that Olbers was far from the first to pose the problem and that his thinking about it was not particularly valuable. Harrison argues that the first to set out a satisfactory resolution of the paradox was Lord Kelvin, in a little-known 1901 paper,[3] and that Edgar Allan Poe's essay Eureka (1848) curiously anticipated some qualitative aspects of Kelvin's argument:

"Were the succession of stars endless, then the background of the sky would present us a uniform luminosity, like that displayed by the Galaxy – since there could be absolutely no point, in all that background, at which would not exist a star. The only mode, therefore, in which, under such a state of affairs, we could comprehend the voids which our telescopes find in innumerable directions, would be by supposing the distance of the invisible background so immense that no ray from it has yet been able to reach us at all."[4]

The paradox

The paradox is that a static, infinitely old universe with an infinite number of stars distributed in an infinitely large space would be bright rather than dark.

To show this, we divide the universe into a series of concentric shells, each 1 light year thick. A certain number of stars will lie in the shell 1,000,000,000 to 1,000,000,001 light years away. If the universe is homogeneous at a large scale, then there would be four times as many stars in a second shell between 2,000,000,000 and 2,000,000,001 light years away. However, the second shell is twice as far away, so each star in it would appear four times dimmer than a star in the first shell. Thus the total light received from the second shell is the same as the total light received from the first shell.

Thus each shell of a given thickness will produce the same net amount of light regardless of how far away it is. That is, the light of each shell adds to the total amount. Thus the more shells, the more light. And with infinitely many shells there would be a bright night sky.
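The shell argument can be sketched numerically. The following is a minimal illustration, assuming a uniform star density and identical stars; the function names and the unit values for density and luminosity are hypothetical choices made for this example, not figures from the text.

```python
import math

def shell_flux(radius, thickness=1.0, star_density=1.0, luminosity=1.0):
    """Flux received at the centre from a thin shell of stars at `radius`.

    star_density and luminosity are arbitrary illustrative units (assumptions).
    """
    # Number of stars in the shell: density times shell volume (thin-shell approximation).
    n_stars = star_density * 4.0 * math.pi * radius**2 * thickness
    # Light from a single star falls off as the inverse square of its distance.
    flux_per_star = luminosity / (4.0 * math.pi * radius**2)
    return n_stars * flux_per_star

def total_flux(n_shells, first_radius=1.0, thickness=1.0):
    """Sum the flux from `n_shells` consecutive shells of equal thickness."""
    return sum(
        shell_flux(first_radius + i * thickness, thickness)
        for i in range(n_shells)
    )

near = shell_flux(1.0e9)   # shell about 1,000,000,000 light years away
far = shell_flux(2.0e9)    # shell twice as far away
print(near, far)           # the two values agree up to rounding
print(total_flux(1000), total_flux(2000))  # grows in proportion to the shell count
```

The radius cancels between the star count and the inverse-square dimming, so every shell contributes the same flux and the total grows without bound as shells are added.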

Dark clouds could obstruct the light. But in that case the clouds would heat up, until they were as hot as stars, and then radiate the same amount of light.

Kepler saw this as an argument for a finite observable universe, or at least for a finite number of stars. In general relativity theory, it is still possible for the paradox to hold in a finite universe:[5] though the sky would not be infinitely bright, every point in the sky would still be like the surface of a star.

In a universe of three dimensions with stars distributed evenly, the number of stars would be proportional to volume. If the surface of concentric sphere shells were considered, the number of stars on each shell would be proportional to the square of the radius of the shell. In the picture above, the shells are reduced to rings in two dimensions with all of the stars on them.

The mainstream explanation

Poet Edgar Allan Poe suggested that the finite size of the observable universe resolves the apparent paradox.[6] More specifically, because the universe is finitely old and the speed of light is finite, only finitely many stars can be observed within a given volume of space visible from Earth (although the whole universe can be infinite in space).[7] The density of stars within this finite volume is sufficiently low that any line of sight from Earth is unlikely to reach a star.

However, the Big Bang theory introduces a new paradox: it states that the sky was much brighter in the past, especially at the end of the recombination era, when it first became transparent. All points of the local sky at that era were comparable in brightness to the surface of the Sun, due to the high temperature of the universe in that era; and most light rays will terminate not in a star but in the relic of the Big Bang.

This paradox is explained by the fact that the Big Bang theory also involves the expansion of space which can cause the energy of emitted light to be reduced via redshift. More specifically, the extreme levels of radiation from the Big Bang have been redshifted to microwave wavelengths (1100 times longer than its original wavelength) as a result of the cosmic expansion, and thus form the cosmic microwave background radiation. This explains the relatively low light densities present in most of our sky despite the assumed bright nature of the Big Bang. The redshift also affects light from distant stars and quasars, but the diminution is minor, since the most distant galaxies and quasars have redshifts of only around 5 to 8.6.
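The effect of the 1100-fold stretch in wavelength can be illustrated with Wien's displacement law. This is a rough sketch: the emission temperature of 3000 K is an assumption made for the example, and the variable names are hypothetical. Only the stretch factor of 1100 comes from the text.

```python
WIEN_B = 2.897771955e-3  # Wien displacement constant in metre-kelvin

def peak_wavelength(temperature_k):
    """Peak wavelength (in metres) of a black body at the given temperature."""
    return WIEN_B / temperature_k

STRETCH = 1100.0   # wavelength stretch factor quoted in the text
T_EMIT = 3000.0    # assumed temperature of the radiation when it was emitted, in kelvin

emitted_peak = peak_wavelength(T_EMIT)    # near-infrared, just under a micrometre
observed_peak = emitted_peak * STRETCH    # stretched to about a millimetre
observed_temperature = T_EMIT / STRETCH   # black-body temperature scales inversely with the stretch

print(f"emitted peak:  {emitted_peak:.3e} m")
print(f"observed peak: {observed_peak:.3e} m")
print(f"observed temperature: {observed_temperature:.2f} K")
```

Under these assumptions the peak moves from just under a micrometre to roughly a millimetre, which lies in the microwave band, and the corresponding temperature drops to a few kelvin.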

Alternative explanations

Steady state

The redshift hypothesised in the Big Bang model would by itself explain the darkness of the night sky, even if the universe were infinitely old. The steady state cosmological model assumed that the universe is infinitely old and uniform in time as well as space. There is no Big Bang in this model, but there are stars and quasars at arbitrarily great distances. The expansion of the universe will cause the light from these distant stars and quasars to be redshifted (by the Doppler effect), so that the total light flux from the sky remains finite. However, observations of the reduction in [radio] light-flux with distance in the 1950s and 1960s showed that it did not drop as rapidly as the Steady State model predicted. Moreover, the Steady State model predicts that stars should (collectively) be visible at all redshifts (provided that their light is not drowned out by nearer stars, of course). Thus, it does not predict a distinct background at fixed temperature as the Big Bang does. And the steady-state model cannot be modified to predict the temperature distribution of the microwave background accurately.[8]

Finite age of stars

Stars have a finite age and a finite power, thereby implying that each star has a finite impact on a sky's light field density. Edgar Allan Poe suggested that this idea could provide a resolution to Olbers' paradox; a related theory was also proposed by Jean-Philippe de Chéseaux. However, stars are continually being born as well as dying. As long as the density of stars throughout the universe remains constant, regardless of whether the universe itself has a finite or infinite age, there would be infinitely many other stars in the same angular direction, with an infinite total impact. So the finite age of the stars does not explain the paradox.[9]

Brightness

Suppose that the universe were not expanding, and always had the same stellar density; then the temperature of the universe would continually increase as the stars put out more radiation. Eventually, it would reach 3000 K (corresponding to a typical photon energy of 0.3 eV and so a frequency of 7.5×10¹³ Hz), and the photons would begin to be absorbed by the hydrogen plasma filling most of the universe, rendering outer space opaque. This maximal radiation density corresponds to about 1.2×10¹⁷ eV/m³ = 2.1×10⁻¹⁹ kg/m³, which is much greater than the observed value of 4.7×10⁻³¹ kg/m³.[2] Taking the ratio of these two densities, the sky is roughly 4.5×10¹¹ times (several hundred billion times) darker than it would be if the universe were neither expanding nor too young to have reached equilibrium yet.

Fractal star distribution

A different resolution, which does not rely on the Big Bang theory, was first proposed by Carl Charlier in 1908 and later rediscovered by Benoît Mandelbrot in 1974. They both postulated that if the stars in the universe were distributed in a hierarchical fractal cosmology (e.g., similar to Cantor dust), in which the average density of any region diminishes as the region considered increases, it would not be necessary to rely on the Big Bang theory to explain Olbers' paradox. This model would not rule out a Big Bang but would allow for a dark sky even if the Big Bang had not occurred.

Mathematically, the light received from stars as a function of star distance in a hypothetical fractal cosmos is:

$$\text{light} = \int_{r_0}^{\infty} L(r)\,N(r)\,dr$$

where:

$r_0$ = the distance of the nearest star, $r_0 > 0$;

$r$ = the variable measuring distance from the Earth;

$L(r)$ = average luminosity per star at distance $r$;

$N(r)$ = number of stars at distance $r$.

The function of luminosity from a given distance, $L(r)N(r)$, determines whether the light received is finite or infinite. For any luminosity from a given distance $L(r)N(r)$ proportional to $r^a$, the light received is infinite for $a \geq -1$ but finite for $a < -1$. So if $L(r)$ is proportional to $r^{-2}$, then for the light received to be finite, $N(r)$ must be proportional to $r^b$, where $b < 1$. For $b = 1$, the number of stars at a given radius is proportional to that radius. When integrated over the radius, this implies that for $b = 1$, the total number of stars is proportional to $r^2$. This would correspond to a fractal dimension of 2. Thus the fractal dimension of the universe would need to be less than 2 for this explanation to work.

This explanation is not widely accepted among cosmologists since the evidence suggests that the fractal dimension of the universe is at least 2.[10][11][12] Moreover, the majority of cosmologists accept the cosmological principle, which assumes that matter at the scale of billions of light years is distributed isotropically. By contrast, fractal cosmology requires anisotropic matter distribution at the largest scales.
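The convergence condition on the exponent a in the fractal argument can be checked with a short calculation. The sketch below evaluates the integral of r^a from r0 out to a finite cutoff in closed form and lets the cutoff grow; the function name, the cutoff values, and the choice r0 = 1 are assumptions made for illustration.

```python
import math

def received_light(a, r0, r_max):
    """Closed-form integral of r**a from r0 to r_max (proportionality constant set to 1)."""
    if a == -1:
        # Special case: the antiderivative of 1/r is the natural logarithm.
        return math.log(r_max / r0)
    return (r_max ** (a + 1) - r0 ** (a + 1)) / (a + 1)

# Push the outer cutoff further and further out for three exponents.
for a in (-2.0, -1.0, 0.0):
    values = [received_light(a, 1.0, r_max) for r_max in (1e2, 1e4, 1e6)]
    print(a, values)
```

For a = −2 the values settle towards a finite limit as the cutoff grows, while for a = −1 they keep growing logarithmically and for a = 0 they grow linearly, consistent with the threshold at a = −1.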

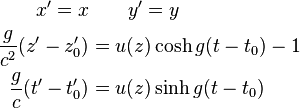

in addition to its rest-frame Coulomb field. This radiation electric field has an accompanying magnetic field, and the whole oscillating electromagnetic radiation field propagates independently of the accelerated charge, carrying away momentum and energy. The energy in the radiation is provided by the work that accelerates the charge. We understand a

is the speed of light, is proper time, are the usual coordinates of space and time, is the acceleration of the gravitational field, and is an arbitrary function of the coordinate but must approach the observed Newtonian value of . This is the metric for the gravitational field measured by the supported observer.

uniformly accelerated at rate
