From Wikipedia, the free encyclopedia

Blindsight is the ability of people who are cortically blind due to lesions in their striate cortex, also known as primary visual cortex or V1, to respond to visual stimuli that they do not consciously see.[1] The majority of studies on blindsight are conducted on patients who have the "blindness" on only one side of their visual field. Following the destruction of the striate cortex, patients are asked to detect, localize, and discriminate amongst visual stimuli that are presented to their blind side, often in a forced-response or guessing situation, even though they don't consciously recognise the visual stimulus. Research shows that blind patients achieve a higher accuracy than would be expected from chance alone. Type 1 blindsight is the term given to this ability to guess—at levels significantly above chance—aspects of a visual stimulus (such as location or type of movement) without any conscious awareness of any stimuli. Type 2 blindsight occurs when patients claim to have a feeling that there has been a change within their blind area—e.g. movement—but that it was not a visual percept.[2] Blindsight challenges the common belief that perceptions must enter consciousness to affect our behavior;[3] it shows that our behavior can be guided by sensory information of which we have no conscious awareness.[3] It may be thought of as a converse of the form of anosognosia known as Anton–Babinski syndrome, in which there is full cortical blindness along with the confabulation of visual experience.
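Performance "significantly above chance" in a forced-choice task is usually judged with a statistical test. The Python sketch below shows one common option, an exact one-sided binomial test. It is an illustration only, not the analysis used in the cited studies; the function name `above_chance_p` and the trial counts are hypothetical.

```python
from math import comb


def above_chance_p(correct: int, trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial test.

    Returns the probability of getting `correct` or more right answers out of
    `trials` if every response were a pure guess that succeeds with
    probability `chance` (0.5 for a two-alternative forced choice).
    """
    if not 0 <= correct <= trials:
        raise ValueError("correct must lie between 0 and trials")
    if not 0.0 < chance < 1.0:
        raise ValueError("chance must be strictly between 0 and 1")
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Hypothetical session: 100 two-alternative trials in the blind field, 70 correct.
p = above_chance_p(correct=70, trials=100, chance=0.5)
print(f"p = {p:.2e}")  # a small p means guessing alone rarely scores this well
```

With four response alternatives (for example up, down, left, or right), `chance` would be 0.25 instead. A small p-value only says that the score is unlikely under pure guessing; whether the patient was aware of the stimulus has to be assessed separately.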

The LGN plays a major role in blindsight.[16][17][19][20] Although injury to V1 causes a loss of vision, the LGN is credited with the residual vision that remains, which justifies the word "sight" in blindsight. Importantly, the LGN can bypass V1 and still communicate with the extrastriate areas of the brain, producing the responses to visual stimuli that are seen in blindsight patients.[16][17][19][20] Using techniques such as fMRI and other indirect methods, blindsight can be attributed to V1 damage combined with a functioning LGN.

Also that year, Cowey published a second paper, "Visual detection in monkeys with blindsight". In this paper he set out to show that monkeys, too, could be conscious of movement in their deficit visual field despite not being consciously aware of the presence of an object there. To do this, Cowey used another standard test for humans with the condition. The test was similar to the one he had used previously, but in this trial the object was presented only in the deficit visual field, and it moved: starting from the center of the deficit visual field, the object moved up, down, or to the right. The monkeys performed identically to humans on the test, identifying the direction correctly almost every time. This showed that the monkeys' ability to detect movement is separate from their ability to consciously detect an object in their deficit visual field, and it gave further evidence for the claim that damage to the striate cortex plays a large role in causing the disorder.[22]

Several years later, Cowey published another paper in which he compared the data collected from his monkeys with data from a specific human patient with blindsight, GY. GY's striate cortex had been damaged through trauma at the age of eight. Although he retained most of his functioning, GY was not consciously aware of anything in his right visual field. By comparing brain scans of GY and of the monkeys he had worked with, as well as their test results, Cowey concluded that the effects of striate cortical damage are the same in both species. This finding provided strong validation for Cowey's earlier work with monkeys and showed that monkeys can serve as accurate test subjects for blindsight research.[23]

Researchers applied the same type of tests that had been used to study blindsight in animals to a patient referred to as DB. The standard techniques for assessing visual acuity in humans involved asking the person to verbally describe some visually recognizable aspect of one or more objects. DB was instead given forced-choice tasks, meaning that even when DB was not visually conscious of the presence, location, or shape of an object, DB still had to attempt a guess. The results of these "guesses" showed that DB could determine shape and detect movement at some unconscious level, despite having no visual awareness of doing so. DB attributed the accuracy of the guesses to mere coincidence.[24]

The discovery of blindsight raised questions about how different types of visual information, including unconscious information, may be affected, or sometimes left unaffected, by damage to different areas of the visual cortex.[25] Previous studies had already demonstrated that even without conscious awareness of visual stimuli, humans could still determine certain visual features such as presence in the visual field, shape, orientation, and movement.[24] A newer study, however, showed that if the damage to the visual cortex occurs in areas above the primary visual cortex, conscious awareness of visual stimuli itself is not impaired.[25] Blindsight shows that even when the primary visual cortex is damaged or removed, a person can still perform actions guided by unconscious visual information. So even when damage occurs in the area necessary for conscious awareness of visual information, other aspects of processing these visual percepts remain available to the individual.[24] The same holds for damage to other areas of the visual cortex: if an area responsible for a certain function is damaged, only that particular function is lost, while functions handled by other parts of the visual cortex remain intact.[25]

Alexander and Cowey investigated how the brightness contrast of stimuli affects blindsight patients' ability to discern movement. Prior studies had already shown that blindsight patients are able to detect motion even though they claim not to see any visual percepts in their blind fields.[24] The subjects of the study were two patients with hemianopsia, that is, blindness in one half of the visual field. Both subjects had previously displayed the ability to accurately determine the presence of visual stimuli in their blind hemifields without acknowledging an actual visual percept.[26]

To test the effect of brightness on the subjects' ability to detect motion, the researchers used a white background with a series of colored dots. They varied the brightness contrast between the dots and the white background from trial to trial to see whether the participants performed better when the difference in brightness was larger.[26] In each trial the participants faced the display for two time intervals of equal length and then told the researchers whether they thought the dots had been moving during the first or the second interval.[26]

When the brightness contrast between the background and the dots was higher, both subjects discerned motion more accurately than would be expected from guessing alone. However, one of the subjects could not accurately determine whether blue dots were moving, regardless of the brightness contrast, although this subject could do so with dots of every other color.[26] When the contrast was highest, the subjects judged whether the dots were moving with very high accuracy. Even when the dots were white but of a different brightness from the background, the subjects could still determine whether they were moving. Regardless of the dots' color, however, the subjects could not tell whether the dots were in motion when the dots and the white background were of similar brightness.[26]
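The two-interval design described above is a two-alternative forced choice, so chance performance is 50% correct. One standard way to compare results across contrast levels is the signal-detection index d′, which for two-interval tasks is √2 · z(Pc), where z is the inverse of the standard normal distribution and Pc is the proportion correct. The study as summarized here does not report d′; the sketch below, including the function name `dprime_2ifc` and the trial counts, is a hypothetical illustration.

```python
from math import sqrt
from statistics import NormalDist


def dprime_2ifc(n_correct: int, n_trials: int) -> float:
    """Sensitivity d' for a two-interval forced-choice task: sqrt(2) * z(Pc).

    Perfect (or zero) scores would make z infinite, so the proportion correct
    is clamped to [1/(2N), 1 - 1/(2N)], a common convention.
    """
    if n_trials <= 0 or not 0 <= n_correct <= n_trials:
        raise ValueError("need 0 <= n_correct <= n_trials and n_trials > 0")
    pc = n_correct / n_trials
    pc = min(max(pc, 1 / (2 * n_trials)), 1 - 1 / (2 * n_trials))
    return sqrt(2) * NormalDist().inv_cdf(pc)


# Hypothetical counts (correct, trials) for one subject at three contrast levels.
results = {
    "high contrast": (95, 100),
    "medium contrast": (75, 100),
    "matched brightness": (51, 100),
}
for condition, (correct, trials) in results.items():
    d = dprime_2ifc(correct, trials)
    print(f"{condition:>18}: {correct / trials:.0%} correct, d' = {d:.2f}")
```

A d′ near 0 corresponds to guessing (50% correct with two intervals), and it grows as the interval containing motion becomes easier to pick out, so it expresses "better than guessing" on a single scale across conditions.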

Kentridge, Heywood, and Weiskrantz used blindsight to investigate the connection between visual attention and visual awareness. They wanted to see whether their subject, who had exhibited blindsight in other studies,[26] could react more quickly when attention was cued to a stimulus of which the subject had no visual awareness. The researchers wanted to show that being conscious of a stimulus and paying attention to it are not the same thing.[27]

To test the relationship between attention and awareness, they had the participant try to determine where a target line appeared on a computer screen and whether it was oriented horizontally or vertically.[27] The target line appeared at one of two locations and in one of two orientations. Before the target appeared, an arrow was shown on the screen as a cue: usually it pointed to the position where the target line would appear, but less frequently it did not. The participant pressed a key to indicate whether the line was horizontal or vertical, and could then also tell an observer whether they had any feeling that an object was there, even if they could not see anything. The participant accurately determined the orientation of the line when the target was cued by an arrow before it appeared, even though the target fell in a part of the visual field where the participant had no vision and produced no awareness. The study showed that even without being visually aware of a stimulus, the participant could still focus attention on it.[27]
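In a cueing design like this one, the key comparison is between trials where the arrow pointed to the target's actual location (valid cues) and trials where it did not (invalid cues). The Python sketch below tallies accuracy and mean response time separately for each trial type. The `Trial` record, its fields, and the example trials are hypothetical and are not the data or analysis of Kentridge, Heywood, and Weiskrantz.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    cue_valid: bool   # did the arrow point to where the target line appeared?
    correct: bool     # was the horizontal/vertical judgement right?
    rt_ms: float      # time from target onset to key press, in milliseconds


def summarize(trials: list[Trial]) -> dict[str, float]:
    """Accuracy per cue type, plus the response-time cost of an invalid cue."""
    def subset(valid: bool) -> list[Trial]:
        return [t for t in trials if t.cue_valid == valid]

    def accuracy(ts: list[Trial]) -> float:
        return sum(t.correct for t in ts) / len(ts)

    def mean_correct_rt(ts: list[Trial]) -> float:
        # Response-time comparisons commonly use correct responses only.
        rts = [t.rt_ms for t in ts if t.correct]
        return sum(rts) / len(rts)

    valid, invalid = subset(True), subset(False)
    return {
        "accuracy_valid": accuracy(valid),
        "accuracy_invalid": accuracy(invalid),
        # Positive values mean responses were faster after a valid cue.
        "rt_cost_ms": mean_correct_rt(invalid) - mean_correct_rt(valid),
    }


# Hypothetical blind-field trials; valid cues are more frequent, as in the design.
trials = [
    Trial(True, True, 410.0), Trial(True, True, 395.0), Trial(True, False, 430.0),
    Trial(True, True, 405.0), Trial(False, True, 470.0), Trial(False, False, 480.0),
]
print(summarize(trials))
```

The sketch assumes each cue type has at least one trial and at least one correct response; a real analysis would guard against empty groups and use many more trials.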

In 2003, a patient known as TN lost the use of his primary visual cortex, area V1. Two successive strokes knocked out the region in both his left and right hemispheres. After the strokes, ordinary tests of TN's sight turned up nothing; he could not even detect large objects moving right in front of his eyes. Researchers eventually began to notice that TN showed signs of blindsight, and in 2008 they decided to test this. They took TN into a hallway and asked him to walk through it without the cane he had carried ever since the strokes. Unknown to TN, the researchers had placed various obstacles in the hallway to test whether he could avoid them without conscious use of his sight. To the researchers' delight, he moved around every obstacle with ease, at one point even pressing himself against the wall to squeeze past a trash can placed in his way. After navigating the hallway, TN reported that he had simply been walking the way he wanted to, not because he knew anything was there. (de Gelder, 2008)

Another case study, described in Carlson's "Physiology of Behavior" (11th edition), gives further insight into blindsight. A girl had brought her grandfather, Mr. J., to see a neuropsychologist, Dr. M. Mr. J. had had a stroke that left him completely blind apart from a tiny spot in the middle of his visual field. Dr. M. helped Mr. J. to a chair, had him sit down, and asked to borrow his cane. The doctor then said, "Mr. J., please look straight ahead. Keep looking that way, and don't move your eyes or turn your head. I know that you can see a little bit straight ahead of you, and I don't want you to use that piece of vision for what I'm going to ask you to do. Fine. Now, I'd like you to reach out with your right hand [and] point to what I'm holding." Mr. J. replied, "But I don't see anything; I'm blind!" The doctor said, "I know, but please try, anyway." Mr. J. shrugged and pointed, and was surprised when his finger encountered the end of the cane, which the doctor was pointing toward him. Mr. J. said that "it was just luck". The doctor then turned the cane around so that the handle was pointing toward Mr. J. and asked him to grab hold of it. Mr. J. reached out with an open hand and grabbed the cane. The doctor said, "Good. Now put your hand down, please." He then rotated the cane 90 degrees so that the handle was oriented vertically and asked Mr. J. to reach for it again. Mr. J. did so, turning his wrist so that his hand matched the orientation of the handle.

This case study shows that although Mr. J. was consciously unaware of any visual abilities he may have had, he was able to orient his grasping movements as if he had no visual impairment.[3]

Electrophysiological evidence from the late 1970s (de Monasterio, 1978; Marrocco & Li, 1977; Schiller & Malpeli, 1977) indicated that there is no direct retinal input from S-cones to the superior colliculus, implying that the perception of color information should be impaired in blindsight. However, more recent evidence points to a pathway from S-cones to the superior colliculus, contradicting de Monasterio's earlier research and supporting the idea that some chromatic processing mechanisms remain intact in blindsight.[29][30]

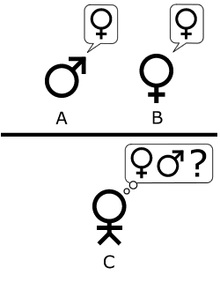

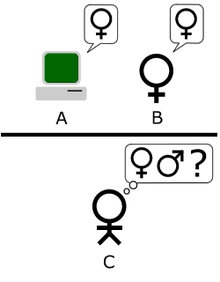

Marco Tamietto and Beatrice de Gelder performed experiments linking emotion detection and blindsight. Patients shown images of people expressing emotions in their blind field correctly guessed the emotion most of the time. The activity of the patients' facial muscles used in smiling and frowning was also measured, and it changed in ways that matched the kind of emotion in the unseen image. This indicates that the emotions were recognized without involving conscious sight.

A recent study found that a young woman with a unilateral lesion of area V1 could scale her grasping movement as she reached out to pick up objects of different sizes placed in her blind field, even though she could not report the sizes of the objects.[31] Similarly, another patient with unilateral lesion of area V1 could avoid obstacles placed in his blind field when he reached toward a target that was visible in his intact visual field.[32] Even though he avoided the obstacles, he never reported seeing them.

Dr. Cowey wrote extensively about patient GY and the tests he underwent, to provide further evidence that blindsight is functionally different from conscious vision. In these tests GY was asked not only to discriminate whether or not a stimulus had been presented, but also to report the opposite of the stimulus's direction of travel: if the stimulus moved upward, he was to indicate that it moved downward, and if it moved downward, he was to indicate that it moved upward. GY did this with very high accuracy in his left visual field, but in his deficit visual field he consistently reported the wrong direction of travel. This indicated that although GY was aware of the movement, he was not able to apply the researchers' rule to his observations.[33]

In more detail, recent physiological findings suggest that visual processing takes place along several independent, parallel pathways: one system processes information about shape, one about color, and one about movement, location, and spatial organization. This information passes through the lateral geniculate nucleus, located in the thalamus, and on to the primary visual cortex, area V1 (also known as the striate cortex because of its striped appearance), for processing. People with damage to V1 report no conscious vision, no visual imagery, and no visual images in their dreams. However, some of these people still experience the blindsight phenomenon. (Kalat, 2009)

The superior colliculus and prefrontal cortex also have a major role in awareness of a visual stimulus.[34]

History

We owe much of our current understanding of blindsight to early experiments on monkeys. One monkey in particular, Helen, could be considered the "star monkey in visual research" because she was the original blindsight subject. Helen was a macaque monkey that had been decorticated; specifically, her primary visual cortex (V1) was completely removed. As expected, the procedure left Helen blind according to the typical tests for blindness. Nevertheless, in certain specific situations, Helen exhibited sighted behavior. Her pupils would dilate and she would blink at stimuli that threatened her eyes. Furthermore, under certain experimental conditions, she could detect a variety of visual stimuli, such as the presence and location of objects, as well as shape, pattern, orientation, motion, and color.[4][5][6] In many cases she was able to navigate her environment and interact with objects as if she were sighted.[7]

A similar phenomenon was also discovered in humans. Subjects who had suffered damage to their visual cortices due to accidents or strokes reported partial or total blindness. In spite of this, when prompted they could "guess" with above-chance accuracy about the presence and details of objects, much like the animal subjects, and they could even catch objects that were tossed at them. Notably, the subjects never developed any confidence in their abilities. Even when told of their successes, they would not begin to make "guesses" about objects spontaneously but still required prompting. Furthermore, blindsight subjects rarely express the amazement about their abilities that sighted people would expect them to express.[8]

Describing blindsight

The brain contains several mechanisms involved in vision. Consider two systems in the brain that evolved at different times. The first to evolve is more primitive and resembles the visual system of animals such as fish and frogs. The second to evolve is more complex and is possessed by mammals. The second system seems to be responsible for our ability to perceive the world around us, while the first is devoted mainly to controlling eye movements and orienting our attention to sudden movements in our periphery. Patients with blindsight have damage to the second, "mammalian" visual system (the visual cortex of the brain and some of the nerve fibers that bring information to it from the eyes).[3] The phenomenon shows how, after the more complex visual system is damaged, people can use the primitive visual system of their brains to guide hand movements toward an object even though they cannot see what they are reaching for.[3] Hence, visual information can control behavior without producing a conscious sensation. The ability of people with blindsight to respond to objects they are not conscious of suggests that consciousness is not a general property of all parts of the brain; rather, only certain parts of the brain play a special role in consciousness.[3]

Blindsight patients show awareness of single visual features, such as edges and motion, but cannot gain a holistic visual percept. This suggests that perceptual awareness is modular and that, in sighted individuals, there is a "binding process that unifies all information into a whole percept", which is interrupted in patients with conditions such as blindsight and visual agnosia.[1] Therefore, object identification and object recognition are thought to be separate processes that occur in different areas of the brain and work independently of one another. The modular theory of object perception and integration would account for the "hidden perception" experienced by blindsight patients. Research has shown that the single visual features of sharp borders, sharp onset/offset times,[9] motion,[10] and low spatial frequency[11] contribute to, but are not strictly necessary for, an object's salience in blindsight.

Theories of causation

There are three theories that attempt to explain blindsight. The first states that after damage to area V1, other branches of the optic nerve deliver visual information to the superior colliculus and several other areas, including parts of the cerebral cortex. These areas might control the blindsight responses; however, many people with damage to area V1 do not show blindsight, or show it only in certain parts of the visual field.

Another explanation of the phenomenon is that even though the majority of a person's visual cortex may be damaged, tiny islands of healthy tissue remain. These islands are not large enough to provide conscious perception, but are nevertheless enough for blindsight. (Kalat, 2009)

A third theory is that the information required to determine the distance to and velocity of an object in object space is determined by the lateral geniculate nucleus before the information is projected to the cerebral cortex. In the normal subject these signals are used to merge the information from the eyes into a three-dimensional representation (which includes the position and velocity of individual objects relative to the organism), extract a vergence signal to benefit the precision (previously auxiliary) optical system (POS), and extract a focus control signal for the lenses of the eyes. The stereoscopic information is attached to the object information passed to the cerebral cortex.[12]

Evidence of blindsight can be observed indirectly in children as young as two months, although it is difficult to determine the type of blindsight in a patient who is not old enough to answer questions.[13]

Blindsight and the lateral geniculate nucleus

Blindsight is a disorder in which the individual sustains damage to the primary visual cortex and, as a result, loses sight in the corresponding visual field.[14] Patients are nevertheless able to detect stimuli in that damaged visual field, which accounts for the "sight" in the term blindsight. Mosby's Dictionary of Medicine, Nursing & Health Professions defines the lateral geniculate nucleus (LGN) as "one of two elevations of the lateral posterior thalamus receiving visual impulses from the retina via the optic nerves and tracts and relaying the impulses to the calcarine (visual) cortex".[15] In particular, the magnocellular system of the LGN is less affected by the removal of V1, which suggests that blindsight occurs because of this system in the LGN.[16] In other words, although damage to the primary visual cortex (V1) is what causes the blindness, the still-functioning magnocellular system of the LGN is what produces the sight in blindsight. According to Dragoi of the UT Medical School at Houston, the LGN is made up of six layers, of which layers 1 and 2 are the magnocellular layers. The cells in these layers behave like M retinal ganglion cells:[17] they are most sensitive to movement of visual stimuli[17] and have large center-surround receptive fields.[17] M retinal ganglion cells project to the magnocellular layers.[17]

For a better understanding, the visual pathways are described in more detail here. What is seen in the left and right visual fields is taken in by each eye and carried to the optic disk via the nerve fiber layer of the retina.[17] From there, the visual information travels along the optic nerve to the optic chiasm and then along the optic tract, which terminates in four areas of the brain: the LGN, the superior colliculus, the pretectum of the midbrain, and the suprachiasmatic nucleus of the hypothalamus. Most, but not all, axons from the LGN terminate in the primary visual cortex.[17]

In normal, healthy individuals, brain scans were conducted to test whether visual motion information can bypass V1 through a connection from the LGN to the human middle temporal complex (hMT+).[18] The findings showed that visual motion information does travel directly from the LGN to hMT+, without passing through V1.[18] Alan Cowey also states that after traumatic injury to V1, a direct pathway from the retina to the LGN remains, and from the LGN the information is sent on to the extrastriate visual areas.[19] The extrastriate visual areas are the parts of the occipital lobe that surround V1.[17] In non-human primates, these can include V2, V3, and V4.[17]

In a study of primates, the V2 and V3 regions of the brain were still excited by visual stimuli after part of V1 had been removed.[19] According to Lawrence Weiskrantz, "the LGN projections that survive V1 removal are relatively sparse in density, but are nevertheless widespread and probably encompass all extrastriate visual areas".[20] This was established through indirect testing methods: the influence of a stimulus presented in the blind hemifield was measured against that of the same stimulus presented in the intact hemifield, and reflex measures such as electrical skin conductance were also used.[20] These methods, combined with MRI, produced the results that Weiskrantz reported.[20] The finding also suggests that after the removal of V1, neurons from the LGN remain and send visual information to V2, V4, V5 and TEO.[20]

Injury to the primary visual cortex, including lesions and other trauma, leads to the loss of visual experience.[16] The residual vision that remains, however, cannot be attributed to V1. According to the study by Schmid, Mrowka, Turchi, Saunders, Wilke, Peters, Ye & Leopold, the "thalamic lateral geniculate nucleus has a causal role in V1-independent processing of visual information". The authors established this through fMRI experiments in monkeys with a V1 lesion, comparing activity during activation and inactivation of the LGN to determine the contribution of the LGN to visual experience. Once the LGN was inactivated, virtually none of the extrastriate areas of the brain showed an fMRI response.[16] The study also reported that "scotoma stimulation, with the LGN intact had fMRI activation of ~20% of that under normal conditions".[16] This finding agrees with fMRI images of patients with blindsight.[16]

These findings support the theory that the LGN is what houses this residual vision after damage to V1. The same study[16] also describes patient GY, who presented with blindsight. The researchers found that the LGN is less affected by a V1 injury, as many of its neurons still respond to lower-visual-field stimulation.[16] All residual vision was lost, however, when the LGN was injured.[16] This further supports the idea that the LGN is preserved after injury to V1 and accounts for the "sight" in blindsight.

The LGN plays a major role in blindsight.[16][17][19][20] Although injury to V1 does cause a loss of vision, the LGN is credited with the residual vision that remains, justifying the word "sight" in blindsight. It is important to recognize that the LGN can bypass V1 and still communicate with the extrastriate areas of the brain, producing the responses to visual stimuli seen in blindsight patients.[16][17][19][20] Using techniques such as fMRI and other indirect methods, blindsight can be attributed to V1 damage combined with a functioning LGN.

Evidence in animals

In 1995 Dr. Cowey published the paper "Blindsight in Monkeys". At the time, blindsight was a poorly established phenomenon that was believed to be caused by damage due to stroke. However, such patients were rare, and it was hard to separate the areas responsible for the condition from other damage the patients had sustained. In this experiment Cowey attempted to show that monkeys with lesions in, or complete removal of, the striate cortex also showed blindsight. To do this he had the monkeys complete a task similar to the tasks commonly used with human patients who have the disorder. The monkeys were placed in front of a monitor and taught to distinguish trials in which an object was present in their visual field when a tone was played from trials in which nothing was present. Because the lesion left the monkeys unable to consciously see anything in the affected (right) visual field, if they classified blank trials, in which no object was presented, the same as trials in which something appeared on the right, they would be responding in the same way as a human with blindsight. Cowey hoped this would provide evidence for his claim that the striate cortex was key to the disorder, and he did find that the monkeys performed very similarly to human participants.[21]

Also that year, Cowey published a second paper, "Visual detection in monkeys with blindsight". In this paper he wanted to show that monkeys, too, could detect movement in their deficit visual field despite not being consciously aware of the presence of an object there. To do this Cowey used another standard test for humans with the condition. The test was similar to the one he had used previously, but in these trials the object was presented only in the deficit visual field, and it moved. Starting from the center of the deficit visual field, the object moved up, down, or to the right. The monkeys performed identically to humans on the test, responding correctly on almost every trial. This showed that the monkeys' ability to detect movement is separate from their ability to consciously detect an object in their deficit visual field, and gave further evidence for the claim that damage to the striate cortex plays a large role in causing the disorder.[22]

Several years later, Cowey published another paper comparing the data collected from his monkeys with that of a specific human patient with blindsight, GY. GY's striate cortex was damaged through trauma at the age of eight; although he retained full functionality for the most part, he was not consciously aware of anything in his right visual field. By comparing brain scans of GY and of the monkeys he had worked with, as well as their test results, Cowey concluded that the effects of striate cortical damage are the same in both species. This finding provided strong validation for Cowey's previous work with monkeys and showed that monkeys can serve as accurate test subjects for blindsight.[23]

Case studies

Researchers first began what would become the study of blindsight when it was observed that monkeys whose primary visual cortex had been removed could still, to some extent, discern shape, spatial location, and movement.[24] Humans, however, appeared to lose their sense of sight entirely when the visual cortex was damaged. Researchers were nonetheless able to show that human blindsight patients did exhibit some unconscious visual recognition when they were tested with the same techniques used to study blindsight in animals.[24] The researchers applied these tests to a patient referred to as DB. The usual techniques for assessing visual acuity in humans involved asking them to describe verbally some visually recognizable aspect of an object or objects. DB was instead given forced-choice tasks to complete. This meant that even if DB was not visually conscious of the presence, location, or shape of an object, DB still had to guess. The results of these "guesses" showed that DB was able to determine shape and detect movement at some unconscious level, despite not being visually aware of doing so. DB attributed the accuracy of the guesses to mere coincidence.[24]

The discovery of blindsight raised questions about how different types of visual information, even unconscious information, may be affected, or sometimes left unaffected, by damage to different areas of the visual cortex.[25] Previous studies had already demonstrated that even without conscious awareness of visual stimuli, humans could still determine certain visual features such as presence in the visual field, shape, orientation and movement.[24] A newer study, however, showed that if the damage occurs in visual areas above the primary visual cortex, the conscious awareness of visual stimuli itself is not impaired.[25] Blindsight shows that even when the primary visual cortex is damaged or removed, a person can still perform actions guided by unconscious visual information. So even when damage occurs in the area necessary for conscious awareness of visual information, other aspects of processing these visual percepts remain available to the individual.[24] The same holds for damage to other areas of the visual cortex: if an area of the cortex responsible for a certain function is damaged, only that particular function is lost, while the functions handled by other parts of the visual cortex remain intact.[25]

Alexander and Cowey investigated how the brightness contrast of stimuli affects blindsight patients' ability to discern movement. Prior studies had already shown that blindsight patients are able to detect motion even though they claim not to see any visual percepts in their blind fields.[24] The subjects of the study were two patients with hemianopsia, that is, blindness in one half of the visual field. Both subjects had previously shown the ability to determine accurately the presence of visual stimuli in their blind hemifields without acknowledging an actual visual percept.[26]

To test the effect of brightness on the subjects' ability to detect motion, the researchers used a white background with a series of colored dots. In each trial they altered the brightness contrast between the dots and the white background, to see whether the participants performed better or worse when the difference in brightness was larger.[26] The participants faced the display, which was presented in two time intervals of equal length, and told the researchers whether they thought the dots were moving during the first or the second interval.[26]

When the brightness contrast between the background and the dots was higher, both subjects could discern motion more accurately than they would have by guessing alone. However, one of the subjects could not determine whether blue dots were moving, regardless of the brightness contrast, but could do so with dots of every other color.[26] When the contrast was highest, the subjects could tell whether the dots were moving with very high accuracy. Even when the dots were white, but of a different brightness from the background, the subjects could still determine whether they were moving. However, regardless of the dots' color, the subjects could not tell whether the dots were moving when the white background and the dots were of similar brightness.[26]
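Whether a score counts as "more accurate than guessing" in a two-interval task like this one can be checked with an exact one-sided binomial test, since a guessing observer would pick the correct interval with probability 0.5 on each trial. The following Python sketch is illustrative only: it assumes independent trials and a fixed chance level, the function name `p_value_above_chance` is hypothetical, and it is not necessarily the analysis Alexander and Cowey used.

```python
from math import comb


def p_value_above_chance(correct: int, trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial test (illustrative sketch).

    Returns the probability of getting at least `correct` right answers out
    of `trials` if the observer were purely guessing with success rate
    `chance` (0.5 for a two-interval forced-choice task).
    """
    if trials < 1 or not 0 <= correct <= trials:
        raise ValueError("need trials >= 1 and 0 <= correct <= trials")
    if not 0.0 < chance < 1.0:
        raise ValueError("chance must lie strictly between 0 and 1")
    # Sum the binomial probabilities of every outcome at least as good as observed.
    return sum(
        comb(trials, k) * chance**k * (1.0 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


if __name__ == "__main__":
    # Hypothetical scores for illustration, not data from the study.
    for correct in (70, 52):
        p = p_value_above_chance(correct, 100)
        print(f"{correct}/100 correct: p = {p:.2g}")
```

With these made-up numbers, 70 correct out of 100 would be very unlikely under pure guessing, while 52 out of 100 would be entirely consistent with chance.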

Kentridge, Heywood, and Weiskrantz used the phenomenon of blindsight to investigate the connection between visual attention and visual awareness. They wanted to see whether their subject, who had exhibited blindsight in other studies,[26] could react more quickly when attention was cued, without being visually aware of the cue. The researchers wanted to show that being conscious of a stimulus and paying attention to it are not the same thing.[27]

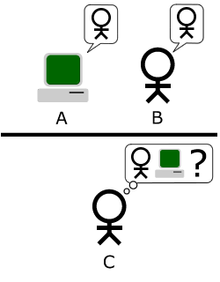

To test the relationship between attention and awareness, the researchers had the participant determine where a target line appeared on a computer screen and whether it was oriented horizontally or vertically.[27] The target line could appear at one of two locations and in one of two orientations. Before the target appeared, an arrow was shown on the screen as a cue for the subject; usually it pointed to the position where the target would appear, and less often it did not. The participant pressed a key to indicate whether the line was horizontal or vertical, and could then also tell an observer whether there had been any feeling that an object was present, even without seeing anything. The participant was able to determine the orientation of the line accurately when the arrow cued the target beforehand, even though these stimuli appeared in a part of the visual field where the participant had no vision and so did not produce awareness. The study showed that even without being visually aware of a stimulus, the participant could still focus attention on it.[27]

In 2003, a patient known as TN lost the use of his primary visual cortex, area V1. He had two successive strokes, which knocked out the region in both his left and right hemispheres. After the strokes, ordinary tests of TN's sight turned up nothing; he could not even detect large objects moving right in front of his eyes. Researchers eventually noticed that TN showed signs of blindsight, and in 2008 they decided to test this idea. They took TN into a hallway and asked him to walk through it without the cane he had carried since the strokes. TN was not aware at the time that the researchers had placed various obstacles in the hallway to test whether he could avoid them without conscious use of his sight. To the researchers' delight, he moved around every obstacle with ease, at one point even pressing himself against the wall to squeeze past a trash can placed in his way. After navigating the hallway, TN reported that he was just walking the way he wanted to, not because he knew anything was there. (de Gelder, 2008)

Another case study, described in Carlson's "Physiology of Behavior, 11th edition", gives further insight into blindsight. In this case, a girl brought her grandfather to see a neuropsychologist. The girl's grandfather, Mr. J., had had a stroke that left him completely blind apart from a tiny spot in the middle of his visual field. The neuropsychologist, Dr. M., performed an exercise with him. The doctor helped Mr. J. to a chair, had him sit down, and then asked to borrow his cane. The doctor then said, "Mr. J., please look straight ahead. Keep looking that way, and don't move your eyes or turn your head. I know that you can see a little bit straight ahead of you, and I don't want you to use that piece of vision for what I'm going to ask you to do. Fine. Now, I'd like you to reach out with your right hand [and] point to what I'm holding." Mr. J. replied, "But I don't see anything—I'm blind!" The doctor said, "I know, but please try, anyway." Mr. J. shrugged and pointed, and was surprised when his finger encountered the end of the cane, which the doctor was pointing toward him. Afterwards, Mr. J. said that "it was just luck". The doctor then turned the cane around so that the handle was pointing toward Mr. J. and asked him to grab hold of it. Mr. J. reached out with an open hand and grabbed the cane. The doctor then said, "Good. Now put your hand down, please." He rotated the cane 90 degrees, so that the handle was oriented vertically, and asked Mr. J. to reach for the cane again. Mr. J. did so, turning his wrist so that his hand matched the orientation of the handle.

This case study shows that although Mr. J. was consciously unaware of any visual abilities he may have had, he was able to orient his grasping movements as if he had no visual impairment.[3]

Research

Lawrence Weiskrantz and colleagues showed in the early 1970s that, if forced to guess whether a stimulus is present in their blind field, some observers do better than chance.[28] This ability to detect stimuli that the observer is not conscious of can extend to discriminating the type of stimulus (for example, whether an 'X' or an 'O' has been presented in the blind field).

Electrophysiological evidence from the late 1970s (de Monasterio, 1978; Marrocco & Li, 1977; Schiller & Malpeli, 1977) showed that there is no direct retinal input from S-cones to the superior colliculus, implying that the perception of color information should be impaired. However, more recent evidence points to a pathway from S-cones to the superior colliculus, contradicting de Monasterio's earlier research and supporting the idea that some chromatic processing mechanisms are intact in blindsight.[29][30]

Marco Tamietto & Beatrice de Gelder performed experiments linking emotion detection and blindsight. Patients shown images of people expressing emotions on their blind side guessed the emotion correctly most of the time. The activity of the facial muscles used in smiling and frowning was measured, and it changed in ways that matched the kind of emotion in the unseen image. Therefore, the emotions were recognized without involving conscious sight.

A recent study found that a young woman with a unilateral lesion of area V1 could scale her grasping movement as she reached out to pick up objects of different sizes placed in her blind field, even though she could not report the sizes of the objects.[31] Similarly, another patient with unilateral lesion of area V1 could avoid obstacles placed in his blind field when he reached toward a target that was visible in his intact visual field.[32] Even though he avoided the obstacles, he never reported seeing them.

Dr. Cowey wrote extensively about patient GY and the tests he underwent, to provide further evidence that blindsight is functionally different from conscious vision. To do this, GY was asked not only to discriminate whether or not a stimulus had been presented, but also to state the opposite of that stimulus's direction of travel. That is, if the stimulus was traveling upward, he was to indicate that it was moving downward, and if it was moving downward, he was to indicate that it moved upward. GY was able to do this with high accuracy in his left visual field, but he consistently stated the wrong direction of travel in his deficit visual field. This indicated that although GY was aware of the movement, he was not able to apply the rule stated by the researchers to his observations.[33]

Brain regions involved

Visual processing occurs in the brain in a hierarchical series of stages (with much crosstalk and feedback between areas). Destruction of the primary visual cortex leads to blindness in the part of the visual field that corresponds to the damaged cortical representation. The area of blindness, known as a scotoma, is in the visual field opposite the damaged hemisphere and can vary from a small area up to the entire hemifield. The route from the retina through V1 is not the only visual pathway into the cortex, though it is by far the largest; it is commonly thought that the residual performance of people exhibiting blindsight is due to preserved pathways into the extrastriate cortex that bypass V1. What is surprising is that activity in these extrastriate areas is apparently insufficient to support visual awareness in the absence of V1.

In more detail, recent physiological findings suggest that visual processing takes place along several independent, parallel pathways. One system processes information about shape, one about color, and one about movement, location and spatial organization. This information moves through an area of the brain called the lateral geniculate nucleus, located in the thalamus, and on to be processed in the primary visual cortex, area V1 (also known as the striate cortex because of its striped appearance). People with damage to V1 report no conscious vision, no visual imagery, and no visual images in their dreams. However, some of these people still experience the blindsight phenomenon. (Kalat, 2009)

The superior colliculus and prefrontal cortex also have a major role in awareness of a visual stimulus.[34]