From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Free_software

Free software, libre software, or libreware is computer software distributed under terms that allow users to run the software for any purpose as well as to study, change, and distribute it and any adapted versions. Free software is a matter of liberty, not price; all users are legally free to do what they want with their copies of free software (including profiting from them) regardless of how much is paid to obtain the program. Computer programs are deemed "free" if they give end-users (not just the developer) ultimate control over the software and, subsequently, over their devices.

The right to study and modify a computer program entails that the source code—the preferred format for making changes—be made available to users of that program. While this is often called "access to source code" or "public availability", the Free Software Foundation (FSF) recommends against thinking in those terms, because it might give the impression that users have an obligation (as opposed to a right) to give non-users a copy of the program.

Although the term "free software" had already been used loosely in the past and other permissive software like the Berkeley Software Distribution released in 1978 existed, Richard Stallman is credited with tying it to the sense under discussion and starting the free software movement in 1983, when he launched the GNU Project: a collaborative effort to create a freedom-respecting operating system, and to revive the spirit of cooperation once prevalent among hackers during the early days of computing.

Context

Free software thus differs from:

- proprietary software, such as Microsoft Office, Windows, Adobe Photoshop, Facebook or iMessage from Apple. Users cannot study, change, and share their source code.

- freeware or gratis software, which is a category of proprietary software that does not require payment for basic use.

For software under the purview of copyright to be free, it must carry a software license whereby the author grants users the aforementioned rights. Software that is not covered by copyright law, such as software in the public domain, is free as long as the source code is also in the public domain, or otherwise available without restrictions.

Proprietary software uses restrictive software licenses or EULAs and usually does not provide users with the source code. Users are thus legally or technically prevented from changing the software, and this results in reliance on the publisher to provide updates, help, and support (see also vendor lock-in and abandonware). Users often may not reverse engineer, modify, or redistribute proprietary software. Beyond copyright law, contracts and a lack of source code, there can exist additional obstacles keeping users from exercising freedom over a piece of software, such as software patents and digital rights management (more specifically, tivoization).

Free software can be a for-profit, commercial activity or not. Some free software is developed by volunteer computer programmers, some by corporations, and some by both.

Naming and differences with open source

Although both definitions refer to almost equivalent corpora of programs, the Free Software Foundation recommends using the term "free software" rather than "open-source software" (an alternative, yet similar, concept coined in 1998), because the goals and messaging are quite dissimilar. According to the Free Software Foundation, "Open source" and its associated campaign mostly focus on the technicalities of the public development model and marketing free software to businesses, while taking the ethical issue of user rights very lightly or even antagonistically. Stallman has also stated that considering the practical advantages of free software is like considering the practical advantages of not being handcuffed, in that it is not necessary for an individual to consider practical reasons in order to realize that being handcuffed is undesirable in itself.

The FSF also notes that "Open Source" has exactly one specific meaning in common English, namely that "you can look at the source code." It states that while the term "Free Software" can lead to two different interpretations, at least one of them is consistent with the intended meaning, unlike the term "Open Source". The loan adjective "libre" is often used to avoid the ambiguity of the word "free" in the English language, and the ambiguity with the older usage of "free software" as public-domain software.

Definition and the Four Essential Freedoms of Free Software

The first formal definition of free software was published by the FSF in February 1986. That definition, written by Richard Stallman, is still maintained today and states that software is free software if people who receive a copy of the software have the following four freedoms. The numbering begins with zero, not only as a spoof on the common usage of zero-based numbering in programming languages, but also because "Freedom 0" was not initially included in the list; it was later added at the head of the list because it was considered very important.

- Freedom 0: The freedom to use the program for any purpose.

- Freedom 1: The freedom to study how the program works, and change it to make it do what you wish.

- Freedom 2: The freedom to redistribute and make copies so you can help your neighbor.

- Freedom 3: The freedom to improve the program, and release your improvements (and modified versions in general) to the public, so that the whole community benefits.

Freedoms 1 and 3 require source code to be available because studying and modifying software without its source code can range from highly impractical to nearly impossible.
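The definition above can be read as a simple checklist. The following Python sketch is illustrative only: the `Program` class and its field names are hypothetical and are not part of any FSF tooling. It encodes the two points made in the text, namely that a program is free only if all four freedoms are granted, and that Freedoms 1 and 3 cannot realistically be exercised without source code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Program:
    """Hypothetical record of what a program's terms allow (illustration only)."""
    name: str
    may_run_for_any_purpose: bool   # Freedom 0
    may_study_and_change: bool      # Freedom 1
    may_redistribute_copies: bool   # Freedom 2
    may_release_modified: bool      # Freedom 3
    source_available: bool


def granted_freedoms(p: Program) -> List[int]:
    """Return the freedom numbers (0 to 3) that users can actually exercise."""
    freedoms = []
    if p.may_run_for_any_purpose:
        freedoms.append(0)
    # Freedoms 1 and 3 are treated as unusable when source code is missing.
    if p.may_study_and_change and p.source_available:
        freedoms.append(1)
    if p.may_redistribute_copies:
        freedoms.append(2)
    if p.may_release_modified and p.source_available:
        freedoms.append(3)
    return freedoms


def is_free_software(p: Program) -> bool:
    """A program counts as free only if all four freedoms are present."""
    return granted_freedoms(p) == [0, 1, 2, 3]


# Made-up examples: a gratis but proprietary viewer, and a libre editor.
freeware = Program("gratis viewer", True, False, True, False, False)
libre = Program("libre editor", True, True, True, True, True)
print(freeware.name, is_free_software(freeware))
print(libre.name, is_free_software(libre))
```

Under these assumptions, a zero-price program that withholds source code fails the check even though it costs nothing, which mirrors the libre versus gratis distinction.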

Thus, free software means that computer users have the freedom to cooperate with whom they choose, and to control the software they use. To summarize this into a remark distinguishing libre (freedom) software from gratis (zero price) software, the Free Software Foundation says: "Free software is a matter of liberty, not price. To understand the concept, you should think of 'free' as in 'free speech', not as in 'free beer'".

In the late 1990s, other groups published their own definitions that describe an almost identical set of software. The most notable are Debian Free Software Guidelines published in 1997, and The Open Source Definition, published in 1998.

The BSD-based operating systems, such as FreeBSD, OpenBSD, and NetBSD, do not have their own formal definitions of free software. Users of these systems generally find the same set of software to be acceptable, but sometimes see copyleft as restrictive. They generally advocate permissive free software licenses, which allow others to use the software as they wish, without being legally forced to provide the source code. Their view is that this permissive approach is more free. The Kerberos, X11, and Apache software licenses are substantially similar in intent and implementation.

Examples

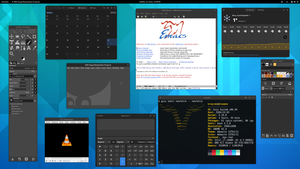

There are thousands of free applications and many operating systems available on the Internet. Users can easily download and install those applications via a package manager that comes included with most Linux distributions.

The Free Software Directory maintains a large database of free-software packages. Some of the best-known examples include Linux-libre; Linux-based operating systems; the GNU Compiler Collection and C library; the MySQL relational database; the Apache web server; and the Sendmail mail transport agent. Other influential examples include the Emacs text editor; the GIMP raster drawing and image editor; the X Window System graphical-display system; the LibreOffice office suite; and the TeX and LaTeX typesetting systems.

History

From the 1950s up until the early 1970s, it was normal for computer users to have the software freedoms associated with free software, which was typically public-domain software. Software was commonly shared by individuals who used computers and by hardware manufacturers who welcomed the fact that people were making software that made their hardware useful. Organizations of users and suppliers, such as SHARE, were formed to facilitate exchange of software. As software was often written in an interpreted language such as BASIC, programs were distributed as source code so that they could be run. Software was also shared and distributed as printed source code (type-in programs) in computer magazines (like Creative Computing, SoftSide, Compute!, Byte, etc.) and books, like the bestseller BASIC Computer Games.

By the early 1970s, the picture changed: software costs were dramatically increasing, a growing software industry was competing with the hardware manufacturers' bundled software products (free in that the cost was included in the hardware cost), leased machines required software support while providing no revenue for software, and some customers able to better meet their own needs did not want the costs of "free" software bundled with hardware product costs. In United States vs. IBM, filed January 17, 1969, the government charged that bundled software was anti-competitive. While some software might always be free, there would henceforth be a growing amount of software produced primarily for sale. In the 1970s and early 1980s, the software industry began using technical measures (such as only distributing binary copies of computer programs) to prevent computer users from being able to study or adapt the software applications as they saw fit. In 1980, copyright law was extended to computer programs.

In 1983, Richard Stallman, one of the original authors of the popular Emacs program and a longtime member of the hacker community at the MIT Artificial Intelligence Laboratory, announced the GNU Project, the purpose of which was to produce a completely non-proprietary Unix-compatible operating system, saying that he had become frustrated with the shift in climate surrounding the computer world and its users. In his initial declaration of the project and its purpose, he specifically cited as a motivation his opposition to being asked to agree to non-disclosure agreements and restrictive licenses which prohibited the free sharing of potentially profitable in-development software, a prohibition directly contrary to the traditional hacker ethic. Software development for the GNU operating system began in January 1984, and the Free Software Foundation (FSF) was founded in October 1985. Stallman developed a free software definition and the concept of "copyleft", designed to ensure software freedom for all.

Some non-software industries are beginning to use techniques similar to those used in free software development for their research and development process; scientists, for example, are looking towards more open development processes, and hardware such as microchips is beginning to be developed with specifications released under copyleft licenses (see the OpenCores project, for instance). Creative Commons and the free-culture movement have also been largely influenced by the free software movement.

1980s: Foundation of the GNU Project

In 1983, Richard Stallman, a longtime member of the hacker community at the MIT Artificial Intelligence Laboratory, announced the GNU Project, saying that he had become frustrated with the effects of the change in culture of the computer industry and its users. Software development for the GNU operating system began in January 1984, and the Free Software Foundation (FSF) was founded in October 1985. An article outlining the project and its goals, titled the GNU Manifesto, was published in March 1985. The manifesto included significant explanation of the GNU philosophy, Free Software Definition and "copyleft" ideas.

1990s: Release of the Linux kernel

The Linux kernel, started by Linus Torvalds, was released as freely modifiable source code in 1991. The first license was a proprietary software license. However, with version 0.12 in February 1992, he relicensed the project under the GNU General Public License. Much like Unix, Torvalds' kernel attracted the attention of volunteer programmers. FreeBSD and NetBSD (both derived from 386BSD) were released as free software when the USL v. BSDi lawsuit was settled out of court in 1993. OpenBSD forked from NetBSD in 1995. Also in 1995, the Apache HTTP Server, commonly referred to as Apache, was released under the Apache License 1.0.

Licensing

All free-software licenses must grant users all the freedoms discussed above. However, unless the applications' licenses are compatible, combining programs by mixing source code or directly linking binaries is problematic, because of license technicalities. Programs indirectly connected together may avoid this problem.

The majority of free software falls under a small set of licenses. The most popular of these licenses are:

- The MIT License

- The GNU General Public License v2 (GPLv2)

- The Apache License

- The GNU General Public License v3 (GPLv3)

- The BSD License

- The GNU Lesser General Public License (LGPL)

- The Mozilla Public License (MPL)

- The Eclipse Public License

The Free Software Foundation and the Open Source Initiative both publish lists of licenses that they find to comply with their own definitions of free software and open-source software respectively.

The FSF list is not prescriptive: free-software licenses can exist that the FSF has not heard about, or considered important enough to write about. So it is possible for a license to be free and not in the FSF list. The OSI list only lists licenses that have been submitted, considered and approved. All open-source licenses must meet the Open Source Definition in order to be officially recognized as open source software. Free software, on the other hand, is a more informal classification that does not rely on official recognition. Nevertheless, software licensed under licenses that do not meet the Free Software Definition cannot rightly be considered free software.

Apart from these two organizations, the Debian project is seen by some to provide useful advice on whether particular licenses comply with their Debian Free Software Guidelines. Debian does not publish a list of approved licenses, so its judgments have to be tracked by checking what software they have allowed into their software archives. That is summarized at the Debian web site.

It is rare that a license announced as being in compliance with the FSF guidelines does not also meet the Open Source Definition, although the reverse is not necessarily true (for example, the NASA Open Source Agreement is an OSI-approved license, but non-free according to the FSF).

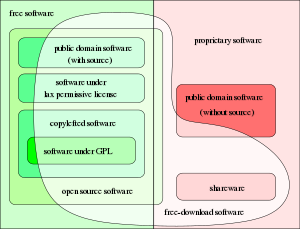

There are different categories of free software.

- Public-domain software: the copyright has expired, the work was not copyrighted (released without copyright notice before 1988), or the author has released the software into the public domain with a waiver statement (in countries where this is possible). Since public-domain software lacks copyright protection, it may be freely incorporated into any work, whether proprietary or free. The FSF recommends the CC0 public domain dedication for this purpose.

- Permissive licenses, also called BSD-style because they are applied to much of the software distributed with the BSD operating systems: many of these licenses are also known as copyfree as they have no restrictions on distribution. The author retains copyright solely to disclaim warranty and require proper attribution of modified works, and permits redistribution and any modification, even closed-source ones. In this sense, a permissive license provides an incentive to create non-free software, by reducing the cost of developing restricted software. Since this is incompatible with the spirit of software freedom, many people consider permissive licenses to be less free than copyleft licenses.

- Copyleft licenses, with the GNU General Public License being the most prominent: the author retains copyright and permits redistribution under the restriction that all such redistribution is licensed under the same license. Additions and modifications by others must also be licensed under the same "copyleft" license whenever they are distributed with part of the original licensed product. This is also known as a viral, protective, or reciprocal license. Due to the restriction on distribution not everyone considers this type of license to be free.
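The three categories above differ mainly in what they allow downstream distributors to do with modified versions. The following Python sketch makes that distinction explicit. It is a simplification under stated assumptions: the `Category` enum, the `LICENSE_CATEGORY` table, and the function name are hypothetical, the table covers only a few licenses named in this article, and real license terms carry conditions that a single boolean cannot capture.

```python
from enum import Enum


class Category(Enum):
    PUBLIC_DOMAIN = "public domain"
    PERMISSIVE = "permissive"
    COPYLEFT = "copyleft"


# Small illustrative table (an assumption of this sketch, not an official list).
LICENSE_CATEGORY = {
    "CC0": Category.PUBLIC_DOMAIN,
    "MIT": Category.PERMISSIVE,
    "BSD": Category.PERMISSIVE,
    "Apache": Category.PERMISSIVE,
    "GPLv2": Category.COPYLEFT,
    "GPLv3": Category.COPYLEFT,
}


def allows_proprietary_derivatives(license_name: str) -> bool:
    """Return True if modified versions may be distributed under non-free terms.

    Public-domain and permissive terms allow this; copyleft requires that
    distributed modifications stay under the same license.
    """
    return LICENSE_CATEGORY[license_name] is not Category.COPYLEFT


for name in LICENSE_CATEGORY:
    print(name, LICENSE_CATEGORY[name].value, allows_proprietary_derivatives(name))
```

The single boolean is the crux of the disagreement described in the list: advocates of permissive licensing see a `True` result as greater freedom for developers, while advocates of copyleft see a `False` result as protecting freedom for every later user.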

Security and reliability

There is debate over the security of free software in comparison to proprietary software, with a major issue being security through obscurity. A popular quantitative test in computer security is to use relative counting of known unpatched security flaws. Generally, users of this method advise avoiding products that lack fixes for known security flaws, at least until a fix is available.
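As a rough illustration of the relative-counting test just described, the sketch below ranks products by their number of known unpatched flaws. Everything in it is hypothetical: the `Flaw` record, the product names, and the flaw labels are made up for the example and do not correspond to real advisories.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Flaw:
    identifier: str  # hypothetical label, not a real advisory ID
    patched: bool


def unpatched_count(flaws: List[Flaw]) -> int:
    """Count the known flaws that still lack a fix."""
    return sum(1 for flaw in flaws if not flaw.patched)


def rank_by_unpatched(products: Dict[str, List[Flaw]]) -> List[str]:
    """Order product names from fewest to most known unpatched flaws."""
    return sorted(products, key=lambda name: unpatched_count(products[name]))


# Made-up data for two imaginary products.
known_flaws = {
    "product-a": [Flaw("A-1", patched=True), Flaw("A-2", patched=False)],
    "product-b": [Flaw("B-1", patched=True), Flaw("B-2", patched=True),
                  Flaw("B-3", patched=True)],
}
print(rank_by_unpatched(known_flaws))
```

Note that the metric only sees flaws that have been publicly reported, so the result depends as much on how openly problems are disclosed as on how many actually exist.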

Free software advocates strongly believe that this methodology is biased by counting more vulnerabilities for the free software systems, since their source code is accessible and their community is more forthcoming about what problems exist (this is called "security through disclosure"), and proprietary software systems can have undisclosed societal drawbacks, such as disenfranchising less fortunate would-be users of free programs. As users can analyse and trace the source code, many more people with no commercial constraints can inspect the code and find bugs and loopholes than a corporation would find practicable. According to Richard Stallman, user access to the source code makes deploying free software with undesirable hidden spyware functionality far more difficult than for proprietary software.

Some quantitative studies have been done on the subject.

Binary blobs and other proprietary software

In 2006, OpenBSD started the first campaign against the use of binary blobs in kernels. Blobs are usually freely distributable device drivers for hardware from vendors that do not reveal driver source code to users or developers. This effectively restricts users' freedom to modify the software and distribute modified versions. Also, since the blobs are undocumented and may have bugs, they pose a security risk to any operating system whose kernel includes them. The proclaimed aim of the campaign against blobs is to collect hardware documentation that allows developers to write free software drivers for that hardware, ultimately enabling all free operating systems to become or remain blob-free.

The issue of binary blobs in the Linux kernel and other device drivers motivated some developers in Ireland to launch gNewSense, a Linux-based distribution with all the binary blobs removed. The project received support from the Free Software Foundation and stimulated the creation, headed by the Free Software Foundation Latin America, of the Linux-libre kernel. As of October 2012, Trisquel was the most popular FSF-endorsed Linux distribution ranked by Distrowatch (over 12 months). While Debian is not endorsed by the FSF and does not use Linux-libre, it is also a popular distribution available without kernel blobs by default since 2011.

The Linux community uses the term "blob" to refer to all nonfree firmware in a kernel whereas OpenBSD uses the term to refer to device drivers. The FSF does not consider OpenBSD to be blob free under the Linux community's definition of blob.

Business model

Selling software under any free-software licence is permissible, as is commercial use. This is true for licenses with or without copyleft.

Since free software may be freely redistributed, it is generally available at little or no fee. Free software business models are usually based on adding value such as customization, accompanying hardware, support, training, integration, or certification. Exceptions exist, however, where the user is charged to obtain a copy of the free application itself.

Fees are usually charged for distribution on compact discs and bootable USB drives, or for services of installing or maintaining the operation of free software. Development of large, commercially used free software is often funded by a combination of user donations, crowdfunding, corporate contributions, and tax money. The SELinux project at the United States National Security Agency is an example of a federally funded free-software project.

Proprietary software, on the other hand, tends to use a different business model, where a customer of the proprietary application pays a fee for a license to legally access and use it. This license may grant the customer the ability to configure some or no parts of the software themselves. Often some level of support is included in the purchase of proprietary software, but additional support services (especially for enterprise applications) are usually available for an additional fee. Some proprietary software vendors will also customize software for a fee.

The Free Software Foundation encourages selling free software. As the Foundation has written, "distributing free software is an opportunity to raise funds for development. Don't waste it!". For example, the FSF's own recommended license (the GNU GPL) states that "[you] may charge any price or no price for each copy that you convey, and you may offer support or warranty protection for a fee."

Microsoft CEO Steve Ballmer stated in 2001 that "open source is not available to commercial companies. The way the license is written, if you use any open-source software, you have to make the rest of your software open source." This misunderstanding is based on a requirement of copyleft licenses (like the GPL) that if one distributes modified versions of software, they must release the source and use the same license. This requirement does not extend to other software from the same developer. The claim of incompatibility between commercial companies and free software is also a misunderstanding. There are several large companies, e.g. Red Hat and IBM (IBM acquired Red Hat in 2019), which do substantial commercial business in the development of free software.

Economic aspects and adoption

Free software played a significant part in the development of the Internet, the World Wide Web and the infrastructure of dot-com companies. Free software allows users to cooperate in enhancing and refining the programs they use; free software is a pure public good rather than a private good. Companies that contribute to free software increase commercial innovation.

"We migrated key functions from Windows to Linux because we needed an operating system that was stable and reliable – one that would give us in-house control. So if we needed to patch, adjust, or adapt, we could."

Official statement of the United Space Alliance, which manages the computer systems for the International Space Station (ISS), regarding their May 2013 decision to migrate ISS computer systems from Windows to Linux

The economic viability of free software has been recognized by large corporations such as IBM, Red Hat, and Sun Microsystems. Many companies whose core business is not in the IT sector choose free software for their Internet information and sales sites, due to the lower initial capital investment and ability to freely customize the application packages. Most companies in the software business include free software in their commercial products if the licenses allow that.

Free software is generally available at no cost and can result in permanently lower TCO (total cost of ownership) compared to proprietary software. With free software, businesses can fit software to their specific needs by changing the software themselves or by hiring programmers to modify it for them. Free software often has no warranty, and more importantly, generally does not assign legal liability to anyone. However, any two parties may agree on a warranty covering the condition of the software and its usage. Such an agreement is made separately from the free software license.

A report by Standish Group estimates that adoption of free software has caused a drop in revenue to the proprietary software industry by about $60 billion per year. Eric S. Raymond argued that the term free software is too ambiguous and intimidating for the business community. Raymond promoted the term open-source software as a friendlier alternative for the business and corporate world.