A cognitive distortion is an exaggerated or irrational thought pattern involved in the onset or perpetuation of psychopathological states, such as depression and anxiety.

Cognitive distortions are thoughts that cause individuals to perceive reality inaccurately. According to Aaron Beck's cognitive model, a negative outlook on reality, sometimes called negative schemas (or schemata), is a factor in symptoms of emotional dysfunction and poorer subjective well-being. Specifically, negative thinking patterns reinforce negative emotions and thoughts. During difficult circumstances, these distorted thoughts can contribute to an overall negative outlook on the world and a depressive or anxious mental state. According to hopelessness theory and Beck's theory, the meaning or interpretation that people give to their experience strongly influences whether they will become depressed and whether they will experience severe, repeated, or long-duration episodes of depression.

Challenging and changing cognitive distortions is a key element of cognitive behavioral therapy (CBT).

Definition

Cognitive comes from the Medieval Latin cognitīvus, equivalent to Latin cognit(us), 'known'. Distortion means the act of twisting or altering something out of its true, natural, or original state.

History

In 1957, American psychologist Albert Ellis, though he did not know it yet, began work that would aid cognitive therapy in correcting cognitive distortions and would indirectly help David D. Burns write The Feeling Good Handbook. Ellis created what he called the ABC Technique of rational beliefs. ABC stands for the activating event, the beliefs that are irrational, and the consequences that come from those beliefs. Ellis wanted to show that the activating event is not what causes the emotional behavior or the consequences; rather, the beliefs, and how the person irrationally perceives the event, bring about the consequences. With this model, Ellis attempted to use rational emotive behavior therapy (REBT) with his patients to help them "reframe" or reinterpret the experience in a more rational manner. In this model Ellis explains the process to his clients, whereas Beck helps his clients work it out on their own. Beck first noticed these automatic distorted thought processes while practicing psychoanalysis, when his patients followed the rule of saying anything that came to mind. He realized that his patients had irrational fears, thoughts, and perceptions that were automatic, and he began noticing automatic thought processes that his patients had but did not report. Most of the time these thoughts were biased against the patients themselves and highly erroneous.

Beck believed that negative schemas develop and manifest themselves in a person's perspective and behavior. The distorted thought processes lead to degrading the self, amplifying minor external setbacks, and experiencing others' harmless comments as ill-intended, while simultaneously seeing oneself as inferior. Inevitably, these cognitions are reflected in behavior: a reduced desire to care for oneself or to seek pleasure, and a tendency to give up. These exaggerated perceptions, which stem from cognition, feel real and accurate because the schemas, after being reinforced through behavior, tend to become automatic and do not allow time for reflection. This cycle is also known as Beck's cognitive triad, which holds that a person's negative schemas apply to the self, the future, and the environment.

In 1972, psychiatrist, psychoanalyst, and cognitive therapy scholar Aaron T. Beck published Depression: Causes and Treatment. He was dissatisfied with the conventional Freudian treatment of depression because there was no empirical evidence for the success of Freudian psychoanalysis. Beck's book provided a comprehensive and empirically supported theoretical model for depression: its potential causes, symptoms, and treatments. In Chapter 2, titled "Symptomatology of Depression", he described "cognitive manifestations" of depression, including low self-evaluation, negative expectations, self-blame and self-criticism, indecisiveness, and distortion of the body image.

Beck's student David D. Burns continued research on the topic. In his book Feeling Good: The New Mood Therapy, Burns described personal and professional anecdotes related to cognitive distortions and their elimination. The book made Beck's approach to distorted thinking widely known, and it sold over four million copies in the United States alone. It was commonly "prescribed" for patients whose cognitive distortions had led to depression. Beck approved of the book, saying that it would help others alter their depressed moods by simplifying the extensive study and research that had taken place since shortly after Beck had started as a student and practitioner of psychoanalytic psychiatry. Nine years later, The Feeling Good Handbook was published; it also built on Beck's work and includes a list of ten specific cognitive distortions that are discussed throughout this article.

Main types

John C. Gibbs and Granville Bud Potter propose four categories for cognitive distortions: self-centered, blaming others, minimizing-mislabeling, and assuming the worst. The cognitive distortions listed below are categories of automatic thinking, and are to be distinguished from logical fallacies.

All-or-nothing thinking

The "all-or-nothing thinking distortion" is also referred to as "splitting", "black-and-white thinking", and "polarized thinking." Someone with the all-or-nothing thinking distortion looks at life in black-and-white categories. Either they are a success or a failure; either they are good or bad; there is no in-between. According to one article, "Because there is always someone who is willing to criticize, this tends to collapse into a tendency for polarized people to view themselves as a total failure. Polarized thinkers have difficulty with the notion of being 'good enough' or a partial success."

- Example (from The Feeling Good Handbook): A woman eats a spoonful of ice cream. She thinks she is a complete failure for breaking her diet. She becomes so depressed that she ends up eating the whole quart of ice cream.

This example captures the polarized nature of this distortion—the person believes they are totally inadequate if they fall short of perfection. In order to combat this distortion, Burns suggests thinking of the world in terms of shades of gray. Rather than viewing herself as a complete failure for eating a spoonful of ice cream, the woman in the example could still recognize her overall effort to diet as at least a partial success.

This distortion is commonly found in perfectionists.

Jumping to conclusions

Reaching preliminary conclusions (usually negative) with little (if any) evidence. Three specific subtypes are identified:

Mind reading

Inferring a person's possible or probable (usually negative) thoughts from their behavior and nonverbal communication; taking precautions against the worst suspected case without asking the person.

- Example 1: A student assumes that the readers of their paper have already made up their minds concerning its topic, and, therefore, writing the paper is a pointless exercise.

- Example 2: Kevin assumes that because he sits alone at lunch, everyone else must think he is a loser. (This can encourage a self-fulfilling prophecy; Kevin may not initiate social contact because of his fear that those around him already perceive him negatively.)

Fortune-telling

Predicting outcomes (usually negative) of events.

- Example: A depressed person tells themselves they will never improve; they will continue to be depressed for their whole life.

One way to combat this distortion is to ask, "If this is true, does it say more about me or them?"

Labeling

Labeling occurs when someone overgeneralizes characteristics of other people. For example, someone might use an unfavorable term to describe a complex person or event.

Emotional reasoning

In the emotional reasoning distortion, a person assumes that feelings expose the true nature of things and experiences reality as a reflection of emotionally linked thoughts; something is believed to be true solely on the basis of a feeling.

- Examples: "I feel stupid, therefore I must be stupid"; feeling afraid of flying and then concluding that planes must be a dangerous way to travel; feeling overwhelmed by the prospect of cleaning one's house and therefore concluding that it is hopeless to even start cleaning.

Should/shouldn't and must/mustn't statements

Making "must" or "should" statements was included by Albert Ellis in his rational emotive behavior therapy (REBT), an early form of CBT; he termed it "musturbation". Michael C. Graham called it "expecting the world to be different than it is". It can be seen as demanding particular achievements or behaviors regardless of the realistic circumstances of the situation.

- Example: After a performance, a concert pianist believes he or she should not have made so many mistakes.

- In Feeling Good: The New Mood Therapy, David Burns clearly distinguished between pathological "should statements", moral imperatives, and social norms.

A related cognitive distortion, also present in Ellis's REBT, is a tendency to "awfulize": to say that a future scenario will be awful, rather than realistically appraising the various negative and positive characteristics of that scenario. According to Burns, "must" and "should" statements are negative because they cause the person to feel guilty and upset with themselves. Some people also direct this distortion at others, which can cause anger and frustration when the other person does not do what they "should" have done. Burns also mentions that this type of thinking can lead to rebellious thoughts: trying to whip oneself into doing something with "shoulds" may cause one to desire just the opposite.

Gratitude traps

A gratitude trap is a type of cognitive distortion that typically arises from misunderstandings regarding the nature or practice of gratitude. The term can refer to one of two related but distinct thought patterns:

- A self-oriented thought process involving feelings of guilt, shame, or frustration related to one's expectations of how things "should" be

- An "elusive ugliness in many relationships, a deceptive 'kindness,' the main purpose of which is to make others feel indebted", as defined by psychologist Ellen Kenner

Blaming others

Personalization and blaming

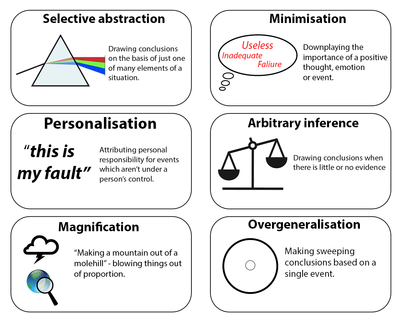

Personalization is assigning personal blame disproportionate to the level of control a person realistically has in a given situation.

- Example 1: A foster child assumes that they have not been adopted because they are not "loveable enough".

- Example 2: A child has bad grades. The child's mother believes it is because she is not a good enough parent.

Blaming is the opposite of personalization. In the blaming distortion, the disproportionate level of blame is placed upon other people, rather than oneself. In this way, the person avoids taking personal responsibility, making way for a "victim mentality".

- Example: Placing blame for marital problems entirely on one's spouse.

Always being right

In this cognitive distortion, being wrong is unthinkable. It is characterized by actively trying to prove one's actions or thoughts to be correct, sometimes prioritizing self-interest over the feelings of another person. The person treats their own understanding of their surroundings as always right, while other people's opinions and perspectives are seen as wrong.

Fallacy of change

Relying on social control to obtain cooperative actions from another person. The underlying assumption of this thinking style is that one's happiness depends on the actions of others. The fallacy of change also assumes that other people should change to suit one's own interests automatically and/or that it is fair to pressure them to change. It may be present in most abusive relationships in which partners' "visions" of each other are tied into the belief that happiness, love, trust, and perfection would just occur once they or the other person change aspects of their beings.

Minimizing-mislabeling

Magnification and minimization

Giving proportionally greater weight to a perceived failure, weakness or threat, or lesser weight to a perceived success, strength or opportunity, so that the weight differs from that assigned by others, such as "making a mountain out of a molehill". In depressed clients, often the positive characteristics of other people are exaggerated and their negative characteristics are understated.

- Catastrophizing – Giving greater weight to the worst possible outcome, however unlikely, or experiencing a situation as unbearable or impossible when it is just uncomfortable.

Labeling and mislabeling

A form of overgeneralization; attributing a person's actions to their character instead of to an attribute. Rather than assuming the behavior to be accidental or otherwise extrinsic, one assigns a label to someone or something that is based on the inferred character of that person or thing.

Assuming the worst

Overgeneralizing

Someone who overgeneralizes makes faulty generalizations from insufficient evidence, such as seeing a "single negative event" as a "never-ending pattern of defeat", and thus draws a very broad conclusion from a single incident or a single piece of evidence. Even if something bad happens only once, it is expected to happen over and over again.

- Example 1: A young woman is asked out on a first date, but not a second one. She is distraught as she tells her friend, "This always happens to me! I'll never find love!"

- Example 2: A woman is lonely and spends most of her time at home. Her friends sometimes ask her to dinner and to meet new people. She feels it is useless to even try: no one could really like her, and anyway, all people are the same, petty and selfish.

One suggestion to combat this distortion is to "examine the evidence" by performing an accurate analysis of one's situation. This helps one avoid exaggerating one's circumstances.

Disqualifying the positive

Disqualifying the positive refers to rejecting positive experiences by insisting they "don't count" for some reason or other. A negative belief is maintained despite being contradicted by everyday experience. Disqualifying the positive may be the most common fallacy in the cognitive distortion range; it is often analyzed together with "always being right", a type of distortion in which a person makes all-or-nothing self-judgments. People in this situation show signs of depression. Examples include:

- "I will never be as good as Jane"

- "Anyone could have done as well"

- "They are just congratulating me to be nice"

Mental filtering

Filtering distortions occur when an individual dwells only on the negative details of a situation and filters out the positive aspects.

- Example: Andy gets mostly compliments and positive feedback about a presentation he has given at work, but he has also received a small piece of criticism. For several days following his presentation, Andy dwells on this one negative reaction, forgetting all of the positive reactions that he also received.

The Feeling Good Handbook notes that filtering is like a "drop of ink that discolors a beaker of water". One suggestion to combat filtering is a cost–benefit analysis. A person with this distortion may find it helpful to sit down and assess whether filtering out the positive and focusing on the negative is helping or hurting them in the long run.

Conceptualization

In a series of publications, philosopher Paul Franceschi has proposed a unified conceptual framework for cognitive distortions designed to clarify their relationships and define new ones. This conceptual framework is based on three notions: (i) the reference class (a set of phenomena or objects, e.g. events in the patient's life); (ii) dualities (positive/negative, qualitative/quantitative, ...); (iii) the taxon system (degrees that allow properties to be attributed, according to a given duality, to the elements of a reference class). In this model, "dichotomous reasoning", "minimization", "maximization" and "arbitrary focus" constitute general cognitive distortions (applying to any duality), whereas "disqualification of the positive" and "catastrophism" are specific cognitive distortions, applying to the positive/negative duality. This conceptual framework posits two additional cognitive distortion classifications: the "omission of the neutral" and the "requalification in the other pole".

Cognitive restructuring

Cognitive restructuring (CR) is a popular form of therapy used to identify and reject maladaptive cognitive distortions, and is typically used with individuals diagnosed with depression. In CR, the therapist and client first examine a stressful event or situation reported by the client. For example, a depressed male college student who experiences difficulty in dating might believe that his "worthlessness" causes women to reject him. Together, therapist and client might then create a more realistic cognition, e.g., "It is within my control to ask girls on dates. However, even though there are some things I can do to influence their decisions, whether or not they say yes is largely out of my control. Thus, I am not responsible if they decline my invitation." CR therapies are designed to eliminate "automatic thoughts" that include clients' dysfunctional or negative views. According to Beck, doing so reduces feelings of worthlessness, anxiety, and anhedonia that are symptomatic of several forms of mental illness. CR is the main component of Beck's and Burns's CBT.

Narcissistic defense

Those diagnosed with narcissistic personality disorder tend, unrealistically, to view themselves as superior, overemphasizing their strengths and understating their weaknesses. Narcissists use exaggeration and minimization this way to shield themselves against psychological pain.

Decatastrophizing

In cognitive therapy, decatastrophizing or decatastrophization is a cognitive restructuring technique that may be used to treat cognitive distortions, such as magnification and catastrophizing, commonly seen in psychological disorders like anxiety and psychosis. Major features of these disorders are the subjective report of being overwhelmed by life circumstances and the inability to affect them.

The goal of CR is to help the client change their perceptions so that the felt experience seems less significant.

Criticism

Common criticisms of the diagnosis of cognitive distortion relate to epistemology and the theoretical basis. If the perceptions of the patient differ from those of the therapist, it may not be because of intellectual malfunctions but because the patient has different experiences. In some cases, depressed subjects appear to be "sadder but wiser".