In statistics, many tests calculate correlations between variables, and when two variables are found to be correlated, it is tempting to assume that one variable causes the other.[1][2] The claim that "correlation proves causation" is a questionable-cause logical fallacy, in which two events occurring together are taken to have established a cause-and-effect relationship. This fallacy is also known as cum hoc ergo propter hoc (Latin for "with this, therefore because of this") and as "false cause". A similar fallacy, that an event that followed another was necessarily a consequence of the first event, is the post hoc ergo propter hoc (Latin for "after this, therefore because of this") fallacy.

For example, in a widely studied case, numerous epidemiological studies showed that women taking combined hormone replacement therapy (HRT) also had a lower-than-average incidence of coronary heart disease (CHD), leading doctors to propose that HRT was protective against CHD. But randomized controlled trials showed that HRT caused a small but statistically significant increase in risk of CHD. Re-analysis of the data from the epidemiological studies showed that women undertaking HRT were more likely to be from higher socio-economic groups (ABC1), with better-than-average diet and exercise regimens. The use of HRT and decreased incidence of coronary heart disease were coincident effects of a common cause (i.e. the benefits associated with a higher socioeconomic status), rather than a direct cause and effect, as had been supposed.[3]

As with any logical fallacy, identifying that the reasoning behind an argument is flawed does not imply that the resulting conclusion is false. In the instance above, if the trials had found that hormone replacement therapy does in fact reduce the likelihood of coronary heart disease, the assumption of causality would have been correct, although the logic behind the assumption would still have been flawed. Some methods go further, using correlation as the basis for a hypothesis test that tries to establish a true causal relationship; examples are the Granger causality test and convergent cross mapping.[clarification needed]

Usage

for".[citation needed] This is the meaning intended by statisticians when they say causation is not certain. Indeed, "p implies q" has the technical meaning of the material conditional: "if p then q", symbolized as p → q. That is, "if circumstance p is true, then q follows." In this sense, it is always correct to say "Correlation does not imply causation."

However, in casual use, the word "implies" loosely means suggests rather than requires. The idea that correlation and causation are connected is certainly true; where there is causation, there is likely to be correlation. Indeed, correlation is used when inferring causation; the important point is that such inferences are made after correlations are confirmed as real and all causal relationships are systematically explored using large enough data sets.

General pattern

For any two correlated events, A and B, the different possible relationships include:[citation needed]

- A causes B (direct causation);

- B causes A (reverse causation);

- A and B are consequences of a common cause, but do not cause each other;

- A and B both cause C, which is (explicitly or implicitly) conditioned on; selecting or stratifying on the common effect C can induce a correlation between A and B even when they are otherwise independent;

- A causes B and B causes A (bidirectional or cyclic causation);

- A causes C which causes B (indirect causation);

- There is no connection between A and B; the correlation is a coincidence.
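The least intuitive item in the list above is the one where A and B both cause C and C is conditioned on. A minimal simulation sketch, assuming independent standard-normal A and B and a common effect C = A + B (the function names and the selection threshold are illustrative, not from the source), suggests how restricting attention to cases with large C can produce a correlation between A and B that is absent in the full population:

```python
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate_collider(n=20000, threshold=1.0, seed=0):
    """Return (correlation in everyone, correlation among cases with C > threshold)."""
    rng = random.Random(seed)
    a = [rng.gauss(0, 1) for _ in range(n)]  # A: independent cause
    b = [rng.gauss(0, 1) for _ in range(n)]  # B: independent cause
    c = [x + y for x, y in zip(a, b)]        # C: common effect of A and B (assumed form)
    # Conditioning on C: keep only the cases where the common effect is large.
    kept = [(x, y) for x, y, z in zip(a, b, c) if z > threshold]
    r_all = pearson(a, b)
    r_selected = pearson([x for x, _ in kept], [y for _, y in kept])
    return r_all, r_selected


if __name__ == "__main__":
    r_all, r_selected = simulate_collider()
    print(f"all cases: r = {r_all:+.3f}; selected on C: r = {r_selected:+.3f}")
```

In the full sample the correlation should be close to zero, while in the selected subsample it should be clearly negative: among cases with a large C, a small A has to be compensated by a large B.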

Examples of illogically inferring causation from correlation

B causes A (reverse causation or reverse causality)

Reverse causation (also called reverse causality or wrong direction) is an informal fallacy of questionable cause where cause and effect are reversed. The cause is said to be the effect and vice versa.

- Example 1

- The faster windmills are observed to rotate, the more wind is observed.

- Therefore wind is caused by the rotation of windmills. (Or, simply put: windmills, as their name indicates, are machines used to produce wind.)

- Example 2

- When a country's debt rises above 90% of GDP, growth slows.

- Therefore, high debt causes slow growth.

- Example 3

- Driving a wheelchair is dangerous, because most people who drive one have had an accident.

- Example 4

- Children who watch a lot of TV are the most violent. Clearly, TV makes children more violent.

- Example 5

- Example 6

In other cases, two phenomena can each be a partial cause of the other; consider poverty and lack of education, or procrastination and poor self-esteem. One making an argument based on these two phenomena must however be careful to avoid the fallacy of circular cause and consequence. Poverty is a cause of lack of education, but it is not the sole cause, and vice versa.

Third factor C (the common-causal variable) causes both A and B

The third-cause fallacy (also known as ignoring a common cause[5] or questionable cause[5]) is a logical fallacy where a spurious relationship is confused for causation. It asserts that X causes Y when, in reality, X and Y are both caused by Z. It is a variation on the post hoc ergo propter hoc fallacy and a member of the questionable cause group of fallacies.

All of these examples deal with a lurking variable, which is simply a hidden third variable that affects both of the correlated variables. A difficulty often also arises where the third factor, though fundamentally different from A and B, is so closely related to A and/or B as to be confused with them or very difficult to scientifically disentangle from them (see Example 4).

- Example 1

- Sleeping with one's shoes on is strongly correlated with waking up with a headache.

- Therefore, sleeping with one's shoes on causes headache.

- Example 2

- Young children who sleep with the light on are much more likely to develop myopia in later life.

- Therefore, sleeping with the light on causes myopia.

- Example 3

- As ice cream sales increase, the rate of drowning deaths increases sharply.

- Therefore, ice cream consumption causes drowning.

- Example 4

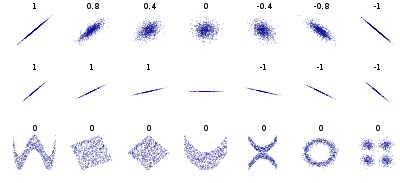

- A hypothetical study shows a relationship between test anxiety scores and shyness scores, with a statistical r value (strength of correlation) of +.59.[12]

- Therefore, it may be simply concluded that shyness, in some part, causally influences test anxiety.

- Example 5

- Since the 1950s, both the atmospheric CO2 level and obesity levels have increased sharply.

- Hence, atmospheric CO2 causes obesity.

- Example 6

- HDL ("good") cholesterol is negatively correlated with incidence of heart attack.

- Therefore, taking medication to raise HDL decreases the chance of having a heart attack.
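The common-cause pattern in these examples can be sketched with a small simulation. The sketch below is loosely modelled on Example 3 and assumes, purely for illustration, that a hidden variable (called `temperature` here) drives both ice cream sales and drownings; the coefficients, names, and noise model are all hypothetical. It compares the raw correlation with the correlation that remains after the linear influence of the hidden variable is removed from both series:

```python
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def residuals(ys, zs):
    """Residuals of a simple least-squares regression of ys on zs."""
    n = len(ys)
    my = sum(ys) / n
    mz = sum(zs) / n
    slope = (sum((z - mz) * (y - my) for z, y in zip(zs, ys))
             / sum((z - mz) ** 2 for z in zs))
    return [(y - my) - slope * (z - mz) for y, z in zip(ys, zs)]


def simulate_common_cause(n=5000, seed=1):
    """Return (raw correlation, correlation after adjusting for the hidden cause)."""
    rng = random.Random(seed)
    temperature = [rng.gauss(0, 1) for _ in range(n)]            # hidden cause Z
    ice_cream = [2.0 * t + rng.gauss(0, 1) for t in temperature]  # A depends only on Z
    drownings = [1.5 * t + rng.gauss(0, 1) for t in temperature]  # B depends only on Z
    raw = pearson(ice_cream, drownings)
    adjusted = pearson(residuals(ice_cream, temperature),
                       residuals(drownings, temperature))
    return raw, adjusted


if __name__ == "__main__":
    raw, adjusted = simulate_common_cause()
    print(f"raw r = {raw:+.3f}; after adjusting for Z, r = {adjusted:+.3f}")
```

Neither simulated series influences the other, yet the raw correlation is strong; once the hidden variable is accounted for, the remaining correlation should be near zero. In real data the lurking variable is often unmeasured, so this adjustment is not always available.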

Bidirectional causation: A causes B, and B causes A

Causality is not necessarily one-way; in a predator-prey relationship, predator numbers affect prey numbers, but prey numbers, i.e. food supply, also affect predator numbers.

The relationship between A and B is coincidental

The two variables aren't related at all, but correlate by chance. The more things are examined, the more likely it is that two unrelated variables will appear to be related. For example:

- The result of the last home game by the Washington Redskins prior to the presidential election predicted the outcome of every presidential election from 1936 to 2000 inclusive, despite the fact that the outcomes of football games had nothing to do with the outcome of the popular election. This streak was finally broken in 2004 (or 2012 using an alternative formulation of the original rule).

- A collection of such coincidences[14] finds, for example, a 99.79% correlation for the period 1999-2009 between U.S. spending on science, space, and technology and the number of suicides by suffocation, strangulation, and hanging.

- The Mierscheid law, which correlates the Social Democratic Party of Germany's share of the popular vote with the size of crude steel production in Western Germany.

- Alternating bald–hairy Russian leaders: A bald (or obviously balding) state leader of Russia has succeeded a non-bald ("hairy") one, and vice versa, for nearly 200 years.
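The claim that examining more things makes chance correlations more likely can be illustrated with a short simulation. This is a sketch under assumed settings (100 mutually independent random series of 10 points each; the function names are illustrative): it searches every pair of series and reports the strongest correlation found.

```python
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def max_chance_correlation(n_series=100, length=10, seed=4):
    """Largest |r| found among all pairs of independent random series."""
    rng = random.Random(seed)
    series = [[rng.gauss(0, 1) for _ in range(length)] for _ in range(n_series)]
    best = 0.0
    for i in range(n_series):
        for j in range(i + 1, n_series):  # each unordered pair once
            best = max(best, abs(pearson(series[i], series[j])))
    return best


if __name__ == "__main__":
    print(f"strongest chance correlation: |r| = {max_chance_correlation():.3f}")
```

With 100 series there are 4,950 pairs to compare, so some pair is very likely to show a strong correlation even though every series was generated independently of the others. Short series, as in the ten or so yearly data points of the spending example, make such coincidences easier to find.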

Determining causation

In academia

The nature of causality is systematically investigated in several academic disciplines, including philosophy and physics.

In academia, there are a significant number of theories on causality; The Oxford Handbook of Causation (Beebee, Hitchcock & Menzies 2009) encompasses 770 pages. Among the more influential theories within philosophy are Aristotle's Four causes and Al-Ghazali's occasionalism.[15] David Hume argued that beliefs about causality are based on experience, and experience similarly based on the assumption that the future models the past, which in turn can only be based on experience – leading to circular logic. In conclusion, he asserted that causality is not based on actual reasoning: only correlation can actually be perceived.[16] Immanuel Kant, according to Beebee, Hitchcock & Menzies (2009), held that "a causal principle according to which every event has a cause, or follows according to a causal law, cannot be established through induction as a purely empirical claim, since it would then lack strict universality, or necessity".

Outside the field of philosophy, theories of causation can be identified in classical mechanics, statistical mechanics, quantum mechanics, spacetime theories, biology, social sciences, and law.[15] To establish a correlation as causal within physics, it is normally understood that the cause and the effect must connect through a local mechanism (cf. for instance the concept of impact) or a nonlocal mechanism (cf. the concept of field), in accordance with known laws of nature.

From the point of view of thermodynamics, universal properties of causes as compared to effects have been identified through the Second law of thermodynamics, confirming the ancient, medieval and Cartesian[17] view that "the cause is greater than the effect" for the particular case of thermodynamic free energy. This, in turn, is challenged[dubious ] by popular interpretations of the concepts of nonlinear systems and the butterfly effect, in which small events cause large effects due to, respectively, unpredictability and an unlikely triggering of large amounts of potential energy.

Causality construed from counterfactual states

Intuitively, causation seems to require not just a correlation, but a counterfactual dependence. Suppose that a student performed poorly on a test and guesses that the cause was his not studying. To prove this, one thinks of the counterfactual – the same student writing the same test under the same circumstances but having studied the night before. If one could rewind history, and change only one small thing (making the student study for the exam), then causation could be observed (by comparing version 1 to version 2). Because one cannot rewind history and replay events after making small controlled changes, causation can only be inferred, never exactly known. This is referred to as the Fundamental Problem of Causal Inference – it is impossible to directly observe causal effects.[18]

A major goal of scientific experiments and statistical methods is to approximate the counterfactual state of the world as closely as possible.[19] For example, one could run an experiment on identical twins who were known to consistently get the same grades on their tests. One twin is sent to study for six hours while the other is sent to the amusement park. If their test scores suddenly diverged by a large degree, this would be strong evidence that studying (or going to the amusement park) had a causal effect on test scores. In this case, correlation between studying and test scores would almost certainly imply causation.

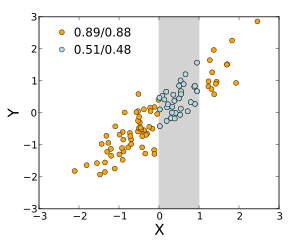

Well-designed experimental studies replace equality of individuals as in the previous example by equality of groups. The objective is to construct two groups that are similar except for the treatment that the groups receive. This is achieved by selecting subjects from a single population and randomly assigning them to two or more groups. The likelihood of the groups behaving similarly to one another (on average) rises with the number of subjects in each group. If the groups are essentially equivalent except for the treatment they receive, and a difference in the outcome for the groups is observed, then this constitutes evidence that the treatment is responsible for the outcome, or in other words the treatment causes the observed effect. However, an observed effect could also be caused "by chance", for example as a result of random perturbations in the population. Statistical tests exist to quantify the likelihood of erroneously concluding that an observed difference exists when in fact it does not (for example see P-value).
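The logic of random assignment described above can be sketched as a simulation. The setup is assumed for illustration: each subject has a hidden trait that affects the outcome, treatment is assigned by a coin flip, and the true treatment effect is fixed at 0.5. The permutation test used for the "by chance" question is one common choice, substituted here as an example rather than taken from the source, and all names are hypothetical.

```python
import random


def simulate_trial(n=2000, effect=0.5, seed=2):
    """Randomly assign treatment; the outcome depends on a hidden trait plus the effect."""
    rng = random.Random(seed)
    trait = [rng.gauss(0, 1) for _ in range(n)]       # unobserved subject differences
    treated = [rng.random() < 0.5 for _ in range(n)]  # coin-flip assignment
    outcome = [t + (effect if w else 0.0) + rng.gauss(0, 1)
               for t, w in zip(trait, treated)]
    return treated, outcome


def mean_difference(treated, outcome):
    """Mean outcome of the treated group minus mean outcome of the control group."""
    t = [y for w, y in zip(treated, outcome) if w]
    c = [y for w, y in zip(treated, outcome) if not w]
    return sum(t) / len(t) - sum(c) / len(c)


def permutation_p_value(treated, outcome, n_perm=500, seed=3):
    """Share of random relabelings with a difference at least as large as observed."""
    rng = random.Random(seed)
    observed = abs(mean_difference(treated, outcome))
    labels = list(treated)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(labels)  # break any link between label and outcome
        if abs(mean_difference(labels, outcome)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


if __name__ == "__main__":
    treated, outcome = simulate_trial()
    print(f"estimated effect: {mean_difference(treated, outcome):.3f}")
    print(f"permutation p-value: {permutation_p_value(treated, outcome):.4f}")
```

Because assignment is random, the hidden trait is balanced across the two groups on average, so the difference in group means should land near the assumed effect of 0.5 even though the trait is never measured. The p-value estimates how often a difference that large would arise from random labeling alone.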

Causality predicted by an extrapolation of trends

When experimental studies are impossible and only pre-existing data are available, as is usually the case for example in economics, regression analysis can be used. Factors other than the potential causative variable of interest are controlled for by including them as regressors in addition to the regressor representing the variable of interest. False inferences of causation due to reverse causation (or wrong estimates of the magnitude of causation due to the presence of bidirectional causation) can be avoided by using explanators (regressors) that are necessarily exogenous, such as physical explanators like rainfall amount (as a determinant of, say, futures prices), lagged variables whose values were determined before the dependent variable's value was determined, instrumental variables for the explanators (chosen based on their known exogeneity), etc. See Causality#Statistics and economics. Spurious correlation due to mutual influence from a third, common, causative variable is harder to avoid: the model must be specified such that there is a theoretical reason to believe that no such underlying causative variable has been omitted from the model.

Use of correlation as scientific evidence

Much of scientific evidence is based upon a correlation of variables[20] – they are observed to occur together. Scientists are careful to point out that correlation does not necessarily mean causation. The assumption that A causes B simply because A correlates with B is often not accepted as a legitimate form of argument.

However, sometimes people commit the opposite fallacy – dismissing correlation entirely. This would dismiss a large swath of important scientific evidence.[20] Since it may be difficult or ethically impossible to run controlled double-blind studies, correlational evidence from several different angles may be useful for prediction despite failing to provide evidence for causation. For example, social workers might be interested in knowing how child abuse relates to academic performance. Although it would be unethical to perform an experiment in which children are randomly assigned to receive or not receive abuse, researchers can look at existing groups using a non-experimental correlational design. If in fact a negative correlation exists between abuse and academic performance, researchers could potentially use this knowledge of a statistical correlation to make predictions about children outside the study who experience abuse, even though the study failed to provide causal evidence that abuse decreases academic performance.[21] The combination of limited available methodologies with the dismissing correlation fallacy has on occasion been used to counter a scientific finding. For example, the tobacco industry has historically relied on a dismissal of correlational evidence to reject a link between tobacco and lung cancer,[22] as did biologist and statistician Ronald Fisher.[23][24][25][26][27][28][29]

Correlation is a valuable type of scientific evidence in fields such as medicine, psychology, and sociology. But first correlations must be confirmed as real, and then every possible causative relationship must be systematically explored. In the end correlation alone cannot be used as evidence for a cause-and-effect relationship between a treatment and benefit, a risk factor and a disease, or a social or economic factor and various outcomes. It is one of the most abused types of evidence, because it is easy and even tempting to come to premature conclusions based upon the preliminary appearance of a correlation.[citation needed]

$$\rho_{X,Y} = \mathrm{corr}(X,Y) = \frac{\mathrm{cov}(X,Y)}{\sigma_X \sigma_Y} = \frac{E[(X-\mu_X)(Y-\mu_Y)]}{\sigma_X \sigma_Y}$$
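As a minimal sketch, the correlation coefficient in the formula above can be computed term by term for a finite data set, treating the expectation E as an average over the observed pairs (a population-style estimate; the function name and sample data are illustrative):

```python
def correlation_coefficient(xs, ys):
    """Compute rho = E[(X - mu_X)(Y - mu_Y)] / (sigma_X * sigma_Y) over paired data."""
    n = len(xs)
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    # Covariance: average product of the deviations from each mean.
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(xs, ys)) / n
    # Standard deviations: square roots of the average squared deviations.
    sigma_x = (sum((x - mu_x) ** 2 for x in xs) / n) ** 0.5
    sigma_y = (sum((y - mu_y) ** 2 for y in ys) / n) ** 0.5
    return cov / (sigma_x * sigma_y)


if __name__ == "__main__":
    hours = [1, 2, 3, 4, 5]
    scores = [52, 60, 57, 70, 71]
    print(f"rho = {correlation_coefficient(hours, scores):.3f}")
```

The result always lies between -1 and +1, and it is symmetric in its two arguments: swapping X and Y gives the same value, which is one reason the coefficient by itself cannot indicate the direction of any causal relationship.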