From Wikipedia, the free encyclopedia

Parts from four early computers, 1962. From left to right: ENIAC board, EDVAC board, ORDVAC board, and BRLESC-I board, showing the trend toward miniaturization.

The history of computing hardware covers the developments from early simple devices to aid calculation to modern-day computers. Before the 20th century, most calculations were done by humans. Early mechanical tools to help humans with digital calculations, such as the abacus, were referred to as calculating machines or calculators, or by proprietary names. The machine operator was called the computer.

The first aids to computation were purely mechanical devices which required the operator to set up the initial values of an elementary arithmetic operation, then manipulate the device to obtain the result. Later, computers represented numbers in a continuous form, for instance distance along a scale, rotation of a shaft, or a voltage. Numbers could also be represented in the form of digits, automatically manipulated by a mechanism. Although this approach generally required more complex mechanisms, it greatly increased the precision of results. A series of breakthroughs, such as miniaturized transistor computers and the integrated circuit, caused digital computers to largely replace analog computers. The cost of computers gradually became so low that by the 1990s personal computers, and then in the 2000s mobile computers (smartphones and tablets), became ubiquitous in industrialized countries.

Early devices

Ancient era

Suanpan (the number represented on this abacus is 6,302,715,408)

Devices have been used to aid computation for thousands of years, mostly using one-to-one correspondence with fingers. The earliest counting device was probably a form of tally stick. Later record-keeping aids throughout the Fertile Crescent included calculi (clay spheres, cones, etc.) which represented counts of items, probably livestock or grains, sealed in hollow unbaked clay containers.[2][3][4] The use of counting rods is one example. The abacus was used early on for arithmetic tasks. What we now call the Roman abacus was used in Babylonia as early as c. 2700–2300 BC. Since then, many other forms of reckoning boards or tables have been invented. In a medieval European counting house, a checkered cloth would be placed on a table, and markers moved around on it according to certain rules, as an aid to calculating sums of money.

Several analog computers were constructed in ancient and medieval times to perform astronomical calculations. These included the south-pointing chariot (c. 1050–771 BC) from ancient China, and the astrolabe and Antikythera mechanism from the Hellenistic world (c. 150–100 BC).[5] In Roman Egypt, Hero of Alexandria (c. 10–70 AD) made mechanical devices including automata and a programmable cart.[6] Other early mechanical devices used to perform various kinds of calculation include the planisphere and other mechanical computing devices invented by Abu Rayhan al-Biruni (c. AD 1000); the equatorium and universal latitude-independent astrolabe by Abū Ishāq Ibrāhīm al-Zarqālī (c. AD 1015); the astronomical analog computers of other medieval Muslim astronomers and engineers; and the astronomical clock tower of Su Song (1094) during the Song dynasty. The castle clock, a hydropowered mechanical astronomical clock invented by Ismail al-Jazari in 1206, was the first programmable analog computer.[7][8][9]

Ramon Llull invented the Lullian Circle: a notional machine for calculating answers to philosophical questions (in this case, to do with Christianity) via logical combinatorics. This idea was taken up by Leibniz centuries later, and is thus one of the founding elements in computing and information science.

Renaissance calculating tools

A set of John Napier's calculating tables from around 1680

Scottish mathematician and physicist John Napier discovered that the multiplication and division of numbers could be performed by the addition and subtraction, respectively, of the logarithms of those numbers. While producing the first logarithmic tables, Napier needed to perform many tedious multiplications. It was at this point that he designed 'Napier's bones', an abacus-like device that greatly simplified calculations that involved multiplication and division.[10]
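Napier's rule can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes modern base-10 (Briggsian) logarithms computed by Python's `math.log10`, not Napier's original tables, and the helper names `multiply_via_logs` and `divide_via_logs` are hypothetical. A product becomes a sum of logarithms and a quotient becomes a difference, the same principle a slide rule applies by adding and subtracting lengths.

```python
import math


def multiply_via_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    # log(a * b) = log(a) + log(b): the multiplication becomes an addition.
    log_sum = math.log10(a) + math.log10(b)
    # Taking the antilogarithm (10 ** x) plays the role of a reverse table lookup.
    return 10 ** log_sum


def divide_via_logs(a: float, b: float) -> float:
    """Divide two positive numbers by subtracting their base-10 logarithms."""
    # log(a / b) = log(a) - log(b): the division becomes a subtraction.
    return 10 ** (math.log10(a) - math.log10(b))


print(round(multiply_via_logs(37.0, 52.0), 6))  # 1924.0
print(round(divide_via_logs(1924.0, 52.0), 6))  # 37.0
```

With a printed table, each logarithm would be looked up to a fixed number of places, so the recovered result is only as precise as the table.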

Since real numbers can be represented as distances or intervals on a line, the slide rule, which marks numbers at distances proportional to their logarithms, was invented in the 1620s, shortly after Napier's work, to allow multiplication and division operations to be carried out significantly faster than was previously possible.[11] Edmund Gunter built a calculating device with a single logarithmic scale at the University of Oxford. His device greatly simplified arithmetic calculations, including multiplication and division. William Oughtred greatly improved this in 1630 with his circular slide rule. He followed this up with the modern slide rule in 1632, essentially a combination of two Gunter rules, held together with the hands. Slide rules were used by generations of engineers and other mathematically involved professionals until the invention of the pocket calculator.[12]

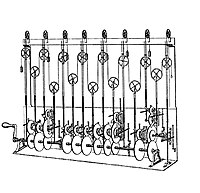

Mechanical calculators

Wilhelm Schickard, a German polymath, designed a calculating machine in 1623 which combined a mechanised form of Napier's rods with the world's first mechanical adding machine built into the base. Because it made use of a single-tooth gear, there were circumstances in which its carry mechanism would jam.[13] A fire destroyed at least one of the machines in 1624, and it is believed Schickard was too disheartened to build another.

In 1642, while still a teenager, Blaise Pascal started some pioneering work on calculating machines and, after three years of effort and 50 prototypes,[14] invented a mechanical calculator.[15][16] He built twenty of these machines (called Pascal's calculator or Pascaline) in the following ten years.[17] Nine Pascalines have survived, most of which are on display in European museums.[18]

A continuing debate exists over whether Schickard or Pascal should be regarded as the "inventor of the mechanical calculator", and the range of issues to be considered is discussed elsewhere.[19]

Gottfried Wilhelm von Leibniz invented the stepped reckoner and his famous stepped drum mechanism around 1672. He attempted to create a machine that could not only add and subtract but would also use a movable carriage to enable long multiplication and division. Leibniz once said "It is unworthy of excellent men to lose hours like slaves in the labour of calculation which could safely be relegated to anyone else if machines were used."[20] However, Leibniz did not incorporate a fully successful carry mechanism. Leibniz also described the binary numeral system,[21] a central ingredient of all modern computers. However, up to the 1940s, many subsequent designs (including Charles Babbage's machines of 1822 and even ENIAC of 1945) were based on the decimal system.[22]

Around 1820, Charles Xavier Thomas de Colmar created what would, over the rest of the century, become the first successful mass-produced mechanical calculator, the Thomas Arithmometer. It could be used to add and subtract, and with a movable carriage the operator could also multiply and divide by a process of long multiplication and long division.[23] It used a stepped drum similar in conception to that invented by Leibniz. Mechanical calculators remained in use until the 1970s.
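Carriage-based long multiplication and division can be modelled as repeated addition and subtraction with decimal shifts. This is a simplified sketch that assumes an idealized machine with unlimited register width; the function names are hypothetical, and the turn count only illustrates how many crank turns an operator would make, not the mechanism of any specific machine.

```python
def carriage_multiply(multiplicand: int, multiplier: int) -> tuple[int, int]:
    """Long multiplication by repeated addition, shifting the carriage one place per digit.

    Returns (product, number_of_crank_turns).
    """
    accumulator = 0
    turns = 0
    position = 0  # carriage offset: the multiplicand is added in at 10**position
    while multiplier > 0:
        digit = multiplier % 10  # lowest remaining digit of the multiplier
        for _ in range(digit):
            accumulator += multiplicand * 10 ** position  # one crank turn adds once
            turns += 1
        multiplier //= 10
        position += 1  # shift the carriage one decimal place
    return accumulator, turns


def carriage_divide(dividend: int, divisor: int) -> tuple[int, int]:
    """Long division by repeated subtraction, working from the highest place down.

    Returns (quotient, remainder).
    """
    if divisor <= 0 or dividend < 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    quotient = 0
    remainder = dividend
    position = len(str(dividend))  # start the carriage above the highest digit
    while position >= 0:
        shifted = divisor * 10 ** position
        digit = 0
        while remainder >= shifted:  # subtract until the register would go negative
            remainder -= shifted
            digit += 1
        quotient += digit * 10 ** position
        position -= 1  # shift the carriage back one decimal place
    return quotient, remainder


print(carriage_multiply(437, 26))  # (11362, 8)
print(carriage_divide(11362, 26))  # (437, 0)
```

Multiplying 437 by 26 takes six turns at the units position and two more after shifting the carriage one place, eight turns in all rather than 26 separate additions.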

Punched card data processing

In 1804, Joseph-Marie Jacquard developed a loom in which the pattern being woven was controlled by a paper tape constructed from punched cards. The paper tape could be changed without changing the mechanical design of the loom. This was a landmark achievement in programmability. His machine was an improvement over similar weaving looms. Punched cards were preceded by punch bands, as in the machine proposed by Basile Bouchon. These bands would inspire information recording for automatic pianos and, more recently, numerical control machine tools.

IBM punched card Accounting Machines, pictured in 1936

In the late 1880s, the American Herman Hollerith invented data storage on punched cards that could then be read by a machine.[24] To process these punched cards he invented the tabulator and the keypunch machine. His machines used electromechanical relays and counters.[25] Hollerith's method was used in the 1890 United States Census. That census was processed two years faster than the prior census had been.[26] Hollerith's company eventually became the core of IBM.

By 1920, electromechanical tabulating machines could add, subtract, and print accumulated totals.[27] Machine functions were directed by inserting dozens of wire jumpers into removable control panels. When the United States instituted Social Security in 1935, IBM punched card systems were used to process records of 26 million workers.[28] Punched cards became ubiquitous in industry and government for accounting and administration.

Leslie Comrie's articles on punched card methods and W.J. Eckert's publication of Punched Card Methods in Scientific Computation in 1940 described punched card techniques sufficiently advanced to solve some differential equations[29] or perform multiplication and division using floating-point representations, all on punched cards and unit record machines.

Such machines were used during World War II for cryptographic statistical processing, as well as a vast number of administrative uses. The Astronomical Computing Bureau of Columbia University performed astronomical calculations representing the state of the art in computing.[30][31]

The book IBM and the Holocaust by Edwin Black outlines the ways in which IBM's technology helped facilitate Nazi genocide through generation and tabulation of punch cards based upon national census data.

See also: Dehomag

Calculators

The Curta calculator could also do multiplication and division.

By the 20th century, earlier mechanical calculators, cash registers, accounting machines, and so on were redesigned to use electric motors, with gear position as the representation for the state of a variable. The word "computer" was a job title assigned primarily to women who used these calculators to perform mathematical calculations.[32] By the 1920s, British scientist Lewis Fry Richardson's interest in weather prediction led him to propose human computers and numerical analysis to model the weather; to this day, the most powerful computers on Earth are needed to adequately model its weather using the Navier–Stokes equations.[33]

Companies like Friden, Marchant Calculator and Monroe made desktop mechanical calculators from the 1930s that could add, subtract, multiply and divide.[34] In 1948, the Curta was introduced by Austrian inventor Curt Herzstark. It was a small, hand-cranked mechanical calculator and, as such, a descendant of Gottfried Leibniz's Stepped Reckoner and Thomas's Arithmometer.

The world's first all-electronic desktop calculator was the British Bell Punch ANITA, released in 1961.[35][36] It used vacuum tubes, cold-cathode tubes and Dekatrons in its circuits, with 12 cold-cathode "Nixie" tubes for its display. The ANITA sold well since it was the only electronic desktop calculator available, and was silent and quick. The tube technology was superseded in June 1963 by the U.S.-manufactured Friden EC-130, which had an all-transistor design, a stack of four 13-digit numbers displayed on a 5-inch (13 cm) CRT, and introduced reverse Polish notation (RPN).
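In reverse Polish notation an operator follows its operands, so expressions need no parentheses and can be evaluated with a stack. The following is a generic sketch, not a model of the EC-130's actual keys or register behaviour: it assumes a stack limited to four entries (matching the four numbers the EC-130 displayed) and simply raises an error when that limit is exceeded, which a real calculator may handle differently. The names `eval_rpn`, `OPERATORS` and `STACK_DEPTH` are illustrative.

```python
from typing import Callable, Dict, List

STACK_DEPTH = 4  # assumed limit, matching the four numbers the EC-130 displayed

OPERATORS: Dict[str, Callable[[float, float], float]] = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def eval_rpn(tokens: List[str]) -> float:
    """Evaluate a reverse Polish expression with a stack of at most STACK_DEPTH entries."""
    stack: List[float] = []
    for token in tokens:
        if token in OPERATORS:
            if len(stack) < 2:
                raise ValueError(f"not enough operands for {token!r}")
            b = stack.pop()  # the most recent entry is the right-hand operand
            a = stack.pop()
            stack.append(OPERATORS[token](a, b))
        else:
            if len(stack) == STACK_DEPTH:
                raise OverflowError("stack is full")
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("expression did not reduce to a single value")
    return stack[0]


print(eval_rpn("3 4 + 5 2 - *".split()))  # 21.0
```

The input `3 4 + 5 2 - *` computes (3 + 4) × (5 − 2) without any parentheses; entering a fifth number onto a full stack raises `OverflowError` in this sketch.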

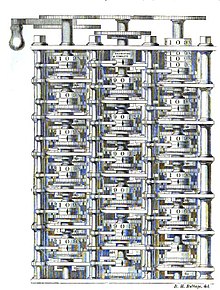

First general-purpose computing device

Charles Babbage, an English mechanical engineer and polymath, originated the concept of a programmable computer. Considered the "father of the computer",[37] he conceptualized and invented the first mechanical computer in the early 19th century. After working on his revolutionary difference engine, designed to aid in navigational calculations, in 1833 he realized that a much more general design, an Analytical Engine, was possible. The input of programs and data was to be provided to the machine via punched cards, a method being used at the time to direct mechanical looms such as the Jacquard loom. For output, the machine would have a printer, a curve plotter and a bell. The machine would also be able to punch numbers onto cards to be read in later. It employed ordinary base-10 fixed-point arithmetic.

The Engine incorporated an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory, making it the first design for a general-purpose computer that could be described in modern terms as Turing-complete.[38][39]

There was to be a store, or memory, capable of holding 1,000 numbers of 40 decimal digits each (ca. 16.7 kB). An arithmetical unit, called the "mill", would be able to perform all four arithmetic operations, plus comparisons and optionally square roots. Initially it was conceived as a difference engine curved back upon itself, in a generally circular layout,[40] with the long store exiting off to one side. (Later drawings depict a regularized grid layout.)[41] Like the central processing unit (CPU) in a modern computer, the mill would rely upon its own internal procedures, roughly equivalent to microcode in modern CPUs, to be stored in the form of pegs inserted into rotating drums called "barrels", to carry out some of the more complex instructions the user's program might specify.[42]

The programming language to be employed by users was akin to modern-day assembly languages. Loops and conditional branching were possible, and so the language as conceived would have been Turing-complete as later defined by Alan Turing.

Three different types of punch cards were used: one for arithmetical operations, one for numerical constants, and one for load and store operations, transferring numbers from the store to the arithmetical unit or back. There were three separate readers for the three types of cards.

The machine was about a century ahead of its time. However, the project was slowed by various problems, including disputes with the chief machinist building parts for it. All the parts for his machine had to be made by hand, which was a major problem for a machine with thousands of parts. Eventually, the project was dissolved when the British Government decided to cease funding. Babbage's failure to complete the Analytical Engine can be chiefly attributed to difficulties not only of politics and financing, but also to his desire to develop an increasingly sophisticated computer and to move ahead faster than anyone else could follow.

Ada Lovelace, Lord Byron's daughter, translated and added notes to the "Sketch of the Analytical Engine" by Luigi Federico Menabrea. This appears to be the first published description of programming, so Ada Lovelace is widely regarded as the first computer programmer.[43]

Following Babbage, although unaware of his earlier work, was Percy Ludgate, an accountant from Dublin, Ireland. He independently designed a programmable mechanical computer, which he described in a work that was published in 1909.[44]

Analog computers

In the first half of the 20th century, analog computers were considered by many to be the future of computing. These devices used the continuously changeable aspects of physical phenomena such as electrical, mechanical, or hydraulic quantities to model the problem being solved, in contrast to digital computers that represented varying quantities symbolically, as their numerical values change. Because an analog computer uses continuous rather than discrete values, its processes cannot be reliably repeated with exact equivalence, as they can with Turing machines.[45]

The first modern analog computer was a tide-predicting machine, invented by Sir William Thomson, later Lord Kelvin, in 1872. It used a system of pulleys and wires to automatically calculate predicted tide levels for a set period at a particular location and was of great utility to navigation in shallow waters. His device was the foundation for further developments in analog computing.[46]

The differential analyser, a mechanical analog computer designed to solve differential equations by integration using wheel-and-disc mechanisms, was conceptualized in 1876 by James Thomson, the brother of the more famous Lord Kelvin. He explored the possible construction of such calculators, but was stymied by the limited output torque of the ball-and-disk integrators.[47] In a differential analyzer, the output of one integrator drove the input of the next integrator, or a graphing output.
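The chaining of integrators can be imitated digitally. In this illustrative sketch, not a model of any real analyser, two integrators are wired in a loop so that the machine solves y'' = −y: the first integrates y'' to produce y', the second integrates y' to produce y, and y is negated and fed back as the first integrator's input. A mechanical analyser integrated continuously; here that continuous motion is replaced by small time steps (`dt`, a hypothetical parameter) using a semi-implicit Euler update, and the name `integrate_chain` is likewise illustrative.

```python
import math


def integrate_chain(y0: float, dy0: float, t_end: float, dt: float = 1e-4) -> float:
    """Imitate two chained integrators wired with feedback to solve y'' = -y.

    Returns y(t_end). The step size dt stands in for continuous mechanical motion.
    """
    y, dy = y0, dy0
    for _ in range(int(round(t_end / dt))):
        d2y = -y          # feedback: the second integrator's output, negated, is the input
        dy += d2y * dt    # first integrator: accumulates y'' into y'
        y += dy * dt      # second integrator: accumulates y' into y (uses the updated y')
    return y


# With y(0) = 1 and y'(0) = 0 the exact solution is cos(t), so y(pi) is about -1.
print(integrate_chain(1.0, 0.0, math.pi))
```

Shrinking `dt` brings the result closer to the exact value, at the cost of more steps.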

A Mk. I Drift Sight. The lever just in front of the bomb aimer's fingertips sets the altitude; the wheels near his knuckles set the wind and airspeed.

An important advance in analog computing was the development of the first fire-control systems for long-range ship gunlaying. When gunnery ranges increased dramatically in the late 19th century, it was no longer a simple matter of calculating the proper aim point, given the flight times of the shells. Various spotters on board the ship would relay distance measures and observations to a central plotting station. There the fire direction teams fed in the location, speed and direction of the ship and its target, as well as various adjustments for the Coriolis effect, weather effects on the air, and other factors; the computer would then output a firing solution, which would be fed to the turrets for laying. In 1912, British engineer Arthur Pollen developed the first electrically powered mechanical analogue computer (called at the time the Argo Clock).[citation needed] It was used by the Imperial Russian Navy in World War I.[citation needed] The alternative Dreyer Table fire control system was fitted to British capital ships by mid-1916.

Mechanical devices were also used to aid the accuracy of aerial bombing. Drift Sight was the first such aid, developed by Harry Wimperis in 1916 for the Royal Naval Air Service; it measured the wind speed from the air, and used that measurement to calculate the wind's effects on the trajectory of the bombs. The system was later improved with the Course Setting Bomb Sight, and reached a climax with World War II bomb sights such as the Mark XIV bomb sight (RAF Bomber Command) and the Norden[48] (United States Army Air Forces).

The art of mechanical analog computing reached its zenith with the differential analyzer,[49] built by H. L. Hazen and Vannevar Bush at MIT starting in 1927, which built on the mechanical integrators of James Thomson and the torque amplifiers invented by H. W. Nieman. A dozen of these devices were built before their obsolescence became obvious; the most powerful was constructed at the University of Pennsylvania's Moore School of Electrical Engineering, where the ENIAC was built.

A fully electronic analog computer was built by Helmut Hölzer in 1942 at Peenemünde Army Research Center.[50][51][52]

By the 1950s the success of digital electronic computers had spelled the end for most analog computing machines, but hybrid analog computers, controlled by digital electronics, remained in substantial use into the 1950s and 1960s, and later in some specialized applications.

Advent of the digital computer

The principle of the modern computer was first described by computer scientist Alan Turing, who set out the idea in his seminal 1936 paper,[53] On Computable Numbers. Turing reformulated Kurt Gödel's 1931 results on the limits of proof and computation, replacing Gödel's universal arithmetic-based formal language with the formal and simple hypothetical devices that became known as Turing machines.

He argued that some such machine would be capable of performing any conceivable mathematical computation if it were representable as an algorithm. He went on to prove that there was no solution to the Entscheidungsproblem ("decision problem") by first showing that the halting problem for Turing machines is undecidable: in general, it is not possible to decide algorithmically whether a given Turing machine will ever halt.

He also introduced the notion of a 'Universal Machine' (now known as a Universal Turing machine), with the idea that such a machine could perform the tasks of any other machine; in other words, it is provably capable of computing anything that is computable by executing a program stored on tape, which makes the machine programmable.
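A Turing machine is just a finite table of rules acting on an unbounded tape, which makes it easy to simulate. The sketch below is illustrative: the example machine (binary increment), the state names, the blank symbol `_` and the `max_steps` cutoff are choices made for this example, not taken from Turing's paper. Because the simulator takes the rule table as data, it is loosely analogous to the universal machine idea: one fixed program that can run any machine description.

```python
from collections import defaultdict

BLANK = "_"

# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
# This example machine adds 1 to a binary number written on the tape.
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),      # scan right over the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the end: step back, start adding
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),        # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # ran off the left end: new leading 1
}


def run_turing_machine(table, tape_str, state="right", max_steps=10_000):
    """Run a rule table on a tape; return the non-blank part of the final tape."""
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))  # unbounded in both directions
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        write, move, state = table[(state, tape[head])]  # a missing rule raises KeyError
        tape[head] = write
        head += move
        steps += 1
    cells = [i for i, symbol in tape.items() if symbol != BLANK]
    if not cells:
        return ""
    return "".join(tape[i] for i in range(min(cells), max(cells) + 1))


print(run_turing_machine(INCREMENT, "1011"))  # 1100
```

The step limit reflects the halting problem: a simulator can give up after some bound, but no general procedure can decide in advance whether an arbitrary machine will eventually halt.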

Von Neumann acknowledged that the central concept of the modern computer was due to this paper.[54] Turing machines are to this day a central object of study in the theory of computation. Except for the limitations imposed by their finite memory stores, modern computers are said to be Turing-complete, which is to say, they have algorithm execution capability equivalent to a universal Turing machine.

Electromechanical computers

The era of modern computing began with a flurry of development before and during World War II. Most digital computers built in this period were electromechanical: electric switches drove mechanical relays to perform the calculation. These devices had a low operating speed and were eventually superseded by much faster all-electronic computers, originally using vacuum tubes.

The Z2 was one of the earliest examples of an electromechanical relay computer, and was created by German engineer Konrad Zuse in 1940. It was an improvement on his earlier Z1; although it used the same mechanical memory, it replaced the arithmetic and control logic with electrical relay circuits.[55]

Replica of Zuse's Z3, the first fully automatic, digital (electromechanical) computer

In the same year, electromechanical devices called bombes were built by British cryptologists to help decipher German Enigma-machine-encrypted secret messages during World War II. The bombes' initial design was created in 1939 at the UK Government Code and Cypher School (GC&CS) at Bletchley Park by Alan Turing,[56] with an important refinement devised in 1940 by Gordon Welchman.[57] The engineering design and construction was the work of Harold Keen of the British Tabulating Machine Company. It was a substantial development from a device that had been designed in 1938 by Polish Cipher Bureau cryptologist Marian Rejewski, and known as the "cryptologic bomb" (Polish: "bomba kryptologiczna").

In 1941, Zuse followed his earlier machine up with the Z3,[58] the world's first working electromechanical, programmable, fully automatic digital computer.[59] The Z3 was built with 2000 relays, implementing a 22-bit word length that operated at a clock frequency of about 5–10 Hz.[60] Program code was read from punched film, while data was held in a relay memory or entered from a keyboard. It was quite similar to modern machines in some respects, pioneering numerous advances such as floating-point numbers. Replacement of the hard-to-implement decimal system (used in Charles Babbage's earlier design) by the simpler binary system meant that Zuse's machines were easier to build and potentially more reliable, given the technologies available at that time.[61] The Z3 was probably Turing-complete. In two 1936 patent applications, Zuse also anticipated that machine instructions could be stored in the same storage used for data, the key insight of what became known as the von Neumann architecture, first implemented in the British SSEM of 1948.[62]
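The stored-program idea, that instructions live in the same storage as data, can be shown with a toy machine. The instruction set below (`LOAD`, `ADD`, `SUB`, `STORE`, `JZ`, `JMP`, `HALT`) is hypothetical and is not the instruction set of the Z3, the SSEM or any other historical machine; the Z3 itself read its program from punched film. The point of this sketch is only that the fetch step reads instructions from the same list that holds the data.

```python
# Memory cells 0-9 hold instructions as (opcode, address) pairs; cells 10-12 hold data.
# This hypothetical program sums n + (n - 1) + ... + 1.
PROGRAM = [
    ("LOAD", 10),   # 0: acc <- n
    ("JZ", 9),      # 1: if n == 0, jump to HALT
    ("LOAD", 11),   # 2: acc <- total
    ("ADD", 10),    # 3: acc <- total + n
    ("STORE", 11),  # 4: total <- acc
    ("LOAD", 10),   # 5: acc <- n
    ("SUB", 12),    # 6: acc <- n - 1
    ("STORE", 10),  # 7: n <- acc
    ("JMP", 0),     # 8: repeat the loop
    ("HALT", 0),    # 9: stop
    5,              # 10: n, the loop counter
    0,              # 11: total, the running sum
    1,              # 12: the constant 1
]


def run_stored_program(memory: list) -> list:
    """Fetch-execute loop over a single memory holding both code and data."""
    acc, pc = 0, 0
    while True:
        op, addr = memory[pc]  # fetch: the instruction is read from the shared store
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "JZ":
            if acc == 0:
                pc = addr
        elif op == "JMP":
            pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")


print(run_stored_program(list(PROGRAM))[11])  # 15
```

Running the program leaves 15 (5 + 4 + 3 + 2 + 1) at address 11. Since instructions are ordinary memory cells, a `STORE` aimed at addresses 0 to 9 would overwrite the program itself.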

Zuse suffered setbacks during World War II when some of his machines were destroyed in the course of Allied bombing campaigns. Apparently his work remained largely unknown to engineers in the UK and US until much later, although at least IBM was aware of it, as it financed his post-war startup company in 1946 in return for an option on Zuse's patents.

In 1944, the Harvard Mark I was constructed at IBM's Endicott laboratories;[63] it was a general-purpose electromechanical computer similar to the Z3, but was not quite Turing-complete.

Digital computation

The term digital was suggested by George Stibitz and refers to representing quantities as discrete digits rather than continuous values; in electronic hardware these are typically built from signals with two states, low (0) and high (1). Decimal and binary computing are therefore two ways to implement digital computing. A mathematical basis of digital computing is Boolean algebra, developed by the British mathematician George Boole in his work The Laws of Thought, published in 1854. His Boolean algebra was further refined in the 1860s by William Jevons and Charles Sanders Peirce, and was first presented systematically by Ernst Schröder and A. N. Whitehead.[64] In 1879 Gottlob Frege developed the formal approach to logic and proposed the first logic language for logical equations.[65]

In the 1930s, working independently, American electronic engineer Claude Shannon and Soviet logician Victor Shestakov[66] both showed a one-to-one correspondence between the concepts of Boolean logic and certain electrical circuits, now called logic gates, which are now ubiquitous in digital computers.[67] They showed[68] that electrical relays and switches can realize the expressions of Boolean algebra. This work essentially founded practical digital circuit design.
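The switching-circuit correspondence can be written out directly: switches in series conduct only if all are closed (AND), switches in parallel conduct if any is closed (OR), and a relay's normally closed contact opens when its coil is energized (NOT). This sketch models only the logic, not voltages or timing, and the half adder built from these pieces is an example chosen here for illustration, not a circuit taken from Shannon's or Shestakov's work. All function names are hypothetical.

```python
from itertools import product


def series(*switches: bool) -> bool:
    """Current passes through switches in series only if all are closed: AND."""
    return all(switches)


def parallel(*switches: bool) -> bool:
    """Current passes through parallel branches if any one is closed: OR."""
    return any(switches)


def normally_closed(coil_energized: bool) -> bool:
    """A relay's normally closed contact opens when its coil is energized: NOT."""
    return not coil_energized


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit inputs using only switch and relay-contact combinations."""
    # Sum bit: (a AND NOT b) OR (NOT a AND b), i.e. exclusive OR.
    total = parallel(series(a, normally_closed(b)), series(normally_closed(a), b))
    # Carry bit: both switches closed.
    carry = series(a, b)
    return total, carry


for a, b in product([False, True], repeat=2):
    s, c = half_adder(a, b)
    print(f"a={int(a)} b={int(b)} -> sum={int(s)} carry={int(c)}")
```

The printed truth table shows sum = 1 exactly when one input is 1, and carry = 1 only when both are, which is one-bit binary addition expressed entirely as series and parallel switch networks.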

Electronic data processing

Purely electronic circuit elements soon replaced their mechanical and electromechanical equivalents, at the same time that digital calculation replaced analog. Machines such as the first IBM electronic accounting machine (US 2,580,740), the NCR electronic calculating machine (US 2,595,045), the Z3, the Atanasoff–Berry Computer, the Colossus computers, and the ENIAC were built by hand, using circuits containing relays or valves (vacuum tubes), and often used punched cards or punched paper tape for input and as the main (non-volatile) storage medium.[69]

The engineer Tommy Flowers joined the telecommunications branch of the General Post Office in 1926. While working at the research station in Dollis Hill in the 1930s, he began to explore the possible use of electronics for the telephone exchange. Experimental equipment that he built in 1934 went into operation five years later, converting a portion of the telephone exchange network into an electronic data processing system, using thousands of vacuum tubes.[46]

In the US, between summer 1937 and fall 1939, Arthur Dickinson (IBM) invented the first digital electronic computer.[70] This calculating device was fully electronic in its control, calculations and output (the first electronic display).[71] John Vincent Atanasoff and Clifford E. Berry of Iowa State University developed the Atanasoff–Berry Computer (ABC) in 1942,[72] the first binary electronic digital calculating device.[73]

This design was semi-electronic (electromechanical control and electronic calculations), and used about 300 vacuum tubes, with capacitors fixed in a mechanically rotating drum for memory. However, its paper card writer/reader was unreliable and the regenerative drum contact system was mechanical. The machine's special-purpose nature and lack of a changeable, stored program distinguish it from modern computers.[74]

The tests of the ABC computer in June 1942 were not successful. Instead of solving systems of equations with 29 unknowns, the computer could solve systems with no more than three to five unknowns. The ABC computer was not completed and was abandoned.[75]

In 1942–1943, Joseph Desch of NCR, with help from Alan Turing, developed the first mass-produced electronic computer, the N530, for breaking the four-wheel Enigma. From August 1943 to the end of World War II, 180 machines were manufactured, and one third of them were sent to Bletchley Park, UK.[76]

The electronic programmable computer Colossus was the first electronic digital programmable computing device, and was used to break German ciphers during World War II. It remained unknown, as a military secret, well into the 1970s.

During World War II, the British at Bletchley Park (40 miles north of London) achieved a number of successes at breaking encrypted German military communications. The German encryption machine, Enigma, was first attacked with the help of the electromechanical bombes.[77] They ruled out possible Enigma settings by performing chains of logical deductions implemented electrically. Most possibilities led to a contradiction, and the few remaining could be tested by hand.

The Germans also developed a series of teleprinter encryption systems, quite different from Enigma. The Lorenz SZ 40/42 machine was used for high-level Army communications, termed "Tunny" by the British. The first intercepts of Lorenz messages began in 1941. As part of an attack on Tunny, Max Newman and his colleagues helped specify the Colossus.[78]

Tommy Flowers, still a senior engineer at the Post Office Research Station,[79] was recommended to Max Newman by Alan Turing[80] and spent eleven months from early February 1943 designing and building the first Colossus.[81][82] After a functional test in December 1943, Colossus was shipped to Bletchley Park, where it was delivered on 18 January 1944[83] and attacked its first message on 5 February.[74]

Colossus rebuild seen from the rear of the device.

Colossus was the world's first electronic digital programmable computer.[46] It used a large number of valves (vacuum tubes). It had paper-tape input and was capable of being configured to perform a variety of Boolean logical operations on its data, but it was not Turing-complete.

Nine Mark 2 Colossi were built (the Mark 1 was converted to a Mark 2, making ten machines in total). Colossus Mark 1 contained 1,500 thermionic valves (tubes), but Mark 2, with 2,400 valves, was both five times faster and simpler to operate than Mark 1, greatly speeding the decoding process. Mark 2 was designed while Mark 1 was being constructed. Allen Coombs took over leadership of the Colossus Mark 2 project when Tommy Flowers moved on to other projects.[84]

Colossus was able to process 5,000 characters per second with the paper tape moving at 40 ft/s (12.2 m/s; 27.3 mph). Sometimes, two or more Colossus computers tried different possibilities simultaneously in what is now called parallel computing, perhaps as much as doubling the rate of comparison.

Colossus included the first-ever use of shift registers and systolic arrays, enabling five simultaneous tests, each involving up to 100 Boolean calculations, on each of the five channels on the punched tape (although in normal operation only one or two channels were examined in any run). Initially Colossus was only used to determine the initial wheel positions used for a particular message (termed wheel setting). The Mark 2 included mechanisms intended to help determine pin patterns (wheel breaking). Both models were programmable using switches and plug panels in a way their predecessors had not been.

ENIAC was the first Turing-complete electronic device, and performed ballistics trajectory calculations for the United States Army.[85]

Without the use of these machines, the Allies would have been deprived of the very valuable intelligence that was obtained from reading the vast quantity of encrypted high-level telegraphic messages between the German High Command (OKW) and their army commands throughout occupied Europe. Details of their existence, design, and use were kept secret well into the 1970s. Winston Churchill personally issued an order for their destruction into pieces no larger than a man's hand, to keep secret that the British were capable of cracking Lorenz SZ cyphers (from German rotor stream cipher machines) during the coming Cold War. Two of the machines were transferred to the newly formed GCHQ and the others were destroyed. As a result, the machines were not included in many histories of computing.[86] A reconstructed working copy of one of the Colossus machines is now on display at Bletchley Park.

ENIAC (Electronic Numerical Integrator and Computer) was the first electronic programmable computer built in the US. Although the ENIAC was similar to the Colossus, it was much faster and more flexible. It was unambiguously a Turing-complete device and could compute any problem that would fit into its memory. As with the Colossus, a "program" on the ENIAC was defined by the states of its patch cables and switches, a far cry from the stored-program electronic machines that came later. Once a program was written, it had to be set into the machine mechanically, by manually resetting plugs and switches.

It combined the high speed of electronics with the ability to be programmed for many complex problems. It could add or subtract 5,000 times a second, a thousand times faster than any other machine. It also had modules to multiply, divide, and compute square roots. High-speed memory was limited to 20 words (about 80 bytes). Built under the direction of John Mauchly and J. Presper Eckert at the University of Pennsylvania, ENIAC was developed and constructed from 1943 until it reached full operation at the end of 1945. The machine was huge: it weighed 30 tons, used 200 kilowatts of electric power, and contained over 18,000 vacuum tubes, 1,500 relays, and hundreds of thousands of resistors, capacitors, and inductors.[87]

One of its major engineering feats was minimizing the effects of tube burnout, a common threat to machine reliability at that time. The machine was in almost constant use for the next ten years.

Stored-program computer

Early computing machines had fixed programs. For example, a desk calculator is a fixed-program computer. It can do basic mathematics, but it cannot be used as a word processor or a gaming console. Changing the program of a fixed-program machine requires rewiring, restructuring, or redesigning the machine. The earliest computers were not so much "programmed" as they were "designed". "Reprogramming", when it was possible at all, was a laborious process, starting with flowcharts and paper notes, followed by detailed engineering designs, and then the often-arduous process of physically rewiring and rebuilding the machine.[88] The proposal of the stored-program computer changed this. A stored-program computer includes by design an instruction set and can store in memory a set of instructions (a program) that details the computation.
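To make the distinction concrete, here is a minimal sketch of a stored-program machine. It assumes a made-up one-address instruction format (opcode × 100 + address) and made-up opcodes; it is not the instruction set of any historical computer. Instructions and data sit in the same memory, so changing the computation means writing different numbers into memory rather than rewiring anything.

```python
from typing import List

# Hypothetical encoding: instruction = opcode * 100 + address.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3

def run_stored_program(memory: List[int]) -> List[int]:
    """Fetch-decode-execute loop over one memory that holds both code and data."""
    acc = 0  # accumulator
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = divmod(memory[pc], 100)  # fetch and decode
        pc += 1
        if opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")

# Cells 0-3 hold the program, cells 10-12 the data: memory[12] = memory[10] + memory[11].
memory = [110, 211, 312, 0, 0, 0, 0, 0, 0, 0, 20, 22, 0]
print(run_stored_program(memory)[12])  # 42
```

Loading a different list of numbers runs a different program on the same simulated "hardware", which is exactly what a fixed-program machine cannot do.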

Theory

The theoretical basis for the stored-program computer had been laid by Alan Turing in his 1936 paper. In 1945 Turing joined the National Physical Laboratory and began work on developing an electronic stored-program digital computer. His 1945 report "Proposed Electronic Calculator" was the first specification for such a device.

Meanwhile, John von Neumann at the Moore School of Electrical Engineering, University of Pennsylvania, circulated his First Draft of a Report on the EDVAC in 1945. Although it was substantially similar to Turing's design and contained comparatively little engineering detail, the computer architecture it outlined became known as the "von Neumann architecture". Turing presented a more detailed paper to the National Physical Laboratory (NPL) Executive Committee in 1946, giving the first reasonably complete design of a stored-program computer, a device he called the Automatic Computing Engine (ACE). However, the better-known EDVAC design of John von Neumann, who knew of Turing's theoretical work, received more publicity, despite its incomplete nature and its questionable lack of attribution for the sources of some of its ideas.[46]

Turing thought that the speed and the size of computer memory were crucial elements, so he proposed a high-speed memory of what would today be called 25 KB, accessed at a speed of 1 MHz. The ACE implemented subroutine calls, whereas the EDVAC did not, and the ACE also used Abbreviated Computer Instructions, an early form of programming language.

Manchester "Baby"

The Manchester Small-Scale Experimental Machine (SSEM), nicknamed Baby, was the world's first stored-program computer. It was built at the Victoria University of Manchester by Frederic C. Williams, Tom Kilburn and Geoff Tootill, and ran its first program on 21 June 1948.[89]

The machine was not intended to be a practical computer but was instead designed as a testbed for the Williams tube, the first random-access digital storage device.[90] Invented by Freddie Williams and Tom Kilburn[91][92] at the University of Manchester in 1946 and 1947, it was a cathode ray tube that used an effect called secondary emission to temporarily store electronic binary data, and it was used successfully in several early computers.

Although the computer was considered "small and primitive" by the standards of its time, it was the first working machine to contain all of the elements essential to a modern electronic computer.[93]

As soon as the SSEM had demonstrated the feasibility of its design, a project was initiated at the university to develop it into a more usable computer, the Manchester Mark 1. The Mark 1 in turn quickly became the prototype for the Ferranti Mark 1, the world's first commercially available general-purpose computer.[94]

The SSEM had a 32-bit word length and a memory of 32 words. As it was designed to be the simplest possible stored-program computer, the only arithmetic operations implemented in hardware were subtraction and negation; other arithmetic operations were implemented in software. The first of three programs written for the machine found the highest proper divisor of 2¹⁸ (262,144) by testing every integer from 2¹⁸ − 1 downwards. Because division was implemented by repeated subtraction of the divisor, the calculation was known to take a long time to run, and so would prove the computer's reliability. The program consisted of 17 instructions and ran for 52 minutes before reaching the correct answer of 131,072, after the SSEM had performed 3.5 million operations (for an effective CPU speed of 1.1 kIPS).
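The method of the SSEM's first program can be mirrored in a few lines of Python. This is a sketch of the approach described above (trial divisors from 2¹⁸ − 1 downwards, with division done by repeated subtraction and a sign test), not a transcription of the original 17 SSEM instructions; the function names are hypothetical. The last line reproduces the effective-speed arithmetic of 3.5 million operations in 52 minutes.

```python
def remainder_by_subtraction(n: int, d: int) -> int:
    """n mod d for n >= 0 and d > 0, using only subtraction and a sign test."""
    r = n
    while True:
        r -= d
        if r < 0:         # sign test: one subtraction too many
            return r + d  # undo the last subtraction

def highest_proper_divisor(n: int) -> int:
    """Try every candidate from n - 1 downwards; the first exact divisor is the largest."""
    for candidate in range(n - 1, 0, -1):
        if remainder_by_subtraction(n, candidate) == 0:
            return candidate
    raise ValueError("n must be at least 2")

print(highest_proper_divisor(2 ** 18))  # 131072
print(round(3.5e6 / (52 * 60)))         # 1122 operations per second, i.e. about 1.1 kIPS
```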

Manchester Mark 1

The experimental machine led to the development of the Manchester Mark 1 at the University of Manchester.[95] Work began in August 1948, and the first version was operational by April 1949; a program written to search for Mersenne primes ran error-free for nine hours on the night of 16/17 June 1949. The machine's successful operation was widely reported in the British press, which used the phrase "electronic brain" in describing it to its readers.

The computer is historically significant especially for its pioneering inclusion of index registers, an innovation that made it easier for a program to read sequentially through an array of words in memory. Thirty-four patents resulted from the machine's development, and many of the ideas behind its design were incorporated in subsequent commercial products such as the IBM 701 and 702 as well as the Ferranti Mark 1. The chief designers, Frederic C. Williams and Tom Kilburn, concluded from their experiences with the Mark 1 that computers would be used more in scientific roles than in pure mathematics. In 1951 they started development work on Meg, the Mark 1's successor, which would include a floating-point unit.
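An index register lets one unchanged instruction address successive array elements. The sketch below assumes a hypothetical machine with instructions `ADDX`, `INCB`, `JBLT` and `HALT`, whose effective address is the instruction's address field plus the index register `B`; it illustrates the idea rather than the Manchester Mark 1's actual order code. Without such a register, a program would have to rewrite the address field of its own add instruction on every pass through the loop.

```python
from typing import List, Tuple

Instruction = Tuple[str, int, int]  # (operation, operand, limit): hypothetical encoding

def run_indexed(program: List[Instruction], memory: List[int]) -> int:
    acc = 0  # accumulator
    b = 0    # index register
    pc = 0   # program counter
    while True:
        op, operand, limit = program[pc]
        pc += 1
        if op == "ADDX":    # add the word at (operand + index register)
            acc += memory[operand + b]
        elif op == "INCB":  # advance the index register
            b += operand
        elif op == "JBLT":  # jump back to `operand` while the index is below `limit`
            if b < limit:
                pc = operand
        elif op == "HALT":
            return acc
        else:
            raise ValueError(f"unknown operation {op!r}")

memory = [0, 0, 5, 7, 9, 0]
program = [
    ("ADDX", 2, 0),  # 0: acc += memory[2 + B]; this instruction is never modified
    ("INCB", 1, 0),  # 1: B += 1
    ("JBLT", 0, 3),  # 2: loop while B < 3 (the array length)
    ("HALT", 0, 0),  # 3: stop
]
print(run_indexed(program, memory))  # 21
```

The loop body runs at least once, so this sketch assumes an array of one or more words.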

EDSAC

The other contender for being the first recognizably modern digital stored-program computer[96] was the EDSAC,[97] designed and constructed by Maurice Wilkes and his team at the University of Cambridge Mathematical Laboratory in England in 1949. The machine was inspired by John von Neumann's seminal First Draft of a Report on the EDVAC and was one of the first usefully operational electronic digital stored-program computers.[98]

EDSAC ran its first programs on 6 May 1949, when it calculated a table of squares[99] and a list of prime numbers. The EDSAC also served as the basis for the first commercially applied computer, the LEO I, used by the food manufacturing company J. Lyons & Co. Ltd. EDSAC 1 was finally shut down on 11 July 1958, having been superseded by EDSAC 2, which stayed in use until 1965.[100]

The “brain” [computer] may one day come down to our level [of the common people] and help with our income-tax and book-keeping calculations. But this is speculation and there is no sign of it so far.

From the British newspaper The Star, in a June 1949 news article about the EDSAC computer, long before the era of personal computers.[101]

EDVAC

ENIAC inventors John Mauchly and J. Presper Eckert proposed the EDVAC's construction in August 1944, and design work for the EDVAC commenced at the University of Pennsylvania's Moore School of Electrical Engineering before the ENIAC was fully operational. The design implemented a number of important architectural and logical improvements conceived during the ENIAC's construction, as well as a high-speed serial-access memory.[102] However, Eckert and Mauchly left the project and its construction floundered. It was finally delivered to the U.S. Army's Ballistic Research Laboratory at the Aberdeen Proving Ground in August 1949, but due to a number of problems, the computer only began operation in 1951, and then only on a limited basis.

Commercial computers

The first commercial computer was the Ferranti Mark 1, built by Ferranti and delivered to the University of Manchester in February 1951. It was based on the Manchester Mark 1. The main improvements over the Manchester Mark 1 were in the size of the primary storage (using random-access Williams tubes) and secondary storage (using a magnetic drum), a faster multiplier, and additional instructions. The basic cycle time was 1.2 milliseconds, and a multiplication could be completed in about 2.16 milliseconds. The multiplier used almost a quarter of the machine's 4,050 vacuum tubes (valves).[103] A second machine was purchased by the University of Toronto before the design was revised into the Mark 1 Star. At least seven of these later machines were delivered between 1953 and 1957, one of them to Shell labs in Amsterdam.[104]

In October 1947, the directors of J. Lyons & Company, a British catering company famous for its teashops but with strong interests in new office management techniques, decided to take an active role in promoting the commercial development of computers. The LEO I computer became operational in April 1951[105] and ran the world's first regular routine office computer job.

On 17 November 1951, the J. Lyons company began weekly operation of a bakery valuations job on the LEO (Lyons Electronic Office). This was the first business application to go live on a stored-program computer.[106]

In June 1951, the UNIVAC I (Universal Automatic Computer) was delivered to the U.S. Census Bureau. Remington Rand eventually sold 46 machines at more than US$1 million each ($9.43 million as of 2018).[107]

UNIVAC was the first "mass-produced" computer. It used 5,200 vacuum tubes and consumed 125 kW of power. Its primary storage was serial-access mercury delay lines capable of storing 1,000 words of 11 decimal digits plus sign (72-bit words).

IBM introduced a smaller, more affordable computer in 1954 that proved very popular.[108] The IBM 650 weighed over 900 kg, and its attached power supply weighed around 1,350 kg; the two were held in separate cabinets of roughly 1.5 by 0.9 by 1.8 meters. It cost US$500,000[109] ($4.56 million as of 2018) or could be leased for US$3,500 a month ($30 thousand as of 2018).[107] Its drum memory was originally 2,000 ten-digit words, later expanded to 4,000 words. Memory limitations such as this were to dominate programming for decades afterward. The program instructions were fetched from the spinning drum as the code ran. Efficient execution from drum memory relied on a combination of hardware and software. In hardware, the instruction format included the address of the next instruction; in software, the Symbolic Optimal Assembly Program (SOAP)[110] assigned instructions to optimal addresses, to the extent possible by static analysis of the source program. Thus many instructions were located in the next row of the drum to be read just when they were needed, and no additional wait for drum rotation was required.
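The benefit of SOAP-style placement can be estimated with a simple rotational model. The sketch assumes a hypothetical drum track of 50 word positions, one word-time to read an instruction, and made-up execution times; it places only instructions, ignores operand fetches, and uses a greedy rule rather than SOAP's actual algorithm. Because each instruction names its successor, the successor can be put wherever the read head will be when execution finishes.

```python
from typing import List, Set

DRUM_WORDS = 50  # hypothetical number of word positions around one drum track

def rotational_wait(head: int, target: int, n: int = DRUM_WORDS) -> int:
    """Word-times until `target` rotates under the read head, now at `head`."""
    return (target - head) % n

def total_wait(addresses: List[int], exec_times: List[int], n: int = DRUM_WORDS) -> int:
    """Total waiting for a straight-line program. Reading the instruction at address a
    takes one word-time and executing it takes t word-times, so the head ends at a + 1 + t."""
    wait = 0
    for i in range(len(addresses) - 1):
        head = (addresses[i] + 1 + exec_times[i]) % n
        wait += rotational_wait(head, addresses[i + 1], n)
    return wait

def optimal_addresses(exec_times: List[int], n: int = DRUM_WORDS) -> List[int]:
    """Greedy placement: put each next instruction where the head will be,
    or at the first free position after that if the word is already taken."""
    if len(exec_times) > n:
        raise ValueError("program does not fit on one track")
    used: Set[int] = {0}
    addresses = [0]
    for t in exec_times[:-1]:
        candidate = (addresses[-1] + 1 + t) % n
        while candidate in used:
            candidate = (candidate + 1) % n
        used.add(candidate)
        addresses.append(candidate)
    return addresses

exec_times = [3, 5, 2, 4]  # hypothetical execution times, in word-times
print(total_wait([0, 1, 2, 3], exec_times))                   # sequential placement: 140
print(total_wait(optimal_addresses(exec_times), exec_times))  # greedy placement: 0
```

Placed sequentially, each instruction waits nearly a full revolution for its successor; with the greedy placement the wait in this example drops to zero.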

Microprogramming

In 1951, British scientist Maurice Wilkes developed the concept of microprogramming from the realisation that the central processing unit of a computer could be controlled by a miniature, highly specialised computer program in high-speed ROM. Microprogramming allows the base instruction set to be defined or extended by built-in programs (now called firmware or microcode).[111] This concept greatly simplified CPU development. Wilkes first described it at the University of Manchester Computer Inaugural Conference in 1951, then published it in expanded form in IEEE Spectrum in 1955.[citation needed]

Microprogramming was widely used in the CPUs and floating-point units of mainframe and other computers. It was implemented for the first time in EDSAC 2,[112] which also used multiple identical "bit slices" to simplify design: interchangeable, replaceable tube assemblies were used for each bit of the processor.[113]
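The idea of defining an instruction set by a stored table of micro-operations can be sketched as follows. Everything here is hypothetical (the register names, the micro-operations and the opcodes), and a Python dictionary stands in for the high-speed control store. The point is that `SUB` reuses the micro-operations of `ADD`, and that a new instruction is added by editing the table rather than the control circuitry.

```python
from typing import Callable, Dict, List

Registers = Dict[str, int]
MicroOp = Callable[[Registers], None]

# Hypothetical micro-operations: the only primitive steps the control "hardware" performs.
def copy_a_to_t(r: Registers) -> None:
    r["T"] = r["A"]

def copy_b_to_t(r: Registers) -> None:
    r["T"] = r["B"]

def negate_t(r: Registers) -> None:
    r["T"] = -r["T"]

def add_t_to_a(r: Registers) -> None:
    r["A"] += r["T"]

# Control store: each machine instruction is a stored sequence of micro-operations.
CONTROL_STORE: Dict[str, List[MicroOp]] = {
    "ADD": [copy_b_to_t, add_t_to_a],            # A <- A + B
    "SUB": [copy_b_to_t, negate_t, add_t_to_a],  # A <- A - B, reusing the same micro-ops
}

def execute(opcode: str, regs: Registers) -> Registers:
    """Run the microprogram that defines one machine instruction."""
    for micro_op in CONTROL_STORE[opcode]:
        micro_op(regs)
    return regs

# Extending the instruction set means adding a table entry, not new control circuitry.
CONTROL_STORE["DBL"] = [copy_a_to_t, add_t_to_a]  # A <- 2 * A

print(execute("SUB", {"A": 10, "B": 3, "T": 0})["A"])  # 7
print(execute("DBL", {"A": 21, "B": 0, "T": 0})["A"])  # 42
```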

Magnetic memory

Magnetic drum memories were developed for the US Navy during World War II, with the work continuing at Engineering Research Associates (ERA) in 1946 and 1947. ERA, then part of Univac, included a drum memory in its 1103, announced in February 1953. The first mass-produced computer, the IBM 650, also announced in 1953, had about 8.5 kilobytes of drum memory.

Magnetic-core memory was first demonstrated on the Whirlwind computer in August 1953.[114]

Commercialization followed quickly. Magnetic core was used in peripherals of the IBM 702 delivered in July 1955, and later in the 702 itself. The IBM 704 (1955) and the Ferranti Mercury (1957) used magnetic-core memory. Core memory went on to dominate the field through the mid-1970s.[115]

As late as 1980, PDP-11/45 machines using magnetic-core main memory and drums for swapping were still in use at many of the original UNIX sites.

Early digital computer characteristics

Transistor computers

The bipolar transistor was invented in 1947. From 1955 onwards, transistors replaced vacuum tubes in computer designs,[117] giving rise to the "second generation" of computers. Initially the only devices available were germanium point-contact transistors.[118]

Compared to vacuum tubes, transistors have many advantages: they are smaller and require less power, so they give off less heat. Silicon junction transistors were much more reliable than vacuum tubes and had a longer, effectively indefinite, service life. Transistorized computers could contain tens of thousands of binary logic circuits in a relatively compact space. Transistors greatly reduced computers' size, initial cost, and operating cost. Typically, second-generation computers were composed of large numbers of printed circuit boards such as the IBM Standard Modular System,[119] each carrying one to four logic gates or flip-flops.

At the University of Manchester, a team under the leadership of Tom Kilburn designed and built a machine using the newly developed transistors instead of valves. Initially the only devices available were germanium point-contact transistors, which were less reliable than the valves they replaced but consumed far less power. The team's first transistorized computer, and the first in the world, was operational by 1953,[121] and a second version was completed there in April 1955. The 1955 version used 200 transistors and 1,300 solid-state diodes, and had a power consumption of 150 watts. However, the machine did use valves to generate its 125 kHz clock waveforms and in the circuitry to read and write on its magnetic drum memory, so it was not the first completely transistorized computer.

That distinction goes to the Harwell CADET of 1955,[123] built by the electronics division of the Atomic Energy Research Establishment at Harwell. The design featured a 64-kilobyte magnetic drum memory store with multiple moving heads that had been designed at the National Physical Laboratory, UK. By 1953, this team had transistor circuits operating to read and write on a smaller magnetic drum from the Royal Radar Establishment. The machine used a low clock speed of only 58 kHz to avoid having to use any valves to generate the clock waveforms.[124][125]

CADET used 324 point-contact transistors provided by the UK company Standard Telephones and Cables; 76 junction transistors were used for the first-stage amplifiers for data read from the drum, since point-contact transistors were too noisy. From August 1956, CADET offered a regular computing service, during which it often executed continuous computing runs of 80 hours or more.[126][127] Problems with the reliability of early batches of point-contact and alloyed-junction transistors meant that the machine's mean time between failures was about 90 minutes, but this improved once the more reliable bipolar junction transistors became available.[128]

The design of the Manchester transistor computer was adopted by the local engineering firm Metropolitan-Vickers for its Metrovick 950, the first commercial transistor computer anywhere.[129]

Six Metrovick 950s were built, the first completed in 1956. They were successfully deployed within various departments of the company and were in use for about five years. A second-generation computer, the IBM 1401, captured about one third of the world market. IBM installed more than ten thousand 1401s between 1960 and 1964.

Transistorized peripherals

Transistorized electronics improved not only the CPU (central processing unit) but also the peripheral devices. Second-generation disk data storage units were able to store tens of millions of letters and digits. Alongside the fixed disk storage units, connected to the CPU via high-speed data transmission, were removable disk data storage units. A removable disk pack could be easily exchanged with another pack in a few seconds. Although removable disks had less capacity than fixed disks, their interchangeability guaranteed a nearly unlimited quantity of data close at hand. Magnetic tape provided archival capability for this data, at a lower cost than disk.

Many second-generation CPUs delegated peripheral device communications to a secondary processor. For example, while the communication processor controlled card reading and punching, the main CPU executed calculations and binary branch instructions. One data bus would carry data between the main CPU and core memory at the CPU's fetch-execute cycle rate, and other data buses would typically serve the peripheral devices. On the PDP-1, the core memory's cycle time was 5 microseconds; consequently, most arithmetic instructions took 10 microseconds (100,000 operations per second), because most operations took at least two memory cycles: one for the instruction and one for the operand data fetch.
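The PDP-1 timing above is simple arithmetic: when an instruction costs a whole number of memory cycles, its time is the cycle count multiplied by the cycle time. A minimal sketch, assuming memory access is the only cost (it ignores any other execution delays); the function name is hypothetical:

```python
def instruction_rate(memory_cycle_us: float, memory_cycles: int) -> float:
    """Instructions per second when each instruction costs a whole number of memory cycles."""
    instruction_time_us = memory_cycle_us * memory_cycles
    return 1_000_000 / instruction_time_us

# PDP-1 figures from the text: a 5 microsecond cycle, and two cycles per instruction
# (one to fetch the instruction, one to fetch its operand), giving 10 microseconds each.
print(instruction_rate(5.0, 2))  # 100000.0
```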

During the second generation, remote terminal units (often in the form of teleprinters such as the Friden Flexowriter) saw greatly increased use.[130]

Telephone connections provided sufficient speed for early remote terminals and allowed hundreds of kilometers of separation between remote terminals and the computing center. Eventually these stand-alone computer networks would be generalized into an interconnected network of networks: the Internet.[131]

Supercomputers

The University of Manchester Atlas in January 1963

The early 1960s saw the advent of supercomputing. The Atlas Computer was a joint development between the University of Manchester, Ferranti, and Plessey. It was first installed at the University of Manchester and officially commissioned in 1962 as one of the world's first supercomputers, considered to be the most powerful computer in the world at that time.[132] It was said that whenever Atlas went offline, half of the United Kingdom's computer capacity was lost.[133] It was a second-generation machine, using discrete germanium transistors. Atlas also pioneered the Atlas Supervisor, "considered by many to be the first recognisable modern operating system".[134]

In the US, Seymour Cray designed a series of computers at Control Data Corporation (CDC) that used innovative designs and parallelism to achieve superior peak computational performance.[135] The CDC 6600, released in 1964, is generally considered the first supercomputer.[136][137] The CDC 6600 outperformed the previous record holder, the IBM 7030 Stretch, by about a factor of three. With performance of about 1 megaFLOPS, the CDC 6600 was the world's fastest computer from 1964 to 1969, when it relinquished that status to its successor, the CDC 7600.

Integrated circuit

The next great advance in computing power came with the advent of the integrated circuit. The idea of the integrated circuit was conceived by Geoffrey W.A. Dummer, a radar scientist working for the Royal Radar Establishment of the Ministry of Defence. Dummer presented the first public description of an integrated circuit at the Symposium on Progress in Quality Electronic Components in Washington, D.C., on 7 May 1952:[138]

With the advent of the transistor and the work on semi-conductors generally, it now seems possible to envisage electronic equipment in a solid block with no connecting wires.[139] The block may consist of layers of insulating, conducting, rectifying and amplifying materials, the electronic functions being connected directly by cutting out areas of the various layers.

The first practical ICs were invented by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor.[140]

Kilby recorded his initial ideas concerning the integrated circuit in July 1958 and successfully demonstrated the first working integrated circuit on 12 September 1958.[141]

In his patent application of 6 February 1959, Kilby described his new device as “a body of semiconductor material ... wherein all the components of the electronic circuit are completely integrated.”[142] The first customer for the invention was the US Air Force.[143]

Noyce came up with his own idea of an integrated circuit half a year after Kilby.[144] His chip solved many practical problems that Kilby's had not. Produced at Fairchild Semiconductor, it was made of silicon, whereas Kilby's chip was made of germanium.

Post-1960 (integrated circuit based)

Intel 8742 eight-bit microcontroller IC

The explosion in the use of computers began with "third-generation" computers, making use of Jack St. Clair Kilby's and Robert Noyce's independent invention of the integrated circuit (or microchip). This led to the invention of the microprocessor.

Although exactly which device was the first microprocessor is contentious, partly because of a lack of agreement on the exact definition of the term "microprocessor", it is largely undisputed that the first single-chip microprocessor was the Intel 4004,[145] designed and realized by Ted Hoff, Federico Faggin, and Stanley Mazor at Intel.[146]

While the earliest microprocessor ICs literally contained only the processor, i.e. the central processing unit, of a computer, their progressive development naturally led to chips containing most or all of the internal electronic parts of a computer. The Intel 8742 pictured in this section, for example, is an 8-bit microcontroller that includes a CPU running at 12 MHz, 128 bytes of RAM, 2,048 bytes of EPROM, and I/O on the same chip.

During the 1960s there was considerable overlap between second- and third-generation technologies.[147] IBM implemented its IBM Solid Logic Technology modules in hybrid circuits for the IBM System/360 in 1964. As late as 1975, Sperry Univac continued to manufacture second-generation machines such as the UNIVAC 494. The Burroughs large systems, such as the B5000, were stack machines, which allowed for simpler programming. These pushdown automata were later also implemented in minicomputers and microprocessors, influencing programming language design.

Minicomputers served as low-cost computer centers for industry, business and universities.[148] It became possible to simulate analog circuits on minicomputers with SPICE (Simulation Program with Integrated Circuit Emphasis, 1971), one of the programs for electronic design automation (EDA). The microprocessor led to the development of microcomputers: small, low-cost computers that could be owned by individuals and small businesses. Microcomputers, the first of which appeared in the 1970s, became ubiquitous in the 1980s and beyond.
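Why stack machines such as the Burroughs B5000 simplify programming can be seen in a small evaluator. The sketch assumes a hypothetical zero-address instruction set in which integers are pushes and `ADD`, `SUB` and `MUL` pop two operands and push the result; it is not the B5000's actual instruction set. An expression compiles directly to postfix order, and no registers have to be allocated.

```python
from typing import List, Union

Token = Union[int, str]

def run_stack_program(program: List[Token]) -> int:
    """Evaluate a zero-address program: operands are pushed, operators pop their inputs."""
    stack: List[int] = []
    for token in program:
        if isinstance(token, int):
            stack.append(token)  # PUSH a literal
            continue
        right = stack.pop()      # operands come off in reverse order
        left = stack.pop()
        if token == "ADD":
            stack.append(left + right)
        elif token == "SUB":
            stack.append(left - right)
        elif token == "MUL":
            stack.append(left * right)
        else:
            raise ValueError(f"unknown operator {token!r}")
    if len(stack) != 1:
        raise ValueError("program did not leave exactly one result")
    return stack[0]

# (2 + 3) * (7 - 4) in postfix order.
print(run_stack_program([2, 3, "ADD", 7, 4, "SUB", "MUL"]))  # 15
```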

Which system should be considered the first microcomputer is a matter of debate, as several unique hobbyist systems were developed based on the Intel 4004 and its successor, the Intel 8008. The first commercially available microcomputer kit, however, was the Intel 8080-based Altair 8800, announced in the January 1975 cover article of Popular Electronics. In its initial form it was an extremely limited system, with only 256 bytes of DRAM and no input-output except its toggle switches and LED register display. Despite this, it was surprisingly popular, with several hundred sales in the first year, and demand rapidly outstripped supply. Several early third-party vendors, such as Cromemco and Processor Technology, soon began supplying additional S-100 bus hardware for the Altair 8800.

In April 1975 at the Hannover Fair, Olivetti presented the P6060, the world's first complete, pre-assembled personal computer system. The central processing unit consisted of two cards, code-named PUCE1 and PUCE2, and, unlike most other personal computers, was built with TTL components rather than a microprocessor. It had one or two 8" floppy disk drives, a 32-character plasma display, an 80-column graphical thermal printer, 48 KB of RAM, and the BASIC language. It weighed 40 kg (88 lb). As a complete system, this was a significant step beyond the Altair, though it never achieved the same success. It was in competition with a similar product by IBM that had an external floppy disk drive.

From 1975 to 1977, most microcomputers, such as the MOS Technology KIM-1, the Altair 8800, and some versions of the Apple I, were sold as kits for do-it-yourselfers. Pre-assembled systems did not gain much ground until 1977, with the introduction of the Apple II, the Tandy TRS-80, the first SWTPC computers, and the Commodore PET.

Computing has evolved around microcomputer architectures, which have adopted features from their larger brethren and are now dominant in most market segments.

A NeXT Computer and its object-oriented development tools and libraries were used by Tim Berners-Lee and Robert Cailliau at CERN to develop the world's first web server software, CERN httpd, and to write the first web browser, WorldWideWeb. These facts, along with its close association with Steve Jobs, secure the 68030 NeXT a place in history as one of the most significant computers of all time.[citation needed]

Systems as complicated as computers require very high reliability. ENIAC remained in continuous operation from 1947 to 1955, eight years, before being shut down. Although a vacuum tube might fail, it could be replaced without bringing down the system. By the simple strategy of never shutting down ENIAC, failures were dramatically reduced. The vacuum-tube SAGE air-defense computers became remarkably reliable: they were installed in pairs with one off-line, and tubes likely to fail did so when the computer was intentionally run at reduced power to find them. Hot-pluggable hard disks, like the hot-pluggable vacuum tubes of yesteryear, continue the tradition of repair during continuous operation. Semiconductor memories routinely operate without errors, although operating systems like Unix have employed memory tests on start-up to detect failing hardware. Today, the requirement of reliable performance is made even more stringent when server farms are the delivery platform.[149]
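A start-up memory test of the kind mentioned above writes known patterns and reads them back. The sketch uses a walking-ones pattern, one common choice though not necessarily what any particular Unix used, against a simulated 8-bit memory with a hypothetical stuck-at-0 bit; the class and function names are illustrative only.

```python
from typing import List, Tuple

class SimulatedRAM:
    """8-bit memory; optionally one bit of one cell is stuck at 0 (a hypothetical fault)."""

    def __init__(self, size: int, bad_address: int = -1, stuck_bit: int = 0) -> None:
        self.cells = [0] * size
        self.bad_address = bad_address
        self.stuck_mask = 0xFF & ~(1 << stuck_bit)

    def write(self, address: int, value: int) -> None:
        value &= 0xFF
        if address == self.bad_address:
            value &= self.stuck_mask  # the faulty bit cannot store a 1
        self.cells[address] = value

    def read(self, address: int) -> int:
        return self.cells[address]

def walking_ones_test(ram: SimulatedRAM, size: int, width: int = 8) -> List[Tuple[int, int]]:
    """Write each single-bit pattern to every cell, read it back, and report mismatches."""
    failures = []
    for bit in range(width):
        pattern = 1 << bit
        for address in range(size):
            ram.write(address, pattern)
        for address in range(size):
            if ram.read(address) != pattern:
                failures.append((address, pattern))
    return failures

print(walking_ones_test(SimulatedRAM(16), 16))                              # []
print(walking_ones_test(SimulatedRAM(16, bad_address=5, stuck_bit=3), 16))  # [(5, 8)]
```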

Google has managed this by using fault-tolerant software to recover from hardware failures, and is even working on the concept of replacing entire server farms on the fly, during a service event.[150][151]

In the 21st century, multi-core CPUs became commercially available.[152] Content-addressable memory (CAM)[153] has become inexpensive enough to be used in networking and is frequently used for on-chip cache memory in modern microprocessors, although no computer system has yet implemented hardware CAMs for use in programming languages. Currently, CAMs (or associative arrays) in software are programming-language-specific.

Semiconductor memory cell arrays are very regular structures, and manufacturers prove their processes on them; this allows price reductions on memory products. During the 1980s, CMOS logic gates developed into devices that could be made as fast as other circuit types, so computer power consumption could be decreased dramatically. Unlike a gate based on other logic types, which draws current continuously, a CMOS gate draws significant current only during the transition between logic states, apart from leakage.
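The difference between ordinary and content-addressable memory described in this passage is the direction of lookup: RAM maps an address to a word, while a CAM maps a word to the addresses holding it. The toy class below is a sketch under that definition; real CAM hardware compares all entries at once, which the Python loop only models, and the class and method names are hypothetical.

```python
from typing import List, Optional

class ContentAddressableMemory:
    """Toy CAM: besides RAM-style reads by address, it can search by content."""

    def __init__(self, size: int) -> None:
        self.words: List[Optional[int]] = [None] * size

    def write(self, address: int, word: int) -> None:
        self.words[address] = word

    def read(self, address: int) -> Optional[int]:
        """Ordinary RAM-style lookup: address in, word out."""
        return self.words[address]

    def search(self, word: int) -> List[int]:
        """CAM-style lookup: word in, every matching address out.
        Hardware compares all entries in parallel; this loop only models the result."""
        return [address for address, stored in enumerate(self.words) if stored == word]

cam = ContentAddressableMemory(4)
cam.write(0, 0xBEEF)
cam.write(2, 0xCAFE)
cam.write(3, 0xBEEF)
print(cam.search(0xBEEF))  # [0, 3]
```

In software, the closest everyday analogue is an associative array, such as a Python `dict`, whose form and behavior are specific to each programming language.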

This has allowed computing to become a commodity that is now ubiquitous, embedded in many forms, from greeting cards and telephones to satellites. The thermal design power, the heat dissipated during operation, has become as important a design consideration as operating speed. In 2006, servers consumed 1.5% of the total energy budget of the U.S.[154] The energy consumption of computer data centers was expected to double to 3% of world consumption by 2011. The SoC (system on a chip) has compressed even more of the integrated circuitry into a single chip; SoCs are enabling phones and PCs to converge into single hand-held wireless mobile devices.[155]

MIT Technology Review reported on 10 November 2017 that IBM had created a 50-qubit computer whose quantum state lasted 50 microseconds.[156] See: Quantum supremacy[157]

Computing hardware and its software have even become a metaphor for the operation of the universe.[158]

Epilogue

An indication of the rapidity of development of this field can be inferred from the history of the seminal 1947 article by Burks, Goldstine and von Neumann.[159] By the time anything could be written down, it was obsolete. After 1945, others read John von Neumann's First Draft of a Report on the EDVAC and immediately started implementing their own systems. To this day, the rapid pace of development has continued worldwide.[160][161][162]

A 1966 article in Time predicted: "By 2000, the machines will be producing so much that everyone in the U.S. will, in effect, be independently wealthy. How to use leisure time will be a major problem."[163]