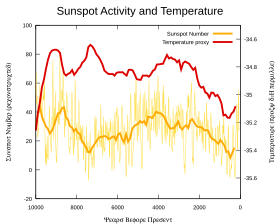

Patterns of solar irradiance and solar variation have been a main driver of climate change over the millions to billions of years of the geologic time scale, but their role in recent warming is insignificant. Evidence that this is the case comes from analysis on many timescales and from many sources, including direct observations, composites from baskets of different proxy observations, and numerical climate models. On millennial timescales, paleoclimate indicators have been compared to cosmogenic isotope abundances, as the latter are a proxy for solar activity. These have also been used on century timescales, where instrumental data are increasingly available as well (mainly telescopic observations of sunspots and thermometer measurements of air temperature). These data show, for example, that the temperature fluctuations do not match the solar activity variations, and that the commonly invoked association of the Little Ice Age with the Maunder minimum is far too simplistic: although solar variations may have played a minor role, a much bigger factor is known to be Little Ice Age volcanism. In recent decades, observations of unprecedented accuracy, sensitivity and scope (of both solar activity and terrestrial climate) have become available from spacecraft and show unequivocally that recent global warming is not caused by changes in the Sun.

Geologic time

Earth formed around 4.54 billion years ago by accretion from the solar nebula. Volcanic outgassing probably created the primordial atmosphere, which contained almost no oxygen and would have been toxic to humans and most modern life. Much of the Earth was molten because of frequent collisions with other bodies which led to extreme volcanism. Over time, the planet cooled and formed a solid crust, eventually allowing liquid water to exist on the surface.

Three to four billion years ago the Sun emitted only 70% of its current power. Under the present atmospheric composition, this past solar luminosity would have been insufficient to prevent water from uniformly freezing. There is nonetheless evidence that liquid water was already present in the Hadean and Archean eons, leading to what is known as the faint young Sun paradox. Hypothesized solutions to this paradox include a vastly different atmosphere, with much higher concentrations of greenhouse gases than currently exist.
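As a rough illustration of why a Sun at 70% of its current power poses a problem, the sketch below computes the effective (radiative-equilibrium) temperature of a planet with no greenhouse effect. The present-day solar constant of about 1361 W/m² and the albedo of 0.3 are assumed values that do not come from the text above, and holding the albedo fixed over geologic time is a simplification.

```python
# Effective temperature of a planet in radiative equilibrium:
#   T_eff = (S * (1 - A) / (4 * sigma)) ** 0.25
# S is the solar constant, A the planetary albedo, sigma the
# Stefan-Boltzmann constant.

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
S_NOW = 1361.0           # assumed present-day solar constant, W m^-2
ALBEDO = 0.3             # assumed planetary albedo, held fixed


def effective_temperature(solar_constant, albedo=ALBEDO):
    """Return the radiative-equilibrium temperature in kelvin."""
    absorbed = solar_constant * (1.0 - albedo) / 4.0  # global-mean absorbed flux
    return (absorbed / SIGMA) ** 0.25


t_now = effective_temperature(S_NOW)
t_young = effective_temperature(0.70 * S_NOW)  # Sun at 70% of current power

print(f"Effective temperature today:        {t_now:.1f} K")
print(f"Effective temperature at 70% power: {t_young:.1f} K")
print(f"Difference:                         {t_now - t_young:.1f} K")
```

Under these assumptions the effective temperature falls by a little over 20 K. Because temperature scales with the fourth root of the absorbed flux, a 30% drop in solar power gives a temperature drop of only about 9%, yet that is still enough to push a planet with today's greenhouse effect below the freezing point of water.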

Over the following approximately 4 billion years, the Sun's energy output increased and the composition of the Earth's atmosphere changed. The Great Oxygenation Event around 2.4 billion years ago was the most notable alteration of the atmosphere. Over the next five billion years, the Sun's ultimate death, as it becomes a very bright red giant and then a very faint white dwarf, will have dramatic effects on climate, with the red giant phase itself likely ending any life on Earth.

Measurement

Since 1978, solar irradiance has been directly measured by satellites with very good accuracy. These measurements indicate that the Sun's total solar irradiance fluctuates by ±0.1% over the ~11 years of the solar cycle, but that its average value has been stable since the measurements started in 1978. Solar irradiance before the 1970s is estimated using proxy variables, such as tree rings, the number of sunspots, and the abundances of cosmogenic isotopes such as 10Be, all of which are calibrated to the post-1978 direct measurements.
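To give a sense of scale, a fractional change in total solar irradiance can be converted into a global-mean radiative forcing by spreading it over the sphere (a factor of 1/4) and discounting the reflected part. The sketch below is illustrative only: the solar constant of 1361 W/m² and the albedo of 0.3 are assumed values, and the function name is hypothetical.

```python
S0 = 1361.0    # assumed mean total solar irradiance, W m^-2
ALBEDO = 0.3   # assumed planetary albedo


def forcing_from_tsi_change(fractional_change, s0=S0, albedo=ALBEDO):
    """Global-mean radiative forcing (W m^-2) for a fractional TSI change.

    The factor 1/4 is the ratio of Earth's cross-section to its surface
    area; (1 - albedo) removes the reflected share.
    """
    return fractional_change * s0 * (1.0 - albedo) / 4.0


# A 0.1% change in TSI, the order of the solar-cycle fluctuation
delta_f = forcing_from_tsi_change(0.001)
print(f"Forcing for a 0.1% TSI change: {delta_f:.2f} W/m^2")
```

With these inputs a 0.1% change in irradiance corresponds to a forcing of roughly a quarter of a watt per square metre.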

Solar activity has been on a declining trend since the 1960s, as indicated by solar cycles 19-24, in which the maximum number of sunspots was 201, 111, 165, 159, 121 and 82, respectively. In the three decades following 1978, the combination of solar and volcanic activity is estimated to have had a slight cooling influence. A 2010 study found that the composition of solar radiation might have changed slightly, with an increase of ultraviolet radiation and a decrease in other wavelengths.
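The declining trend in the cycle maxima quoted above can be checked with an ordinary least-squares fit. This is a minimal sketch using only the six values listed in the text. With so few points the slope is only a crude summary, and the helper name is hypothetical.

```python
# Sunspot maxima for solar cycles 19-24, as listed in the text
cycles = [19, 20, 21, 22, 23, 24]
maxima = [201, 111, 165, 159, 121, 82]


def least_squares_slope(xs, ys):
    """Slope of the ordinary least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


slope = least_squares_slope(cycles, maxima)
print(f"Trend in cycle maximum: {slope:.1f} sunspots per cycle")
```

The fitted slope is negative, about 16 fewer sunspots at maximum per cycle, even though cycle 20 sits well below its neighbours.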

Modern era

In the modern era, the Sun has operated within a sufficiently narrow band that climate has been little affected. Models indicate that the combination of solar variations and volcanic activity can explain periods of relative warmth and cold between A.D. 1000 and 1900.

The Holocene

Numerous paleoenvironmental reconstructions have looked for relationships between solar variability and climate. Studies of Arctic paleoclimate, in particular, have linked total solar irradiance variations and climate variability. A 2001 paper identified a ~1500 year solar cycle that was a significant influence on North Atlantic climate throughout the Holocene.

Little Ice Age

One historical long-term correlation between solar activity and climate change is the 1645–1715 Maunder minimum, a period of little or no sunspot activity which partially overlapped the "Little Ice Age" during which cold weather prevailed in Europe. The Little Ice Age encompassed roughly the 16th to the 19th centuries. Whether the low solar activity or other factors caused the cooling is debated.

The Spörer Minimum between 1460 and 1550 was matched to a significant cooling period.

A 2012 paper instead linked the Little Ice Age to volcanism, through an "unusual 50-year-long episode with four large sulfur-rich explosive eruptions," and claimed "large changes in solar irradiance are not required" to explain the phenomenon.

A 2010 paper suggested that a new 90-year period of low solar activity would reduce global average temperatures by about 0.3 °C, which would be far from enough to offset the increased forcing from greenhouse gases.

Fossil fuel era

The link between recent solar activity and climate has been quantified, and solar activity is not a major driver of the warming that has occurred since early in the twentieth century. Human-induced forcings are needed to reproduce the late-20th century warming. Some studies associate solar cycle-driven irradiation increases with part of twentieth century warming.

Three mechanisms are proposed by which solar activity affects climate:

- Solar irradiance changes directly affecting the climate ("radiative forcing"). This is generally considered to be a minor effect, as the measured amplitudes of the variations are too small to have significant effect, absent some amplification process.

- Variations in the ultraviolet component. The UV component varies by more than the total, so if UV were for some (as yet unknown) reason to have a disproportionate effect, this might explain a larger solar signal.

- Effects mediated by changes in galactic cosmic rays (which are affected by the solar wind) such as changes in cloud cover.

Climate models have been unable to reproduce the rapid warming observed in recent decades when they only consider variations in total solar irradiance and volcanic activity. Hegerl et al. (2007) concluded that greenhouse gas forcing had "very likely" caused most of the observed global warming since the mid-20th century. In making this conclusion, they allowed for the possibility that climate models had been underestimating the effect of solar forcing.

Another line of evidence comes from looking at how temperatures at different levels in the Earth's atmosphere have changed. Models and observations show that greenhouse gases result in warming of the troposphere but cooling of the stratosphere. Depletion of the ozone layer by chemical refrigerants has also produced a stratospheric cooling effect. If the Sun were responsible for the observed warming, warming of the troposphere at the surface and warming at the top of the stratosphere would be expected, as the increased solar activity would replenish ozone and oxides of nitrogen.

Lines of evidence

The assessment of the solar activity/climate relationship involves multiple, independent lines of evidence.

Sunspots

Early research attempted to find a correlation between weather and sunspot activity, mostly without notable success. Later research has concentrated more on correlating solar activity with global temperature.

Irradiation

Accurate measurement of solar forcing is crucial to understanding possible solar impact on terrestrial climate. Accurate measurements only became available during the satellite era, starting in the late 1970s, and even these are open to some residual disputes: different teams find different values, due to different methods of cross-calibrating measurements taken by instruments with different spectral sensitivity. Scafetta and Willson argue for significant variations of solar luminosity between 1980 and 2000, but Lockwood and Fröhlich find that solar forcing declined after 1987.

The 2001 Intergovernmental Panel on Climate Change (IPCC) Third Assessment Report (TAR) concluded that the measured impact of recent solar variation is much smaller than the amplification effect due to greenhouse gases, but acknowledged that scientific understanding is poor with respect to solar variation.

Estimates of long-term solar irradiance changes have decreased since the TAR. However, empirical results of detectable tropospheric changes have strengthened the evidence for solar forcing of climate change. The most likely mechanism is considered to be some combination of direct forcing by TSI changes and indirect effects of ultraviolet (UV) radiation on the stratosphere. Least certain are indirect effects induced by galactic cosmic rays.

In 2002, Lean et al. stated that while "There is ... growing empirical evidence for the Sun's role in climate change on multiple time scales including the 11-year cycle", "changes in terrestrial proxies of solar activity (such as the 14C and 10Be cosmogenic isotopes and the aa geomagnetic index) can occur in the absence of long-term (i.e., secular) solar irradiance changes ... because the stochastic response increases with the cycle amplitude, not because there is an actual secular irradiance change." They conclude that because of this, "long-term climate change may appear to track the amplitude of the solar activity cycles," but that "Solar radiative forcing of climate is reduced by a factor of 5 when the background component is omitted from historical reconstructions of total solar irradiance ...This suggests that general circulation model (GCM) simulations of twentieth century warming may overestimate the role of solar irradiance variability." A 2006 review suggested that solar brightness had relatively little effect on global climate, with little likelihood of significant shifts in solar output over long periods of time. Lockwood and Fröhlich, 2007, found "considerable evidence for solar influence on the Earth's pre-industrial climate and the Sun may well have been a factor in post-industrial climate change in the first half of the last century", but that "over the past 20 years, all the trends in the Sun that could have had an influence on the Earth's climate have been in the opposite direction to that required to explain the observed rise in global mean temperatures." In a study that considered geomagnetic activity as a measure of known solar-terrestrial interaction, Love et al. found a statistically significant correlation between sunspots and geomagnetic activity, but not between global surface temperature and either sunspot number or geomagnetic activity.

Benestad and Schmidt concluded that "the most likely contribution from solar forcing a global warming is 7 ± 1% for the 20th century and is negligible for warming since 1980." This paper disagreed with Scafetta and West, who claimed that solar variability has a significant effect on climate forcing. Based on correlations between specific climate and solar forcing reconstructions, Scafetta and West argued that a "realistic climate scenario is the one described by a large preindustrial secular variability (e.g., the paleoclimate temperature reconstruction by Moberg et al.) with TSI experiencing low secular variability (as the one shown by Wang et al.)." Under this scenario, they claimed the Sun might have contributed 50% of the observed global warming since 1900. Stott et al. estimated that the residual effects of the prolonged high solar activity during the last 30 years account for between 16% and 36% of warming from 1950 to 1999.

Direct measurement and time series

Neither direct measurements nor proxies of solar variation correlate well with Earth global temperature, particularly in recent decades when both quantities are best known.

The oppositely directed trends highlighted by Lockwood and Fröhlich in 2007, with global mean temperatures continuing to rise while solar activity fell, have continued and become even more pronounced since then. In 2007 the difference in the trends was apparent after about 1987, and that difference has grown and accelerated in subsequent years. The updated figure (right) shows the variations and contrasts solar cycles 14 and 24, a century apart, which are quite similar in all solar activity measures (in fact cycle 24 is slightly less active than cycle 14 on average), yet the global mean surface air temperature is more than 1 degree Celsius higher for cycle 24 than for cycle 14, showing that the rise is not associated with solar activity. The total solar irradiance (TSI) panel shows the PMOD composite of observations with a modelled variation from the SATIRE-T2 model of the effect of sunspots and faculae, with the addition of a quiet-Sun variation (due to sub-resolution photospheric features and any solar radius changes) derived from correlations with cosmic ray fluxes and cosmogenic isotopes. The finding that solar activity was approximately the same in cycles 14 and 24 applies to all solar outputs that have, in the past, been proposed as a potential cause of terrestrial climate change, including total solar irradiance, cosmic ray fluxes, spectral UV irradiance, solar wind speed and/or density, the heliospheric magnetic field and its distribution of orientations, and the consequent level of geomagnetic activity.

Daytime/nighttime

Global average diurnal temperature range has decreased. Daytime temperatures have not risen as fast as nighttime temperatures. This is the opposite of the expected warming if solar energy (falling primarily or wholly during daylight, depending on energy regime) were the principal means of forcing. It is, however, the expected pattern if greenhouse gases were preventing radiative escape, which is more prevalent at night.

Hemisphere and latitude

The Northern Hemisphere is warming faster than the Southern Hemisphere. This is the opposite of the expected pattern if the Sun, currently closer to the Earth during austral summer, were the principal climate forcing. In particular, the Southern Hemisphere, with more ocean area and less land area, has a lower albedo ("whiteness") and absorbs more light. The Northern Hemisphere, however, has higher population, industry and emissions.
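The size of the seasonal asymmetry from the Earth-Sun distance can be estimated with the inverse-square law. The sketch below assumes an orbital eccentricity of about 0.0167, a value not given in the text, and compares the sunlight reaching Earth at its closest and farthest points from the Sun. The function name is hypothetical.

```python
ECCENTRICITY = 0.0167  # assumed present-day orbital eccentricity of Earth


def perihelion_to_aphelion_flux_ratio(e=ECCENTRICITY):
    """Ratio of incoming solar flux at perihelion to that at aphelion.

    Distances are a*(1 - e) at perihelion and a*(1 + e) at aphelion,
    and flux scales as 1 / distance**2, so the semi-major axis cancels.
    """
    return ((1.0 + e) / (1.0 - e)) ** 2


ratio = perihelion_to_aphelion_flux_ratio()
print(f"Flux at perihelion exceeds flux at aphelion by {(ratio - 1) * 100:.1f}%")
```

Under this assumption Earth receives close to 7% more sunlight at perihelion than at aphelion, which is the extra input that falls during the austral summer.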

Furthermore, the Arctic region is warming faster than the Antarctic and faster than northern mid-latitudes and subtropics, despite polar regions receiving less sun than lower latitudes.

Altitude

Solar forcing should warm Earth's atmosphere roughly evenly by altitude, with some variation by wavelength/energy regime. However, the atmosphere is warming at lower altitudes while cooling higher up. This is the expected pattern if greenhouse gases drive temperature, as on Venus.

Solar variation theory

A 1994 study of the US National Research Council concluded that TSI variations were the most likely cause of significant climate change in the pre-industrial era, before significant human-generated carbon dioxide entered the atmosphere.

Scafetta and West correlated solar proxy data and lower tropospheric temperature for the preindustrial era, before significant anthropogenic greenhouse forcing, suggesting that TSI variations may have contributed 50% of the warming observed between 1900 and 2000 (although they conclude "our estimates about the solar effect on climate might be overestimated and should be considered as an upper limit.") If interpreted as a detection rather than an upper limit, this would contrast with global climate models predicting that solar forcing of climate through direct radiative forcing makes an insignificant contribution.

In 2000, Stott and others reported on the most comprehensive model simulations of 20th century climate to that date. Their study looked at both "natural forcing agents" (solar variations and volcanic emissions) as well as "anthropogenic forcing" (greenhouse gases and sulphate aerosols). They found that "solar effects may have contributed significantly to the warming in the first half of the century although this result is dependent on the reconstruction of total solar irradiance that is used. In the latter half of the century, we find that anthropogenic increases in greenhouses gases are largely responsible for the observed warming, balanced by some cooling due to anthropogenic sulphate aerosols, with no evidence for significant solar effects." Stott's group found that combining these factors enabled them to closely simulate global temperature changes throughout the 20th century. They predicted that continued greenhouse gas emissions would cause additional future temperature increases "at a rate similar to that observed in recent decades". In addition, the study notes "uncertainties in historical forcing" — in other words, past natural forcing may still be having a delayed warming effect, most likely due to the oceans.

Stott's 2003 work largely revised his assessment, and found a significant solar contribution to recent warming, although still smaller (between 16 and 36%) than that of greenhouse gases.

A 2004 study based on sunspot activity concluded that solar activity affects the climate, yet plays only a small role in the current global warming.

Correlations to solar cycle length

In 1991, Friis-Christensen and Lassen claimed a strong correlation of the length of the solar cycle with northern hemispheric temperature changes. They initially used sunspot and temperature measurements from 1861 to 1989 and later extended the period using four centuries of climate records. Their reported relationship appeared to account for nearly 80 per cent of measured temperature changes over this period. The mechanism behind these claimed correlations was a matter of speculation.

In a 2003 paper Laut identified problems with some of these correlation analyses. Damon and Laut claimed:

the apparent strong correlations displayed on these graphs have been obtained by incorrect handling of the physical data. The graphs are still widely referred to in the literature, and their misleading character has not yet been generally recognized.

Damon and Laut stated that when the graphs are corrected for filtering errors, the sensational agreement with the recent global warming, which drew worldwide attention, totally disappeared.

In 2000, Lassen and Thejll updated their 1991 research and concluded that while the solar cycle accounted for about half the temperature rise since 1900, it failed to explain a rise of 0.4 °C since 1980. Benestad's 2005 review found that the solar cycle did not follow Earth's global mean surface temperature.

Weather

Solar activity may also impact regional climates, such as for the rivers Paraná and Po. Measurements from NASA's Solar Radiation and Climate Experiment show that solar UV output is more variable than total solar irradiance. Climate modelling suggests that low solar activity may result in, for example, colder winters in the US and northern Europe and milder winters in Canada and southern Europe, with little change in global averages. More broadly, links have been suggested between solar cycles, global climate and regional events such as El Niño. Hancock and Yarger found "statistically significant relationships between the double [~21-year] sunspot cycle and the 'January thaw' phenomenon along the East Coast and between the double sunspot cycle and 'drought' (June temperature and precipitation) in the Midwest."

Cloud condensation

Recent research at CERN's CLOUD facility examined links between cosmic rays and cloud condensation nuclei, demonstrating the effect of high-energy particulate radiation in nucleating aerosol particles that are precursors to cloud condensation nuclei. Kirkby (CLOUD team leader) said, "At the moment, it [the experiment] actually says nothing about a possible cosmic-ray effect on clouds and climate." After further investigation, the team concluded that "variations in cosmic ray intensity do not appreciably affect climate through nucleation."

Global low cloud formation data for 1983–1994 from the International Satellite Cloud Climatology Project (ISCCP) were highly correlated with galactic cosmic ray (GCR) flux; subsequent to this period, the correlation broke down. Changes of 3–4% in cloudiness and concurrent changes in cloud top temperatures correlated to the 11- and 22-year solar (sunspot) cycles, with increased GCR levels during "antiparallel" cycles. Global average cloud cover change was measured at 1.5–2%. Several GCR and cloud cover studies found positive correlation at latitudes greater than 50° and negative correlation at lower latitudes. However, not all scientists accept this correlation as statistically significant, and some who do attribute it to other solar variability (e.g. UV or total irradiance variations) rather than directly to GCR changes. Difficulties in interpreting such correlations include the fact that many aspects of solar variability change at similar times, and some climate systems have delayed responses.

Historical perspective

Physicist and historian Spencer R. Weart in The Discovery of Global Warming (2003) wrote:

The study of [sun spot] cycles was generally popular through the first half of the century. Governments had collected a lot of weather data to play with and inevitably people found correlations between sun spot cycles and select weather patterns. If rainfall in England didn't fit the cycle, maybe storminess in New England would. Respected scientists and enthusiastic amateurs insisted they had found patterns reliable enough to make predictions. Sooner or later though every prediction failed. An example was a highly credible forecast of a dry spell in Africa during the sunspot minimum of the early 1930s. When the period turned out to be wet, a meteorologist later recalled "the subject of sunspots and weather relationships fell into dispute, especially among British meteorologists who witnessed the discomfiture of some of their most respected superiors." Even in the 1960s he said, "For a young [climate] researcher to entertain any statement of sun-weather relationships was to brand oneself a crank."