| Author | Terrence W. Deacon |
|---|---|
| Country | United States |
| Language | English |
| Subject | Science |
| Published | W. W. Norton & Company; 1 edition (November 21, 2011) |
| Media type | |
| Pages | 670 |
| ISBN | 978-0393049916 |
| OCLC | 601107605 |
| Dewey Decimal | 612.8/2 |

Incomplete Nature: How Mind Emerged from Matter is a 2011 book by biological anthropologist Terrence Deacon. The book covers topics in biosemiotics, philosophy of mind, and the origins of life. Broadly, the book seeks to naturalistically explain "aboutness", that is, concepts like intentionality, meaning, normativity, purpose, and function, which Deacon groups together and labels ententional phenomena.

Core Ideas

Constraints

A central thesis of the book is that absence can still be efficacious. Deacon claims that just as the concept of zero revolutionized mathematics, thinking about life, mind, and other ententional phenomena in terms of constraints (i.e. what is absent) can similarly help us overcome the artificial dichotomy of the mind-body problem. A good example of this concept is the hole that defines the hub of a wagon wheel. The hole itself is not a physical thing, but rather a source of constraint that restricts the conformational possibilities of the wheel's components, such that, on a global scale, the property of rolling emerges. The constraints that produce emergent phenomena may not be understandable by examining the make-up of a pattern's constituents. Emergent phenomena are difficult to study because their complexity does not necessarily decompose into parts. When a pattern is broken down, the constraints are no longer at work; there is no hole, no absence to notice. Imagine a hub, a hole for an axle, that is produced only while the wheel is rolling; breaking the wheel apart may not show how the hub emerges.

Orthograde and contragrade

Deacon notes that the apparent patterns of causality exhibited by living systems seem to be in some ways the inverse of the causal patterns of non-living systems.[citation needed] In an attempt to find a solution to the philosophical problems associated with teleological explanations, Deacon returns to Aristotle's four causes and attempts to modernize them with thermodynamic concepts.

Orthograde changes are caused internally. They are spontaneous changes. That is, orthograde changes are generated by the spontaneous elimination of asymmetries in a thermodynamic system in disequilibrium. Because orthograde changes are driven by the internal geometry of a changing system, orthograde causes can be seen as analogous to Aristotle's formal cause. More loosely, Aristotle's final cause can also be considered orthograde, because goal oriented actions are caused from within.[3]

Contragrade changes are imposed from the outside. They are non-spontaneous changes. Contragrade change is induced when one thermodynamic system interacts with the orthograde changes of another thermodynamic system. The interaction drives the first system into a higher energy, more asymmetrical state. Contragrade changes do work. Because contragrade changes are driven by external interactions with another changing system, contragrade causes can be seen as analogous to Aristotle's efficient cause.[4]

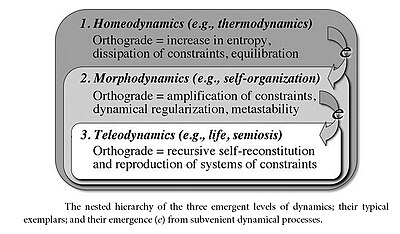

Homeodynamics, morphodynamics, and teleodynamics

Much of the book is devoted to expanding upon the ideas of classical thermodynamics, with an extended discussion of how systems that remain far from equilibrium can interact and combine to produce novel emergent properties. Deacon defines three hierarchically nested levels of thermodynamic systems: homeodynamic systems combine to produce morphodynamic systems, which in turn combine to produce teleodynamic systems. Teleodynamic systems can be further combined to produce higher orders of self-organization.

Homeodynamics

Homeodynamic systems are essentially equivalent to classical thermodynamic systems like a gas under pressure or solute in solution, but the term serves to emphasize that homeodynamics is an abstract process that can be realized in forms beyond the scope of classic thermodynamics. For example, the diffuse brain activity normally associated with emotional states can be considered to be a homeodynamic system because there is a general state of equilibrium which its components (neural activity) distribute towards.[5] In general, a homeodynamic system is any collection of components that will spontaneously eliminate constraints by rearranging the parts until a maximum entropy state (disorderliness) is achieved.

Morphodynamics

A morphodynamic system consists of a coupling of two homeodynamic systems such that the constraint dissipation of each complements the other, producing macroscopic order out of microscopic interactions. Morphodynamic systems require constant perturbation to maintain their structure, so they are relatively rare in nature. The paradigm example of a morphodynamic system is a Rayleigh–Bénard cell. Other common examples are snowflake formation, whirlpools and the stimulated emission of laser light.

Bénard cell

Maximum entropy production: The organized structure of a morphodynamic system forms to facilitate maximal entropy production. In the case of a Rayleigh–Bénard cell, heat at the base of the liquid produces an uneven distribution of high energy molecules which will tend to diffuse towards the surface. As the temperature of the heat source increases, density effects come into play. Simple diffusion can no longer dissipate energy as fast as it is added, and so the bottom of the liquid becomes hotter and more buoyant than the cooler, denser liquid at the top. The bottom of the liquid begins to rise and the top begins to sink, producing convection currents.

Two systems: The significant heat differential on the liquid produces two homeodynamic systems. The first is a diffusion system, where high energy molecules on the bottom collide with lower energy molecules on the top until the added kinetic energy from the heat source is evenly distributed. The second is a convection system, where the low density fluid on the bottom mixes with the high density fluid on the top until the density becomes evenly distributed. The second system arises when there is too much energy to be effectively dissipated by the first, and once both systems are in place, they will begin to interact.

Self organization: The convection creates currents in the fluid that disrupt the pattern of heat diffusion from bottom to top. Heat begins to diffuse into the denser areas of current, irrespective of the vertical location of these denser portions of fluid. The areas of the fluid where diffusion is occurring most rapidly will be the most viscous because molecules are rubbing against each other in opposite directions. The convection currents will shun these areas in favor of parts of the fluid where they can flow more easily. And so the fluid spontaneously segregates itself into cells where high energy, low density fluid flows up from the center of the cell and cooler, denser fluid flows down along the edges, with diffusion effects dominating in the area between the center and the edge of each cell.

Synergy and constraint: What is notable about morphodynamic processes is that order spontaneously emerges explicitly because the ordered system that results is more efficient at increasing entropy than a chaotic one. In the case of the Rayleigh–Bénard cell, neither diffusion nor convection on its own will produce as much entropy as both effects coupled together. When both effects are brought into interaction, they constrain each other into a particular geometric form because that form facilitates minimal interference between the two processes. The orderly hexagonal form is stable as long as the energy differential persists, and yet the orderly form more effectively degrades the energy differential than any other form. This is why morphodynamic processes in nature are usually so short-lived. They are self-organizing, but also self-undermining.

Teleodynamics

A teleodynamic system consists of coupling two morphodynamic systems such that the self-undermining quality of each is constrained by the other. Each system prevents the other from dissipating all of the energy available, and so long-term organizational stability is obtained. Deacon claims that we should pinpoint the moment when two morphodynamic systems reciprocally constrain each other as the point when ententional qualities like function, purpose and normativity emerge.[6]

Autogenesis

Deacon explores the properties of teleodynamic systems by describing a chemically plausible model system called an autogen. Deacon emphasizes that the specific autogen he describes is not a proposed description of the first life form, but rather a description of the kinds of thermodynamic synergies that the first living creature likely possessed.[7]

Autogen pg 339

Reciprocal catalysis: An autogen consists of two self catalyzing cyclical morphodynamic chemical reactions, similar to a chemoton. In one reaction, organic molecules react in a looped series, the products of one reaction becoming the reactants for the next. This looped reaction is self amplifying, producing more and more reactants until all the substrate is consumed. A side product of this reciprocally catalytic loop is a lipid that can be used as a reactant in a second reaction. This second reaction creates a boundary (either a microtubule or some other closed capsid like structure), that serves to contain the first reaction. The boundary limits diffusion; it keeps all of the necessary catalysts in close proximity to each other. In addition, the boundary prevents the first reaction from completely consuming all of the available substrate in the environment.

The first self: Unlike an isolated morphodynamic process whose organization rapidly eliminates the energy gradient necessary to maintain its structure, a teleodynamic process is self-limiting and self-preserving. The two reactions complement each other, and ensure that neither ever runs to equilibrium, that is, to completion, cessation, and death. So, in a teleodynamic system there will be structures that embody a preliminary sketch of a biological function. The internal reaction network functions to create the substrates for the boundary reaction, and the boundary reaction functions to protect and constrain the internal reaction network. Either process in isolation would be abiotic, but together they create a system with a normative status dependent on the functioning of its component parts.

Work

As with other concepts in the book, in his discussion of work Deacon seeks to generalize the Newtonian conception of work such that the term can be used to describe and differentiate mental phenomena - to describe "that which makes daydreaming effortless but metabolically equivalent problem solving difficult."[8] Work is generally described as "activity that is necessary to overcome resistance to change. Resistance can be either active or passive, and so work can be directed towards enacting change that wouldn't otherwise occur or preventing change that would happen in its absence."[9] Using the terminology developed earlier in the book, work can be considered to be "the organization of differences between orthograde processes such that a locus of contragrade process is created. Or, more simply, work is a spontaneous change inducing a non-spontaneous change to occur."[10]

Thermodynamic work

A thermodynamic system's capacity to do work depends less upon the total energy of the system and more upon the geometric distribution of its components. A glass of water at 20 degrees Celsius will have the same amount of energy as an identical glass divided in half with the top fluid at 30 degrees and the bottom at 10, but only in the second glass will the top half have the capacity to do work upon the bottom. This is because work occurs at both macroscopic and microscopic levels. Microscopically, there is constant work being performed on one molecule by another when they collide. But the potential for this microscopic work to additively sum to macroscopic work depends on there being an asymmetric distribution of particle speeds, so that the average collision pushes in a focused direction. Microscopic work is necessary but not sufficient for macroscopic work. A global property of asymmetric distribution is also required.

Morphodynamic work

By recognizing that asymmetry is a general property of work (that is, that work is done as asymmetric systems spontaneously tend towards symmetry), Deacon abstracts the concept of work and applies it to systems whose symmetries are vastly more complex than those covered by classical thermodynamics. In a morphodynamic system, the tendency towards symmetry produces not global equilibrium, but a complex geometric form like a hexagonal Bénard cell or the resonant frequency of a flute. This tendency towards convolutedly symmetric forms can be harnessed to do work on other morphodynamic systems, if the systems are properly coupled.

Resonance example: A good example of morphodynamic work is the induced resonance that can be observed by singing or playing a flute next to a string instrument like a harp or guitar. The vibrating air emitted from the flute will interact with the taut strings. If any of the strings are tuned to a resonant frequency that matches the note being played, they too will begin to vibrate and emit sound.

Contragrade change: When energy is added to the flute by blowing air into it, there is a spontaneous (orthograde) tendency for the system to dissipate the added energy by inducing the air within the flute to vibrate at a specific frequency. This orthograde morphodynamic form generation can be used to induce contragrade change in the system coupled to it: the taut string. Playing the flute does work on the string by causing it to enter a high energy state that could not be reached spontaneously in an uncoupled state.

Structure and form: Importantly, this is not just the macro scale propagation of random micro vibrations from one system to another. The global geometric structure of the system is essential. The total energy transferred from the flute to the string matters far less than the pattern it takes in transit. That is, the amplitude of the coupled note is largely irrelevant; what matters is its frequency. Notes that have a higher or lower frequency than the resonant frequency of the string will not be able to do morphodynamic work.
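The frequency selectivity described above can be illustrated with the textbook driven, damped harmonic oscillator. This is a minimal sketch and not a model taken from the book: the string is treated as a single oscillator, and the natural frequency (440 Hz), damping constant, and drive strengths are hypothetical values chosen only for illustration. In this simplified model the response grows only linearly with the strength of the drive, whereas a mismatch in frequency suppresses it far more strongly.

```python
import math


def steady_state_amplitude(drive_freq_hz, drive_strength, natural_freq_hz, damping):
    """Steady-state amplitude of x'' + damping*x' + w0^2*x = drive_strength*cos(w*t).

    Standard result: A = drive_strength / sqrt((w0^2 - w^2)^2 + (damping*w)^2).
    """
    w = 2 * math.pi * drive_freq_hz
    w0 = 2 * math.pi * natural_freq_hz
    return drive_strength / math.sqrt((w0**2 - w**2) ** 2 + (damping * w) ** 2)


# Hypothetical illustration values (assumptions, not from the source).
STRING_FREQ_HZ = 440.0   # natural frequency of the string
DAMPING = 5.0            # damping constant in 1/s

# A quiet note that matches the string's natural frequency.
on_resonance = steady_state_amplitude(440.0, 1.0, STRING_FREQ_HZ, DAMPING)

# A note ten times stronger, but at a mismatched frequency.
off_resonance = steady_state_amplitude(494.0, 10.0, STRING_FREQ_HZ, DAMPING)

print(f"matched, weak drive:      {on_resonance:.3e}")
print(f"mismatched, strong drive: {off_resonance:.3e}")
```

With these assumed values, the weak drive at the matching frequency produces a larger steady-state response than the drive that is ten times stronger at a mismatched frequency, which is the sense in which the pattern of the transferred energy matters more than its amount.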

Teleodynamic work

Work is generally defined to be the interaction of two orthograde changing systems such that contragrade change is produced.[11] In teleodynamic systems, the spontaneous orthograde tendency is not to equilibrate (as in homeodynamic systems), nor to self-simplify (as in morphodynamic systems), but rather to tend towards self-preservation. Living organisms spontaneously tend to heal, to reproduce and to pursue resources towards these ends. Teleodynamic work acts on these tendencies and pushes them in a contragrade, non-spontaneous direction.

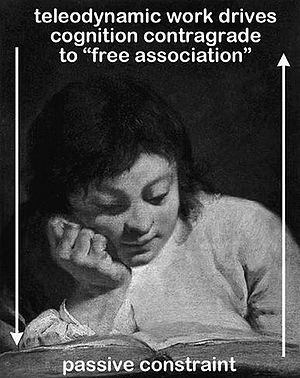

Reading exemplifies the logic of teleodynamic work. A passive source of cognitive constraints is potentially provided by the letterforms on a page. A literate person has structured his or her sensory and cognitive habits to use such letterforms to reorganize the neural activities constituting thinking. This enables us to do teleodynamic work to shift mental tendencies away from those that are spontaneous (such as daydreaming) to those that are constrained by the text. Artist: Giovanni Battista Piazzetta (1682–1754).

Evolution as work: Natural selection, or perhaps more accurately, adaptation, can be considered to be a ubiquitous form of teleodynamic work. The orthograde self-preservation and reproduction tendencies of individual organisms tend to undermine those same tendencies in conspecifics. This competition produces a constraint that tends to mold organisms into forms that are more adapted to their environments, forms that would otherwise not spontaneously persist.

For example, in a population of New Zealand wrybills that make a living by searching for grubs under rocks, those that have a bent beak gain access to more calories. Those with bent beaks are able to better provide for their young, and at the same time they remove a disproportionate quantity of grubs from their environment, making it more difficult for those with straight beaks to provide for their own young. Throughout their lives, all the wrybills in the population do work to structure the form of the next generation. The increased efficiency of the bent beak causes that morphology to dominate the next generation. Thus an asymmetry of beak shape distribution is produced in the population, an asymmetry produced by teleodynamic work.
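How a modest advantage can produce this asymmetry over generations can be sketched with the standard two-type selection recurrence from population genetics. This is an illustrative assumption rather than anything given in the book: the relative fitness values, the starting fraction, and the function names are all hypothetical, and the model ignores genetics, mutation, and chance.

```python
def next_generation(bent_fraction, bent_fitness, straight_fitness):
    """One round of selection: each type contributes in proportion to its fitness."""
    mean_fitness = (
        bent_fraction * bent_fitness + (1 - bent_fraction) * straight_fitness
    )
    return bent_fraction * bent_fitness / mean_fitness


def simulate(initial_bent_fraction, bent_fitness, straight_fitness, generations):
    """Return the bent-beak fraction for generation 0 through `generations`."""
    history = [initial_bent_fraction]
    for _ in range(generations):
        history.append(next_generation(history[-1], bent_fitness, straight_fitness))
    return history


# Hypothetical values: bent beaks raise 10% more young than straight beaks.
history = simulate(
    initial_bent_fraction=0.5,
    bent_fitness=1.1,
    straight_fitness=1.0,
    generations=50,
)

for generation in (0, 10, 25, 50):
    print(f"generation {generation:2d}: bent-beak fraction = {history[generation]:.3f}")
```

Under these assumptions the bent-beak fraction rises in every generation and approaches, but never reaches, one. With equal fitness values the fraction would stay where it started, so the asymmetry in the sketch comes entirely from the difference in reproductive success.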

Thought as work: Mental problem solving can also be considered teleodynamic work. Thought forms are spontaneously generated, and the task of problem solving is the task of molding those forms to fit the context of the problem at hand. Deacon makes the link between evolution as teleodynamic work and thought as teleodynamic work explicit. "The experience of being sentient is what it feels like to be evolution."[12]

Emergent causal powers

By conceiving of work in this way, Deacon claims "we can begin to discern a basis for a form of causal openness in the universe."[13] While increases in complexity in no way alter the laws of physics, by juxtaposing systems together, pathways of spontaneous change can be made available that were inconceivably improbable prior to the systems' coupling. The causal power of any complex living system lies not solely in the underlying quantum mechanics but also in the global arrangement of its components. A careful arrangement of parts can constrain possibilities such that phenomena that were formerly impossibly rare can become improbably common.

Information

One of the central purposes of Incomplete Nature is to articulate a theory of biological information. The first formal theory of information was articulated by Claude Shannon in 1948 in his work A Mathematical Theory of Communication. Shannon's work is widely credited with ushering in the information age, but somewhat paradoxically, it was completely silent on questions of meaning and reference, i.e., what the information is about. As an engineer, Shannon was concerned with the challenge of reliably transmitting a message from one location to another. The meaning and content of the message were largely irrelevant. So, while Shannon information theory has been essential for the development of devices like computers, it has left open many philosophical questions regarding the nature of information. Incomplete Nature seeks to answer some of these questions.

Shannon information

Shannon's key insight was to recognize a link between entropy and information. Entropy is often defined as a measurement of disorder, or randomness, but this can be misleading. For Shannon's purposes, the entropy of a system is a measure of the number of possible states that the system has the capacity to be in. Any one of these potential states can constitute a message. For example, a typewritten page can bear as many different messages as there are different combinations of characters that can be arranged on the page. The information content of a message can only be understood against the background context of all of the messages that could have been sent, but weren't. Information is produced by a reduction of entropy in the message medium.
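The typewritten-page example can be made concrete with a short calculation. The sketch below uses Shannon's standard entropy formula, H = -Σ p·log2(p), on a deliberately tiny hypothetical "page" of three character slots drawn from a four-symbol alphabet, and it assumes every possible page is equally likely before the message arrives. The alphabet size, page length, and function names are illustrative assumptions, not values from the book.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits: H = -sum(p * log2(p)) over states with p > 0."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def page_capacity_bits(alphabet_size, page_length):
    """Bits needed to single out one page when all pages are equally likely."""
    return page_length * math.log2(alphabet_size)


# Hypothetical toy page: 3 character slots, each holding 1 of 4 symbols.
ALPHABET_SIZE = 4
PAGE_LENGTH = 3
possible_pages = ALPHABET_SIZE**PAGE_LENGTH

# Before reading: every possible page is equally likely.
entropy_before = shannon_entropy([1 / possible_pages] * possible_pages)

# After reading: exactly one page remains possible.
entropy_after = shannon_entropy([1.0])

# Information conveyed = reduction in entropy of the medium.
information_gained = entropy_before - entropy_after

print(f"possible pages: {possible_pages}")
print(f"information gained by reading one page: {information_gained:.1f} bits")
```

The information carried by the page that was actually typed depends on how many pages could have been typed instead: enlarging the alphabet or lengthening the page increases the number of excluded alternatives, and with it the number of bits conveyed.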

Three nested conceptions of information