Linguistics is the

scientific[1] study of

language.

[2] There are three aspects to this study: language

form, language

meaning, and language in context.

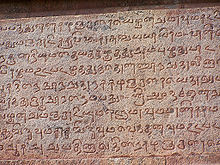

[3] The earliest activities in the

description of language have been attributed to

Pāṇini, who was an early student of linguistics

[4](fl. 4th century BCE),

[5] with his analysis of

Sanskrit in

Ashtadhyayi.

[6]

Linguistics analyzes human language as a system for relating sounds (or signs in signed languages) and meaning.[7] Phonetics studies acoustic and articulatory properties of the production and perception of speech sounds and non-speech sounds. The study of language meaning, on the other hand, deals with how languages encode relations between entities, properties, and other aspects of the world to convey, process, and assign meaning, as well as to manage and resolve ambiguity. While the study of semantics typically concerns itself with truth conditions, pragmatics deals with how context influences meanings.[8]

Grammar is a system of rules which govern the form of the utterances in a given language. It encompasses both sound[9] and meaning, and includes phonology (how sounds and gestures function together), morphology (the formation and composition of words), and syntax (the formation and composition of phrases and sentences from words).[10]

In the early 20th century, Ferdinand de Saussure distinguished between the notions of langue and parole in his formulation of structural linguistics. According to him, parole is the specific utterance of speech, whereas langue refers to an abstract phenomenon that theoretically defines the principles and system of rules that govern a language.[11] This distinction resembles the one made by Noam Chomsky between competence and performance, where competence is an individual's ideal knowledge of a language, while performance is the specific way in which it is used.[12]

The formal study of language has also led to the growth of fields like psycholinguistics, which explores the representation and function of language in the mind; neurolinguistics, which studies language processing in the brain; and language acquisition, which investigates how children and adults acquire a particular language.

Linguistics also includes nonformal approaches to the study of other aspects of human language, such as social, cultural, historical and political factors.[13] The study of cultural discourses and dialects is the domain of sociolinguistics, which looks at the relation between linguistic variation and social structures, as well as that of discourse analysis, which examines the structure of texts and conversations.[14] Research on language through historical and evolutionary linguistics focuses on how languages change, and on the origin and growth of languages, particularly over an extended period of time.

Corpus linguistics takes naturally occurring texts or films (in signed languages) as its primary object of analysis, and studies the variation of grammatical and other features based on such corpora.
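As a minimal sketch of the kind of comparison corpus linguists make, the Python below counts how often one word occurs in two corpora and normalizes the count per 1,000 tokens, so that corpora of different sizes can be compared. The two "corpora", the naive regular-expression tokenizer, and the function names `tokenize` and `per_thousand` are hypothetical and chosen only for illustration; real corpus work relies on far larger, usually annotated data and more careful tokenization.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-cased word tokens; a deliberately naive tokenizer (assumption)."""
    return re.findall(r"[a-z']+", text.lower())


def per_thousand(tokens: list[str], feature: str) -> float:
    """Occurrences of `feature` per 1,000 tokens, so corpora of different sizes compare fairly."""
    if not tokens:
        return 0.0
    return Counter(tokens)[feature] * 1000 / len(tokens)


# Two tiny hypothetical samples standing in for a spoken and a written corpus.
spoken = "well I think we'll go, I think so, yeah I think"
written = "We shall proceed. It is thought that the committee will agree."

for name, text in [("spoken", spoken), ("written", written)]:
    tokens = tokenize(text)
    print(name, len(tokens), round(per_thousand(tokens, "think"), 1))
```

With these toy samples, "think" occurs 3 times in 11 spoken tokens (about 272.7 per 1,000) and not at all in the written one. Note that "thought" is not counted, because the sketch does no lemmatization; grouping inflected forms under one lemma is one of the refinements real corpus tools add.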

Stylistics involves the study of patterns of style within written, signed, or spoken discourse.[15]

Language documentation combines anthropological inquiry with linguistic inquiry to describe languages and their grammars.

Lexicography covers the study and construction of dictionaries.

Computational linguistics applies computer technology to address questions in theoretical linguistics, as well as to create applications for use in parsing, data retrieval, machine translation, and other areas. People can apply practical knowledge of a language in translation and interpreting, as well as in language education, the teaching of a second or foreign language. Policy makers work with governments to implement new plans in education and teaching which are based on linguistic research.

Areas of study related to linguistics include semiotics (the study of signs and symbols both within language and without), literary criticism, translation, and speech-language pathology.

Nomenclature

Before the 20th century, the term philology, first attested in 1716,[16] was commonly used to refer to the science of language, which was then predominantly historical in focus.[17][18] Since Ferdinand de Saussure's insistence on the importance of synchronic analysis, however, this focus has shifted,[19] and the term "philology" is now generally used for the "study of a language's grammar, history, and literary tradition", especially in the United States[20] (where philology has never been widely considered the "science of language").[21]

Although the term "linguist" in the sense of "a student of language" dates from 1641,[22] the term "linguistics" is first attested in 1847.[22] It is now the common academic term in English for the scientific study of language.

Today, the term linguist applies to someone who studies language or is a researcher within the field, or to someone who uses the tools of the discipline to describe and analyze specific languages.[23]

Variation and Universality

While some theories in linguistics focus on the different varieties that language produces among different sections of society, others focus on the universal properties that are common to all human languages. A theory of variation would therefore examine the different usages of widely spoken languages like French and English across the globe, as well as their smaller dialects and regional permutations within their national boundaries. The theory of variation looks at the cultural stages that a particular language undergoes, and these include the following.

Lexicon

The lexicon is a catalogue of words and terms that are stored in a speaker's mind. The lexicon consists of words and bound morphemes, which are parts of words that cannot stand alone, like affixes. In some analyses, compound words and certain classes of idiomatic expressions and other collocations are also considered to be part of the lexicon. Dictionaries represent attempts at listing, in alphabetical order, the lexicon of a given language; usually, however, bound morphemes are not included.

Lexicography, closely linked with the domain of semantics, is the science of mapping the words into an encyclopedia or a dictionary. New words created and added to the lexicon are called neologisms.

It is often believed that a speaker's capacity for language lies in the quantity of words stored in the lexicon. Linguists, however, often consider this a myth. Many linguists hold that the capacity for the use of language lies primarily in the domain of grammar, and is linked with competence rather than with the growth of vocabulary. Even a very small lexicon is theoretically capable of producing an infinite number of sentences.
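The claim about a small lexicon rests on recursion: if a grammar lets a phrase contain another phrase of the same type, a finite vocabulary yields sentences with no upper bound on their number or length. The Python sketch below illustrates this with a hypothetical five-word lexicon (the, that, saw, cat, dog) and assumed rules NP → the N, NP → the N that saw NP, and S → NP saw NP; these rules and the function names are illustrations, not a grammar of English.

```python
# Hypothetical toy lexicon: five words in total ("the", "that", "saw", plus two nouns).
NOUNS = ["cat", "dog"]


def noun_phrases(depth: int) -> list[str]:
    """Noun phrases with at most `depth` levels of relative-clause embedding.

    Assumed rules: NP -> "the" N  |  "the" N "that saw" NP
    The second rule is recursive: an NP can contain another NP.
    """
    simple = [f"the {noun}" for noun in NOUNS]
    if depth == 0:
        return simple
    embedded = [
        f"the {noun} that saw {inner}"
        for noun in NOUNS
        for inner in noun_phrases(depth - 1)
    ]
    return simple + embedded


def sentences(depth: int) -> list[str]:
    """Sentences built by the assumed rule S -> NP "saw" NP."""
    nps = noun_phrases(depth)
    return [f"{subj} saw {obj}" for subj in nps for obj in nps]


for d in range(4):
    batch = sentences(d)
    longest = max(batch, key=lambda s: len(s.split()))
    print(d, len(batch), len(longest.split()))
```

Embedding depths 0 to 3 give 4, 36, 196 and 900 sentences, and each extra level adds four words to the longest noun phrase. Since the recursive rule can always be applied once more, no finite list exhausts the sentences this five-word vocabulary can form.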

Discourse

A discourse is a way of speaking that emerges within a certain social setting and is based on a certain subject matter. A particular discourse becomes a language variety when it is used in this way for a particular purpose, and is then referred to as a register.[24] There may be certain lexical additions (new words) that are brought into play because of the expertise of the community of people within a certain domain of specialisation. Registers and discourses therefore differentiate themselves through the use of vocabulary, and at times through the use of style too. People in the medical fraternity, for example, may use some medical terminology in their communication that is specialised to the field of medicine. This is often referred to as being part of the "medical discourse".

Dialect

A dialect is a variety of language that is characteristic of a particular group among the language speakers.[25] The group of people who are the speakers of a dialect are usually bound to each other by social identity. This is what differentiates a dialect from a register or a discourse, in which cultural identity does not always play a role. Dialects are speech varieties that have their own grammatical and phonological rules, linguistic features, and stylistic aspects, but have not been given an official status as a language. Dialects often go on to gain the status of a language for political and social reasons. Differentiation amongst dialects (and subsequently, languages too) is based upon the use of grammatical rules, syntactic rules, and stylistic features, though not always on lexical use or vocabulary. The popular saying that "a language is a dialect with an army and navy" is attributed to Max Weinreich.

Universal grammar takes into account general formal structures and features that are common to all dialects and languages, and whose template is held to pre-exist in the mind of an infant child. This idea is based on the theory of generative grammar and the formal school of linguistics, whose proponents include Noam Chomsky and those who follow his theory and work.

"We may as individuals be rather fond of our own dialect. This should not make us think, though, that it is actually any better than any other dialect. Dialects are not good or bad, nice or nasty, right or wrong – they are just different from one another, and it is the mark of a civilised society that it tolerates different dialects just as it tolerates different races, religions and sexes." [26]

Structures

Linguistic structures are pairings of meaning and form. Any particular pairing of meaning and form is a Saussurean sign. For instance, the meaning "cat" is represented worldwide with a wide variety of different sound patterns (in oral languages), movements of the hands and face (in sign languages), and written symbols (in written languages).

Linguists focusing on structure attempt to understand the rules regarding language use that native speakers know (not always consciously). All linguistic structures can be broken down into component parts that are combined according to (sub)conscious rules, over multiple levels of analysis. For instance, consider the structure of the word "tenth" on two different levels of analysis. On the level of internal word structure (known as morphology), the word "tenth" is made up of one linguistic form indicating a number and another form indicating ordinality. The rule governing the combination of these forms ensures that the ordinality marker "th" follows the number "ten." On the level of sound structure (known as phonology), structural analysis shows that the "n" sound in "tenth" is made differently from the "n" sound in "ten" spoken alone: before the "th" sound, it is articulated with the tongue against the teeth rather than against the ridge behind them. Although most speakers of English are consciously aware of the rules governing the internal structure of the word "tenth", they are less often aware of the rule governing its sound structure. Linguists focused on structure find and analyze rules such as these, which govern how native speakers use language.
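The two levels of analysis of "tenth" can be mimicked in a few lines of Python. This is a minimal sketch under simplifying assumptions: the underlying forms are a hypothetical broad IPA-style transcription, "th" is treated as one morpheme pronounced /θ/, and the only phonological rule modeled is dentalization of /n/ before /θ/. The names `UNDERLYING`, `ordinal`, `dentalize` and `pronounce` are illustrative, not an established tool.

```python
# Hypothetical underlying forms: morpheme -> list of phonemic segments (broad IPA).
UNDERLYING = {"ten": ["t", "ɛ", "n"], "th": ["θ"]}
DENTAL_N = "n\u032a"  # "n" + combining bridge below = dental [n̪]


def ordinal(number_stem: str) -> list[str]:
    """Morphological rule: the ordinal marker follows the number stem."""
    return [number_stem, "th"]


def dentalize(segments: list[str]) -> list[str]:
    """Phonological rule (assumed): /n/ becomes dental [n̪] directly before /θ/."""
    result = []
    for i, segment in enumerate(segments):
        following = segments[i + 1] if i + 1 < len(segments) else None
        result.append(DENTAL_N if segment == "n" and following == "θ" else segment)
    return result


def pronounce(morphemes: list[str]) -> list[str]:
    """Concatenate the morphemes' segments, then apply the phonological rule."""
    segments = [seg for morpheme in morphemes for seg in UNDERLYING[morpheme]]
    return dentalize(segments)


print("".join(ordinal("ten")))    # spelling assembled by the morphological rule
print(pronounce(["ten"]))         # "ten" on its own keeps a plain n
print(pronounce(ordinal("ten")))  # in "tenth" the n is dentalized before θ
```

Keeping the morphological rule (`ordinal`) separate from the phonological rule (`dentalize`) mirrors the point of the paragraph: the same /n/ surfaces differently only because of what the morphology places after it.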

Linguistics has many sub-fields concerned with particular aspects of linguistic structure. The theory that elucidates these, as propounded by Noam Chomsky, is known as generative theory or universal grammar. These sub-fields range from those focused primarily on form to those focused primarily on meaning. They also run the gamut of levels of analysis of language, from individual sounds, to words, to phrases, up to cultural discourse.

Sub-fields that focus on the structure of language include:

- Phonetics, the study of the physical properties of speech sound production and perception

- Phonology, the study of sounds as abstract elements in the speaker's mind that distinguish meaning (phonemes)

- Morphology, the study of morphemes, or the internal structures of words and how they can be modified

- Syntax, the study of how words combine to form grammatical phrases and sentences

- Semantics, the study of the meaning of words (lexical semantics) and fixed word combinations (phraseology), and how these combine to form the meanings of sentences

- Pragmatics, the study of how utterances are used in communicative acts, and the role played by context and non-linguistic knowledge in the transmission of meaning

- Discourse analysis, the analysis of language use in texts (spoken, written, or signed)

- Stylistics, the study of linguistic factors (rhetoric, diction, stress) that place a discourse in context

- Semiotics, the study of signs and sign processes (semiosis), indication, designation, likeness, analogy, metaphor, symbolism, signification, and communication.

Relativity

As popularly formulated in the "Sapir-Whorf hypothesis", relativists believe that the structure of a particular language is capable of influencing the cognitive patterns through which a person shapes his or her world view. Universalists believe that human perception has commonalities, just as the human capacity for language does, while relativists believe that this varies from language to language and person to person. While the Sapir-Whorf hypothesis is an elaboration of this idea expressed through the writings of American linguists Edward Sapir and Benjamin Lee Whorf, it was Sapir's student Harry Hoijer who gave it that name. The 20th-century German linguist Leo Weisgerber also wrote extensively about linguistic relativity. Relativists argue for differentiation at the level of cognition and in semantic domains. The emergence of cognitive linguistics in the 1980s also revived an interest in linguistic relativity. Thinkers like George Lakoff have argued that language reflects different cultural metaphors, while the writings of the French philosopher of language Jacques Derrida, especially on deconstruction, have been seen as closely associated with the relativist movement in linguistics.[27] Derrida was even heavily criticised in the media at the time of his death for his theory of relativism.[28]

Style

Stylistics is the study and interpretation of texts for aspects of their linguistic and tonal style. Stylistic analysis entails the description and analysis of particular dialects and registers used by speech communities. Stylistic features include rhetoric,[29] diction, stress, satire, irony, dialogue, and other forms of variation. Stylistic analysis can also include the study of language in canonical works of literature, popular fiction, news, advertisements, and other forms of communication in popular culture. It is usually seen as a variation in communication that changes from speaker to speaker and community to community. In short, stylistics is the interpretation of text.

Approach

Generative vs. functional theories of language

One major debate in linguistics concerns how language should be defined and understood. Some linguists use the term "language" primarily to refer to a hypothesized, innate module in the human brain that allows people to undertake linguistic behavior, which is part of the formalist approach. This "universal grammar" is considered to guide children when they learn languages and to constrain what sentences are considered grammatical in any language. Proponents of this view, which is predominant in those schools of linguistics that are based on the generative theory of Noam Chomsky, do not necessarily consider that language evolved for communication in particular. They consider instead that it has more to do with the process of structuring human thought (see also formal grammar).

Another group of linguists, by contrast, use the term "language" to refer to a communication system that developed to support cooperative activity and extend cooperative networks. Such theories of grammar, called "functional", view language as a tool that emerged and adapted to the communicative needs of its users, and the role of cultural evolutionary processes is often emphasized over that of biological evolution.[30]

Methodology

Linguistics is primarily descriptive. Linguists describe and explain features of language without making subjective judgments on whether a particular feature or usage is "good" or "bad". This is analogous to practice in other sciences: a zoologist studies the animal kingdom without making subjective judgments on whether a particular species is "better" or "worse" than another.

Prescription, on the other hand, is an attempt to promote particular linguistic usages over others, often favoring a particular dialect or "acrolect". This may have the aim of establishing a linguistic standard, which can aid communication over large geographical areas. It may also, however, be an attempt by speakers of one language or dialect to exert influence over speakers of other languages or dialects (see linguistic imperialism). An extreme version of prescriptivism can be found among censors, who attempt to eradicate words and structures that they consider to be destructive to society. Prescription is also practiced in the teaching of language, where certain fundamental grammatical rules and lexical terms need to be introduced to a second-language speaker who is attempting to acquire the language.

Analysis

Before the 20th century, linguists analyzed language on a diachronic plane, which was historical in focus. This meant that they would compare linguistic features and try to analyze language from the point of view of how it had changed over time. However, with Saussurean linguistics in the 20th century, the focus shifted to a more synchronic approach, where the study was geared more towards the analysis and comparison of different language variations that existed at the same point in time.

At another level, the syntagmatic plane of linguistic analysis concerns the way words are sequenced within the syntax of a sentence. For example, in English the article "the" comes before the noun it introduces, because of the syntagmatic relation between the words. The paradigmatic plane, on the other hand, focuses on an analysis that is based on the paradigms or concepts that are embedded in a given text. In this case, words of the same type or class may replace one another in the same position in the text while the sentence keeps the same structure.
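The two planes can be contrasted with a toy model in Python. In this minimal sketch, the six-word vocabulary, its word classes, and the single syntagmatic constraint (a determiner must be immediately followed by a noun) are assumptions made for illustration, and adjectives are deliberately left out. `syntagmatically_ok` checks the sequential plane, while `paradigm` and `substitutions` list the words that could stand in the same slot.

```python
# Hypothetical word classes for a tiny fragment of English (no adjectives).
WORD_CLASS = {
    "the": "Det", "a": "Det",
    "cat": "N", "dog": "N",
    "sleeps": "V", "runs": "V",
}


def syntagmatically_ok(words: list[str]) -> bool:
    """Syntagmatic (sequential) check: every determiner is immediately followed by a noun."""
    classes = [WORD_CLASS[w] for w in words]
    if classes and classes[-1] == "Det":
        return False  # a determiner with nothing after it
    return all(nxt == "N" for cur, nxt in zip(classes, classes[1:]) if cur == "Det")


def paradigm(words: list[str], position: int) -> list[str]:
    """Paradigmatic set: all words of the same class as the word at `position`."""
    target = WORD_CLASS[words[position]]
    return sorted(w for w, cls in WORD_CLASS.items() if cls == target)


def substitutions(words: list[str], position: int) -> list[list[str]]:
    """Sentences obtained by swapping in each member of the paradigm at `position`."""
    return [words[:position] + [alt] + words[position + 1:] for alt in paradigm(words, position)]


sentence = ["the", "cat", "sleeps"]
print(syntagmatically_ok(sentence))           # sequence respects Det + N
print(syntagmatically_ok(["the", "sleeps"]))  # Det not followed by N
for variant in substitutions(sentence, 1):    # slot 1 holds a noun
    print(" ".join(variant), syntagmatically_ok(variant))
```

Every substitution drawn from the noun paradigm still passes the syntagmatic check, which is the sense in which members of a paradigm are interchangeable in one position of the sequence.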

Anthropology

The objective of describing languages is often to uncover cultural knowledge about communities. The use of anthropological methods of investigation on linguistic sources leads to the discovery of certain cultural traits among a speech community through its linguistic features. It is also widely used as a tool in language documentation, with an endeavor to curate endangered languages. Today, linguistic inquiry uses the anthropological method to understand the cognitive, historical, and sociolinguistic processes that languages undergo as they change and evolve, while general anthropological inquiry uses the linguistic method to excavate into culture. In all these respects, anthropological inquiry usually uncovers the different variations and relativities that underlie the usage of language.

Sources

Most contemporary linguists work under the assumption that spoken data and signed data are more fundamental than written data. This is because:

- Speech appears to be universal to all human beings capable of producing and perceiving it, while there have been many cultures and speech communities that lack written communication;

- Features appear in speech which aren't always recorded in writing, including phonological rules, sound changes, and speech errors;

- All natural writing systems reflect the spoken language (or potentially a signed one) they are used to write: even pictographic scripts such as Dongba write Naxi homophones with the same pictogram, and text in writing systems used for two languages changes to fit the spoken language being recorded;

- Speech evolved before human beings invented writing;

- People learn to speak and process spoken language more easily and earlier than they learn to write.

Nonetheless, linguists agree that the study of written language can be worthwhile and valuable. For research that relies on corpus linguistics and computational linguistics, written language is often much more convenient for processing large amounts of linguistic data. Large corpora of spoken language are difficult to create and hard to find, and are typically transcribed and written. In addition, linguists have turned to text-based discourse occurring in various formats of computer-mediated communication as a viable site for linguistic inquiry.

The study of writing systems themselves, graphemics, is, in any case, considered a branch of linguistics.

History of linguistic thought

Early grammarians

The formal study of language began in India with Pāṇini, the 5th century BC grammarian who formulated 3,959 rules of Sanskrit morphology. Pāṇini's systematic classification of the sounds of Sanskrit into consonants and vowels, and of words into classes such as nouns and verbs, was the first known instance of its kind. In the Middle East, Sibawayh (سیبویه), the first known author to distinguish between sounds and phonemes (sounds as units of a linguistic system), made a detailed description of Arabic in 760 AD in his monumental work, Al-kitab fi al-nahw (الكتاب في النحو, The Book on Grammar).

Western interest in the study of languages began as early as in the East,[31] but the grammarians of the classical languages did not use the same methods or reach the same conclusions as their contemporaries in the Indic world. Early interest in language in the West was a part of philosophy, not of grammatical description. The first insights into semantic theory were made by Plato in his Cratylus dialogue, where he argues that words denote concepts that are eternal and exist in the world of ideas. This work is the first to use the word etymology to describe the history of a word's meaning. Around 280 BC, one of Alexander the Great's successors founded a university (see Musaeum) in Alexandria, where a school of philologists studied the ancient texts in Greek and taught Greek to speakers of other languages. While this school was the first to use the word "grammar" in its modern sense, Plato had used the word in its original meaning as "téchnē grammatikḗ" (Τέχνη Γραμματική), the "art of writing", which is also the title of one of the most important works of the Alexandrine school, by Dionysius Thrax.[32] Throughout the Middle Ages, the study of language was subsumed under the topic of philology, the study of ancient languages and texts, practiced by such educators as Roger Ascham, Wolfgang Ratke, and John Amos Comenius.[33]

Comparative philology

In the 18th century, the first use of the comparative method by William Jones sparked the rise of comparative linguistics.[34] Bloomfield attributes "the first great scientific linguistic work of the world" to Jacob Grimm, who wrote Deutsche Grammatik.[35] It was soon followed by other authors writing similar comparative studies on other language groups of Europe. The scientific study of language was broadened from Indo-European to language in general by Wilhelm von Humboldt, of whom Bloomfield asserts:[35]

This study received its foundation at the hands of the Prussian statesman and scholar Wilhelm von Humboldt (1767–1835), especially in the first volume of his work on Kavi, the literary language of Java, entitled Über die Verschiedenheit des menschlichen Sprachbaues und ihren Einfluß auf die geistige Entwickelung des Menschengeschlechts (On the Variety of the Structure of Human Language and its Influence upon the Mental Development of the Human Race).

Structuralism

Early in the 20th century, Saussure introduced the idea of language as a static system of interconnected units, defined through the oppositions between them. By introducing a distinction between diachronic and synchronic analyses of language, he laid the foundation of the modern discipline of linguistics. Saussure also introduced several basic dimensions of linguistic analysis that are still foundational in many contemporary linguistic theories, such as the distinctions between syntagm and paradigm, and the langue-parole distinction, distinguishing language as an abstract system (langue) from language as a concrete manifestation of this system (parole).[36] Substantial additional contributions following Saussure's definition of a structural approach to language came from the Prague school, Leonard Bloomfield, Charles F. Hockett, Louis Hjelmslev, Émile Benveniste and Roman Jakobson.[37][38]

Generativism

During the last half of the 20th century, following the work of Noam Chomsky, linguistics was dominated by the generativist school. While formulated by Chomsky in part as a way to explain how human beings acquire language and the biological constraints on this acquisition, in practice it has largely been concerned with giving formal accounts of specific phenomena in natural languages. Generative theory is modularist and formalist in character. Chomsky built on earlier work of Zellig Harris to formulate the generative theory of language. According to this theory the most basic form of language is a set of syntactic rules universal for all humans and underlying the grammars of all human languages. This set of rules is called Universal Grammar, and for Chomsky describing it is the primary objective of the discipline of linguistics. For this reason the grammars of individual languages are of importance to linguistics only in so far as they allow us to discern the universal underlying rules from which the observable linguistic variability is generated.

In the classic formalisation of generative grammars first proposed by Noam Chomsky in the 1950s,[39][40] a grammar G consists of the following components:

- A finite set N of nonterminal symbols, none of which appear in strings formed from G.

- A finite set Σ of terminal symbols that is disjoint from N.

- A finite set P of production rules that map from one string of symbols to another.

- A distinguished start symbol S that is a member of N.

A formal description of language attempts to replicate a speaker's knowledge of the rules of their language, and the aim is to produce a set of rules that is minimally sufficient to successfully model valid linguistic forms.
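The components of G can be written down directly as a data structure. The Python sketch below is an illustration under stated assumptions, not Chomsky's own notation: it includes the start symbol S, a member of N, which the classic definition requires; it restricts P to rules whose left-hand side is a single nonterminal (a context-free grammar, whereas the general definition allows any string on the left); and it uses a hypothetical toy grammar of English. `derive` performs a leftmost derivation, rewriting the leftmost nonterminal at each step with the rule whose index is supplied.

```python
from dataclasses import dataclass

Rule = tuple[str, tuple[str, ...]]  # (left-hand nonterminal, right-hand symbols)


@dataclass(frozen=True)
class Grammar:
    nonterminals: frozenset[str]  # N
    terminals: frozenset[str]     # Σ, must be disjoint from N
    rules: tuple[Rule, ...]       # P, restricted here to context-free rules
    start: str                    # S, must be a member of N

    def __post_init__(self) -> None:
        if self.nonterminals & self.terminals:
            raise ValueError("N and Σ must be disjoint")
        if self.start not in self.nonterminals:
            raise ValueError("the start symbol must belong to N")


def derive(g: Grammar, choices: list[int]) -> list[str]:
    """Leftmost derivation from g.start, applying the rules at the given indices in order."""
    form = [g.start]
    for index in choices:
        lhs, rhs = g.rules[index]
        pos = next((i for i, sym in enumerate(form) if sym in g.nonterminals), None)
        if pos is None:
            raise ValueError("no nonterminal left to rewrite")
        if form[pos] != lhs:
            raise ValueError(f"rule {index} rewrites {lhs}, but the leftmost nonterminal is {form[pos]}")
        form = form[:pos] + list(rhs) + form[pos + 1:]
    return form


# Hypothetical toy grammar of English; the trailing comments give each rule's index.
TOY = Grammar(
    nonterminals=frozenset({"S", "NP", "VP", "Det", "Noun", "Verb"}),
    terminals=frozenset({"the", "cat", "dog", "saw"}),
    rules=(
        ("S", ("NP", "VP")),      # 0
        ("NP", ("Det", "Noun")),  # 1
        ("VP", ("Verb", "NP")),   # 2
        ("Det", ("the",)),        # 3
        ("Noun", ("cat",)),       # 4
        ("Noun", ("dog",)),       # 5
        ("Verb", ("saw",)),       # 6
    ),
    start="S",
)

result = derive(TOY, [0, 1, 3, 4, 2, 6, 1, 3, 5])
print(" ".join(result), all(sym in TOY.terminals for sym in result))
```

The final check confirms that the derivation has ended in a string made only of symbols from Σ, that is, a sentence of the language rather than an intermediate form; supplying a rule that does not match the leftmost nonterminal raises an error instead.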

Functionalism

Functional theories of language propose that since language is fundamentally a tool, it is reasonable to assume that its structures are best analyzed and understood with reference to the functions they carry out. Functional theories of grammar differ from formal theories of grammar, in that the latter seek to define the different elements of language and describe the way they relate to each other as systems of formal rules or operations, whereas the former define the functions performed by language and then relate these functions to the linguistic elements that carry them out. This means that functional theories of grammar tend to pay attention to the way language is actually used, and not just to the formal relations between linguistic elements.[41]

Functional theories describe language in terms of the functions existing at all levels of language.

- Phonological function: the function of the phoneme is to distinguish between different lexical material.

- Semantic functions (Agent, Patient, Recipient, etc.): describing the role of participants in states of affairs or actions expressed.

- Syntactic functions (e.g. Subject and Object): defining different perspectives in the presentation of a linguistic expression.

- Pragmatic functions (Theme and Rheme, Topic and Focus, Predicate): defining the informational status of constituents, determined by the pragmatic context of the verbal interaction.

Functional descriptions of grammar strive to explain how linguistic functions are performed in communication through the use of linguistic forms.
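The phonological function of the phoneme is usually demonstrated with minimal pairs: two words that differ in a single segment and nothing else, so that the contrasting phonemes alone distinguish them. The Python sketch below finds such pairs in a small word list; the broad transcriptions in `TRANSCRIPTIONS` (one symbol per phoneme) and the function name `minimal_pairs` are assumptions made for illustration.

```python
from itertools import combinations

# Hypothetical broad phonemic transcriptions, one symbol per phoneme.
TRANSCRIPTIONS = {
    "pat": ("p", "æ", "t"),
    "bat": ("b", "æ", "t"),
    "pad": ("p", "æ", "d"),
    "pit": ("p", "ɪ", "t"),
    "spat": ("s", "p", "æ", "t"),
}


def minimal_pairs(words: dict[str, tuple[str, ...]]) -> list[tuple[str, str, str, str]]:
    """Return (word1, word2, phoneme1, phoneme2) for every pair of equal-length
    transcriptions that differ in exactly one position."""
    pairs = []
    for (w1, t1), (w2, t2) in combinations(sorted(words.items()), 2):
        if len(t1) != len(t2):
            continue  # insertions or deletions do not form minimal pairs here
        differences = [(a, b) for a, b in zip(t1, t2) if a != b]
        if len(differences) == 1:
            pairs.append((w1, w2, *differences[0]))
    return pairs


for w1, w2, p1, p2 in minimal_pairs(TRANSCRIPTIONS):
    print(f"{w1} / {w2}: /{p1}/ vs /{p2}/")
```

In this toy list, bat/pat, pad/pat and pat/pit come out as minimal pairs, indicating that /b/ and /p/, /d/ and /t/, and /æ/ and /ɪ/ each distinguish lexical material; "spat" pairs with nothing because it differs from the others by an added segment rather than a substituted one.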

Cognitivism

In the 1950s, a new school of thought known as cognitivism emerged through the field of psychology. Cognitivists lay emphasis on knowledge and information, as opposed to behaviorism, for instance.

Cognitivism emerged in linguistics as a reaction to generativist theory in the 1970s and 1980s. Led by theorists like Ronald Langacker and George Lakoff, cognitive linguists propose that language is an emergent property of basic, general-purpose cognitive processes. In contrast to the generativist school of linguistics, cognitive linguistics is non-modularist and functionalist in character. Important developments in cognitive linguistics include cognitive grammar, frame semantics, and conceptual metaphor, all of which are based on the idea that form–function correspondences based on representations derived from embodied experience constitute the basic units of language.

Cognitive linguistics interprets language in terms of concepts (sometimes universal, sometimes specific to a particular tongue) that underlie its form. It is thus closely associated with semantics but is distinct from psycholinguistics, which draws upon empirical findings from cognitive psychology in order to explain the mental processes that underlie the acquisition, storage, production and understanding of speech and writing. Unlike generative theory, cognitive linguistics denies that there is an autonomous linguistic faculty in the mind; it understands grammar in terms of conceptualization; and claims that knowledge of language arises out of language use.[42] Because of its conviction that knowledge of language is learned through use, cognitive linguistics is sometimes considered to be a functional approach, but it differs from other functional approaches in that it is primarily concerned with how the mind creates meaning through language, and not with the use of language as a tool of communication.

Areas of research

Historical linguistics

Historical linguists study the history of specific languages as well as general characteristics of language change. The study of language change is also referred to as "diachronic linguistics" (the study of how one particular language has changed over time), which can be distinguished from "synchronic linguistics" (the comparative study of more than one language at a given moment in time without regard to previous stages). Historical linguistics was among the first sub-disciplines to emerge in linguistics, and was the most widely practiced form of linguistics in the late 19th century. However, the focus shifted to the synchronic approach in the early twentieth century with Saussure, and this approach became more predominant in Western linguistics with the work of Noam Chomsky.

Sociolinguistics

Sociolinguistics is the study of how language is shaped by social factors. This sub-discipline focuses on the synchronic approach of linguistics, and looks at how a language in general, or a set of languages, displays variation and varieties at a given point in time. The study of language variation and the different varieties of language through dialects, registers, and idiolects can be tackled through a study of style, as well as through analysis of discourse. Sociolinguists research both style and discourse in language, and also study the theoretical factors that are at play between language and society.

Developmental linguistics

Developmental linguistics is the study of the development of linguistic ability in individuals, particularly the acquisition of language in childhood. Among the questions that developmental linguistics looks into are how children acquire language, how adults can acquire a second language, and what the process of language acquisition is.

Neurolinguistics

Neurolinguistics is the study of the structures in the human brain that underlie grammar and communication. Researchers are drawn to the field from a variety of backgrounds, bringing along a variety of experimental techniques as well as widely varying theoretical perspectives. Much work in neurolinguistics is informed by models in psycholinguistics and theoretical linguistics, and is focused on investigating how the brain can implement the processes that theoretical linguistics and psycholinguistics propose are necessary in producing and comprehending language. Neurolinguists study the physiological mechanisms by which the brain processes information related to language, and evaluate linguistic and psycholinguistic theories, using aphasiology, brain imaging, electrophysiology, and computer modeling.

Applied linguistics

Linguists are largely concerned with finding and describing the generalities and varieties both within particular languages and among all languages. Applied linguistics takes the results of those findings and "applies" them to other areas. Linguistic research is commonly applied to areas such as language education, lexicography, translation, language planning (which involves governmental policy implementation related to language use), and natural language processing. "Applied linguistics" has been argued to be something of a misnomer.[43] Applied linguists actually focus on making sense of and engineering solutions for real-world linguistic problems, not on literally "applying" existing technical knowledge from linguistics. Moreover, they commonly apply technical knowledge from multiple sources, such as sociology (e.g., conversation analysis) and anthropology. (Constructed languages also fall under applied linguistics.)

Today, computers are widely used in many areas of applied linguistics. Speech synthesis and speech recognition use phonetic and phonemic knowledge to provide voice interfaces to computers. Applications of computational linguistics in machine translation, computer-assisted translation, and natural language processing are areas of applied linguistics that have come to the forefront. Their influence has also had an effect on theories of syntax and semantics, as modeling syntactic and semantic theories on computers imposes constraints on those theories.

Linguistic analysis is a sub-discipline of applied linguistics used by many governments to verify the claimed nationality of people seeking asylum who do not hold the necessary documentation to prove their claim.[44] This often takes the form of an interview by personnel in an immigration department. Depending on the country, this interview is conducted either in the asylum seeker's native language through an interpreter or in an international lingua franca like English.[44] Australia uses the former method, while Germany employs the latter; the Netherlands uses either method depending on the languages involved.[44] Tape recordings of the interview then undergo language analysis, which can be done either by private contractors or within a department of the government. In this analysis, linguistic features of the asylum seeker are used by analysts to make a determination about the speaker's nationality. The reported findings of the linguistic analysis can play a critical role in the government's decision on the refugee status of the asylum seeker.[44]

Inter-disciplinary fields

Within the broad discipline of linguistics, various emerging sub-disciplines focus on a more detailed description and analysis of language, and are often organized on the basis of the school of thought and theoretical approach that they presuppose, or the external factors that influence them.

Semiotics

Semiotics is the study of sign processes (semiosis), or signification and communication, signs, and symbols, both individually and grouped into sign systems, including the study of how meaning is constructed and understood. Semioticians often do not restrict themselves to linguistic communication when studying the use of signs but extend the meaning of "sign" to cover all kinds of cultural symbols. Nonetheless, semiotic disciplines closely related to linguistics are literary studies, discourse analysis, text linguistics, and philosophy of language. Semiotics, within the linguistics paradigm, is the study of the relationship between language and culture. Historically, the structuralist theories of Edward Sapir and Ferdinand de Saussure influenced the study of signs extensively until the late part of the 20th century, but later, postmodern and post-structuralist thought, through language philosophers including Jacques Derrida, Mikhail Bakhtin, Michel Foucault, and others, has also been a considerable influence on the discipline in the late part of the 20th century and early 21st century.[45] These theories emphasise the role of language variation, and the idea of subjective usage, depending on external elements like social and cultural factors, rather than merely on the interplay of formal elements.

Language documentation

Since the inception of the discipline of linguistics, linguists have been concerned with describing and analysing previously undocumented languages. Starting with Franz Boas in the early 1900s, this became the main focus of American linguistics until the rise of formal structural linguistics in the mid-20th century. This focus on language documentation was partly motivated by a concern to document the rapidly disappearing languages of indigenous peoples. The ethnographic dimension of the Boasian approach to language description played a role in the development of disciplines such as sociolinguistics, anthropological linguistics, and linguistic anthropology, which investigate the relations between language, culture, and society.

The emphasis on linguistic description and documentation has also gained prominence outside North America, with the documentation of rapidly dying indigenous languages becoming a primary focus in many university programs in linguistics. Language description is a work-intensive endeavour, usually requiring years of field work in the language concerned, so as to equip the linguist to write a sufficiently accurate reference grammar. Further, the task of documentation requires the linguist to collect a substantial corpus in the language in question, consisting of texts and recordings, both sound and video, which can be stored in an accessible format within open repositories, and used for further research.[46]

Translation

The sub-field of translation includes the translation of written and spoken texts across media, including digital, print, and spoken formats. To translate literally means to transmute the meaning from one language into another. Translators are often employed by organisations such as travel agencies and governmental embassies to facilitate communication between two speakers who do not know each other's language. Translators are also employed to work within computational linguistics setups such as Google Translate, an automated, programmed facility for translating words and phrases between two or more given languages. Translation is also conducted by publishing houses, which convert works of writing from one language to another in order to reach varied audiences. Academic translators specialize or semi-specialize in various other disciplines, such as technology, science, law, and economics.

Biolinguistics

Biolinguistics is the study of natural as well as human-taught communication systems in animals, compared to human language. Over the years, researchers in biolinguistics have also questioned the possibility and extent of language in animals.

Clinical linguistics

Clinical linguistics is the application of linguistic theory to the field of speech-language pathology. Speech-language pathologists work on corrective measures to treat communication disorders and swallowing disorders.

Computational linguistics

Computational linguistics is the study of linguistic issues in a way that is "computationally responsible", i.e., taking careful note of algorithmic specification and computational complexity, so that the linguistic theories devised, and their implementations, can be shown to exhibit certain desirable computational properties. Computational linguists also work on computer languages and software development.

Evolutionary linguistics

Evolutionary linguistics is the interdisciplinary study of the emergence of the language faculty through human evolution, and also the application of evolutionary theory to the study of cultural evolution among different languages. It also studies the dispersal of various languages across the globe through the movements of ancient communities.[47]

Forensic linguistics

Forensic linguistics is the application of linguistic analysis to forensics. Forensic analysis investigates the style, language, lexical use, and other linguistic and grammatical features used in the legal context to provide evidence in courts of law. Forensic linguists have also contributed expertise in criminal cases.
