From Wikipedia, the free encyclopedia

The origin of speech refers to the general problem of the origin of language in the context of the physiological development of the human speech organs, such as the tongue, lips, and vocal organs used to produce phonological units in all spoken languages. It has been studied through several fields, including evolution, anatomy, and the history of linguistics. Although related to the more general problem of the origin of language, the evolution of distinctively human speech capacities has become a distinct and in many ways separate area of scientific research. The topic is a separate one because language is not necessarily spoken: it can equally be written or signed. Speech is in this sense optional, although it is the default modality for language.

Background

Places of articulation (passive and active): 1. Exo-labial, 2. Endo-labial, 3. Dental, 4. Alveolar, 5. Post-alveolar, 6. Pre-palatal, 7. Palatal, 8. Velar, 9. Uvular, 10. Pharyngeal, 11. Glottal, 12. Epiglottal, 13. Radical, 14. Postero-dorsal, 15. Antero-dorsal, 16. Laminal, 17. Apical, 18. Sub-apical

Many theories offer a framework for how speech originated in humans, and several of them build on accounts of how humans evolved over time.

Monkeys, apes and humans, like many other animals, have evolved specialized mechanisms for producing sound for purposes of social communication. On the other hand, no monkey or ape uses its tongue for such purposes.

The human species' unprecedented use of the tongue, lips and other

moveable parts seems to place speech in a quite separate category,

making its evolutionary emergence an intriguing theoretical challenge in

the eyes of many scholars.

Nevertheless, recent insights in human evolution – more specifically, human Pleistocene littoral evolution

– help understand how human speech evolved: different biological

pre-adaptations to spoken language find their origin in humanity's

waterside past, such as a larger brain (thanks to DHA and other brain-specific nutrients in seafoods), voluntary breathing (breath-hold diving for shellfish, etc.), and suction feeding of soft-slippery seafoods. Suction feeding explains why humans, as opposed to other hominoids, evolved hyoidal descent (tongue-bone descended in the throat), closed tooth-rows

(with incisiform canine teeth) and a globular tongue perfectly fitting

in a vaulted and smooth palate (without transverse ridges as in apes):

all this allowed the pronunciation of consonants. Other, probably older, pre-adaptations to human speech are territorial songs and gibbon-like duetting and vocal learning.

Vocal learning, the ability to imitate sounds (as in many birds and bats and a number of Cetacea and Pinnipedia), is arguably required for locating offspring or parents amid the foliage or in the sea. Indeed, independent lines of

evidence (comparative, fossil, archeological, paleo-environmental, isotopic, nutritional, and physiological) show that early-Pleistocene "archaic" Homo spread intercontinentally along the Indian Ocean shores (they even reached overseas islands such as Flores) where they regularly dived for littoral foods such as shell- and crayfish, which are extremely rich in brain-specific nutrients, explaining Homo's brain enlargement. Shallow diving

for seafoods requires voluntary airway control, a prerequisite for

spoken language. Seafood such as shellfish generally does not require

biting and chewing, but stone tool use

and suction feeding. This finer control of the oral apparatus was

arguably another biological pre-adaptation to human speech, especially

for the production of consonants.

Modality-independence

The term modality

means the chosen representational format for encoding and transmitting

information. A striking feature of language is that it is modality-independent.

Should an impaired child be prevented from hearing or producing sound,

its innate capacity to master a language may equally find expression in

signing. Sign languages

of the deaf are independently invented and have all the major

properties of spoken language except for the modality of transmission. From this it appears that the language centres of the human brain must have evolved to function optimally, irrespective of the selected modality.

"The detachment from

modality-specific inputs may represent a substantial change in neural

organization, one that affects not only imitation but also

communication; only humans can lose one modality (e.g. hearing) and make

up for this deficit by communicating with complete competence in a

different modality (i.e. signing)."

— Marc Hauser, Noam Chomsky, and W. Tecumseh Fitch, 2002. The Faculty of Language: What Is It, Who Has It, and How Did It Evolve?

Animal communication systems routinely combine visible with audible

properties and effects, but none is modality-independent. For example,

no vocally-impaired whale, dolphin, or songbird could express its song

repertoire equally in visual display. Indeed, in the case of animal

communication, message and modality are not capable of being

disentangled. Whatever message is being conveyed stems from the

intrinsic properties of the signal.

Modality independence should not be confused with the ordinary phenomenon of multimodality.

Monkeys and apes rely on a repertoire of species-specific

"gesture-calls" – emotionally-expressive vocalisations inseparable from

the visual displays which accompany them.

Humans also have species-specific gesture-calls – laughs, cries, sobs,

etc. – together with involuntary gestures accompanying speech. Many animal displays are polymodal in that each appears designed to exploit multiple channels simultaneously.

The human linguistic property of modality independence is

conceptually distinct from polymodality. It allows the speaker to encode

the informational content of a message in a single channel whilst

switching between channels as necessary. Modern city-dwellers switch

effortlessly between the spoken word and writing in its various forms –

handwriting, typing, email,

etc. Whichever modality is chosen, it can reliably transmit the full

message content without external assistance of any kind. When talking on

the telephone,

for example, any accompanying facial or manual gestures, however

natural to the speaker, are not strictly necessary. When typing or

manually signing, conversely, there is no need to add sounds. In many Australian Aboriginal cultures, a section of the population – perhaps women observing a ritual taboo – traditionally restrict themselves for extended periods to a silent (manually-signed) version of their language.

Then, when released from the taboo, these same individuals resume

narrating stories by the fireside or in the dark, switching to pure

sound without sacrifice of informational content.

Evolution of the speech organs

Speaking is the default modality for language in all cultures.

Humans' first recourse is to encode their thoughts in sound, a method which depends on sophisticated capacities for controlling the lips, tongue and other components of the vocal apparatus.

The speech organs evolved in the first instance not for speech

but for more basic bodily functions such as feeding and breathing.

Nonhuman primates have broadly similar organs, but with different neural

controls.

Non-human apes use their highly-flexible, maneuverable tongues for

eating but not for vocalizing. When an ape is not eating, fine motor

control over its tongue is deactivated. Either it is performing gymnastics with its tongue or it is vocalising; it cannot perform both activities simultaneously. Since this applies to mammals in general, Homo sapiens is exceptional in harnessing mechanisms designed for respiration and ingestion for the radically different requirements of articulate speech.

Tongue

Spectrogram of American English vowels [i, u, ɑ] showing the formants f1 and f2

The word "language" derives from the Latin lingua, "tongue". Phoneticians agree that the tongue is the most important speech articulator, followed by the lips. A natural language can be viewed as a particular way of using the tongue to express thought.

The human tongue has an unusual shape. In most mammals, it is a

long, flat structure contained largely within the mouth. It is attached

at the rear to the hyoid bone, situated below the oral level in the pharynx. In humans, the tongue has an almost circular sagittal (midline) contour, much of it lying vertically down an extended pharynx,

where it is attached to a hyoid bone in a lowered position. Partly as a

result of this, the horizontal (inside-the-mouth) and vertical

(down-the-throat) tubes forming the supralaryngeal vocal tract (SVT) are

almost equal in length (whereas in other species, the vertical section

is shorter). As we move our jaws up and down, the tongue can vary the

cross-sectional area of each tube independently by about 10:1, altering

formant frequencies accordingly. That the tubes are joined at a right angle permits pronunciation of the vowels [i], [u] and [a], which nonhuman primates cannot produce.

Even when not performed particularly accurately, in humans the

articulatory gymnastics needed to distinguish these vowels yield

consistent, distinctive acoustic results, illustrating the quantal nature of human speech sounds. It may not be coincidental that [i], [u] and [a] are the most common vowels in the world's languages.

Human tongues are considerably shorter and thinner than those of other mammals and are composed of a large number of muscles, which helps shape a variety of sounds within the oral cavity. The diversity of sound production is further increased by the human ability to open and close the airway, allowing varying amounts of air to exit through the nose. The fine motor movements associated with the tongue and the airway make humans more capable of producing a wide range of intricate shapes in order to produce sounds at different rates and intensities.

Lips

In humans, the lips are important for the production of stops and fricatives, in addition to vowels. Nothing, however, suggests that the lips evolved for those reasons. During primate evolution, a shift from nocturnal to diurnal activity in tarsiers, monkeys and apes (the haplorhines) brought with it an increased reliance on vision at the expense of olfaction. As a result, the snout became reduced and the rhinarium

or "wet nose" was lost. The muscles of the face and lips consequently

became less constrained, enabling their co-option to serve purposes of

facial expression. The lips also became thicker, and the oral cavity

hidden behind became smaller.

Hence, according to Ann MacLarnon, "the evolution of mobile, muscular

lips, so important to human speech, was the exaptive result of the

evolution of diurnality and visual communication in the common ancestor

of haplorhines". It is unclear whether human lips have undergone a more recent adaptation to the specific requirements of speech.

Respiratory control

Compared with nonhuman primates, humans have significantly enhanced control of breathing, enabling exhalations to be extended and inhalations shortened as we speak. Whilst we are speaking, intercostal and interior abdominal muscles are recruited to expand the thorax and draw air into the lungs, and subsequently to control the release of air as the lungs deflate. The muscles concerned are markedly more innervated in humans than in nonhuman primates. Evidence from fossil hominins suggests that the necessary enlargement of the vertebral canal, and therefore spinal cord dimensions, may not have occurred in Australopithecus or Homo erectus but was present in the Neanderthals and early modern humans.

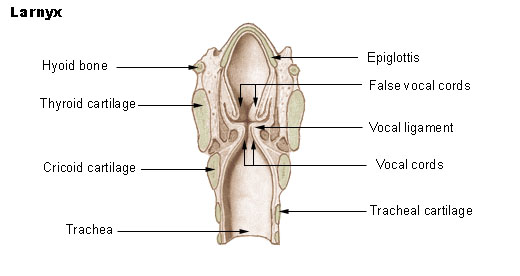

Larynx

The larynx or voice box is an organ in the neck housing the vocal folds, which are responsible for phonation. In humans, the larynx is descended: it is positioned lower than in other primates. This is because the

evolution of humans to an upright position shifted the head directly

above the spinal cord, forcing everything else downward. The

repositioning of the larynx resulted in a longer cavity called the

pharynx, which is responsible for increasing the range and clarity of

the sound being produced. Other primates have almost no pharynx;

therefore, their vocal power is significantly lower. Humans are not unique in having a lowered larynx: goats, dogs, pigs and tamarins lower the larynx temporarily to emit loud calls. Several deer species have a permanently lowered larynx, which males may lower still further during their roaring displays. Lions, jaguars, cheetahs and domestic cats also do this. However, laryngeal descent in nonhumans (according to Philip Lieberman)

is not accompanied by descent of the hyoid; hence the tongue remains

horizontal in the oral cavity, preventing it from acting as a pharyngeal

articulator.

Anterolateral view of head and neck

Despite all this, scholars remain divided as to how "special" the

human vocal tract really is. It has been shown that the larynx does

descend to some extent during development in chimpanzees, followed by hyoidal descent.

As against this, Philip Lieberman points out that only humans have

evolved permanent and substantial laryngeal descent in association with

hyoidal descent, resulting in a curved tongue and two-tube vocal tract

with 1:1 proportions. Uniquely in the human case, simple contact between the epiglottis and velum

is no longer possible, disrupting the normal mammalian separation of

the respiratory and digestive tracts during swallowing. Since this

entails substantial costs – increasing the risk of choking whilst

swallowing food – we are forced to ask what benefits might have

outweighed those costs. Some claim the clear benefit must have been

speech, but others contest this. One objection is that humans are in fact

not seriously at risk of choking on food: medical statistics indicate

that accidents of this kind are extremely rare.

Another objection is that in the view of most scholars, speech as we

know it emerged relatively late in human evolution, roughly

contemporaneously with the emergence of Homo sapiens.

A development as complex as the reconfiguration of the human vocal

tract would have required much more time, implying an early date of

origin. This discrepancy in timescales undermines the idea that human

vocal flexibility was initially driven by selection pressures for

speech.

At least one orangutan has demonstrated the ability to control the voice box.

The size exaggeration hypothesis

To lower the larynx is to increase the length of the vocal tract, in turn lowering formant frequencies so that the voice sounds "deeper" – giving an impression of greater size. John Ohala

argued that the function of the lowered larynx in humans, especially

males, is probably to enhance threat displays rather than speech itself.

Ohala pointed out that if the lowered larynx were an adaptation for

speech, we would expect adult human males to be better adapted in this

respect than adult females, whose larynx is considerably less low. In

fact, females invariably outperform males in verbal tests, falsifying

this whole line of reasoning. William Tecumseh Fitch likewise argues that size exaggeration was the original selective advantage of

laryngeal lowering in our species. Although, according to Fitch, the

initial lowering of the larynx in humans had nothing to do with speech,

the increased range of possible formant patterns was subsequently

co-opted for speech. Size exaggeration remains the sole function of the

extreme laryngeal descent observed in male deer. Consistent with the

size exaggeration hypothesis, a second descent of the larynx occurs at

puberty in humans, although only in males. In response to the objection

that the larynx is descended in human females, Fitch suggests that

mothers vocalising to protect their infants would also have benefited

from this ability.
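The link between vocal tract length and formant frequency can be illustrated with a standard textbook idealisation that is not drawn from the authors cited here: the vocal tract treated as a uniform tube, closed at the glottis and open at the lips, whose resonances fall at odd multiples of c/4L. The Python sketch below assumes a speed of sound of 35,000 cm/s and two purely illustrative tract lengths; the function name `tube_formants` is hypothetical.

```python
# Assumed speed of sound in warm, humid air, in centimetres per second.
SPEED_OF_SOUND_CM_PER_S = 35000.0


def tube_formants(length_cm, count=3):
    """Resonances (Hz) of a uniform tube closed at one end and open at the other.

    Quarter-wavelength resonator: F_n = (2n - 1) * c / (4 * L).
    """
    return [
        (2 * n - 1) * SPEED_OF_SOUND_CM_PER_S / (4.0 * length_cm)
        for n in range(1, count + 1)
    ]


# Illustrative lengths only: a shorter tract and a longer (lowered-larynx) tract.
shorter_tract = tube_formants(15.0)
longer_tract = tube_formants(17.5)

print(shorter_tract)
print(longer_tract)  # [500.0, 1500.0, 2500.0]
```

A real vocal tract is not a uniform tube, so these figures indicate only the direction of the effect: lengthening the tract lowers every formant, which is the acoustic basis of the size exaggeration argument.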

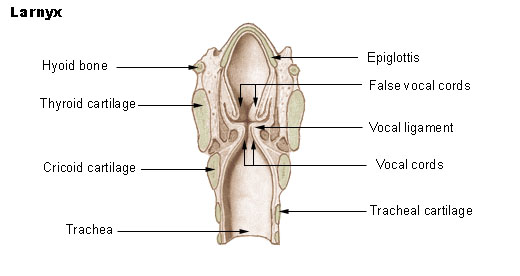

Neanderthal speech

Hyoid bone – anterior surface, enlarged

Most specialists credit the Neanderthals with speech abilities not radically different from those of modern Homo sapiens. An indirect line of argument is that their toolmaking and hunting tactics would have been difficult to learn or execute without some kind of speech. A recent extraction of DNA from Neanderthal bones indicates that Neanderthals had the same version of the FOXP2

gene as modern humans. This gene, mistakenly described as the "grammar

gene", plays a role in controlling the orofacial movements which (in

modern humans) are involved in speech.

During the 1970s, it was widely believed that the Neanderthals lacked modern speech capacities.

It was claimed that they possessed a hyoid bone so high up in the vocal

tract as to preclude the possibility of producing certain vowel sounds.

The hyoid bone is present in many mammals. It allows a wide range

of tongue, pharyngeal and laryngeal movements by bracing these

structures alongside each other in order to produce variation. It is now realised that its lowered position is not unique to Homo sapiens,

whilst its relevance to vocal flexibility may have been overstated:

although men have a lower larynx, they do not produce a wider range of

sounds than women or two-year-old babies. There is no evidence that the

larynx position of the Neanderthals impeded the range of vowel sounds

they could produce. The discovery of a modern-looking hyoid bone of a Neanderthal man in the Kebara Cave in Israel led its discoverers to argue that the Neanderthals had a descended larynx, and thus human-like speech capabilities. However, other researchers have claimed that the morphology of the hyoid is not indicative of the larynx's position. It is necessary to take into consideration the skull base, the mandible, the cervical vertebrae and a cranial reference plane.

The morphology of the outer and middle ear of Middle Pleistocene hominins from Atapuerca,

Spain, believed to be proto-Neanderthal, suggests they had an auditory

sensitivity similar to modern humans and very different from

chimpanzees. They were probably able to differentiate between many

different speech sounds.

Hypoglossal canal

The hypoglossal nerve plays an important role in controlling movements of the tongue. In 1998, a research team used the size of the hypoglossal canal in the base of fossil skulls in an attempt to estimate the relative number of nerve fibres, claiming on this basis that Middle Pleistocene hominins and Neanderthals had more fine-tuned tongue control than either Australopithecines or apes. Subsequently, however, it was demonstrated that hypoglossal canal size and nerve sizes are not correlated, and it is now accepted that such evidence is uninformative about the timing of human speech evolution.

Distinctive features theory

According to one influential school,

the human vocal apparatus is intrinsically digital on the model of a

keyboard or digital computer (see below). Nothing about a chimpanzee's

vocal apparatus suggests a digital keyboard, notwithstanding the

anatomical and physiological similarities. This poses the question as to

when and how, during the course of human evolution, the transition from

analog to digital structure and function occurred.

The human supralaryngeal tract is said to be digital in the sense

that it is an arrangement of moveable toggles or switches, each of

which, at any one time, must be in one state or another. The vocal

cords, for example, are either vibrating (producing a sound) or not

vibrating (in silent mode). By virtue of simple physics, the

corresponding distinctive feature

– in this case, "voicing" – cannot be somewhere in between. The options

are limited to "off" and "on". Equally digital is the feature known as "nasalisation". At any given moment the soft palate or velum either allows or does not allow sound to resonate in the nasal chamber. In the case of lip and tongue positions, more than two digital states may be allowed.

The theory that speech sounds are composite entities constituted

by complexes of binary phonetic features was first advanced in 1938 by

the Russian linguist Roman Jakobson. A prominent early supporter of this approach was Noam Chomsky, who went on to extend it from phonology to language more generally, in particular to the study of syntax and semantics. In his 1965 book, Aspects of the Theory of Syntax,

Chomsky treated semantic concepts as combinations of binary-digital

atomic elements explicitly on the model of distinctive features theory.

The lexical item "bachelor", on this basis, would be expressed as [+

Human], [+ Male], [- Married].

Supporters of this approach view the vowels and consonants recognised by speakers of a particular language or dialect

at a particular time as cultural entities of little scientific

interest. From a natural science standpoint, the units which matter are

those common to Homo sapiens by virtue of our biological nature.

By combining the atomic elements or "features" with which all humans are

innately equipped, anyone may in principle generate the entire range of

vowels and consonants to be found in any of the world's languages,

whether past, present or future. The distinctive features are in this

sense atomic components of a universal language.
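The idea of a sound or lexical item as a bundle of binary features can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the two-feature inventory, the feature names and the helper functions `feature_bundles` and `contrast` are hypothetical, and real feature systems are considerably larger.

```python
from itertools import product

# Hypothetical, heavily simplified feature inventory.
BINARY_FEATURES = ("voiced", "nasal")


def feature_bundles(features=BINARY_FEATURES):
    """Enumerate every combination of binary feature values."""
    return [
        dict(zip(features, values))
        for values in product((False, True), repeat=len(features))
    ]


def contrast(a, b):
    """Return the names of the features on which two bundles differ."""
    return sorted(name for name in a if a[name] != b[name])


# Illustrative stand-ins for [f] and [v], which differ only in voicing.
f_sound = {"voiced": False, "nasal": False}
v_sound = {"voiced": True, "nasal": False}

# The lexical example from the text, [+Human], [+Male], [-Married].
bachelor = {"human": True, "male": True, "married": False}

print(len(feature_bundles()))      # 2 binary features give 4 bundles
print(contrast(f_sound, v_sound))  # ['voiced']
```

With n binary features the number of possible bundles is 2 to the power n, which is why a small feature set can in principle span a large inventory of segments.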

Voicing contrast in English fricatives

| Articulation | Voiceless | Voiced |
| --- | --- | --- |
| Pronounced with the lower lip against the teeth: | [f] (fan) | [v] (van) |
| Pronounced with the tongue against the teeth: | [θ] (thin, thigh) | [ð] (then, thy) |
| Pronounced with the tongue near the gums: | [s] (sip) | [z] (zip) |
| Pronounced with the tongue bunched up: | [ʃ] (pressure) | [ʒ] (pleasure) |

Criticism

In recent years, the notion of an innate "universal grammar" underlying phonological variation has been called into question. The most comprehensive monograph ever written about speech sounds, The Sounds of the World's Languages, by Peter Ladefoged and Ian Maddieson, found virtually no basis for the postulation of some small number of fixed, discrete, universal phonetic features. Examining 305 languages, for example, they encountered vowels that were positioned basically everywhere along the articulatory and acoustic continuum. Ladefoged concluded that phonological features are not determined by human nature: "Phonological features are best regarded as artifacts that linguists have devised in order to describe linguistic systems".

Self-organisation theory

Self-organisation

characterises systems where macroscopic structures are spontaneously

formed out of local interactions between the many components of the

system.

In self-organised systems, global organisational properties are not to

be found at the local level. In colloquial terms, self-organisation is

roughly captured by the idea of "bottom-up" (as opposed to "top-down")

organisation. Examples of self-organised systems range from ice crystals

to galaxy spirals in the inorganic world.

A termite mound (Macrotermitinae) in the Okavango Delta just outside Maun, Botswana

According to many phoneticians, the sounds of language arrange and re-arrange themselves through self-organisation.

Speech sounds have both perceptual (how one hears them) and

articulatory (how one produces them) properties, all with continuous

values. Speakers tend to minimise effort, favouring ease of articulation

over clarity. Listeners do the opposite, favouring sounds that are easy

to distinguish even if difficult to pronounce. Since speakers and

listeners are constantly switching roles, the syllable systems actually

found in the world's languages turn out to be a compromise between

acoustic distinctiveness on the one hand, and articulatory ease on the

other.

Agent-based computer models

take the perspective of self-organisation at the level of the speech

community or population. The two main paradigms are (1) the iterated

learning model and (2) the language game model. Iterated learning

focuses on transmission from generation to generation, typically with

just one agent in each generation.

In the language game model, a whole population of agents simultaneously

produce, perceive and learn language, inventing novel forms when the

need arises.

Several models have shown how relatively simple peer-to-peer

vocal interactions, such as imitation, can spontaneously self-organise a

system of sounds shared by the whole population, and different in

different populations. For example, models elaborated by Berrah et al.

(1996) and de Boer (2000), and recently reformulated using Bayesian theory,

showed how a group of individuals playing imitation games can

self-organise repertoires of vowel sounds which share substantial

properties with human vowel systems. For example, in de Boer's model,

initially vowels are generated randomly, but agents learn from each

other as they interact repeatedly over time. Agent A chooses a vowel

from her repertoire and produces it, inevitably with some noise. Agent B

hears this vowel and chooses the closest equivalent from her own

repertoire. To check whether this truly matches the original, B produces

the vowel she thinks she has heard, whereupon A refers once

again to her own repertoire to find the closest equivalent. If this matches the one she initially selected, the game is successful; otherwise, it has failed. "Through repeated interactions", according to

de Boer, "vowel systems emerge that are very much like the ones found in

human languages".
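The round described above can be sketched as a small Python simulation. This is a simplified illustration, not de Boer's actual model: vowels are points in an abstract two-dimensional space, repertoires have a fixed size, and the update rule (the listener nudges its matched vowel toward what it heard) is an assumption made for brevity. All function names and parameter values are hypothetical.

```python
import random


def nearest(repertoire, signal):
    """Index of the vowel prototype closest to a heard signal."""
    return min(
        range(len(repertoire)),
        key=lambda i: sum((p - s) ** 2 for p, s in zip(repertoire[i], signal)),
    )


def produce(vowel, noise, rng):
    """Articulate a vowel with Gaussian noise added to each dimension."""
    return tuple(x + rng.gauss(0.0, noise) for x in vowel)


def play_round(speaker, listener, noise, rng, step=0.2):
    """Play one imitation game and return True if it succeeds."""
    chosen = rng.randrange(len(speaker))         # A picks a vowel
    heard = produce(speaker[chosen], noise, rng)  # A produces it with noise
    guess = nearest(listener, heard)             # B finds its closest vowel
    echoed = produce(listener[guess], noise, rng)  # B produces that vowel
    success = nearest(speaker, echoed) == chosen  # A checks the echo
    # Assumed update rule: B shifts its matched vowel toward what it heard.
    listener[guess] = tuple(
        p + step * (h - p) for p, h in zip(listener[guess], heard)
    )
    return success


def simulate(agents=5, vowels=3, rounds=2000, noise=0.02, seed=0):
    """Run repeated games between random pairs; return repertoires and success rate."""
    rng = random.Random(seed)
    population = [
        [(rng.random(), rng.random()) for _ in range(vowels)]
        for _ in range(agents)
    ]
    successes = 0
    for _ in range(rounds):
        a, b = rng.sample(range(agents), 2)
        successes += play_round(population[a], population[b], noise, rng)
    return population, successes / rounds


population, success_rate = simulate()
print(f"success rate: {success_rate:.2f}")
```

Published models also let agents add, merge and discard vowels, which this sketch omits; it shows only the produce, perceive and compare loop of a single game.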

In a different model, the phonetician Björn Lindblom

was able to predict, on self-organisational grounds, the favoured

choices of vowel systems ranging from three to nine vowels on the basis

of a principle of optimal perceptual differentiation.

Further models studied the role of self-organisation in the

origins of phonemic coding and combinatoriality, which is the existence

of phonemes and their systematic reuse to build structured syllables. Pierre-Yves Oudeyer

developed models which showed that basic neural equipment for adaptive holistic vocal imitation, directly coupling motor and perceptual representations in the brain, can spontaneously generate shared combinatorial systems of vocalisations, including phonotactic patterns, in a society of babbling individuals.

These models also characterised how morphological and physiological

innate constraints can interact with these self-organised mechanisms to

account for both the formation of statistical regularities and diversity

in vocalisation systems.

Gestural theory

The gestural theory states that speech was a relatively late development, evolving by degrees from a system that was originally gestural. Our ancestors were unable to control their vocalisation at the time when gestures were used to communicate; however, as they slowly began to control their vocalisations, spoken language began to evolve.

Three types of evidence support this theory:

- Gestural language and vocal language depend on similar neural systems. The regions on the cortex that are responsible for mouth and hand movements border each other.

- Nonhuman primates

minimise vocal signals in favour of manual, facial and other visible

gestures in order to express simple concepts and communicative

intentions in the wild. Some of these gestures resemble those of humans,

such as the "begging posture", with the hands stretched out, which

humans share with chimpanzees.

- Mirror neurons, which fire both when an action is performed and when it is observed, are found in primates.

Research has found strong support for the idea that spoken language

and signing depend on similar neural structures. Patients who used sign

language, and who suffered from a left-hemisphere lesion, showed the same disorders with their sign language as vocal patients did with their oral language.

Other researchers found that the same left-hemisphere brain regions

were active during sign language as during the use of vocal or written

language.

Humans spontaneously use hand and facial gestures when formulating ideas to be conveyed in speech. There are also, of course, many sign languages in existence, commonly associated with deaf

communities; as noted above, these are equal in complexity,

sophistication, and expressive power, to any oral language. The main

difference is that the "phonemes" are produced on the outside of the

body, articulated with hands, body, and facial expression, rather than

inside the body articulated with tongue, teeth, lips, and breathing.

Many psychologists and scientists have looked to the mirror system in the brain to evaluate this theory as well as other behavioural theories. Evidence cited for mirror neurons as a factor in the evolution of speech includes the presence of mirror neurons in primates, the success of teaching apes to communicate gesturally, and the use of pointing and gesturing to teach young children language. Fogassi and Ferrari (2014) monitored motor cortex activity in monkeys, specifically in area F5, the proposed monkey homologue of Broca's area, where mirror neurons are located. They observed changes in electrical activity in this area when the monkey executed hand actions or observed them performed by someone else. Broca's area is a region in the frontal lobe responsible for language production and processing. The discovery of mirror neurons in the homologous monkey region, which fire when an action is done or observed specifically with the hand, is taken to support the view that communication was once accomplished with gestures. The same is said to apply when teaching young children language: when one points at a specific object or location, mirror neurons in the child fire as though the child were performing the action, which results in long-term learning.

Criticism

Critics note that for mammals in general, sound turns out to be the best medium in which to encode information for transmission over distances at speed. Given the probability that this applied also to early humans, it is hard to see why they should have abandoned this efficient method in favour of more costly and cumbersome systems of visual gesturing, only to return to sound at a later stage.

By way of explanation, it has been proposed that at a relatively

late stage in human evolution, our ancestors' hands became so much in

demand for making and using tools that the competing demands of manual

gesturing became a hindrance. The transition to spoken language is said

to have occurred only at that point.

Since humans throughout evolution have been making and using tools,

however, most scholars remain unconvinced by this argument. (For a

different approach to this issue – one setting out from considerations

of signal reliability and trust – see "from pantomime to speech" below).

Timeline of speech evolution

Little is known about the timing of language's emergence in the human

species. Unlike writing, speech leaves no material trace, making it

archaeologically invisible. Lacking direct linguistic evidence,

specialists in human origins have resorted to the study of anatomical

features and genes arguably associated with speech production. Whilst

such studies may provide information as to whether pre-modern Homo species had speech capacities,

it is still unknown whether they actually spoke. Whilst they may have

communicated vocally, the anatomical and genetic data lack the

resolution necessary to differentiate proto-language from speech.

Using statistical methods to estimate the time required to achieve the current spread and diversity of modern languages, Johanna Nichols –

a linguist at the University of California, Berkeley – argued in 1998

that vocal languages must have begun diversifying in our species at

least 100,000 years ago.

More recently – in 2012 – anthropologists Charles Perreault and

Sarah Mathew used phonemic diversity to suggest a date consistent with

this.

"Phonemic diversity" denotes the number of perceptually distinct units

of sound – consonants, vowels and tones – in a language. The current

worldwide pattern of phonemic diversity potentially contains the

statistical signal of the expansion of modern Homo sapiens out of

Africa, beginning around 60-70 thousand years ago. Some scholars argue

that phonemic diversity evolves slowly and can be used as a clock to

calculate how long the oldest African languages would have to have been

around in order to accumulate the number of phonemes they possess today.

As human populations left Africa and expanded into the rest of the

world, they underwent a series of bottlenecks – points at which only a

very small population survived to colonise a new continent or region.

Allegedly, each such population crash led to a corresponding reduction in

genetic, phenotypic and phonemic diversity. African languages

today have some of the largest phonemic inventories in the world,

whilst the smallest inventories are found in South America and Oceania,

some of the last regions of the globe to be colonised. For example, Rotokas, a language of New Guinea, and Pirahã, spoken in South America, both have just 11 phonemes, whilst !Xun, a language spoken in Southern Africa, has 141 phonemes.

Perreault and Mathew use a natural experiment – the colonization of mainland Southeast Asia on the one hand, the long-isolated Andaman Islands

on the other – to estimate the rate at which phonemic diversity

increases through time. Using this rate, they estimate that the world's

languages date back to the Middle Stone Age

in Africa, sometime between 350 thousand and 150 thousand years ago.

This corresponds to the speciation event which gave rise to Homo sapiens.

These and similar studies have, however, been criticised by

linguists who argue that they are based on a flawed analogy between

genes and phonemes, since phonemes, unlike genes, are frequently transferred laterally

between languages, and on a flawed sampling of the world's

languages, since both Oceania and the Americas also contain languages

with very high numbers of phonemes, and Africa contains languages with

very few. They argue that the actual distribution of phonemic diversity

in the world reflects recent language contact and not deep language

history, since it is well demonstrated that languages can lose or gain

many phonemes over very short periods. In other words, there is no valid

linguistic reason to expect genetic founder effects to influence

phonemic diversity.

Speculative scenarios

Early speculations

"I cannot doubt that language owes its origin to the imitation and

modification, aided by signs and gestures, of various natural sounds,

the voices of other animals, and man's own instinctive cries."

— Charles Darwin, 1871. The Descent of Man, and Selection in Relation to Sex.

In 1861, historical linguist Max Müller published a list of speculative theories concerning the origins of spoken language:

These theories have been grouped under the category of invention

hypotheses. They were all meant to explain how the first

language could have developed, and they postulate that human mimicry of

natural sounds was how the first words with meaning were derived.

- Bow-wow. The bow-wow or cuckoo theory, which Müller attributed to the German philosopher Johann Gottfried Herder,

saw early words as imitations of the cries of beasts and birds. This

theory, believed to be derived from onomatopoeia, relates the meaning of

the sound to the actual sound formulated by the speaker.

- Pooh-pooh. The Pooh-Pooh theory saw the first words as emotional interjections and exclamations

triggered by pain, pleasure, surprise and so on. Such sounds are typically

produced on sudden intakes of breath, whereas spoken language is produced on the

exhale. The sounds involved in emotional reactions are therefore

unlike those used in normal speech production, which makes this theory a

less plausible account of how language arose.

- Ding-dong. Müller suggested what he called the Ding-Dong

theory, which states that all things have a vibrating natural

resonance, echoed somehow by man in his earliest words. Words are

derived from the sound associated with their meaning; for example, “crash became a word for thunder, boom for explosion.” This theory also heavily relies on the concept of onomatopoeia.

- Yo-he-ho. The yo-he-ho theory saw language emerging

out of collective rhythmic labor, the attempt to synchronize muscular

effort resulting in sounds such as heave alternating with sounds such as ho.

Believed to be derived from the basis of human collaborative efforts,

this theory states that humans needed words, which might have started

off as chanting, to communicate. This need could have been to ward off

predators, or served as a unifying battle cry.

- Ta-ta. This did not feature in Max Müller's list, having been proposed in 1930 by Sir Richard Paget.[93] According to the ta-ta theory, humans made the earliest words by tongue movements that mimicked manual gestures, rendering them audible.

A common thread in these theories is onomatopoeia as the first source of

words; however, this raises a problem. Onomatopoeia can

explain the first few words derived from natural phenomena,

but it offers no explanation of how more complex words without a

natural counterpart came to be.

Most scholars today consider all such theories not so much wrong – they

occasionally offer peripheral insights – as drastically limited.

These theories are too narrowly mechanistic to comprehensively explain

the origin of language. They assume that once the ancestors of humans

had stumbled upon the appropriate ingenious mechanism for linking sounds with meanings, language automatically evolved and changed.

Problems of reliability and deception

From the perspective of modern science, the main obstacle to the evolution

of speech-like communication in nature is not a mechanistic one. Rather,

it is that symbols – arbitrary associations of sounds with

corresponding meanings – are unreliable and may well be false. As the saying goes, "words are cheap". The problem of reliability was not recognised at all by Darwin, Müller or the other early evolutionist theorists.

Animal vocal signals are for the most part intrinsically

reliable. When a cat purrs, the signal constitutes direct evidence of

the animal's contented state. One can "trust" the signal not because the

cat is inclined to be honest, but because it just can't fake that

sound. Primate vocal calls may be slightly more manipulable, but they remain reliable for the same reason – because they are hard to fake. Primate social intelligence is Machiavellian –

self-serving and unconstrained by moral scruples. Monkeys and apes

often attempt to deceive one another, whilst at the same time remaining

constantly on guard against falling victim to deception themselves.

Paradoxically, it is precisely primates' resistance to deception that

blocks the evolution of their vocal communication systems along

language-like lines. Language is ruled out because the best way to guard

against being deceived is to ignore all signals except those that are

instantly verifiable. Words automatically fail this test.

Words are easy to fake. Should they turn out to be lies,

listeners will adapt by ignoring them in favour of hard-to-fake indices

or cues. For language to work, then, listeners must be confident that

those with whom they are on speaking terms are generally likely to be

honest.

A peculiar feature of language is "displaced reference", which means

reference to topics outside the currently perceptible situation. This

property prevents utterances from being corroborated in the immediate

"here" and "now". For this reason, language presupposes relatively high

levels of mutual trust in order to become established over time as an evolutionarily stable strategy.

A theory of the origins of language must, therefore, explain why humans

could begin trusting cheap signals in ways that other animals

apparently cannot (see signalling theory).

"Kin selection"

The "mother tongues" hypothesis was proposed in 2004 as a possible solution to this problem. W. Tecumseh Fitch suggested that the Darwinian principle of "kin selection" –

the convergence of genetic interests between relatives – might be part

of the answer. Fitch suggests that spoken languages were originally

"mother tongues". If speech evolved initially for communication between

mothers and their own biological offspring, extending later to include

adult relatives as well, the interests of speakers and listeners would

have tended to coincide. Fitch argues that shared genetic interests

would have led to sufficient trust and cooperation for intrinsically

unreliable vocal signals – spoken words – to become accepted as

trustworthy and so begin evolving for the first time.

Criticism

Critics of this theory point out that kin selection is not unique to humans.

Ape mothers also share genes with their offspring, as do all animals, so

why is it only humans who speak? Furthermore, it is difficult to

believe that early humans restricted linguistic communication to genetic

kin: the incest taboo must have forced men and women to interact and

communicate with non-kin. The extension of the posited "mother tongue"

networks from relatives to non-relatives remains unexplained.

"Reciprocal altruism"

Ib Ulbæk invokes another standard Darwinian principle – "reciprocal altruism" –

to explain the unusually high levels of intentional honesty necessary

for language to evolve. 'Reciprocal altruism' can be expressed as the

principle that if you scratch my back, I'll scratch yours. In linguistic terms, it would mean that if you speak truthfully to me, I'll speak truthfully to you.

Ordinary Darwinian reciprocal altruism, Ulbæk points out, is a

relationship established between frequently interacting individuals. For

language to prevail across an entire community, however, the necessary

reciprocity would have needed to be enforced universally instead of

being left to individual choice. Ulbæk concludes that for language to

evolve, early society as a whole must have been subject to moral

regulation.

Criticism

Critics point out that this theory fails to explain when, how, why or by whom

"obligatory reciprocal altruism" could possibly have been enforced.

Various proposals have been offered to remedy this defect.

A further criticism is that language doesn't work on the basis of

reciprocal altruism anyway. Humans in conversational groups don't

withhold information from all except listeners likely to offer valuable

information in return. On the contrary, they seem to want to advertise

to the world their access to socially relevant information, broadcasting

it to anyone who will listen without thought of return.

"Gossip and grooming"

Gossip, according to Robin Dunbar, does for group-living humans what manual grooming

does for other primates – it allows individuals to service their

relationships and so maintain their alliances. As humans began living in

larger and larger social groups, the task of manually grooming all

one's friends and acquaintances became so time-consuming as to be

unaffordable. In response to this problem, humans invented "a cheap and

ultra-efficient form of grooming" – vocal grooming. To keep your

allies happy, you now needed only to "groom" them with low-cost vocal

sounds, servicing multiple allies simultaneously whilst keeping both

hands free for other tasks. Vocal grooming (the production of pleasing

sounds lacking syntax or combinatorial semantics) then evolved somehow

into syntactical speech.

Criticism

Critics of this theory point out that the very efficiency of "vocal grooming" –

that words are so cheap – would have undermined its capacity to signal

commitment of the kind conveyed by time-consuming and costly manual

grooming.

A further criticism is that the theory does nothing to explain the

crucial transition from vocal grooming – the production of pleasing but

meaningless sounds – to the cognitive complexities of syntactical

speech.

From pantomime to speech

According to another school of thought, language evolved from mimesis – the "acting out" of scenarios using vocal and gestural pantomime.

Charles Darwin, who himself was skeptical, hypothesised that human

speech and language are derived from gestures and mouth pantomime. This theory, further elaborated by various authors, postulates that the genus Homo,

unlike our ape ancestors, evolved a new type of cognition. Apes

are capable of associational learning. They can tie a sensory cue to a

motor response, often one trained through classical conditioning.

However, in apes, the conditioned sensory cue is necessary for a

conditioned response to be observed again. The motor response will not

occur without an external cue from an outside agent. A remarkable

ability that humans possess is the ability to voluntarily retrieve

memories without the need for a cue (e.g. conditioned stimulus). This is

not an ability that has been observed in animals except

language-trained apes. There is still much controversy over whether

apes, whether wild or captive, are capable of pantomime.

For as long as utterances needed to be emotionally expressive and

convincing, it was not possible to complete the transition to purely

conventional signs. On this assumption, pre-linguistic gestures and vocalisations would

have been required not just to disambiguate intended meanings, but also

to inspire confidence in their intrinsic reliability. If contractual commitments

were necessary in order to inspire community-wide trust in

communicative intentions, it would follow that these had to be in place

before humans could shift at last to an ultra-efficient, high-speed –

digital as opposed to analog – signalling format. Vocal distinctive features

(sound contrasts) are ideal for this purpose. It is therefore suggested

that the establishment of contractual understandings enabled the

decisive transition from mimetic gesture to fully conventionalised,

digitally encoded speech.

"Ritual/speech coevolution"

The ritual/speech coevolution theory was originally proposed by the distinguished social anthropologist Roy Rappaport before being elaborated by anthropologists such as Chris Knight, Jerome Lewis, Nick Enfield, Camilla Power and Ian Watts. Cognitive scientist and robotics engineer Luc Steels is another prominent supporter of this general approach, as is biological anthropologist/neuroscientist Terrence Deacon.

These scholars argue that there can be no such thing as a "theory

of the origins of language". This is because language is not a separate

adaptation but an internal aspect of something much wider – namely,

human symbolic culture as a whole.

Attempts to explain language independently of this wider context have

spectacularly failed, say these scientists, because they are addressing a

problem with no solution. Can we imagine a historian attempting to

explain the emergence of credit cards independently of the wider system

of which they are a part? Using a credit card makes sense only if you

have a bank account institutionally recognised within a certain kind of

advanced capitalist society – one where communications technology has

already been invented and fraud can be detected and prevented. In much

the same way, language would not work outside a specific array of social

mechanisms and institutions. For example, it would not work for an ape

communicating with other apes in the wild. Not even the cleverest ape

could make language work under such conditions.

"Lie and alternative, inherent in

language, ... pose problems to any society whose structure is founded on

language, which is to say all human societies. I have therefore argued

that if there are to be words at all it is necessary to establish The Word, and that The Word is established by the invariance of liturgy."

Advocates of this school of thought point out that words are cheap. As

digital hallucinations, they are intrinsically unreliable. Should an

especially clever ape, or even a group of articulate apes, try to use

words in the wild, they would carry no conviction. The primate

vocalizations that do carry conviction – those they actually

use – are unlike words, in that they are emotionally expressive,

intrinsically meaningful and reliable because they are relatively costly

and hard to fake.

Speech consists of digital contrasts whose cost is essentially

zero. As pure social conventions, signals of this kind cannot evolve in a

Darwinian social world – they are a theoretical impossibility.

Being intrinsically unreliable, language works only if you can build up

a reputation for trustworthiness within a certain kind of society –

namely, one where symbolic cultural facts (sometimes called

"institutional facts") can be established and maintained through

collective social endorsement. In any hunter-gatherer society, the basic mechanism for establishing trust in symbolic cultural facts is collective ritual.

Therefore, the task facing researchers into the origins of language is

more multidisciplinary than is usually supposed. It involves addressing

the evolutionary emergence of human symbolic culture as a whole, with

language an important but subsidiary component.

Criticism

Critics of the theory include Noam Chomsky,

who terms it the "non-existence" hypothesis – a denial of the very

existence of language as an object of study for natural science. Chomsky's own theory is that language emerged in an instant and in perfect form,

prompting his critics in turn to retort that only something that

doesn't exist – a theoretical construct or convenient scientific

fiction – could possibly emerge in such a miraculous way. The controversy remains unresolved.

Twentieth century speculations

Festal origins

The essay "The festal origin of human speech", though published in the late nineteenth century, made little impact until the American philosopher Susanne Langer re-discovered and publicised it in 1941.

"In the early history of articulate sounds they could make no meaning

themselves, but they preserved and got intimately associated with the

peculiar feelings and perceptions that came most prominently into the

minds of the festal players during their excitement."

— J. Donovan, 1891. The Festal Origin of Human Speech.

The theory sets out from the observation that primate vocal sounds are above all emotionally

expressive. The emotions aroused are socially contagious. Because of

this, an extended bout of screams, hoots or barks will tend to express

not just the feelings of this or that individual but the mutually

contagious ups and downs of everyone within earshot.

Turning to the ancestors of Homo sapiens, the "festal

origin" theory suggests that in the "play-excitement" preceding or

following a communal hunt or other group activity, everyone might have

combined their voices in a comparable way, emphasizing their mood of

togetherness with such noises as rhythmic drumming and hand-clapping.

Variably pitched voices would have formed conventional patterns, such

that choral singing became an integral part of communal celebration.

Although this was not yet speech, according to Langer, it

developed the vocal capacities from which speech would later derive.

There would be conventional modes of ululating, clapping or dancing

appropriate to different festive occasions, each so intimately

associated with that kind of occasion that it would tend to

collectively uphold and embody the concept of it. Anyone hearing a

snatch of sound from such a song would recall the associated occasion

and mood. A melodic, rhythmic sequence of syllables conventionally

associated with a certain type of celebration would become, in effect,

its vocal mark. On that basis, certain familiar sound sequences would

become "symbolic".

In support of all this, Langer cites ethnographic reports of

tribal songs consisting entirely of "rhythmic nonsense syllables". She

concedes that an English equivalent such as "hey-nonny-nonny", although

perhaps suggestive of certain feelings or ideas, is neither noun, verb,

adjective, nor any other syntactical part of speech. So long as

articulate sound served only in the capacity of "hey nonny-nonny",

"hallelujah" or "alack-a-day", it cannot yet have been speech. For that

to arise, according to Langer, it was necessary for such sequences to be

emitted increasingly out of context – outside the total

situation that gave rise to them. Extending a set of associations from

one cognitive context to another, completely different one, is the

secret of metaphor. Langer invokes an early version of what is

nowadays termed "grammaticalization" theory to show how, from such a

point of departure, syntactically complex speech might progressively

have arisen.

Langer acknowledges Emile Durkheim as having proposed a strikingly similar theory back in 1912. For recent thinking along broadly similar lines, see Steven Brown on "musilanguage", Chris Knight on "ritual" and "play", Jerome Lewis on "mimicry", Steven Mithen on "Hmmmmm", Bruce Richman on "nonsense syllables" and Alison Wray on "holistic protolanguage".

Mirror neuron hypothesis (MSH) and the Motor Theory of Speech Perception

Mirror neurons, originally found in the macaque monkey, are neurons that are

activated both when an individual performs an action and when it observes

the same action performed by another. A similar mechanism has been

proposed in humans.

The mirror neuron hypothesis, based on a phenomenon described in

2008 by Rizzolatti and Fabbri, supports the motor theory of speech

perception. The motor theory of speech perception was proposed in 1967

by Liberman, who believed that the motor system and language systems

were closely interlinked.

This would result in a more streamlined process of generating speech;

both the cognition and speech formulation could occur simultaneously.

Essentially, it is wasteful to have a speech decoding and speech

encoding process independent of each other. This hypothesis was further

supported by the discovery of mirror neurons. Rizzolatti and Fabbri found

that there were specific neurons in the motor cortex of macaque monkeys

which were activated when seeing an action.

The neurons which are activated are the same neurons that would be

required for the observer to perform that action. Mirror neurons fire when

observing an action and performing an action, indicating that these

neurons found in the motor cortex are necessary for understanding a

visual process.

The presence of mirror neurons may indicate that non-verbal, gestural

communication is far more ancient than previously thought. Motor

theory of speech perception relies on the understanding of motor

representations that underlie speech gestures, such as lip movement.

There is no clear understanding of speech perception currently, but it

is generally accepted that the motor cortex is activated in speech

perception to some capacity.

"Musilanguage"

The term "musilanguage" (or "hmmmmm") refers to a pre-linguistic system of

vocal communication from which (according to some scholars) both music and

language later derived. The idea is that rhythmic, melodic, emotionally

expressive vocal ritual helped bond coalitions and, over time, set up

selection pressures for enhanced volitional control over the speech

articulators. Patterns of synchronized choral chanting are imagined to

have varied according to the occasion. For example, "we're setting off

to find honey" might sound qualitatively different from "we're setting

off to hunt" or "we're grieving over our relative's death". If social

standing depended on maintaining a regular beat and harmonizing one's

own voice with that of everyone else, group members would have come

under pressure to demonstrate their choral skills.

Archaeologist Steven Mithen

speculates that the Neanderthals possessed some such system, expressing

themselves in a "language" known as "Hmmmmm", standing for Holistic, manipulative, multi-modal, musical and mimetic. In Bruce Richman's earlier version of essentially the same idea,

frequent repetition of the same few songs by many voices made it easy

for people to remember those sequences as whole units. Activities that a

group of people were doing whilst they were vocalizing together –

activities that were important or striking or richly emotional – came to

be associated with particular sound sequences, so that each time a

fragment was heard, it evoked highly specific memories. The idea is that

the earliest lexical items (words) started out as abbreviated fragments

of what were originally communal songs.

"Whenever people sang or chanted a

particular sound sequence they would remember the concrete particulars

of the situation most strongly associated with it: ah, yes! we sing this

during this particular ritual admitting new members to the group; or,

we chant this during a long journey in the forest; or, when a clearing

is finished for a new camp, this is what we chant; or these are the

keenings we sing during ceremonies over dead members of our group."

— Richman,

B. 2000. How music fixed "nonsense" into significant formulas: on

rhythm, repetition, and meaning. In N. L. Wallin, B. Merker and S. Brown

(eds), The Origins of Music: An introduction to evolutionary musicology. Cambridge, Massachusetts: MIT Press, pp. 301-314.

As group members accumulated an expanding repertoire of songs for

different occasions, interpersonal call-and-response patterns evolved

along one trajectory to assume linguistic form. Meanwhile, along a

divergent trajectory, polyphonic singing and other kinds of music became

increasingly specialised and sophisticated.

To explain the establishment of syntactical speech, Richman cites

English "I wanna go home". He imagines this to have been learned in the

first instance not as a combinatorial sequence of free-standing words,

but as a single stuck-together combination – the melodic sound people

make to express "feeling homesick". Someone might sing "I wanna go

home", prompting other voices to chime in with "I need to go home", "I'd

love to go home", "Let's go home" and so forth. Note that one part of

the song remains constant, whilst another is permitted to vary. If this

theory is accepted, syntactically complex speech began evolving as each

chanted mantra allowed for variation at a certain point, permitting

the insertion of an element from some other song. For example, whilst

mourning during a funeral rite, someone might want to recall a memory of

collecting honey with the deceased, signaling this at an appropriate

moment with a fragment of the "we're collecting honey" song. Imagine

that such practices became common. Meaning-laden utterances would now

have become subject to a distinctively linguistic creative principle –

that of recursive embedding.

Hunter-gatherer egalitarianism

Mbendjele hunter-gatherer meat sharing

Many scholars associate the evolutionary emergence of speech with

profound social, sexual, political and cultural developments. One view

is that primate-style dominance needed to give way to a more cooperative

and egalitarian lifestyle of the kind characteristic of modern

hunter-gatherers.

Intersubjectivity

According to Michael Tomasello, the key cognitive capacity distinguishing Homo sapiens from our ape cousins is "intersubjectivity". This entails turn-taking

and role-reversal: your partner strives to read your mind, you

simultaneously strive to read theirs, and each of you makes a conscious

effort to assist the other in the process. The outcome is that each

partner forms a representation of the other's mind in which their own

can be discerned by reflection.

Tomasello argues that this kind of bi-directional cognition is

central to the very possibility of linguistic communication. Drawing on

his research with both children and chimpanzees, he reports that human

infants, from one year old onwards, begin viewing their own mind as if

from the standpoint of others. He describes this as a cognitive

revolution. Chimpanzees, as they grow up, never undergo such a

revolution. The explanation, according to Tomasello, is that their

evolved psychology is adapted to a deeply competitive way of life.

Wild-living chimpanzees form despotic social hierarchies, most

interactions involving calculations of dominance and submission. An

adult chimp will strive to outwit its rivals by guessing at their

intentions whilst blocking them from reciprocating. Since bi-directional

intersubjective communication is impossible under such conditions, the

cognitive capacities necessary for language don't evolve.

Counter-dominance

In the scenario favoured by David Erdal and Andrew Whiten, primate-style dominance provoked equal and opposite coalitionary resistance – counter-dominance.

During the course of human evolution, increasingly effective strategies

of rebellion against dominant individuals led to a compromise. Whilst

abandoning any attempt to dominate others, group members vigorously

asserted their personal autonomy, maintaining their alliances to make

potentially dominant individuals think twice. Within increasingly stable

coalitions, according to this perspective, status began to be earned in

novel ways, social rewards accruing to those perceived by their peers

as especially cooperative and self-aware.

Reverse dominance

Whilst counter-dominance, according to this evolutionary narrative, culminates in a stalemate, anthropologist Christopher Boehm

extends the logic a step further. Counter-dominance tips over at last

into full-scale "reverse dominance". The rebellious coalition decisively

overthrows the figure of the primate alpha-male. No dominance is

allowed except that of the self-organised community as a whole.

As a result of this social and political change, hunter-gatherer

egalitarianism is established. As children grow up, they are motivated

by those around them to reverse perspective, engaging with other minds

on the model of their own. Selection pressures favor such psychological

innovations as imaginative empathy, joint attention, moral judgment,

project-oriented collaboration and the ability to evaluate one's own

behaviour from the standpoint of others. Underpinning enhanced

probabilities of cultural transmission and cumulative cultural

evolution, these developments culminated in the establishment of

hunter-gatherer-style egalitarianism in association with intersubjective

communication and cognition. It is in this social and political context

that language evolves.

Scenarios involving mother-infant interactions

"Putting the baby down"

According to Dean Falk's "putting the baby down" theory, vocal interactions

between early hominin mothers and infants sparked a sequence of events

that led, eventually, to our ancestors' earliest words.

The basic idea is that evolving human mothers, unlike their monkey and

ape counterparts, couldn't move around and forage with their infants

clinging onto their backs. Loss of fur in the human case left infants

with no means of clinging on. Frequently, therefore, mothers had to put

their babies down. As a result, these babies needed reassurance that

they were not being abandoned. Mothers responded by developing

"motherese" – an infant-directed communicative system embracing facial

expressions, body language, touching, patting, caressing, laughter,

tickling and emotionally expressive contact calls. The argument is that

language somehow developed out of all this.

- Criticism

Whilst this theory may explain a certain kind of infant-directed

"protolanguage" – known today as "motherese" – it does little to solve

the really difficult problem, which is the emergence amongst adults of

syntactical speech.

Co-operative breeding

Evolutionary anthropologist Sarah Hrdy

observes that only human mothers amongst great apes are willing to let

another individual take hold of their own babies; further, we are

routinely willing to let others babysit. She identifies lack of trust as

the major factor preventing chimpanzee, bonobo or gorilla

mothers from doing the same: "If ape mothers insist on carrying their

babies everywhere ... it is because the available alternatives are not

safe enough". The fundamental problem is that ape mothers (unlike monkey

mothers who may often babysit) do not have female relatives nearby. The

strong implication is that, in the course of Homo evolution, allocare could develop because Homo mothers did have female kin close by – in the first place, most reliably, their own mothers. Extending the grandmother hypothesis, Hrdy argues that evolving Homo erectus

females necessarily relied on female kin initially; this novel

situation in ape evolution of mother, infant and mother's mother as

allocarer provided the evolutionary ground for the emergence of

intersubjectivity. She relates this onset of "cooperative breeding in an

ape" to shifts in life history and slower child development, linked to

the change in brain and body size from the two-million-year mark.

Primatologist Klaus Zuberbühler

uses these ideas to help explain the emergence of vocal flexibility in

the human species. Co-operative breeding would have compelled infants to

struggle actively to gain the attention of caregivers, not all of whom

would have been directly related. A basic primate repertoire of vocal

signals may have been insufficient for this social challenge. Natural

selection, according to this view, would have favoured babies with

advanced vocal skills, beginning with babbling (which triggers positive

responses in care-givers) and paving the way for the elaborate and

unique speech abilities of modern humans.

Was "mama" the first word?

These ideas might be linked to those of the renowned structural linguist

Roman Jakobson, who claimed that "the sucking activities of the child

are accompanied by a slight nasal murmur, the only phonation to be

produced when the lips are pressed to the mother's breast ... and the

mouth is full".

He proposed that later in the infant's development, "this phonatory

reaction to nursing is reproduced as an anticipatory signal at the mere

sight of food and finally as a manifestation of a desire to eat, or more

generally, as an expression of discontent and impatient longing for

missing food or absent nurser, and any ungranted wish". So, the action

of opening and shutting the mouth, combined with the production of a

nasal sound when the lips are closed, yielded the sound sequence "Mama",

which may, therefore, count as the very first word. Peter MacNeilage

sympathetically discusses this theory in his major book, The Origin of Speech, linking it with Dean Falk's "putting the baby down" theory (see above). Needless to say, other scholars have suggested completely different candidates for Homo sapiens' very first word.

Niche construction theory

A beaver dam in Tierra del Fuego. Beavers adapt to an environmental niche which they shape by their own activities.

Whilst the biological language faculty is genetically inherited, actual

languages or dialects are culturally transmitted, as are social norms,

technological traditions and so forth. Biologists expect a robust

co-evolutionary trajectory linking human genetic evolution with the

evolution of culture.

Individuals capable of rudimentary forms of protolanguage would have

enjoyed enhanced access to cultural understandings, whilst these,

conveyed in ways that young brains could readily learn, would, in turn,

have become transmitted with increasing efficiency.

In some ways like beavers, as they construct their dams, humans have always engaged in niche construction,

creating novel environments to which they subsequently become adapted.

Selection pressures associated with prior niches tend to become relaxed

as humans depend increasingly on novel environments created continuously

by their own productive activities. According to Steven Pinker,

language is an adaptation to "the cognitive niche". Variations on the

theme of ritual/speech co-evolution – according to which speech evolved

for purposes of internal communication within a ritually constructed

domain – have attempted to specify more precisely when, why and how this

special niche was created by human collaborative activity.

Conceptual frameworks

Structuralism

"Consider a knight in chess. Is the piece by itself an element of the game?

Certainly not. For as a material object, separated from its square on

the board and the other conditions of play, it is of no significance for

the player. It becomes a real, concrete element only when it takes on

or becomes identified with its value in the game. Suppose that during a

game this piece gets destroyed or lost. Can it be replaced? Of course,

it can. Not only by some other knight but even by an object of quite a

different shape, which can be counted as a knight, provided it is

assigned the same value as the missing piece."

— de Saussure, F. (1983) [1916]. Course in General Linguistics. Translated by R. Harris. London: Duckworth. pp. 108–09.

The Swiss scholar Ferdinand de Saussure

founded linguistics as a twentieth-century professional discipline.

Saussure regarded a language as a rule-governed system, much like a

board game such as chess. In order to understand chess, he insisted, we

must ignore such external factors as the weather prevailing during a

particular session or the material composition of this or that piece.

The game is autonomous with respect to its material embodiments. In the

same way, when studying language, it is essential to focus on its

internal structure as a social institution. External matters (e.g., the shape of the human tongue) are irrelevant from this standpoint. Saussure regarded "speaking" (parole) as individual, ancillary and more or less accidental by comparison with "language" (langue), which he viewed as collective, systematic and essential.

Saussure showed little interest in Darwin's theory of evolution

by natural selection. Nor did he consider it worthwhile to speculate

about how language might originally have evolved. Saussure's assumptions

in fact cast doubt on the validity of narrowly conceived origins

scenarios. His structuralist paradigm, when accepted in its original

form, turns scholarly attention to a wider problem: how our species

acquired the capacity to establish social institutions in general.

Behaviourism

"The basic processes and relations

which give verbal behavior its special characteristics are now fairly

well understood. Much of the experimental work responsible for this

advance has been carried out on other species, but the results have

proved to be surprisingly free of species restrictions. Recent work has

shown that the methods can be extended to human behavior without serious

modification."

— Skinner, B.F. (1957). Verbal Behavior. New York: Appleton Century Crofts. p. 3.

In the United States, prior to and immediately following World War II, the dominant psychological paradigm was behaviourism. Within this conceptual framework, language was seen as a certain kind of behaviour – namely, verbal behaviour,

to be studied much like any other kind of behaviour in the animal

world. Rather as a laboratory rat learns how to find its way through an

artificial maze, so a human child learns the verbal behaviour of the

society into which it is born. The phonological, grammatical and other

complexities of speech are in this sense "external" phenomena, inscribed

into an initially unstructured brain. Language's emergence in Homo sapiens,

from this perspective, presents no special theoretical challenge. Human

behaviour, whether verbal or otherwise, illustrates the malleable

nature of the mammalian – and especially the human – brain.

Chomskyan Nativism

Nativism is the theory that humans are born with certain specialised cognitive modules enabling us to acquire highly complex bodies of knowledge such as the grammar of a language.

"There is a long history of

study of the origin of language, asking how it arose from calls of apes

and so forth. That investigation in my view is a complete waste of time

because language is based on an entirely different principle than any

animal communication system."

— Chomsky, N. (1988). Language and Problems of Knowledge. Cambridge, Massachusetts: MIT Press. p. 183.

From the mid-1950s onwards, Noam Chomsky, Jerry Fodor and others mounted what they conceptualised as a "revolution" against behaviourism. Retrospectively, this became labelled "the cognitive revolution".

Whereas behaviourism had denied the scientific validity of the concept

of "mind", Chomsky replied that, in fact, the concept of "body" is more

problematic. Behaviourists tended to view the child's brain as a tabula rasa,

initially lacking structure or cognitive content. According to B. F.

Skinner, for example, richness of behavioural detail (whether verbal or

non-verbal) emanated from the environment. Chomsky turned this idea on

its head. The linguistic environment encountered by a young child,

according to Chomsky's version of psychological nativism,

is in fact hopelessly inadequate. No child could possibly acquire the

complexities of grammar from such an impoverished source.

Far from viewing language as wholly external, Chomsky re-conceptualised

it as wholly internal. To explain how a child so rapidly and

effortlessly acquires its natal language, he insisted, we must conclude

that it comes into the world with the essentials of grammar already

pre-installed.

No other species, according to Chomsky, is genetically equipped with a

language faculty – or indeed with anything remotely like one. The emergence of such a faculty in Homo sapiens, from this standpoint, presents biological science with a major theoretical challenge.

Speech act theory

One way to explain biological complexity is by reference to its inferred function. According to the influential philosopher John Austin, speech's primary function is active in the social world.

Speech acts, according to this body of theory, can be analyzed on three different

levels: locutionary, illocutionary and perlocutionary. An act is locutionary