From Wikipedia, the free encyclopedia

In computer science and information science, an ontology encompasses a representation, formal naming and definition of the categories, properties and relations between the concepts, data and entities that substantiate one, many or all domains of discourse.

More simply, an ontology is a way of showing the properties of a subject area and how they are related, by defining a set of concepts and categories that represent the subject.

Every academic discipline or field creates ontologies to limit complexity and organize data into information and knowledge. Each new ontology improves problem solving within its domain. In every field, translating research papers becomes easier when experts from different countries maintain a controlled vocabulary of jargon across their languages.

Etymology

The compound word ontology combines onto-, from the Greek ὄν, on (gen. ὄντος, ontos), i.e. "being; that which is", which is the present participle of the verb εἰμί, eimí, i.e. "to be, I am", and -λογία, -logia, i.e. "logical discourse"; see classical compounds for this type of word formation.

Overview

What ontologies in both information science and philosophy have in common is the attempt to represent entities, ideas and events, with all their interdependent properties and relations, according to a system of categories. In both fields, there is considerable work on problems of ontology engineering (e.g., Quine and Kripke in philosophy, Sowa and Guarino in computer science), and debate over the extent to which normative ontology is possible (e.g., foundationalism and coherentism in philosophy, BFO and Cyc in artificial intelligence).

Applied ontology is considered a spiritual successor to prior work in philosophy. However, many current efforts are more concerned with establishing controlled vocabularies of narrow domains than with first principles, the existence of fixed essences, or whether enduring objects (e.g., perdurantism and endurantism) may be ontologically more primary than processes.

History

Ontologies arise out of the branch of philosophy known as metaphysics, which deals with questions like "what exists?" and "what is the nature of reality?". Metaphysics is one of the five traditional branches of philosophy. It explores existence through properties, entities and relations, such as those between particulars and universals, intrinsic and extrinsic properties, or essence and existence. Metaphysics has been an ongoing topic of discussion since the beginning of recorded history.

Since the mid-1970s, researchers in the field of artificial intelligence (AI) have recognized that knowledge engineering is the key to building large and powerful AI systems. AI researchers argued that they could create new ontologies as computational models that enable certain kinds of automated reasoning. This approach was only marginally successful. In the 1980s, the AI community began to use the term ontology to refer both to a theory of a modeled world and to a component of knowledge-based systems. In particular, David Powers introduced the word ontology to AI to refer to real-world or robotic grounding. In 1990 he published literature reviews emphasizing grounded ontology, in association with the call for papers for an AAAI Summer Symposium, Machine Learning of Natural Language and Ontology. An expanded version was published in the SIGART Bulletin and included as a preface to the proceedings.

Some researchers, drawing inspiration from philosophical ontologies, viewed computational ontology as a kind of applied philosophy.

In 1993, Tom Gruber published the widely cited web page and paper "Toward Principles for the Design of Ontologies Used for Knowledge Sharing". It used ontology as a technical term in computer science, closely related to the earlier ideas of semantic networks and taxonomies. Gruber introduced the term as a specification of a conceptualization:

An ontology is a description (like a formal specification of a program) of the concepts and relationships that can formally exist for an agent or a community of agents. This definition is consistent with the usage of ontology as set of concept definitions, but more general. And it is a different sense of the word than its use in philosophy.

Ontologies are often equated with taxonomic hierarchies of classes, class definitions, and the subsumption relation, but ontologies need not be limited to these forms. Ontologies are also not limited to conservative definitions, that is, definitions in the traditional logic sense that only introduce terminology and do not add any knowledge about the world. To specify a conceptualization, one needs to state axioms that do constrain the possible interpretations for the defined terms.
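The point about axioms can be made concrete with a toy model. The following is a minimal Python sketch, assuming an "interpretation" simply assigns each individual to a set of classes. A bare taxonomy (subsumption only) accepts a reading in which one individual is both a cat and a dog. Adding a disjointness axiom rules that reading out. The class names, the `Interpretation` alias and the helper functions are hypothetical illustrations, not constructs of any ontology language.

```python
from typing import Dict, Set, Tuple

# Hypothetical toy model: an interpretation maps each individual to its classes.
Interpretation = Dict[str, Set[str]]

# Taxonomy only: (subclass, superclass) pairs.
SUBCLASS_OF: Set[Tuple[str, str]] = {("Cat", "Animal"), ("Dog", "Animal")}

# An extra axiom (hypothetical): Cat and Dog have no members in common.
DISJOINT: Set[Tuple[str, str]] = {("Cat", "Dog")}


def satisfies_taxonomy(interp: Interpretation) -> bool:
    """Every member of a subclass must also be a member of its superclass."""
    return all(
        sup in classes
        for classes in interp.values()
        for sub, sup in SUBCLASS_OF
        if sub in classes
    )


def satisfies_disjointness(interp: Interpretation) -> bool:
    """No individual may belong to both classes of a disjoint pair."""
    return all(
        not (a in classes and b in classes)
        for classes in interp.values()
        for a, b in DISJOINT
    )


# An unintended reading: "tom" is both a cat and a dog.
odd_world: Interpretation = {"tom": {"Cat", "Dog", "Animal"}}

print(satisfies_taxonomy(odd_world))      # True: the taxonomy alone allows it
print(satisfies_disjointness(odd_world))  # False: the axiom excludes it
```

The taxonomy constrains the interpretation only weakly. It is the added axiom that narrows the admissible interpretations toward the intended one.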

As a refinement of Gruber's definition, Feilmayr and Wöß (2016) stated: "An ontology is a formal, explicit specification of a shared conceptualization that is characterized by high semantic expressiveness required for increased complexity."

Components

Contemporary ontologies share many structural similarities, regardless of the language in which they are expressed. Most ontologies describe individuals (instances), classes (concepts), attributes and relations.

Common components of ontologies include:

- Individuals: instances or objects (the basic or "ground level" objects)

- Classes: sets, collections, concepts, classes in programming, types of objects or kinds of things

- Attributes: aspects, properties, features, characteristics or parameters that objects (and classes) can have

- Relations: ways in which classes and individuals can be related to one another

- Function terms: complex structures formed from certain relations that can be used in place of an individual term in a statement

- Restrictions: formally stated descriptions of what must be true in order for some assertion to be accepted as input

- Rules: statements in the form of an if-then (antecedent-consequent) sentence that describe the logical inferences that can be drawn from an assertion in a particular form

- Axioms: assertions (including rules) in a logical form that together comprise the overall theory that the ontology describes in its domain of application. This definition differs from that of "axioms" in generative grammar and formal logic. In those disciplines, axioms include only statements asserted as a priori knowledge. As used here, "axioms" also include the theory derived from axiomatic statements.

- Events: the changing of attributes or relations
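Several of these components can be sketched with plain Python data structures. The example below is a minimal illustration set in a small hypothetical "pets" domain. It models classes with a subclass relation, individuals with attributes, a relation between individuals, and one if-then rule (transitivity of subsumption) used to infer class membership. Real ontology languages such as OWL express all of this far more richly. Every class, individual and method name here is invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Ontology:
    # Classes and subsumption: child class -> direct parent classes.
    subclass_of: Dict[str, Set[str]] = field(default_factory=dict)
    # Individuals and the classes they are directly asserted to belong to.
    instance_of: Dict[str, Set[str]] = field(default_factory=dict)
    # Attributes: individual -> {attribute name: value}.
    attributes: Dict[str, Dict[str, object]] = field(default_factory=dict)
    # Relations between individuals as (subject, relation, object) triples.
    relations: List[Tuple[str, str, str]] = field(default_factory=list)

    def superclasses(self, cls: str) -> Set[str]:
        """Rule: if A is a subclass of B and B of C, then A is a subclass of C."""
        result: Set[str] = set()
        stack = list(self.subclass_of.get(cls, set()))
        while stack:
            parent = stack.pop()
            if parent not in result:
                result.add(parent)
                stack.extend(self.subclass_of.get(parent, set()))
        return result

    def classes_of(self, individual: str) -> Set[str]:
        """Rule: a member of a class is a member of all its superclasses."""
        direct = self.instance_of.get(individual, set())
        inferred = set(direct)
        for cls in direct:
            inferred |= self.superclasses(cls)
        return inferred


# Hypothetical example data.
onto = Ontology()
onto.subclass_of = {"Dog": {"Mammal"}, "Mammal": {"Animal"}, "Person": {"Mammal"}}
onto.instance_of = {"rex": {"Dog"}, "alice": {"Person"}}
onto.attributes = {"rex": {"age": 3}}
onto.relations = [("alice", "owns", "rex")]

print(sorted(onto.classes_of("rex")))  # ['Animal', 'Dog', 'Mammal']
```

Only "rex is a Dog" is asserted. The rule infers that rex is also a Mammal and an Animal, which is the kind of inference the "Rules" component describes.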

Types

Domain ontology

A domain ontology (or domain-specific ontology) represents concepts which belong to a realm of the world, such as biology or politics. Each domain ontology typically models domain-specific definitions of terms. For example, the word card has many different meanings. An ontology about the domain of poker would model the "playing card" meaning of the word, while an ontology about the domain of computer hardware would model the "punched card" and "video card" meanings.
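One common way to keep such senses apart is to give each domain ontology its own namespace, so that the same label resolves to different concept identifiers. The sketch below assumes two hypothetical namespaces, `http://example.org/poker#` and `http://example.org/hardware#`. These are placeholders, not real vocabularies.

```python
from typing import Dict, List

# Hypothetical namespaces for two domain ontologies.
POKER = "http://example.org/poker#"
HARDWARE = "http://example.org/hardware#"

# Each domain ontology maps a label to the concept identifiers it denotes there.
DOMAIN_ONTOLOGIES: Dict[str, Dict[str, List[str]]] = {
    "poker": {"card": [POKER + "PlayingCard"]},
    "hardware": {"card": [HARDWARE + "PunchedCard", HARDWARE + "VideoCard"]},
}


def senses(label: str, domain: str) -> List[str]:
    """Return the concepts a label denotes within one domain ontology."""
    return DOMAIN_ONTOLOGIES.get(domain, {}).get(label, [])


print(senses("card", "poker"))     # ['http://example.org/poker#PlayingCard']
print(senses("card", "hardware"))  # the two hardware senses
print(senses("card", "biology"))   # [] (no such domain ontology here)
```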

Because domain ontologies are written by different people, they represent concepts in very specific and unique ways, and they are often incompatible even within the same project. As systems that rely on domain ontologies expand, they often need to merge domain ontologies, either by hand-tuning each entity or by combining software merging with hand-tuning. This presents a challenge to the ontology designer. Different ontologies in the same domain arise from different languages, different intended uses of the ontologies, and different perceptions of the domain (based on cultural background, education, ideology, etc.).

At present, merging ontologies that are not developed from a common upper ontology is a largely manual process and therefore time-consuming and expensive. Merging takes less effort when the domain ontologies share an upper ontology that supplies the basic elements used to specify the meanings of their entities. There are studies on generalized techniques for merging ontologies, but this area of research is still ongoing. Only recently has the issue been sidestepped by having multiple domain ontologies use the same upper ontology, as in the OBO Foundry.
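A small sketch shows why a shared upper ontology helps. Assume each domain ontology maps its own classes onto classes of a common upper ontology. Candidate alignments between two domain ontologies can then be proposed by grouping classes that share an upper-level anchor. The upper-level names `Process`, `Organism` and `MaterialEntity` are placeholders, not identifiers from any specific upper ontology. The grouping heuristic is deliberately naive, and real merging still needs human review.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical mappings from each domain ontology's classes to upper-ontology classes.
clinical: Dict[str, str] = {
    "Surgery": "Process",
    "Patient": "Organism",
    "Scalpel": "MaterialEntity",
}
lab: Dict[str, str] = {
    "Assay": "Process",
    "Specimen": "MaterialEntity",
    "DonorOrganism": "Organism",
}


def candidate_alignments(
    a: Dict[str, str], b: Dict[str, str]
) -> List[Tuple[str, List[str], List[str]]]:
    """Group classes from two domain ontologies under their shared upper class.

    Each group is only a candidate: sharing an upper class means the terms are
    of the same basic kind, not that they are equivalent.
    """
    groups: Dict[str, Tuple[List[str], List[str]]] = defaultdict(lambda: ([], []))
    for cls, upper in a.items():
        groups[upper][0].append(cls)
    for cls, upper in b.items():
        groups[upper][1].append(cls)
    # Keep only upper classes that anchor terms from both ontologies.
    return [
        (upper, sorted(left), sorted(right))
        for upper, (left, right) in sorted(groups.items())
        if left and right
    ]


for upper, left, right in candidate_alignments(clinical, lab):
    print(f"{upper}: {left} <-> {right}")
```

The `MaterialEntity` group pairs "Scalpel" with "Specimen". This shows why a shared upper class narrows the search but still leaves the final judgement to a person.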

Upper ontology

An upper ontology (or foundation ontology) is a model of the commonly shared relations and objects that are generally applicable across a wide range of domain ontologies. It usually employs a core glossary that overarches the terms and associated object descriptions as they are used in various relevant domain ontologies.

Standardized upper ontologies available for use include BFO, BORO method, Dublin Core, GFO, Cyc, SUMO, UMBEL, the Unified Foundational Ontology (UFO), and DOLCE.

WordNet has been considered an upper ontology by some and has been used as a linguistic tool for learning domain ontologies.

Hybrid ontology

The Gellish ontology is an example of a combination of an upper and a domain ontology.

Visualization

A survey of ontology visualization methods is presented by Katifori et al. An updated survey of ontology visualization methods and tools was published by Dudás et al. The most established ontology visualization methods, namely indented tree and graph visualization, are evaluated by Fu et al. A visual language for ontologies represented in OWL is specified by the Visual Notation for OWL Ontologies (VOWL).
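Of the methods mentioned, the indented tree is the simplest to produce. The following is a minimal sketch, assuming the class hierarchy is given as a hypothetical child-to-parents mapping with no cycles. A class with several parents would simply be printed under each of them.

```python
from typing import Dict, List, Set

# Hypothetical, acyclic class hierarchy: child -> parents.
PARENTS: Dict[str, Set[str]] = {
    "Animal": set(),
    "Mammal": {"Animal"},
    "Bird": {"Animal"},
    "Dog": {"Mammal"},
    "Bat": {"Mammal"},
}


def children_map(parents: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Invert the child->parents mapping so the tree can be walked top-down."""
    children: Dict[str, List[str]] = {cls: [] for cls in parents}
    for child, ps in parents.items():
        for p in ps:
            children[p].append(child)
    return {cls: sorted(kids) for cls, kids in children.items()}


def indented_tree(parents: Dict[str, Set[str]], indent: str = "  ") -> List[str]:
    """Render each root class and its descendants, one line per class.

    Assumes the hierarchy is acyclic; a cycle would recurse forever.
    """
    children = children_map(parents)
    lines: List[str] = []

    def walk(cls: str, depth: int) -> None:
        lines.append(indent * depth + cls)
        for kid in children[cls]:
            walk(kid, depth + 1)

    for root in sorted(c for c, ps in parents.items() if not ps):
        walk(root, 0)
    return lines


print("\n".join(indented_tree(PARENTS)))
```

Graph visualizations start from the same parent links but lay them out as nodes and edges instead of nested lines.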

Engineering

Ontology engineering (also called ontology building) is a set of tasks related to the development of ontologies for a particular domain. It is a subfield of knowledge engineering that studies the ontology development process, the ontology life cycle, the methods and methodologies for building ontologies, and the tools and languages that support them.

Ontology engineering aims to make explicit the knowledge contained in software applications and organizational procedures for a particular domain. It offers a way to overcome semantic obstacles, such as those related to the definitions of business terms and software classes. Known challenges with ontology engineering include:

- Ensuring the ontology is current with domain knowledge and term use

- Providing sufficient specificity and concept coverage for the domain of interest, thus minimizing the content completeness problem

- Ensuring the ontology can support its use cases

Editors

Ontology editors are applications designed to assist in the creation or manipulation of ontologies. It is common for ontology editors to use one or more ontology languages.

| a.k.a. software |

|

|

Ontology, taxonomy and thesaurus management software |

The Synercon Group

|

| Anzo for Excel |

|

|

Includes an RDFS and OWL ontology editor within Excel; generates ontologies from Excel spreadsheets |

Cambridge Semantics

|

| Be Informed Suite |

|

Commercial |

tool for building large ontology based applications. Includes visual editors, inference engines, export to standard formats |

|

| CENtree |

Java |

Commercial |

Web based client-server ontology management tool for life sciences, supports OWL, RDFS, OBO |

SciBite

|

| Chimaera |

|

|

Other web service |

Stanford University

|

| CmapTools |

Java based |

|

Ontology Editor (COE) ontology editor Supports numerous formats |

Florida Institute for Human and Machine Cognition

|

| dot15926 Editor |

Python? |

Open source |

ontology editor for data compliant to engineering ontology standard ISO 15926. Allows Python scripting and pattern-based data analysis. Supports extensions. |

|

| EMFText OWL2 Manchester Editor |

Eclipse-based |

open-source |

Pellet integration |

|

| Enterprise Architect |

|

|

along with UML modeling, supports OMG's Ontology Definition MetaModel which includes OWL and RDF |

Sparx Systems

|

| Fluent Editor |

|

|

ontology editor for OWL and SWRL with Controlled Natural Language (Controlled English). Supports OWL, RDF, DL and Functional rendering, unlimited imports and built-in reasoning services. |

|

| Gra.fo |

|

Free and Commercial |

A visual, collaborative and real time ontology and knowledge graph

schema editor. Features include sharing documents, commenting, search

and tracking history. Support W3C Semantic Web standards: RDF, RDFS, OWL and also Property Graph schemas. |

Capsenta

|

| HOZO |

Java |

|

graphical editor especially created to produce heavy-weight and well thought out ontologies |

Osaka University and Enegate Co, ltd.

|

| Java Ontology Editor (JOE)[35] |

Java |

|

Can be used to create and browse ontologies, and construct ontology

based queries. Incorporates abstraction mechanisms that enable users to

manage large ontologies |

Center for Information Technology, Department of Electrical and Computer Engineering, University of South Carolina

|

| KAON |

|

open source |

single user and server based solutions possible |

FZI/AIFB Karlsruhe

|

| KMgen |

|

|

Ontology editor for the KM language. km: The Knowledge Machine |

|

| Knoodl |

|

Free |

web application/service that is an ontology editor, wiki, and ontology registry.

Supports creation of communities where members can collaboratively

import, create, discuss, document and publish ontologies. Supports OWL, RDF, RDFS, and SPARQL queries. |

Revelytix, Inc..

|

| Menthor Editor |

|

|

An ontology engineering tool for dealing with OntoUML. It also includes OntoUML syntax validation, Alloy simulation, Anti-Pattern verification, and transformations from OntoUML to OWL, SBVR and Natural Language (Brazilian Portuguese) |

|

| Model Futures IDEAS AddIn |

|

free |

A plug-in for Enterprise Architect] that allows IDEAS Group 4D ontologies to be developed using a UML profile |

|

| Model Futures OWL Editor |

|

Free |

Able to work with very large OWL files (e.g. Cyc) and has extensive import and export capabilities (inc. UML, Thesaurus Descriptor, MS Word, CA ERwin Data Modeler, CSV, etc.) |

|

| myWeb |

Java |

|

mySQL connection, bundled with applet that allows online browsing of ontologies (including OBO) |

|

| Neologism |

built on Drupal |

open source |

Web-based, supports RDFS and a subset of OWL |

|

| NeOn Toolkit |

Eclipse-based |

open source |

OWL support, several import mechanisms, support for reuse and management of networked ontologies, visualization, etc. |

NeOn Project

|

| OBIS |

|

|

Web based user interface that allows users to input ontology instances that can be accessed via SPARQL endpoint |

|

| OBO-Edit |

Java |

open source |

downloadable, developed by the Gene Ontology Consortium for editing biological ontologies. OBO-Edit is no longer actively developed |

Gene Ontology Consortium

|

| Ontosight

|

|

Free and Commercial

|

Machine learning-based auto-scaling biomedical ontology combining all public biomedical ontologies

|

Innoplexus

|

| OntoStudio |

Eclipse |

downloadable, support for RDF(S), OWL and ObjectLogic (derived from F-Logic), graphical rule editor, visualizations |

semafora systems |

|

| Ontolingua |

|

|

Web service |

Stanford University

|

| ONTOLIS |

|

Commercial |

Collaborative web application for managing ontologies and knowledge

engineering, web-browser-based graphical rules editor, sophisticated

search and export interface. Web service available to link ontology

information to existing data |

ONTOLIS

|

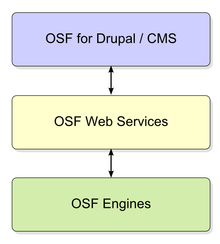

| Open Semantic Framework (OSF) |

|

|

an integrated software stack using semantic technologies for knowledge management, which includes an ontology editor |

|

| OWLGrEd |

|

|

A graphical ontology editor, easy-to-use |

|

| PoolParty Thesaurus Server |

|

Commercial |

ontology, taxonomy and thesaurus management software, fully based on standards like RDFS, SKOS and SPARQL, integrated with Virtuoso Universal Server |

Semantic Web Company

|

| Protégé |

Java |

open source |

downloadable, supports OWL, many sample ontologies |

Stanford University

|

| ScholOnto |

|

|

net-centric representations of research |

|

| Semantic Turkey |

Firefox extension - based on Java |

|

for managing ontologies and acquiring new knowledge from the Web |

developed at University of Rome, Tor Vergata

|

| Sigma knowledge engineering environment |

|

|

is a system primarily for development of the Suggested Upper Merged Ontology |

|

| Swoop |

Java |

open source |

downloadable, OWL Ontology browser and editor |

University of Maryland

|

| Semaphore Ontology Manager |

|

Commercial |

ontology, taxonomy and thesaurus management software. Tool to manage

the entire "build - enhance - review - maintain" ontology lifecycle. |

Smartlogic Semaphore Limited

|

| Synaptica |

|

|

Ontology, taxonomy and thesaurus management software. Web based, supports OWL and SKOS. |

Synaptica, LLC.

|

| TopBraid Composer |

Eclipse-based |

|

downloadable, full support for RDFS and OWL, built-in inference

engine, SWRL editor and SPARQL queries, visualization, import of XML and

UML |

TopQuadrant

|

| Transinsight |

|

|

Editor especially designed for creating text mining ontologies and part of GoPubMed.org |

|

| WebODE |

|

|

Web service |

Technical University of Madrid

|

| TwoUse Toolkit |

Eclipse-based |

open source |

model-driven ontology editing environment especially designed for software engineers |

|

| Thesaurus Master |

|

|

Manages creation and use of ontologies for use in data management

and semantic enrichment by enterprise, government, and scholarly

publishers. |

|

| TODE |

.Net |

|

Tool for Ontology Development and Editing |

|

| VocBench |

|

|

Collaborative Web Platform for Management of SKOS thesauri, OWL ontologies and OntoLex lexicons, now in its third incarnation supported by the ISA2 program of the EU |

originally developed on a joint effort between University of Rome

Tor Vergata and the Food and the Agriculture Organization of the United

Nations: FAO

|

Learning

Ontology learning is the automatic or semi-automatic creation of ontologies, including extracting a domain's terms from natural language text. As building ontologies manually is extremely labor-intensive and time-consuming, there is great motivation to automate the process. Information extraction and text mining have been explored to automatically link ontologies to documents, for example in the context of the BioCreative challenges.
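The term-extraction step can be illustrated with a deliberately naive heuristic. Count candidate words in a domain corpus and keep those that are frequent there but rare in a general reference text. The sketch below makes several assumptions: tiny inline corpora, a hand-picked stopword list, and a simple frequency-ratio score, all invented for this example. Real ontology-learning systems use much richer linguistic and statistical methods.

```python
import re
from collections import Counter
from typing import List

# Hand-picked stopword list (an assumption for this toy example).
STOPWORDS = {"the", "a", "of", "and", "in", "is", "to", "by", "with", "on"}

# Hypothetical tiny corpora.
DOMAIN_TEXT = (
    "The kinase phosphorylates the protein. The protein binds the receptor. "
    "Kinase activity regulates the receptor and the protein."
)
GENERAL_TEXT = "The activity of the day was long. The protein in food is important."


def tokens(text: str) -> List[str]:
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def candidate_terms(domain: str, general: str, top_n: int = 3) -> List[str]:
    """Rank words by how much more frequent they are in the domain text.

    Score = domain count / (general count + 1); the +1 avoids division by zero.
    Ties are broken alphabetically so the result is deterministic.
    """
    dom = Counter(tokens(domain))
    gen = Counter(tokens(general))
    ranked = sorted(dom, key=lambda w: (-dom[w] / (gen[w] + 1), w))
    return ranked[:top_n]


print(candidate_terms(DOMAIN_TEXT, GENERAL_TEXT))  # ['kinase', 'receptor', 'protein']
```

"Activity" appears in the domain text but is pushed down the ranking because it is also common in general text. That effect is the intuition behind contrastive term extraction.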

Languages

An ontology language is a formal language used to encode an ontology. There are a number of such languages for ontologies, both proprietary and standards-based:

- Common Algebraic Specification Language is a general logic-based specification language developed within the IFIP working group 1.3 "Foundations of System Specifications" and is a de facto standard language for software specifications. It is now being applied to ontology specifications in order to provide modularity and structuring mechanisms.

- Common Logic is ISO standard 24707, a specification of a family of ontology languages that can be accurately translated into each other.

- The Cyc project has its own ontology language called CycL, based on first-order predicate calculus with some higher-order extensions.

- DOGMA (Developing Ontology-Grounded Methods and Applications) adopts the fact-oriented modeling approach to provide a higher level of semantic stability.

- The Gellish language includes rules for its own extension and thus integrates an ontology with an ontology language.

- IDEF5 is a software engineering method to develop and maintain usable, accurate domain ontologies.

- KIF is a syntax for first-order logic that is based on S-expressions. SUO-KIF is a derivative version supporting the Suggested Upper Merged Ontology.

- MOF and UML are standards of the Object Management Group (OMG).

- Olog is a category theoretic approach to ontologies, emphasizing translations between ontologies using functors.

- OBO, a language used for biological and biomedical ontologies.

- OntoUML is an ontologically well-founded profile of UML for conceptual modeling of domain ontologies.

- OWL is a language for making ontological statements, developed as a follow-on from RDF and RDFS, as well as earlier ontology language projects including OIL, DAML, and DAML+OIL. OWL is intended to be used over the World Wide Web, and all its elements (classes, properties and individuals) are defined as RDF resources, and identified by URIs.

- Rule Interchange Format (RIF) and F-Logic combine ontologies and rules.

- Semantic Application Design Language (SADL) captures a subset of the expressiveness of OWL, using an English-like language entered via an Eclipse plug-in.

- SBVR (Semantics of Business Vocabularies and Rules) is an OMG standard adopted in industry to build ontologies.

- TOVE Project, TOronto Virtual Enterprise project
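OWL elements are RDF resources identified by URIs, as the OWL entry above notes. An OWL class hierarchy can therefore be written out as plain RDF triples. The sketch below emits N-Triples using only the standard library. The `rdf:type`, `owl:Class` and `rdfs:subClassOf` URIs are the standard W3C ones. The `http://example.org/zoo#` namespace and the class names are hypothetical. A real project would more likely use an RDF library and a reasoner than hand-format triples.

```python
from typing import Dict, List

# Standard W3C vocabulary URIs.
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
OWL_CLASS = "http://www.w3.org/2002/07/owl#Class"
RDFS_SUBCLASS_OF = "http://www.w3.org/2000/01/rdf-schema#subClassOf"

# Hypothetical namespace used only for this example.
EX = "http://example.org/zoo#"


def triple(s: str, p: str, o: str) -> str:
    """Format one N-Triples statement whose three terms are all IRIs."""
    return f"<{s}> <{p}> <{o}> ."


def owl_hierarchy(subclass_of: Dict[str, str]) -> List[str]:
    """Declare each class as an owl:Class and record its rdfs:subClassOf link."""
    classes = sorted(set(subclass_of) | set(subclass_of.values()))
    lines = [triple(EX + c, RDF_TYPE, OWL_CLASS) for c in classes]
    lines += [
        triple(EX + child, RDFS_SUBCLASS_OF, EX + parent)
        for child, parent in sorted(subclass_of.items())
    ]
    return lines


print("\n".join(owl_hierarchy({"Dog": "Mammal", "Mammal": "Animal"})))
```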

Published examples

- Arabic Ontology, a linguistic ontology for Arabic, which can be used as an Arabic Wordnet but with ontologically-clean content.

- AURUM (Information Security Ontology), an ontology for information security knowledge sharing that enables users to collaboratively understand and extend the domain knowledge body. It may serve as a basis for automated information security risk and compliance management.

- BabelNet, a very large multilingual semantic network and ontology, lexicalized in many languages

- Basic Formal Ontology, a formal upper ontology designed to support scientific research

- BioPAX, an ontology for the exchange and interoperability of biological pathway (cellular processes) data

- BMO, an e-Business Model Ontology based on a review of enterprise ontologies and business model literature

- SSBMO, a Strongly Sustainable Business Model Ontology based on a review of the systems-based natural and social science literature (including business). It includes a critique of, and significant extensions to, the Business Model Ontology (BMO).

- CCO and GexKB, Application Ontologies (APO) that integrate diverse types of knowledge with the Cell Cycle Ontology (CCO) and the Gene Expression Knowledge Base (GexKB)

- CContology (Customer Complaint Ontology), an e-business ontology to support online customer complaint management

- CIDOC Conceptual Reference Model, an ontology for cultural heritage

- COSMO, a Foundation Ontology (current version in OWL) that is designed to contain representations of all of the primitive concepts needed to logically specify the meanings of any domain entity. It is intended to serve as a basic ontology that can be used to translate among the representations in other ontologies or databases. It started as a merger of the basic elements of the OpenCyc and SUMO ontologies. It has since been supplemented with other ontology elements (types, relations) so as to include representations of all of the words in the Longman dictionary defining vocabulary.

- Cyc, a large Foundation Ontology for formal representation of the universe of discourse

- Disease Ontology, designed to facilitate the mapping of diseases and associated conditions to particular medical codes

- DOLCE, a Descriptive Ontology for Linguistic and Cognitive Engineering

- Drammar, an ontology of drama

- Dublin Core, a simple ontology for documents and publishing

- Financial Industry Business Ontology (FIBO), a business conceptual ontology for the financial industry

- Foundational, Core and Linguistic Ontologies

- Foundational Model of Anatomy, an ontology for human anatomy

- Friend of a Friend, an ontology for describing persons, their activities and their relations to other people and objects

- Gene Ontology for genomics

- Gellish English dictionary, an ontology that includes a dictionary and a taxonomy. The taxonomy comprises an upper ontology and a lower ontology that focuses on industrial and business applications in engineering, technology and procurement.

- Geopolitical ontology, an ontology describing geopolitical information created by the Food and Agriculture Organization (FAO). The geopolitical ontology includes names in multiple languages (English, French, Spanish, Arabic, Chinese, Russian and Italian); maps standard coding systems (UN, ISO, FAOSTAT, AGROVOC, etc.); provides relations among territories (land borders, group membership, etc.); and tracks historical changes. In addition, FAO provides web services for the geopolitical ontology and a module maker to download modules of the geopolitical ontology in different formats (RDF, XML, and Excel). See more information at FAO Country Profiles.

- GAO (General Automotive Ontology) - an ontology for the automotive industry that includes 'car' extensions

- GOLD, General Ontology for Linguistic Description

- GUM (Generalized Upper Model), a linguistically motivated ontology for mediating between client systems and natural language technology

- IDEAS Group, a formal ontology for enterprise architecture being developed by the Australian, Canadian, UK and U.S. Defence Depts.

- Linkbase, a formal representation of the biomedical domain, founded upon Basic Formal Ontology.

- LPL, Landmark Pattern Language

- NCBO Bioportal, biological and biomedical ontologies and associated tools to search, browse and visualise

- NIFSTD Ontologies from the Neuroscience Information Framework: a modular set of ontologies for the neuroscience domain.

- OBO-Edit, an ontology browser for most of the Open Biological and Biomedical Ontologies

- OBO Foundry, a suite of interoperable reference ontologies in biology and biomedicine

- OMNIBUS Ontology, an ontology of learning, instruction, and instructional design

- Ontology for Biomedical Investigations, an open-access, integrated ontology of biological and clinical investigations

- ONSTR, Ontology for Newborn Screening Follow-up and Translational Research, Newborn Screening Follow-up Data Integration Collaborative, Emory University, Atlanta.

- Plant Ontology for plant structures and growth/development stages, etc.

- POPE, Purdue Ontology for Pharmaceutical Engineering

- PRO, the Protein Ontology of the Protein Information Resource, Georgetown University

- ProbOnto, knowledge base and ontology of probability distributions.

- Program abstraction taxonomy

- Protein Ontology for proteomics

- RXNO Ontology, for name reactions in chemistry

- Sequence Ontology, for representing genomic feature types found on biological sequences

- SNOMED CT (Systematized Nomenclature of Medicine—Clinical Terms)

- Suggested Upper Merged Ontology, a formal upper ontology

- Systems Biology Ontology (SBO), for computational models in biology

- SWEET, Semantic Web for Earth and Environmental Terminology

- ThoughtTreasure ontology

- TIME-ITEM, Topics for Indexing Medical Education

- Uberon, representing animal anatomical structures

- UMBEL, a lightweight reference structure of 20,000 subject concept classes and their relationships derived from OpenCyc

- WordNet, a lexical reference system

- YAMATO, Yet Another More Advanced Top-level Ontology

Libraries

The development of ontologies has led to the emergence of services providing lists or directories of ontologies called ontology libraries.

The following are libraries of human-selected ontologies.

- COLORE is an open repository of first-order ontologies in Common Logic with formal links between ontologies in the repository.

- DAML Ontology Library maintains a legacy of ontologies in DAML.

- Ontology Design Patterns portal is a wiki repository of reusable components and practices for ontology design, and also maintains a list of exemplary ontologies.

- Protégé Ontology Library contains a set of OWL, Frame-based and other format ontologies.

- SchemaWeb is a directory of RDF schemata expressed in RDFS, OWL and DAML+OIL.

The following are both directories and search engines.

- OBO Foundry is a suite of interoperable reference ontologies in biology and biomedicine.

- Bioportal (ontology repository of NCBO)

- OntoSelect Ontology Library offers similar services for RDF/S, DAML and OWL ontologies.

- Ontaria is a "searchable and browsable directory of semantic web data" with a focus on RDF vocabularies with OWL ontologies. (NB: project "on hold" since 2004.)

- Swoogle is a directory and search engine for all RDF resources available on the Web, including ontologies.

- Open Ontology Repository initiative

- ROMULUS is a foundational ontology repository aimed at improving semantic interoperability. Currently there are three foundational ontologies in the repository: DOLCE, BFO and GFO.

Examples of applications

In general, ontologies can be used beneficially in several fields.

- Enterprise applications. A concrete example from health care is SAPPHIRE (Situational Awareness and Preparedness for Public Health Incidences and Reasoning Engines), a semantics-based health information system capable of tracking and evaluating situations and occurrences that may affect public health.

- Geographic information systems bring together data from different sources and therefore benefit from ontological metadata, which helps to connect the semantics of the data.

- Domain-specific ontologies are extremely important in biomedical research, which requires named entity disambiguation of various biomedical terms and abbreviations that have the same string of characters but represent different biomedical concepts. For example, CSF can represent Colony Stimulating Factor or Cerebral Spinal Fluid, both of which are represented by the same term, CSF, in biomedical literature.

This is why a large number of public ontologies are related to the life sciences. Life science data tools that do not implement these types of biomedical ontologies will not be able to accurately determine causal relationships between concepts.
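An ontology can support this kind of disambiguation, sketched here with a simplified Lesk-style overlap heuristic. That heuristic is a swapped-in technique for illustration, not a method named in the text. Each candidate concept carries a few context words, and the sense whose words overlap most with the surrounding sentence wins. The concept identifiers and keyword lists below are hypothetical. They are not taken from any real biomedical ontology.

```python
import re
from typing import Dict, Set

# Hypothetical ontology entries: context words for each sense of "CSF".
CSF_SENSES: Dict[str, Set[str]] = {
    "ColonyStimulatingFactor": {"cytokine", "granulocyte", "bone", "marrow", "stem"},
    "CerebralSpinalFluid": {"lumbar", "puncture", "brain", "spinal", "meningitis"},
}


def disambiguate(sentence: str, senses: Dict[str, Set[str]]) -> str:
    """Return the sense whose context words overlap most with the sentence.

    Ties go to the alphabetically first sense name, because max() keeps the
    first maximal item it sees and the candidates are iterated in sorted order.
    """
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    return max(sorted(senses), key=lambda s: len(senses[s] & words))


print(disambiguate(
    "CSF was collected by lumbar puncture to rule out meningitis.", CSF_SENSES
))  # CerebralSpinalFluid
print(disambiguate(
    "Recombinant CSF stimulates granulocyte production in bone marrow.", CSF_SENSES
))  # ColonyStimulatingFactor
```

Overlap counting is crude. Still, it shows the dependency the text describes: without ontology-supplied context for each concept, the string "CSF" alone cannot be resolved.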