From Wikipedia, the free encyclopedia

Rainforest ecosystems are rich in biodiversity. This is the Gambia River in Senegal's Niokolo-Koba National Park.

An ecosystem is a community of living organisms (plants, animals and microbes) in conjunction with the nonliving components of their environment (things like air, water and mineral soil), interacting as a system.[2] These biotic and abiotic components are regarded as linked together through nutrient cycles and energy flows.[3] As ecosystems are defined by the network of interactions among organisms, and between organisms and their environment,[4] they can be of any size but usually encompass specific, limited spaces[5] (although some scientists say that the entire planet is an ecosystem).[6]

Energy, water, nitrogen and soil minerals are other essential abiotic components of an ecosystem. The energy that flows through ecosystems is obtained primarily from the sun. It generally enters the system through photosynthesis, a process that also captures carbon from the atmosphere. By feeding on plants and on one another, animals play an important role in the movement of matter and energy through the system. They also influence the quantity of plant and microbial biomass present. By breaking down dead organic matter, decomposers release carbon back to the atmosphere and facilitate nutrient cycling by converting nutrients stored in dead biomass back to a form that can be readily used by plants and other microbes.[7]

Ecosystems are controlled both by external and internal factors. External factors such as climate, the parent material which forms the soil and topography, control the overall structure of an ecosystem and the way things work within it, but are not themselves influenced by the ecosystem.[8] Other external factors include time and potential biota. Ecosystems are dynamic entities—invariably, they are subject to periodic disturbances and are in the process of recovering from some past disturbance.[9] Ecosystems in similar environments that are located in different parts of the world can have very different characteristics simply because they contain different species.[8] The introduction of non-native species can cause substantial shifts in ecosystem function. Internal factors not only control ecosystem processes but are also controlled by them and are often subject to feedback loops.[8] While the resource inputs are generally controlled by external processes like climate and parent material, the availability of these resources within the ecosystem is controlled by internal factors like decomposition, root competition or shading.[8] Other internal factors include disturbance, succession and the types of species present. Although humans exist and operate within ecosystems, their cumulative effects are large enough to influence external factors like climate.[8]

Biodiversity affects ecosystem function, as do the processes of disturbance and succession. Ecosystems provide a variety of goods and services upon which people depend; the principles of ecosystem management suggest that rather than managing individual species, natural resources should be managed at the level of the ecosystem itself. Classifying ecosystems into ecologically homogeneous units is an important step towards effective ecosystem management, but there is no single, agreed-upon way to do this.

History and development

The term "ecosystem" was first used in a publication by British ecologist Arthur Tansley.[fn 1][10] Tansley devised the concept to draw attention to the importance of transfers of materials between organisms and their environment.[11] He later refined the term, describing it as "The whole system, ... including not only the organism-complex, but also the whole complex of physical factors forming what we call the environment".[12] Tansley regarded ecosystems not simply as natural units, but as mental isolates.[12] Tansley later[13] defined the spatial extent of ecosystems using the term ecotope.

G. Evelyn Hutchinson, a pioneering limnologist who was a contemporary of Tansley's, combined Charles Elton's ideas about trophic ecology with those of Russian geochemist Vladimir Vernadsky to suggest that mineral nutrient availability in a lake limited algal production which would, in turn, limit the abundance of animals that feed on algae. Raymond Lindeman took these ideas one step further to suggest that the flow of energy through a lake was the primary driver of the ecosystem. Hutchinson's students, brothers Howard T. Odum and Eugene P. Odum, further developed a "systems approach" to the study of ecosystems, allowing them to study the flow of energy and material through ecological systems.[11]

Ecosystem processes

Energy and carbon enter ecosystems through photosynthesis, are incorporated into living tissue, transferred to other organisms that feed on the living and dead plant matter, and eventually released through respiration.[14] Most mineral nutrients, on the other hand, are recycled within ecosystems.[15]

Ecosystems are controlled both by external and internal factors. External factors, also called state factors, control the overall structure of an ecosystem and the way things work within it, but are not themselves influenced by the ecosystem. The most important of these is climate.[8] Climate determines the biome in which the ecosystem is embedded. Rainfall patterns and temperature seasonality determine the amount of water available to the ecosystem and the supply of energy available (by influencing photosynthesis).[8] Parent material, the underlying geological material that gives rise to soils, determines the nature of the soils present, and influences the supply of mineral nutrients. Topography also controls ecosystem processes by affecting things like microclimate, soil development and the movement of water through a system. This may be the difference between the ecosystem present in a wetland situated in a small depression on the landscape, and one present on an adjacent steep hillside.[8]

Other external factors that play an important role in ecosystem functioning include time and potential biota. Ecosystems are dynamic entities—invariably, they are subject to periodic disturbances and are in the process of recovering from some past disturbance.[9] Time plays a role in the development of soil from bare rock and the recovery of a community from disturbance.[8] Similarly, the set of organisms that can potentially be present in an area can also have a major impact on ecosystems. Ecosystems in similar environments that are located in different parts of the world can end up doing things very differently simply because they have different pools of species present.[8] The introduction of non-native species can cause substantial shifts in ecosystem function.

Unlike external factors, internal factors in ecosystems not only control ecosystem processes, but are also controlled by them. Consequently, they are often subject to feedback loops.[8] While the resource inputs are generally controlled by external processes like climate and parent material, the availability of these resources within the ecosystem is controlled by internal factors like decomposition, root competition or shading.[8] Other factors like disturbance, succession or the types of species present are also internal factors. Human activities are important in almost all ecosystems. Although humans exist and operate within ecosystems, their cumulative effects are large enough to influence external factors like climate.[8]

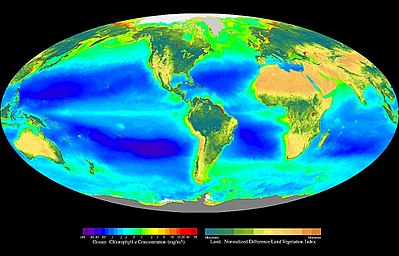

Primary production

Global oceanic and terrestrial phototroph abundance, from September 1997 to August 2000. As an estimate of autotroph biomass, it is only a rough indicator of primary production potential, and not an actual estimate of it. Provided by the SeaWiFS Project, NASA/Goddard Space Flight Center and ORBIMAGE.

Through the process of photosynthesis, plants capture energy from light and use it to combine carbon dioxide and water to produce carbohydrates and oxygen. The photosynthesis carried out by all the plants in an ecosystem is called the gross primary production (GPP).[16] About 48–60% of the GPP is consumed in plant respiration. The remainder, that portion of GPP that is not used up by respiration, is known as the net primary production (NPP).[14] Total photosynthesis is limited by a range of environmental factors. These include the amount of light available, the amount of leaf area a plant has to capture light (shading by other plants is a major limitation of photosynthesis), the rate at which carbon dioxide can be supplied to the chloroplasts to support photosynthesis, the availability of water, and the availability of suitable temperatures for carrying out photosynthesis.[16]
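The relationship between GPP and NPP described above is simple arithmetic, and can be sketched as follows. This is an illustrative sketch only: the function name and the GPP value of 1000 (in arbitrary units such as grams of carbon per square metre per year) are assumptions for the example, not figures from the cited sources. Only the 48–60% respiration range comes from the text.

```python
def net_primary_production(gpp, respiration_fraction):
    """Return NPP: the part of GPP that is not consumed by plant respiration."""
    if not 0.0 <= respiration_fraction <= 1.0:
        raise ValueError("respiration_fraction must be between 0 and 1")
    return gpp * (1.0 - respiration_fraction)


# Hypothetical GPP value, chosen only to make the arithmetic easy to follow.
gpp = 1000.0

# The text gives plant respiration as about 48-60% of GPP.
npp_upper = net_primary_production(gpp, 0.48)  # least respiration, most NPP
npp_lower = net_primary_production(gpp, 0.60)  # most respiration, least NPP

print(f"NPP ranges from about {npp_lower:.0f} to {npp_upper:.0f} units")
```

Under these assumed numbers, roughly 40–52% of the carbon fixed by photosynthesis would remain as NPP.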

Energy flow

Left: Energy flow diagram of a frog. The frog represents a node in an extended food web. The energy ingested is utilized for metabolic processes and transformed into biomass. The energy flow continues on its path if the frog is ingested by predators, parasites, or as a decaying carcass in soil. This energy flow diagram illustrates how energy is lost as it fuels the metabolic process that transforms the energy and nutrients into biomass.

Right: An expanded three link energy food chain (1. plants, 2. herbivores, 3. carnivores) illustrating the relationship between food flow diagrams and energy transformity. The transformity of energy becomes degraded, dispersed, and diminished from higher quality to lesser quantity as the energy within a food chain flows from one trophic species into another. Abbreviations: I=input, A=assimilation, R=respiration, NU=not utilized, P=production, B=biomass.[17]
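The abbreviations in the caption above can be read as a simple energy budget for each link of the chain. The sketch below assumes the usual bookkeeping for such diagrams, in which assimilation is input minus the energy not utilized (A = I − NU) and production is assimilation minus respiration (P = A − R). All fractions and the starting input are hypothetical, and passing the whole of one level's production to the next level as input is a deliberate simplification.

```python
def energy_budget(intake, nu_fraction, respiration_fraction):
    """Split an energy input (I) into NU, A, R and P for one trophic level."""
    not_utilized = intake * nu_fraction                # NU: never assimilated
    assimilation = intake - not_utilized               # A = I - NU
    respiration = assimilation * respiration_fraction  # R: lost in metabolism
    production = assimilation - respiration            # P = A - R
    return {"I": intake, "NU": not_utilized, "A": assimilation,
            "R": respiration, "P": production}


def food_chain(initial_input, levels):
    """Pass energy along a chain; each level's P becomes the next level's I."""
    budgets = []
    intake = initial_input
    for name, nu_fraction, respiration_fraction in levels:
        budget = energy_budget(intake, nu_fraction, respiration_fraction)
        budgets.append((name, budget))
        intake = budget["P"]
    return budgets


# Hypothetical fractions: 20% not utilized, 50% of assimilated energy respired.
chain = food_chain(1000.0, [
    ("plants", 0.2, 0.5),
    ("herbivores", 0.2, 0.5),
    ("carnivores", 0.2, 0.5),
])

for name, budget in chain:
    print(name, {key: round(value, 1) for key, value in budget.items()})
```

Whatever fractions are chosen, the production available at each successive link is smaller than at the one before, which is the pattern the diagram illustrates.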

Decomposition

The carbon and nutrients in dead organic matter are broken down by a group of processes known as decomposition. This releases nutrients that can then be re-used for plant and microbial production, and returns carbon dioxide to the atmosphere (or water) where it can be used for photosynthesis. In the absence of decomposition, dead organic matter would accumulate in an ecosystem and nutrients and atmospheric carbon dioxide would be depleted.[19] Approximately 90% of terrestrial NPP goes directly from plant to decomposer.[18]

Decomposition processes can be separated into three categories: leaching, fragmentation and chemical alteration of dead material. As water moves through dead organic matter, it dissolves and carries with it the water-soluble components. These are then taken up by organisms in the soil, react with mineral soil, or are transported beyond the confines of the ecosystem (and are considered "lost" to it).[19] Newly shed leaves and newly dead animals have high concentrations of water-soluble components, which include sugars, amino acids and mineral nutrients. Leaching is more important in wet environments, and much less important in dry ones.[19]

Fragmentation processes break organic material into smaller pieces, exposing new surfaces for colonization by microbes. Freshly shed leaf litter may be inaccessible due to an outer layer of cuticle or bark, and cell contents are protected by a cell wall. Newly dead animals may be covered by an exoskeleton. Fragmentation processes, which break through these protective layers, accelerate the rate of microbial decomposition.[19] Animals fragment detritus as they hunt for food, as does passage through the gut. Freeze-thaw cycles and cycles of wetting and drying also fragment dead material.[19]

The chemical alteration of dead organic matter is primarily achieved through bacterial and fungal action. Fungal hyphae produce enzymes which can break through the tough outer structures surrounding dead plant material. They also produce enzymes which break down lignin, which allows them access to both cell contents and to the nitrogen in the lignin. Fungi can transfer carbon and nitrogen through their hyphal networks and thus, unlike bacteria, are not dependent solely on locally available resources.[19]

Decomposition rates vary among ecosystems. The rate of decomposition is governed by three sets of factors: the physical environment (temperature, moisture and soil properties), the quantity and quality of the dead material available to decomposers, and the nature of the microbial community itself.[20] Temperature controls the rate of microbial respiration; the higher the temperature, the faster microbial decomposition occurs. It also affects soil moisture, which slows microbial growth and reduces leaching. Freeze-thaw cycles also affect decomposition: freezing temperatures kill soil microorganisms, which allows leaching to play a more important role in moving nutrients around. This can be especially important as the soil thaws in the spring, creating a pulse of nutrients which become available.[20]

Decomposition rates are low under very wet or very dry conditions. They are highest in moist conditions with adequate levels of oxygen. Wet soils tend to become deficient in oxygen (this is especially true in wetlands), which slows microbial growth. In dry soils, decomposition slows as well, but bacteria continue to grow (albeit at a slower rate) even after soils become too dry to support plant growth. When the rains return and soils become wet, the osmotic gradient between the bacterial cells and the soil water causes the cells to gain water quickly. Under these conditions, many bacterial cells burst, releasing a pulse of nutrients.[20] Decomposition rates also tend to be slower in acidic soils.[20] Soils which are rich in clay minerals tend to have lower decomposition rates, and thus, higher levels of organic matter.[20] The smaller particles of clay result in a larger surface area that can hold water. The higher the water content of a soil, the lower the oxygen content[21] and consequently, the lower the rate of decomposition. Clay minerals also bind particles of organic material to their surface, making them less accessible to microbes.[20] Soil disturbance like tilling increases decomposition by increasing the amount of oxygen in the soil and by exposing new organic matter to soil microbes.[20]

The quality and quantity of the material available to decomposers is another major factor that influences the rate of decomposition. Substances like sugars and amino acids decompose readily and are considered "labile". Cellulose and hemicellulose, which are broken down more slowly, are "moderately labile". Compounds which are more resistant to decay, like lignin or cutin, are considered "recalcitrant".[20] Litter with a higher proportion of labile compounds decomposes much more rapidly than does litter with a higher proportion of recalcitrant material. Consequently, dead animals decompose more rapidly than dead leaves, which themselves decompose more rapidly than fallen branches.[20] As organic material in the soil ages, its quality decreases. The more labile compounds decompose quickly, leaving an increasing proportion of recalcitrant material. Microbial cell walls also contain recalcitrant materials like chitin, and these also accumulate as the microbes die, further reducing the quality of older soil organic matter.[20]
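The way ageing litter becomes progressively dominated by recalcitrant material can be illustrated with a toy model. The sketch below assumes that each pool decays exponentially at its own constant rate. This is a common simplification that is not described in the text above, and the pool masses and decay constants are hypothetical values chosen only to show the pattern.

```python
import math

# Hypothetical litter pools: name -> (initial mass, decay constant per year).
# The ordering of the rates (labile fastest, recalcitrant slowest) follows the
# text; the actual numbers are illustrative assumptions.
POOLS = {
    "labile": (40.0, 2.0),
    "moderately labile": (40.0, 0.5),
    "recalcitrant": (20.0, 0.05),
}


def remaining_litter(pools, years):
    """Mass left in each pool after `years`, assuming exponential decay."""
    return {name: mass * math.exp(-rate * years)
            for name, (mass, rate) in pools.items()}


def recalcitrant_share(pools, years):
    """Fraction of the remaining litter that is recalcitrant material."""
    remaining = remaining_litter(pools, years)
    return remaining["recalcitrant"] / sum(remaining.values())


for years in (0, 1, 5):
    share = recalcitrant_share(POOLS, years)
    print(f"after {years} years: {share:.0%} of remaining litter is recalcitrant")
```

In this toy model the recalcitrant share rises over time even though no recalcitrant material is added, simply because the labile pools are lost faster.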

Nutrient cycling

Ecosystems continually exchange energy and carbon with the wider environment; mineral nutrients, on the other hand, are mostly cycled back and forth between plants, animals, microbes and the soil. Most nitrogen enters ecosystems through biological nitrogen fixation, is deposited through precipitation, dust or gases, or is applied as fertilizer.[15] Since most terrestrial ecosystems are nitrogen-limited, nitrogen cycling is an important control on ecosystem production.[15]

Until modern times, nitrogen fixation was the major source of nitrogen for ecosystems. Nitrogen fixing bacteria either live symbiotically with plants, or live freely in the soil. The energetic cost is high for plants which support nitrogen-fixing symbionts—as much as 25% of GPP when measured in controlled conditions. Many members of the legume plant family support nitrogen-fixing symbionts. Some cyanobacteria are also capable of nitrogen fixation. These are phototrophs, which carry out photosynthesis. Like other nitrogen-fixing bacteria, they can either be free-living or have symbiotic relationships with plants.[15] Other sources of nitrogen include acid deposition produced through the combustion of fossil fuels, ammonia gas which evaporates from agricultural fields which have had fertilizers applied to them, and dust.[15] Anthropogenic nitrogen inputs account for about 80% of all nitrogen fluxes in ecosystems.[15]

When plant tissues are shed or are eaten, the nitrogen in those tissues becomes available to animals and microbes. Microbial decomposition releases nitrogen compounds from dead organic matter in the soil, where plants, fungi and bacteria compete for it. Some soil bacteria use organic nitrogen-containing compounds as a source of carbon, and release ammonium ions into the soil. This process is known as nitrogen mineralization. Others convert ammonium to nitrite and nitrate ions, a process known as nitrification. Nitric oxide and nitrous oxide are also produced during nitrification.[15] Under nitrogen-rich and oxygen-poor conditions, nitrates and nitrites are converted to nitrogen gas, a process known as denitrification.[15]

Other important nutrients include phosphorus, sulfur, calcium, potassium, magnesium and manganese.[22] Phosphorus enters ecosystems through weathering. As ecosystems age this supply diminishes, making phosphorus-limitation more common in older landscapes (especially in the tropics).[22] Calcium and sulfur are also produced by weathering, but acid deposition is an important source of sulfur in many ecosystems. Although magnesium and manganese are produced by weathering, exchanges between soil organic matter and living cells account for a significant portion of ecosystem fluxes. Potassium is primarily cycled between living cells and soil organic matter.[22]

Function and biodiversity

Loch Lomond in Scotland forms a relatively isolated ecosystem. The fish community of this lake has remained stable over a long period until a number of introductions in the 1970s restructured its food web.[23]

Spiny forest at Ifaty, Madagascar, featuring various Adansonia (baobab) species, Alluaudia procera (Madagascar ocotillo) and other vegetation.

Ecosystem processes are broad generalizations that actually take place through the actions of individual organisms. The nature of the organisms (the species, functional groups and trophic levels to which they belong) dictates the sorts of actions these individuals are capable of carrying out, and the relative efficiency with which they do so. Thus, ecosystem processes are driven by the number of species in an ecosystem, the exact nature of each individual species, and the relative abundance of organisms within these species.[24] Biodiversity plays an important role in ecosystem functioning.[25]

Ecological theory suggests that in order to coexist, species must have some level of limiting similarity—they must be different from one another in some fundamental way, otherwise one species would competitively exclude the other.[26] Despite this, the cumulative effect of additional species in an ecosystem is not linear—additional species may enhance nitrogen retention, for example, but beyond some level of species richness, additional species may have little additive effect.[24] The addition (or loss) of species which are ecologically similar to those already present in an ecosystem tends to only have a small effect on ecosystem function. Ecologically distinct species, on the other hand, have a much larger effect. Similarly, dominant species have a large impact on ecosystem function, while rare species tend to have a small effect. Keystone species tend to have an effect on ecosystem function that is disproportionate to their abundance in an ecosystem.[24]

Ecosystem goods and services

Ecosystems provide a variety of goods and services upon which people depend.[27] Ecosystem goods include the "tangible, material products"[28] of ecosystem processes (food, construction material, medicinal plants) in addition to less tangible items like tourism and recreation, and genes from wild plants and animals that can be used to improve domestic species.[27] Ecosystem services, on the other hand, are generally "improvements in the condition or location of things of value".[28] These include things like the maintenance of hydrological cycles, cleaning air and water, the maintenance of oxygen in the atmosphere, crop pollination and even things like beauty, inspiration and opportunities for research.[27] While ecosystem goods have traditionally been recognized as being the basis for things of economic value, ecosystem services tend to be taken for granted.[28] While Gretchen Daily's original definition distinguished between ecosystem goods and ecosystem services, Robert Costanza and colleagues' later work and that of the Millennium Ecosystem Assessment lumped all of these together as ecosystem services.[28]

Ecosystem management

When natural resource management is applied to whole ecosystems, rather than single species, it is termed ecosystem management.[29] A variety of definitions exist: F. Stuart Chapin and coauthors define it as "the application of ecological science to resource management to promote long-term sustainability of ecosystems and the delivery of essential ecosystem goods and services",[30] while Norman Christensen and coauthors defined it as "management driven by explicit goals, executed by policies, protocols, and practices, and made adaptable by monitoring and research based on our best understanding of the ecological interactions and processes necessary to sustain ecosystem structure and function"[27] and Peter Brussard and colleagues defined it as "managing areas at various scales in such a way that ecosystem services and biological resources are preserved while appropriate human use and options for livelihood are sustained".[31]

Although definitions of ecosystem management abound, there is a common set of principles which underlie these definitions.[30] A fundamental principle is the long-term sustainability of the production of goods and services by the ecosystem;[30] "intergenerational sustainability [is] a precondition for management, not an afterthought".[27] It also requires clear goals with respect to future trajectories and behaviors of the system being managed. Other important requirements include a sound ecological understanding of the system, including connectedness, ecological dynamics and the context in which the system is embedded. Other important principles include an understanding of the role of humans as components of the ecosystems and the use of adaptive management.[27] While ecosystem management can be used as part of a plan for wilderness conservation, it can also be used in intensively managed ecosystems[27] (see, for example, agroecosystem and close to nature forestry).

Ecosystem dynamics

The High Peaks Wilderness Area in the 6,000,000-acre (2,400,000 ha) Adirondack Park is an example of a diverse ecosystem.

Ecosystems are dynamic entities—invariably, they are subject to periodic disturbances and are in the process of recovering from some past disturbance.[9] When an ecosystem is subject to some sort of perturbation, it responds by moving away from its initial state. The tendency of a system to remain close to its equilibrium state, despite that disturbance, is termed its resistance. On the other hand, the speed with which it returns to its initial state after disturbance is called its resilience.[9]

From one year to another, ecosystems experience variation in their biotic and abiotic environments. A drought, an especially cold winter and a pest outbreak all constitute short-term variability in environmental conditions. Animal populations vary from year to year, building up during resource-rich periods and crashing as they overshoot their food supply. These changes play out in changes in NPP, decomposition rates, and other ecosystem processes.[9] Longer-term changes also shape ecosystem processes—the forests of eastern North America still show legacies of cultivation which ceased 200 years ago, while methane production in eastern Siberian lakes is controlled by organic matter which accumulated during the Pleistocene.[9]

Disturbance also plays an important role in ecological processes. F. Stuart Chapin and coauthors define disturbance as "a relatively discrete event in time and space that alters the structure of populations, communities and ecosystems and causes changes in resources availability or the physical environment".[32] This can range from tree falls and insect outbreaks to hurricanes and wildfires to volcanic eruptions and can cause large changes in plant, animal and microbe populations, as well as soil organic matter content.[9] Disturbance is followed by succession, a "directional change in ecosystem structure and functioning resulting from biotically driven changes in resources supply."[32]

The frequency and severity of disturbance determine the way it impacts ecosystem function. Major disturbances like a volcanic eruption or glacial advance and retreat leave behind soils that lack plants, animals or organic matter. Ecosystems that experience such disturbances undergo primary succession. Less severe disturbances like forest fires, hurricanes or cultivation result in secondary succession.[9] More severe disturbance and more frequent disturbance result in longer recovery times. Ecosystems recover more quickly from less severe disturbance events.[9]

The early stages of primary succession are dominated by species with small propagules (seeds and spores) which can be dispersed long distances. The early colonizers, often algae, cyanobacteria and lichens, stabilize the substrate. Nitrogen supplies are limited in new soils, and nitrogen-fixing species tend to play an important role early in primary succession. Unlike in primary succession, the species that dominate secondary succession are usually present from the start of the process, often in the soil seed bank. In some systems the successional pathways are fairly consistent, and thus, are easy to predict. In others, there are many possible pathways; for example, the introduced nitrogen-fixing tree Myrica faya alters successional trajectories in Hawaiian forests.[9]

The theoretical ecologist Robert Ulanowicz has used information theory tools to describe the structure of ecosystems, emphasizing mutual information (correlations) in studied systems. Drawing on this methodology and prior observations of complex ecosystems, Ulanowicz depicts approaches to determining the stress levels on ecosystems and predicting system reactions to defined types of alteration in their settings (such as increased or reduced energy flow, and eutrophication).[33]

Ecosystem ecology

A hydrothermal vent is an ecosystem on the ocean floor. (The scale bar is 1 m.)

Ecosystem ecology studies "the flow of energy and materials through organisms and the physical environment". It seeks to understand the processes which govern the stocks of material and energy in ecosystems, and the flow of matter and energy through them. The study of ecosystems can cover 10 orders of magnitude, from the surface layers of rocks to the surface of the planet.[34]

There is no single definition of what constitutes an ecosystem.[35] German ecologist Ernst-Detlef Schulze and coauthors defined an ecosystem as an area which is "uniform regarding the biological turnover, and contains all the fluxes above and below the ground area under consideration." They explicitly reject Gene Likens' use of entire river catchments as "too wide a demarcation" to be a single ecosystem, given the level of heterogeneity within such an area.[36] Other authors have suggested that an ecosystem can encompass a much larger area, even the whole planet.[6] Schulze and coauthors also rejected the idea that a single rotting log could be studied as an ecosystem because the flows between the log and its surroundings are too large relative to the proportion cycled within the log.[36] Philosopher of science Mark Sagoff considers the failure to define "the kind of object it studies" to be an obstacle to the development of theory in ecosystem ecology.[35]

Ecosystems can be studied through a variety of approaches—theoretical studies, studies monitoring specific ecosystems over long periods of time, those that look at differences between ecosystems to elucidate how they work and direct manipulative experimentation.[37] Studies can be carried out at a variety of scales, from microcosms and mesocosms which serve as simplified representations of ecosystems, through whole-ecosystem studies.[38] American ecologist Stephen R. Carpenter has argued that microcosm experiments can be "irrelevant and diversionary" if they are not carried out in conjunction with field studies carried out at the ecosystem scale, because microcosm experiments often fail to accurately predict ecosystem-level dynamics.[39]

The Hubbard Brook Ecosystem Study, established in the White Mountains, New Hampshire in 1963, was the first successful attempt to study an entire watershed as an ecosystem. The study used stream chemistry as a means of monitoring ecosystem properties, and developed a detailed biogeochemical model of the ecosystem.[40] Long-term research at the site led to the discovery of acid rain in North America in 1972, and was able to document the consequent depletion of soil cations (especially calcium) over the next several decades.[41]

Classification

Classifying ecosystems into ecologically homogeneous units is an important step towards effective ecosystem management.[42] A variety of systems exist, based on vegetation cover, remote sensing, and bioclimatic classification systems.[42] American geographer Robert Bailey defines a hierarchy of ecosystem units ranging from microecosystems (individual homogeneous sites, on the order of 10 square kilometres (4 sq mi) in area), through mesoecosystems (landscape mosaics, on the order of 1,000 square kilometres (400 sq mi)) to macroecosystems (ecoregions, on the order of 100,000 square kilometres (40,000 sq mi)).[43]
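Bailey's three-level hierarchy can be illustrated by matching an area to the nearest of the characteristic sizes given above. This is a rough sketch only. The text gives orders of magnitude, not hard thresholds, so assigning an area to the nearest level on a logarithmic scale is an assumption made for this example and is not Bailey's own procedure. The function name is hypothetical.

```python
import math

# Characteristic sizes (km^2) for Bailey's hierarchy, as given in the text.
BAILEY_SCALES = [
    ("microecosystem", 10.0),
    ("mesoecosystem", 1_000.0),
    ("macroecosystem", 100_000.0),
]


def nearest_bailey_scale(area_km2):
    """Return the hierarchy level whose typical size is closest in order of magnitude."""
    if area_km2 <= 0:
        raise ValueError("area_km2 must be positive")
    log_area = math.log10(area_km2)
    name, _ = min(BAILEY_SCALES,
                  key=lambda scale: abs(log_area - math.log10(scale[1])))
    return name


for area in (8.0, 2_500.0, 60_000.0):
    print(f"{area:>9.0f} km^2 -> {nearest_bailey_scale(area)}")
```

Comparing on a logarithmic scale reflects the fact that the three levels are spaced two orders of magnitude apart. Areas that fall exactly between two levels have no natural assignment under this simplification.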

Bailey outlined five different methods for identifying ecosystems: gestalt ("a whole that is not derived through consideration of its parts"), in which regions are recognized and boundaries drawn intuitively; a map overlay system where different layers like geology, landforms and soil types are overlain to identify ecosystems; multivariate clustering of site attributes; digital image processing of remotely sensed data grouping areas based on their appearance or other spectral properties; or a "controlling factors method" where a subset of factors (like soils, climate, vegetation physiognomy or the distribution of plant or animal species) is selected from a large array of possible ones and used to delineate ecosystems.[44] In contrast with Bailey's methodology, Puerto Rican ecologist Ariel Lugo and coauthors identified ten characteristics of an effective classification system: that it be based on georeferenced, quantitative data; that it should minimize subjectivity and explicitly identify criteria and assumptions; that it should be structured around the factors that drive ecosystem processes; that it should reflect the hierarchical nature of ecosystems; that it should be flexible enough to conform to the various scales at which ecosystem management operates; that it should be tied to reliable measures of climate so that it can "anticipat[e] global climate change"; that it be applicable worldwide; that it should be validated against independent data; that it take into account the sometimes complex relationship between climate, vegetation and ecosystem functioning; and that it should be able to adapt and improve as new data become available.[42]