The technological singularity (also, simply, the singularity)[1] is the hypothesis that the invention of artificial superintelligence (ASI) will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization.[2] According to this hypothesis, an upgradable intelligent agent (such as a computer running software-based artificial general intelligence) would enter a "runaway reaction" of self-improvement cycles, with each new and more intelligent generation appearing more and more rapidly, causing an intelligence explosion and resulting in a powerful superintelligence that would, qualitatively, far surpass all human intelligence. Stanislaw Ulam reports a discussion with John von Neumann "centered on the accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue".[3] Subsequent authors have echoed this viewpoint.[2][4] I. J. Good's "intelligence explosion" model predicts that a future superintelligence will trigger a singularity.[5] Emeritus professor of computer science at San Diego State University and science fiction author Vernor Vinge said in his 1993 essay The Coming Technological Singularity that this would signal the end of the human era, as the new superintelligence would continue to upgrade itself and would advance technologically at an incomprehensible rate.[5]

Four polls conducted in 2012 and 2013 suggested a median estimate of a 50% chance that artificial general intelligence (AGI) would be developed by 2040–2050, depending on the poll.[6][7]

In the 2010s, public figures such as Stephen Hawking and Elon Musk expressed concern that full artificial intelligence could result in human extinction.[8][9] The consequences of the singularity and its potential benefit or harm to the human race have been hotly debated.

Manifestations

Intelligence explosion

I. J. Good speculated in 1965 that artificial general intelligence might bring about an intelligence explosion. Good's scenario runs as follows: as computers increase in power, it becomes possible for people to build a machine that is more intelligent than humanity; this superhuman intelligence possesses greater problem-solving and inventive skills than current humans are capable of. This superintelligent machine then designs an even more capable machine, or rewrites its own software to become even more intelligent; this (ever more capable) machine then goes on to design a machine of yet greater capability, and so on. These iterations of recursive self-improvement accelerate, allowing enormous qualitative change before any upper limits imposed by the laws of physics or theoretical computation set in.[10]

Emergence of superintelligence

John von Neumann, Vernor Vinge and Ray Kurzweil define the concept in terms of the technological creation of superintelligence. They argue that it is difficult or impossible for present-day humans to predict what human beings' lives would be like in a post-singularity world.[5][11]

Non-AI singularity

Some writers use "the singularity" in a broader way to refer to any radical changes in our society brought about by new technologies such as molecular nanotechnology,[12][13][14] although Vinge and other writers specifically state that without superintelligence, such changes would not qualify as a true singularity.[5] Many writers also tie the singularity to observations of exponential growth in various technologies (with Moore's law being the most prominent example), using such observations as a basis for predicting that the singularity is likely to happen sometime within the 21st century.[13][15]

Plausibility

Many prominent technologists and academics dispute the plausibility of a technological singularity, including Paul Allen, Jeff Hawkins, John Holland, Jaron Lanier, and Gordon Moore, whose law is often cited in support of the concept.[16][17][18]

Claimed cause: exponential growth

Ray Kurzweil writes that, due to paradigm shifts, a trend of exponential growth extends Moore's law from integrated circuits to earlier transistors, vacuum tubes, relays, and electromechanical computers. He predicts that the exponential growth will continue, and that in a few decades the computing power of all computers will exceed that of ("unenhanced") human brains, with superhuman artificial intelligence appearing around the same time.

An updated version of Moore's law over 120 Years (based on Kurzweil’s graph). The 7 most recent data points are all NVIDIA GPUs.

The exponential growth in computing technology suggested by Moore's law is commonly cited as a reason to expect a singularity in the relatively near future, and a number of authors have proposed generalizations of Moore's law. Computer scientist and futurist Hans Moravec proposed in a 1998 book[19] that the exponential growth curve could be extended back through earlier computing technologies prior to the integrated circuit.

Ray Kurzweil postulates a law of accelerating returns in which the speed of technological change (and more generally, all evolutionary processes[20]) increases exponentially, generalizing Moore's law in the same manner as Moravec's proposal, and also including material technology (especially as applied to nanotechnology), medical technology and others.[21] Between 1986 and 2007, machines' application-specific capacity to compute information per capita roughly doubled every 14 months; the per capita capacity of the world's general-purpose computers has doubled every 18 months; the global telecommunication capacity per capita doubled every 34 months; and the world's storage capacity per capita doubled every 40 months.[22]
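The doubling times quoted above can be converted into annual growth rates, which makes the four figures easier to compare. The following is a minimal sketch, not taken from the cited study: the function names `annual_growth_rate` and `growth_factor` are illustrative, and the calculation assumes smooth exponential growth over the whole 1986 to 2007 period.

```python
# Doubling times in months, as quoted in the paragraph above (1986-2007).
DOUBLING_MONTHS = {
    "application-specific computing": 14,
    "general-purpose computing": 18,
    "telecommunication": 34,
    "storage": 40,
}


def annual_growth_rate(doubling_months: float) -> float:
    """Compound annual growth rate implied by a doubling time in months."""
    return 2 ** (12 / doubling_months) - 1


def growth_factor(doubling_months: float, years: float) -> float:
    """Total multiplication over `years`, assuming steady exponential growth."""
    return 2 ** (12 * years / doubling_months)


for name, months in DOUBLING_MONTHS.items():
    rate = annual_growth_rate(months)
    factor = growth_factor(months, 21)  # 1986 to 2007 is 21 years
    print(f"{name}: {rate:.1%} per year, x{factor:,.0f} over 21 years")
```

A shorter doubling time compounds into a much larger total factor over the same period, which is why small differences between the quoted doubling times matter.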

Kurzweil reserves the term "singularity" for a rapid increase in artificial intelligence (as opposed to other technologies), writing for example that "The Singularity will allow us to transcend these limitations of our biological bodies and brains ... There will be no distinction, post-Singularity, between human and machine".[23] He also defines his predicted date of the singularity (2045) in terms of when he expects computer-based intelligences to significantly exceed the sum total of human brainpower, writing that advances in computing before that date "will not represent the Singularity" because they do "not yet correspond to a profound expansion of our intelligence."[24]

Accelerating change

According to Kurzweil, his logarithmic graph of 15 lists of paradigm shifts for key historic events shows an exponential trend

Some singularity proponents argue its inevitability through extrapolation of past trends, especially those pertaining to shortening gaps between improvements to technology. In one of the first uses of the term "singularity" in the context of technological progress, Stanislaw Ulam tells of a conversation with John von Neumann about accelerating change:

One conversation centered on the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.[3]

Kurzweil claims that technological progress follows a pattern of exponential growth, following what he calls the "law of accelerating returns". Whenever technology approaches a barrier, Kurzweil writes, new technologies will surmount it. He predicts paradigm shifts will become increasingly common, leading to "technological change so rapid and profound it represents a rupture in the fabric of human history".[25] Kurzweil believes that the singularity will occur by approximately 2045.[26] His predictions differ from Vinge's in that he predicts a gradual ascent to the singularity, rather than Vinge's rapidly self-improving superhuman intelligence.

Oft-cited dangers include those commonly associated with molecular nanotechnology and genetic engineering. These threats are major issues for both singularity advocates and critics, and were the subject of Bill Joy's Wired magazine article "Why the future doesn't need us".[4][27]

Criticisms

Some critics assert that no computer or machine will ever achieve human intelligence, while others hold that the definition of intelligence is irrelevant if the net result is the same.[28]

Steven Pinker stated in 2008:

... There is not the slightest reason to believe in a coming singularity. The fact that you can visualize a future in your imagination is not evidence that it is likely or even possible. Look at domed cities, jet-pack commuting, underwater cities, mile-high buildings, and nuclear-powered automobiles—all staples of futuristic fantasies when I was a child that have never arrived. Sheer processing power is not a pixie dust that magically solves all your problems. ...[16]

University of California, Berkeley, philosophy professor John Searle writes:

[Computers] have, literally ..., no intelligence, no motivation, no autonomy, and no agency. We design them to behave as if they had certain sorts of psychology, but there is no psychological reality to the corresponding processes or behavior. ... [T]he machinery has no beliefs, desires, [or] motivations.[29]

Martin Ford in The Lights in the Tunnel: Automation, Accelerating Technology and the Economy of the Future[30] postulates a "technology paradox" in that before the singularity could occur most routine jobs in the economy would be automated, since this would require a level of technology inferior to that of the singularity. This would cause massive unemployment and plummeting consumer demand, which in turn would destroy the incentive to invest in the technologies that would be required to bring about the Singularity. Job displacement is increasingly no longer limited to work traditionally considered to be "routine".[31]

Theodore Modis[32][33] and Jonathan Huebner[34] argue that the rate of technological innovation has not only ceased to rise, but is actually now declining. Evidence for this decline is that the rise in computer clock rates is slowing, even while Moore's prediction of exponentially increasing circuit density continues to hold. This is due to excessive heat build-up from the chip, which cannot be dissipated quickly enough to prevent the chip from melting when operating at higher speeds. Advancements in speed may be possible in the future by virtue of more power-efficient CPU designs and multi-cell processors.[35] While Kurzweil used Modis' resources, and Modis' work was around accelerating change, Modis distanced himself from Kurzweil's thesis of a "technological singularity", claiming that it lacks scientific rigor.[33]

Others propose that other "singularities" can be found through analysis of trends in world population, world gross domestic product, and other indices. Andrey Korotayev and others argue that historical hyperbolic growth curves can be attributed to feedback loops that ceased to affect global trends in the 1970s, and thus hyperbolic growth should not be expected in the future.[36][37]
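The distinction between hyperbolic and exponential growth is what makes a finite-time "singularity" appear in such trend analyses. As a minimal worked illustration (the symbols $N$, $C$, $k$, $N_0$ and $t_0$ are notation assumed here, not taken from the cited sources), a quantity whose growth rate is proportional to its own square follows a hyperbola that diverges at a finite time $t_0$:

$$\frac{dN}{dt} = \frac{N^2}{C} \quad\Longrightarrow\quad N(t) = \frac{C}{t_0 - t}, \qquad N(t) \to \infty \ \text{as}\ t \to t_0 .$$

By contrast, a quantity whose growth rate is proportional to itself grows exponentially and stays finite at every finite time:

$$\frac{dN}{dt} = kN \quad\Longrightarrow\quad N(t) = N_0\, e^{kt} .$$

If the feedback that produces the quadratic term stops operating, the first equation no longer applies and the divergence at $t_0$ is not expected.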

In a detailed empirical accounting, The Progress of Computing, William Nordhaus argued that, prior to 1940, computers followed the much slower growth of a traditional industrial economy, thus rejecting extrapolations of Moore's law to 19th-century computers.[38]

In a 2007 paper, Schmidhuber stated that the frequency of subjectively "notable events" appears to be approaching a 21st-century singularity, but cautioned readers to take such plots of subjective events with a grain of salt: perhaps differences in memory of recent and distant events could create an illusion of accelerating change where none exists.[39]

Paul Allen argues for the opposite of accelerating returns, a "complexity brake":[18] the more progress science makes towards understanding intelligence, the more difficult it becomes to make additional progress. A study of the number of patents shows that human creativity does not show accelerating returns, but in fact, as suggested by Joseph Tainter in his The Collapse of Complex Societies,[40] a law of diminishing returns. The number of patents per thousand peaked in the period from 1850 to 1900, and has been declining since.[34] The growth of complexity eventually becomes self-limiting, and leads to a widespread "general systems collapse".

Jaron Lanier disputes the idea that the Singularity is inevitable. He states: "I do not think the technology is creating itself. It's not an autonomous process."[41] He goes on to assert: "The reason to believe in human agency over technological determinism is that you can then have an economy where people earn their own way and invent their own lives. If you structure a society on not emphasizing individual human agency, it's the same thing operationally as denying people clout, dignity, and self-determination ... to embrace [the idea of the Singularity] would be a celebration of bad data and bad politics."[41]

Economist Robert J. Gordon, in The Rise and Fall of American Growth: The U.S. Standard of Living Since the Civil War (2016), points out that measured economic growth slowed around 1970 and has slowed even further since the financial crisis of 2008, and argues that the economic data show no trace of a coming Singularity as imagined by mathematician I. J. Good.[42]

In addition to general criticisms of the singularity concept, several critics have raised issues with Kurzweil's iconic chart. One line of criticism is that a log-log chart of this nature is inherently biased toward a straight-line result. Others identify selection bias in the points that Kurzweil chooses to use. For example, biologist PZ Myers points out that many of the early evolutionary "events" were picked arbitrarily.[43] Kurzweil has rebutted this by charting evolutionary events from 15 neutral sources, and showing that they fit a straight line on a log-log chart. The Economist mocked the concept with a graph extrapolating that the number of blades on a razor, which has increased over the years from one to as many as five, will increase ever-faster to infinity.[44]

Ramifications

Uncertainty and risk

The term "technological singularity" reflects the idea that such change may happen suddenly, and that it is difficult to predict how the resulting new world would operate.[45][46] It is unclear whether an intelligence explosion of this kind would be beneficial or harmful, or even an existential threat,[47][48] as the issue has not been dealt with by most artificial general intelligence researchers, although the topic of friendly artificial intelligence is investigated by the Future of Humanity Institute and the Machine Intelligence Research Institute.[45]

Next step of sociobiological evolution

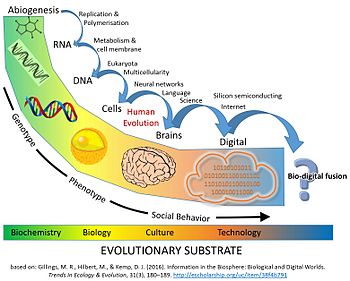

Schematic Timeline of Information and Replicators in the Biosphere: Gillings et al.'s "major evolutionary transitions" in information processing.[49]

Amount of digital information worldwide (5x10^21 bytes) versus human genome information worldwide (10^19 bytes) in 2014.[49]

Implications for human society

In February 2009, under the auspices of the Association for the Advancement of Artificial Intelligence (AAAI), Eric Horvitz chaired a meeting of leading computer scientists, artificial intelligence researchers and roboticists at Asilomar in Pacific Grove, California. The goal was to discuss the potential impact of the hypothetical possibility that robots could become self-sufficient and able to make their own decisions. They discussed the extent to which computers and robots might be able to acquire autonomy, and to what degree they could use such abilities to pose threats or hazards.[50]

Some machines are programmed with various forms of semi-autonomy, including the ability to locate their own power sources and choose targets to attack with weapons. Also, some computer viruses can evade elimination and, according to scientists in attendance, could therefore be said to have reached a "cockroach" stage of machine intelligence. The conference attendees noted that self-awareness as depicted in science-fiction is probably unlikely, but that other potential hazards and pitfalls exist.[50]

Some experts and academics have questioned the use of robots for military combat, especially when such robots are given some degree of autonomous functions.[51]

Immortality

In his 2005 book, The Singularity is Near, Kurzweil suggests that medical advances would allow people to protect their bodies from the effects of aging, making life expectancy limitless. Kurzweil argues that the technological advances in medicine would allow us to continuously repair and replace defective components in our bodies, prolonging life to an undetermined age.[52] Kurzweil further buttresses his argument by discussing current bio-engineering advances. Kurzweil suggests somatic gene therapy; after synthetic viruses with specific genetic information, the next step would be to apply this technology to gene therapy, replacing human DNA with synthesized genes.[53]

K. Eric Drexler, one of the founders of nanotechnology, postulated cell repair devices, including ones operating within cells and utilizing as yet hypothetical biological machines, in his 1986 book Engines of Creation. According to Richard Feynman, it was his former graduate student and collaborator Albert Hibbs who originally suggested to him (circa 1959) the idea of a medical use for Feynman's theoretical micromachines. Hibbs suggested that certain repair machines might one day be reduced in size to the point that it would, in theory, be possible to (as Feynman put it) "swallow the doctor". The idea was incorporated into Feynman's 1959 essay There's Plenty of Room at the Bottom.[54]

Beyond merely extending the operational life of the physical body, Jaron Lanier argues for a form of immortality called "Digital Ascension" that involves "people dying in the flesh and being uploaded into a computer and remaining conscious".[55] Singularitarianism has also been likened to a religion by John Horgan.[56]

History of the concept

In his obituary for John von Neumann, Ulam recalled a conversation with von Neumann about the "ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue."[3]

In 1965, Good wrote his essay postulating an "intelligence explosion" of recursive self-improvement of a machine intelligence. In 1985, in "The Time Scale of Artificial Intelligence", artificial intelligence researcher Ray Solomonoff articulated mathematically the related notion of what he called an "infinity point": if a research community of human-level self-improving AIs takes four years to double its own speed, then two years, then one year and so on, its capabilities increase infinitely in finite time.[4][57]
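The arithmetic behind the "infinity point" is a geometric series: the doubling intervals 4 + 2 + 1 + ... years sum to a finite total of 8 years, while the number of doublings (and hence the speed-up) grows without bound. The sketch below illustrates only that arithmetic; the function names `elapsed_years` and `infinity_point` are hypothetical and this is not Solomonoff's own formulation.

```python
def elapsed_years(first_doubling_years: float, doublings: int) -> float:
    """Time taken by `doublings` successive speed doublings,
    where each doubling takes half as long as the one before."""
    return sum(first_doubling_years / 2 ** k for k in range(doublings))


def infinity_point(first_doubling_years: float) -> float:
    """Limit of the geometric series a + a/2 + a/4 + ... = 2a."""
    return 2 * first_doubling_years


# With a first doubling of four years, as in the example in the text:
for n in (1, 2, 3, 10, 30):
    years = elapsed_years(4.0, n)
    print(f"after {n} doublings: {years:.6f} years elapsed, speed x{2 ** n}")

print("elapsed time never exceeds", infinity_point(4.0), "years")
```

The elapsed time approaches but never reaches the limit, so under these idealized assumptions unbounded speed-up is packed into a finite interval.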

In 1981, Stanisław Lem published his science fiction novel Golem XIV. It describes a military AI computer (Golem XIV) that gains consciousness and starts to increase its own intelligence, moving towards a personal technological singularity. Golem XIV was originally created to aid its builders in fighting wars, but as its intelligence advances to a much higher level than that of humans, it stops being interested in the military requirements because it finds them lacking internal logical consistency.

In 1983, Vinge greatly popularized Good's intelligence explosion in a number of writings, first addressing the topic in print in the January 1983 issue of Omni magazine. In this op-ed piece, Vinge seems to have been the first to use the term "singularity" in a way that was specifically tied to the creation of intelligent machines,[58][59] writing:

We will soon create intelligences greater than our own. When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the center of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolation to an interstellar future impossible. To write a story set more than a century hence, one needs a nuclear war in between ... so that the world remains intelligible.

Vinge's 1993 article "The Coming Technological Singularity: How to Survive in the Post-Human Era"[5] spread widely on the internet and helped to popularize the idea.[60] This article contains the statement, "Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended." Vinge argues that science-fiction authors cannot write realistic post-singularity characters who surpass the human intellect, as the thoughts of such an intellect would be beyond the ability of humans to express.[5]

In 2000, Bill Joy, a prominent technologist and a co-founder of Sun Microsystems, voiced concern over the potential dangers of the singularity.[27]

In 2005, Kurzweil published The Singularity is Near. Kurzweil's publicity campaign included an appearance on The Daily Show with Jon Stewart.[61]

In 2007, Eliezer Yudkowsky suggested that many of the varied definitions that have been assigned to "singularity" are mutually incompatible rather than mutually supporting.[13][62] For example, Kurzweil extrapolates current technological trajectories past the arrival of self-improving AI or superhuman intelligence, which Yudkowsky argues represents a tension with both I. J. Good's proposed discontinuous upswing in intelligence and Vinge's thesis on unpredictability.[13]

In 2009, Kurzweil and X-Prize founder Peter Diamandis announced the establishment of Singularity University, a nonaccredited private institute whose stated mission is "to educate, inspire and empower leaders to apply exponential technologies to address humanity's grand challenges."[63] Funded by Google, Autodesk, ePlanet Ventures, and a group of technology industry leaders, Singularity University is based at NASA's Ames Research Center in Mountain View, California. The not-for-profit organization runs an annual ten-week graduate program during the northern-hemisphere summer that covers ten different technology and allied tracks, and a series of executive programs throughout the year.

In politics

In 2007, the Joint Economic Committee of the United States Congress released a report about the future of nanotechnology. It predicts significant technological and political changes in the mid-term future, including a possible technological singularity.[64][65][66]

Former President of the United States Barack Obama spoke about the singularity in an interview with Wired in 2016:[67]

One thing that we haven't talked about too much, and I just want to go back to, is we really have to think through the economic implications. Because most people aren't spending a lot of time right now worrying about singularity—they are worrying about "Well, is my job going to be replaced by a machine?"