Plant breeding is the science of changing the traits of plants in order to produce desired characteristics. It has been used to improve the quality of nutrition in products for humans and animals.

Plant breeding can be accomplished through many different techniques, ranging from simply selecting plants with desirable characteristics for propagation, to methods that make use of knowledge of genetics and chromosomes, to more complex molecular techniques.

A plant's genes determine which qualitative or quantitative traits it will have. Plant breeders strive to produce plants with specific characteristics and, potentially, new plant varieties.

Plant breeding has been practiced for thousands of years, since near the beginning of human civilization. It is practiced worldwide by individuals such as gardeners and farmers, and by professional plant breeders employed by organizations such as government institutions, universities, crop-specific industry associations or research centers.

International development agencies believe that breeding new crops is important for ensuring food security by developing new varieties that are higher yielding, disease resistant, drought tolerant or regionally adapted to different environments and growing conditions.

History

Plant breeding started with sedentary agriculture and particularly the domestication of the first agricultural plants, a practice which is estimated to date back 9,000 to 11,000 years. Initially, farmers simply selected food plants with particular desirable characteristics and used these as progenitors for subsequent generations, resulting in an accumulation of valuable traits over time.

Grafting was practiced in China before 2000 BCE, and by 500 BCE it was well established.

Gregor Mendel (1822–84) is considered the "father of modern genetics". His experiments with plant hybridization led to his establishing laws of inheritance. Genetics stimulated research to improve crop production through plant breeding.

Modern plant breeding is applied genetics, but its scientific basis is broader, covering molecular biology, cytology, systematics, physiology, pathology, entomology, chemistry, and statistics (biometrics). It has also developed its own technology.

Classical plant breeding

One major technique of plant breeding is selection, the process of selectively propagating plants with desirable characteristics and eliminating or "culling" those with less desirable characteristics.

Another technique is the deliberate interbreeding (crossing) of closely or distantly related individuals to produce new crop varieties or lines with desirable properties. Plants are crossbred to introduce traits or genes from one variety or line into a new genetic background. For example, a mildew-resistant pea may be crossed with a high-yielding but susceptible pea, the goal of the cross being to introduce mildew resistance without losing the high-yield characteristics. Progeny from the cross would then be crossed with the high-yielding parent (backcrossing) to ensure that the progeny were most like the high-yielding parent. The progeny from that cross would then be tested for yield (selection, as described above) and mildew resistance, and high-yielding resistant plants would be further developed. Plants may also be crossed with themselves to produce inbred varieties for breeding. Pollinators may be excluded through the use of pollination bags.

Classical breeding relies largely on homologous recombination between chromosomes to generate genetic diversity. The classical plant breeder may also make use of a number of in vitro techniques such as protoplast fusion, embryo rescue or mutagenesis (see below) to generate diversity and produce hybrid plants that would not exist in nature.

Traits that breeders have tried to incorporate into crop plants include:

- Improved quality, such as increased nutrition, improved flavor, or greater beauty

- Increased yield of the crop

- Increased tolerance of environmental pressures (salinity, extreme temperature, drought)

- Resistance to viruses, fungi and bacteria

- Increased tolerance to insect pests

- Increased tolerance of herbicides

- Longer storage period for the harvested crop

Before World War II

Successful commercial plant breeding concerns were founded from the late 19th century. Gartons Agricultural Plant Breeders in England was established in the 1890s by John Garton, who was one of the first to commercialize new varieties of agricultural crops created through cross-pollination. The firm's first introduction was Abundance Oat, one of the first agricultural grain varieties bred from a controlled cross, introduced to commerce in 1892.

In the early 20th century, plant breeders realized that Mendel's findings on the non-random nature of inheritance could be applied to seedling populations produced through deliberate pollinations to predict the frequencies of different types. Wheat hybrids were bred to increase the crop production of Italy during the so-called "Battle for Grain" (1925–1940).

Heterosis was explained by George Harrison Shull. It describes the tendency of the progeny of a specific cross to outperform both parents. Recognition of the usefulness of heterosis for plant breeding has led to the development of inbred lines that show a heterotic yield advantage when they are crossed. Maize was the first species where heterosis was widely used to produce hybrids.
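As a rough illustration of how heterosis can be quantified, the sketch below computes mid-parent and better-parent heterosis for a single cross. The function name and the yield figures are hypothetical, and the sketch assumes a trait for which a higher value is better.

```python
def heterosis(parent_a: float, parent_b: float, f1: float) -> dict:
    """Return mid-parent and better-parent heterosis as percentages."""
    mid_parent = (parent_a + parent_b) / 2
    better_parent = max(parent_a, parent_b)  # assumes higher is better
    return {
        "mid_parent_pct": 100 * (f1 - mid_parent) / mid_parent,
        "better_parent_pct": 100 * (f1 - better_parent) / better_parent,
    }


# Hypothetical yields (t/ha) for two inbred lines and their F1 hybrid.
result = heterosis(parent_a=4.0, parent_b=6.0, f1=7.5)
print(result)
```

A positive better-parent value corresponds to the case described above, in which the hybrid outperforms both parents.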

Statistical methods were also developed to analyze gene action and distinguish heritable variation from variation caused by environment. In 1933, another important breeding technique, cytoplasmic male sterility (CMS), developed in maize, was described by Marcus Morton Rhoades. CMS is a maternally inherited trait that makes the plant produce sterile pollen. This enables the production of hybrids without the need for labor-intensive detasseling.

These early breeding techniques resulted in large yield increases in the United States in the early 20th century. Similar yield increases were not produced elsewhere until after World War II, when the Green Revolution increased crop production in the developing world in the 1960s.

After World War II

Following World War II, a number of techniques were developed that allowed plant breeders to hybridize distantly related species and artificially induce genetic diversity.

When distantly related species are crossed, plant breeders make use of a number of plant tissue culture techniques to produce progeny from otherwise fruitless matings. Interspecific and intergeneric hybrids are produced from a cross of related species or genera that do not normally sexually reproduce with each other. These crosses are referred to as wide crosses. For example, the cereal triticale is a wheat and rye hybrid. The cells in the plants derived from the first generation created from the cross contained an uneven number of chromosomes and as a result the plants were sterile. The cell division inhibitor colchicine was used to double the number of chromosomes in the cell and thus allow the production of a fertile line.
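The chromosome arithmetic behind this example can be sketched as follows. The text does not say which wheat was used; the counts below assume durum wheat (2n = 28) and rye (2n = 14), the parents usually cited for hexaploid triticale, and the function names are illustrative only.

```python
def f1_chromosome_count(somatic_a: int, somatic_b: int) -> int:
    """Each parent contributes a gamete carrying half its somatic count."""
    return somatic_a // 2 + somatic_b // 2


def after_doubling(count: int) -> int:
    """Chromosome doubling (e.g. with colchicine) gives every chromosome a partner."""
    return 2 * count


DURUM_WHEAT = 28  # assumed somatic chromosome number
RYE = 14          # assumed somatic chromosome number

f1 = f1_chromosome_count(DURUM_WHEAT, RYE)  # 14 + 7
fertile_line = after_doubling(f1)
print(f1, fertile_line)
```

In the undoubled hybrid the wheat and rye chromosomes have no matching partners at meiosis, which is why doubling restores fertility.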

Failure to produce a hybrid may be due to pre- or post-fertilization incompatibility. If fertilization is possible between two species or genera, the hybrid embryo may abort before maturation. If this does occur, the embryo resulting from an interspecific or intergeneric cross can sometimes be rescued and cultured to produce a whole plant. Such a method is referred to as embryo rescue. This technique has been used to produce new rice for Africa, an interspecific cross of Asian rice (Oryza sativa) and African rice (Oryza glaberrima).

Hybrids may also be produced by a technique called protoplast fusion. In this case protoplasts are fused, usually in an electric field. Viable recombinants can be regenerated in culture.

Chemical mutagens like ethyl methanesulfonate (EMS) and dimethyl sulfate (DMS), radiation, and transposons are used to generate mutants with desirable traits to be bred with other cultivars, a process known as mutation breeding. Classical plant breeders also generate genetic diversity within a species by exploiting a process called somaclonal variation, which occurs in plants produced from tissue culture, particularly plants derived from callus. Induced polyploidy, and the addition or removal of chromosomes using a technique called chromosome engineering, may also be used.

When a desirable trait has been bred into a species, a number of crosses to the favored parent are made to make the new plant as similar to the favored parent as possible. Returning to the example of the mildew-resistant pea being crossed with a high-yielding but susceptible pea, to make the mildew-resistant progeny of the cross most like the high-yielding parent, the progeny will be crossed back to that parent for several generations. This process removes most of the genetic contribution of the mildew-resistant parent. Classical breeding is therefore a cyclical process.
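How quickly repeated backcrossing dilutes the donor parent's contribution can be approximated with simple arithmetic. The sketch below assumes no selection and unlinked loci, so that each backcross halves the expected donor share; the function name is illustrative, and real programs deviate from these expectations because of linkage and selection for the target trait.

```python
def recurrent_parent_fraction(backcrosses: int) -> float:
    """Expected share of the genome from the recurrent (favored) parent.

    0 backcrosses is the F1 (one half from each parent); each further
    backcross halves the expected donor share.
    """
    if backcrosses < 0:
        raise ValueError("backcrosses must be non-negative")
    return 1 - 0.5 ** (backcrosses + 1)


for n in range(6):
    print(f"BC{n}: {recurrent_parent_fraction(n):.4f}")
```

Under these assumptions the expected share of the favored parent is 75% after one backcross and about 94% after three.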

With classical breeding techniques, the breeder does not know exactly what genes have been introduced to the new cultivars. Some scientists therefore argue that plants produced by classical breeding methods should undergo the same safety testing regime as genetically modified plants. There have been instances where plants bred using classical techniques have been unsuitable for human consumption. For example, the poison solanine was unintentionally increased to unacceptable levels in certain varieties of potato through plant breeding. New potato varieties are often screened for solanine levels before reaching the marketplace.

Modern plant breeding

Modern plant breeding may use techniques of molecular biology to select, or in the case of genetic modification, to insert, desirable traits into plants. Application of biotechnology or molecular biology is also known as molecular breeding.

Modern facilities in molecular biology are now used in plant breeding.

Marker assisted selection

Sometimes many different genes can influence a desirable trait in plant breeding. The use of tools such as molecular markers or DNA fingerprinting can map thousands of genes. This allows plant breeders to screen large populations of plants for those that possess the trait of interest. The screening is based on the presence or absence of a certain gene as determined by laboratory procedures, rather than on the visual identification of the expressed trait in the plant. The purpose of marker assisted selection, or plant genome analysis, is to identify the location and function (phenotype) of various genes within the genome. If all of the genes are identified, the result is a genome sequence.
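The screening step described above can be sketched as a simple filter over laboratory marker calls. The `Plant` class, the marker names and the seedling data are all hypothetical; a real pipeline would read genotype calls from a marker assay rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plant:
    plant_id: str
    markers: frozenset  # marker alleles detected in the lab assay


def screen(plants, required_markers):
    """Keep plants carrying every required marker, without phenotyping them."""
    required = frozenset(required_markers)
    return [p for p in plants if required <= p.markers]


# Hypothetical seedling population and marker names.
seedlings = [
    Plant("S1", frozenset({"M_res"})),
    Plant("S2", frozenset()),
    Plant("S3", frozenset({"M_res", "M_yield"})),
]

selected = screen(seedlings, {"M_res"})
print([p.plant_id for p in selected])
```

Selection here depends only on the marker data, so seedlings can be screened before the trait itself is visible.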

Plant genomes vary in size, and their genes code for different proteins, but many genes are shared between species. If a gene's location and function are identified in one plant species, a very similar gene can likely also be found in a similar location in another species' genome.

Reverse breeding and doubled haploids (DH)

Homozygous plants with desirable traits can be produced from heterozygous starting plants, if a haploid cell with the alleles for those traits can be produced, and then used to make a doubled haploid.

The doubled haploid will be homozygous for the desired traits.

Furthermore, two different homozygous plants created in that way can be used to produce a generation of F1 hybrid plants which have the advantages of heterozygosity and a greater range of possible traits. Thus, an individual heterozygous plant chosen for its desirable characteristics can be converted into a heterozygous variety (F1 hybrid) without the necessity of vegetative reproduction but as the result of the cross of two homozygous/doubled haploid lines derived from the originally selected plant.

Plant tissue culture can be used to produce haploid or doubled haploid plant lines and generations. This reduces the genetic diversity within a line so that desirable traits that increase the fitness of the individuals can be selected for. Using this method decreases the need for breeding multiple generations of plants to obtain a generation that is homozygous for the desired traits, thereby saving much time in the process. There are many plant tissue culture techniques that can be used to obtain haploid plants, but microspore culture is currently the most promising for producing the largest numbers of them.
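The time saving can be made concrete by comparing doubled haploids with repeated self-fertilization. The sketch below assumes a plant that starts heterozygous at every locus of interest, independent (unlinked) loci and no selection, so that each generation of selfing halves the expected heterozygosity; the function name is illustrative.

```python
def homozygous_fraction(generations_selfed: int, loci: int = 1) -> float:
    """Expected fraction of plants homozygous at all `loci` after selfing."""
    per_locus = 1 - 0.5 ** generations_selfed
    return per_locus ** loci


for generations in (1, 3, 6):
    print(generations, round(homozygous_fraction(generations, loci=10), 3))

# A doubled haploid is homozygous at every locus after a single step.
DOUBLED_HAPLOID_FRACTION = 1.0
```

Under these assumptions, even six generations of selfing leave a noticeable share of plants still heterozygous at one or more of ten loci, whereas a doubled haploid line is fully homozygous from the outset.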

Genetic modification

Genetic modification of plants is achieved by adding a specific gene or genes to a plant, or by knocking down a gene with RNAi, to produce a desirable phenotype. The plants resulting from adding a gene are often referred to as transgenic plants.

If the genes used for genetic modification come from the same species or from a crossable plant and are under the control of their native promoter, the resulting plants are called cisgenic plants.

Sometimes genetic modification can produce a plant with the desired trait or traits faster than classical breeding because the majority of the plant's genome is not altered.

To genetically modify a plant, a genetic construct must be designed so that the gene to be added or removed will be expressed by the plant. To do this, the gene or genes of interest must be introduced to the plant together with a promoter to drive transcription and a termination sequence to stop transcription of the new gene. A marker for the selection of transformed plants is also included. In the laboratory, antibiotic resistance is a commonly used marker: plants that have been successfully transformed will grow on media containing antibiotics; plants that have not been transformed will die. In some instances markers for selection are removed by backcrossing with the parent plant prior to commercial release.
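The parts of a construct listed above can be modelled as a small record with a completeness check. This is only a bookkeeping sketch: the class, field names and placeholder values are hypothetical and do not correspond to any particular vector design tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Construct:
    promoter: Optional[str] = None            # drives transcription
    genes_of_interest: List[str] = field(default_factory=list)
    terminator: Optional[str] = None          # stops transcription
    selectable_marker: Optional[str] = None   # e.g. an antibiotic resistance gene

    def missing_parts(self) -> List[str]:
        """List the required elements that have not been supplied."""
        checks = [
            ("promoter", self.promoter),
            ("gene of interest", self.genes_of_interest),
            ("terminator", self.terminator),
            ("selectable marker", self.selectable_marker),
        ]
        return [name for name, value in checks if not value]


# Placeholder part names, for illustration only.
complete = Construct("example_promoter", ["example_gene"],
                     "example_terminator", "example_marker")
incomplete = Construct(genes_of_interest=["example_gene"])
print(complete.missing_parts(), incomplete.missing_parts())
```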

The construct can be inserted in the plant genome by genetic recombination using the bacterium Agrobacterium tumefaciens or A. rhizogenes, or by direct methods like the gene gun or microinjection. Using plant viruses to insert genetic constructs into plants is also a possibility, but the technique is limited by the host range of the virus. For example, Cauliflower mosaic virus (CaMV) only infects cauliflower and related species. Another limitation of viral vectors is that the virus is not usually passed on to the progeny, so every plant has to be inoculated.

The majority of commercially released transgenic plants are currently limited to plants with introduced resistance to insect pests and herbicides. Insect resistance is achieved through incorporation of a gene from Bacillus thuringiensis (Bt) that encodes a protein that is toxic to some insects. For example, when the cotton bollworm, a common cotton pest, feeds on Bt cotton, it ingests the toxin and dies. Herbicides usually work by binding to certain plant enzymes and inhibiting their action. The enzymes that the herbicide inhibits are known as the herbicide's target site. Herbicide resistance can be engineered into crops by expressing a version of the target site protein that is not inhibited by the herbicide. This is the method used to produce glyphosate-resistant crop plants.

Genetic modification can further increase yields by increasing stress tolerance to a given environment. Stresses such as temperature variation are signalled to the plant via a cascade of signalling molecules which activate a transcription factor to regulate gene expression. Overexpression of particular genes involved in cold acclimation has been shown to make plants more resistant to freezing, which is one common cause of yield loss.

Genetic modification of plants to produce pharmaceuticals (and industrial chemicals), sometimes called pharming, is a rather radical new area of plant breeding.

Issues and concerns

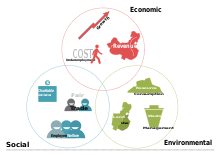

Modern plant breeding, whether classical or through genetic engineering, comes with issues of concern, particularly with regard to food crops. The question of whether breeding can have a negative effect on nutritional value is central in this respect. Although relatively little direct research in this area has been done, there are scientific indications that, by favoring certain aspects of a plant's development, other aspects may be retarded. A study published in the Journal of the American College of Nutrition in 2004, entitled Changes in USDA Food Composition Data for 43 Garden Crops, 1950 to 1999, compared nutritional analysis of vegetables done in 1950 and in 1999, and found substantial decreases in six of 13 nutrients measured, including a 6% decrease in protein and a 38% decrease in riboflavin. Reductions in calcium, phosphorus, iron and ascorbic acid were also found. The study, conducted at the Biochemical Institute, University of Texas at Austin, concluded in summary: "We suggest that any real declines are generally most easily explained by changes in cultivated varieties between 1950 and 1999, in which there may be trade-offs between yield and nutrient content."

The debate surrounding genetically modified food during the 1990s peaked in 1999 in terms of media coverage and risk perception, and continues today – for example, "Germany has thrown its weight behind a growing European mutiny over genetically modified crops by banning the planting of a widely grown pest-resistant corn variety." The debate encompasses the ecological impact of genetically modified plants, the safety of genetically modified food and concepts used for safety evaluation like substantial equivalence.

Such concerns are not new to plant breeding. Most countries have regulatory processes in place to help ensure that new crop varieties entering the marketplace are both safe and meet farmers' needs. Examples include variety registration, seed schemes, regulatory authorizations for GM plants, etc.

Plant breeders' rights are also a major and controversial issue. Today, production of new varieties is dominated by commercial plant breeders, who seek to protect their work and collect royalties through national and international agreements based on intellectual property rights. The range of related issues is complex. In the simplest terms, critics of the increasingly restrictive regulations argue that, through a combination of technical and economic pressures, commercial breeders are reducing biodiversity and significantly constraining individuals (such as farmers) from developing and trading seed on a regional level. Efforts to strengthen breeders' rights, for example by lengthening periods of variety protection, are ongoing.

When new plant breeds or cultivars are bred, they must be maintained and propagated. Some plants are propagated by asexual means while others are propagated by seeds. Seed-propagated cultivars require specific control over seed source and production procedures to maintain the integrity of the breeding results. Isolation is necessary to prevent cross contamination with related plants or the mixing of seeds after harvesting. Isolation is normally accomplished by planting distance, but in certain crops plants are enclosed in greenhouses or cages (most commonly when producing F1 hybrids).

Role of plant breeding in organic agriculture

Critics of organic agriculture claim it is too low-yielding to be a viable alternative to conventional agriculture. However, part of that poor performance may be the result of growing poorly adapted varieties. It is estimated that over 95% of organic agriculture is based on conventionally adapted varieties, even though the production environments found in organic vs. conventional farming systems are vastly different due to their distinctive management practices. Most notably, organic farmers have fewer inputs available than conventional growers to control their production environments. Breeding varieties specifically adapted to the unique conditions of organic agriculture is critical for this sector to realize its full potential.

This requires selection for traits such as:

- Water use efficiency

- Nutrient use efficiency (particularly nitrogen and phosphorus)

- Weed competitiveness

- Tolerance of mechanical weed control

- Pest/disease resistance

- Early maturity (as a mechanism for avoidance of particular stresses)

- Abiotic stress tolerance (e.g. drought, salinity)

Currently, few breeding programs are directed at organic agriculture, and until recently those that did address this sector generally relied on indirect selection (i.e. selection in conventional environments for traits considered important for organic agriculture). However, because the difference between organic and conventional environments is large, a given genotype may perform very differently in each environment due to an interaction between genes and the environment. If this interaction is severe enough, an important trait required for the organic environment may not be revealed in the conventional environment, which can result in the selection of poorly adapted individuals. To ensure the most adapted varieties are identified, advocates of organic breeding now promote the use of direct selection (i.e. selection in the target environment) for many agronomic traits.
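A toy example shows how a genotype-by-environment interaction can mislead indirect selection. The genotype names and yield figures below are invented purely for illustration; they are not trial data.

```python
# Hypothetical yields (t/ha) of three genotypes in two environments.
YIELDS = {
    "G1": {"conventional": 6.0, "organic": 3.0},
    "G2": {"conventional": 5.5, "organic": 3.8},
    "G3": {"conventional": 5.0, "organic": 3.5},
}


def best_genotype(yields: dict, environment: str) -> str:
    """Return the genotype with the highest yield in the given environment."""
    return max(yields, key=lambda genotype: yields[genotype][environment])


indirect_choice = best_genotype(YIELDS, "conventional")  # selected off-target
direct_choice = best_genotype(YIELDS, "organic")         # selected in target

# Yield forgone in the organic environment by selecting indirectly.
yield_penalty = YIELDS[direct_choice]["organic"] - YIELDS[indirect_choice]["organic"]
print(indirect_choice, direct_choice, round(yield_penalty, 2))
```

Because the ranking of genotypes changes between environments in this invented data set, the variety chosen under conventional conditions is not the one that performs best under organic conditions.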

There are many classical and modern breeding techniques that can be utilized for crop improvement in organic agriculture despite the ban on genetically modified organisms. For instance, controlled crosses between individuals allow desirable genetic variation to be recombined and transferred to seed progeny via natural processes. Marker assisted selection can also be employed as a diagnostic tool to facilitate selection of progeny that possess the desired trait(s), greatly speeding up the breeding process. This technique has proven particularly useful for the introgression of resistance genes into new backgrounds, as well as the efficient selection of many resistance genes pyramided into a single individual. Unfortunately, molecular markers are not currently available for many important traits, especially complex ones controlled by many genes.
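A back-of-the-envelope calculation suggests why markers help when pyramiding several resistance genes. The sketch assumes an F2 population from an F1 that is heterozygous at every target gene, with the genes unlinked, so that a plant is homozygous for the favorable allele at any one gene with probability 1/4; the function names and the 95% confidence level are illustrative choices.

```python
import math


def prob_all_homozygous(n_genes: int) -> float:
    """Chance that one F2 plant is homozygous favorable at all n genes."""
    return 0.25 ** n_genes


def min_population(n_genes: int, confidence: float = 0.95) -> int:
    """Smallest F2 population expected to contain at least one such plant
    with the requested probability."""
    q = prob_all_homozygous(n_genes)
    return math.ceil(math.log(1 - confidence) / math.log(1 - q))


for n in (1, 2, 3, 4):
    print(n, prob_all_homozygous(n), min_population(n))
```

The required population grows quickly with the number of genes, and markers allow the rare plants carrying every gene to be identified even when the genes have overlapping visible effects.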

Addressing global food security through plant breeding

For agriculture to thrive in the future, changes must be made in response to emerging global issues. These issues include the availability of arable land, harsh cropping conditions and food security, which involves being able to provide the world population with food containing sufficient nutrients. Crops need to be able to mature in a range of environments to allow worldwide access, which involves addressing problems such as drought tolerance. These global issues can be addressed through plant breeding, as it offers the ability to select specific genes that allow a crop to perform at a level which yields the desired results.

Increased yield without expansion

With an increasing population, the production of food needs to increase with it. It is estimated that a 70% increase in food production is needed by 2050 in order to meet the Declaration of the World Summit on Food Security. But with the degradation of agricultural land, simply planting more crops is no longer a viable option. New varieties of plants can in some cases be developed through plant breeding that generate an increase of yield without relying on an increase in land area. An example of this can be seen in Asia, where food production per capita has increased twofold. This has been achieved not only through the use of fertilisers, but through the use of better crops that have been specifically designed for the area.
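To give a sense of scale, the 70% target can be converted into a compound annual growth rate. The text does not state the baseline year, so the 40-year horizon below is an assumption made purely for illustration, as is the function name.

```python
def required_annual_growth(total_increase: float, years: int) -> float:
    """Compound annual growth rate needed to reach a total fractional increase."""
    if years <= 0:
        raise ValueError("years must be positive")
    return (1 + total_increase) ** (1 / years) - 1


# Assumed horizon of 40 years; the source does not give the baseline year.
rate = required_annual_growth(0.70, years=40)
print(f"{rate:.2%} per year")
```

If the cultivated area stays constant, the same rate applies to yield per unit area.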

Breeding for increased nutritional value

Plant breeding can contribute to global food security as it is a cost-effective tool for increasing the nutritional value of forage and crops. Improvements in nutritional value for forage crops from the use of analytical chemistry and rumen fermentation technology have been recorded since 1960; this science and technology gave breeders the ability to screen thousands of samples within a short time, meaning breeders could identify a high-performing hybrid more quickly. The main area in which genetic gains were made was in vitro dry matter digestibility (IVDMD), with increases of 0.7 to 2.5%; for just a 1% increase in IVDMD, a 3.2% increase in daily gains was reported for Bos taurus (beef cattle). This improvement indicates that plant breeding is an essential tool in gearing future agriculture to perform at a more advanced level.
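The figures above can be combined in a rough calculation. The sketch assumes that the reported 3.2% gain per 1% of IVDMD scales linearly across the whole 0.7 to 2.5% range, which the source does not claim; the constant and function names are illustrative.

```python
GAIN_PER_IVDMD_POINT = 3.2  # % increase in daily gain per 1% IVDMD (from the text)


def projected_daily_gain_increase(ivdmd_increase_pct: float) -> float:
    """Linear extrapolation of the daily-gain response (an assumption)."""
    return GAIN_PER_IVDMD_POINT * ivdmd_increase_pct


low = projected_daily_gain_increase(0.7)
high = projected_daily_gain_increase(2.5)
print(f"{low:.2f}% to {high:.2f}%")
```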

Breeding for tolerance

Plant breeding of hybrid crops has become extremely popular worldwide in an effort to combat harsh environments. With long periods of drought and shortages of water or nitrogen, stress tolerance has become a significant part of agriculture. Plant breeders have focused on identifying crops that will perform under these conditions; one way to achieve this is to find strains of a crop that are resistant to drought conditions with low nitrogen. It is evident from this that plant breeding is vital for the survival of future agriculture, as it enables farmers to produce stress-resistant crops, hence improving food security. In countries that experience harsh winters, such as Iceland, Germany and countries further east in Europe, plant breeders are involved in breeding for tolerance to frost, continuous snow cover, frost-drought (desiccation from wind and solar radiation under frost) and high moisture levels in soil in winter.

Participatory plant breeding

Participatory plant breeding (PPB) is when farmers are involved in a crop improvement programme with opportunities to make decisions and contribute to the research process at different stages. Participatory approaches to crop improvement can also be applied when plant biotechnologies are being used for crop improvement. Local agricultural systems and genetic diversity are developed and strengthened by crop improvement, in which participatory crop improvement (PCI) plays a large role. PPB is enhanced by farmers' knowledge of the quality required and their evaluation of the target environment, which affects the effectiveness of PPB.

List of notable plant breeders

- Thomas Andrew Knight

- Keith Downey

- Luther Burbank

- Nazareno Strampelli

- Niels Ebbesen Hansen

- Norman Borlaug

See also

- Mentor pollen: inactivated pollen that is compatible with the female plant, mixed with pollen that would normally be incompatible. The mentor pollen has the effect of guiding the foreign pollen to the ovules.

- S1 generation: the product of self-fertilization.