The duality represents a major advance in our understanding of string theory and quantum gravity. This is because it provides a non-perturbative formulation of string theory with certain boundary conditions and because it is the most successful realization of the holographic principle, an idea in quantum gravity originally proposed by Gerard 't Hooft and promoted by Leonard Susskind.

It also provides a powerful toolkit for studying strongly coupled quantum field theories. Much of the usefulness of the duality results from the fact that it is a strong-weak duality: when the fields of the quantum field theory are strongly interacting, the ones in the gravitational theory are weakly interacting and thus more mathematically tractable. This fact has been used to study many aspects of nuclear and condensed matter physics by translating problems in those subjects into more mathematically tractable problems in string theory.

The AdS/CFT correspondence was first proposed by Juan Maldacena in late 1997. Important aspects of the correspondence were elaborated in articles by Steven Gubser, Igor Klebanov, and Alexander Polyakov, and by Edward Witten. By 2015, Maldacena's article had over 10,000 citations, becoming the most highly cited article in the field of high energy physics.

Background

Quantum gravity and strings

Current understanding of gravity is based on Albert Einstein's general theory of relativity. Formulated in 1915, general relativity explains gravity in terms of the geometry of space and time, or spacetime. It is formulated in the language of classical physics developed by physicists such as Isaac Newton and James Clerk Maxwell. The other nongravitational forces are explained in the framework of quantum mechanics.

Developed in the first half of the twentieth century by a number of different physicists, quantum mechanics provides a radically different way of describing physical phenomena based on probability.

Quantum gravity is the branch of physics that seeks to describe gravity using the principles of quantum mechanics. Currently, the most popular approach to quantum gravity is string theory, which models elementary particles not as zero-dimensional points but as one-dimensional objects called strings.

In the AdS/CFT correspondence, one typically considers theories of quantum gravity derived from string theory or its modern extension, M-theory.

In everyday life, there are three familiar dimensions of space (up/down, left/right, and forward/backward), and there is one dimension of time. Thus, in the language of modern physics, one says that spacetime is four-dimensional. One peculiar feature of string theory and M-theory is that these theories require extra dimensions of spacetime for their mathematical consistency: in string theory spacetime is ten-dimensional, while in M-theory it is eleven-dimensional.

The quantum gravity theories appearing in the AdS/CFT correspondence are typically obtained from string and M-theory by a process known as compactification. This produces a theory in which spacetime has effectively a lower number of dimensions and the extra dimensions are "curled up" into circles.

A standard analogy for compactification is to consider a multidimensional object such as a garden hose. If the hose is viewed from a sufficient distance, it appears to have only one dimension, its length, but as one approaches the hose, one discovers that it contains a second dimension, its circumference. Thus, an ant crawling inside it would move in two dimensions.

Quantum field theory

The application of quantum mechanics to physical objects such as the electromagnetic field, which are extended in space and time, is known as quantum field theory. In particle physics, quantum field theories form the basis for our understanding of elementary particles, which are modeled as excitations in the fundamental fields. Quantum field theories are also used throughout condensed matter physics to model particle-like objects called quasiparticles.

In the AdS/CFT correspondence, one considers, in addition to a theory of quantum gravity, a certain kind of quantum field theory called a conformal field theory. This is a particularly symmetric and mathematically well behaved type of quantum field theory. Such theories are often studied in the context of string theory, where they are associated with the surface swept out by a string propagating through spacetime, and in statistical mechanics, where they model systems at a thermodynamic critical point.

Overview of the correspondence

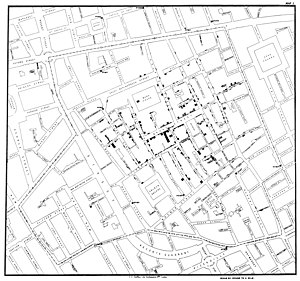

A tessellation of the hyperbolic plane by triangles and squares.

The geometry of anti-de Sitter space

In the AdS/CFT correspondence, one considers string theory or M-theory on an anti-de Sitter background. This means that the geometry of spacetime is described in terms of a certain vacuum solution of Einstein's equation called anti-de Sitter space.

In very elementary terms, anti-de Sitter space is a mathematical model of spacetime in which the notion of distance between points (the metric) is different from the notion of distance in ordinary Euclidean geometry. It is closely related to hyperbolic space, which can be viewed as a disk tessellated by triangles and squares. One can define the distance between points of this disk in such a way that all the triangles and squares are the same size and the circular outer boundary is infinitely far from any point in the interior.
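
The text does not commit to a particular formula for this distance. A minimal sketch, assuming the standard Poincaré disk model (our choice, with curvature fixed to -1): points are complex numbers with modulus below 1, the distance from the centre to a point at radius r is 2 atanh(r), and it diverges as r approaches the boundary. The helper `disk_isometry` is a hypothetical name for the Möbius maps that move any point to the centre while preserving distances, which is why every tile of a regular tessellation can have the same size.

```python
import math


def poincare_distance(u: complex, v: complex) -> float:
    """Hyperbolic distance between two points of the open unit disk
    in the Poincare disk model (curvature -1)."""
    num = 2 * abs(u - v) ** 2
    den = (1 - abs(u) ** 2) * (1 - abs(v) ** 2)
    return math.acosh(1 + num / den)


def disk_isometry(z: complex, a: complex) -> complex:
    """Mobius map of the disk onto itself that sends the point a to the centre.
    Maps of this form preserve poincare_distance."""
    return (z - a) / (1 - a.conjugate() * z)


# Points approaching the boundary circle |z| = 1 are ever farther from the centre:
# the distance equals 2 * atanh(r) and diverges as r -> 1.
for r in (0.5, 0.9, 0.99, 0.999999):
    print(f"r = {r}: distance from centre = {poincare_distance(0, r):.3f}")
```

Moving a pair of points with `disk_isometry` leaves their distance unchanged, so "near the edge" and "near the centre" are not intrinsically different places; only the Euclidean drawing makes edge tiles look small.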

Now imagine a stack of hyperbolic disks where each disk represents the state of the universe at a given time. The resulting geometric object is three-dimensional anti-de Sitter space. It looks like a solid cylinder in which any cross section is a copy of the hyperbolic disk. Time runs along the vertical direction in this picture. The surface of this cylinder plays an important role in the AdS/CFT correspondence. As with the hyperbolic plane, anti-de Sitter space is curved in such a way that any point in the interior is actually infinitely far from this boundary surface.
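
The stacked-disk picture can be written as a formula. One standard choice is global coordinates; the radius $\ell$ and the coordinates $t$, $\rho$, $\phi$ are notation introduced here, not taken from the text. Each slice of constant $t$ is a hyperbolic disk, which the substitution $r = \tanh(\rho/2)$ turns into a disk of coordinate radius 1. The boundary $\rho \to \infty$ (that is, $r \to 1$) is the surface of the cylinder, parametrized by $t$ and $\phi$, and it lies at infinite distance because $\int d\rho$ diverges.

```latex
ds^2 = \ell^2\left(-\cosh^2\!\rho\,dt^2 + d\rho^2 + \sinh^2\!\rho\,d\phi^2\right),
\qquad 0 \le \rho < \infty,\quad 0 \le \phi < 2\pi .

% On a slice of constant t, substitute r = \tanh(\rho/2), so that 0 \le r < 1:
ds^2_{t=\mathrm{const}} = \ell^2\left(d\rho^2 + \sinh^2\!\rho\,d\phi^2\right)
  = \frac{4\ell^2\left(dr^2 + r^2\,d\phi^2\right)}{\left(1 - r^2\right)^2}.
```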

Three-dimensional anti-de Sitter space is like a stack of hyperbolic disks, each one representing the state of the universe at a given time. The resulting spacetime looks like a solid cylinder.

This construction describes a hypothetical universe with only two space dimensions and one time dimension, but it can be generalized to any number of dimensions. Indeed, hyperbolic space can have more than two dimensions and one can "stack up" copies of hyperbolic space to get higher-dimensional models of anti-de Sitter space.

The idea of AdS/CFT

An important feature of anti-de Sitter space is its boundary (which looks like a cylinder in the case of three-dimensional anti-de Sitter space). One property of this boundary is that, locally around any point, it looks just like Minkowski space, the model of spacetime used in nongravitational physics.

One can therefore consider an auxiliary theory in which "spacetime" is given by the boundary of anti-de Sitter space. This observation is the starting point for the AdS/CFT correspondence, which states that the boundary of anti-de Sitter space can be regarded as the "spacetime" for a conformal field theory. The claim is that this conformal field theory is equivalent to the gravitational theory on the bulk anti-de Sitter space in the sense that there is a "dictionary" for translating calculations in one theory into calculations in the other. Every entity in one theory has a counterpart in the other theory. For example, a single particle in the gravitational theory might correspond to some collection of particles in the boundary theory. In addition, the predictions in the two theories are quantitatively identical so that if two particles have a 40 percent chance of colliding in the gravitational theory, then the corresponding collections in the boundary theory would also have a 40 percent chance of colliding.
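
As one concrete illustration of such a dictionary (a standard entry that the text itself does not spell out): a scalar field of mass $m$ in $(d+1)$-dimensional anti-de Sitter space of radius $\ell$ corresponds to an operator of scaling dimension $\Delta$ in the $d$-dimensional boundary theory, with $\Delta(\Delta - d) = m^2\ell^2$. The sketch below takes the larger root, which is the usual choice; the function name is hypothetical.

```python
import math


def operator_dimension(d: int, m2l2: float) -> float:
    """Larger root Delta of Delta * (Delta - d) = m^2 l^2.

    d    : dimension of the boundary spacetime (the bulk is AdS_{d+1})
    m2l2 : bulk scalar mass squared times the AdS radius squared
    """
    disc = d * d / 4 + m2l2
    if disc < 0:
        # Below the Breitenlohner-Freedman bound m^2 l^2 = -d^2/4 the bulk theory is unstable.
        raise ValueError("m^2 l^2 is below the Breitenlohner-Freedman bound -d^2/4")
    return d / 2 + math.sqrt(disc)


# Massless bulk scalars in AdS_5 map to dimension-4 operators of the 4d boundary theory.
for m2l2 in (0.0, -3.0, -4.0, 5.0):
    print(m2l2, operator_dimension(4, m2l2))
```

Note that a negative mass squared is allowed in anti-de Sitter space down to the bound checked in the code.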

A hologram is a two-dimensional image which stores information about all three dimensions of the object it represents. The two images here are photographs of a single hologram taken from different angles.

Notice that the boundary of anti-de Sitter space has fewer dimensions than anti-de Sitter space itself. For instance, in the three-dimensional example illustrated above, the boundary is a two-dimensional surface. The AdS/CFT correspondence is often described as a "holographic duality" because this relationship between the two theories is similar to the relationship between a three-dimensional object and its image as a hologram.

Although a hologram is two-dimensional, it encodes information about all three dimensions of the object it represents. In the same way, theories which are related by the AdS/CFT correspondence are conjectured to be exactly equivalent, despite living in different numbers of dimensions. The conformal field theory is like a hologram which captures information about the higher-dimensional quantum gravity theory.

Examples of the correspondence

Following Maldacena's insight in 1997, theorists have discovered many different realizations of the AdS/CFT correspondence. These relate various conformal field theories to compactifications of string theory and M-theory in various numbers of dimensions. The theories involved are generally not viable models of the real world, but they have certain features, such as their particle content or high degree of symmetry, which make them useful for solving problems in quantum field theory and quantum gravity.

The most famous example of the AdS/CFT correspondence states that type IIB string theory on the product space $AdS_5 \times S^5$ is equivalent to $\mathcal{N} = 4$ supersymmetric Yang–Mills theory on the four-dimensional boundary. In this example, the spacetime on which the gravitational theory lives is effectively five-dimensional (hence the notation $AdS_5$), and there are five additional compact dimensions (encoded by the $S^5$ factor). In the real world, spacetime is four-dimensional, at least macroscopically, so this version of the correspondence does not provide a realistic model of gravity. Likewise, the dual theory is not a viable model of any real-world system as it assumes a large amount of supersymmetry. Nevertheless, as explained below, this boundary theory shares some features in common with quantum chromodynamics, the fundamental theory of the strong force. It describes particles similar to the gluons of quantum chromodynamics together with certain fermions. As a result, it has found applications in nuclear physics, particularly in the study of the quark–gluon plasma.

Another realization of the correspondence states that M-theory on $AdS_7 \times S^4$ is equivalent to the so-called (2,0)-theory in six dimensions. In this example, the spacetime of the gravitational theory is effectively seven-dimensional. The existence of the (2,0)-theory that appears on one side of the duality is predicted by the classification of superconformal field theories. It is still poorly understood because it is a quantum mechanical theory without a classical limit. Despite the inherent difficulty in studying this theory, it is considered to be an interesting object for a variety of reasons, both physical and mathematical.

Yet another realization of the correspondence states that M-theory on $AdS_4 \times S^7$ is equivalent to the ABJM superconformal field theory in three dimensions. Here the gravitational theory has four noncompact dimensions, so this version of the correspondence provides a somewhat more realistic description of gravity.

Applications to quantum gravity

A non-perturbative formulation of string theory

Interaction in the quantum world: world lines of point-like particles or a world sheet swept up by closed strings in string theory.

In quantum field theory, one typically computes the probabilities of various physical events using the techniques of perturbation theory. Developed by Richard Feynman and others in the first half of the twentieth century, perturbative quantum field theory uses special diagrams called Feynman diagrams to organize computations. One imagines that these diagrams depict the paths of point-like particles and their interactions.

Although this formalism is extremely useful for making predictions, these predictions are only possible when the strength of the interactions, the coupling constant, is small enough to reliably describe the theory as being close to a theory without interactions.
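
Why the coupling must be small can be seen in a toy model that is not taken from the text: a zero-dimensional "partition function" $Z(g) = (2\pi)^{-1/2}\int e^{-x^2/2 - g x^4/4}\,dx$, with $g$ playing the role of the coupling constant. Expanding in powers of $g$ term by term mimics perturbation theory. The series is asymptotic rather than convergent, so truncating it after a few terms works well for small $g$ but fails badly once $g$ is of order one. The function names and numerical settings below are illustrative assumptions.

```python
import math


def exact_z(g: float, cutoff: float = 12.0, steps: int = 24000) -> float:
    """Z(g) = (2 pi)^(-1/2) * integral of exp(-x^2/2 - g x^4/4) dx, by the trapezoid rule."""
    h = 2 * cutoff / steps
    total = 0.0
    for i in range(steps + 1):
        x = -cutoff + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * math.exp(-x * x / 2 - g * x ** 4 / 4)
    return total * h / math.sqrt(2 * math.pi)


def double_factorial(n: int) -> int:
    """n!! with the convention (-1)!! = 1."""
    return 1 if n <= 0 else n * double_factorial(n - 2)


def perturbative_z(g: float, order: int) -> float:
    """Partial sum of sum_n (-g/4)^n <x^(4n)> / n!, using the Gaussian moment <x^(4n)> = (4n-1)!!."""
    return sum((-g / 4) ** n * double_factorial(4 * n - 1) / math.factorial(n)
               for n in range(order + 1))


for g in (0.01, 1.0):
    print(f"g = {g}: exact {exact_z(g):.6f}, order-6 series {perturbative_z(g, 6):.6g}")
```

At $g = 0.01$ the truncated series agrees with the integral to several decimal places, while at $g = 1$ the same truncation is off by orders of magnitude.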

The starting point for string theory is the idea that the point-like particles of quantum field theory can also be modeled as one-dimensional objects called strings. The interaction of strings is most straightforwardly defined by generalizing the perturbation theory used in ordinary quantum field theory. At the level of Feynman diagrams, this means replacing the one-dimensional diagram representing the path of a point particle by a two-dimensional surface representing the motion of a string. Unlike in quantum field theory, string theory does not yet have a full non-perturbative definition, so many of the theoretical questions that physicists would like to answer remain out of reach.

The problem of developing a non-perturbative formulation of string theory was one of the original motivations for studying the AdS/CFT correspondence. As explained above, the correspondence provides several examples of quantum field theories which are equivalent to string theory on anti-de Sitter space. One can alternatively view this correspondence as providing a definition of string theory in the special case where the gravitational field is asymptotically anti-de Sitter (that is, when the gravitational field resembles that of anti-de Sitter space at spatial infinity). Physically interesting quantities in string theory are defined in terms of quantities in the dual quantum field theory.

Black hole information paradox

In 1975, Stephen Hawking published a calculation which suggested that black holes are not completely black but emit a dim radiation due to quantum effects near the event horizon.
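
How dim this radiation is can be quantified with Hawking's temperature for a non-rotating black hole of mass $M$, $T = \hbar c^3/(8\pi G M k_B)$, which falls as the mass grows. The sketch below evaluates it; the constants are CODATA values, and the solar mass is a rounded figure we supply for illustration.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # Newton's constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.989e30         # solar mass, kg (rounded)


def hawking_temperature(mass_kg: float) -> float:
    """Hawking temperature T = hbar c^3 / (8 pi G M k_B) of a Schwarzschild black hole, in kelvin."""
    return HBAR * C ** 3 / (8 * math.pi * G * mass_kg * K_B)


print(f"T for one solar mass: {hawking_temperature(M_SUN):.2e} K")
```

For one solar mass this comes out at roughly $6 \times 10^{-8}$ K, far colder than the cosmic microwave background, so astrophysical black holes absorb more radiation than they emit.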

At first, Hawking's result posed a problem for theorists because it suggested that black holes destroy information. More precisely, Hawking's calculation seemed to conflict with one of the basic postulates of quantum mechanics, which states that physical systems evolve in time according to the Schrödinger equation. This property is usually referred to as unitarity of time evolution. The apparent contradiction between Hawking's calculation and the unitarity postulate of quantum mechanics came to be known as the black hole information paradox.
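
What unitarity buys can be seen in the smallest possible example. Solving the Schrödinger equation with a time-independent Hamiltonian $H$ gives the evolution operator $U = e^{-iHt/\hbar}$, which preserves the total probability and can be undone by applying its conjugate transpose $U^\dagger$, so the initial state is always recoverable. The two-level Hamiltonian $H = a\,\sigma_x + b\,\sigma_z$ and the helper names below are arbitrary illustrative choices, with $\hbar = 1$.

```python
import math


def qubit_evolution(a: float, b: float, t: float):
    """U = exp(-i H t) for the 2x2 Hamiltonian H = a*sigma_x + b*sigma_z (hbar = 1)."""
    w = math.hypot(a, b)
    nx, nz = (a / w, b / w) if w else (0.0, 0.0)
    c, s = math.cos(w * t), math.sin(w * t)
    # Since (n.sigma)^2 = I, exp(-i w t n.sigma) = cos(w t) I - i sin(w t) n.sigma.
    return [[c - 1j * s * nz, -1j * s * nx],
            [-1j * s * nx, c + 1j * s * nz]]


def apply(m, psi):
    """Matrix-vector product for 2x2 matrices."""
    return [m[0][0] * psi[0] + m[0][1] * psi[1],
            m[1][0] * psi[0] + m[1][1] * psi[1]]


def dagger(m):
    """Conjugate transpose of a 2x2 matrix."""
    return [[m[j][i].conjugate() for j in range(2)] for i in range(2)]


def norm_squared(psi):
    """Total probability carried by the state."""
    return sum(abs(x) ** 2 for x in psi)


psi0 = [0.6, 0.8j]                      # a normalized initial state
u = qubit_evolution(0.7, 0.3, t=5.0)
psi_t = apply(u, psi0)                   # evolve forward in time
recovered = apply(dagger(u), psi_t)      # undo the evolution: no information was lost
print(norm_squared(psi_t), recovered)
```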

The AdS/CFT correspondence resolves the black hole information paradox, at least to some extent, because it shows how a black hole can evolve in a manner consistent with quantum mechanics in some contexts. Indeed, one can consider black holes in the context of the AdS/CFT correspondence, and any such black hole corresponds to a configuration of particles on the boundary of anti-de Sitter space. These particles obey the usual rules of quantum mechanics and in particular evolve in a unitary fashion, so the black hole must also evolve in a unitary fashion, respecting the principles of quantum mechanics.

In 2004, Hawking announced that the paradox had been settled in favor of information conservation by the AdS/CFT correspondence, and he suggested a concrete mechanism by which black holes might preserve information.

Applications to quantum field theory

Nuclear physics

One physical system which has been studied using the AdS/CFT correspondence is the quark–gluon plasma, an exotic state of matter produced in particle accelerators. This state of matter arises for brief instants when heavy ions such as gold or lead nuclei are collided at high energies. Such collisions cause the quarks that make up atomic nuclei to deconfine at temperatures of approximately two trillion kelvins, conditions similar to those present a tiny fraction of a second after the Big Bang.

The physics of the quark–gluon plasma is governed by quantum chromodynamics, but this theory is mathematically intractable in problems involving the quark–gluon plasma. In an article appearing in 2005, Đàm Thanh Sơn and his collaborators showed that the AdS/CFT correspondence could be used to understand some aspects of the quark–gluon plasma by describing it in the language of string theory.

By applying the AdS/CFT correspondence, Sơn and his collaborators were able to describe the quark–gluon plasma in terms of black holes in five-dimensional spacetime. The calculation showed that the ratio of two quantities associated with the quark–gluon plasma, the shear viscosity $\eta$ and the volume density of entropy $s$, should be approximately equal to a certain universal constant:

$$\frac{\eta}{s} \approx \frac{\hbar}{4\pi k},$$

where $\hbar$ denotes the reduced Planck constant and $k$ is Boltzmann's constant. In addition, the authors conjectured that this universal constant provides a lower bound for $\eta/s$ in a large class of systems. In 2008, the predicted value of this ratio for the quark–gluon plasma was confirmed at the Relativistic Heavy Ion Collider at Brookhaven National Laboratory.
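
The universal constant $\hbar/(4\pi k)$ that Sơn and his collaborators found for the ratio of shear viscosity to entropy density is tiny in everyday units. The sketch below evaluates it in SI units and converts a measured ratio into multiples of it; the function names are hypothetical, and the constants are CODATA values.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
K_B = 1.380649e-23      # Boltzmann constant, J/K


def kss_value() -> float:
    """hbar / (4 pi k_B) in SI units (kelvin-seconds)."""
    return HBAR / (4 * math.pi * K_B)


def in_kss_units(eta: float, s: float) -> float:
    """Express eta/s (eta in Pa s, s in J K^-1 m^-3) as a multiple of hbar/(4 pi k_B)."""
    return (eta / s) / kss_value()


print(f"hbar/(4 pi k_B) = {kss_value():.3e} K s")
# In natural units (hbar = k_B = 1) the same constant is simply 1/(4 pi).
print(f"1/(4 pi) = {1 / (4 * math.pi):.4f}")
```

A system sitting right at the conjectured bound would give `in_kss_units(...) == 1`; ordinary fluids such as water come out many orders of magnitude above it.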

Another important property of the quark–gluon plasma is that very high energy quarks moving through the plasma are stopped or "quenched" after traveling only a few femtometers. This phenomenon is characterized by a number $\hat{q}$ called the jet quenching parameter, which relates the energy loss of such a quark to the squared distance traveled through the plasma. Calculations based on the AdS/CFT correspondence have allowed theorists to estimate $\hat{q}$, and the results agree roughly with the measured value of this parameter, suggesting that the AdS/CFT correspondence will be useful for developing a deeper understanding of this phenomenon.
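
Taking the description of the jet quenching parameter $\hat{q}$ at face value, a quark's energy loss scales schematically as $\Delta E \propto \hat{q}\,L^2$ with the path length $L$. The sketch below encodes only that scaling; the `prefactor` argument is a hypothetical placeholder for the coupling-dependent factor a real calculation would supply, and natural units ($\hbar = c = 1$) are assumed.

```python
def schematic_energy_loss(q_hat: float, path_length: float, prefactor: float = 1.0) -> float:
    """Schematic energy loss Delta E = prefactor * q_hat * L**2 (natural units).

    `prefactor` is a placeholder, not a value from any calculation.
    """
    return prefactor * q_hat * path_length ** 2


# Doubling the path length through the plasma quadruples the energy lost.
ratio = schematic_energy_loss(1.0, 2.0) / schematic_energy_loss(1.0, 1.0)
print(ratio)
```

The quadratic dependence is what makes the plasma so effective at stopping fast quarks: a quark that has to cross twice as much plasma loses four times as much energy.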

Condensed matter physics

A magnet levitating above a high-temperature superconductor. Today some physicists are working to understand high-temperature superconductivity using the AdS/CFT correspondence.

Over the decades, experimental condensed matter physicists have discovered a number of exotic states of matter, including superconductors and superfluids. These states are described using the formalism of quantum field theory, but some phenomena are difficult to explain using standard field theoretic techniques. Some condensed matter theorists including Subir Sachdev hope that the AdS/CFT correspondence will make it possible to describe these systems in the language of string theory and learn more about their behavior.

So far some success has been achieved in using string theory methods to describe the transition of a superfluid to an insulator. A superfluid is a system of electrically neutral atoms that flows without any friction. Such systems are often produced in the laboratory using liquid helium, but recently experimentalists have developed new ways of producing artificial superfluids by pouring trillions of cold atoms into a lattice of criss-crossing lasers.

These atoms initially behave as a superfluid, but as experimentalists increase the intensity of the lasers, they become less mobile and then suddenly transition to an insulating state. During the transition, the atoms behave in an unusual way. For example, the atoms slow to a halt at a rate that depends on the temperature and on Planck's constant, the fundamental parameter of quantum mechanics, which does not enter into the description of the other phases. This behavior has recently been understood by considering a dual description where properties of the fluid are described in terms of a higher dimensional black hole.
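
A rate that depends only on temperature and Planck's constant is essentially fixed by dimensional analysis: with Boltzmann's constant converting temperature to energy, the only rate one can form is $k_B T/\hbar$. The sketch evaluates this "Planckian" rate as an order-of-magnitude estimate; the true rate carries a dimensionless prefactor that this argument cannot supply, and the 10 nK temperature is an illustrative value for ultracold atoms, not a figure from the text.

```python
HBAR = 1.054571817e-34  # reduced Planck constant, J s
K_B = 1.380649e-23      # Boltzmann constant, J/K


def planckian_rate(temperature_k: float) -> float:
    """k_B T / hbar in s^-1: the only rate that can be built from T, k_B and hbar."""
    return K_B * temperature_k / HBAR


print(f"k_B T / hbar at 10 nK: {planckian_rate(1e-8):.0f} per second")
```

The rate grows linearly with temperature, so halving the temperature of the atoms halves this characteristic rate.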

Criticism

With many physicists turning towards string-based methods to attack problems in nuclear and condensed matter physics, some theorists working in these areas have expressed doubts about whether the AdS/CFT correspondence can provide the tools needed to realistically model real-world systems. In a talk at the Quark Matter conference in 2006, the American physicist Larry McLerran pointed out that the N=4 super Yang–Mills theory that appears in the AdS/CFT correspondence differs significantly from quantum chromodynamics, making it difficult to apply these methods to nuclear physics. According to McLerran,

supersymmetric Yang–Mills is not QCD ... It has no mass scale and is conformally invariant. It has no confinement and no running coupling constant. It is supersymmetric. It has no chiral symmetry breaking or mass generation. It has six scalar and fermions in the adjoint representation ... It may be possible to correct some or all of the above problems, or, for various physical problems, some of the objections may not be relevant. As yet there is not consensus nor compelling arguments for the conjectured fixes or phenomena which would insure that the supersymmetric Yang Mills results would reliably reflect QCD.

In a letter to Physics Today, Nobel laureate Philip W. Anderson voiced similar concerns about applications of AdS/CFT to condensed matter physics, stating

As a very general problem with the AdS/CFT approach in condensed-matter theory, we can point to those telltale initials "CFT"—conformal field theory. Condensed-matter problems are, in general, neither relativistic nor conformal. Near a quantum critical point, both time and space may be scaling, but even there we still have a preferred coordinate system and, usually, a lattice. There is some evidence of other linear-T phases to the left of the strange metal about which they are welcome to speculate, but again in this case the condensed-matter problem is overdetermined by experimental facts.

History and development

Gerard 't Hooft obtained results related to the AdS/CFT correspondence in the 1970s by studying analogies between string theory and nuclear physics.

String theory and nuclear physics

The discovery of the AdS/CFT correspondence in late 1997 was the culmination of a long history of efforts to relate string theory to nuclear physics. In fact, string theory was originally developed during the late 1960s and early 1970s as a theory of hadrons, the subatomic particles like the proton and neutron that are held together by the strong nuclear force.

The idea was that each of these particles could be viewed as a different oscillation mode of a string. In the late 1960s, experimentalists had found that hadrons fall into families called Regge trajectories with squared energy proportional to angular momentum, and theorists showed that this relationship emerges naturally from the physics of a rotating relativistic string.

On the other hand, attempts to model hadrons as strings faced serious problems. One problem was that string theory includes a massless spin-2 particle whereas no such particle appears in the physics of hadrons. Such a particle would mediate a force with the properties of gravity. In 1974, Joel Scherk and John Schwarz suggested that string theory was therefore not a theory of nuclear physics as many theorists had thought but instead a theory of quantum gravity.

At the same time, it was realized that hadrons are actually made of quarks, and the string theory approach was abandoned in favor of quantum chromodynamics.

In quantum chromodynamics, quarks have a kind of charge that comes in three varieties called colors. In a paper from 1974, Gerard 't Hooft studied the relationship between string theory and nuclear physics from another point of view by considering theories similar to quantum chromodynamics, where the number of colors is some arbitrary number $N$, rather than three. In this article, 't Hooft considered a certain limit where $N$ tends to infinity and argued that in this limit certain calculations in quantum field theory resemble calculations in string theory.
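
In the notation that is standard today (the symbols $g_{\mathrm{YM}}$, $\lambda$ and $f_h$ are introduced here, not in the text), 't Hooft's limit holds the combination $\lambda$ fixed while $N$ grows. Feynman diagrams that can be drawn on a surface of genus $h$ then contribute with a weight $N^{2-2h}$, so the expansion is organized like the sum over string worldsheets of increasing genus, with $1/N$ playing the role of the string coupling.

```latex
\lambda = g_{\mathrm{YM}}^{2} N , \qquad
\ln Z = \sum_{h=0}^{\infty} N^{2-2h} f_h(\lambda)
```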

Stephen Hawking predicted in 1975 that black holes emit radiation due to quantum effects.

Black holes and holography

In 1975, Stephen Hawking published a calculation which suggested that black holes are not completely black but emit a dim radiation due to quantum effects near the event horizon. This work extended previous results of Jacob Bekenstein who had suggested that black holes have a well defined entropy.

At first, Hawking's result appeared to contradict one of the main postulates of quantum mechanics, namely the unitarity of time evolution. Intuitively, the unitarity postulate says that quantum mechanical systems do not destroy information as they evolve from one state to another. For this reason, the apparent contradiction came to be known as the black hole information paradox.

Leonard Susskind made early contributions to the idea of holography in quantum gravity.

Later, in 1993, Gerard 't Hooft wrote a speculative paper on quantum gravity in which he revisited Hawking's work on black hole thermodynamics, concluding that the total number of degrees of freedom in a region of spacetime surrounding a black hole is proportional to the surface area of the horizon. This idea was promoted by Leonard Susskind and is now known as the holographic principle.
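
The area law behind 't Hooft's counting is the Bekenstein–Hawking entropy $S = k_B A c^3/(4G\hbar)$. For a non-rotating black hole the horizon area is $A = 16\pi G^2 M^2/c^4$, so $S/k_B = 4\pi G M^2/(\hbar c)$. The sketch evaluates this; the constants are CODATA values and the solar mass is a rounded figure we supply.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # Newton's constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30         # solar mass, kg (rounded)


def horizon_area(mass_kg: float) -> float:
    """Area 4 pi r_s^2 of a Schwarzschild horizon, with r_s = 2 G M / c^2, in m^2."""
    r_s = 2 * G * mass_kg / C ** 2
    return 4 * math.pi * r_s ** 2


def entropy_over_kb(mass_kg: float) -> float:
    """Bekenstein-Hawking entropy S / k_B = A c^3 / (4 G hbar)."""
    return horizon_area(mass_kg) * C ** 3 / (4 * G * HBAR)


print(f"S / k_B for one solar mass: {entropy_over_kb(M_SUN):.2e}")
```

For one solar mass the result is of order $10^{77}$. Doubling the mass quadruples the horizon area and hence the entropy, whereas the entropy of ordinary matter would grow with its volume.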

The holographic principle and its realization in string theory through the AdS/CFT correspondence have helped elucidate the mysteries of black holes suggested by Hawking's work and are believed to provide a resolution of the black hole information paradox. In 2004, Hawking conceded that black holes do not violate quantum mechanics, and he suggested a concrete mechanism by which they might preserve information.

Maldacena's paper

In late 1997, Juan Maldacena published a landmark paper that initiated the study of AdS/CFT. According to Alexander Markovich Polyakov, "[Maldacena's] work opened the flood gates." The conjecture immediately excited great interest in the string theory community and was considered in articles by Steven Gubser, Igor Klebanov and Polyakov, and by Edward Witten.

These papers made Maldacena's conjecture more precise and showed that the conformal field theory appearing in the correspondence lives on the boundary of anti-de Sitter space.

Juan Maldacena first proposed the AdS/CFT correspondence in late 1997.

One special case of Maldacena's proposal says that N=4 super Yang–Mills theory, a gauge theory similar in some ways to quantum chromodynamics, is equivalent to string theory in five-dimensional anti-de Sitter space. This result helped clarify the earlier work of 't Hooft on the relationship between string theory and quantum chromodynamics, taking string theory back to its roots as a theory of nuclear physics.

Maldacena's results also provided a concrete realization of the holographic principle with important implications for quantum gravity and black hole physics. By the year 2015, Maldacena's paper had become the most highly cited paper in high energy physics with over 10,000 citations.

The articles that followed Maldacena's paper have provided considerable evidence that the correspondence is correct, although so far it has not been rigorously proved.

AdS/CFT finds applications

In 1999, after taking a job at Columbia University, nuclear physicist Đàm Thanh Sơn paid a visit to Andrei Starinets, a friend from Sơn's undergraduate days who happened to be doing a Ph.D. in string theory at New York University.

Although the two men had no intention of collaborating, Sơn soon realized that the AdS/CFT calculations Starinets was doing could shed light on some aspects of the quark–gluon plasma, an exotic state of matter produced when heavy ions are collided at high energies. In collaboration with Starinets and Pavel Kovtun, Sơn was able to use the AdS/CFT correspondence to calculate a key parameter of the plasma.

As Sơn later recalled, "We turned the calculation on its head to give us a prediction for the value of the shear viscosity of a plasma ... A friend of mine in nuclear physics joked that ours was the first useful paper to come out of string theory."

Today physicists continue to look for applications of the AdS/CFT correspondence in quantum field theory.

In addition to the applications to nuclear physics advocated by Đàm Thanh Sơn and his collaborators, condensed matter physicists such as Subir Sachdev have used string theory methods to understand some aspects of condensed matter physics. A notable result in this direction was the description, via the AdS/CFT correspondence, of the transition of a superfluid to an insulator. Another emerging subject is the fluid/gravity correspondence, which uses the AdS/CFT correspondence to translate problems in fluid dynamics into problems in general relativity.

Generalizations

Three-dimensional gravity

In order to better understand the quantum aspects of gravity in our four-dimensional universe, some physicists have considered a lower-dimensional mathematical model in which spacetime has only two spatial dimensions and one time dimension. In this setting, the mathematics describing the gravitational field simplifies drastically, and one can study quantum gravity using familiar methods from quantum field theory, eliminating the need for string theory or other more radical approaches to quantum gravity in four dimensions.

Beginning with the work of J. D. Brown and Marc Henneaux in 1986, physicists have noticed that quantum gravity in a three-dimensional spacetime is closely related to two-dimensional conformal field theory. In 1995, Henneaux and his coworkers explored this relationship in more detail, suggesting that three-dimensional gravity in anti-de Sitter space is equivalent to the conformal field theory known as Liouville field theory.

Another conjecture formulated by Edward Witten states that three-dimensional gravity in anti-de Sitter space is equivalent to a conformal field theory with monster group symmetry. These conjectures provide examples of the AdS/CFT correspondence that do not require the full apparatus of string or M-theory.
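
The quantitative link found by Brown and Henneaux (not stated in the text above) is a formula for the central charge $c$ of the two-dimensional conformal field theory in terms of the anti-de Sitter radius $\ell$ and Newton's constant $G$: $c = 3\ell/(2G)$, in units where $\hbar$ and the speed of light are set to 1. The sketch evaluates it and its inverse; the function names are hypothetical. For example, $c = 24$, the central charge of the conformal field theory with monster group symmetry, corresponds to $\ell = 16G$.

```python
def brown_henneaux_central_charge(ads_radius: float, newton_g: float) -> float:
    """Central charge c = 3 l / (2 G) for gravity in AdS_3 of radius l (hbar = speed of light = 1)."""
    return 3 * ads_radius / (2 * newton_g)


def ads_radius_for_central_charge(c: float, newton_g: float = 1.0) -> float:
    """Inverse of the formula above: the AdS radius l = 2 G c / 3 that yields central charge c."""
    return 2 * newton_g * c / 3


print(brown_henneaux_central_charge(16.0, 1.0))
print(ads_radius_for_central_charge(24.0))
```

A large central charge corresponds to an anti-de Sitter radius much larger than the Planck length, which is the regime where classical gravity is a good approximation.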

dS/CFT correspondence

Unlike our universe, which is now known to be expanding at an accelerating rate, anti-de Sitter space is neither expanding nor contracting. Instead it looks the same at all times. In more technical language, one says that anti-de Sitter space corresponds to a universe with a negative cosmological constant, whereas the real universe has a small positive cosmological constant.

Although the properties of gravity at short distances should be somewhat independent of the value of the cosmological constant, it is desirable to have a version of the AdS/CFT correspondence for positive cosmological constant. In 2001, Andrew Strominger introduced a version of the duality called the dS/CFT correspondence. This duality involves a model of spacetime called de Sitter space with a positive cosmological constant. Such a duality is interesting from the point of view of cosmology since many cosmologists believe that the very early universe was close to being de Sitter space. Our universe may also resemble de Sitter space in the distant future.

Kerr/CFT correspondence

Although the AdS/CFT correspondence is often useful for studying the properties of black holes, most of the black holes considered in the context of AdS/CFT are physically unrealistic. Indeed, as explained above, most versions of the AdS/CFT correspondence involve higher-dimensional models of spacetime with unphysical supersymmetry.

In 2009, Monica Guica, Thomas Hartman, Wei Song, and Andrew Strominger showed that the ideas of AdS/CFT could nevertheless be used to understand certain astrophysical black holes. More precisely, their results apply to black holes that are approximated by extremal Kerr black holes, which have the largest possible angular momentum compatible with a given mass. They showed that such black holes have an equivalent description in terms of conformal field theory. The Kerr/CFT correspondence was later extended to black holes with lower angular momentum.
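
The largest angular momentum compatible with a mass $M$ is $J_{\max} = GM^2/c$; a Kerr black hole at this limit is extremal. The sketch computes this limit and the dimensionless spin $a_* = cJ/(GM^2)$, which lies between 0 and 1 and equals 1 for an extremal black hole. The function names are hypothetical, and the solar mass is a rounded figure we supply.

```python
G = 6.67430e-11      # Newton's constant, m^3 kg^-1 s^-2
C = 2.99792458e8     # speed of light, m/s
M_SUN = 1.989e30     # solar mass, kg (rounded)


def max_angular_momentum(mass_kg: float) -> float:
    """Largest angular momentum J_max = G M^2 / c allowed for a Kerr black hole, in kg m^2 / s."""
    return G * mass_kg ** 2 / C


def spin_parameter(j: float, mass_kg: float) -> float:
    """Dimensionless spin a* = c J / (G M^2); a* = 1 means the black hole is extremal."""
    return j / max_angular_momentum(mass_kg)


print(f"J_max for one solar mass: {max_angular_momentum(M_SUN):.2e} kg m^2/s")
```

For one solar mass, $J_{\max}$ is about $8.8 \times 10^{41}$ kg m²/s; astrophysical black holes whose measured $a_*$ is close to 1 are the ones the Kerr/CFT correspondence describes most directly.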

Higher spin gauge theories

The AdS/CFT correspondence is closely related to another duality conjectured by Igor Klebanov and Alexander Markovich Polyakov in 2002. This duality states that certain "higher spin gauge theories" on anti-de Sitter space are equivalent to conformal field theories with O(N) symmetry. Here the theory in the bulk is a type of gauge theory describing particles of arbitrarily high spin. It is similar to string theory, where the excited modes of vibrating strings correspond to particles with higher spin, and it may help to better understand the string theoretic versions of AdS/CFT and possibly even prove the correspondence. In 2010, Simone Giombi and Xi Yin obtained further evidence for this duality by computing quantities called three-point functions.