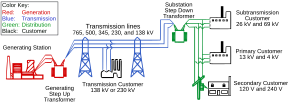

An electrical grid is an interconnected network for electricity delivery from producers to consumers. Electrical grids vary in size and can cover whole countries or continents. A grid consists of:

- power stations: often located near energy sources and away from heavily populated areas

- electrical substations to step voltage up or down

- electric power transmission to carry power long distances

- electric power distribution to individual customers, where voltage is stepped down again to the required service voltage(s).

Grids are nearly always synchronous, meaning all distribution areas operate with three-phase alternating current (AC) frequencies synchronized (so that voltage swings occur at almost the same time). This allows transmission of AC power throughout the area, connecting a large number of electricity generators and consumers and potentially enabling more efficient electricity markets and redundant generation.

The combined transmission and distribution network is part of electricity delivery, known as the "power grid" in North America, or just "the grid." In the United Kingdom, India, Tanzania, Myanmar, Malaysia and New Zealand, the network is known as the National Grid.

Although electrical grids are widespread, as of 2016, 1.4 billion people worldwide were not connected to an electricity grid. As electrification increases, the number of people with access to grid electricity is growing. About 840 million people (mostly in Africa) had no access to grid electricity in 2017, down from 1.2 billion in 2010.

Electrical grids can be prone to malicious intrusion or attack; thus, there is a need for electric grid security. As electric grids modernize and introduce computer technology, cyber threats also become a security risk. Particular concerns relate to the more complex computer systems needed to manage grids.

History

Early electric energy was produced near the device or service requiring that energy. In the 1880s, electricity competed with steam, hydraulics, and especially coal gas. Coal gas was first produced on customers' premises but later evolved into gasification plants that enjoyed economies of scale. In the industrialized world, cities had networks of piped gas, used for lighting. But gas lamps produced poor light, wasted heat, made rooms hot and smoky, and gave off hydrogen and carbon monoxide. They also posed a fire hazard. In the 1880s electric lighting soon became advantageous compared to gas lighting.

Electric utility companies established central stations to take advantage of economies of scale and moved to centralized power generation, distribution, and system management. After the war of the currents was settled in favor of AC power, with long-distance power transmission it became possible to interconnect stations to balance the loads and improve load factors. Historically, transmission and distribution lines were owned by the same company, but starting in the 1990s, many countries have liberalized the regulation of the electricity market in ways that have led to the separation of the electricity transmission business from the distribution business.

In the United Kingdom, Charles Merz, of the Merz & McLellan consulting partnership, built the Neptune Bank Power Station near Newcastle upon Tyne in 1901, and by 1912 it had developed into the largest integrated power system in Europe. Merz was appointed head of a parliamentary committee and his findings led to the Williamson Report of 1918, which in turn created the Electricity (Supply) Act 1919. The bill was the first step towards an integrated electricity system. The Electricity (Supply) Act 1926 led to the setting up of the National Grid. The Central Electricity Board standardized the nation's electricity supply and established the first synchronized AC grid, running at 132 kilovolts and 50 Hertz. This started operating as a national system, the National Grid, in 1938.

In the United States in the 1920s, utilities formed joint operations to share peak load coverage and backup power. In 1934, with the passage of the Public Utility Holding Company Act (USA), electric utilities were recognized as public goods of importance and were given outlined restrictions and regulatory oversight of their operations. The Energy Policy Act of 1992 required transmission line owners to allow electric generation companies open access to their network and led to a restructuring of how the electric industry operated in an effort to create competition in power generation. No longer were electric utilities built as vertical monopolies, where generation, transmission and distribution were handled by a single company. Now, the three stages could be split among various companies, in an effort to provide fair access to high voltage transmission. The Energy Policy Act of 2005 allowed incentives and loan guarantees for alternative energy production and advanced innovative technologies that avoided greenhouse emissions.

In France, electrification began in the 1900s, with 700 communes in 1919, and 36,528 in 1938. At the same time, these close networks began to interconnect: Paris in 1907 at 12 kV, the Pyrénées in 1923 at 150 kV, and finally almost all of the country interconnected by 1938 at 220 kV. In 1946, the grid was the world's most dense. That year the state nationalised the industry, by uniting the private companies as Électricité de France. The frequency was standardised at 50 Hz, and the 225 kV network replaced 110 kV and 120 kV. Since 1956, service voltage has been standardised at 220/380 V, replacing the previous 127/220 V. During the 1970s, the 400 kV network, the new European standard, was implemented.

In China, electrification began in the 1950s. In August 1961, the electrification of the Baoji-Fengzhou section of the Baocheng Railway was completed and delivered for operation, becoming China's first electrified railway. From 1958 to 1998, China's electrified railway reached 6,200 miles (10,000 kilometers). As of the end of 2017, this number has reached 54,000 miles (87,000 kilometers). In the current railway electrification system of China, State Grid Corporation of China is an important power supplier. In 2019, it completed the power supply project of China's important electrified railways in its operating areas, such as Jingtong Railway, Haoji Railway, Zhengzhou–Wanzhou high-speed railway, et cetera, providing power supply guarantee for 110 traction stations, and its cumulative power line construction length reached 6,586 kilometers.

Components

Generation

Electricity generation is the process of generating electric power from sources of primary energy, typically at power stations. Usually this is done with electromechanical generators driven by heat engines or the kinetic energy of water or wind. Other energy sources include solar photovoltaics and geothermal power.

The sum of the power outputs of generators on the grid is the production of the grid, typically measured in gigawatts (GW).

Transmission

Electric power transmission is the bulk movement of electrical energy from a generating site, via a web of interconnected lines, to an electrical substation, from which it is connected to the distribution system. This networked system of connections is distinct from the local wiring between high-voltage substations and customers.

Because the power is often generated far from where it is consumed, the transmission system can cover great distances. For a given amount of power, transmission efficiency is greater at higher voltages and lower amperages. Therefore voltages are stepped up at the generating station, and stepped down at local substations for distribution to customers.

Most transmission is three-phase. Three-phase, compared to single-phase, can deliver much more power for a given amount of wire, since the neutral and ground wires are shared. Further, three-phase generators and motors are more efficient than their single-phase counterparts.

For conventional conductors, however, one of the main losses is resistive loss, which scales with the square of the current and grows with distance. High-voltage AC transmission lines can lose 1-4% per hundred miles. High-voltage direct current (HVDC), however, can have half the losses of AC. Over very long distances, these efficiencies can offset the additional cost of the required AC/DC converter stations at each end.
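
The square-law relationship can be illustrated with a small calculation. The following is a minimal sketch under simplifying assumptions: a single conductor with a fixed total resistance, current taken as power divided by voltage, and reactive effects ignored. The function name and the example figures (100 MW, 10 ohms) are hypothetical and chosen only for illustration.

```python
def line_loss_fraction(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Fraction of transmitted power dissipated as heat in the line.

    Simplified model (an assumption, not a full AC line model):
    current I = P / V, resistive loss = I^2 * R.
    """
    current_a = power_w / voltage_v
    loss_w = current_a ** 2 * resistance_ohm
    return loss_w / power_w


# Hypothetical example: 100 MW sent over a line with 10 ohms of resistance.
for kilovolts in (110, 220, 400):
    fraction = line_loss_fraction(100e6, kilovolts * 1e3, 10.0)
    print(f"{kilovolts} kV: {fraction:.2%} of the power is lost")
```

In this model, doubling the voltage halves the current and so cuts the resistive loss to a quarter, which is why voltages are stepped up for long-distance transmission.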

Transmission networks are complex with redundant pathways. The physical layout is often forced by what land is available and its geology. Most transmission grids offer the reliability that more complex mesh networks provide. Redundancy allows line failures to occur and power is simply rerouted while repairs are done.

Substations

Substations may perform many different functions but usually transform voltage from low to high (step up) and from high to low (step down). Between the generator and the final consumer, the voltage may be transformed several times.

The three main types of substations, by function, are:

- Step-up substation: these use transformers to raise the voltage coming from the generators and power plants so that power can be transmitted long distances more efficiently, with smaller currents.

- Step-down substation: these transformers lower the voltage coming from the transmission lines which can be used in industry or sent to a distribution substation.

- Distribution substation: these transform the voltage lower again for the distribution to end users.

Aside from transformers, other major components or functions of substations include:

- Circuit breakers: used to automatically break a circuit and isolate a fault in the system.

- Switches: to control the flow of electricity, and isolate equipment.

- The substation busbar: typically a set of three conductors, one for each phase of current. The substation is organized around the buses, and they are connected to incoming lines, transformers, protection equipment, switches, and the outgoing lines.

- Lightning arresters

- Capacitors for power factor correction

Electric power distribution

Distribution is the final stage in the delivery of power; it carries electricity from the transmission system to individual consumers. Substations connect to the transmission system and lower the transmission voltage to medium voltage ranging between 2 kV and 35 kV. Primary distribution lines carry this medium voltage power to distribution transformers located near the customer's premises. Distribution transformers again lower the voltage to the utilization voltage. Customers demanding a much larger amount of power may be connected directly to the primary distribution level or the subtransmission level.

Distribution networks are divided into two types, radial or network.

In cities and towns of North America, the grid tends to follow the classic radially fed design. A substation receives its power from the transmission network; the power is stepped down with a transformer and sent to a bus from which feeders fan out in all directions across the countryside. These feeders carry three-phase power, and tend to follow the major streets near the substation. As the distance from the substation grows, the fanout continues as smaller laterals spread out to cover areas missed by the feeders. This tree-like structure grows outward from the substation, but for reliability reasons, usually contains at least one unused backup connection to a nearby substation. This connection can be enabled in case of an emergency, so that a portion of a substation's service territory can be alternatively fed by another substation.
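
The role of the normally unused backup connection can be sketched as a graph reachability check. This is an illustrative model only: the node names, the three-segment feeder, and the `energized` helper are hypothetical, and real switching also has to respect equipment ratings and protection settings.

```python
from collections import deque


def energized(edges, sources):
    """Return the set of nodes reachable from any source over closed edges."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen = set(sources)
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for neighbour in adjacency.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


# Hypothetical radial feeder: substation A feeds f1 -> f2 -> f3.
feeder = [("sub_A", "f1"), ("f1", "f2"), ("f2", "f3")]
tie = ("f3", "sub_B")  # backup connection to a nearby substation, normally open

# Segment f1-f2 is out of service after a fault.
faulted = [edge for edge in feeder if edge != ("f1", "f2")]
without_tie = energized(faulted, ["sub_A", "sub_B"])
with_tie = energized(faulted + [tie], ["sub_A", "sub_B"])
```

With the tie open, everything downstream of the fault is cut off; closing the tie lets the neighbouring substation pick up that part of the service territory.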

Storage

Grid energy storage (also called large-scale energy storage) is a collection of methods used for energy storage on a large scale within an electrical power grid. Electrical energy is stored during times when electricity is plentiful and inexpensive (especially from intermittent power sources such as renewable electricity from wind power, tidal power and solar power) or when demand is low, and later returned to the grid when demand is high, and electricity prices tend to be higher.

As of 2020, the largest form of grid energy storage is dammed hydroelectricity, with both conventional hydroelectric generation as well as pumped storage hydroelectricity.

Developments in battery storage have enabled commercially viable projects to store energy during peak production and release during peak demand, and for use when production unexpectedly falls giving time for slower responding resources to be brought online.

Two alternatives to grid storage are the use of peaking power plants to fill in supply gaps and demand response to shift load to other times.

Functionalities

Demand

The demand, or load, on an electrical grid is the total electrical power being drawn by the users of the grid.

The graph of the demand over time is called the demand curve.

Baseload is the minimum load on the grid over any given period; peak demand is the maximum load. Historically, baseload was commonly met by equipment that was relatively cheap to run and that ran continuously for weeks or months at a time, but globally this is becoming less common. The extra peak demand requirements are sometimes met by expensive peaking plants, which are generators optimised to come on-line quickly, but these too are becoming less common.
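
As a small illustration, baseload and peak demand can be read directly off a sampled demand curve. The sketch below assumes the curve is available as a list of demand samples in megawatts; the function names and the sample data are hypothetical. It also computes the load factor, taken here as average demand divided by peak demand.

```python
def baseload_and_peak(demand_mw):
    """Return (baseload, peak) for a sequence of demand samples in MW."""
    if not demand_mw:
        raise ValueError("demand curve must contain at least one sample")
    return min(demand_mw), max(demand_mw)


def load_factor(demand_mw):
    """Average demand divided by peak demand over the sampled period."""
    _, peak = baseload_and_peak(demand_mw)
    return sum(demand_mw) / len(demand_mw) / peak


# Hypothetical demand samples over one day, in MW.
samples = [620, 580, 560, 600, 750, 900, 980, 940, 870, 760]
baseload, peak = baseload_and_peak(samples)
print(f"baseload {baseload} MW, peak {peak} MW, load factor {load_factor(samples):.2f}")
```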

Voltage

Grids are designed to supply electricity to their customers at largely constant voltages. This has to be achieved with varying demand, variable reactive loads, and even nonlinear loads, with electricity provided by generators and distribution and transmission equipment that are not perfectly reliable. Often grids use tap changers on transformers near to the consumers to adjust the voltage and keep it within specification.

Frequency

In a synchronous grid all the generators must run at the same frequency, and must stay very nearly in phase with each other and the grid. Generation and consumption must be balanced across the entire grid, because energy is consumed as it is produced. For rotating generators, a local governor regulates the driving torque, maintaining almost constant rotation speed as loading changes. Energy is stored in the immediate short term by the rotational kinetic energy of the generators.

Although the speed is kept largely constant, small deviations from the nominal system frequency are very important in regulating individual generators and are used as a way of assessing the equilibrium of the grid as a whole. When the grid is lightly loaded the grid frequency runs above the nominal frequency, and this is taken as an indication by Automatic Generation Control (AGC) systems across the network that generators should reduce their output. Conversely, when the grid is heavily loaded, the frequency naturally slows, and governors adjust their generators so that more power is output (droop speed control). When parallel generators have identical droop speed control settings, they share load in proportion to their ratings.

In addition, there is often central control, which can change the parameters of the AGC systems over timescales of a minute or longer to further adjust the regional network flows and the operating frequency of the grid.

For timekeeping purposes, the nominal frequency will be allowed to vary in the short term, but is adjusted to prevent line-operated clocks from gaining or losing significant time over the course of a whole 24-hour period.
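
The proportional load sharing produced by droop speed control can be sketched numerically. The example below is a simplified steady-state model, assuming every generator has the same droop setting and enough headroom to respond; the 5% droop value, the 50 Hz nominal frequency, and the function name are assumptions made for illustration.

```python
def droop_share(load_change_mw, ratings_mw, droop=0.05, f_nominal_hz=50.0):
    """Split a load change among generators with identical droop settings.

    `droop` is the per-unit frequency change that moves a generator from
    no load to full rated output (0.05 = 5%, an assumed setting).
    Returns (frequency_deviation_hz, [power_change_per_generator_mw]).
    """
    total_rating = sum(ratings_mw)
    # All machines on a synchronous grid see the same per-unit frequency deviation.
    delta_f_pu = -droop * load_change_mw / total_rating
    # Each governor responds in proportion to its own rating.
    shares = [-delta_f_pu / droop * rating for rating in ratings_mw]
    return delta_f_pu * f_nominal_hz, shares


# Hypothetical case: 60 MW of extra load on a 100 MW and a 200 MW generator.
deviation_hz, extra_output_mw = droop_share(60.0, [100.0, 200.0])
```

In this sketch the larger machine takes twice the share of the smaller one, and the frequency settles below nominal until slower controls restore it.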

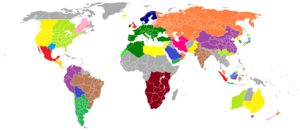

An entire synchronous grid runs at the same frequency, but neighbouring grids are not synchronised with each other even if they run at the same nominal frequency. High-voltage direct current lines or variable-frequency transformers can be used to connect two alternating current interconnection networks which are not synchronized with each other. This provides the benefit of interconnection without the need to synchronize an even wider area. For example, compare the wide area synchronous grid map of Europe with the map of HVDC lines.

Capacity and firm capacity

The sum of the maximum power outputs (nameplate capacity) of the generators attached to an electrical grid might be considered to be the capacity of the grid.

However, in practice, the generators are never run flat out simultaneously. Typically, some generators are kept running at lower output powers (spinning reserve) to deal with failures as well as variation in demand. In addition, generators can be off-line for maintenance or other reasons, such as availability of energy inputs (fuel, water, wind, sun etc.) or pollution constraints.

Firm capacity is the maximum power output on a grid that is immediately available over a given time period, and is a far more useful figure.

Production

Most grid codes specify that the load is shared between the generators in merit order according to their marginal cost (i.e. cheapest first) and sometimes their environmental impact. Thus cheap electricity providers tend to be run flat out almost all the time, and the more expensive producers are only run when necessary.
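
Merit-order dispatch can be sketched as a greedy allocation that fills demand from the cheapest generator upward. This is a minimal illustration that ignores network constraints, ramp rates, reserves, and environmental weighting; the generator names, capacities, and costs are hypothetical.

```python
def merit_order_dispatch(generators, demand_mw):
    """Greedy dispatch: lowest marginal cost first.

    generators: iterable of (name, capacity_mw, marginal_cost_per_mwh).
    Returns {name: dispatched_mw}; raises if demand exceeds total capacity.
    """
    remaining = demand_mw
    dispatch = {}
    for name, capacity, _cost in sorted(generators, key=lambda g: g[2]):
        output = min(capacity, max(remaining, 0.0))
        dispatch[name] = output
        remaining -= output
    if remaining > 1e-9:
        raise ValueError("demand exceeds available capacity")
    return dispatch


# Hypothetical fleet: (name, capacity in MW, marginal cost per MWh).
fleet = [("gas", 300, 60.0), ("wind", 200, 0.0), ("nuclear", 500, 10.0)]
schedule = merit_order_dispatch(fleet, 800)
```

Here the two cheapest units run at full output and the most expensive one covers only the remainder, matching the pattern described above.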

Handling failure

Failures are usually associated with generators or power transmission lines tripping circuit breakers due to faults, leading to a loss of generation capacity for customers, or excess demand. This will often cause the frequency to reduce, and the remaining generators will react and together attempt to stabilize above the minimum. If that is not possible then a number of scenarios can occur.

A large failure in one part of the grid—unless quickly compensated for—can cause current to re-route itself to flow from the remaining generators to consumers over transmission lines of insufficient capacity, causing further failures. One downside to a widely connected grid is thus the possibility of cascading failure and widespread power outage. A central authority is usually designated to facilitate communication and develop protocols to maintain a stable grid. For example, the North American Electric Reliability Corporation gained binding powers in the United States in 2006, and has advisory powers in the applicable parts of Canada and Mexico. The U.S. government has also designated National Interest Electric Transmission Corridors, where it believes transmission bottlenecks have developed.

Brownout

A brownout is an intentional or unintentional drop in voltage in an electrical power supply system. Intentional brownouts are used for load reduction in an emergency. The reduction lasts for minutes or hours, as opposed to short-term voltage sag (or dip). The term brownout comes from the dimming experienced by incandescent lighting when the voltage sags. A voltage reduction may be an effect of disruption of an electrical grid, or may occasionally be imposed in an effort to reduce load and prevent a power outage, known as a blackout.

Blackout

A power outage (also called a power cut, a power out, a power blackout, power failure or a blackout) is a loss of the electric power to a particular area.

Power failures can be caused by faults at power stations, damage to electric transmission lines, substations or other parts of the distribution system, a short circuit, cascading failure, fuse or circuit breaker operation, and human error.

Power failures are particularly critical at sites where the environment and public safety are at risk. Institutions such as hospitals, sewage treatment plants, mines, shelters and the like will usually have backup power sources such as standby generators, which will automatically start up when electrical power is lost. Other critical systems, such as telecommunication, are also required to have emergency power. The battery room of a telephone exchange usually has arrays of lead–acid batteries for backup and also a socket for connecting a generator during extended periods of outage.

Load shedding

Electrical generation and transmission systems may not always meet peak demand requirements, that is, the greatest amount of electricity required by all utility customers within a given region. In these situations, overall demand must be lowered, either by turning off service to some devices or cutting back the supply voltage (brownouts), in order to prevent uncontrolled service disruptions such as power outages (widespread blackouts) or equipment damage. Utilities may impose load shedding on service areas via targeted blackouts, rolling blackouts or by agreements with specific high-use industrial consumers to turn off equipment at times of system-wide peak demand.

Black start

A black start is the process of restoring an electric power station or a part of an electric grid to operation without relying on the external electric power transmission network to recover from a total or partial shutdown.

Normally, the electric power used within the plant is provided from the station's own generators. If all of the plant's main generators are shut down, station service power is provided by drawing power from the grid through the plant's transmission line. However, during a wide-area outage, off-site power from the grid is not available. In the absence of grid power, a so-called black start needs to be performed to bootstrap the power grid into operation.

To provide a black start, some power stations have small diesel generators, normally called the black start diesel generator (BSDG), which can be used to start larger generators (of several megawatts capacity), which in turn can be used to start the main power station generators. Generating plants using steam turbines require station service power of up to 10% of their capacity for boiler feedwater pumps, boiler forced-draft combustion air blowers, and for fuel preparation. It is uneconomical to provide such a large standby capacity at each station, so black-start power must be provided over designated tie lines from another station. Often hydroelectric power plants are designated as the black-start sources to restore network interconnections. A hydroelectric station needs very little initial power to start (just enough to open the intake gates and provide excitation current to the generator field coils), and can put a large block of power on line very quickly to allow start-up of fossil-fuel or nuclear stations. Certain types of combustion turbine can be configured for black start, providing another option in places without suitable hydroelectric plants. In 2017 a utility in Southern California successfully demonstrated the use of a battery energy storage system to provide a black start, firing up a combined cycle gas turbine from an idle state.

Scale

Microgrid

A microgrid is a local grid that is usually part of the regional wide-area synchronous grid but which can disconnect and operate autonomously. It might do this in times when the main grid is affected by outages. This is known as islanding, and it might run indefinitely on its own resources.

Compared to larger grids, microgrids typically use a lower voltage distribution network and distributed generators. Microgrids may not only be more resilient, but may be cheaper to implement in isolated areas.

A design goal is that a local area produces all of the energy it uses.

Example implementations include:

- Hajjah and Lahj, Yemen: community-owned solar microgrids.

- Île d'Yeu pilot program: sixty-four solar panels with a peak capacity of 23.7 kW on five houses and a battery with a storage capacity of 15 kWh.

- Les Anglais, Haiti: includes energy theft detection.

- Mpeketoni, Kenya: a community-based diesel-powered micro-grid system.

- Stone Edge Farm Winery: micro-turbine, fuel-cell, multiple battery, hydrogen electrolyzer, and PV enabled winery in Sonoma, California.

Wide area synchronous grid

A wide area synchronous grid, also known as an "interconnection" in North America, directly connects many generators delivering AC power with the same relative frequency to many consumers. For example, there are four major interconnections in North America (the Western Interconnection, the Eastern Interconnection, the Quebec Interconnection and the Texas Interconnection). In Europe one large grid connects most of continental Europe.

Such a grid is an electrical grid at a regional scale or greater that operates at a synchronized frequency and is electrically tied together during normal system conditions. These grids are also known as synchronous zones, the largest of which is the synchronous grid of Continental Europe (ENTSO-E) with 667 gigawatts (GW) of generation, and the widest region served being that of the IPS/UPS system serving countries of the former Soviet Union. Synchronous grids with ample capacity facilitate electricity market trading across wide areas. In the ENTSO-E in 2008, over 350,000 megawatt hours were sold per day on the European Energy Exchange (EEX).

Each of the interconnections in North America is run at a nominal 60 Hz, while those of Europe run at 50 Hz. Neighbouring interconnections with the same frequency and standards can be synchronized and directly connected to form a larger interconnection, or they may share power without synchronization via high-voltage direct current power transmission lines (DC ties), or with variable-frequency transformers (VFTs), which permit a controlled flow of energy while also functionally isolating the independent AC frequencies of each side.

The benefits of synchronous zones include pooling of generation, resulting in lower generation costs; pooling of load, resulting in significant equalizing effects; common provisioning of reserves, resulting in cheaper primary and secondary reserve power costs; opening of the market, resulting in possibility of long-term contracts and short term power exchanges; and mutual assistance in the event of disturbances.

One disadvantage of a wide-area synchronous grid is that problems in one part can have repercussions across the whole grid. For example, in 2018 Kosovo used more power than it generated due to a dispute with Serbia, leading to the phase across the whole synchronous grid of Continental Europe lagging behind what it should have been. The frequency dropped to 49.996 Hz. This caused certain kinds of clocks to become six minutes slow.
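
The link between a small frequency deviation and a visible clock error can be checked with simple arithmetic. The sketch below assumes a clock that counts mains cycles and treats 49.996 Hz as the average frequency over the whole period, which is a simplifying assumption; the function names are hypothetical.

```python
def clock_error_seconds(avg_frequency_hz, nominal_hz, elapsed_seconds):
    """Time gained (+) or lost (-) by a clock that counts mains cycles."""
    return (avg_frequency_hz - nominal_hz) / nominal_hz * elapsed_seconds


def days_to_accumulate(error_seconds, avg_frequency_hz, nominal_hz):
    """Days needed for the clock error to reach `error_seconds`."""
    per_day = clock_error_seconds(avg_frequency_hz, nominal_hz, 86_400)
    return error_seconds / per_day


# Loss per day at an assumed average of 49.996 Hz on a 50 Hz grid.
loss_per_day = clock_error_seconds(49.996, 50.0, 86_400)
# Days needed to fall six minutes (360 s) behind at that average frequency.
days_for_six_minutes = days_to_accumulate(-360.0, 49.996, 50.0)
```

Under these assumptions a clock loses about seven seconds a day, so a six-minute lag corresponds to a deviation sustained over several weeks rather than a brief dip.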

Super grid

A super grid or supergrid is a wide-area transmission network that is intended to make possible the trade of high volumes of electricity across great distances. It is sometimes also referred to as a mega grid. Super grids can support a global energy transition by smoothing local fluctuations of wind energy and solar energy. In this context they are considered as a key technology to mitigate global warming. Super grids typically use high-voltage direct current (HVDC) to transmit electricity long distances. The latest generation of HVDC power lines can transmit energy with losses of only 1.6% per 1000 km.

Electric utilities between regions are often interconnected for improved economy and reliability. Electrical interconnectors allow for economies of scale, allowing energy to be purchased from large, efficient sources. Utilities can draw power from generator reserves from a different region to ensure continuing, reliable power and diversify their loads. Interconnection also allows regions to have access to cheap bulk energy by receiving power from different sources. For example, one region may be producing cheap hydro power during high water seasons, but in low water seasons, another area may be producing cheaper power through wind, allowing both regions to access cheaper energy sources from one another during different times of the year. Neighboring utilities also help others to maintain the overall system frequency and also help manage tie transfers between utility regions.
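
To see what a per-distance loss figure implies over a long route, the loss can be compounded segment by segment. This is a rough sketch: it assumes every segment loses the same fraction of the power entering it and ignores converter station losses; the function name and the 3000 km route are hypothetical.

```python
def delivered_fraction(distance, loss_per_segment, segment_length):
    """Fraction of the sent power that arrives after `distance`.

    Assumes each segment of `segment_length` loses the same fraction
    `loss_per_segment` of the power entering it (a compounding model).
    `distance` and `segment_length` must use the same unit.
    """
    return (1.0 - loss_per_segment) ** (distance / segment_length)


# Hypothetical 3000 km HVDC route at 1.6% loss per 1000 km.
hvdc_delivered = delivered_fraction(3000, 0.016, 1000)
print(f"about {hvdc_delivered:.1%} of the power arrives")
```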

The Electricity Interconnection Level (EIL) of a grid is the ratio of the total interconnector power into the grid to the installed production capacity of the grid. The EU has set a target of national grids reaching 10% by 2020, and 15% by 2030.
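
The ratio is straightforward to compute. The sketch below uses hypothetical interconnector ratings and installed capacity; the function name is an assumption made for illustration.

```python
def interconnection_level(interconnector_mw, installed_capacity_mw):
    """Total interconnector power divided by installed production capacity."""
    if installed_capacity_mw <= 0:
        raise ValueError("installed capacity must be positive")
    return sum(interconnector_mw) / installed_capacity_mw


# Hypothetical grid: two interconnectors (1000 MW and 500 MW), 15 GW installed.
eil = interconnection_level([1000, 500], 15_000)
meets_2020_target = eil >= 0.10
meets_2030_target = eil >= 0.15
```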

Trends

Demand response

Demand response is a grid management technique where retail or wholesale customers are requested or incentivised either electronically or manually to reduce their load. Currently, transmission grid operators use demand response to request load reduction from major energy users such as industrial plants. Technologies such as smart metering can encourage customers to use power when electricity is plentiful by allowing for variable pricing.

Aging infrastructure

Despite the novel institutional arrangements and network designs of the electrical grid, its power delivery infrastructures suffer aging across the developed world. Contributing factors to the current state of the electric grid and its consequences include:

- Aging equipment – older equipment has higher failure rates, leading to customer interruption rates affecting the economy and society; also, older assets and facilities lead to higher inspection maintenance costs and further repair and restoration costs.

- Obsolete system layout – older areas require serious additional substation sites and rights-of-way that cannot be obtained in the current area and are forced to use existing, insufficient facilities.

- Outdated engineering – traditional tools for power delivery planning and engineering are ineffective in addressing current problems of aged equipment, obsolete system layouts, and modern deregulated loading levels.

- Old cultural value – planning, engineering, and operating the system using concepts and procedures that worked in a vertically integrated industry exacerbates the problem under a deregulated industry.

Distributed generation

With everything interconnected, and open competition occurring in a free market economy, it starts to make sense to allow and even encourage distributed generation (DG). Smaller generators, usually not owned by the utility, can be brought on-line to help supply the need for power. The smaller generation facility might be a home-owner with excess power from their solar panel or wind turbine. It might be a small office with a diesel generator. These resources can be brought on-line either at the utility's behest, or by the owner of the generation in an effort to sell electricity. Many small generators are allowed to sell electricity back to the grid for the same price they would pay to buy it.

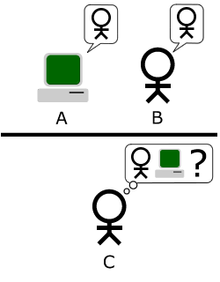

As the 21st century progresses, the electric utility industry seeks to take advantage of novel approaches to meet growing energy demand. Utilities are under pressure to evolve their classic topologies to accommodate distributed generation. As generation becomes more common from rooftop solar and wind generators, the differences between distribution and transmission grids will continue to blur. In July 2017 the CEO of Mercedes-Benz said that the energy industry needs to work better with companies from other industries to form a "total ecosystem", to integrate central and distributed energy resources (DER) to give customers what they want. The electrical grid was originally constructed so that electricity would flow from power providers to consumers. However, with the introduction of DER, power needs to flow both ways on the electric grid, because customers may have power sources such as solar panels.

Smart grid

The smart grid would be an enhancement of the 20th century electrical grid, using two-way communications and distributed so-called intelligent devices. Two-way flows of electricity and information could improve the delivery network. Research is mainly focused on three systems of a smart grid – the infrastructure system, the management system, and the protection system.

The infrastructure system is the energy, information, and communication infrastructure underlying the smart grid that supports:

- Advanced electricity generation, delivery, and consumption

- Advanced information metering, monitoring, and management

- Advanced communication technologies

A smart grid would allow the power industry to observe and control parts of the system at higher resolution in time and space. One of the purposes of the smart grid is real time information exchange to make operation as efficient as possible. It would allow management of the grid on all time scales from high-frequency switching devices on a microsecond scale, to wind and solar output variations on a minute scale, to the future effects of the carbon emissions generated by power production on a decade scale.

The management system is the subsystem in a smart grid that provides advanced management and control services. Most of the existing works aim to improve energy efficiency, demand profile, utility, cost, and emission, based on the infrastructure by using optimization, machine learning, and game theory. Within the advanced infrastructure framework of the smart grid, more and more new management services and applications are expected to emerge and eventually revolutionize consumers' daily lives.

The protection system of a smart grid provides grid reliability analysis, failure protection, and security and privacy protection services. While the additional communication infrastructure of a smart grid provides additional protective and security mechanisms, it also presents a risk of external attack and internal failures. In a report on cyber security of smart grid technology first produced in 2010, and later updated in 2014, the US National Institute of Standards and Technology pointed out that the ability to collect more data about energy use from customer smart meters also raises major privacy concerns, since the information stored at the meter, which is potentially vulnerable to data breaches, can be mined for personal details about customers.

In the U.S., the Energy Policy Act of 2005 and Title XIII of the Energy Independence and Security Act of 2007 are providing funding to encourage smart grid development. The objective is to enable utilities to better predict their needs, and in some cases involve consumers in a time-of-use tariff. Funds have also been allocated to develop more robust energy control technologies.

Grid defection

As there is some resistance in the electric utility sector to the concepts of distributed generation with various renewable energy sources and microscale cogen units, several authors have warned that mass-scale grid defection is possible because consumers can produce electricity using off grid systems primarily made up of solar photovoltaic technology.

The Rocky Mountain Institute has proposed that there may be widescale grid defection. This is backed up by studies in the Midwest. However, the paper points out that grid defection may be less likely in countries like Germany which have greater power demands in winter.