The history of logic is the study of the development of the science of valid inference (logic). Formal logics developed in ancient times in India, China, and Greece. Greek methods, particularly Aristotelian logic (or term logic) as found in the Organon, found wide application and acceptance in Western science and mathematics for millennia. The Stoics, especially Chrysippus, began the development of propositional logic.

Christian and Islamic philosophers such as Boethius (died 524), Avicenna (died 1037), and William of Ockham (died 1347) further developed Aristotle's logic in the Middle Ages, reaching a high point in the mid-fourteenth century with Jean Buridan. The period between the fourteenth century and the beginning of the nineteenth century was largely one of decline and neglect, and at least one historian of logic regards this time as barren. Empirical methods ruled the day, as evidenced by Sir Francis Bacon's Novum Organum of 1620.

Logic revived in the mid-nineteenth century, at the beginning of a revolutionary period when the subject developed into a rigorous and formal discipline which took as its exemplar the exact method of proof used in mathematics, a hearkening back to the Greek tradition. The development of the modern "symbolic" or "mathematical" logic during this period by the likes of Boole, Frege, Russell, and Peano is the most significant in the two-thousand-year history of logic, and is arguably one of the most important and remarkable events in human intellectual history.

Progress in mathematical logic in the first few decades of the twentieth century, particularly arising from the work of Gödel and Tarski, had a significant impact on analytic philosophy and philosophical logic, particularly from the 1950s onwards, in subjects such as modal logic, temporal logic, deontic logic, and relevance logic.

Logic in the East

Logic in India

Logic began independently in ancient India and continued to develop to early modern times without any known influence from Greek logic. Medhatithi Gautama (c. 6th century BC) founded the anviksiki school of logic. The Mahabharata (12.173.45), around the 5th century BC, refers to the anviksiki and tarka schools of logic. Pāṇini (c. 5th century BC) developed a form of logic (to which Boolean logic has some similarities) for his formulation of Sanskrit grammar. Logic is described by Chanakya (c. 350–283 BC) in his Arthashastra as an independent field of inquiry.

Two of the six Indian schools of thought deal with logic: Nyaya and Vaisheshika. The Nyaya Sutras of Aksapada Gautama (c. 2nd century AD) constitute the core texts of the Nyaya school, one of the six orthodox schools of Hindu philosophy. This realist school developed a rigid five-member schema of inference involving an initial premise, a reason, an example, an application, and a conclusion. The idealist Buddhist philosophy became the chief opponent to the Naiyayikas. Nagarjuna (c. 150–250 AD), the founder of the Madhyamika ("Middle Way"), developed an analysis known as the catuṣkoṭi (Sanskrit), a "four-cornered" system of argumentation that involves the systematic examination and rejection of each of the four possibilities of a proposition, P:

- P; that is, being.

- not P; that is, not being.

- P and not P; that is, being and not being.

- not (P or not P); that is, neither being nor not being.

Under propositional logic, De Morgan's laws imply that this is equivalent to the third case (P and not P), and is therefore superfluous; there are actually only three cases to consider.
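The equivalence claimed by De Morgan's laws can be checked mechanically. The following is a minimal sketch that assumes classical two-valued semantics; the function names are illustrative and do not come from any source on the catuṣkoṭi.

```python
def third_corner(p: bool) -> bool:
    # "P and not P"
    return p and (not p)


def fourth_corner(p: bool) -> bool:
    # "not (P or not P)"
    return not (p or (not p))


# Evaluate both corners for each classical truth value of P.
table = [(p, third_corner(p), fourth_corner(p)) for p in (True, False)]

# The corners are equivalent if they agree on every row of the table.
equivalent = all(third == fourth for _, third, fourth in table)

for p, third, fourth in table:
    print(f"P={p}: 'P and not P' is {third}, 'not (P or not P)' is {fourth}")
print("equivalent:", equivalent)
```

Both expressions come out false on every row, which is why a classical reading collapses the fourth corner into the third.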

Dignāga's famous "wheel of reason" (Hetucakra) is a method of indicating when one thing (such as smoke) can be taken as an invariable sign of another thing (like fire), but the inference is often inductive and based on past observation. Matilal remarks that Dignāga's analysis is much like John Stuart Mill's Joint Method of Agreement and Difference, which is inductive.

In addition, the traditional five-member Indian syllogism, though deductively valid, has repetitions that are unnecessary to its logical validity. As a result, some commentators see the traditional Indian syllogism as a rhetorical form that is entirely natural in many cultures of the world, and yet not as a logical form—not in the sense that all logically unnecessary elements have been omitted for the sake of analysis.

Logic in China

In China, a contemporary of Confucius, Mozi, "Master Mo", is credited with founding the Mohist school, whose canons dealt with issues relating to valid inference and the conditions of correct conclusions. In particular, one of the schools that grew out of Mohism, the Logicians, is credited by some scholars with an early investigation of formal logic. Due to the harsh rule of Legalism in the subsequent Qin Dynasty, this line of investigation disappeared in China until the introduction of Indian philosophy by Buddhists.

Logic in the West

Prehistory of logic

Valid reasoning has been employed in all periods of human history. However, logic studies the principles of valid reasoning, inference and demonstration. It is probable that the idea of demonstrating a conclusion first arose in connection with geometry, which originally meant the same as "land measurement". The ancient Egyptians discovered geometry, including the formula for the volume of a truncated pyramid. Ancient Babylon was also skilled in mathematics. Esagil-kin-apli's medical Diagnostic Handbook in the 11th century BC was based on a logical set of axioms and assumptions, while Babylonian astronomers in the 8th and 7th centuries BC employed an internal logic within their predictive planetary systems, an important contribution to the philosophy of science.

Ancient Greece before Aristotle

While the ancient Egyptians empirically discovered some truths of geometry, the great achievement of the ancient Greeks was to replace empirical methods by demonstrative proof. Both Thales and Pythagoras of the Pre-Socratic philosophers seem aware of geometry's methods.

Fragments of early proofs are preserved in the works of Plato and Aristotle, and the idea of a deductive system was probably known in the Pythagorean school and the Platonic Academy. The proofs of Euclid of Alexandria are a paradigm of Greek geometry. The three basic principles of geometry are as follows:

- Certain propositions must be accepted as true without demonstration; such a proposition is known as an axiom of geometry.

- Every proposition that is not an axiom of geometry must be demonstrated as following from the axioms of geometry; such a demonstration is known as a proof or a "derivation" of the proposition.

- The proof must be formal; that is, the derivation of the proposition must be independent of the particular subject matter in question.

Thales Theorem

Thales

It is said that Thales, most widely regarded as the first philosopher in the Greek tradition, measured the height of the pyramids by their shadows at the moment when his own shadow was equal to his height. Thales was said to have had a sacrifice in celebration of discovering Thales' theorem just as Pythagoras had the Pythagorean theorem.

Thales is the first known individual to use deductive reasoning applied to geometry, by deriving four corollaries to his theorem, and the first known individual to whom a mathematical discovery has been attributed. Indian and Babylonian mathematicians knew his theorem for special cases before he proved it. It is believed that Thales learned that an angle inscribed in a semicircle is a right angle during his travels to Babylon.

Pythagoras

Proof of the Pythagorean Theorem in Euclid's Elements

Before 520 BC, on one of his visits to Egypt or Greece, Pythagoras might have met Thales, who was about 54 years older. The systematic study of proof seems to have begun with the school of Pythagoras (i.e., the Pythagoreans) in the late sixth century BC. Indeed, the Pythagoreans, believing all was number, are the first philosophers to emphasize form rather than matter.

Heraclitus and Parmenides

The writing of Heraclitus (c. 535 – c. 475 BC) was the first place where the word logos was given special attention in ancient Greek philosophy. Heraclitus held that everything changes and all was fire and conflicting opposites, seemingly unified only by this Logos. He is known for his obscure sayings.

This logos holds always but humans always prove unable to understand it, both before hearing it and when they have first heard it. For though all things come to be in accordance with this logos, humans are like the inexperienced when they experience such words and deeds as I set out, distinguishing each in accordance with its nature and saying how it is. But other people fail to notice what they do when awake, just as they forget what they do while asleep.

— Diels-Kranz, 22B1

In contrast to Heraclitus, Parmenides held that all is one and nothing changes. He may have been a dissident Pythagorean, disagreeing that One (a number) produced the many. "X is not" must always be false or meaningless. What exists can in no way not exist. Our sense perceptions, with their noticing of generation and destruction, are in grievous error. Instead of sense perception, Parmenides advocated logos as the means to Truth. He has been called the discoverer of logic.

- For this view, that That Which Is Not exists, can never predominate. You must debar your thought from this way of search, nor let ordinary experience in its variety force you along this way, (namely, that of allowing) the eye, sightless as it is, and the ear, full of sound, and the tongue, to rule; but (you must) judge by means of the Reason (Logos) the much-contested proof which is expounded by me. (B 7.1–8.2)

Plato

Let no one ignorant of geometry enter here.

— Inscribed over the entrance to Plato's Academy.

None of the surviving works of the great fourth-century philosopher Plato (428–347 BC) include any formal logic, but they include important contributions to the field of philosophical logic. Plato raises three questions:

- What is it that can properly be called true or false?

- What is the nature of the connection between the assumptions of a valid argument and its conclusion?

- What is the nature of definition?

Aristotle

The logic of Aristotle, and particularly his theory of the syllogism, has had an enormous influence in Western thought. Aristotle was the first logician to attempt a systematic analysis of logical syntax, of noun (or term), and of verb. He was the first formal logician, in that he demonstrated the principles of reasoning by employing variables to show the underlying logical form of an argument. He sought relations of dependence which characterize necessary inference, and distinguished the validity of these relations, from the truth of the premises (the soundness of the argument). He was the first to deal with the principles of contradiction and excluded middle in a systematic way.

Aristotle's logic was still influential in the Renaissance

The Organon

His logical works, called the Organon, are the earliest formal study of logic that has come down to modern times. Though it is difficult to determine the dates, the probable order of writing of Aristotle's logical works is:

- The Categories, a study of the ten kinds of primitive term.

- The Topics (with an appendix called On Sophistical Refutations), a discussion of dialectics.

- On Interpretation, an analysis of simple categorical propositions into simple terms, negation, and signs of quantity.

- The Prior Analytics, a formal analysis of what makes a syllogism (a valid argument, according to Aristotle).

- The Posterior Analytics, a study of scientific demonstration, containing Aristotle's mature views on logic.

This diagram shows the contradictory relationships between categorical propositions in the square of opposition of Aristotelian logic.

These works are of outstanding importance in the history of logic. In the Categories, he attempts to discern all the possible things to which a term can refer; this idea underpins his philosophical work Metaphysics, which itself had a profound influence on Western thought.

He also developed a theory of non-formal logic (i.e., the theory of fallacies), which is presented in Topics and Sophistical Refutations.

On Interpretation contains a comprehensive treatment of the notions of opposition and conversion; chapter 7 is at the origin of the square of opposition (or logical square); chapter 9 contains the beginning of modal logic.

The Prior Analytics contains his exposition of the "syllogism", where three important principles are applied for the first time in history: the use of variables, a purely formal treatment, and the use of an axiomatic system.
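The role of variables and purely formal treatment can be illustrated with a small bounded check. The sketch below makes several assumptions that are not in the text above: it reads "every X is Y" as set inclusion, it uses the traditional later name "Barbara" for the form "every A is B, every B is C, therefore every A is C", and it searches only a three-element universe, so it shows the absence of small counterexamples and is not a proof.

```python
from itertools import combinations, product


def subsets(universe):
    """Yield every subset of `universe` as a frozenset."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)


def every(xs, ys):
    """'Every X is Y', read here as set inclusion (an assumption of this sketch)."""
    return xs <= ys


def has_no_counterexample(premises, conclusion, universe):
    """Try every assignment of subsets to the variables A, B, C."""
    candidates = list(subsets(universe))
    for a, b, c in product(candidates, repeat=3):
        if premises(a, b, c) and not conclusion(a, b, c):
            return False  # premises true, conclusion false
    return True


universe = {1, 2, 3}

# Every A is B, every B is C, therefore every A is C.
barbara_ok = has_no_counterexample(
    lambda a, b, c: every(a, b) and every(b, c),
    lambda a, b, c: every(a, c),
    universe,
)

# Every A is B, every C is B, therefore every A is C (a form that fails).
shared_predicate_ok = has_no_counterexample(
    lambda a, b, c: every(a, b) and every(c, b),
    lambda a, b, c: every(a, c),
    universe,
)

print("Barbara survives the search:", barbara_ok)
print("Shared-predicate form survives the search:", shared_predicate_ok)
```

The check never looks at what A, B, and C stand for; only the arrangement of the terms matters, which is the sense in which the treatment is formal.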

Stoics

The other great school of Greek logic is that of the Stoics. Stoic logic traces its roots back to the late 5th century BC philosopher Euclid of Megara, a pupil of Socrates and slightly older contemporary of Plato, probably following in the tradition of Parmenides and Zeno. His pupils and successors were called "Megarians", or "Eristics", and later the "Dialecticians". The two most important dialecticians of the Megarian school were Diodorus Cronus and Philo, who were active in the late 4th century BC.

Chrysippus of Soli

The Stoics adopted the Megarian logic and systemized it. The most important member of the school was Chrysippus (c. 278–c. 206 BC), who was its third head, and who formalized much of Stoic doctrine. He is supposed to have written over 700 works, including at least 300 on logic, almost none of which survive. Unlike with Aristotle, we have no complete works by the Megarians or the early Stoics, and have to rely mostly on accounts (sometimes hostile) by later sources, including prominently Diogenes Laertius, Sextus Empiricus, Galen, Aulus Gellius, Alexander of Aphrodisias, and Cicero.

Three significant contributions of the Stoic school were (i) their account of modality, (ii) their theory of the material conditional, and (iii) their account of meaning and truth.

- Modality. According to Aristotle, the Megarians of his day claimed there was no distinction between potentiality and actuality. Diodorus Cronus defined the possible as that which either is or will be, the impossible as what will not be true, and the contingent as that which either is already, or will be false. Diodorus is also famous for what is known as his Master argument, which states that each pair of the following 3 propositions contradicts the third proposition:

- Everything that is past is true and necessary.

- The impossible does not follow from the possible.

- What neither is nor will be is possible.

- Diodorus used the plausibility of the first two to prove that nothing is possible if it neither is nor will be true. Chrysippus, by contrast, denied the second premise and said that the impossible could follow from the possible.

- Conditional statements. The first logicians to debate conditional statements were Diodorus and his pupil Philo of Megara. Sextus Empiricus refers three times to a debate between Diodorus and Philo. Philo regarded a conditional as true unless it has both a true antecedent and a false consequent. Precisely, let T0 and T1 be true statements, and let F0 and F1 be false statements; then, according to Philo, each of the following conditionals is a true statement, because it is not the case that the consequent is false while the antecedent is true (it is not the case that a false statement is asserted to follow from a true statement):

- If T0, then T1

- If F0, then T0

- If F0, then F1

- The following conditional does not meet this requirement, and is therefore a false statement according to Philo:

- If T0, then F0

- Indeed, Sextus says "According to [Philo], there are three ways in which a conditional may be true, and one in which it may be false." Philo's criterion of truth is what would now be called a truth-functional definition of "if ... then"; it is the definition used in modern logic.

- In contrast, Diodorus allowed the validity of conditionals only when the antecedent clause could never lead to an untrue conclusion. A century later, the Stoic philosopher Chrysippus attacked the assumptions of both Philo and Diodorus.

- Meaning and truth. The most important and striking difference between Megarian-Stoic logic and Aristotelian logic is that Megarian-Stoic logic concerns propositions, not terms, and is thus closer to modern propositional logic. The Stoics distinguished between utterance (phone), which may be noise, speech (lexis), which is articulate but which may be meaningless, and discourse (logos), which is meaningful utterance. The most original part of their theory is the idea that what is expressed by a sentence, called a lekton, is something real; this corresponds to what is now called a proposition. Sextus says that according to the Stoics, three things are linked together: that which signifies, that which is signified, and the object; for example, that which signifies is the word Dion, and that which is signified is what Greeks understand but barbarians do not, and the object is Dion himself.
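Philo's criterion for conditional statements, described above, can be written out as a truth table. This is a minimal sketch; the function name `philonian` is an illustrative label, not an established term of art.

```python
def philonian(antecedent: bool, consequent: bool) -> bool:
    """True unless the antecedent is true and the consequent is false."""
    return not (antecedent and not consequent)


# Enumerate all four combinations of truth values.
rows = [
    (antecedent, consequent, philonian(antecedent, consequent))
    for antecedent in (True, False)
    for consequent in (True, False)
]

true_rows = [row for row in rows if row[2]]
false_rows = [row for row in rows if not row[2]]

for antecedent, consequent, value in rows:
    print(f"if {antecedent} then {consequent}: {value}")
print("ways to be true:", len(true_rows), "| ways to be false:", len(false_rows))
```

The table has three true rows and one false row, matching the remark Sextus attributes to Philo.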

Medieval logic

Logic in the Middle East

A text by Avicenna, founder of Avicennian logic

The works of Al-Kindi, Al-Farabi, Avicenna, Al-Ghazali, Averroes and other Muslim logicians were based on Aristotelian logic and were important in communicating the ideas of the ancient world to the medieval West. Al-Farabi (Alfarabi) (873–950) was an Aristotelian logician who discussed the topics of future contingents, the number and relation of the categories, the relation between logic and grammar, and non-Aristotelian forms of inference. Al-Farabi also considered the theories of conditional syllogisms and analogical inference, which were part of the Stoic tradition of logic rather than the Aristotelian.

Ibn Sina (Avicenna) (980–1037) was the founder of Avicennian logic, which replaced Aristotelian logic as the dominant system of logic in the Islamic world, and also had an important influence on Western medieval writers such as Albertus Magnus. Avicenna wrote on the hypothetical syllogism and on the propositional calculus, which were both part of the Stoic logical tradition. He developed an original "temporally modalized" syllogistic theory, involving temporal logic and modal logic. He also made use of inductive logic, such as the methods of agreement, difference, and concomitant variation which are critical to the scientific method. One of Avicenna's ideas had a particularly important influence on Western logicians such as William of Ockham: Avicenna's word for a meaning or notion (ma'na), was translated by the scholastic logicians as the Latin intentio; in medieval logic and epistemology, this is a sign in the mind that naturally represents a thing. This was crucial to the development of Ockham's conceptualism: A universal term (e.g., "man") does not signify a thing existing in reality, but rather a sign in the mind (intentio in intellectu) which represents many things in reality; Ockham cites Avicenna's commentary on Metaphysics V in support of this view.

Fakhr al-Din al-Razi (b. 1149) criticised Aristotle's "first figure" and formulated an early system of inductive logic, foreshadowing the system of inductive logic developed by John Stuart Mill (1806–1873). Al-Razi's work was seen by later Islamic scholars as marking a new direction for Islamic logic, towards a Post-Avicennian logic. This was further elaborated by his student Afdaladdîn al-Khûnajî (d. 1249), who developed a form of logic revolving around the subject matter of conceptions and assents. In response to this tradition, Nasir al-Din al-Tusi (1201–1274) began a tradition of Neo-Avicennian logic which remained faithful to Avicenna's work and existed as an alternative to the more dominant Post-Avicennian school over the following centuries.

The Illuminationist school was founded by Shahab al-Din Suhrawardi (1155–1191), who developed the idea of "decisive necessity", which refers to the reduction of all modalities (necessity, possibility, contingency and impossibility) to the single mode of necessity. Ibn al-Nafis (1213–1288) wrote a book on Avicennian logic, which was a commentary on Avicenna's Al-Isharat (The Signs) and Al-Hidayah (The Guidance). Ibn Taymiyyah (1263–1328) wrote the Ar-Radd 'ala al-Mantiqiyyin, where he argued against the usefulness, though not the validity, of the syllogism and in favour of inductive reasoning. Ibn Taymiyyah also argued against the certainty of syllogistic arguments and in favour of analogy; his argument is that concepts founded on induction are themselves not certain but only probable, and thus a syllogism based on such concepts is no more certain than an argument based on analogy. He further claimed that induction itself is founded on a process of analogy. His model of analogical reasoning was based on that of juridical arguments. This model of analogy has been used in the recent work of John F. Sowa.

The Sharh al-takmil fi'l-mantiq written by Muhammad ibn Fayd Allah ibn Muhammad Amin al-Sharwani in the 15th century is the last major Arabic work on logic that has been studied. However, "thousands upon thousands of pages" on logic were written between the 14th and 19th centuries, though only a fraction of the texts written during this period have been studied by historians, hence little is known about the original work on Islamic logic produced during this later period.

Logic in medieval Europe

Brito's questions on the Old Logic

"Medieval logic" (also known as "Scholastic logic") generally means the form of Aristotelian logic developed in medieval Europe throughout roughly the period 1200–1600. For centuries after Stoic logic had been formulated, it was the dominant system of logic in the classical world. When the study of logic resumed after the Dark Ages, the main source was the work of the Christian philosopher Boethius, who was familiar with some of Aristotle's logic, but almost none of the work of the Stoics. Until the twelfth century, the only works of Aristotle available in the West were the Categories, On Interpretation, and Boethius's translation of the Isagoge of Porphyry (a commentary on the Categories). These works were known as the "Old Logic" (Logica Vetus or Ars Vetus). An important work in this tradition was the Logica Ingredientibus of Peter Abelard (1079–1142). His direct influence was small, but his influence through pupils such as John of Salisbury was great, and his method of applying rigorous logical analysis to theology shaped the way that theological criticism developed in the period that followed.

By the early thirteenth century, the remaining works of Aristotle's Organon (including the Prior Analytics, Posterior Analytics, and the Sophistical Refutations) had been recovered in the West. Logical work until then was mostly paraphrasis or commentary on the work of Aristotle. The period from the middle of the thirteenth to the middle of the fourteenth century was one of significant developments in logic, particularly in three areas which were original, with little foundation in the Aristotelian tradition that came before. These were:

- The theory of supposition. Supposition theory deals with the way that predicates (e.g., 'man') range over a domain of individuals (e.g., all men). In the proposition 'every man is an animal', does the term 'man' range over or 'supposit for' men existing just in the present, or does the range include past and future men? Can a term supposit for a non-existing individual? Some medievalists have argued that this idea is a precursor of modern first-order logic. "The theory of supposition with the associated theories of copulatio (sign-capacity of adjectival terms), ampliatio (widening of referential domain), and distributio constitute one of the most original achievements of Western medieval logic".

- The theory of syncategoremata. Syncategoremata are terms which are necessary for logic, but which, unlike categorematic terms, do not signify on their own behalf, but 'co-signify' with other words. Examples of syncategoremata are 'and', 'not', 'every', 'if', and so on.

- The theory of consequences. A consequence is a hypothetical, conditional proposition: two propositions joined by the terms 'if ... then'. For example, 'if a man runs, then God exists' (Si homo currit, Deus est). A fully developed theory of consequences is given in Book III of William of Ockham's work Summa Logicae. There, Ockham distinguishes between 'material' and 'formal' consequences, which are roughly equivalent to the modern material implication and logical implication respectively. Similar accounts are given by Jean Buridan and Albert of Saxony.

Traditional logic

The textbook tradition

Dudley Fenner's Art of Logic (1584)

Traditional logic generally means the textbook tradition that begins with Antoine Arnauld's and Pierre Nicole's Logic, or the Art of Thinking, better known as the Port-Royal Logic. Published in 1662, it was the most influential work on logic after Aristotle until the nineteenth century. The book presents a loosely Cartesian doctrine (that the proposition is a combining of ideas rather than terms, for example) within a framework that is broadly derived from Aristotelian and medieval term logic. Between 1664 and 1700, there were eight editions, and the book had considerable influence after that. The Port-Royal introduces the concepts of extension and intension. The account of propositions that Locke gives in the Essay is essentially that of the Port-Royal: "Verbal propositions, which are words, [are] the signs of our ideas, put together or separated in affirmative or negative sentences. So that proposition consists in the putting together or separating these signs, according as the things which they stand for agree or disagree."

Dudley Fenner helped popularize Ramist logic, a reaction against Aristotle. Another influential work was the Novum Organum by Francis Bacon, published in 1620. The title translates as "new instrument". This is a reference to Aristotle's work known as the Organon. In this work, Bacon rejects the syllogistic method of Aristotle in favor of an alternative procedure "which by slow and faithful toil gathers information from things and brings it into understanding". This method is known as inductive reasoning, a method which starts from empirical observation and proceeds to lower axioms or propositions; from these lower axioms, more general ones can be induced. For example, in finding the cause of a phenomenal nature such as heat, 3 lists should be constructed:

- The presence list: a list of every situation where heat is found.

- The absence list: a list of every situation that is similar to at least one of those of the presence list, except for the lack of heat.

- The variability list: a list of every situation where heat can vary.
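One loose way to picture how the three lists narrow down candidate causes is as a filtering step. The sketch below is only an illustration under stated assumptions: every observation, feature name, and degree value is invented, and the filtering rule is a simplification, not Bacon's own procedure.

```python
# Hypothetical toy observations, invented for illustration only.
# Each situation is recorded as the set of features that accompany it.
presence_list = [  # situations where heat is found
    {"motion", "light"},
    {"motion", "friction"},
    {"motion", "light", "flame"},
]
absence_list = [  # similar situations that lack heat
    {"light"},
    {"stillness"},
]
# Variability list: (degree of heat, degree of each feature).
variability_list = [
    (1, {"motion": 1, "light": 3}),
    (2, {"motion": 2, "light": 1}),
    (3, {"motion": 3, "light": 2}),
]


def surviving_candidates(presence, absence):
    """Keep features found in every presence case and in no absence case."""
    in_every_presence = set.intersection(*presence)
    in_some_absence = set().union(*absence)
    return in_every_presence - in_some_absence


def rises_with_heat(feature, variability):
    """Check that the feature's degree strictly increases as heat increases."""
    ordered = sorted(variability, key=lambda row: row[0])
    levels = [features[feature] for _, features in ordered]
    return all(low < high for low, high in zip(levels, levels[1:]))


survivors = surviving_candidates(presence_list, absence_list)
print("candidates after presence/absence:", survivors)
for feature in sorted(survivors):
    print(feature, "rises with heat:", rises_with_heat(feature, variability_list))
```

In this toy data only "motion" survives the presence and absence lists, and the variability list then serves as a further check on that survivor.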

Other works in the textbook tradition include Isaac Watts's Logick: Or, the Right Use of Reason (1725), Richard Whately's Logic (1826), and John Stuart Mill's A System of Logic (1843). Although the last of these was one of the last great works in the tradition, Mill's view that the foundations of logic lie in introspection influenced the view that logic is best understood as a branch of psychology, a view which dominated the next fifty years of its development, especially in Germany.

Logic in Hegel's philosophy

Georg Wilhelm Friedrich Hegel

G.W.F. Hegel indicated the importance of logic to his philosophical system when he condensed his extensive Science of Logic into a shorter work published in 1817 as the first volume of his Encyclopaedia of the Philosophical Sciences. The "Shorter" or "Encyclopaedia" Logic, as it is often known, lays out a series of transitions which leads from the most empty and abstract of categories (Hegel begins with "Pure Being" and "Pure Nothing") to the "Absolute", the category which contains and resolves all the categories which preceded it. Despite the title, Hegel's Logic is not really a contribution to the science of valid inference. Rather than deriving conclusions about concepts through valid inference from premises, Hegel seeks to show that thinking about one concept compels thinking about another concept (one cannot, he argues, possess the concept of "Quality" without the concept of "Quantity"); this compulsion is, supposedly, not a matter of individual psychology, because it arises almost organically from the content of the concepts themselves. His purpose is to show the rational structure of the "Absolute", and indeed of rationality itself. The method by which thought is driven from one concept to its contrary, and then to further concepts, is known as the Hegelian dialectic.

Although Hegel's Logic has had little impact on mainstream logical studies, its influence can be seen elsewhere:

- Carl von Prantl's Geschichte der Logik im Abendlande (1855–1867).

- The work of the British Idealists, such as F.H. Bradley's Principles of Logic (1883).

- The economic, political, and philosophical studies of Karl Marx, and in the various schools of Marxism.

Logic and psychology

Between the work of Mill and Frege stretched half a century during which logic was widely treated as a descriptive science, an empirical study of the structure of reasoning, and thus essentially as a branch of psychology. The German psychologist Wilhelm Wundt, for example, discussed deriving "the logical from the psychological laws of thought", emphasizing that "psychological thinking is always the more comprehensive form of thinking." This view was widespread among German philosophers of the period:

- Theodor Lipps described logic as "a specific discipline of psychology".

- Christoph von Sigwart understood logical necessity as grounded in the individual's compulsion to think in a certain way.

- Benno Erdmann argued that "logical laws only hold within the limits of our thinking".

Criticisms of this view, notably those of Frege and Husserl, did not immediately extirpate what is called "psychologism". For example, the American philosopher Josiah Royce, while acknowledging the force of Husserl's critique, remained "unable to doubt" that progress in psychology would be accompanied by progress in logic, and vice versa.

Rise of modern logic

The period between the fourteenth century and the beginning of the nineteenth century had been largely one of decline and neglect, and is generally regarded as barren by historians of logic. The revival of logic occurred in the mid-nineteenth century, at the beginning of a revolutionary period where the subject developed into a rigorous and formalistic discipline whose exemplar was the exact method of proof used in mathematics. The development of the modern "symbolic" or "mathematical" logic during this period is the most significant in the 2000-year history of logic, and is arguably one of the most important and remarkable events in human intellectual history.

A number of features distinguish modern logic from the old Aristotelian or traditional logic, the most important of which are as follows:

- Modern logic is fundamentally a calculus whose rules of operation are determined only by the shape and not by the meaning of the symbols it employs, as in mathematics. Many logicians were impressed by the "success" of mathematics, in that there had been no prolonged dispute about any truly mathematical result. C.S. Peirce noted that even though a mistake in the evaluation of a definite integral by Laplace led to an error concerning the moon's orbit that persisted for nearly 50 years, the mistake, once spotted, was corrected without any serious dispute. Peirce contrasted this with the disputation and uncertainty surrounding traditional logic, and especially reasoning in metaphysics. He argued that a truly "exact" logic would depend upon mathematical, i.e., "diagrammatic" or "iconic" thought. "Those who follow such methods will ... escape all error except such as will be speedily corrected after it is once suspected".

- Modern logic is also "constructive" rather than "abstractive"; i.e., rather than abstracting and formalising theorems derived from ordinary language (or from psychological intuitions about validity), it constructs theorems by formal methods, then looks for an interpretation in ordinary language.

- It is entirely symbolic, meaning that even the logical constants (which the medieval logicians called "syncategoremata") and the categoric terms are expressed in symbols.

Modern logic

The development of modern logic falls into roughly five periods:

- The embryonic period from Leibniz to 1847, when the notion of a logical calculus was discussed and developed, particularly by Leibniz, but no schools were formed, and isolated periodic attempts were abandoned or went unnoticed.

- The algebraic period from Boole's Analysis to Schröder's Vorlesungen. In this period, there were more practitioners, and a greater continuity of development.

- The logicist period from the Begriffsschrift of Frege to the Principia Mathematica of Russell and Whitehead. The aim of the "logicist school" was to incorporate the logic of all mathematical and scientific discourse in a single unified system which, taking as a fundamental principle that all mathematical truths are logical, did not accept any non-logical terminology. The major logicists were Frege, Russell, and the early Wittgenstein. It culminates with the Principia, an important work which includes a thorough examination and attempted solution of the antinomies which had been an obstacle to earlier progress.

- The metamathematical period from 1910 to the 1930s, which saw the development of metalogic in the finitist system of Hilbert and the non-finitist system of Löwenheim and Skolem, and the combination of logic and metalogic in the work of Gödel and Tarski. Gödel's incompleteness theorem of 1931 was one of the greatest achievements in the history of logic. Later in the 1930s, Gödel developed the notion of set-theoretic constructibility.

- The period after World War II, when mathematical logic branched into four inter-related but separate areas of research: model theory, proof theory, computability theory, and set theory, and its ideas and methods began to influence philosophy.

Embryonic period

Leibniz

The idea that inference could be represented by a purely mechanical process is found as early as Raymond Llull, who proposed a (somewhat eccentric) method of drawing conclusions by a system of concentric rings. The work of logicians such as the Oxford Calculators led to a method of using letters instead of writing out logical calculations (calculationes) in words, a method used, for instance, in the Logica magna by Paul of Venice. Three hundred years after Llull, the English philosopher and logician Thomas Hobbes suggested that all logic and reasoning could be reduced to the mathematical operations of addition and subtraction. The same idea is found in the work of Leibniz, who had read both Llull and Hobbes, and who argued that logic can be represented through a combinatorial process or calculus. But, like Llull and Hobbes, he failed to develop a detailed or comprehensive system, and his work on this topic was not published until long after his death. Leibniz says that ordinary languages are subject to "countless ambiguities" and are unsuited for a calculus, whose task is to expose mistakes in inference arising from the forms and structures of words;[103] hence, he proposed to identify an alphabet of human thought comprising fundamental concepts which could be composed to express complex ideas, and create a calculus ratiocinator that would make all arguments "as tangible as those of the Mathematicians, so that we can find our error at a glance, and when there are disputes among persons, we can simply say: Let us calculate."

Gergonne (1816) said that reasoning does not have to be about objects about which one has perfectly clear ideas, because algebraic operations can be carried out without having any idea of the meaning of the symbols involved. Bolzano anticipated a fundamental idea of modern proof theory when he defined logical consequence or "deducibility" in terms of variables:

Hence I say that propositions M, N, O,… are deducible from propositions A, B, C, D,… with respect to variable parts i, j,…, if every class of ideas whose substitution for i, j,… makes all of A, B, C, D,… true, also makes all of M, N, O,… true. Occasionally, since it is customary, I shall say that propositions M, N, O,… follow, or can be inferred or derived, from A, B, C, D,…. Propositions A, B, C, D,… I shall call the premises, M, N, O,… the conclusions.

This is now known as semantic validity.
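
Bolzano's definition quantifies over every substitution for the variable parts. A minimal sketch of the analogous check in two-valued propositional logic follows. It assumes the variable parts are Boolean variables and that each proposition is a Python function of a valuation; the name `deducible` and the sample propositions are illustrative, not Bolzano's own.

```python
from itertools import product

def deducible(conclusions, premises, variables):
    """True if every assignment to `variables` that makes all premises
    true also makes all conclusions true."""
    for values in product([False, True], repeat=len(variables)):
        v = dict(zip(variables, values))
        if all(p(v) for p in premises) and not all(c(v) for c in conclusions):
            return False  # premises true, some conclusion false
    return True

# Premises: "p implies q" and "p"; conclusion: "q".
premises = [lambda v: (not v["p"]) or v["q"], lambda v: v["p"]]
conclusions = [lambda v: v["q"]]
print(deducible(conclusions, premises, ["p", "q"]))

# "p" is not deducible from "q" alone.
print(deducible([lambda v: v["p"]], [lambda v: v["q"]], ["p", "q"]))
```

The first call reports that the conclusion follows; the second does not, because the valuation with q true and p false makes the premise true and the conclusion false.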

Algebraic period

George Boole

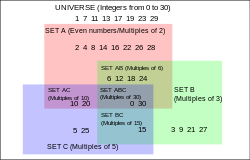

Modern logic begins with what is known as the "algebraic school", originating with Boole and including Peirce, Jevons, Schröder, and Venn. Their objective was to develop a calculus to formalise reasoning in the area of classes, propositions, and probabilities. The school begins with Boole's seminal work Mathematical Analysis of Logic which appeared in 1847, although De Morgan (1847) is its immediate precursor. The fundamental idea of Boole's system is that algebraic formulae can be used to express logical relations. This idea occurred to Boole in his teenage years, working as an usher in a private school in Lincoln, Lincolnshire. For example, let x and y stand for classes, let the symbol = signify that the classes have the same members, let xy stand for the class containing all and only the members of both x and y, and so on. Boole calls these elective symbols, i.e. symbols which select certain objects for consideration. An expression in which elective symbols are used is called an elective function, and an equation of which the members are elective functions is an elective equation. The theory of elective functions and their "development" is essentially the modern idea of truth-functions and their expression in disjunctive normal form.
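
In modern terms, the "development" of an elective function can be sketched as expansion into disjunctive normal form: one conjunction for each row of the truth table on which the function is true. The sketch below assumes two-valued variables and represents the truth-function as an ordinary Python function; the helper name `develop` and the `~`, `&`, `|` notation are illustrative choices, not Boole's.

```python
from itertools import product

def develop(f, names):
    """Return the disjunctive normal form of truth-function f as a string."""
    terms = []
    for values in product([True, False], repeat=len(names)):
        if f(*values):
            # One conjunction per satisfying row of the truth table.
            lits = [n if val else f"~{n}" for n, val in zip(names, values)]
            terms.append("(" + " & ".join(lits) + ")")
    return " | ".join(terms) if terms else "0"

# Exclusive "or" of x and y.
print(develop(lambda x, y: x != y, ["x", "y"]))
# prints: (x & ~y) | (~x & y)
```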

Boole's system admits of two interpretations, in class logic and in propositional logic. Boole distinguished between "primary propositions", which are the subject of syllogistic theory, and "secondary propositions", which are the subject of propositional logic, and showed how under different "interpretations" the same algebraic system could represent both. An example of a primary proposition is "All inhabitants are either Europeans or Asiatics." An example of a secondary proposition is "Either all inhabitants are Europeans or they are all Asiatics." These are easily distinguished in modern predicate calculus, where it is also possible to show that the first follows from the second, but it is a significant disadvantage that there is no way of representing this in the Boolean system.
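
The relation between the two example propositions can be checked by brute force over small finite populations. The sketch below assumes each inhabitant is described by a pair of Booleans (is European, is Asiatic); the function names are illustrative, and a finite search is only a sanity check, not a proof.

```python
from itertools import product

def primary(pop):
    # "All inhabitants are either Europeans or Asiatics."
    return all(e or a for e, a in pop)

def secondary(pop):
    # "Either all inhabitants are Europeans or they are all Asiatics."
    return all(e for e, a in pop) or all(a for e, a in pop)

def populations(size):
    """Every way of assigning (is_european, is_asiatic) to `size` inhabitants."""
    kinds = list(product([False, True], repeat=2))
    return product(kinds, repeat=size)

# The secondary proposition implies the primary one in every population tried.
second_implies_first = all(primary(p) for n in range(1, 4)
                           for p in populations(n) if secondary(p))

# The converse fails: one European and one Asiatic is a counterexample.
mixed = ((True, False), (False, True))
print(second_implies_first, primary(mixed), secondary(mixed))
# prints: True True False
```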

In his Symbolic Logic (1881), John Venn used diagrams of overlapping areas to express Boolean relations between classes or truth-conditions of propositions. In 1869 Jevons realised that Boole's methods could be mechanised, and constructed a "logical machine" which he showed to the Royal Society the following year. In 1885 Allan Marquand proposed an electrical version of the machine that is still extant (picture at the Firestone Library).

Charles Sanders Peirce

The defects in Boole's system (such as the use of the letter v for existential propositions) were all remedied by his followers. Jevons published Pure Logic, or the Logic of Quality apart from Quantity in 1864, where he suggested a symbol to signify exclusive or, which allowed Boole's system to be greatly simplified. This was usefully exploited by Schröder when he set out theorems in parallel columns in his Vorlesungen (1890–1905). Peirce (1880) showed how all the Boolean elective functions could be expressed by the use of a single primitive binary operation, "neither ... nor ..." and equally well "not both ... and ...". However, like many of Peirce's innovations, this remained unknown or unnoticed until Sheffer rediscovered it in 1913. Boole's early work also lacks the idea of the logical sum, which originates in Peirce (1867), Schröder (1877) and Jevons (1890), and the concept of inclusion, first suggested by Gergonne (1816) and clearly articulated by Peirce (1870).
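
Peirce's observation about a single primitive operation can be illustrated with "not both ... and ..." (now usually called NAND). The sketch below defines negation, conjunction, and disjunction from it alone and checks them against Python's built-in operators on every input; the function names are illustrative.

```python
def nand(a, b):
    """Peirce's "not both ... and ..."."""
    return not (a and b)

def not_(a):
    return nand(a, a)

def and_(a, b):
    return nand(nand(a, b), nand(a, b))

def or_(a, b):
    return nand(nand(a, a), nand(b, b))

def nor(a, b):
    """Peirce's "neither ... nor ...", itself built from nand alone."""
    return not_(or_(a, b))

# Verify each derived connective on the full truth table.
for a in (False, True):
    for b in (False, True):
        assert not_(a) == (not a)
        assert and_(a, b) == (a and b)
        assert or_(a, b) == (a or b)
        assert nor(a, b) == (not (a or b))
print("all identities hold")
```

Since negation together with conjunction suffices to express every two-valued truth-function, the same holds for NAND; a symmetric construction works starting from "neither ... nor ...".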

Boolean multiples

The success of Boole's algebraic system suggested that all logic must be capable of algebraic representation, and there were attempts to express a logic of relations in such form, of which the most ambitious was Schröder's monumental Vorlesungen über die Algebra der Logik ("Lectures on the Algebra of Logic", vol iii 1895), although the original idea was again anticipated by Peirce.

Boole's unwavering acceptance of Aristotle's logic is emphasized by the historian of logic John Corcoran in an accessible introduction to Laws of Thought. Corcoran also wrote a point-by-point comparison of Prior Analytics and Laws of Thought. According to Corcoran, Boole fully accepted and endorsed Aristotle's logic. Boole's goals were "to go under, over, and beyond" Aristotle's logic by 1) providing it with mathematical foundations involving equations, 2) extending the class of problems it could treat (from assessing validity to solving equations), and 3) expanding the range of applications it could handle (e.g. from propositions having only two terms to those having arbitrarily many).

More specifically, Boole agreed with what Aristotle said; Boole's 'disagreements', if they might be called that, concern what Aristotle did not say. First, in the realm of foundations, Boole reduced the four propositional forms of Aristotelian logic to formulas in the form of equations — by itself a revolutionary idea. Second, in the realm of logic's problems, Boole's addition of equation solving to logic — another revolutionary idea — involved Boole's doctrine that Aristotle's rules of inference (the "perfect syllogisms") must be supplemented by rules for equation solving. Third, in the realm of applications, Boole's system could handle multi-term propositions and arguments whereas Aristotle could handle only two-termed subject-predicate propositions and arguments. For example, Aristotle's system could not deduce "No quadrangle that is a square is a rectangle that is a rhombus" from "No square that is a quadrangle is a rhombus that is a rectangle" or from "No rhombus that is a rectangle is a square that is a quadrangle".

Logicist period

Gottlob Frege

After Boole, the next great advances were made by the German mathematician Gottlob Frege. Frege's objective was the program of Logicism, i.e. demonstrating that arithmetic is identical with logic. Frege went much further than any of his predecessors in his rigorous and formal approach to logic, and his calculus or Begriffsschrift is important. Frege also tried to show that the concept of number can be defined by purely logical means, so that (if he was right) logic includes arithmetic and all branches of mathematics that are reducible to arithmetic. He was not the first writer to suggest this. In his pioneering work Die Grundlagen der Arithmetik (The Foundations of Arithmetic), sections 15–17, he acknowledges the efforts of Leibniz, J.S. Mill as well as Jevons, citing the latter's claim that "algebra is a highly developed logic, and number but logical discrimination."

Frege's first work, the Begriffsschrift ("concept script"), is a rigorously axiomatised system of propositional logic, relying on just two connectives (negation and the conditional), two rules of inference (modus ponens and substitution), and six axioms. Frege referred to the "completeness" of this system, but was unable to prove this. The most significant innovation, however, was his explanation of the quantifier in terms of mathematical functions. Traditional logic regards the sentence "Caesar is a man" as of fundamentally the same form as "all men are mortal." Sentences with a proper name subject were regarded as universal in character, interpretable as "every Caesar is a man". At the outset Frege abandons the traditional "concepts subject and predicate", replacing them with argument and function respectively, which he believes "will stand the test of time. It is easy to see how regarding a content as a function of an argument leads to the formation of concepts. Furthermore, the demonstration of the connection between the meanings of the words if, and, not, or, there is, some, all, and so forth, deserves attention". Frege argued that the quantifier expression "all men" does not have the same logical or semantic form as a proper name such as "Caesar", and that the universal proposition "every A is B" is a complex proposition involving two functions, namely ' – is A' and ' – is B', such that whatever satisfies the first also satisfies the second. In modern notation, this would be expressed as $\forall x\,(A(x) \rightarrow B(x))$.

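This means that in Frege's calculus, Boole's "primary" propositions can be represented in a different way from "secondary" propositions. "All inhabitants are either men or women" is $\forall x\,(I(x) \rightarrow (M(x) \lor W(x)))$, where $I$, $M$ and $W$ stand for "is an inhabitant", "is a man" and "is a woman".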

Frege's "Concept Script"

- "The real difference is that I avoid [the Boolean] division into two parts ... and give a homogeneous presentation of the lot. In Boole the two parts run alongside one another, so that one is like the mirror image of the other, but for that very reason stands in no organic relation to it."

Peano

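With $G$, $B$ and $K$ standing for "is a girl", "is a boy" and "kissed", the formula $\forall x\,(G(x) \rightarrow \exists y\,(B(y) \land K(x,y)))$ means that to every girl there corresponds some boy (any one will do) whom the girl kissed. But $\exists y\,(B(y) \land \forall x\,(G(x) \rightarrow K(x,y)))$ means that there is some particular boy whom every girl kissed.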

Ernst Zermelo

This period overlaps with the work of what is known as the "mathematical school", which included Dedekind, Pasch, Peano, Hilbert, Zermelo, Huntington, Veblen and Heyting. Their objective was the axiomatisation of branches of mathematics like geometry, arithmetic, analysis and set theory. Most notable was Hilbert's Program, which sought to ground all of mathematics in a finite set of axioms, proving its consistency by "finitistic" means and providing a procedure which would decide the truth or falsity of any mathematical statement. The standard axiomatization of the natural numbers is named the Peano axioms after Peano, who maintained a clear distinction between mathematical and logical symbols. While unaware of Frege's work, he independently recreated his logical apparatus based on the work of Boole and Schröder.

The logicist project received a near-fatal setback with the discovery of a paradox in 1901 by Bertrand Russell. The paradox showed that Frege's naive set theory led to a contradiction. Frege's theory contained the axiom that for any formal criterion, there is a set of all objects that meet the criterion. Russell showed that a set containing exactly the sets that are not members of themselves would contradict its own definition (if it is not a member of itself, it is a member of itself, and if it is a member of itself, it is not). This contradiction is now known as Russell's paradox. One important method of resolving this paradox was proposed by Ernst Zermelo. Zermelo set theory was the first axiomatic set theory. It was developed into the now-canonical Zermelo–Fraenkel set theory (ZF). Russell's paradox can be stated symbolically as follows: let $R = \{x \mid x \notin x\}$; then $R \in R \iff R \notin R$.
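
The core of Russell's argument does not depend on what the objects are: no membership relation on any domain can contain an element that collects exactly the non-self-members. The sketch below checks this exhaustively for every possible membership relation on a three-element domain. The domain size and the name `has_russell_element` are assumptions made for illustration, and the finite search only illustrates the general argument.

```python
from itertools import product

def has_russell_element(domain, member):
    """True if some r satisfies: x is in r  iff  x is not in x, for every x."""
    return any(all(member[(x, r)] == (not member[(x, x)]) for x in domain)
               for r in domain)

domain = range(3)
pairs = [(x, y) for x in domain for y in domain]

# Try every possible membership relation on the domain (2**9 of them).
found = any(has_russell_element(domain, dict(zip(pairs, bits)))
            for bits in product([False, True], repeat=len(pairs)))
print(found)  # False
```

The search always fails for the reason given in the text: taking x to be r itself would require r to be a member of r exactly when it is not.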

Metamathematical period

Kurt Gödel

The names of Gödel and Tarski dominate the 1930s, a crucial period in the development of metamathematics – the study of mathematics using mathematical methods to produce metatheories, or mathematical theories about other mathematical theories. Early investigations into metamathematics had been driven by Hilbert's program. Work on metamathematics culminated in the work of Gödel, who in 1929 showed that a given first-order sentence is deducible if and only if it is logically valid – i.e. it is true in every structure for its language. This is known as Gödel's completeness theorem. A year later, he proved two important theorems, which showed Hilbert's program to be unattainable in its original form. The first is that no consistent system of axioms whose theorems can be listed by an effective procedure such as an algorithm or computer program is capable of proving all facts about the natural numbers. For any such system, there will always be statements about the natural numbers that are true, but that are unprovable within the system. The second is that if such a system is also capable of proving certain basic facts about the natural numbers, then the system cannot prove the consistency of the system itself. These two results are known as Gödel's incompleteness theorems, or simply Gödel's Theorem. Later in the decade, Gödel developed the concept of set-theoretic constructibility, as part of his proof that the axiom of choice and the continuum hypothesis are consistent with Zermelo–Fraenkel set theory.

In proof theory, Gerhard Gentzen developed natural deduction and the sequent calculus. The former attempts to model logical reasoning as it 'naturally' occurs in practice and is most easily applied to intuitionistic logic, while the latter was devised to clarify the derivation of logical proofs in any formal system. Since Gentzen's work, natural deduction and sequent calculi have been widely applied in the fields of proof theory, mathematical logic and computer science. Gentzen also proved normalization and cut-elimination theorems for intuitionistic and classical logic which could be used to reduce logical proofs to a normal form.

Alfred Tarski

Alfred Tarski, a pupil of Łukasiewicz, is best known for his definition of truth and logical consequence, and the semantic concept of logical satisfaction. In 1933, he published (in Polish) The concept of truth in formalized languages, in which he proposed his semantic theory of truth: a sentence such as "snow is white" is true if and only if snow is white. Tarski's theory separated the metalanguage, which makes the statement about truth, from the object language, which contains the sentence whose truth is being asserted, and gave a correspondence (the T-schema) between phrases in the object language and elements of an interpretation. Tarski's approach to the difficult idea of explaining truth has been enduringly influential in logic and philosophy, especially in the development of model theory. Tarski also produced important work on the methodology of deductive systems, and on fundamental principles such as completeness, decidability, consistency and definability. According to Anita Feferman, Tarski "changed the face of logic in the twentieth century".
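
The separation of object language and metalanguage can be sketched with a small recursive truth definition. In the sketch below, the object language is assumed to be a propositional fragment encoded as nested tuples, Python plays the role of the metalanguage, and `interp` is a hypothetical interpretation assigning truth values to atomic sentences. Tarski's own definition covers quantified languages through the notion of satisfaction, which this sketch omits.

```python
def satisfies(interp, formula):
    """Truth of an object-language formula under an interpretation."""
    op = formula[0]
    if op == "atom":
        return interp[formula[1]]
    if op == "not":
        return not satisfies(interp, formula[1])
    if op == "and":
        return satisfies(interp, formula[1]) and satisfies(interp, formula[2])
    if op == "or":
        return satisfies(interp, formula[1]) or satisfies(interp, formula[2])
    raise ValueError(f"unknown connective: {op}")

# The quoted strings belong to the object language; the Booleans and the
# function `satisfies` belong to the metalanguage.
interp = {"snow is white": True, "grass is red": False}
sentence = ("and", ("atom", "snow is white"), ("not", ("atom", "grass is red")))
print(satisfies(interp, sentence))  # True
```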

Alonzo Church and Alan Turing proposed formal models of computability, giving independent negative solutions to Hilbert's Entscheidungsproblem in 1936 and 1937, respectively. The Entscheidungsproblem asked for a procedure that, given any formal mathematical statement, would algorithmically determine whether the statement is true. Church and Turing proved there is no such procedure; Turing's paper introduced the halting problem as a key example of a mathematical problem without an algorithmic solution.

Church's system for computation developed into the modern λ-calculus, while the Turing machine became a standard model for a general-purpose computing device. It was soon shown that many other proposed models of computation were equivalent in power to those proposed by Church and Turing. These results led to the Church–Turing thesis that any deterministic algorithm that can be carried out by a human can be carried out by a Turing machine. Church proved additional undecidability results, showing that both Peano arithmetic and first-order logic are undecidable. Later work by Emil Post and Stephen Cole Kleene in the 1940s extended the scope of computability theory and introduced the concept of degrees of unsolvability.
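
A flavour of computation in the λ-calculus can be given with Church numerals, which encode the number n as the function that applies its argument n times. The sketch below uses Python lambdas as a stand-in for λ-terms; `to_int` is a helper added only to display results and is not part of the calculus.

```python
# Church numerals: n is "apply f to x, n times".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))

def to_int(n):
    """Convert a Church numeral to a Python int by counting applications."""
    return n(lambda k: k + 1)(0)

two = succ(succ(zero))
three = succ(two)
print(to_int(add(two)(three)), to_int(mul(two)(three)))  # 5 6
```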

The results of the first few decades of the twentieth century also had an impact upon analytic philosophy and philosophical logic, particularly from the 1950s onwards, in subjects such as modal logic, temporal logic, deontic logic, and relevance logic.

Logic after WWII

After World War II, mathematical logic branched into four inter-related but separate areas of research: model theory, proof theory, computability theory, and set theory.

In set theory, the method of forcing revolutionized the field by providing a robust method for constructing models and obtaining independence results. Paul Cohen introduced this method in 1963 to prove the independence of the continuum hypothesis and the axiom of choice from Zermelo–Fraenkel set theory. His technique, which was simplified and extended soon after its introduction, has since been applied to many other problems in all areas of mathematical logic.

Computability theory had its roots in the work of Turing, Church, Kleene, and Post in the 1930s and 40s. It developed into a study of abstract computability, which became known as recursion theory. The priority method, discovered independently by Albert Muchnik and Richard Friedberg in the 1950s, led to major advances in the understanding of the degrees of unsolvability and related structures. Research into higher-order computability theory demonstrated its connections to set theory. The fields of constructive analysis and computable analysis were developed to study the effective content of classical mathematical theorems; these in turn inspired the program of reverse mathematics. A separate branch of computability theory, computational complexity theory, was also characterized in logical terms as a result of investigations into descriptive complexity.

Model theory applies the methods of mathematical logic to study models of particular mathematical theories. Alfred Tarski published much pioneering work in the field, which is named after a series of papers he published under the title Contributions to the theory of models. In the 1960s, Abraham Robinson used model-theoretic techniques to develop calculus and analysis based on infinitesimals, a problem that first had been proposed by Leibniz.

In proof theory, the relationship between classical mathematics and intuitionistic mathematics was clarified via tools such as the realizability method, introduced by Stephen Cole Kleene and developed further by Georg Kreisel, and Gödel's Dialectica interpretation. This work inspired the contemporary area of proof mining. The Curry–Howard correspondence emerged as a deep analogy between logic and computation, including a correspondence between systems of natural deduction and typed lambda calculi used in computer science. As a result, research into this class of formal systems began to address both logical and computational aspects; this area of research came to be known as modern type theory. Advances were also made in ordinal analysis and the study of independence results in arithmetic such as the Paris–Harrington theorem.

This was also a period, particularly in the 1950s and afterwards, when the ideas of mathematical logic began to influence philosophical thinking. For example, tense logic is a formalised system for representing, and reasoning about, propositions qualified in terms of time. The philosopher Arthur Prior played a significant role in its development in the 1960s. Modal logics extend the scope of formal logic to include the elements of modality (for example, possibility and necessity). The ideas of Saul Kripke, particularly about possible worlds, and the formal system now called Kripke semantics have had a profound impact on analytic philosophy. His best known and most influential work is Naming and Necessity (1980). Deontic logics are closely related to modal logics: they attempt to capture the logical features of obligation, permission and related concepts. Although some basic novelties syncretizing mathematical and philosophical logic were shown by Bolzano in the early 1800s, it was Ernst Mally, a pupil of Alexius Meinong, who was to propose the first formal deontic system in his Grundgesetze des Sollens, based on the syntax of Whitehead's and Russell's propositional calculus.
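
The possible-worlds reading of necessity and possibility can be sketched as a small evaluator. The sketch below assumes a model given by an accessibility relation and a valuation listing the atoms true at each world; the world names, the tuple encoding of formulas, and the function `holds` are all illustrative choices rather than a standard API.

```python
def holds(model, world, formula):
    """Evaluate a modal formula at `world` in model = (access, valuation)."""
    access, valuation = model
    op = formula[0]
    if op == "atom":
        return formula[1] in valuation[world]
    if op == "not":
        return not holds(model, world, formula[1])
    if op == "and":
        return holds(model, world, formula[1]) and holds(model, world, formula[2])
    if op == "box":  # necessarily: true at every accessible world
        return all(holds(model, w, formula[1]) for w in access[world])
    if op == "dia":  # possibly: true at some accessible world
        return any(holds(model, w, formula[1]) for w in access[world])
    raise ValueError(f"unknown operator: {op}")

# w1 can "see" w2 and w3; p is true at both, q only at w3.
access = {"w1": ["w2", "w3"], "w2": [], "w3": []}
valuation = {"w1": set(), "w2": {"p"}, "w3": {"p", "q"}}
model = (access, valuation)
p, q = ("atom", "p"), ("atom", "q")

print(holds(model, "w1", ("box", p)),
      holds(model, "w1", ("box", q)),
      holds(model, "w1", ("dia", q)))
# prints: True False True
```

Different constraints on the accessibility relation (for example reflexivity or transitivity) validate different modal axioms, which is what makes the framework adaptable to tense and deontic readings as well.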

Another logical system founded after World War II was fuzzy logic, introduced by the Azerbaijani mathematician Lotfi Asker Zadeh in 1965.