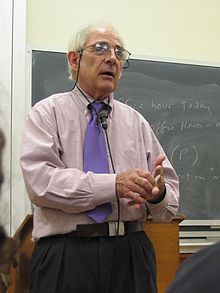

| John Rogers Searle | |

|---|---|

Searle at Christ Church, Oxford, 2005

| |

| Born | July 31, 1932 Denver, Colorado, U.S. |

| Alma mater | University of Wisconsin Christ Church, Oxford |

| Spouse(s) | Dagmar Searle |

| Era | Contemporary philosophy |

| Region | Western philosophy |

| School | Analytic Direct realism |

Main interests

| |

Notable ideas

| Indirect speech acts Chinese room Biological naturalism Direction of fit Cluster description theory of names |

| Website | Homepage at UC Berkeley |

| Signature | |

John Rogers Searle (/sɜːrl/; born 31 July 1932) is an American philosopher. He is currently Willis S. and Marion Slusser Professor Emeritus of the Philosophy of Mind and Language and Professor of the Graduate School at the University of California, Berkeley. He is widely noted for his contributions to the philosophy of language, philosophy of mind, and social philosophy. He began teaching at UC Berkeley in 1959.

As an undergraduate at the University of Wisconsin, Searle was secretary of "Students against Joseph McCarthy". He received all his university degrees, BA, MA, and DPhil, from the University of Oxford, where he held his first faculty positions. Later, at UC Berkeley, he became the first tenured professor to join the 1964–1965 Free Speech Movement. In the late 1980s, Searle challenged the restrictions of Berkeley's 1980 rent stabilization ordinance. Following what came to be known as the California Supreme Court's "Searle Decision" of 1990, Berkeley changed its rent control policy, leading to large rent increases between 1991 and 1994.

In 2000 Searle received the Jean Nicod Prize; in 2004, the National Humanities Medal;[5] and in 2006, the Mind & Brain Prize. Searle's early work on speech acts, influenced by J. L. Austin and Ludwig Wittgenstein, helped establish his reputation. His notable concepts include the "Chinese room" argument against "strong" artificial intelligence. In March 2017, Searle was accused of sexual assault.

Biography

Searle began his college education at the University of Wisconsin–Madison and, in his junior year, became a Rhodes Scholar at the University of Oxford, where he obtained all of his university degrees: BA, MA, and DPhil.

His first two faculty positions were at Oxford: Research Lecturer, and Lecturer and Tutor at Christ Church.

Politics

While an undergraduate at the University of Wisconsin, Searle became the secretary of "Students against Joseph McCarthy". (McCarthy at that time served as the junior senator from Wisconsin.) In 1959 Searle began teaching at Berkeley, and he was the first tenured professor to join the 1964–65 Free Speech Movement. In 1969, while serving as chairman of the Academic Freedom Committee of the Academic Senate of the University of California, he supported the university in its dispute with students over People's Park. In The Campus War: A Sympathetic Look at the University in Agony (1971), Searle investigates the causes behind the campus protests of the era. In it he declares: "I have been attacked by both the House Un-American Activities Committee and ... several radical polemicists ... Stylistically, the attacks are interestingly similar. Both rely heavily on insinuation and innuendo, and both display a hatred – one might almost say terror – of close analysis and dissection of argument." He asserts that "My wife was threatened that I (and other members of the administration) would be assassinated or violently attacked."

In the late 1980s, Searle, along with other landlords, petitioned Berkeley's rental board to raise the limits on how much he could charge tenants under the city's 1980 rent-stabilization ordinance. The rental board refused to consider Searle's petition, and Searle filed suit, charging a violation of due process. In 1990, in what came to be known as the "Searle Decision", the California Supreme Court upheld Searle's argument in part, and Berkeley changed its rent-control policy, leading to large rent increases between 1991 and 1994. Searle reportedly saw the issue as one of fundamental rights and was quoted as saying: "The treatment of landlords in Berkeley is comparable to the treatment of blacks in the South...our rights have been massively violated and we are here to correct that injustice." The court described the debate as a "morass of political invective, ad hominem attack, and policy argument".

Shortly after the September 11 attacks, Searle wrote an article arguing that the attacks were one episode in a long-term struggle against forces that are intractably opposed to the United States, and he signaled support for a more aggressive neoconservative interventionist foreign policy. He called for the realization that the United States is in a more-or-less permanent state of war with these forces. He also suggested that a probable course of action would be to deny terrorists the use of foreign territory from which to stage their attacks. Finally, he alluded to the long-term nature of the conflict and blamed the attacks on the lack of American resolve to deal forcefully with America's enemies over the past several decades.

Sexual assault allegations

In March 2017, Searle became the subject of sexual assault allegations. The Los Angeles Times reported: "A new lawsuit alleges that university officials failed to properly respond to complaints that John Searle, an 84-year-old renowned philosophy professor, sexually assaulted his 24-year-old research associate last July and cut her pay when she rejected his advances." The case brought to light several earlier complaints against Searle, on which Berkeley had allegedly failed to act.

The lawsuit, filed in a California court on March 21, 2017, sought damages both from Searle and from the Regents of the University of California as his employers. It also claimed that Jennifer Hudin, the director of the John Searle Center for Social Ontology, where the complainant had been employed as an assistant to Searle, had stated that Searle "has had sexual relationships with his students and others in the past in exchange for academic, monetary or other benefits". After news of the lawsuit became public, several previous allegations of sexual harassment by Searle were also revealed.

Awards and recognitions

Searle has five honorary doctorates from four different countries and is an honorary visiting professor at Tsing Hua University and at East China Normal University.

In 2000 Searle received the Jean Nicod Prize; in 2004, the National Humanities Medal; and in 2006, the Mind & Brain Prize.

Philosophy

Speech acts

Searle's early work, which did a great deal to establish his reputation, was on speech acts. He attempted to combine his own thesis, that such acts are constituted by the rules of language, with ideas from many colleagues, including J. L. Austin (the "illocutionary act", from How to Do Things with Words), Ludwig Wittgenstein and G. C. J. Midgley (the distinction between regulative and constitutive rules). He also drew on the work of Paul Grice (the analysis of meaning as an attempt at being understood), Hare and Stenius (the distinction, concerning meaning, between illocutionary force and propositional content), P. F. Strawson, John Rawls and William Alston, who maintained that sentence meaning consists in sets of regulative rules requiring the speaker to perform the illocutionary act indicated by the sentence and that such acts involve the utterance of a sentence which (a) indicates that one performs the act; (b) means what one says; and (c) addresses an audience in the vicinity.

In his 1969 book Speech Acts, Searle sets out to combine all these elements to give his account of illocutionary acts. There he provides an analysis of what he considers the prototypical illocutionary act of promising and offers sets of semantical rules intended to represent the linguistic meaning of devices indicating further illocutionary act types. Among the concepts presented in the book is the distinction between the "illocutionary force" and the "propositional content" of an utterance. Searle does not precisely define the former as such, but rather introduces several possible illocutionary forces by example. According to Searle, the sentences

- Sam smokes habitually.

- Does Sam smoke habitually?

- Sam, smoke habitually!

- Would that Sam smoked habitually!

all indicate the same propositional content (Sam smoking habitually) but differ in the illocutionary force indicated (respectively, a statement, a question, a command, and an expression of desire).

According to a later account, which Searle presents in Intentionality (1983) and which differs in important ways from the one suggested in Speech Acts, illocutionary acts are characterized by their having "conditions of satisfaction" (an idea adopted from Strawson's 1971 paper "Meaning and Truth") and a "direction of fit" (an idea adopted from Elizabeth Anscombe). For example, the statement "John bought two candy bars" is satisfied if and only if it is true, i.e., John did buy two candy bars. By contrast, the command "John, buy two candy bars!" is satisfied if and only if John carries out the action of purchasing two candy bars. Searle refers to the first as having the "word-to-world" direction of fit, since the words are supposed to change to accurately represent the world, and the second as having the "world-to-word" direction of fit, since the world is supposed to change to match the words. (There is also the double direction of fit, in which the relationship goes both ways, and the null or zero direction of fit, in which it goes neither way because the propositional content is presupposed, as in "I'm sorry I ate John's candy bars.")

In Foundations of Illocutionary Logic (1985, with Daniel Vanderveken), Searle prominently uses the notion of the "illocutionary point".

Searle's speech-act theory has been challenged by several thinkers in a variety of ways. Collections of articles referring to Searle's account are found in Burkhardt (1990) and Lepore and Van Gulick (1991).

Searle–Derrida debate

In the early 1970s, Searle had a brief exchange with Jacques Derrida regarding speech-act theory. The exchange was characterized by a degree of mutual hostility between the philosophers, each of whom accused the other of having misunderstood his basic points. Searle was particularly hostile to Derrida's deconstructionist framework and much later refused to let his response to Derrida be printed along with Derrida's papers in the 1988 collection Limited Inc. Searle did not consider Derrida's approach to be legitimate philosophy or even intelligible writing and argued that he did not want to legitimize the deconstructionist point of view by dedicating any attention to it. Consequently, some critics have considered the exchange to be a series of elaborate misunderstandings rather than a debate, while others have seen either Derrida or Searle gaining the upper hand. The level of hostility can be seen from Searle's statement that "It would be a mistake to regard Derrida's discussion of Austin as a confrontation between two prominent philosophical traditions", to which Derrida replied that that sentence was "the only sentence of the 'reply' to which I can subscribe". Commentators have frequently interpreted the exchange as a prominent example of a confrontation between analytical and continental philosophy.

The debate began in 1972, when, in his paper "Signature Event Context", Derrida analyzed J. L. Austin's theory of the illocutionary act. While sympathetic to Austin's departure from a purely denotational account of language to one that includes "force", Derrida was skeptical of the framework of normativity employed by Austin. He argued that Austin had missed the fact that any speech event is framed by a "structure of absence" (the words that are left unsaid due to contextual constraints) and by "iterability" (the repeatability of linguistic elements outside of their context). Derrida argued that the focus on intentionality in speech-act theory was misguided because intentionality is restricted to that which is already established as a possible intention. He also took issue with the way Austin had excluded the study of fiction and of non-serious or "parasitic" speech, wondering whether this exclusion was because Austin had considered these speech genres to be governed by different structures of meaning, or simply due to a lack of interest.

In his brief reply to Derrida, "Reiterating the Differences: A Reply to Derrida", Searle argued that Derrida's critique was unwarranted because it assumed that Austin's theory attempted to give a full account of language and meaning when its aim was much narrower. Searle considered the omission of parasitic discourse forms to be justified by the narrow scope of Austin's inquiry. Searle agreed with Derrida's proposal that intentionality presupposes iterability, but did not apply the same concept of intentionality used by Derrida, being unable or unwilling to engage with the continental conceptual apparatus. This, in turn, caused Derrida to criticize Searle for not being sufficiently familiar with phenomenological perspectives on intentionality. Searle also argued that Derrida's disagreement with Austin turned on his having misunderstood Austin's (and Peirce's) type–token distinction and his failure to understand Austin's concept of failure in relation to performativity. Some critics have suggested that Searle, by being so grounded in the analytical tradition, was unable to engage with Derrida's continental phenomenological tradition and was at fault for the unsuccessful nature of the exchange.

Derrida, in his response to Searle ("a b c ..." in Limited Inc), ridiculed Searle's positions. Arguing that a clear sender of Searle's message could not be established, he suggested that Searle had formed with Austin a société à responsabilité limitée (a "limited liability company") due to the ways in which the ambiguities of authorship within Searle's reply circumvented the very speech act of his reply. Searle did not respond. Later, in 1988, Derrida revisited his position and his critiques of Austin and Searle, reiterating that he found the constant appeal to "normality" in the analytical tradition to be problematic.

In the debate, Derrida praises Austin's work, but argues that he is wrong to banish what Austin calls "infelicities" from the "normal" operation of language. One "infelicity," for instance, occurs when it cannot be known whether a given speech act is "sincere" or "merely citational" (and therefore possibly ironic, etc.). Derrida argues that every iteration is necessarily "citational", due to the graphematic nature of speech and writing, and that language could not work at all without the ever-present and ineradicable possibility of such alternate readings. Derrida takes Searle to task for his attempt to get around this issue by grounding final authority in the speaker's inaccessible "intention". Derrida argues that intention cannot possibly govern how an iteration signifies, once it becomes hearable or readable. All speech acts borrow a language whose significance is determined by historical-linguistic context, and by the alternate possibilities that this context makes possible. This significance, Derrida argues, cannot be altered or governed by the whims of intention.

In 1995, Searle gave a brief reply to Derrida in The Construction of Social Reality. "Derrida, as far as I can tell, does not have an argument. He simply declares that there is nothing outside of texts (Il n'y a pas de 'hors-texte')." Then, in Limited Inc., Derrida "apparently takes it all back", claiming that he meant only "the banality that everything exists in some context or other!" Derrida and others like him present "an array of weak or even nonexistent arguments for a conclusion that seems preposterous". In Of Grammatology (1967), Derrida claims that a text must not be interpreted by reference to anything "outside of language", which for him means "outside of writing in general". He adds: "There is nothing outside of the text [there is no outside-text; il n'y a pas de hors-texte]" (brackets in the translation). This is a metaphor: un hors-texte is a bookbinding term, referring to a 'plate' bound among pages of text. Searle cites Derrida's supplementary metaphor rather than his initial contention. Whether Searle's objection holds against that initial contention remains a point of debate.

Intentionality and the background

Searle defines intentionality as the power of minds to be about, to represent (see Correspondence theory of truth), or to stand for things, properties and states of affairs in the world. The nature of intentionality is an important part of discussions of Searle's philosophy of mind. Searle emphasizes that the word 'intentionality' (the part of the mind directed to/from/about objects and relations in the world independent of mind) should not be confused with the word 'intensionality' (the logical property of some sentences that do not pass the test of 'extensionality'). In Intentionality: An Essay in the Philosophy of Mind (1983), Searle applies certain elements of his account(s) of "illocutionary acts" to the investigation of intentionality. Searle also introduces a technical term, the Background, which, according to him, has been the source of much philosophical discussion ("though I have been arguing for this thesis for almost twenty years," Searle writes, "many people whose opinions I respect still disagree with me about it"). He calls the Background the set of abilities, capacities, tendencies, and dispositions that humans have and that are not themselves intentional states. Thus, when someone asks us to "cut the cake" we know to use a knife, and when someone asks us to "cut the grass" we know to use a lawnmower (and not vice versa), even though the actual request did not include this detail. Searle sometimes supplements his reference to the Background with the concept of the Network, one's network of other beliefs, desires, and other intentional states necessary for any particular intentional state to make sense.

Searle argues that the concept of a Background is similar to the concepts provided by several other thinkers, including Wittgenstein's private language argument ("the work of the later Wittgenstein is in large part about the Background") and Pierre Bourdieu's habitus.

To give an example, two chess players might be engaged in a bitter struggle at the board, but they share all sorts of Background presuppositions: that they will take turns to move, that no one else will intervene, that they are both playing to the same rules, that the fire alarm won't go off, that the board won't suddenly disintegrate, that their opponent won't magically turn into a grapefruit, and so on indefinitely. As most of these possibilities won't have occurred to either player,[46] Searle thinks the Background must be unconscious, though elements of it can be called to consciousness (if the fire alarm does go off, say).

In his debate with Derrida, Searle argued against Derrida's view that a statement can be disjoined from the original intentionality of its author (for example, when it is no longer connected to the original author) while still being able to produce meaning. Searle maintained that even if one were to see a written statement with no knowledge of its authorship, it would still be impossible to escape the question of intentionality, because "a meaningful sentence is just a standing possibility of the (intentional) speech act". For Searle, ascribing intentionality to a statement was a basic requirement for attributing any meaning to it at all.

Consciousness

Building upon his views about intentionality, Searle presents a view concerning consciousness in his book The Rediscovery of the Mind (1992). He argues that, starting with behaviorism (an early but influential scientific view, succeeded by many later accounts that Searle also dismisses), much of modern philosophy has tried to deny the existence of consciousness, with little success. In Intentionality, he parodies several alternative theories of consciousness by replacing their accounts of intentionality with comparable accounts of the hand:

No one would think of saying, for example, "Having a hand is just being disposed to certain sorts of behavior such as grasping" (manual behaviorism), or "Hands can be defined entirely in terms of their causes and effects" (manual functionalism), or "For a system to have a hand is just for it to be in a certain computer state with the right sorts of inputs and outputs" (manual Turing machine functionalism), or "Saying that a system has hands is just adopting a certain stance toward it" (the manual stance). (p. 263)

Searle's own position is simply that consciousness is a real subjective experience and that it is caused by the physical processes of the brain. He suggests that this view might be called biological naturalism.

Ontological subjectivity

Searle has argued that critics like Daniel Dennett, who (he claims) insist that discussing subjectivity is unscientific because science presupposes objectivity, are making a category error. On Searle's view, the goal of science may be to establish and validate statements that are epistemically objective (i.e., whose truth can be discovered and evaluated by any interested party), but such statements need not be ontologically objective.

Searle calls any value judgment epistemically subjective. Thus, "McKinley is prettier than Everest" is "epistemically subjective", whereas "McKinley is higher than Everest" is "epistemically objective". In other words, the latter statement is evaluable (in fact, falsifiable) by an understood ('background') criterion for mountain height, like 'the summit is so many meters above sea level'. No such criteria exist for prettiness.

Beyond this distinction, Searle thinks there are certain phenomena (including all conscious experiences) that are ontologically subjective, i.e. can only exist as subjective experience. For example, although it might be subjective or objective in the epistemic sense, a doctor's note that a patient suffers from back pain is an ontologically objective claim: it counts as a medical diagnosis only because the existence of back pain is "an objective fact of medical science". The pain itself, however, is ontologically subjective: it is only experienced by the person having it.

Searle goes on to affirm that "where consciousness is concerned, the existence of the appearance is the reality". His view that the epistemic and ontological senses of objective/subjective are cleanly separable is crucial to his self-proclaimed biological naturalism.

Artificial intelligence

A consequence of biological naturalism is that if we want to create a conscious being, we will have to duplicate whatever physical processes the brain goes through to cause consciousness. Searle thereby means to contradict what he calls "Strong AI", defined by the assumption that as soon as a certain kind of software is running on a computer, a conscious being is thereby created.

In 1980, Searle presented the "Chinese room" argument, which purports to prove the falsity of strong AI. Assume you do not speak Chinese and imagine yourself in a room with two slits, a book, and some scratch paper. Someone slides you some Chinese characters through the first slit; you follow the instructions in the book, transcribing characters as instructed onto the scratch paper, and slide the resulting sheet out the second slit. To people in the outside world, it appears the room speaks Chinese (they slide Chinese statements in one slit and get valid responses in return), yet you do not understand a word of Chinese. According to Searle, this shows that no computer can ever understand Chinese or English, because producing appropriate Chinese responses to Chinese input does not entail understanding Chinese: all that the person in the thought experiment, and hence a computer, is able to do is to execute certain syntactic manipulations.

Stevan Harnad argues that Searle's "Strong AI" is really just another name for functionalism and computationalism, and that these positions are the real targets of his critique. Functionalists argue that consciousness can be defined as a set of informational processes inside the brain. It follows that anything that carries out the same informational processes as a human is also conscious. Thus, if we wrote a computer program that was conscious, we could run that computer program on, say, a system of ping-pong balls and beer cups and the system would be equally conscious, because it was running the same information processes.

Searle argues that this is impossible, since consciousness is a physical property, like digestion or fire. No matter how good a simulation of digestion you build on the computer, it will not digest anything; no matter how well you simulate fire, nothing will get burnt. By contrast, informational processes are observer-relative: observers pick out certain patterns in the world and consider them information processes, but information processes are not things-in-the-world themselves. Since they do not exist at a physical level, Searle argues, they cannot have causal efficacy and thus cannot cause consciousness. There is no physical law, Searle insists, that can see the equivalence between a personal computer, a series of ping-pong balls and beer cups, and a pipe-and-water system all implementing the same program.

Social reality

Searle extended his inquiries into observer-relative phenomena by trying to understand social reality. Searle begins by arguing that collective intentionality (e.g. "we're going for a walk") is a distinct form of intentionality, not simply reducible to individual intentionality (e.g. "I'm going for a walk with him and I think he thinks he's going for a walk with me and he thinks I think I'm going for a walk with him and ...").

In The Construction of Social Reality (1995), Searle addresses the mystery of how social constructs like "baseball" or "money" can exist in a world consisting only of physical particles in fields of force. Adapting an idea by Elizabeth Anscombe in "On Brute Facts," Searle distinguishes between brute facts, like the height of a mountain, and institutional facts, like the score of a baseball game. Aiming at an explanation of social phenomena in terms of Anscombe's notion, he argues that society can be explained in terms of institutional facts, and institutional facts arise out of collective intentionality through constitutive rules with the logical form "X counts as Y in C". Thus, for instance, filling out a ballot counts as a vote in a polling place, getting so many votes counts as a victory in an election, getting a victory counts as being elected president in the presidential race, etc.

Many sociologists, however, do not see Searle's contributions to social theory as very significant. Neil Gross, for example, argues that Searle's views on society are more or less a reconstitution of the sociologist Émile Durkheim's theories of social facts, social institutions, collective representations, and the like. Searle's ideas are thus open to the same criticisms as Durkheim's. Searle responded that Durkheim's work was worse than he had originally believed and, admitting he had not read much of Durkheim's work, said that, "Because Durkheim's account seemed so impoverished I did not read any further in his work." Steven Lukes, however, responded to Searle's response to Gross and argued point by point against the allegations that Searle makes against Durkheim, essentially upholding Gross's argument that Searle's work bears great resemblance to Durkheim's. Lukes attributes Searle's misunderstanding of Durkheim's work to the fact that Searle never read Durkheim.

Searle–Lawson debate

In recent years, Searle's main interlocutor on issues of social ontology has been Tony Lawson. Although their accounts of social reality are similar, there are important differences. Lawson places emphasis on the notion of social totality, whereas Searle prefers to refer to institutional facts. Furthermore, Searle believes that emergence implies causal reduction, whereas Lawson argues that social totalities cannot be completely explained by the causal powers of their components. Searle also places language at the foundation of the construction of social reality, while Lawson believes that community formation necessarily precedes the development of language and that there must therefore be the possibility of non-linguistic social structure formation. The debate is ongoing and also takes place through regular meetings of the Centre for Social Ontology at the University of California, Berkeley and the Cambridge Social Ontology Group at the University of Cambridge.

Rationality

In Rationality in Action (2001), Searle argues that standard notions of rationality are badly flawed. According to what he calls the Classical Model, rationality is seen as something like a train track: you get on at one point with your beliefs and desires, and the rules of rationality compel you all the way to a conclusion. Searle doubts that this picture of rationality holds generally.

Searle briefly critiques one particular set of these rules: those of mathematical decision theory. He points out that its axioms require that anyone who valued a quarter and their life would, at some odds, bet their life for a quarter. Searle insists he would never take such a bet and believes that this stance is perfectly rational.

Most of his attack is directed against the common conception of rationality. First, he argues that reasons don't cause you to do anything, because having sufficient reason to do something motivates (but doesn't force) you to do it. So in any decision situation we experience a gap between our reasons and our actions. For example, when we decide to vote, we do not simply determine that we care most about economic policy and that we prefer candidate Jones's economic policy. We also have to make an effort to cast our vote. Similarly, every time a guilty smoker lights a cigarette they are aware of succumbing to their craving, not merely of acting automatically as they do when they exhale. It is this gap that makes us think we have freedom of the will. Searle thinks that whether we really have free will is an open question, but considers its absence highly unappealing because it would make the feeling of freedom of will an epiphenomenon, which is highly unlikely from the evolutionary point of view given its biological cost. He also says: "All rational activity presupposes free will."

Second, Searle believes we can rationally do things that don't result from our own desires. It is widely believed that one cannot derive an "ought" from an "is", i.e. that facts about how the world is can never tell you what you should do ('Hume's Law'). By contrast, insofar as a fact relates to an institution (marriage, promises, commitments, etc.), understood as a system of constitutive rules, what one should do can follow from the institutional fact of what one has done; institutional facts can thus be contrasted with the "brute facts" related to Hume's Law. For example, Searle believes the fact that you promised to do something means you should do it, because by making the promise you are participating in the constitutive rules that arrange the system of promise-making itself, and you therefore understand a "shouldness" as implicit in the mere factual action of promising. Furthermore, he believes that this provides a desire-independent reason for an action: if you order a drink at a bar, you should pay for it even if you have no desire to. This argument, which he first made in his paper "How to Derive 'Ought' from 'Is'" (1964), remains highly controversial, but even three decades later Searle continued to defend his view that "...the traditional metaphysical distinction between fact and value cannot be captured by the linguistic distinction between 'evaluative' and 'descriptive' because all such speech act notions are already normative."

Third, Searle argues that much of rational deliberation involves adjusting our (often inconsistent) patterns of desires to decide between outcomes, not the other way around. Whereas in the Classical Model one would start from a desire to go to Paris that is greater than the desire to save money, and then calculate the cheapest way to get there, in reality people balance the niceness of Paris against the costs of travel to decide which desire (visiting Paris or saving money) they value more. Hence, he believes rationality is not a system of rules, but more of an adverb. We see certain behavior as rational, no matter what its source, and our system of rules derives from finding patterns in what we see as rational.

Bibliography

Primary

- Speech Acts: An Essay in the Philosophy of Language (1969), Cambridge University Press, ISBN 978-0521096263

- The Campus War: A Sympathetic Look at the University in Agony (political commentary; 1971)

- Expression and Meaning: Studies in the Theory of Speech Acts (essay collection; 1979)

- Intentionality: An Essay in the Philosophy of Mind (1983)

- Minds, Brains and Science: The 1984 Reith Lectures (lecture collection; 1984)

- Foundations of Illocutionary Logic (with Daniel Vanderveken; 1985)

- The Rediscovery of the Mind (1992)

- The Construction of Social Reality (1995)

- The Mystery of Consciousness (review collection; 1997)

- Mind, Language and Society: Philosophy in the Real World (summary of earlier work; 1998)

- Rationality in Action (2001)

- Consciousness and Language (essay collection; 2002)

- Freedom and Neurobiology (lecture collection; 2004)

- Mind: A Brief Introduction (summary of work in philosophy of mind; 2004)

- Philosophy in a New Century: Selected Essays (2008)

- Making the Social World: The Structure of Human Civilization (2010)

- "What Your Computer Can't Know" (review of Luciano Floridi, The Fourth Revolution: How the Infosphere Is Reshaping Human Reality, Oxford University Press, 2014; and Nick Bostrom, Superintelligence: Paths, Dangers, Strategies, Oxford University Press, 2014), The New York Review of Books, vol. LXI, no. 15 (October 9, 2014), pp. 52–55.

- Seeing Things As They Are: A Theory of Perception (2015)

Secondary

- John Searle and His Critics (Ernest Lepore and Robert Van Gulick, eds.; 1991)

- John Searle (Barry Smith, ed.; 2003)

- John Searle and the Construction of Social Reality (Joshua Rust; 2006)

- Intentional Acts and Institutional Facts (Savas Tsohatzidis, ed.; 2007)

- John Searle (Joshua Rust; 2009)