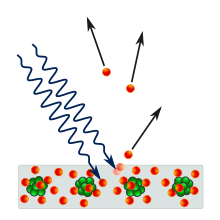

The photoelectric effect is the emission of electrons when electromagnetic radiation, such as light, hits a material. Electrons emitted in this manner are called photoelectrons. The phenomenon is studied in condensed matter physics, and solid state and quantum chemistry to draw inferences about the properties of atoms, molecules and solids. The effect has found use in electronic devices specialized for light detection and precisely timed electron emission.

The experimental results disagree with classical electromagnetism, which predicts that continuous light waves transfer energy to electrons, which would then be emitted when they accumulate enough energy. An alteration in the intensity of light would theoretically change the kinetic energy of the emitted electrons, with sufficiently dim light resulting in a delayed emission. The experimental results instead show that electrons are dislodged only when the light exceeds a certain frequency—regardless of the light's intensity or duration of exposure. Because a low-frequency beam at a high intensity does not build up the energy required to produce photoelectrons, as would be the case if light's energy accumulated over time from a continuous wave, Albert Einstein proposed that a beam of light is not a wave propagating through space, but a swarm of discrete energy packets, known as photons.

Emission of conduction electrons from typical metals requires a few electron-volt (eV) light quanta, corresponding to short-wavelength visible or ultraviolet light. In extreme cases, emissions are induced with photons approaching zero energy, like in systems with negative electron affinity and the emission from excited states, or a few hundred keV photons for core electrons in elements with a high atomic number.[1] Study of the photoelectric effect led to important steps in understanding the quantum nature of light and electrons and influenced the formation of the concept of wave–particle duality. Other phenomena where light affects the movement of electric charges include the photoconductive effect, the photovoltaic effect, and the photoelectrochemical effect.

Emission mechanism

The photons of a light beam have a characteristic energy, called photon energy, which is proportional to the frequency of the light. In the photoemission process, when an electron within some material absorbs the energy of a photon and acquires more energy than its binding energy, it is likely to be ejected. If the photon energy is too low, the electron is unable to escape the material. Since an increase in the intensity of low-frequency light will only increase the number of low-energy photons, this change in intensity will not create any single photon with enough energy to dislodge an electron. Moreover, the energy of the emitted electrons will not depend on the intensity of the incoming light of a given frequency, but only on the energy of the individual photons.
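The threshold behaviour described above can be illustrated with a minimal sketch. The binding energy used here is a hypothetical value chosen only for illustration, and the function names are not from any library.

```python
# Physical constants (exact SI values).
PLANCK_J_S = 6.62607015e-34        # Planck constant h, in J*s
LIGHT_SPEED_M_S = 299_792_458.0    # speed of light c, in m/s
JOULES_PER_EV = 1.602176634e-19    # one electron-volt expressed in joules


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon, E = h*c/lambda, in electron-volts."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / JOULES_PER_EV


def can_eject(wavelength_nm: float, binding_energy_ev: float) -> bool:
    """True if one photon carries more energy than the binding energy."""
    return photon_energy_ev(wavelength_nm) > binding_energy_ev


# Hypothetical binding energy, assumed for illustration only.
BINDING_EV = 4.3

for label, wavelength in [("red, 650 nm", 650.0), ("UV, 250 nm", 250.0)]:
    energy = photon_energy_ev(wavelength)
    ejects = can_eject(wavelength, BINDING_EV)
    print(f"{label}: {energy:.2f} eV per photon, ejects electron: {ejects}")
```

Intensity does not appear anywhere in `can_eject`: a brighter red beam delivers more photons of about 1.9 eV each, but none of them individually exceeds the assumed 4.3 eV.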

While free electrons can absorb any energy when irradiated as long as this is followed by an immediate re-emission, like in the Compton effect, in quantum systems all of the energy from one photon is absorbed—if the process is allowed by quantum mechanics—or none at all. Part of the acquired energy is used to liberate the electron from its atomic binding, and the rest contributes to the electron's kinetic energy as a free particle. Because electrons in a material occupy many different quantum states with different binding energies, and because they can sustain energy losses on their way out of the material, the emitted electrons will have a range of kinetic energies. The electrons from the highest occupied states will have the highest kinetic energy. In metals, those electrons will be emitted from the Fermi level.

When the photoelectron is emitted into a solid rather than into a vacuum, the term internal photoemission is often used, and emission into a vacuum is distinguished as external photoemission.

Experimental observation of photoelectric emission

Even though photoemission can occur from any material, it is most readily observed from metals and other conductors. This is because the process produces a charge imbalance which, if not neutralized by current flow, results in an increasing potential barrier until the emission completely ceases. The energy barrier to photoemission is usually increased by nonconductive oxide layers on metal surfaces, so most practical experiments and devices based on the photoelectric effect use clean metal surfaces in evacuated tubes. A vacuum also helps in observing the electrons, since it prevents gases from impeding their flow between the electrodes.

Because the atmosphere absorbs most of the ultraviolet light in sunlight, ultraviolet-rich light used to be obtained by burning magnesium or from an arc lamp. At present, mercury-vapor lamps, noble-gas discharge UV lamps and radio-frequency plasma sources, ultraviolet lasers, and synchrotron insertion device light sources prevail.

The classical setup to observe the photoelectric effect includes a light source, a set of filters to monochromatize the light, a vacuum tube transparent to ultraviolet light, an emitting electrode (E) exposed to the light, and a collector (C) whose voltage $V_C$ can be externally controlled.

A positive external voltage is used to direct the photoemitted electrons onto the collector. If the frequency and the intensity of the incident radiation are fixed, the photoelectric current I increases with an increase in the positive voltage, as more and more electrons are directed onto the electrode. When no additional photoelectrons can be collected, the photoelectric current attains a saturation value. This current can only increase with the increase of the intensity of light.

An increasing negative voltage prevents all but the highest-energy electrons from reaching the collector. When no current is observed through the tube, the negative voltage has reached the value that is high enough to slow down and stop the most energetic photoelectrons of kinetic energy $K_{\max}$. This value of the retarding voltage is called the stopping potential or cut-off potential $V_o$. Since the work done by the retarding potential in stopping the electron of charge $e$ is $eV_o$, the following must hold: $eV_o = K_{\max}$.
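The role of the retarding voltage can be sketched with a toy model. It assumes that every electron travels straight toward the collector, so an electron is collected only if its kinetic energy in eV exceeds the retarding voltage in volts. Real tubes depend on geometry and emission angles, and the list of energies below is hypothetical.

```python
def collected_fraction(kinetic_energies_ev, retarding_voltage_v):
    """Fraction of electrons energetic enough to reach the collector.

    An electron with kinetic energy K (in eV) overcomes a retarding
    voltage V (in volts, given as a magnitude) only if K > V.
    """
    reached = [k for k in kinetic_energies_ev if k > retarding_voltage_v]
    return len(reached) / len(kinetic_energies_ev)


def stopping_potential_v(kinetic_energies_ev):
    """Smallest retarding voltage at which no electron is collected.

    From e*V_o = K_max, the value in volts equals K_max in eV.
    """
    return max(kinetic_energies_ev)


# Hypothetical kinetic energies (eV) of four emitted electrons.
energies = [0.2, 0.5, 1.1, 1.6]

for voltage in [0.0, 1.0, stopping_potential_v(energies)]:
    print(f"retarding {voltage:.1f} V -> fraction {collected_fraction(energies, voltage):.2f}")
```

The current falls step by step as the retarding voltage rises and vanishes at the stopping potential, which here equals the largest kinetic energy in the list.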

The current-voltage curve is sigmoidal, but its exact shape depends on the experimental geometry and the electrode material properties.

For a given metal surface, there exists a certain minimum frequency of incident radiation below which no photoelectrons are emitted. This frequency is called the threshold frequency. Increasing the frequency of the incident beam increases the maximum kinetic energy of the emitted photoelectrons, and the stopping voltage has to increase. The number of emitted electrons may also change because the probability that each photon results in an emitted electron is a function of photon energy.

An increase in the intensity of the same monochromatic light (so long as the intensity is not too high[12]), which is proportional to the number of photons impinging on the surface in a given time, increases the rate at which electrons are ejected—the photoelectric current I—but the kinetic energy of the photoelectrons and the stopping voltage remain the same. For a given metal and frequency of incident radiation, the rate at which photoelectrons are ejected is directly proportional to the intensity of the incident light.
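The proportionality between intensity and photocurrent can be made concrete with a short sketch. It assumes a fixed quantum yield (the probability that one photon produces one collected electron); the yield, the optical power, and the function names are hypothetical values chosen for illustration.

```python
PLANCK_J_S = 6.62607015e-34          # Planck constant h, in J*s
LIGHT_SPEED_M_S = 299_792_458.0      # speed of light c, in m/s
ELEMENTARY_CHARGE_C = 1.602176634e-19  # electron charge magnitude, in C


def photon_rate_per_s(power_w: float, wavelength_nm: float) -> float:
    """Number of photons per second in a monochromatic beam of given power."""
    photon_energy_j = PLANCK_J_S * LIGHT_SPEED_M_S / (wavelength_nm * 1e-9)
    return power_w / photon_energy_j


def photocurrent_a(power_w: float, wavelength_nm: float, quantum_yield: float) -> float:
    """Saturation photocurrent: electrons per second times the charge of each."""
    electrons_per_s = quantum_yield * photon_rate_per_s(power_w, wavelength_nm)
    return electrons_per_s * ELEMENTARY_CHARGE_C


# Hypothetical beam: 1 mW at 250 nm, with an assumed quantum yield of 1e-4.
base = photocurrent_a(1e-3, 250.0, 1e-4)
doubled = photocurrent_a(2e-3, 250.0, 1e-4)
print(f"1 mW: {base:.3e} A, 2 mW: {doubled:.3e} A")
```

With these assumed numbers the current is about 20 nA, and doubling the power doubles the current while the energy per photon, and therefore the stopping voltage, stays unchanged.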

The time lag between the incidence of radiation and the emission of a photoelectron is very small, less than $10^{-9}$ second. Angular distribution of the photoelectrons is highly dependent on polarization (the direction of the electric field) of the incident light, as well as the emitting material's quantum properties such as atomic and molecular orbital symmetries and the electronic band structure of crystalline solids. In materials without macroscopic order, the distribution of electrons tends to peak in the direction of polarization of linearly polarized light. The experimental technique that can measure these distributions to infer the material's properties is angle-resolved photoemission spectroscopy.

Theoretical explanation

In 1905, Einstein proposed a theory of the photoelectric effect using a concept that light consists of tiny packets of energy known as photons or light quanta. Each packet carries energy $h\nu$ that is proportional to the frequency $\nu$ of the corresponding electromagnetic wave. The proportionality constant $h$ has become known as the Planck constant. In the range of kinetic energies of the electrons that are removed from their varying atomic bindings by the absorption of a photon of energy $h\nu$, the highest kinetic energy is

$$K_{\max} = h\nu - W,$$

where $W$ is the minimum energy required to remove an electron from the surface of the material, called the work function of the surface. Writing the work function as $W = h\nu_o$ gives $K_{\max} = h(\nu - \nu_o)$.

Kinetic energy is positive, and $\nu > \nu_o$ is required for the photoelectric effect to occur. The frequency $\nu_o$ is the threshold frequency for the given material. Above that frequency, the maximum kinetic energy of the photoelectrons as well as the stopping voltage in the experiment rise linearly with the frequency, and have no dependence on the number of photons and the intensity of the impinging monochromatic light. Einstein's formula, however simple, explained all the phenomenology of the photoelectric effect, and had far-reaching consequences in the development of quantum mechanics.
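The linear rise of the stopping voltage with frequency suggests a simple way to estimate the Planck constant, in the spirit of Millikan's measurements. The sketch below assumes the standard form of Einstein's relation, $eV_o = h\nu - W$, generates synthetic (not measured) data for a hypothetical work function, and fits a straight line by ordinary least squares.

```python
PLANCK_J_S = 6.62607015e-34            # Planck constant h, in J*s
ELEMENTARY_CHARGE_C = 1.602176634e-19  # electron charge magnitude, in C


def stopping_voltage_v(frequency_hz: float, work_function_ev: float) -> float:
    """V_o = (h*nu - W)/e in volts; zero below the threshold frequency."""
    k_max_ev = PLANCK_J_S * frequency_hz / ELEMENTARY_CHARGE_C - work_function_ev
    return max(k_max_ev, 0.0)


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope*x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic data: hypothetical work function, frequencies above threshold.
WORK_FUNCTION_EV = 2.3
frequencies = [6.0e14, 7.0e14, 8.0e14, 9.0e14, 1.0e15]
voltages = [stopping_voltage_v(f, WORK_FUNCTION_EV) for f in frequencies]

slope, intercept = fit_line(frequencies, voltages)
planck_estimate = slope * ELEMENTARY_CHARGE_C  # slope of V_o(nu) is h/e
threshold_hz = -intercept / slope              # where the fitted line crosses zero

print(f"h estimate: {planck_estimate:.4e} J*s")
print(f"threshold frequency: {threshold_hz:.3e} Hz")
```

Because the data are generated from the same formula that is being fitted, the fit simply recovers the input constants. With real measurements, the scatter around the line would set the uncertainty of the estimate.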

Photoemission from atoms, molecules and solids

Electrons that are bound in atoms, molecules and solids each occupy distinct states of well-defined binding energies. When light quanta deliver more than this amount of energy to an individual electron, the electron may be emitted into free space with a kinetic energy equal to the photon energy $h\nu$ minus the electron's binding energy. The distribution of kinetic energies thus reflects the distribution of the binding energies of the electrons in the atomic, molecular or crystalline system: an electron emitted from the state at binding energy $E_B$ is found at kinetic energy $E_k = h\nu - E_B$. This distribution is one of the main characteristics of the quantum system, and can be used for further studies in quantum chemistry and quantum physics.

Models of photoemission from solids

The electronic properties of ordered, crystalline solids are determined by the distribution of the electronic states with respect to energy and momentum—the electronic band structure of the solid. Theoretical models of photoemission from solids show that this distribution is, for the most part, preserved in the photoelectric effect. The phenomenological three-step model for ultraviolet and soft X-ray excitation decomposes the effect into these steps:

- Inner photoelectric effect in the bulk of the material that is a direct optical transition between an occupied and an unoccupied electronic state. This effect is subject to quantum-mechanical selection rules for dipole transitions. The hole left behind the electron can give rise to secondary electron emission, or the so-called Auger effect, which may be visible even when the primary photoelectron does not leave the material. In molecular solids phonons are excited in this step and may be visible as satellite lines in the final electron energy.

- Electron propagation to the surface in which some electrons may be scattered because of interactions with other constituents of the solid. Electrons that originate deeper in the solid are much more likely to suffer collisions and emerge with altered energy and momentum. Their mean free path follows a universal curve that depends on the electron's energy.

- Electron escape through the surface barrier into free-electron-like states of the vacuum. In this step the electron loses energy in the amount of the work function of the surface, and loses momentum in the direction perpendicular to the surface. Because the binding energy of electrons in solids is conveniently expressed with respect to the highest occupied state at the Fermi energy $E_F$, and the difference to the free-space (vacuum) energy is the work function $W$ of the surface, the kinetic energy of the electrons emitted from solids is usually written as $E_k = h\nu - W - E_B$.
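The energy bookkeeping of the final step can be sketched as follows. The sketch assumes the usual form $E_k = h\nu - W - E_B$, with the binding energy $E_B$ measured downward from the Fermi level. The photon energy, work function, and binding energies below are hypothetical values, and scattering losses from the second step are ignored.

```python
def kinetic_energy_ev(photon_energy_ev, work_function_ev, binding_energy_ev):
    """Kinetic energy in vacuum, E_k = h*nu - W - E_B, in eV.

    Returns None when the photon energy is too low for the electron
    to cross the surface barrier.
    """
    e_k = photon_energy_ev - work_function_ev - binding_energy_ev
    return e_k if e_k > 0 else None


# Hypothetical example: 21.2 eV photons, 4.5 eV work function.
PHOTON_EV = 21.2
WORK_FUNCTION_EV = 4.5

# Binding energies below the Fermi level (0.0 is the Fermi level itself).
for e_b in [0.0, 2.0, 10.0, 18.0]:
    result = kinetic_energy_ev(PHOTON_EV, WORK_FUNCTION_EV, e_b)
    print(f"E_B = {e_b:4.1f} eV -> E_k = {result}")
```

Electrons from the Fermi level emerge with the highest kinetic energy, and states bound more deeply than $h\nu - W$ do not appear in the spectrum at all.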

There are cases where the three-step model fails to explain peculiarities of the photoelectron intensity distributions. The more elaborate one-step model treats the effect as a coherent process of photoexcitation into the final state of a finite crystal for which the wave function is free-electron-like outside of the crystal, but has a decaying envelope inside.

History

19th century

In 1839, Alexandre Edmond Becquerel discovered the photovoltaic effect while studying the effect of light on electrolytic cells. Though not equivalent to the photoelectric effect, his work on photovoltaics was instrumental in showing a strong relationship between light and electronic properties of materials. In 1873, Willoughby Smith discovered photoconductivity in selenium while testing the material for its high resistance properties in conjunction with his work involving submarine telegraph cables.

Johann Elster (1854–1920) and Hans Geitel (1855–1923), students in Heidelberg, investigated the effects produced by light on electrified bodies and developed the first practical photoelectric cells that could be used to measure the intensity of light. They arranged metals with respect to their power of discharging negative electricity: rubidium, potassium, alloy of potassium and sodium, sodium, lithium, magnesium, thallium and zinc; for copper, platinum, lead, iron, cadmium, carbon, and mercury the effects with ordinary light were too small to be measurable. The order of the metals for this effect was the same as in Volta's series for contact-electricity, the most electropositive metals giving the largest photo-electric effect.

In 1887, Heinrich Hertz observed the photoelectric effect and reported on the production and reception of electromagnetic waves. The receiver in his apparatus consisted of a coil with a spark gap, where a spark would be seen upon detection of electromagnetic waves. He placed the apparatus in a darkened box to see the spark better. However, he noticed that the maximum spark length was reduced when inside the box. A glass panel placed between the source of electromagnetic waves and the receiver absorbed ultraviolet radiation that assisted the electrons in jumping across the gap. When the glass was removed, the spark length would increase. He observed no decrease in spark length when he replaced the glass with quartz, as quartz does not absorb UV radiation.

The discoveries by Hertz led to a series of investigations by Hallwachs, Hoor, Righi and Stoletov on the effect of light, and especially of ultraviolet light, on charged bodies. Hallwachs connected a zinc plate to an electroscope. He allowed ultraviolet light to fall on a freshly cleaned zinc plate and observed that the zinc plate became uncharged if initially negatively charged, positively charged if initially uncharged, and more positively charged if initially positively charged. From these observations he concluded that some negatively charged particles were emitted by the zinc plate when exposed to ultraviolet light.

With regard to the Hertz effect, the researchers from the start showed the complexity of the phenomenon of photoelectric fatigue—the progressive diminution of the effect observed upon fresh metallic surfaces. According to Hallwachs, ozone played an important part in the phenomenon, and the emission was influenced by oxidation, humidity, and the degree of polishing of the surface. It was at the time unclear whether fatigue is absent in a vacuum.

In the period from 1888 until 1891, a detailed analysis of the photoeffect was performed by Aleksandr Stoletov with results reported in six publications. Stoletov invented a new experimental setup which was more suitable for a quantitative analysis of the photoeffect. He discovered a direct proportionality between the intensity of light and the induced photoelectric current (the first law of photoeffect or Stoletov's law). He measured the dependence of the intensity of the photoelectric current on the gas pressure, where he found the existence of an optimal gas pressure corresponding to a maximum photocurrent; this property was used for the creation of solar cells.

Many substances besides metals discharge negative electricity under the action of ultraviolet light. G. C. Schmidt and O. Knoblauch compiled a list of these substances.

In 1897, J. J. Thomson investigated ultraviolet light in Crookes tubes. Thomson deduced that the ejected particles, which he called corpuscles, were of the same nature as cathode rays. These particles later became known as electrons. Thomson enclosed a metal plate (a cathode) in a vacuum tube, and exposed it to high-frequency radiation. It was thought that the oscillating electromagnetic fields caused the atoms' field to resonate and, after reaching a certain amplitude, caused subatomic corpuscles to be emitted, and current to be detected. The amount of this current varied with the intensity and color of the radiation. Larger radiation intensity or frequency would produce more current.

During the years 1886–1902, Wilhelm Hallwachs and Philipp Lenard investigated the phenomenon of photoelectric emission in detail. Lenard observed that a current flows through an evacuated glass tube enclosing two electrodes when ultraviolet radiation falls on one of them. As soon as ultraviolet radiation is stopped, the current also stops. This initiated the concept of photoelectric emission. The discovery of the ionization of gases by ultraviolet light was made by Philipp Lenard in 1900. As the effect was produced across several centimeters of air and yielded a greater number of positive ions than negative, it was natural to interpret the phenomenon, as J. J. Thomson did, as a Hertz effect upon the particles present in the gas.

20th century

In 1902, Lenard observed that the energy of individual emitted electrons increased with the frequency (which is related to the color) of the light. This appeared to be at odds with Maxwell's wave theory of light, which predicted that the electron energy would be proportional to the intensity of the radiation.

Lenard observed the variation in electron energy with light frequency using a powerful electric arc lamp which enabled him to investigate large changes in intensity, and that had sufficient power to enable him to investigate the variation of the electrode's potential with light frequency. He found the electron energy by relating it to the maximum stopping potential (voltage) in a phototube. He found that the maximum electron kinetic energy is determined by the frequency of the light. For example, an increase in frequency results in an increase in the maximum kinetic energy calculated for an electron upon liberation – ultraviolet radiation would require a higher applied stopping potential to stop current in a phototube than blue light. However, Lenard's results were qualitative rather than quantitative because of the difficulty in performing the experiments: the experiments needed to be done on freshly cut metal so that the pure metal was observed, but it oxidized in a matter of minutes even in the partial vacuums he used. The current emitted by the surface was determined by the light's intensity, or brightness: doubling the intensity of the light doubled the number of electrons emitted from the surface.

The researches of Langevin and those of Eugene Bloch have shown that the greater part of the Lenard effect is certainly due to the Hertz effect. The Lenard effect upon the gas itself nevertheless does exist. Rediscovered by J. J. Thomson and then more decisively by Frederic Palmer, Jr., the gas photoemission was studied and showed very different characteristics than those at first attributed to it by Lenard.

In 1900, while studying black-body radiation, the German physicist Max Planck suggested in his "On the Law of Distribution of Energy in the Normal Spectrum" paper that the energy carried by electromagnetic waves could only be released in packets of energy. In 1905, Albert Einstein published a paper advancing the hypothesis that light energy is carried in discrete quantized packets to explain experimental data from the photoelectric effect. Einstein theorized that the energy in each quantum of light was equal to the frequency of light multiplied by a constant, later called the Planck constant. A photon above a threshold frequency has the required energy to eject a single electron, creating the observed effect. This was a key step in the development of quantum mechanics. In 1914, Robert A. Millikan's highly accurate measurements of the Planck constant from the photoelectric effect supported Einstein's model, even though a corpuscular theory of light was for Millikan, at the time, "quite unthinkable". Einstein was awarded the 1921 Nobel Prize in Physics for "his discovery of the law of the photoelectric effect", and Millikan was awarded the Nobel Prize in 1923 for "his work on the elementary charge of electricity and on the photoelectric effect". In quantum perturbation theory of atoms and solids acted upon by electromagnetic radiation, the photoelectric effect is still commonly analyzed in terms of waves; the two approaches are equivalent because photon or wave absorption can only happen between quantized energy levels whose energy difference is that of the energy of the photon.

Albert Einstein's mathematical description of how the photoelectric effect was caused by absorption of quanta of light was in one of his Annus Mirabilis papers, named "On a Heuristic Viewpoint Concerning the Production and Transformation of Light". The paper proposed a simple description of light quanta, or photons, and showed how they explained such phenomena as the photoelectric effect. His simple explanation in terms of absorption of discrete quanta of light agreed with experimental results. It explained why the energy of photoelectrons was dependent only on the frequency of the incident light and not on its intensity: at low-intensity, the high-frequency source could supply a few high energy photons, whereas at high-intensity, the low-frequency source would supply no photons of sufficient individual energy to dislodge any electrons. This was an enormous theoretical leap, but the concept was strongly resisted at first because it contradicted the wave theory of light that followed naturally from James Clerk Maxwell's equations of electromagnetism, and more generally, the assumption of infinite divisibility of energy in physical systems. Even after experiments showed that Einstein's equations for the photoelectric effect were accurate, resistance to the idea of photons continued.

Einstein's work predicted that the energy of individual ejected electrons increases linearly with the frequency of the light. Perhaps surprisingly, the precise relationship had not at that time been tested. By 1905 it was known that the energy of photoelectrons increases with increasing frequency of incident light and is independent of the intensity of the light. However, the manner of the increase was not experimentally determined until 1914 when Millikan showed that Einstein's prediction was correct.

The photoelectric effect helped to propel the then-emerging concept of wave–particle duality in the nature of light. Light simultaneously possesses the characteristics of both waves and particles, each being manifested according to the circumstances. The effect was impossible to understand in terms of the classical wave description of light, as the energy of the emitted electrons did not depend on the intensity of the incident radiation. Classical theory predicted that the electrons would 'gather up' energy over a period of time, and then be emitted.

Uses and effects

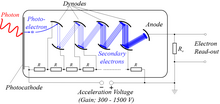

Photomultipliers

These are extremely light-sensitive vacuum tubes with a coated photocathode inside the envelope. The photocathode contains combinations of materials such as cesium, rubidium, and antimony specially selected to provide a low work function, so when illuminated even by very low levels of light, the photocathode readily releases electrons. By means of a series of electrodes (dynodes) at ever-higher potentials, these electrons are accelerated and substantially increased in number through secondary emission to provide a readily detectable output current. Photomultipliers are still commonly used wherever low levels of light must be detected.
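The multiplication along the dynode chain can be sketched with an idealized model. It assumes that every dynode releases the same average number of secondary electrons per incident electron and that all of them reach the next stage; the yield and the number of dynodes are hypothetical values, and real tubes deviate from this.

```python
def photomultiplier_gain(secondary_yield: float, dynode_count: int) -> float:
    """Idealized gain: each of the N dynodes multiplies the electron count
    by the same secondary-emission yield, so gain = yield ** N."""
    return secondary_yield ** dynode_count


def anode_electrons(photoelectrons: int, secondary_yield: float, dynode_count: int) -> float:
    """Average number of electrons arriving at the anode."""
    return photoelectrons * photomultiplier_gain(secondary_yield, dynode_count)


# Hypothetical tube: 10 dynodes, each releasing 4 electrons per incident electron.
print(photomultiplier_gain(4, 10))   # 4**10 = 1048576
print(anode_electrons(3, 4, 10))     # three photoelectrons at the cathode
```

Under these assumptions a single photoelectron becomes roughly a million electrons at the anode, which is why even very weak light yields a measurable current.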

Image sensors

Video camera tubes in the early days of television used the photoelectric effect; for example, Philo Farnsworth's "Image dissector" used a screen charged by the photoelectric effect to transform an optical image into a scanned electronic signal.

Photoelectron spectroscopy

Because the kinetic energy of the emitted electrons is exactly the energy of the incident photon minus the energy of the electron's binding within an atom, molecule or solid, the binding energy can be determined by shining a monochromatic X-ray or UV light of a known energy and measuring the kinetic energies of the photoelectrons. The distribution of electron energies is valuable for studying quantum properties of these systems. It can also be used to determine the elemental composition of the samples. For solids, the kinetic energy and emission angle distribution of the photoelectrons is measured for the complete determination of the electronic band structure in terms of the allowed binding energies and momenta of the electrons. Modern instruments for angle-resolved photoemission spectroscopy are capable of measuring these quantities with a precision better than 1 meV and 0.1°.
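The conversion from measured kinetic energy and emission angle to electron momentum can be sketched as follows. The sketch assumes the free-electron relation in vacuum, $k_\parallel = \sqrt{2 m E_k}\,\sin\theta/\hbar$, for the momentum component parallel to the surface, with the angle measured from the surface normal. The function name and the example values are hypothetical.

```python
import math

ELECTRON_MASS_KG = 9.1093837015e-31    # electron rest mass, in kg
REDUCED_PLANCK_J_S = 1.054571817e-34   # reduced Planck constant, in J*s
JOULES_PER_EV = 1.602176634e-19        # one electron-volt expressed in joules


def parallel_momentum_inv_angstrom(kinetic_energy_ev: float, emission_angle_deg: float) -> float:
    """Surface-parallel wave vector of a photoelectron, in 1/angstrom.

    The angle is measured from the surface normal.
    """
    energy_j = kinetic_energy_ev * JOULES_PER_EV
    k_total_per_m = math.sqrt(2.0 * ELECTRON_MASS_KG * energy_j) / REDUCED_PLANCK_J_S
    k_parallel_per_m = k_total_per_m * math.sin(math.radians(emission_angle_deg))
    return k_parallel_per_m * 1e-10  # convert 1/m to 1/angstrom


# Hypothetical measurement: 16.7 eV electrons detected at several angles.
for angle in [0.0, 10.0, 30.0]:
    k_par = parallel_momentum_inv_angstrom(16.7, angle)
    print(f"theta = {angle:4.1f} deg -> k_parallel = {k_par:.3f} 1/angstrom")
```

Scanning the angle at fixed photon energy therefore maps the binding energy as a function of parallel momentum, which is how band dispersions are traced out.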

Photoelectron spectroscopy measurements are usually performed in a high-vacuum environment, because the electrons would be scattered by gas molecules if they were present. However, some companies are now selling products that allow photoemission in air. The light source can be a laser, a discharge tube, or a synchrotron radiation source.

The concentric hemispherical analyzer is a typical electron energy analyzer. It uses an electric field between two hemispheres to change (disperse) the trajectories of incident electrons depending on their kinetic energies.
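As a rough illustration of the energy selection, the sketch below uses the textbook relation for an ideal hemispherical deflector, $E_{\text{pass}} = e\,\Delta V / (R_2/R_1 - R_1/R_2)$, where $R_1$ and $R_2$ are the inner and outer hemisphere radii and $\Delta V$ is the voltage between them. This idealized formula and the dimensions used are assumptions for illustration, and real instruments add lens and fringe-field corrections.

```python
def pass_energy_ev(inner_radius: float, outer_radius: float, voltage_difference_v: float) -> float:
    """Kinetic energy (eV) of electrons that follow the central circular path.

    Ideal hemispherical deflector: E_pass = e*dV / (R2/R1 - R1/R2).
    For electrons, the result in eV equals dV in volts divided by the
    dimensionless geometry factor. Radii may be in any common unit.
    """
    if not 0 < inner_radius < outer_radius:
        raise ValueError("require 0 < inner_radius < outer_radius")
    geometry_factor = outer_radius / inner_radius - inner_radius / outer_radius
    return voltage_difference_v / geometry_factor


# Hypothetical analyzer: 100 mm and 150 mm hemispheres, 10 V between them.
print(f"{pass_energy_ev(100.0, 150.0, 10.0):.2f} eV")
```

Electrons faster or slower than this pass energy are bent less or more strongly and land at different positions on the detector, which is what disperses the spectrum.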

Night vision devices

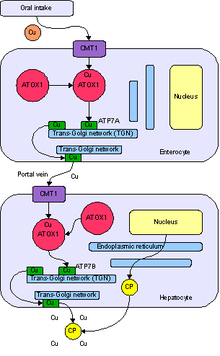

Photons hitting a thin film of alkali metal or semiconductor material such as gallium arsenide in an image intensifier tube cause the ejection of photoelectrons due to the photoelectric effect. These are accelerated by an electrostatic field toward a phosphor-coated screen, where they are converted back into photons. Intensification of the signal is achieved either through acceleration of the electrons or by increasing the number of electrons through secondary emissions, such as with a micro-channel plate. Sometimes a combination of both methods is used. Additional kinetic energy is required to move an electron out of the conduction band and into the vacuum level. This is known as the electron affinity of the photocathode and is another barrier to photoemission other than the forbidden band, explained by the band gap model. Some materials such as gallium arsenide have an effective electron affinity that is below the level of the conduction band. In these materials, electrons that move to the conduction band all have sufficient energy to be emitted from the material, so the film that absorbs photons can be quite thick. These materials are known as negative electron affinity materials.

Spacecraft

The photoelectric effect will cause spacecraft exposed to sunlight to develop a positive charge. This can be a major problem, as other parts of the spacecraft are in shadow, where the spacecraft develops a negative charge from nearby plasmas. The imbalance can discharge through delicate electrical components. The static charge created by the photoelectric effect is self-limiting, because a more highly charged object does not give up its electrons as easily as a less charged object does.

Moon dust

Light from the Sun hitting lunar dust causes it to become positively charged from the photoelectric effect. The charged dust then repels itself and lifts off the surface of the Moon by electrostatic levitation. This manifests itself almost like an "atmosphere of dust", visible as a thin haze and blurring of distant features, and visible as a dim glow after the Sun has set. This was first photographed by the Surveyor program probes in the 1960s, and most recently the Chang'e 3 rover observed dust deposition on lunar rocks as high as about 28 cm. It is thought that the smallest particles are repelled kilometers from the surface and that the particles move in "fountains" as they charge and discharge.

Competing processes and photoemission cross section

When photon energies are as high as the electron rest energy of 511 keV, yet another process, Compton scattering, may take place. Above twice this energy, at 1.022 MeV, pair production also becomes possible. Compton scattering and pair production are examples of two other competing mechanisms.
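The two energies quoted above follow directly from the electron mass. A minimal sketch, using CODATA constants, with function and variable names chosen here for illustration:

```python
ELECTRON_MASS_KG = 9.1093837015e-31   # electron rest mass, in kg
LIGHT_SPEED_M_S = 299_792_458.0       # speed of light c, in m/s
JOULES_PER_EV = 1.602176634e-19       # one electron-volt expressed in joules

# Rest energy m*c^2 of the electron, converted from joules to keV.
ELECTRON_REST_ENERGY_KEV = ELECTRON_MASS_KG * LIGHT_SPEED_M_S**2 / JOULES_PER_EV / 1e3

# Pair production must supply the rest energy of an electron and a positron.
PAIR_THRESHOLD_KEV = 2.0 * ELECTRON_REST_ENERGY_KEV


def pair_production_allowed(photon_energy_kev: float) -> bool:
    """True if the photon carries at least twice the electron rest energy."""
    return photon_energy_kev >= PAIR_THRESHOLD_KEV


print(f"electron rest energy: {ELECTRON_REST_ENERGY_KEV:.1f} keV")
print(f"pair-production threshold: {PAIR_THRESHOLD_KEV / 1e3:.3f} MeV")
```

The threshold is a kinematic minimum only. How likely each process is at a given energy depends on its cross section.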

Even if the photoelectric effect is the favoured reaction for a particular interaction of a single photon with a bound electron, the result is also subject to quantum statistics and is not guaranteed. The probability of the photoelectric effect occurring is measured by the cross section of the interaction, $\sigma$. The cross section has been found to be a function of the atomic number of the target atom and the photon energy. In a crude approximation, for photon energies above the highest atomic binding energy, the cross section is given by:

$$\sigma = \mathrm{constant} \cdot \frac{Z^n}{E^3}$$

Here $Z$ is the atomic number, $E$ is the photon energy, and $n$ is a number which varies between 4 and 5. The photoelectric effect rapidly decreases in significance in the gamma-ray region of the spectrum, with increasing photon energy. It is also more likely from elements with high atomic number. Consequently, high-$Z$ materials make good gamma-ray shields, which is the principal reason why lead ($Z = 82$) is preferred and most widely used.
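The consequences of this scaling can be sketched numerically. The example assumes the crude proportionality $\sigma \propto Z^n/E^3$ with the unknown constant omitted, so only ratios are meaningful. The comparison with aluminium ($Z = 13$) is an illustration chosen here, not taken from the text.

```python
def relative_cross_section(atomic_number: int, photon_energy: float, exponent: float = 4.0) -> float:
    """Photoelectric cross section in arbitrary units, sigma ~ Z**n / E**3.

    The proportionality constant is omitted, so only ratios of the
    returned values carry meaning. The exponent n lies between 4 and 5.
    """
    return atomic_number ** exponent / photon_energy ** 3


LEAD_Z = 82
ALUMINIUM_Z = 13  # illustrative comparison material

# Same photon energy, different elements: the ratio depends only on Z.
for n in (4.0, 5.0):
    ratio = relative_cross_section(LEAD_Z, 1.0, n) / relative_cross_section(ALUMINIUM_Z, 1.0, n)
    print(f"n = {n}: lead / aluminium per atom = {ratio:.0f}")

# Same element, doubled photon energy: the cross section drops by a factor of 8.
drop = relative_cross_section(LEAD_Z, 2.0) / relative_cross_section(LEAD_Z, 1.0)
print(f"doubling E scales sigma by {drop}")
```

Per atom, lead absorbs on the order of a thousand to ten thousand times more strongly than aluminium in this approximation, while the steep $1/E^3$ fall-off shows why the effect loses importance at gamma-ray energies.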