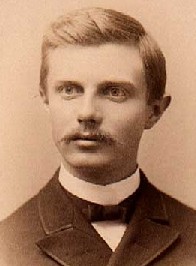

The Frontier Thesis or Turner's Thesis (also American frontierism) is the argument advanced by historian Frederick Jackson Turner in 1893 that a settler colonial exceptionalism, under the guise of American democracy, was formed by the appropriation of the rugged American frontier. He stressed the process of "winning a wilderness" to extend the frontier line further for U.S. colonization, and the impact this had on pioneer culture and character. A modern simplification describes it as Indigenous land possessing an "American ingenuity" that settlers are compelled to forcibly appropriate to create a cultural identity that differs from that of their European ancestors. Turner's text follows in a long line of thought within the framework of Manifest Destiny established decades earlier. He stressed in this thesis that American democracy was the primary result, along with egalitarianism, a lack of interest in bourgeois or high culture, and violence. "American democracy was born of no theorist's dream; it was not carried in the Susan Constant to Virginia, nor in the Mayflower to Plymouth. It came out of the American forest, and it gained new strength each time it touched a new frontier," said Turner.

In the thesis, the American frontier established liberty from European mindsets and eroded old, dysfunctional customs. Turner's ideal of frontier had no need for standing armies, established churches, aristocrats or nobles; there was no landed gentry who controlled the land or charged heavy rents and fees. Frontier land was practically free for the taking according to Turner. The Frontier Thesis was first published in a paper entitled "The Significance of the Frontier in American History", delivered to the American Historical Association in 1893 in Chicago. Turner won wide acclaim among historians and intellectuals. He elaborated on the theme in his advanced history lectures and in a series of essays over the next 25 years, which were published along with his initial paper as The Frontier in American History.

Turner's emphasis on the importance of the frontier in shaping American character influenced the interpretation found in thousands of scholarly histories. By the time Turner died in 1932, 60% of the leading history departments in the U.S. were teaching courses in frontier history along Turnerian lines.

Summary

Turner begins the essay by calling to attention the fact that the western frontier line, which had defined the entirety of American history up to the 1880s, had ended. He elaborates by stating,

Behind institutions, behind constitutional forms and modifications, lie the vital forces that call these organs into life and shape them to meet changing conditions. The peculiarity of American institutions is, the fact that they have been compelled to adapt themselves to the changes of an expanding people to the changes involved in crossing a continent, in winning a wilderness, and in developing at each area of this progress out of the primitive economic and political conditions of the frontier into the complexity of city life.

According to Turner, American progress has repeatedly undergone a cyclical process on the frontier line as society has needed to redevelop with its movement westward. Everything in American history up to the 1880s somehow relates to the western frontier, including slavery. In spite of this, Turner laments, the frontier has received little serious study from historians and economists.

The frontier line, which separates civilization from wilderness, is the line of “most rapid and effective Americanization” on the continent; it takes the European from across the Atlantic and shapes him into something new. American emigration west is not spurred by government incentives, but rather by some “expansive power” inherent within Americans that seeks to dominate nature. Furthermore, there is a need to escape the confines of the State. The most important aspect of the frontier to Turner is its effect on democracy. The frontier transformed Jeffersonian democracy into Jacksonian democracy. The individualism fostered by the frontier's wilderness created a national spirit complementary to democracy, as the wilderness defies control. Therefore, Andrew Jackson's brand of popular democracy was a triumph of the frontier.

Turner sets up the East and the West as opposing forces; as the West strives for freedom, the East seeks to control it. He cites British attempts to stifle western emigration during the colonial era as an example of eastern control. Even after independence, the eastern coast of the United States sought to control the West. Religious institutions from the eastern seaboard, in particular, battled for possession of the West. The tensions between small churches as a result of this fight, Turner states, exist today because of the religious attempt to master the West, and those effects are worth further study.

American intellect owes its form to the frontier as well. The traits of the frontier are “coarseness and strength combined with acuteness and inquisitiveness; that practical, inventive turn of mind, quick to find expedients; that masterful grasp of material things, lacking in the artistic but powerful to effect great ends; that restless, nervous energy; that dominant individualism, working for good and for evil, and withal that buoyancy and exuberance which comes with freedom.”

Turner concludes the essay by saying that with the end of the frontier, the first period of American history has ended.

Intellectual context

Germanic germ theory

The Frontier Thesis came about at a time when the Germanic germ theory of history was popular. Proponents of the germ theory believed that political habits are determined by innate racial attributes. Americans inherited such traits as adaptability and self-reliance from the Germanic peoples of Europe. According to the theory, the Germanic race appeared and evolved in the ancient Teutonic forests, endowed with a great capacity for politics and government. Their germs were, directly and by way of England, carried to the New World where they were allowed to germinate in the North American forests. In so doing, the Anglo-Saxons and the Germanic people's descendants, being exposed to a forest like their Teutonic ancestors, birthed the free political institutions that formed the foundation of American government.

Historian and ethnologist Hubert Howe Bancroft articulated the latest iteration of the Germanic germ theory just three years before Turner's paper in 1893. He argued that the “tide of intelligence” had always moved from east to west. According to Bancroft, the Germanic germs had spread across all of Western Europe by the Middle Ages and had reached their height. This Germanic intelligence was only halted by “civil and ecclesiastical restraints” and a lack of “free land.” This was Bancroft's explanation for the Dark Ages.

Turner's theory of early American development, which relied on the frontier as a transformative force, starkly opposed the Bancroftian racial determinism. Turner referred to the Germanic germ theory by name in his essay, claiming that “too exclusive attention has been paid by institutional students to the Germanic origins.” Turner believed that historians should focus on the settlers’ struggle with the frontier as the catalyst for the creation of American character, not racial or hereditary traits.

Though Turner's view would win out over the Germanic germ theory's version of Western history, the theory persisted for decades after Turner's thesis enraptured the American Historical Association. In 1946, medieval historian Carl Stephenson published an extended article refuting the Germanic germ theory. Evidently, the belief that the free political institutions of the United States spawned in ancient Germanic forests endured well into the 1940s.

Racial warfare

A similarly race-based interpretation of Western history also occupied the intellectual sphere in the United States before Turner. The racial warfare theory was an emerging belief in the late nineteenth century advocated by Theodore Roosevelt in The Winning of the West. Though Roosevelt would later accept Turner's historiography on the West, calling Turner's work a correction or supplementation of his own, the two certainly contradict each other.

Roosevelt's claim that he and Turner agreed was not entirely unfounded; both held that the frontier had shaped what would become distinctly American institutions and the mysterious entity they each called “national character.” They also agreed that studying the history of the West was necessary to face the challenges to democracy in the late 1890s.

Turner and Roosevelt diverged on the exact aspect of frontier life that shaped the contemporary American. Roosevelt contended that the formation of the American character occurred not with early settlers struggling to survive while learning a foreign land, but “on the cutting edge of expansion” in the early battles with Native Americans in the New World. To Roosevelt, the journey westward was one of nonstop encounters with the “hostile races and cultures” of the New World, forcing the early colonists to defend themselves as they pressed forward. Each side, the Westerners and the native savages, struggled for mastery of the land through violence.

Whereas Turner saw the development of American character occur just behind the frontier line, as the colonists tamed and tilled the land, Roosevelt saw it form in battles just beyond the frontier line. In the end, Turner's view would win out among historians, which Roosevelt would accept.

Evolution

Turner set up an evolutionary model (he had studied evolution with a leading geologist, Thomas Chrowder Chamberlin), using the time dimension of American history, and the geographical space of the land that became the United States. The first settlers who arrived on the east coast in the 17th century acted and thought like Europeans. They adapted to the new physical, economic and political environment in certain ways—the cumulative effect of these adaptations was Americanization.

Successive generations moved further inland, shifting the lines of settlement and wilderness, but preserving the essential tension between the two. European characteristics fell by the wayside and the old country's institutions (e.g., established churches, established aristocracies, standing armies, intrusive government, and highly unequal land distribution) were increasingly out of place. Every generation moved further west and became more American, more democratic, and more intolerant of hierarchy. They also became more violent, more individualistic, more distrustful of authority, less artistic, less scientific, and more dependent on ad-hoc organizations they formed themselves. In broad terms, the further west, the more American the community.

Closed frontier

Turner saw that the land frontier was ending, since the U.S. Census of 1890 had officially stated that the American frontier had broken up.

By 1890, settlement in the American West had reached sufficient population density that the frontier line had disappeared; in 1890 the Census Bureau released a bulletin declaring the closing of the frontier, stating: "Up to and including 1880 the country had a frontier of settlement, but at present the unsettled area has been so broken into by isolated bodies of settlement that there can hardly be said to be a frontier line. In the discussion of its extent, its westward movement, etc., it can not, therefore, any longer have a place in the census reports."

Comparative frontiers

Historians, geographers, and social scientists have studied frontier-like conditions in other countries, with an eye on the Turnerian model. South Africa, Canada, Russia, Brazil, Argentina, and Australia (and even ancient Rome) had long frontiers that were also settled by pioneers. However, these other frontier societies operated in a very difficult political and economic environment that made democracy and individualism much less likely to appear, and it was much more difficult to throw off a powerful royalty, standing armies, established churches, and an aristocracy that owned most of the land. The question is whether their frontiers were powerful enough to overcome conservative central forces based in the metropolis. Each nation had quite different frontier experiences. For example, the Dutch Boers in South Africa were defeated in war by Britain. In Australia, "mateship" and working together were valued more than individualism. Alexander Petrov noted that Russia had its own frontier and that Russians moved over centuries across Siberia all the way from the Urals to the Pacific, struggling with nature in many physical ways similar to the American move across North America, without developing the social and political characteristics noted by Turner. To the contrary, Siberia, the Russian frontier land, became emblematic of the oppression of Czarist absolute monarchy. This comparison, Petrov suggests, shows that it is far from inevitable that an expanding settlement of wild land would produce the American type of cultural and political institutions. Other factors need to be taken into consideration, such as the great difference between the British society from which settlers went across the Atlantic and the Russian society which sent its own pioneers across the Urals.

Impact and influence

Turner's thesis quickly became popular among intellectuals. It explained why the American people and American government were so different from their European counterparts. It was popular among New Dealers—Franklin D. Roosevelt and his top aides thought in terms of finding new frontiers. FDR, in celebrating the third anniversary of Social Security in 1938, advised, "There is still today a frontier that remains unconquered—an America unreclaimed. This is the great, the nation-wide frontier of insecurity, of human want and fear. This is the frontier—the America—we have set ourselves to reclaim." Historians adopted it, especially in studies of the west, but also in other areas, such as the influential work of Alfred D. Chandler Jr. (1918–2007) in business history.

Many believed that the end of the frontier represented the beginning of a new stage in American life and that the United States must expand overseas. However, others viewed this interpretation as the impetus for a new wave in the history of United States imperialism. William Appleman Williams led the "Wisconsin School" of diplomatic historians by arguing that the frontier thesis encouraged American overseas expansion, especially in Asia, during the 20th century. Williams viewed the frontier concept as a tool to promote democracy through both world wars, to endorse spending on foreign aid, and to motivate action against totalitarianism. However, Turner's work, in contrast to Roosevelt's work The Winning of the West, places greater emphasis on the development of American republicanism than on territorial conquest. Other historians, who wanted to focus scholarship on minorities, especially Native Americans and Hispanics, started in the 1970s to criticize the frontier thesis because it did not attempt to explain the evolution of those groups. Indeed, their approach was to reject the frontier as an important process and to study the West as a region, ignoring the frontier experience east of the Mississippi River.

Turner never published a major book on the frontier, a subject on which he did 40 years of research. However, the ideas he presented in his graduate seminars at Wisconsin and Harvard influenced many areas of historiography. In the history of religion, for example, Boles (1993) notes that William Warren Sweet at the University of Chicago Divinity School, as well as Peter G. Mode (in 1930), argued that churches adapted to the characteristics of the frontier, creating new denominations such as the Mormons, the Church of Christ, the Disciples of Christ, and the Cumberland Presbyterians. The frontier, they argued, shaped uniquely American institutions such as revivals, camp meetings, and itinerant preaching. This view dominated religious historiography for decades. Moos (2002) shows that the 1910s to 1940s black filmmaker and novelist Oscar Micheaux incorporated Turner's frontier thesis into his work. Micheaux promoted the West as a place where blacks could experience less institutionalized forms of racism and earn economic success through hard work and perseverance.

Slatta (2001) argues that the widespread popularization of Turner's frontier thesis influenced popular histories, motion pictures, and novels, which characterize the West in terms of individualism, frontier violence, and rough justice. Disneyland's Frontierland of the mid to late 20th century reflected the myth of rugged individualism that celebrated what was perceived to be the American heritage. The public has ignored academic historians' anti-Turnerian models, largely because they conflict with and often destroy the icons of Western heritage. However, the work of historians during the 1980s–1990s, some of whom sought to bury Turner's conception of the frontier and others of whom sought to spare the concept but with nuance, has done much to place Western myths in context.

A 2020 study in Econometrica found empirical support for the frontier thesis, showing that frontier experience had a causal impact on individualism.

Early anti-Turnerian thought

Though Turner's work was massively popular in its time and for decades after, it received significant intellectual pushback in the midst of World War II. This quote from Turner's The Frontier in American History is arguably the most famous statement of his work and, to later historians, the most controversial:

American democracy was born of no theorist's dream; it was not carried in the Susan Constant to Virginia, nor in the Mayflower to Plymouth. It came out of the American forest, and it gained new strength each time it touched a new frontier. Not the constitution but free land and an abundance of natural resources open to a fit people, made the democratic type of society in America for three centuries while it occupied its empire.

This assertion's racial overtones concerned historians as Adolf Hitler and the Blood and soil ideology, stoking racial and destructive enthusiasm, rose to power in Germany. An example of this concern is in George Wilson Pierson’s influential essay on the frontier. He asked why the Turnerian American character was limited to the Thirteen Colonies that went on to form the United States, and why the frontier did not produce that same character among pre-Columbian Native Americans and Spaniards in the New World.

Despite the work of Pierson and other scholars, Turner's influence did not end during World War II or even after the war. Indeed, his influence was felt in American classrooms until the 1970s and 1980s.

New frontiers

Subsequent critics, historians, and politicians have suggested that other 'frontiers,' such as scientific innovation, could serve similar functions in American development. Historians have noted that John F. Kennedy in the early 1960s explicitly called upon the ideas of the frontier. At his acceptance speech upon securing the Democratic Party nomination for U.S. president on July 15, 1960, Kennedy called out to the American people, "I am asking each of you to be new pioneers on that New Frontier. My call is to the young in heart, regardless of age—to the stout in spirit, regardless of party." Mathiopoulos notes that he "cultivated this resurrection of frontier ideology as a motto of progress ('getting America moving') throughout his term of office." He promoted his political platform as the "New Frontier," with a particular emphasis on space exploration and technology. Limerick points out that Kennedy assumed that "the campaigns of the Old Frontier had been successful, and morally justified." The frontier metaphor thus maintained its rhetorical ties to American social progress.

Fermilab

Adrienne Kolb and Lillian Hoddeson argue that during the heyday of Kennedy's "New Frontier," the physicists who built Fermilab explicitly sought to recapture the excitement of the old frontier. They argue that, "Frontier imagery motivates Fermilab physicists, and a rhetoric remarkably similar to that of Turner helped them secure support for their research." Rejecting the East and West coast lifestyles that most scientists preferred, they selected a Chicago suburb on the prairie as the location of the lab. A small herd of American bison was started at the lab's founding to symbolize Fermilab's presence on the frontier of physics and its connection to the American prairie. The bison herd still lives on the grounds of Fermilab. Architecturally, the lab's designers rejected the militaristic design of Los Alamos and Brookhaven as well as the academic architecture of the Lawrence Berkeley National Laboratory and the Stanford Linear Accelerator Center. Instead, Fermilab's planners sought to return to Turnerian themes. They emphasized the values of individualism, empiricism, simplicity, equality, courage, discovery, independence, and naturalism in the service of democratic access, human rights, ecological balance, and the resolution of social, economic, and political issues. Milton Stanley Livingston, the lab's associate director, said in 1968, "The frontier of high energy and the infinitesimally small is a challenge to the mind of man. If we can reach and cross this frontier, our generations will have furnished a significant milestone in human history."