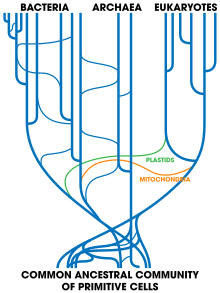

Common descent is a concept in evolutionary biology applicable when one species is the ancestor of two or more species later in time. According to modern evolutionary biology, all living beings could be descendants of a unique ancestor commonly referred to as the last universal common ancestor (LUCA) of all life on Earth.

Common descent is an effect of speciation, in which multiple species derive from a single ancestral population. The more recent the ancestral population two species have in common, the more closely they are related. The most recent common ancestor of all currently living organisms is the last universal common ancestor, which lived about 3.9 billion years ago. The two earliest pieces of evidence for life on Earth are graphite found to be biogenic in 3.7 billion-year-old metasedimentary rocks discovered in western Greenland and microbial mat fossils found in 3.48 billion-year-old sandstone discovered in Western Australia. All currently living organisms on Earth share a common genetic heritage, though the suggestion of substantial horizontal gene transfer during early evolution has led to questions about the monophyly (single ancestry) of life. Some 6,331 groups of genes common to all living animals have been identified; these may have arisen from a single common ancestor that lived 650 million years ago in the Precambrian.

Universal common descent through an evolutionary process was first proposed by the British naturalist Charles Darwin in the concluding sentence of his 1859 book On the Origin of Species:

There is grandeur in this view of life, with its several powers, having been originally breathed into a few forms or into one; and that, whilst this planet has gone cycling on according to the fixed law of gravity, from so simple a beginning endless forms most beautiful and most wonderful have been, and are being, evolved.

History

The idea that all living things are related, together with things that science considers non-living, is a recurring theme in many indigenous worldviews around the world. In the 1740s, the French mathematician Pierre Louis Maupertuis arrived at the idea that all organisms had a common ancestor and had diverged through random variation and natural selection. In Essai de cosmologie (1750), Maupertuis noted:

May we not say that, in the fortuitous combination of the productions of Nature, since only those creatures could survive in whose organizations a certain degree of adaptation was present, there is nothing extraordinary in the fact that such adaptation is actually found in all these species which now exist? Chance, one might say, turned out a vast number of individuals; a small proportion of these were organized in such a manner that the animals' organs could satisfy their needs. A much greater number showed neither adaptation nor order; these last have all perished.... Thus the species which we see today are but a small part of all those that a blind destiny has produced.

In 1790, the philosopher Immanuel Kant wrote in Kritik der Urteilskraft (Critique of Judgment) that the similarity of animal forms implies a common original type, and thus a common parent.

In 1794, Charles Darwin's grandfather, Erasmus Darwin, asked:

[W]ould it be too bold to imagine, that in the great length of time, since the earth began to exist, perhaps millions of ages before the commencement of the history of mankind, would it be too bold to imagine, that all warm-blooded animals have arisen from one living filament, which the great First Cause endued with animality, with the power of acquiring new parts attended with new propensities, directed by irritations, sensations, volitions, and associations; and thus possessing the faculty of continuing to improve by its own inherent activity, and of delivering down those improvements by generation to its posterity, world without end?

Charles Darwin's views about common descent, as expressed in On the Origin of Species, were that it was probable that there was only one progenitor for all life forms:

Therefore I should infer from analogy that probably all the organic beings which have ever lived on this earth have descended from some one primordial form, into which life was first breathed.

He prefaces that remark, however, with: "Analogy would lead me one step further, namely, to the belief that all animals and plants have descended from some one prototype. But analogy may be a deceitful guide." In the subsequent edition, he asserts instead:

"We do not know all the possible transitional gradations between the simplest and the most perfect organs; it cannot be pretended that we know all the varied means of Distribution during the long lapse of years, or that we know how imperfect the Geological Record is. Grave as these several difficulties are, in my judgment they do not overthrow the theory of descent from a few created forms with subsequent modification".

Common descent was widely accepted amongst the scientific community after Darwin's publication. In 1907, Vernon Kellogg commented that "practically no naturalists of position and recognized attainment doubt the theory of descent."

In 2008, biologist T. Ryan Gregory noted that:

No reliable observation has ever been found to contradict the general notion of common descent. It should come as no surprise, then, that the scientific community at large has accepted evolutionary descent as a historical reality since Darwin’s time and considers it among the most reliably established and fundamentally important facts in all of science.

Evidence

Common biochemistry

All known forms of life are based on the same fundamental biochemical organization: genetic information is encoded in DNA, transcribed into RNA through the action of protein and RNA enzymes, and then translated into proteins by highly similar ribosomes, with ATP, NADPH and other molecules serving as energy sources. Analysis of small sequence differences in widely shared substances such as cytochrome c further supports universal common descent. Some 23 proteins are found in all organisms, serving as enzymes that carry out core functions such as DNA replication. The fact that only one such set of enzymes exists is convincing evidence of a single ancestry. In addition, 6,331 groups of genes common to all living animals have been identified; these may have arisen from a single common ancestor that lived 650 million years ago in the Precambrian.

Common genetic code

Standard genetic code (DNA codons). Each amino acid is labeled as nonpolar, polar, basic or acidic; stop codons are marked Stop.

| 1st base | 2nd base T | 2nd base C | 2nd base A | 2nd base G |
|---|---|---|---|---|
| T | TTT, TTC: Phenylalanine (nonpolar); TTA, TTG: Leucine (nonpolar) | TCT, TCC, TCA, TCG: Serine (polar) | TAT, TAC: Tyrosine (polar); TAA, TAG: Stop | TGT, TGC: Cysteine (polar); TGA: Stop; TGG: Tryptophan (nonpolar) |
| C | CTT, CTC, CTA, CTG: Leucine (nonpolar) | CCT, CCC, CCA, CCG: Proline (nonpolar) | CAT, CAC: Histidine (basic); CAA, CAG: Glutamine (polar) | CGT, CGC, CGA, CGG: Arginine (basic) |
| A | ATT, ATC, ATA: Isoleucine (nonpolar); ATG: Methionine (nonpolar) | ACT, ACC, ACA, ACG: Threonine (polar) | AAT, AAC: Asparagine (polar); AAA, AAG: Lysine (basic) | AGT, AGC: Serine (polar); AGA, AGG: Arginine (basic) |
| G | GTT, GTC, GTA, GTG: Valine (nonpolar) | GCT, GCC, GCA, GCG: Alanine (nonpolar) | GAT, GAC: Aspartic acid (acidic); GAA, GAG: Glutamic acid (acidic) | GGT, GGC, GGA, GGG: Glycine (nonpolar) |

The genetic code (the "translation table" according to which DNA information is translated into amino acids, and hence proteins) is nearly identical for all known lifeforms, from bacteria and archaea to animals and plants. The universality of this code is generally regarded by biologists as definitive evidence in favor of universal common descent.

The way that codons (DNA triplets) are mapped to amino acids seems to be strongly optimised. Richard Egel argues that in particular the hydrophobic (non-polar) side-chains are well organised, suggesting that these enabled the earliest organisms to create peptides with water-repelling regions able to support the essential electron exchange (redox) reactions for energy transfer.
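The codon-to-amino-acid mapping described in this section can be sketched in a few lines of Python. This is only an illustration of how the standard code is applied, not a description of any particular tool: the names `CODON_TABLE` and `translate` are chosen here for the example, the amino acids are written as one-letter codes, and `*` stands for a stop codon.

```python
from itertools import product

# Standard genetic code, listed in T, C, A, G order for each codon position.
# One-letter amino acid codes; "*" marks a stop codon.
BASES = "TCAG"
AMINO_ACIDS = (
    "FFLLSSSSYY**CC*W"  # first base T
    "LLLLPPPPHHQQRRRR"  # first base C
    "IIIMTTTTNNKKSSRR"  # first base A
    "VVVVAAAADDEEGGGG"  # first base G
)
CODON_TABLE = {
    "".join(codon): aa
    for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(dna):
    """Translate a DNA coding sequence, stopping at the first stop codon."""
    dna = dna.upper()
    protein = []
    # Ignore any trailing bases that do not form a complete codon.
    for i in range(0, len(dna) - len(dna) % 3, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


print(translate("ATGGCCTGA"))  # Met, Ala, then a stop codon
```

Because the same table applies, with minor variations, to nearly all known organisms, a routine like this would in principle read a bacterial gene and an animal gene in the same way.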

Selectively neutral similarities

Similarities which have no adaptive relevance cannot be explained by convergent evolution, and therefore they provide compelling support for universal common descent. Such evidence has come from two areas: amino acid sequences and DNA sequences. Proteins with the same three-dimensional structure need not have identical amino acid sequences; any irrelevant similarity between the sequences is evidence for common descent. In certain cases, there are several codons (DNA triplets) that code redundantly for the same amino acid. Since many species use the same codon at the same place to specify an amino acid that can be represented by more than one codon, that is evidence for their sharing a recent common ancestor. Had the amino acid sequences come from different ancestors, they would have been coded for by any of the redundant codons, and since the correct amino acids would already have been in place, natural selection would not have driven any change in the codons, however much time was available. Genetic drift could change the codons, but it would be extremely unlikely to make all the redundant codons in a whole sequence match exactly across multiple lineages. Similarly, shared nucleotide sequences, especially where these are apparently neutral such as the positioning of introns and pseudogenes, provide strong evidence of common ancestry.
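The redundant-codon argument can be made concrete with a small calculation. The sketch below compares two coding sequences and counts how often they use the identical codon at sites where the encoded amino acid could have been written with more than one codon. It then compares that count with the number expected if each lineage had chosen among the synonymous codons independently and uniformly. The uniform-choice model is a simplifying assumption made here for illustration (real genomes show codon usage bias), and the function names and toy sequence are hypothetical.

```python
from collections import Counter
from itertools import product

# Standard genetic code in T, C, A, G order; "*" marks a stop codon.
BASES = "TCAG"
AMINO_ACIDS = (
    "FFLLSSSSYY**CC*W"
    "LLLLPPPPHHQQRRRR"
    "IIIMTTTTNNKKSSRR"
    "VVVVAAAADDEEGGGG"
)
CODON_TABLE = {
    "".join(codon): aa
    for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}
# Number of codons that encode each amino acid (its degeneracy).
DEGENERACY = Counter(CODON_TABLE.values())


def codons(dna):
    """Split a DNA string into complete codons."""
    return [dna[i:i + 3] for i in range(0, len(dna) - len(dna) % 3, 3)]


def synonymous_match(seq_a, seq_b):
    """Return (observed, expected) identical codons at redundant sites.

    Only sites where both sequences encode the same amino acid, and that
    amino acid has more than one codon, are counted. The expected value
    assumes independent, uniform codon choice (an illustrative assumption).
    """
    observed = 0
    expected = 0.0
    for codon_a, codon_b in zip(codons(seq_a), codons(seq_b)):
        amino_acid = CODON_TABLE[codon_a]
        if amino_acid != CODON_TABLE[codon_b] or DEGENERACY[amino_acid] == 1:
            continue
        observed += codon_a == codon_b
        # Two independent uniform picks among k codons agree with chance 1/k.
        expected += 1 / DEGENERACY[amino_acid]
    return observed, expected


# Toy example: Ala, Leu, Ser, Gly written with identical codons in both.
observed, expected = synonymous_match("GCTCTGTCTGGT", "GCTCTGTCTGGT")
print(observed, round(expected, 3))
```

Under these assumptions, an observed count far above the expected one across a long sequence is what the paragraph above describes as hard to explain without shared ancestry.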

Other similarities

Biologists often point to the universality of many aspects of cellular life as evidence that supports the more compelling evidence listed above. These similarities include the energy carrier adenosine triphosphate (ATP) and the fact that all amino acids found in proteins are left-handed. It is, however, possible that these similarities arose from the laws of physics and chemistry rather than through universal common descent, and are therefore the result of convergent evolution. In contrast, there is evidence for homology of the central subunits of transmembrane ATPases throughout all living organisms, especially in how the rotating elements are bound to the membrane. This supports the assumption that the LUCA was a cellular organism, although primordial membranes may have been semipermeable and may have evolved later into the membranes of modern bacteria and, on a second path, into those of modern archaea.

Phylogenetic trees

Another important piece of evidence is from detailed phylogenetic trees (i.e., "genealogic trees" of species) mapping out the proposed divisions and common ancestors of all living species. In 2010, Douglas L. Theobald published a statistical analysis of available genetic data, mapping them to phylogenetic trees, that gave "strong quantitative support, by a formal test, for the unity of life."

Traditionally, these trees have been built from morphological evidence, such as appearance and embryology. More recently, it has become possible to construct them from molecular data, based on similarities and differences between genetic and protein sequences. All these methods produce essentially similar results, even though most genetic variation has no influence over external morphology. That phylogenetic trees based on different types of information agree with each other is strong evidence of a real underlying common descent.
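As a rough illustration of how a tree can be built from sequence differences, the sketch below applies UPGMA, a simple distance-based clustering method, to four short aligned sequences. UPGMA is chosen here only because it is compact; the source does not say which methods the cited studies used. The taxon names and sequences are invented toy data, not real measurements.

```python
from itertools import combinations


def hamming(a, b):
    """Count the positions at which two aligned sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def upgma(seqs):
    """Build a tree (nested tuples) from a dict of aligned sequences."""
    # Each cluster id maps to (subtree, number of leaves in the subtree).
    clusters = {i: (name, 1) for i, name in enumerate(seqs)}
    dist = {
        (i, j): hamming(seqs[clusters[i][0]], seqs[clusters[j][0]])
        for i, j in combinations(clusters, 2)
    }
    next_id = len(clusters)
    while len(clusters) > 1:
        # Join the two closest clusters.
        i, j = min(dist, key=dist.get)
        (tree_i, n_i), (tree_j, n_j) = clusters.pop(i), clusters.pop(j)
        # Distance from the merged cluster to every other cluster is the
        # size-weighted average of the two old distances.
        for k in clusters:
            d_ik = dist.pop((min(i, k), max(i, k)))
            d_jk = dist.pop((min(j, k), max(j, k)))
            dist[(k, next_id)] = (n_i * d_ik + n_j * d_jk) / (n_i + n_j)
        del dist[(i, j)]
        clusters[next_id] = ((tree_i, tree_j), n_i + n_j)
        next_id += 1
    (tree, _), = clusters.values()
    return tree


# Hypothetical aligned sequences for four made-up taxa.
toy_seqs = {
    "A": "ACGTACGTAC",
    "B": "ACGTACGTAT",
    "C": "ACGAACTTAT",
    "D": "TCGAAGTTTT",
}
print(upgma(toy_seqs))  # ('D', ('C', ('A', 'B')))
```

In this toy case A and B, which differ at a single position, are joined first, and D, the most divergent sequence, branches off earliest. Agreement between a tree like this and one built from independent data is the kind of concordance the paragraph above describes.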

Objections

Gene exchange clouds phylogenetic analysis

Theobald noted that substantial horizontal gene transfer could have occurred during early evolution. Bacteria today remain capable of gene exchange between distantly related lineages. This weakens the basic assumption of phylogenetic analysis, that similarity of genomes implies common ancestry, because sufficient gene exchange would allow lineages to share much of their genome whether or not they shared an ancestor (monophyly). This has led to questions about the single ancestry of life. However, biologists consider it very unlikely that completely unrelated proto-organisms could have exchanged genes, as their different coding mechanisms would have produced only garbled output rather than functioning systems. Later, however, many organisms all derived from a single ancestor could readily have shared genes that all worked in the same way, and it appears that they have.

Convergent evolution

If early organisms had been driven by the same environmental conditions to evolve similar biochemistry convergently, they might independently have acquired similar genetic sequences. Theobald's "formal test" was accordingly criticised by Takahiro Yonezawa and colleagues for not including consideration of convergence. They argued that Theobald's test was insufficient to distinguish between the competing hypotheses. Theobald has defended his method against this claim, arguing that his tests distinguish between phylogenetic structure and mere sequence similarity. Therefore, Theobald argued, his results show that "real universally conserved proteins are homologous."

RNA world

The possibility is mentioned above that all living organisms may be descended from an original single-celled organism with a DNA genome, and that this implies a single origin for life. Although such a universal common ancestor may have existed, so complex an entity is unlikely to have arisen spontaneously from non-life, and thus a cell with a DNA genome cannot reasonably be regarded as the “origin” of life. To understand the “origin” of life, it has been proposed that DNA-based cellular life descended from relatively simple pre-cellular self-replicating RNA molecules able to undergo natural selection. During the course of evolution, this RNA world was replaced by the DNA world. A world of independently self-replicating RNA genomes apparently no longer exists (RNA viruses are dependent on host cells with DNA genomes). Because the RNA world is apparently gone, it is not clear how scientific evidence could be brought to bear on the question of whether there was a single “origin” of life event from which all life descended.