From Wikipedia, the free encyclopedia

Bioinorganic chemistry is a field that examines the role of metals in biology. It includes the study of both natural phenomena, such as the behavior of metalloproteins, and artificially introduced metals, including those that are non-essential, in medicine and toxicology. Many biological processes, such as respiration, depend upon molecules that fall within the realm of inorganic chemistry. The discipline also includes the study of inorganic models or mimics that imitate the behavior of metalloproteins.[1]

As a mix of biochemistry and inorganic chemistry, bioinorganic chemistry is important in elucidating the implications of electron-transfer proteins, substrate binding and activation, and atom and group transfer chemistry, as well as metal properties in biological chemistry.

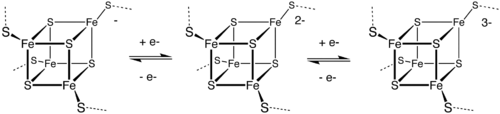

Composition of living organisms

About 99% of mammals' mass consists of the elements carbon, nitrogen, calcium, sodium, chlorine, potassium, hydrogen, phosphorus, oxygen and sulfur.[2] The organic compounds (proteins, lipids and carbohydrates) contain the majority of the carbon and nitrogen, and most of the oxygen and hydrogen is present as water.[2] The entire collection of metal-containing biomolecules in a cell is called the metallome.

History

Paul Ehrlich used organoarsenic compounds (“arsenicals”) for the treatment of syphilis, demonstrating the relevance of metals, or at least metalloids, to medicine. That relevance blossomed with Rosenberg’s discovery of the anti-cancer activity of cisplatin (cis-PtCl2(NH3)2). The first protein ever crystallized (see James B. Sumner) was urease, later shown to contain nickel at its active site. Vitamin B12, the cure for pernicious anemia, was shown crystallographically by Dorothy Crowfoot Hodgkin to consist of a cobalt in a corrin macrocycle. The Watson-Crick structure for DNA demonstrated the key structural role played by phosphate-containing polymers.

Themes in bioinorganic chemistry

Several distinct systems are identifiable in bioinorganic chemistry. Major areas include:

Metal ion transport and storage

This topic covers a diverse collection of ion channels, ion pumps (e.g. Na+/K+-ATPase), vacuoles, siderophores, and other proteins and small molecules that control the concentration of metal ions in cells. One issue is that many metals that are metabolically required are not readily available owing to low solubility or scarcity. Organisms have developed a number of strategies for collecting such elements and transporting them.

Enzymology

Many reactions in the life sciences involve water, and metal ions are often at the catalytic centers (active sites) of the enzymes that catalyze them, i.e. these enzymes are metalloproteins. Often the reacting water is a ligand (see metal aquo complex). Examples of hydrolase enzymes are carbonic anhydrase, metallophosphatases, and metalloproteinases. Bioinorganic chemists seek to understand and replicate the function of these metalloproteins.

Metal-containing electron transfer proteins are also common. They can be organized into three major classes: iron-sulfur proteins (such as rubredoxins, ferredoxins, and Rieske proteins), blue copper proteins, and cytochromes. These electron transport proteins are complementary to the non-metal electron transporters nicotinamide adenine dinucleotide (NAD) and flavin adenine dinucleotide (FAD). The nitrogen cycle makes extensive use of metals for its redox interconversions.

Oxygen transport and activation proteins

Aerobic life makes extensive use of metals such as iron, copper, and manganese. Heme is utilized by red blood cells in the form of hemoglobin for oxygen transport and is perhaps the most recognized metal system in biology. Other oxygen transport systems include myoglobin, hemocyanin, and hemerythrin. Oxidases and oxygenases are metal systems found throughout nature that take advantage of oxygen to carry out important reactions such as energy generation in cytochrome c oxidase or small molecule oxidation in cytochrome P450 oxidases or methane monooxygenase. Some metalloproteins are designed to protect a biological system from the potentially harmful effects of oxygen and other reactive oxygen-containing molecules such as hydrogen peroxide. These systems include peroxidases, catalases, and superoxide dismutases. A complementary metalloprotein to those that react with oxygen is the oxygen-evolving complex present in plants. This system is part of the complex protein machinery that produces oxygen as plants perform photosynthesis.

Bioorganometallic chemistry

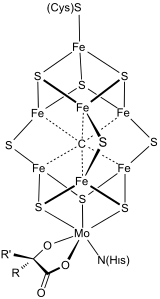

Bioorganometallic systems feature metal-carbon bonds as structural elements or as intermediates. Bioorganometallic enzymes and proteins include the hydrogenases, FeMoco in nitrogenase, and methylcobalamin. These are naturally occurring organometallic compounds. This area is more focused on the utilization of metals by unicellular organisms. Bioorganometallic compounds are significant in environmental chemistry.[3]

Metals in medicine

A number of drugs contain metals. This theme relies on the study of the design and mechanism of action of metal-containing pharmaceuticals, and of compounds that interact with endogenous metal ions in enzyme active sites. The most widely used anti-cancer drug is cisplatin. MRI contrast agents commonly contain gadolinium. Lithium carbonate has been used to treat the manic phase of bipolar disorder. Gold antiarthritic drugs, e.g. auranofin, have been commercialized. Carbon monoxide-releasing molecules are metal complexes that have been developed to suppress inflammation by releasing small amounts of carbon monoxide. The cardiovascular and neuronal importance of nitric oxide has been examined, including the enzyme nitric oxide synthase. (See also: nitrogen assimilation.)

Environmental chemistry

Environmental chemistry traditionally emphasizes the interaction of heavy metals with organisms. Methylmercury caused a major disaster known as Minamata disease. Arsenic poisoning is a widespread problem owing largely to arsenic contamination of groundwater, which affects many millions of people in developing countries. The metabolism of mercury- and arsenic-containing compounds involves cobalamin-based enzymes.

Biomineralization

Biomineralization is the process by which living organisms produce minerals, often to harden or stiffen existing tissues. Such tissues are called mineralized tissues.[4][5][6] Examples include silicates in algae and diatoms, carbonates in invertebrates, and calcium phosphates and carbonates in vertebrates. Other examples include copper, iron and gold deposits involving bacteria. Biologically-formed minerals often have special uses such as magnetic sensors in magnetotactic bacteria (Fe3O4), gravity sensing devices (CaCO3, CaSO4, BaSO4) and iron storage and mobilization (Fe2O3•H2O in the protein ferritin). Because extracellular[7] iron is strongly involved in inducing calcification,[8][9] its control is essential in developing shells; the protein ferritin plays an important role in controlling the distribution of iron.[10]

Types of inorganic elements in biology

Alkali and alkaline earth metals

The abundant inorganic elements act as ionic electrolytes. The most important ions are sodium, potassium, calcium, magnesium, chloride, phosphate, and the organic ion bicarbonate. The maintenance of precise gradients across cell membranes maintains osmotic pressure and pH.[12] Ions are also critical for nerves and muscles, as action potentials in these tissues are produced by the exchange of electrolytes between the extracellular fluid and the cytosol.[13] Electrolytes enter and leave cells through proteins in the cell membrane called ion channels. For example, muscle contraction depends upon the movement of calcium, sodium and potassium through ion channels in the cell membrane and T-tubules.[14]
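The link between ion gradients and membrane voltage can be made concrete with the Nernst equation, E = (RT / zF) ln([ion]out / [ion]in), which gives the equilibrium potential for a single ion species. The Python sketch below is illustrative only: the function name `nernst_potential_mv`, the table `ILLUSTRATIVE_IONS`, and the concentrations in it are assumptions chosen to resemble typical textbook values for a mammalian cell, not data from the sources cited above.

```python
import math

R = 8.314462618   # molar gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_potential_mv(conc_out_mm, conc_in_mm, charge, temp_k=310.15):
    """Equilibrium (Nernst) potential in mV, inside relative to outside.

    conc_out_mm, conc_in_mm: extracellular and cytosolic concentrations (mM).
    charge: signed ion valence, e.g. +1 for K+, -1 for Cl-.
    temp_k: absolute temperature; the default 310.15 K is about 37 degrees C.
    """
    if conc_out_mm <= 0 or conc_in_mm <= 0:
        raise ValueError("concentrations must be positive")
    if charge == 0:
        raise ValueError("charge must be non-zero")
    volts = (R * temp_k) / (charge * F) * math.log(conc_out_mm / conc_in_mm)
    return 1000.0 * volts


# Assumed, illustrative concentrations in mM: (outside, inside, valence).
ILLUSTRATIVE_IONS = {
    "K+": (5.0, 140.0, +1),
    "Na+": (145.0, 12.0, +1),
    "Cl-": (110.0, 10.0, -1),
}

if __name__ == "__main__":
    for ion, (outside, inside, z) in ILLUSTRATIVE_IONS.items():
        print(f"{ion}: {nernst_potential_mv(outside, inside, z):.0f} mV")
```

With values like these, potassium comes out strongly negative and sodium positive, which is consistent with the opposing gradients that action potentials draw on. A real membrane potential depends on several ions and their permeabilities at once, so the single-ion result is only a first approximation.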