AFM image of naphthalenetetracarboxylic diimide molecules on silver-terminated silicon, interacting via hydrogen bonding, taken at 77 K.[1] ("Hydrogen bonds" in the top image are exaggerated by artifacts of the imaging technique.[2][3])

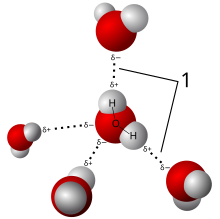

Model of hydrogen bonds (1) between molecules of water

A hydrogen bond is the electrostatic attraction between two polar groups that occurs when a hydrogen (H) atom covalently bound to a highly electronegative atom such as nitrogen (N), oxygen (O), or fluorine (F) experiences the electrostatic field of another highly electronegative atom nearby.

Hydrogen bonds can occur between molecules (intermolecular) or within different parts of a single molecule (intramolecular).[4] Depending on geometry and environment, the hydrogen bond free energy content is between 1 and 5 kcal/mol. This makes it stronger than a van der Waals interaction, but weaker than covalent or ionic bonds. This type of bond can occur in inorganic molecules such as water and in organic molecules like DNA and proteins.

Intermolecular hydrogen bonding is responsible for the high boiling point of water (100 °C) compared to the other group 16 hydrides that have much weaker hydrogen bonds.[5] Intramolecular hydrogen bonding is partly responsible for the secondary and tertiary structures of proteins and nucleic acids. It also plays an important role in the structure of polymers, both synthetic and natural.

In 2011, an IUPAC Task Group recommended a modern evidence-based definition of hydrogen bonding, which was published in the IUPAC journal Pure and Applied Chemistry. This definition specifies:

The hydrogen bond is an attractive interaction between a hydrogen atom from a molecule or a molecular fragment X–H in which X is more electronegative than H, and an atom or a group of atoms in the same or a different molecule, in which there is evidence of bond formation.[6]

An accompanying detailed technical report provides the rationale behind the new definition.[7]

Bonding

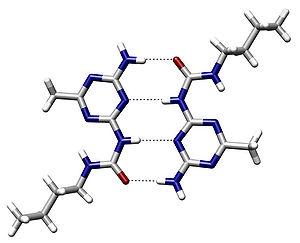

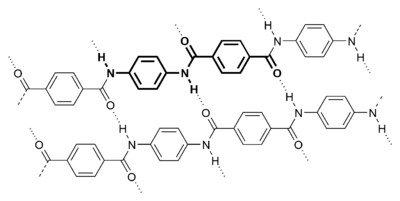

An example of intermolecular hydrogen bonding in a self-assembled dimer complex reported by Meijer and coworkers.[8] The hydrogen bonds are represented by dotted lines.

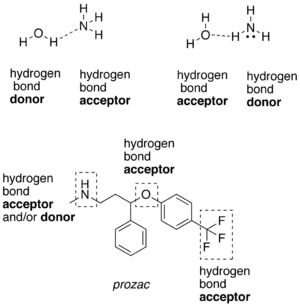

A hydrogen atom attached to a relatively electronegative atom will play the role of the hydrogen bond donor.[9] This electronegative atom is usually fluorine, oxygen, or nitrogen. A hydrogen attached to carbon can also participate in hydrogen bonding when the carbon atom is bound to electronegative atoms, as is the case in chloroform, CHCl3.[10][11][12] An example of a hydrogen bond donor is the hydrogen from the hydroxyl group of ethanol, which is bonded to an oxygen.

In a hydrogen bond, the electronegative atom not covalently attached to the hydrogen is called the proton acceptor, whereas the one covalently bound to the hydrogen is called the proton donor.

Examples of hydrogen bond donating (donors) and hydrogen bond accepting groups (acceptors)

Cyclic dimer of acetic acid; dashed green lines represent hydrogen bonds

In the donor molecule, the electronegative atom draws electron density away from the hydrogen nucleus and, by shifting the electron cloud toward itself, leaves the hydrogen atom with a partial positive charge. Because hydrogen is small relative to other atoms and molecules, the resulting charge, though only partial, represents a large charge density. A hydrogen bond results when this strong positive charge density attracts a lone pair of electrons on another heteroatom, which then becomes the hydrogen-bond acceptor.

The hydrogen bond is often described as an electrostatic dipole-dipole interaction. However, it also has some features of covalent bonding: it is directional and strong, produces interatomic distances shorter than the sum of the van der Waals radii, and usually involves a limited number of interaction partners, which can be interpreted as a type of valence. These covalent features are more substantial when acceptors bind hydrogens from more electronegative donors.

The partially covalent nature of a hydrogen bond raises the following questions: "To which molecule or atom does the hydrogen nucleus belong?" and "Which should be labeled 'donor' and which 'acceptor'?" Usually, this is simple to determine on the basis of interatomic distances in the X−H···Y system, where the dots represent the hydrogen bond: the X−H distance is typically ≈110 pm, whereas the H···Y distance is ≈160 to 200 pm. Liquids that display hydrogen bonding (such as water) are called associated liquids.
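The distance criterion described above can be sketched as a small Python function. This is a minimal illustration under stated assumptions: the function name `assign_donor_acceptor`, the use of picometre coordinates, and the rule "the heavy atom nearer to the hydrogen is the donor" are choices made for this example, and the ≈110 pm and ≈160 to 200 pm figures are treated only as rough guides, not strict cutoffs.

```python
import math

# Hypothetical helper (not from any library): coordinates are (x, y, z) in picometres.
Point = tuple[float, float, float]


def assign_donor_acceptor(h: Point, atoms: dict[str, Point]) -> tuple[str, str, float, float]:
    """Label the two heavy atoms flanking a hydrogen in an X-H...Y system.

    The atom closer to H is taken as the donor X (short covalent X-H contact);
    the farther one is taken as the acceptor Y (longer H...Y contact).
    Returns (donor, acceptor, X-H distance, H...Y distance).
    """
    if len(atoms) != 2:
        raise ValueError("expected exactly two heavy atoms")
    (name1, p1), (name2, p2) = atoms.items()
    d1, d2 = math.dist(h, p1), math.dist(h, p2)
    if d1 <= d2:
        return name1, name2, d1, d2
    return name2, name1, d2, d1


if __name__ == "__main__":
    # Hypothetical linear O-H...O geometry along the x axis.
    donor, acceptor, d_xh, d_hy = assign_donor_acceptor(
        (100.0, 0.0, 0.0), {"O2": (280.0, 0.0, 0.0), "O1": (0.0, 0.0, 0.0)}
    )
    print(donor, acceptor, d_xh, d_hy)  # O1 O2 100.0 180.0
    # Rough plausibility check against the typical H...Y range quoted in the text.
    print(160.0 <= d_hy <= 200.0)  # True
```

The order in which the two atoms are supplied does not matter; only their distances to the hydrogen decide the labels.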

Hydrogen bonds can vary in strength from very weak (1–2 kJ mol−1) to extremely strong (161.5 kJ mol−1 in the ion HF2−).[13][14] Typical enthalpies in vapor include:

- F−H···:F (161.5 kJ/mol or 38.6 kcal/mol)

- O−H···:N (29 kJ/mol or 6.9 kcal/mol)

- O−H···:O (21 kJ/mol or 5.0 kcal/mol)

- N−H···:N (13 kJ/mol or 3.1 kcal/mol)

- N−H···:O (8 kJ/mol or 1.9 kcal/mol)

- HO−H···:OH3+ (18 kJ/mol[15] or 4.3 kcal/mol; data obtained using molecular dynamics as detailed in the reference, to be compared with 7.9 kJ/mol for bulk water obtained using the same molecular dynamics method.)
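The kJ/mol and kcal/mol figures in the list above are related by the thermochemical calorie, 1 kcal = 4.184 kJ. A minimal Python check using the values from the list (the dictionary keys are ASCII stand-ins for the bond labels, chosen for this example):

```python
# 1 thermochemical kilocalorie = 4.184 kilojoules
KJ_PER_KCAL = 4.184


def kj_to_kcal(kj_per_mol: float) -> float:
    """Convert an energy from kJ/mol to kcal/mol."""
    return kj_per_mol / KJ_PER_KCAL


# Vapor-phase hydrogen-bond enthalpies from the list above, in kJ/mol.
ENTHALPIES_KJ = {
    "F-H...:F": 161.5,
    "O-H...:N": 29.0,
    "O-H...:O": 21.0,
    "N-H...:N": 13.0,
    "N-H...:O": 8.0,
    "HO-H...:OH3+": 18.0,
}

if __name__ == "__main__":
    for bond, kj in ENTHALPIES_KJ.items():
        print(f"{bond}: {kj} kJ/mol = {kj_to_kcal(kj):.1f} kcal/mol")
```

Rounded to one decimal place, each converted value reproduces the kcal/mol figure given in the list.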

The length of hydrogen bonds depends on bond strength, temperature, and pressure. The bond strength itself is dependent on temperature, pressure, bond angle, and environment (usually characterized by local dielectric constant). The typical length of a hydrogen bond in water is 197 pm. The ideal bond angle depends on the nature of the hydrogen bond donor. The following hydrogen bond angles between a hydrofluoric acid donor and various acceptors have been determined experimentally:[17]

| Acceptor···donor | VSEPR geometry | Angle (°) |
|---|---|---|
| HCN···HF | linear | 180 |
| H2CO···HF | trigonal planar | 120 |
| H2O···HF | pyramidal | 46 |
| H2S···HF | pyramidal | 89 |
| SO2···HF | trigonal | 142 |

History

In the book The Nature of the Chemical Bond, Linus Pauling credits T. S. Moore and T. F. Winmill with the first mention of the hydrogen bond, in 1912.[18][19] Moore and Winmill used the hydrogen bond to account for the fact that trimethylammonium hydroxide is a weaker base than tetramethylammonium hydroxide. The description of hydrogen bonding in its better-known setting, water, came some years later, in 1920, from Latimer and Rodebush.[20] In that paper, Latimer and Rodebush cite work by a fellow scientist at their laboratory, Maurice Loyal Huggins, saying, "Mr. Huggins of this laboratory in some work as yet unpublished, has used the idea of a hydrogen kernel held between two atoms as a theory in regard to certain organic compounds."

Hydrogen bonds in water

Crystal structure of hexagonal ice. Gray dashed lines indicate hydrogen bonds

The most ubiquitous and perhaps simplest example of a hydrogen bond is found between water molecules. In a discrete water molecule, there are two hydrogen atoms and one oxygen atom. Two molecules of water can form a hydrogen bond between them; the simplest case, when only two molecules are present, is called the water dimer and is often used as a model system. When more molecules are present, as is the case with liquid water, more bonds are possible because the oxygen of one water molecule has two lone pairs of electrons, each of which can form a hydrogen bond with a hydrogen on another water molecule. This can repeat such that every water molecule is H-bonded with up to four other molecules, as shown in the figure (two through its two lone pairs, and two through its two hydrogen atoms). Hydrogen bonding strongly affects the crystal structure of ice, helping to create an open hexagonal lattice. The density of ice is less than the density of water at the same temperature; thus, the solid phase of water floats on the liquid, unlike most other substances.

Liquid water's high boiling point is due to the high number of hydrogen bonds each molecule can form, relative to its low molecular mass. Owing to the difficulty of breaking these bonds, water has a very high boiling point, melting point, and viscosity compared to otherwise similar liquids not conjoined by hydrogen bonds. Water is unique because each molecule has two lone pairs (on the oxygen atom) and two hydrogen atoms, meaning that the total number of bonds of a water molecule is up to four. For example, hydrogen fluoride, which has three lone pairs on the F atom but only one H atom, can form only two bonds (ammonia has the opposite problem: three hydrogen atoms but only one lone pair).

- H−F···H−F···H−F
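The counting argument for water, hydrogen fluoride, and ammonia can be made explicit with a simplified bookkeeping model. It assumes, as a simplification not stated in the text, that in a pure substance every hydrogen bond consumes exactly one donor hydrogen and one lone pair, and it ignores bifurcated bonds; the function name is hypothetical.

```python
def max_hbonds_per_molecule(donor_hydrogens: int, lone_pairs: int) -> int:
    """Upper bound on the average number of hydrogen bonds per molecule
    in a pure substance, under a simple one-H-one-lone-pair model.

    With N molecules there are N*donor_hydrogens donor sites and
    N*lone_pairs acceptor sites, so at most N*min(...) bonds can form.
    Each bond is shared by two molecules, so the per-molecule average
    is at most 2*min(donor_hydrogens, lone_pairs).
    """
    return 2 * min(donor_hydrogens, lone_pairs)


if __name__ == "__main__":
    for name, h, lp in [("H2O", 2, 2), ("HF", 1, 3), ("NH3", 3, 1)]:
        print(name, max_hbonds_per_molecule(h, lp))
```

Under this model water reaches four bonds per molecule, while HF and NH3 are each limited to two: HF by its single hydrogen (as in the chain H−F···H−F···H−F, where each molecule donates one bond and accepts one) and NH3 by its single lone pair.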

Where the bond strengths are more equivalent, one might instead find the atoms of two interacting water molecules partitioned into two polyatomic ions of opposite charge, specifically hydroxide (OH−) and hydronium (H3O+). (Hydronium ions are also known as "hydroxonium" ions.)

- H−O− H3O+

Because water may form hydrogen bonds with solute proton donors and acceptors, it may competitively inhibit the formation of solute intermolecular or intramolecular hydrogen bonds. Consequently, hydrogen bonds between or within solute molecules dissolved in water are almost always unfavorable relative to hydrogen bonds between water and the donors and acceptors for hydrogen bonds on those solutes.[23] Hydrogen bonds between water molecules have an average lifetime of 10⁻¹¹ seconds, or 10 picoseconds.[24]

Bifurcated and over-coordinated hydrogen bonds in water

A single hydrogen atom can participate in two hydrogen bonds, rather than one. This type of bonding is called "bifurcated" (split in two or "two-forked"). It can exist, for instance, in complex natural or synthetic organic molecules.[25] It has been suggested that a bifurcated hydrogen atom is an essential step in water reorientation.[26] Acceptor-type hydrogen bonds (terminating on an oxygen's lone pairs) are more likely to form bifurcations, a configuration called over-coordinated oxygen (OCO), than are donor-type hydrogen bonds beginning on the same oxygen's hydrogens.[27]

Hydrogen bonds in DNA and proteins

The structure of part of a DNA double helix

Hydrogen bonding also plays an important role in determining the three-dimensional structures adopted by proteins and nucleic acids. In these macromolecules, bonding between parts of the same macromolecule causes it to fold into a specific shape, which helps determine the molecule's physiological or biochemical role. For example, the double helical structure of DNA is due largely to hydrogen bonding between its base pairs (as well as pi stacking interactions), which link one complementary strand to the other and enable replication.

In the secondary structure of proteins, hydrogen bonds form between the backbone oxygens and amide hydrogens. When the spacing of the amino acid residues participating in a hydrogen bond occurs regularly between positions i and i + 4, an alpha helix is formed. When the spacing is less, between positions i and i + 3, then a 3₁₀ helix is formed. When two strands are joined by hydrogen bonds involving alternating residues on each participating strand, a beta sheet is formed. Hydrogen bonds also play a part in forming the tertiary structure of protein through interaction of R-groups. (See also protein folding).
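The spacing rule for helices can be expressed as a toy classifier. This is a deliberately simplified sketch: it labels a single backbone C=O(i)···H−N(j) hydrogen bond purely by the residue offset j − i, whereas real secondary-structure assignment (for example, the DSSP program) also applies an energy criterion and requires runs of consecutive bonds. The function names are hypothetical.

```python
from collections import Counter


def classify_backbone_hbond(i: int, j: int) -> str:
    """Label a backbone hydrogen bond between the C=O of residue i and the
    N-H of residue j by the residue offset j - i (simplified rule)."""
    offset = j - i
    if offset == 4:
        return "alpha helix"
    if offset == 3:
        return "3_10 helix"
    # Anything else, e.g. long-range beta-sheet pairings or loop contacts.
    return "other"


def summarize(bonds: list[tuple[int, int]]) -> Counter:
    """Count how many bonds fall into each category."""
    return Counter(classify_backbone_hbond(i, j) for i, j in bonds)


if __name__ == "__main__":
    # Hypothetical list of (C=O residue, N-H residue) pairs.
    bonds = [(1, 5), (2, 6), (3, 7), (10, 13), (20, 45)]
    print(summarize(bonds))
```

A single bond with offset 4 is only suggestive; it is the regular repetition of such offsets along the chain that the text describes as forming a helix.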

The role of hydrogen bonds in protein folding has also been linked to osmolyte-induced protein stabilization. Protective osmolytes, such as trehalose and sorbitol, shift the protein folding equilibrium toward the folded state in a concentration-dependent manner. While the prevalent explanation for osmolyte action relies on excluded volume effects, which are entropic in nature, recent circular dichroism (CD) experiments have shown osmolytes to act through an enthalpic effect.[28] The molecular mechanism for their role in protein stabilization is still not well established, though several mechanisms have been proposed. Recently, molecular dynamics simulations suggested that osmolytes stabilize proteins by modifying the hydrogen bonds in the protein hydration layer.[29]

Several studies have shown that hydrogen bonds play an important role in the stability of the interfaces between subunits in multimeric proteins. For example, a study of sorbitol dehydrogenase revealed an important hydrogen bonding network that stabilizes the tetrameric quaternary structure within the mammalian sorbitol dehydrogenase protein family.[30]

A protein backbone hydrogen bond incompletely shielded from water attack is a dehydron. Dehydrons promote the removal of water through protein or ligand binding. The exogenous dehydration enhances the electrostatic interaction between the amide and carbonyl groups by de-shielding their partial charges. Furthermore, the dehydration stabilizes the hydrogen bond by destabilizing the nonbonded state consisting of dehydrated isolated charges.[31]

Hydrogen bonds in polymers

Para-aramid structure

A strand of cellulose (conformation Iα), showing the hydrogen bonds (dashed) within and between cellulose molecules.

Many polymers are strengthened by hydrogen bonds in their main chains. Among the synthetic polymers, the best-known example is nylon, where hydrogen bonds occur in the repeat unit and play a major role in crystallization of the material. The bonds occur between carbonyl and amine groups in the amide repeat unit. They effectively link adjacent chains to create crystals, which help reinforce the material. The effect is greatest in aramid fibre, where hydrogen bonds stabilize the linear chains laterally. The chain axes are aligned along the fibre axis, making the fibres extremely stiff and strong. Hydrogen bonds are also important in the structure of cellulose and its derived polymers, in the many forms in which cellulose occurs in nature, such as wood and natural fibres like cotton and flax.

The hydrogen bond networks make both natural and synthetic polymers sensitive to humidity levels in the atmosphere because water molecules can diffuse into the surface and disrupt the network. Some polymers are more sensitive than others. For example, nylons are more sensitive than aramids, and nylon-6 is more sensitive than nylon-11.

Symmetric hydrogen bond

A symmetric hydrogen bond is a special type of hydrogen bond in which the proton is located exactly halfway between two identical atoms. The strength of the bond to each of those atoms is equal. It is an example of a three-center four-electron bond. This type of bond is much stronger than a "normal" hydrogen bond. The effective bond order is 0.5, so its strength is comparable to a covalent bond. It is seen in ice at high pressure, and also in the solid phase of many anhydrous acids such as hydrofluoric acid and formic acid at high pressure. It is also seen in the bifluoride ion [F−H−F]−.

Symmetric hydrogen bonds have recently been observed spectroscopically in formic acid at high pressure (>GPa). Each hydrogen atom forms a partial covalent bond with two atoms rather than one. Symmetric hydrogen bonds have been postulated in ice at high pressure (Ice X). Low-barrier hydrogen bonds form when the distance between two heteroatoms is very small.
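The effective bond order of 0.5 quoted for the symmetric bond can be recovered from a simple molecular-orbital electron count. This sketch assumes the textbook three-center four-electron picture for [F−H−F]−: one bonding, one nonbonding, and one antibonding orbital spanning F, H, and F, with the four electrons filling the first two.

```latex
\begin{aligned}
\text{occupancy:}\quad & (\sigma)^{2}\ \text{bonding},\quad (n)^{2}\ \text{nonbonding},\quad (\sigma^{*})^{0}\ \text{antibonding}\\
\text{total bond order} &= \tfrac{1}{2}\left(N_{\text{bonding}} - N_{\text{antibonding}}\right) = \tfrac{1}{2}(2 - 0) = 1\\
\text{bond order per H--F contact} &= \frac{1}{2}\times 1 = 0.5
\end{aligned}
```

The nonbonding pair contributes nothing, so a single bond's worth of bonding is shared equally between the two H−F contacts.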

Dihydrogen bond

The hydrogen bond can be compared with the closely related dihydrogen bond, which is also an intermolecular bonding interaction involving hydrogen atoms. These structures have been known for some time and are well characterized by crystallography;[32] however, their relationship to the conventional hydrogen bond, ionic bond, and covalent bond remains unclear. Generally, the hydrogen bond is characterized by a proton acceptor that is a lone pair of electrons in nonmetallic atoms (most notably in the nitrogen and chalcogen groups). In some cases, these proton acceptors may be pi-bonds or metal complexes. In the dihydrogen bond, however, a metal hydride serves as a proton acceptor, thus forming a hydrogen-hydrogen interaction. Neutron diffraction has shown that the molecular geometry of these complexes is similar to hydrogen bonds, in that the bond length is very adaptable to the metal complex/hydrogen donor system.[32]

Advanced theory of the hydrogen bond

In 1999, Isaacs et al.[33] showed from interpretations of the anisotropies in the Compton profile of ordinary ice that the hydrogen bond is partly covalent. However, this interpretation was challenged by Ghanty et al.,[34] who concluded that considering electrostatic forces alone could explain the experimental results. Some NMR data on hydrogen bonds in proteins also indicate covalent bonding.

Most generally, the hydrogen bond can be viewed as a metric-dependent electrostatic scalar field between two or more intermolecular bonds. This is slightly different from the intramolecular bound states of, for example, covalent or ionic bonds; however, hydrogen bonding is generally still a bound state phenomenon, since the interaction energy has a net negative sum. The initial theory of hydrogen bonding proposed by Linus Pauling suggested that the hydrogen bonds had a partial covalent nature. This remained a controversial conclusion until the late 1990s, when NMR techniques were employed by F. Cordier et al. to transfer information between hydrogen-bonded nuclei, a feat that would only be possible if the hydrogen bond contained some covalent character.[35] While much experimental data has been gathered for hydrogen bonds in water, for example, providing good resolution on the scale of intermolecular distances and molecular thermodynamics, the kinetic and dynamical properties of the hydrogen bond in dynamic systems remain less well characterized.

Dynamics probed by spectroscopic means

The dynamics of hydrogen bond structures in water can be probed by the IR spectrum of the OH stretching vibration.[36] In protic organic ionic plastic crystals (POIPCs), a type of phase change material exhibiting solid-solid phase transitions prior to melting, variable-temperature infrared spectroscopy of the hydrogen bonding network can reveal the temperature dependence of hydrogen bonds and the dynamics of both the anions and the cations.[37] The sudden weakening of hydrogen bonds during the solid-solid phase transition seems to be coupled with the onset of orientational or rotational disorder of the ions.[37]

Hydrogen bonding phenomena

- Dramatically higher boiling points of NH3, H2O, and HF compared to the heavier analogues PH3, H2S, and HCl.

- Increase in the melting point, boiling point, solubility, and viscosity of many compounds can be explained by the concept of hydrogen bonding.

- Occurrence of proton tunneling during DNA replication is believed to be responsible for cell mutations.[38]

- Viscosity of anhydrous phosphoric acid and of glycerol

- Dimer formation in carboxylic acids and hexamer formation in hydrogen fluoride, which occur even in the gas phase, resulting in gross deviations from the ideal gas law.

- Pentamer formation of water and alcohols in apolar solvents.

- High water solubility of many compounds such as ammonia is explained by hydrogen bonding with water molecules.

- Negative azeotropy of mixtures of HF and water

- Deliquescence of NaOH is caused in part by reaction of OH− with moisture to form hydrogen-bonded H3O2− species. An analogous process happens between NaNH2 and NH3, and between NaF and HF.

- The fact that ice is less dense than liquid water is due to a crystal structure stabilized by hydrogen bonds.

- The presence of hydrogen bonds can cause an anomaly in the normal succession of states of matter for certain mixtures of chemical compounds as temperature increases or decreases. These compounds can be liquid until a certain temperature, then solid even as the temperature increases, and finally liquid again as the temperature rises over the "anomaly interval".[39]

- Smart rubber utilizes hydrogen bonding as its sole means of bonding, so that it can "heal" when torn, because hydrogen bonding can occur on the fly between two surfaces of the same polymer.

- Strength of nylon and cellulose fibres.

- Wool, being a protein fibre, is held together by hydrogen bonds, causing wool to recoil when stretched. However, washing at high temperatures can permanently break the hydrogen bonds and a garment may permanently lose its shape.
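The gas-phase deviation from the ideal gas law noted above for carboxylic acid dimers can be illustrated with a short calculation. Assume a monomer/dimer equilibrium 2 A ⇌ A2 in which a fraction α of the monomer units is bound in dimers. Starting from n0 monomers, n0(1 − α) remain free and n0α/2 dimers form, so the gas contains n0(1 − α/2) particles, and applying PV = nRT to the total mass gives an apparent molar mass of M/(1 − α/2). The function name and the approximate acetic acid molar mass (60.05 g/mol) below are illustrative; α itself would have to come from measurement.

```python
def apparent_molar_mass(monomer_molar_mass: float, alpha: float) -> float:
    """Apparent molar mass from the ideal gas law for a 2 A <-> A2 mixture.

    alpha is the fraction of monomer units bound in dimers (0 to 1).
    Particle count per initial monomer is (1 - alpha) + alpha/2 = 1 - alpha/2,
    so the mass per particle is monomer_molar_mass / (1 - alpha/2).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie between 0 and 1")
    return monomer_molar_mass / (1.0 - alpha / 2.0)


if __name__ == "__main__":
    M_ACETIC_ACID = 60.05  # g/mol, approximate
    for alpha in (0.0, 0.5, 1.0):
        print(alpha, round(apparent_molar_mass(M_ACETIC_ACID, alpha), 2))
```

A fully dimerized vapor would thus appear to have twice the monomer's molar mass, one visible symptom of the departure from ideal-gas behavior.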