Modern biology began in the nineteenth century with Charles Darwin's work on evolution by natural selection.

Variation exists within all populations of organisms. This occurs partly because random mutations arise in the genome of an individual organism, and offspring can inherit such mutations. Throughout the lives of the individuals, their genomes interact with their environments to cause variations in traits. The environment of a genome includes the molecular biology in the cell, other cells, other individuals, populations, species, as well as the abiotic environment. Because individuals with certain variants of the trait tend to survive and reproduce more than individuals with other, less successful, variants, the population evolves. Other factors affecting reproductive success include sexual selection (now often included in natural selection) and fecundity selection.

Natural selection acts on the phenotype, the characteristics of the organism which actually interact with the environment, but the genetic (heritable) basis of any phenotype that gives that phenotype a reproductive advantage may become more common in a population. Over time, this process can result in populations that specialise for particular ecological niches (microevolution) and may eventually result in speciation (the emergence of new species, macroevolution). In other words, natural selection is a key process in the evolution of a population.

Natural selection is a cornerstone of modern biology. The concept, published by Darwin and Alfred Russel Wallace in a joint presentation of papers in 1858, was elaborated in Darwin's influential 1859 book On the Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life. He described natural selection as analogous to artificial selection, a process by which animals and plants with traits considered desirable by human breeders are systematically favoured for reproduction. The concept of natural selection originally developed in the absence of a valid theory of heredity; at the time of Darwin's writing, science had yet to develop modern theories of genetics. The union of traditional Darwinian evolution with subsequent discoveries in classical genetics formed the modern synthesis of the mid-20th century. The addition of molecular genetics has led to evolutionary developmental biology, which explains evolution at the molecular level. While genotypes can slowly change by random genetic drift, natural selection remains the primary explanation for adaptive evolution.

Historical development

Pre-Darwinian theories

Aristotle considered whether different forms could have appeared, only the useful ones surviving.

Several philosophers of the classical era, including Empedocles[1] and his intellectual successor, the Roman poet Lucretius,[2] expressed the idea that nature produces a huge variety of creatures, randomly, and that only those creatures that manage to provide for themselves and reproduce successfully persist. Empedocles' idea that organisms arose entirely by the incidental workings of causes such as heat and cold was criticised by Aristotle in Book II of Physics.[3] He posited natural teleology in its place, and believed that form was achieved for a purpose, citing the regularity of heredity in species as proof.[4][5] Nevertheless, he accepted in his biology that new types of animals, monstrosities (τερας), can occur in very rare instances (Generation of Animals, Book IV).[6] As quoted in Darwin's 1872 edition of The Origin of Species, Aristotle considered whether different forms (e.g., of teeth) might have appeared accidentally, but only the useful forms survived:

So what hinders the different parts [of the body] from having this merely accidental relation in nature? as the teeth, for example, grow by necessity, the front ones sharp, adapted for dividing, and the grinders flat, and serviceable for masticating the food; since they were not made for the sake of this, but it was the result of accident. And in like manner as to the other parts in which there appears to exist an adaptation to an end. Wheresoever, therefore, all things together (that is all the parts of one whole) happened like as if they were made for the sake of something, these were preserved, having been appropriately constituted by an internal spontaneity, and whatsoever things were not thus constituted, perished, and still perish.

— Aristotle, Physics, Book II, Chapter 8[7]

But Aristotle rejected this possibility in the next paragraph, making clear that he is talking about the development of animals as embryos with the phrase "either invariably or normally come about", not the origin of species:

... Yet it is impossible that this should be the true view. For teeth and all other natural things either invariably or normally come about in a given way; but of not one of the results of chance or spontaneity is this true. We do not ascribe to chance or mere coincidence the frequency of rain in winter, but frequent rain in summer we do; nor heat in the dog-days, but only if we have it in winter. If then, it is agreed that things are either the result of coincidence or for an end, and these cannot be the result of coincidence or spontaneity, it follows that they must be for an end; and that such things are all due to nature even the champions of the theory which is before us would agree. Therefore action for an end is present in things which come to be and are by nature.

— Aristotle, Physics, Book II, Chapter 8[8]

The struggle for existence was later described by the Islamic writer Al-Jahiz in the 9th century.[9][10][11]

The classical arguments were reintroduced in the 18th century by Pierre Louis Maupertuis[12] and others, including Darwin's grandfather, Erasmus Darwin.

Until the early 19th century, the prevailing view in Western societies was that differences between individuals of a species were uninteresting departures from their Platonic ideals (or typus) of created kinds. However, the theory of uniformitarianism in geology promoted the idea that simple, weak forces could act continuously over long periods of time to produce radical changes in the Earth's landscape. The success of this theory raised awareness of the vast scale of geological time and made plausible the idea that tiny, virtually imperceptible changes in successive generations could produce consequences on the scale of differences between species.[13]

The early 19th-century zoologist Jean-Baptiste Lamarck suggested the inheritance of acquired characteristics as a mechanism for evolutionary change; adaptive traits acquired by an organism during its lifetime could be inherited by that organism's progeny, eventually causing transmutation of species.[14] This theory, Lamarckism, was an influence on the Soviet biologist Trofim Lysenko's antagonism to mainstream genetic theory as late as the mid-20th century.[15]

Between 1835 and 1837, the zoologist Edward Blyth worked on the area of variation, artificial selection, and how a similar process occurs in nature. Darwin acknowledged Blyth's ideas in the first chapter on variation of On the Origin of Species.[16]

Darwin's theory

In 1859, Charles Darwin set out his theory of evolution by natural selection as an explanation for adaptation and speciation. He defined natural selection as the "principle by which each slight variation [of a trait], if useful, is preserved".[17] The concept was simple but powerful: individuals best adapted to their environments are more likely to survive and reproduce. As long as there is some variation between them and that variation is heritable, there will be an inevitable selection of individuals with the most advantageous variations. If the variations are heritable, then differential reproductive success leads to a progressive evolution of particular populations of a species, and populations that evolve to be sufficiently different eventually become different species.[18][19]

Part of Thomas Malthus's table of population growth in England 1780–1810, from his Essay on the Principle of Population, 6th edition, 1826

Darwin's ideas were inspired by the observations that he had made on the second voyage of HMS Beagle (1831–1836), and by the work of a political economist, Thomas Robert Malthus, who, in An Essay on the Principle of Population (1798), noted that population (if unchecked) increases exponentially, whereas the food supply grows only arithmetically; thus, inevitable limitations of resources would have demographic implications, leading to a "struggle for existence".[20] When Darwin read Malthus in 1838 he was already primed by his work as a naturalist to appreciate the "struggle for existence" in nature. It struck him that as population outgrew resources, "favourable variations would tend to be preserved, and unfavourable ones to be destroyed. The result of this would be the formation of new species."[21] Darwin wrote:
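Malthus's contrast between geometric and arithmetic growth can be made concrete with a short calculation. The Python sketch below is illustrative only: it assumes a hypothetical population that doubles every period and a food supply that gains one fixed unit per period, both starting at one unit, and the function names are invented for this example.

```python
from __future__ import annotations


def malthus_series(periods: int) -> list[tuple[int, int, int]]:
    """Return (period, population, food) rows for `periods` periods.

    Illustrative assumptions: population and food both start at 1 unit;
    the population doubles each period (geometric growth) while the food
    supply gains a fixed 1 unit per period (arithmetic growth).
    """
    rows = []
    population, food = 1, 1
    for period in range(periods):
        rows.append((period, population, food))
        population *= 2  # geometric: multiply by a constant ratio
        food += 1        # arithmetic: add a constant amount
    return rows


def first_shortfall(periods: int) -> int | None:
    """Return the first period in which population exceeds food, or None."""
    for period, population, food in malthus_series(periods):
        if population > food:
            return period
    return None


if __name__ == "__main__":
    for period, population, food in malthus_series(8):
        print(f"period {period}: population {population:>3}, food {food}")
    print("first shortfall at period", first_shortfall(8))
```

After seven doublings the population stands at 128 units against 8 units of food. Whatever the positive starting values, a constant ratio greater than one eventually outruns a constant increment, which is the "struggle for existence" Darwin drew from Malthus.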

If during the long course of ages and under varying conditions of life, organic beings vary at all in the several parts of their organisation, and I think this cannot be disputed; if there be, owing to the high geometrical powers of increase of each species, at some age, season, or year, a severe struggle for life, and this certainly cannot be disputed; then, considering the infinite complexity of the relations of all organic beings to each other and to their conditions of existence, causing an infinite diversity in structure, constitution, and habits, to be advantageous to them, I think it would be a most extraordinary fact if no variation ever had occurred useful to each being's own welfare, in the same way as so many variations have occurred useful to man. But if variations useful to any organic being do occur, assuredly individuals thus characterised will have the best chance of being preserved in the struggle for life; and from the strong principle of inheritance they will tend to produce offspring similarly characterised. This principle of preservation, I have called, for the sake of brevity, Natural Selection.

— Darwin summarising natural selection in the fourth chapter of On the Origin of Species[22]

Once he had his theory, Darwin was meticulous about gathering and refining evidence before making his idea public. He was in the process of writing his "big book" to present his research when the naturalist Alfred Russel Wallace independently conceived of the principle and described it in an essay he sent to Darwin to forward to Charles Lyell. Lyell and Joseph Dalton Hooker decided to present Wallace's essay together with unpublished writings that Darwin had sent to fellow naturalists, and On the Tendency of Species to form Varieties; and on the Perpetuation of Varieties and Species by Natural Means of Selection was read to the Linnean Society of London announcing co-discovery of the principle in July 1858.[23] Darwin published a detailed account of his evidence and conclusions in On the Origin of Species in 1859. In the 3rd edition of 1861 Darwin acknowledged that others, like William Charles Wells in 1813 and Patrick Matthew in 1831, had proposed similar ideas, but had neither developed them nor presented them in notable scientific publications.[24]

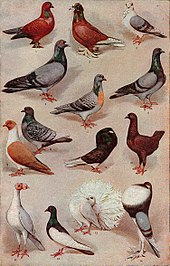

Charles Darwin noted that pigeon fanciers had created many kinds of pigeon, such as Tumblers (1, 12), Fantails (13), and Pouters (14) by selective breeding.

Darwin thought of natural selection by analogy to how farmers select crops or livestock for breeding, which he called "artificial selection"; in his early manuscripts he referred to a "Nature" which would do the selection. At the time, other mechanisms of evolution such as evolution by genetic drift were not yet explicitly formulated, and Darwin believed that selection was likely only part of the story: "I am convinced that Natural Selection has been the main but not exclusive means of modification."[25] In a letter to Charles Lyell in September 1860, Darwin regretted the use of the term "Natural Selection", preferring the term "Natural Preservation".[26]

For Darwin and his contemporaries, natural selection was in essence synonymous with evolution by natural selection. After the publication of On the Origin of Species,[27] educated people generally accepted that evolution had occurred in some form. However, natural selection remained controversial as a mechanism, partly because it was perceived to be too weak to explain the range of observed characteristics of living organisms, and partly because even supporters of evolution balked at its "unguided" and non-progressive nature,[28] a response that has been characterised as the single most significant impediment to the idea's acceptance.[29] However, some thinkers enthusiastically embraced natural selection; after reading Darwin, Herbert Spencer introduced the phrase survival of the fittest, which became a popular summary of the theory.[30][31] The fifth edition of On the Origin of Species published in 1869 included Spencer's phrase as an alternative to natural selection, with credit given: "But the expression often used by Mr. Herbert Spencer of the Survival of the Fittest is more accurate, and is sometimes equally convenient."[32] Although the phrase is still often used by non-biologists, modern biologists avoid it because it is tautological if "fittest" is read to mean "functionally superior" and is applied to individuals rather than considered as an averaged quantity over populations.[33]

The modern synthesis

Natural selection relies crucially on the idea of heredity, but developed before the basic concepts of genetics. Although the Moravian monk Gregor Mendel, the father of modern genetics, was a contemporary of Darwin's, his work lay in obscurity, only being rediscovered in 1900.[34] With the early 20th century integration of evolution with Mendel's laws of inheritance, the so-called modern synthesis, scientists generally came to accept natural selection.[35][36] The synthesis grew from advances in different fields. Ronald Fisher developed the required mathematical language and wrote The Genetical Theory of Natural Selection (1930).[37] J. B. S. Haldane introduced the concept of the "cost" of natural selection.[38][39] Sewall Wright elucidated the nature of selection and adaptation.[40] In his book Genetics and the Origin of Species (1937), Theodosius Dobzhansky established the idea that mutation, once seen as a rival to selection, actually supplied the raw material for natural selection by creating genetic diversity.[41][42]

A second synthesis

Evolutionary developmental biology relates the evolution of form to the precise pattern of gene activity, here gap genes in the fruit fly, during embryonic development.[43]

Ernst Mayr recognised the key importance of reproductive isolation for speciation in his Systematics and the Origin of Species (1942).[44] W. D. Hamilton conceived of kin selection in 1964.[45][46] This synthesis cemented natural selection as the foundation of evolutionary theory, where it remains today. A second synthesis was brought about at the end of the 20th century by advances in molecular genetics, creating the field of evolutionary developmental biology ("evo-devo"), which seeks to explain the evolution of form in terms of the genetic regulatory programs which control the development of the embryo at molecular level. Natural selection is here understood to act on embryonic development to change the morphology of the adult body.[47][48][49][50]

Terminology

The term natural selection is most often defined to operate on heritable traits, because these directly participate in evolution. However, natural selection is "blind" in the sense that changes in phenotype can give a reproductive advantage regardless of whether or not the trait is heritable. Following Darwin's primary usage, the term is used to refer both to the evolutionary consequence of blind selection and to its mechanisms.[27][37][51][52] It is sometimes helpful to explicitly distinguish between selection's mechanisms and its effects; when this distinction is important, scientists define "(phenotypic) natural selection" specifically as "those mechanisms that contribute to the selection of individuals that reproduce", without regard to whether the basis of the selection is heritable.[53][54][55] Traits that cause greater reproductive success of an organism are said to be selected for, while those that reduce success are selected against.[56]

Mechanism

Heritable variation, differential reproduction

During the industrial revolution, pollution killed many lichens, leaving tree trunks dark. A dark (melanic) morph of the peppered moth largely replaced the formerly usual light morph (both shown here). Since the moths are subject to predation by birds hunting by sight, the colour change offers better camouflage against the changed background, suggesting natural selection at work.

Natural variation occurs among the individuals of any population of organisms. Some differences may improve an individual's chances of surviving and reproducing such that its lifetime reproductive rate is increased, which means that it leaves more offspring. If the traits that give these individuals a reproductive advantage are also heritable, that is, passed from parent to offspring, then there will be differential reproduction, that is, a slightly higher proportion of fast rabbits or efficient algae in the next generation. Even if the reproductive advantage is very slight, over many generations an advantageous heritable trait tends to become predominant in the population. In this way the natural environment of an organism "selects for" traits that confer a reproductive advantage, causing evolutionary change, as Darwin described.[57] This gives the appearance of purpose, but in natural selection there is no intentional choice. Artificial selection is purposive where natural selection is not, though biologists often use teleological language to describe it.[58]
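The claim that even a slight heritable advantage accumulates over many generations can be checked with a simple deterministic recursion. This is a minimal Python sketch, not a model taken from the sources cited here: it assumes a haploid variant with a constant relative fitness of 1 + s, an infinitely large population (so no genetic drift), and non-overlapping generations; the function names are hypothetical.

```python
def next_frequency(p: float, s: float) -> float:
    """One generation of selection on a haploid variant.

    The variant (frequency p) has relative fitness 1 + s and the
    alternative has fitness 1, so the variant's share of the next
    generation is its fitness-weighted share of the current one.
    """
    mean_fitness = p * (1 + s) + (1 - p) * 1.0
    return p * (1 + s) / mean_fitness


def generations_until(p0: float, s: float, target: float,
                      max_gens: int = 1_000_000) -> int:
    """Count generations until the variant's frequency reaches `target`."""
    p, gens = p0, 0
    while p < target:
        if gens >= max_gens:
            raise RuntimeError("target not reached; is s > 0?")
        p = next_frequency(p, s)
        gens += 1
    return gens


if __name__ == "__main__":
    # A 1% reproductive advantage, starting from a 1% frequency.
    print(generations_until(p0=0.01, s=0.01, target=0.99))
```

With a 1% advantage starting at 1% frequency, the variant passes 99% after a little over 900 generations. In a real, finite population a new beneficial variant can still be lost by chance while it is rare, which this deterministic sketch ignores.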

The peppered moth exists in both light and dark colours in Great Britain, but during the industrial revolution, many of the trees on which the moths rested became blackened by soot, giving the dark-coloured moths an advantage in hiding from predators. This gave dark-coloured moths a better chance of surviving to produce dark-coloured offspring, and in just fifty years from the first dark moth being caught, nearly all of the moths in industrial Manchester were dark. The balance was reversed by the effect of the Clean Air Act 1956, and the dark moths became rare again, demonstrating the influence of natural selection on peppered moth evolution.[59]

Fitness

The concept of fitness is central to natural selection. In broad terms, individuals that are more "fit" have better potential for survival, as in the well-known phrase "survival of the fittest", but the precise meaning of the term is much more subtle. Modern evolutionary theory defines fitness not by how long an organism lives, but by how successful it is at reproducing. If an organism lives half as long as others of its species, but has twice as many offspring surviving to adulthood, its genes become more common in the adult population of the next generation. Though natural selection acts on individuals, the effects of chance mean that fitness can only really be defined "on average" for the individuals within a population. The fitness of a particular genotype corresponds to the average effect on all individuals with that genotype.[60]

Competition

In biology, competition is an interaction between organisms in which the fitness of one is lowered by the presence of another. This may be because both rely on a limited supply of a resource such as food, water, or territory.[61] Competition may be within or between species, and may be direct or indirect.[62] Species less suited to compete should in theory either adapt or die out, since competition plays a powerful role in natural selection, but according to the "room to roam" theory it may be less important than expansion among larger clades.[62][63]

Competition is modelled by r/K selection theory, which is based on Robert MacArthur and E. O. Wilson's work on island biogeography.[64] In this theory, selective pressures drive evolution in one of two stereotyped directions: r- or K-selection.[65] These terms, r and K, can be illustrated in a logistic model of population dynamics:[66]

$$\frac{dN}{dt} = rN\left(1 - \frac{N}{K}\right)$$

where r is the growth rate of the population N, and K is the carrying capacity of its local environmental setting.
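The logistic model can be explored numerically. This minimal Python sketch approximates the differential equation with small forward Euler time steps; the step size and the parameter values (r = 0.5, K = 1000, a starting population of 10) are hypothetical choices for illustration.

```python
from __future__ import annotations


def logistic_trajectory(n0: float, r: float, k: float,
                        dt: float, steps: int) -> list[float]:
    """Approximate dN/dt = r*N*(1 - N/K) with forward Euler steps of size dt.

    Returns population sizes at times 0, dt, 2*dt, ..., steps*dt.
    Keep r*dt well below 1 so the discrete steps track the smooth curve.
    """
    n = n0
    trajectory = [n]
    for _ in range(steps):
        n += r * n * (1 - n / k) * dt  # growth slows as N approaches K
        trajectory.append(n)
    return trajectory


if __name__ == "__main__":
    # Hypothetical parameters: r = 0.5 per unit time, carrying capacity K = 1000.
    traj = logistic_trajectory(n0=10, r=0.5, k=1000, dt=0.1, steps=400)
    for i in range(0, 401, 50):
        print(f"t = {i * 0.1:4.1f}  N = {traj[i]:7.1f}")
```

The output shows near-exponential growth at rate r while N is much smaller than K, then growth slowing to zero as N approaches K. In r/K terms, r-selection is associated with the uncrowded early phase, where a high growth rate pays off, and K-selection with crowded populations near the carrying capacity.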

Types of selection

1, directional selection, in which a single extreme phenotype is favoured; 2, stabilizing selection, in which the intermediate is favoured over the extremes; 3, disruptive selection, in which the extremes are favoured over the intermediate. X-axis: phenotypic trait; y-axis: number of organisms; group A: original population; group B: after selection.

Natural selection can act on any heritable phenotypic trait,[67] and selective pressure can be produced by any aspect of the environment, including sexual selection and competition with members of the same or other species.[68][69] However, this does not imply that natural selection is always directional and results in adaptive evolution; natural selection often results in the maintenance of the status quo by eliminating less fit variants.[57]

Selection can be classified in several different ways, such as by its effect on a trait, on genetic diversity, by the life cycle stage where it acts, by the unit of selection, or by the resource being competed for.

Selection has different effects on traits. Stabilizing selection acts to hold a trait at a stable optimum, and in the simplest case all deviations from this optimum are selectively disadvantageous. Directional selection favours values toward one extreme of a trait. The uncommon disruptive selection, like directional selection, acts during transition periods when the current mode is sub-optimal, but it alters the trait in more than one direction. In particular, if the trait is quantitative and univariate then both higher and lower trait levels are favoured. Disruptive selection can be a precursor to speciation.[57]
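The three modes differ in which trait values contribute most to the next generation. This minimal Python sketch assumes a hypothetical bell-shaped population and three made-up fitness functions; it weights each trait class by its fitness and compares the mean and variance of the selected individuals with those of the original population.

```python
import math

# Hypothetical starting population: trait values -3..3 with binomial
# counts 1, 6, 15, 20, 15, 6, 1 (bell-shaped, mean 0, variance 1.5).
VALUES = list(range(-3, 4))
COUNTS = [math.comb(6, k) for k in range(7)]

# Hypothetical fitness functions, one per mode of selection.
FITNESS = {
    "none": lambda x: 1.0,
    "directional": lambda x: math.exp(0.5 * x),     # higher values always fitter
    "stabilizing": lambda x: math.exp(-x * x / 2),  # optimum at the current mean
    "disruptive": lambda x: 1.0 + x * x,            # both extremes fitter
}


def select(values, counts, fitness):
    """Return (mean, variance) of the trait after weighting each class
    by its count times its fitness, i.e. among the selected parents."""
    weights = [c * fitness(x) for x, c in zip(values, counts)]
    total = sum(weights)
    mean = sum(w * x for w, x in zip(weights, values)) / total
    var = sum(w * (x - mean) ** 2 for w, x in zip(weights, values)) / total
    return mean, var


if __name__ == "__main__":
    for name, fitness in FITNESS.items():
        mean, var = select(VALUES, COUNTS, fitness)
        print(f"{name:12s} mean = {mean:+.2f}  variance = {var:.2f}")
```

With these numbers only directional selection moves the mean; the starting variance of 1.5 falls to about 0.6 under stabilizing selection and rises to 3.0 under disruptive selection, while the mean stays at 0 in both cases.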

Alternatively, selection can be divided according to its effect on genetic diversity. Purifying or negative selection acts to remove genetic variation from the population (and is opposed by de novo mutation, which introduces new variation).[70][71] In contrast, balancing selection acts to maintain genetic variation in a population, even in the absence of de novo mutation, for example by negative frequency-dependent selection or by heterozygote advantage, where individuals with two different alleles have a selective advantage over individuals with two copies of the same allele. The polymorphism at the human ABO blood group locus has been explained in this way.[72]
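Negative frequency-dependent selection can maintain two variants without any new mutation, because whichever variant is rarer has the higher fitness. The Python sketch below assumes hypothetical linear fitness functions (each type's fitness falls in proportion to its own frequency), an infinite population and discrete generations; it illustrates the principle and is not a model of the ABO locus.

```python
def next_freq(p: float, s: float) -> float:
    """One generation of negative frequency-dependent selection.

    p is the frequency of type A (type B has 1 - p). Hypothetical fitness
    functions: w_A = 1 - s*p and w_B = 1 - s*(1 - p), so each type's
    fitness falls as it becomes more common. Assumes 0 <= s < 1.
    """
    w_a = 1 - s * p
    w_b = 1 - s * (1 - p)
    mean_w = p * w_a + (1 - p) * w_b
    return p * w_a / mean_w


def iterate(p0: float, s: float, generations: int) -> float:
    """Apply next_freq repeatedly (infinite population, no mutation)."""
    p = p0
    for _ in range(generations):
        p = next_freq(p, s)
    return p


if __name__ == "__main__":
    for p0 in (0.05, 0.5, 0.95):
        print(f"start {p0:.2f} -> after 200 generations {iterate(p0, 0.5, 200):.4f}")
```

Starting from 5% or 95%, type A settles at 50%, the frequency at which both types are equally fit; with these symmetric fitness functions that is the only interior equilibrium, and it is stable.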

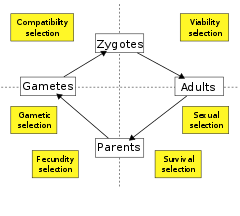

Different types of selection act at each life cycle stage of a sexually reproducing organism.[73]

Another option is to classify selection by the life cycle stage at which it acts. Some biologists recognise just two types: viability (or survival) selection, which acts to increase an organism's probability of survival, and fecundity (or fertility or reproductive) selection, which acts to increase the rate of reproduction, given survival. Others split the life cycle into further components of selection. Thus viability and survival selection may be defined separately and respectively as acting to improve the probability of survival before and after reproductive age is reached, while fecundity selection may be split into additional sub-components including sexual selection, gametic selection, acting on gamete survival, and compatibility selection, acting on zygote formation.[73]
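Splitting selection into a viability and a fecundity component amounts to writing an individual's expected reproductive output as the probability of surviving to reproductive age multiplied by the expected number of offspring given survival. The Python sketch below uses two hypothetical genotypes with made-up values and ignores the finer sub-components (sexual, gametic and compatibility selection).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class GenotypeRecord:
    """Hypothetical life-history summary for one genotype."""
    name: str
    survival_to_maturity: float  # viability component, a probability in [0, 1]
    offspring_if_mature: float   # fecundity component, mean offspring given survival

    def fitness(self) -> float:
        # Expected reproductive output = P(survive) * E[offspring | survive]
        return self.survival_to_maturity * self.offspring_if_mature


def relative_fitness(records: list[GenotypeRecord]) -> dict[str, float]:
    """Scale fitness so the fittest genotype has relative fitness 1."""
    best = max(r.fitness() for r in records)
    return {r.name: r.fitness() / best for r in records}


if __name__ == "__main__":
    genotypes = [
        GenotypeRecord("robust", survival_to_maturity=0.8, offspring_if_mature=2.0),
        GenotypeRecord("fecund", survival_to_maturity=0.5, offspring_if_mature=4.0),
    ]
    for g in genotypes:
        print(g.name, g.fitness())
    print(relative_fitness(genotypes))
```

Here the "fecund" genotype is less likely to survive to maturity but more than compensates through reproduction, so its fitness (0.5 × 4 = 2.0) exceeds that of the "robust" genotype (0.8 × 2 = 1.6). This is the sense in which fitness measures reproductive success rather than survival alone.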

Selection can also be classified by the level or unit of selection. Individual selection acts on the individual, in the sense that adaptations are "for" the benefit of the individual, and result from selection among individuals. Gene selection acts directly at the level of the gene. In kin selection and intragenomic conflict, gene-level selection provides a more apt explanation of the underlying process. Group selection, if it occurs, acts on groups of organisms, on the assumption that groups replicate and mutate in an analogous way to genes and individuals. There is an ongoing debate over the degree to which group selection occurs in nature.[74]

Finally, selection can be classified according to the resource being competed for. Sexual selection results from competition for mates. Sexual selection typically proceeds via fecundity selection, sometimes at the expense of viability. Ecological selection is natural selection via any means other than sexual selection, such as kin selection, competition, and infanticide. Following Darwin, natural selection is sometimes defined as ecological selection, in which case sexual selection is considered a separate mechanism.[75]

Sexual selection

The peacock's elaborate plumage is mentioned by Darwin as an example of sexual selection,[76] and is a classic example of Fisherian runaway,[77] driven to its conspicuous size and coloration through mate choice by females over many generations.

Sexual selection as first articulated by Darwin (using the example of the peacock's tail)[76] refers specifically to competition for mates,[78] which can be intrasexual, between individuals of the same sex, such as male–male competition, or intersexual, where one sex chooses mates, most often with males displaying and females choosing.[79] However, in some species, mate choice is primarily by males, as in some fishes of the family Syngnathidae.[80][81]

Phenotypic traits can be displayed in one sex and desired in the other sex, causing a positive feedback loop called a Fisherian runaway, for example, the extravagant plumage of some male birds such as the peacock.[77] An alternative theory, also proposed by Ronald Fisher in 1930, is the sexy son hypothesis: that mothers want promiscuous sons to give them large numbers of grandchildren, and so choose promiscuous fathers for their children. Aggression between members of the same sex is sometimes associated with very distinctive features, such as the antlers of stags, which are used in combat with other stags. More generally, intrasexual selection is often associated with sexual dimorphism, including differences in body size between males and females of a species.[79]

Natural selection in action

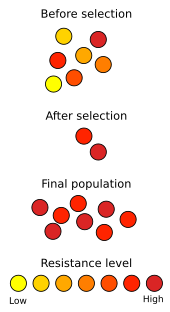

Selection in action: resistance to antibiotics grows through the survival of individuals less affected by the antibiotic. Their offspring inherit the resistance.

Natural selection is seen in action in the development of antibiotic resistance in microorganisms. Since the discovery of penicillin in 1928, antibiotics have been used to fight bacterial diseases. The widespread misuse of antibiotics has selected for microbial resistance to antibiotics in clinical use, to the point that the methicillin-resistant Staphylococcus aureus (MRSA) has been described as a "superbug" because of the threat it poses to health and its relative invulnerability to existing drugs.[82] Response strategies typically include the use of different, stronger antibiotics; however, new strains of MRSA have recently emerged that are resistant even to these drugs.[83] This is an evolutionary arms race, in which bacteria develop strains less susceptible to antibiotics, while medical researchers attempt to develop new antibiotics that can kill them. A similar situation occurs with pesticide resistance in plants and insects. Arms races are not necessarily induced by humans; a well-documented example involves the spread of a gene in the butterfly Hypolimnas bolina suppressing male-killing activity by Wolbachia bacteria parasites on the island of Samoa, where the spread of the gene is known to have occurred over a period of just five years.[84][85]

Evolution by means of natural selection

X-ray of the left hand of a ten-year-old boy with polydactyly, caused by a mutant Hox gene

A prerequisite for natural selection to result in adaptive evolution, novel traits and speciation is the presence of heritable genetic variation that results in fitness differences. Genetic variation is the result of mutations, genetic recombinations and alterations in the karyotype (the number, shape, size and internal arrangement of the chromosomes). Any of these changes might have an effect that is highly advantageous or highly disadvantageous, but large effects are rare. In the past, most changes in the genetic material were considered neutral or close to neutral because they occurred in noncoding DNA or resulted in a synonymous substitution. However, many mutations in non-coding DNA have deleterious effects.[86][87] Although both mutation rates and average fitness effects of mutations are dependent on the organism, a majority of mutations in humans are slightly deleterious.[88]

Some mutations occur in "toolkit" or regulatory genes. Changes in these often have large effects on the phenotype of the individual because they regulate the function of many other genes. Most, but not all, mutations in regulatory genes result in non-viable embryos. Some nonlethal regulatory mutations occur in HOX genes in humans, which can result in a cervical rib[89] or polydactyly, an increase in the number of fingers or toes.[90] When such mutations result in a higher fitness, natural selection favours these phenotypes and the novel trait spreads in the population. Established traits are not immutable; traits that have high fitness in one environmental context may be much less fit if environmental conditions change. In the absence of natural selection to preserve such a trait, it becomes more variable and deteriorates over time, possibly resulting in a vestigial manifestation of the trait, also called evolutionary baggage. In many circumstances, the apparently vestigial structure may retain a limited functionality, or may be co-opted for other advantageous traits in a phenomenon known as preadaptation. A famous example of a vestigial structure, the eye of the blind mole-rat, is believed to retain function in photoperiod perception.[91]

Speciation

Speciation requires a degree of reproductive isolation, that is, a reduction in gene flow. However, it is intrinsic to the concept of a species that hybrids are selected against, opposing the evolution of reproductive isolation, a problem that was recognised by Darwin. The problem does not occur in allopatric speciation with geographically separated populations, which can diverge with different sets of mutations. E. B. Poulton realised in 1903 that reproductive isolation could evolve through divergence, if each lineage acquired a different, incompatible allele of the same gene. Selection against the heterozygote would then directly create reproductive isolation, leading to the Bateson–Dobzhansky–Muller model, further elaborated by H. Allen Orr[92] and Sergey Gavrilets.[93] With reinforcement, however, natural selection can favour an increase in pre-zygotic isolation, influencing the process of speciation directly.[94]

Genetic basis

Genotype and phenotype

Natural selection acts on an organism's phenotype, or physical characteristics. Phenotype is determined by an organism's genetic make-up (genotype) and the environment in which the organism lives. When different organisms in a population possess different versions of a gene for a certain trait, each of these versions is known as an allele. It is this genetic variation that underlies differences in phenotype. An example is the ABO blood type antigens in humans, where three alleles govern the phenotype.[95]

Some traits are governed by only a single gene, but most traits are influenced by the interactions of many genes. A variation in one of the many genes that contributes to a trait may have only a small effect on the phenotype; together, these genes can produce a continuum of possible phenotypic values.[96]
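The way many small-effect genes combine into a near-continuous trait can be illustrated with the simplest additive model. The Python sketch below makes strong simplifying assumptions that are not from the text: each locus has two alleles at equal frequency, each "+" allele adds one unit to the trait, loci act independently, and the environment plays no role.

```python
from __future__ import annotations

from math import comb


def phenotype_distribution(n_loci: int) -> dict[int, float]:
    """Distribution of an additive trait controlled by n_loci diploid genes.

    Hypothetical model: each locus has a "+" and a "-" allele at frequency
    0.5, every "+" allele adds one unit, and loci are independent. The
    trait value is the number of "+" alleles among 2 * n_loci copies,
    so it follows a binomial distribution.
    """
    copies = 2 * n_loci  # diploid: two allele copies per locus
    return {k: comb(copies, k) / 2 ** copies for k in range(copies + 1)}


if __name__ == "__main__":
    for n in (1, 10):
        dist = phenotype_distribution(n)
        print(f"{n} loci -> {len(dist)} phenotypic classes")
        for value, freq in dist.items():
            print(f"  {value:2d} {'#' * round(freq * 100)}")
```

One locus gives only three phenotypic classes; ten loci give 21 closely spaced classes whose frequencies already follow a bell shape. Environmental effects, which the sketch leaves out, would blur these classes further into a continuum.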

Directionality of selection

When some component of a trait is heritable, selection alters the frequencies of the different alleles, or variants of the gene that produces the variants of the trait. Selection can be divided into three classes, on the basis of its effect on allele frequencies: directional, stabilizing, and balancing selection.[97] Directional selection occurs when an allele has a greater fitness than others, so that it increases in frequency, gaining an increasing share in the population. This process can continue until the allele is fixed and the entire population shares the fitter phenotype.[98] Far more common is stabilizing selection, also known as purifying selection, which lowers the frequency of alleles that have a deleterious effect on the phenotype, that is, alleles that produce organisms of lower fitness. This process can continue until the allele is eliminated from the population. Over time, purifying selection conserves functional genetic features, such as protein-coding genes or regulatory sequences, through selective pressure against deleterious variants.[99]

Balancing selection, by contrast, does not result in fixation, but maintains an allele at intermediate frequencies in a population. This can occur in diploid species (with pairs of chromosomes) when heterozygous individuals (with just one copy of the allele) have a higher fitness than homozygous individuals (with two copies). This is called heterozygote advantage or over-dominance, of which the best-known example is the resistance to malaria in humans heterozygous for the sickle-cell allele. Maintenance of allelic variation can also occur through disruptive or diversifying selection, which favours genotypes that depart from the average in either direction (that is, the opposite of over-dominance), and can result in a bimodal distribution of trait values. Finally, balancing selection can occur through frequency-dependent selection, where the fitness of one particular phenotype depends on the distribution of other phenotypes in the population.
The principles of game theory have been applied to understand the fitness distributions in these situations, particularly in the study of kin selection and the evolution of reciprocal altruism.[100][101]
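The contrast between directional selection and heterozygote advantage can be sketched with the textbook single-locus recurrence for a randomly mating, effectively infinite diploid population. This is a minimal illustration rather than a model of any real population: the fitness values are hypothetical, and drift, mutation and migration are ignored. With genotype fitnesses 1 - s, 1 and 1 - t for AA, Aa and aa, over-dominance is expected to settle at the intermediate frequency p* = t/(s + t).

```python
def next_freq(p: float, w_AA: float, w_Aa: float, w_aa: float) -> float:
    """Frequency of allele A after one generation of viability selection.

    Assumes random mating (Hardy-Weinberg genotype proportions before
    selection) and an infinite population, so there is no drift.
    """
    q = 1.0 - p
    mean_w = p * p * w_AA + 2 * p * q * w_Aa + q * q * w_aa
    # A alleles come from AA (two copies) and half of the Aa heterozygotes.
    return (p * p * w_AA + p * q * w_Aa) / mean_w


def iterate(p: float, fitnesses: tuple, generations: int) -> float:
    """Apply next_freq repeatedly for the given number of generations."""
    for _ in range(generations):
        p = next_freq(p, *fitnesses)
    return p


# Directional selection (hypothetical fitnesses): A is favoured, so it heads to fixation.
p_dir = iterate(0.01, (1.0, 0.95, 0.9), 2000)

# Over-dominance with hypothetical s = 0.2, t = 0.6: expected equilibrium t/(s+t) = 0.75.
s, t = 0.2, 0.6
p_bal = iterate(0.01, (1 - s, 1.0, 1 - t), 2000)

print(f"directional: p = {p_dir:.6f}")
print(f"over-dominance: p = {p_bal:.6f} (predicted {t / (s + t):.2f})")
```

Under directional selection the favoured allele approaches fixation, whereas under over-dominance the frequency converges to the same intermediate value whether it starts rare or common, which is what "maintaining an allele at intermediate frequencies" means in practice.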

Selection, genetic variation, and drift

A portion of all genetic variation is functionally neutral, producing no phenotypic effect or significant difference in fitness. Motoo Kimura's neutral theory of molecular evolution by genetic drift proposes that this variation accounts for a large fraction of observed genetic diversity.[102] Neutral events can radically reduce genetic variation through population bottlenecks,[103] which among other things can cause the founder effect in initially small new populations.[104] When genetic variation does not result in differences in fitness, selection cannot directly affect the frequency of such variation. As a result, the genetic variation at those sites is higher than at sites where variation does influence fitness.[97] However, after a period with no new mutations, the genetic variation at these sites is eliminated due to genetic drift. Natural selection reduces genetic variation by eliminating maladapted individuals, and consequently the mutations that caused the maladaptation. At the same time, new mutations occur, resulting in a mutation–selection balance. The exact outcome of the two processes depends both on the rate at which new mutations occur and on the strength of the natural selection, which is a function of how unfavourable the mutation proves to be.[105]

Genetic linkage occurs when two loci lie close together on a chromosome. During the formation of gametes, recombination reshuffles the alleles. The chance that such a reshuffle occurs between two loci increases with the distance between them, so closely linked alleles tend to be inherited together. Selective sweeps occur when an allele becomes more common in a population as a result of positive selection. As the prevalence of one allele increases, closely linked alleles can also become more common by "genetic hitchhiking", whether they are neutral or even slightly deleterious.
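The mutation–selection balance can be made concrete with a minimal deterministic sketch. It assumes a haploid population with no drift, one-way mutation from a favoured allele A to a deleterious allele a at a hypothetical rate mu per generation, and a selection coefficient s against a (fitness 1 - s versus 1). In this toy model the equilibrium frequency of a works out to exactly mu/s (for mu < s), so faster mutation or weaker selection leaves more deleterious variation standing.

```python
def mutation_selection_step(q: float, mu: float, s: float) -> float:
    """One generation of a haploid model: selection, then mutation A -> a.

    q  : frequency of the deleterious allele a
    mu : hypothetical per-generation mutation rate from A to a
    s  : selection coefficient against a (fitness 1 - s versus 1)
    """
    # Selection: carriers of a leave offspring in proportion to 1 - s.
    q_sel = q * (1 - s) / (1 - s * q)
    # Mutation: a fraction mu of the remaining A alleles turn into a.
    return q_sel + mu * (1 - q_sel)


def equilibrium(mu: float, s: float, generations: int = 5000, q0: float = 0.0) -> float:
    """Iterate from q0 until (approximately) the mutation-selection balance."""
    q = q0
    for _ in range(generations):
        q = mutation_selection_step(q, mu, s)
    return q


mu, s = 1e-5, 0.01  # illustrative values only
q_star = equilibrium(mu, s)
print(f"simulated equilibrium {q_star:.6g}, predicted mu/s = {mu / s:.6g}")
```

Doubling mu doubles the equilibrium frequency, and doubling s halves it. For a fully recessive deleterious allele in a diploid population the corresponding approximation is q ≈ sqrt(mu/s) instead, because selection only sees the allele in homozygotes.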
A strong selective sweep results in a region of the genome where the positively selected haplotype (the allele and its neighbours) is in essence the only one that exists in the population. Selective sweeps can be detected by measuring linkage disequilibrium, or whether a given haplotype is overrepresented in the population. Since a selective sweep also results in selection of neighbouring alleles, the presence of a block of strong linkage disequilibrium might indicate a 'recent' selective sweep near the centre of the block.[106]
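The link between sweeps and linkage disequilibrium can be illustrated with a minimal sketch for two biallelic loci. It assumes haplotype frequencies are already known (in practice they must be estimated from data) and uses the standard measures D = p_AB - p_A·p_B and r² = D² / (p_A(1 - p_A)·p_B(1 - p_B)). It also applies the textbook result that, under random mating and without selection, D shrinks by a factor (1 - c) per generation, where c is the recombination fraction between the loci. The haplotype frequencies and values of c below are hypothetical.

```python
def linkage_disequilibrium(hap_freqs: dict) -> tuple:
    """Return (D, r^2) for two biallelic loci.

    hap_freqs maps the haplotypes 'AB', 'Ab', 'aB', 'ab' to frequencies
    that sum to 1. Both loci must be polymorphic, or r^2 is undefined.
    """
    p_A = hap_freqs["AB"] + hap_freqs["Ab"]
    p_B = hap_freqs["AB"] + hap_freqs["aB"]
    D = hap_freqs["AB"] - p_A * p_B  # excess of AB over random association
    r2 = D * D / (p_A * (1 - p_A) * p_B * (1 - p_B))
    return D, r2


def decay(D0: float, c: float, generations: int) -> float:
    """Expected D after recombination alone, with recombination fraction c."""
    return D0 * (1 - c) ** generations


# Right after a hypothetical sweep: only two haplotypes survive, so the loci are in complete LD.
swept = {"AB": 0.5, "Ab": 0.0, "aB": 0.0, "ab": 0.5}
# Alleles associated at random: no LD.
random_assoc = {"AB": 0.25, "Ab": 0.25, "aB": 0.25, "ab": 0.25}

print(linkage_disequilibrium(swept))
print(linkage_disequilibrium(random_assoc))
print(decay(0.25, 0.001, 100), decay(0.25, 0.1, 100))  # tight vs loose linkage
```

Because D decays faster when c is larger, association with the swept allele erodes first at distant sites and persists at tightly linked ones. This is why a block of strong linkage disequilibrium around a site can hint at a recent sweep. The sign of D depends on how the alleles are labelled, so r² is often the more convenient summary.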

Background selection is the opposite of a selective sweep. If a specific site experiences strong and persistent purifying selection, linked variation tends to be weeded out along with it, producing a region in the genome of low overall variability. Because background selection is a result of deleterious new mutations, which can occur randomly in any haplotype, it does not produce clear blocks of linkage disequilibrium, although with low recombination it can still lead to slightly negative linkage disequilibrium overall.[107]

Impact

Darwin's ideas, along with those of Adam Smith and Karl Marx, had a profound influence on 19th-century thought. Darwin's radical claim that "elaborately constructed forms, so different from each other, and dependent on each other in so complex a manner" evolved from the simplest forms of life by a few simple principles[108] inspired some of his most ardent supporters and provoked the strongest opposition. Natural selection had the power, according to Stephen Jay Gould, to "dethrone some of the deepest and most traditional comforts of Western thought", such as the belief that humans have a special place in the world.[109]

In the words of the philosopher Daniel Dennett, "Darwin's dangerous idea" of evolution by natural selection is a "universal acid," which cannot be kept restricted to any vessel or container, as it soon leaks out, working its way into ever-wider surroundings.[110] Accordingly, in recent decades the concept of natural selection has spread from evolutionary biology to other disciplines, including evolutionary computation, quantum Darwinism, evolutionary economics, evolutionary epistemology, evolutionary psychology, and cosmological natural selection. This unlimited applicability has been called universal Darwinism.[111]

Origin of life

How life originated from inorganic matter remains an unresolved problem in biology. One prominent hypothesis is that life first appeared in the form of short self-replicating RNA polymers.[112] On this view, life may have come into existence when RNA chains first experienced the basic conditions, as conceived by Charles Darwin, for natural selection to operate. These conditions are: heritability, variation of type, and competition for limited resources. The fitness of an early RNA replicator would likely have been a function of adaptive capacities that were intrinsic (i.e., determined by the nucleotide sequence) and the availability of resources.[113][114] The three primary adaptive capacities could logically have been: (1) the capacity to replicate with moderate fidelity (giving rise to both heritability and variation of type), (2) the capacity to avoid decay, and (3) the capacity to acquire and process resources.[113][114] These capacities would have been determined initially by the folded configurations (including those configurations with ribozyme activity) of the RNA replicators that, in turn, would have been encoded in their individual nucleotide sequences.[115]

Cell and molecular biology

In 1881, the embryologist Wilhelm Roux published Der Kampf der Theile im Organismus (The Struggle of Parts in the Organism) in which he suggested that the development of an organism results from a Darwinian competition between the parts of the embryo, occurring at all levels, from molecules to organs.[116] In recent years, a modern version of this theory has been proposed by Jean-Jacques Kupiec. According to this cellular Darwinism, random variation at the molecular level generates diversity in cell types whereas cell interactions impose a characteristic order on the developing embryo.[117]

Social and psychological theory

The social implications of the theory of evolution by natural selection also became the source of continuing controversy. Friedrich Engels, a German political philosopher and co-originator of the ideology of communism, wrote in 1872 that "Darwin did not know what a bitter satire he wrote on mankind, and especially on his countrymen, when he showed that free competition, the struggle for existence, which the economists celebrate as the highest historical achievement, is the normal state of the animal kingdom."[118] The interpretation of natural selection by Herbert Spencer and the eugenics advocate Francis Galton as necessarily progressive, leading to supposed advances in intelligence and civilisation, became a justification for colonialism, eugenics, and social Darwinism. For example, in 1940, Konrad Lorenz, in writings that he subsequently disowned, used the theory as a justification for policies of the Nazi state. He wrote: "... selection for toughness, heroism, and social utility ... must be accomplished by some human institution, if mankind, in default of selective factors, is not to be ruined by domestication-induced degeneracy. The racial idea as the basis of our state has already accomplished much in this respect."[119] Others have developed ideas that human societies and culture evolve by mechanisms analogous to those that apply to evolution of species.[120]

More recently, work among anthropologists and psychologists has led to the development of sociobiology and later of evolutionary psychology, a field that attempts to explain features of human psychology in terms of adaptation to the ancestral environment.
The most prominent example of evolutionary psychology, notably advanced in the early work of Noam Chomsky and later by Steven Pinker, is the hypothesis that the human brain has adapted to acquire the grammatical rules of natural language.[121] Other aspects of human behaviour and social structures, from specific cultural norms such as incest avoidance to broader patterns such as gender roles, have been hypothesised to have similar origins as adaptations to the early environment in which modern humans evolved. By analogy to the action of natural selection on genes, the concept of memes—"units of cultural transmission," or culture's equivalents of genes undergoing selection and recombination—has arisen, first described in this form by Richard Dawkins in 1976[122] and subsequently expanded upon by philosophers such as Daniel Dennett as explanations for complex cultural activities, including human consciousness.[123]

Information and systems theory

In 1922, Alfred J. Lotka proposed that natural selection might be understood as a physical principle that could be described in terms of the use of energy by a system,[124][125] a concept later developed by Howard T. Odum as the maximum power principle in thermodynamics, whereby evolutionary systems with selective advantage maximise the rate of useful energy transformation.[126]

The principles of natural selection have inspired a variety of computational techniques, such as "soft" artificial life, that simulate selective processes and can be highly efficient in 'adapting' entities to an environment defined by a specified fitness function.[127] For example, genetic algorithms, a class of heuristic optimisation algorithms pioneered by John Henry Holland in the 1970s and expanded upon by David E. Goldberg,[128] search for optimal or near-optimal solutions by simulating reproduction and mutation in a population of candidate solutions drawn from an initial probability distribution.[129] Such algorithms are particularly useful when applied to problems whose energy landscape is very rough or has many local minima.[130]
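A minimal genetic algorithm illustrates the idea. This is a sketch under stated assumptions, not Holland's or Goldberg's specific formulation. The fitness function is the toy "OneMax" problem (count the 1 bits in a bit string). Candidates start from a uniform random distribution over bit strings. Parents are chosen by tournament selection, a common choice swapped in here for illustration, then recombined by one-point crossover and mutated bit by bit. All function names and parameter values are hypothetical.

```python
import random


def onemax(genome: list) -> int:
    """Toy fitness function (an assumption for illustration): number of 1 bits."""
    return sum(genome)


def tournament(pop: list, fitness, k: int, rng: random.Random) -> list:
    """Select a parent: the fittest of k randomly chosen individuals."""
    return max(rng.sample(pop, k), key=fitness)


def crossover(a: list, b: list, rng: random.Random) -> list:
    """One-point crossover: prefix from one parent, suffix from the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(genome: list, rate: float, rng: random.Random) -> list:
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def run_ga(length=20, pop_size=30, generations=100, seed=0, fitness=onemax):
    """Evolve bit strings; return the best genome and best fitness per generation."""
    rng = random.Random(seed)
    # Initial population drawn from a uniform distribution over bit strings.
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        elite = max(pop, key=fitness)
        history.append(fitness(elite))
        children = [elite]  # elitism: the current best survives unchanged
        while len(children) < pop_size:
            p1 = tournament(pop, fitness, 3, rng)
            p2 = tournament(pop, fitness, 3, rng)
            children.append(mutate(crossover(p1, p2, rng), 1.0 / length, rng))
        pop = children
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = run_ga()
print("best fitness by generation:", history[::10], "final:", history[-1])
```

Elitism guarantees that the best fitness never decreases from one generation to the next. Tournament size and mutation rate trade selection pressure against exploration, a balance that matters most on the rough, multi-peaked landscapes where such algorithms are typically applied.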