Strategies for Engineered Negligible Senescence (SENS) is the term coined by British biogerontologist Aubrey de Grey for the diverse range of regenerative medical therapies, either planned or currently in development, for the periodic repair of all age-related damage to human tissue with the ultimate purpose of maintaining a state of negligible senescence in the patient, thereby postponing age-associated disease for as long as the therapies are reapplied.

The term "negligible senescence" was first used in the early 1990s by Professor Caleb Finch to describe organisms such as lobsters and hydras, which do not show symptoms of aging. The term "engineered negligible senescence" first appeared in print in Aubrey de Grey's 1999 book The Mitochondrial Free Radical Theory of Aging,[3] and was later prefaced with the term "strategies" in the article Time to Talk SENS: Critiquing the Immutability of Human Aging.[4] De Grey called SENS a "goal-directed rather than curiosity-driven"[5] approach to the science of aging, and "an effort to expand regenerative medicine into the territory of aging".[6] To this end, SENS identifies seven categories of aging "damage" and a specific regenerative medical proposal for treating each.

While many biogerontologists find it "worthy of discussion"[7][8] and SENS conferences feature important research in the field,[9][10] some contend that the ultimate goals of de Grey's programme are too speculative given the current state of technology, referring to it as "fantasy rather than science".[11][12]

Framework

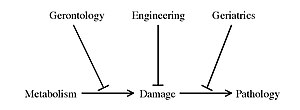

The arrows with flat heads are a notation meaning "inhibits," used in the literature of gene expression and gene regulation.

The ultimate objective of SENS is the eventual elimination of age-related diseases and infirmity by repeatedly reducing the state of senescence in the organism. The SENS project consists in implementing a series of periodic medical interventions designed to repair, prevent or render irrelevant all the types of molecular and cellular damage that cause age-related pathology and degeneration, in order to avoid debilitation and death from age-related causes.[2]

De Grey defines aging as "the set of accumulated side effects from metabolism that eventually kills us", and, more specifically, as follows: "a collection of cumulative changes to the molecular and cellular structure of an adult organism, which result from essential metabolic processes, but which also, once they progress far enough, increasingly disrupt metabolism, resulting in pathology and death."[13] He adds: "geriatrics is the attempt to stop damage from causing pathology; traditional gerontology is the attempt to stop metabolism from causing damage; and the SENS (engineering) approach is to eliminate the damage periodically, so keeping its abundance below the level that causes any pathology." The SENS approach to biomedical gerontology is thus distinctive because of its emphasis on tissue rejuvenation rather than on slowing the aging process.
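The idea of periodic damage removal can be illustrated with a toy numerical model. The sketch below is only an illustration and is not taken from de Grey's work: the constant accrual rate, the repair interval, the fraction of damage removed per treatment, and the pathology threshold are all hypothetical values chosen to show the qualitative behaviour.

```python
def simulate_damage(years, accrual_per_year, repair_interval=None, repair_fraction=0.0):
    """Return the damage level at the end of each year in a toy model.

    Assumption: damage grows by a constant amount each year. Every
    `repair_interval` years a treatment removes `repair_fraction` of it.
    """
    damage = 0.0
    history = []
    for year in range(1, years + 1):
        damage += accrual_per_year
        if repair_interval and year % repair_interval == 0:
            damage *= 1.0 - repair_fraction
        history.append(damage)
    return history


# Hypothetical level at which accumulated damage starts to cause pathology.
PATHOLOGY_THRESHOLD = 60.0

untreated = simulate_damage(years=100, accrual_per_year=1.0)
treated = simulate_damage(years=100, accrual_per_year=1.0,
                          repair_interval=10, repair_fraction=0.5)

print("untreated crosses threshold:", max(untreated) > PATHOLOGY_THRESHOLD)
print("treated crosses threshold:", max(treated) > PATHOLOGY_THRESHOLD)
```

Under these assumed numbers, untreated damage rises steadily past the threshold, while the treated curve settles into a sawtooth that stays well below it, even though each treatment is only partial.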

By enumerating the various differences between young and old tissue identified by the science of biogerontology, a 'damage' report was drawn up, which in turn formed the basis of the SENS strategy. The results fell into seven main categories of 'damage', seven alterations whose reversal would constitute negligible senescence:

- cell loss or atrophy (without replacement),[4][13][14]

- oncogenic nuclear mutations and epimutations,[15][16][17]

- cell senescence (death-resistant cells),[18][19]

- mitochondrial mutations,[20][21]

- intracellular junk or junk inside cells (lysosomal aggregates),[22][23]

- extracellular junk or junk outside cells (extracellular aggregates),[18][19]

- random extracellular cross-linking.[18][19]

Types of aging damage and treatment schemes

Nuclear mutations/epimutations—OncoSENS

These are changes to the nuclear DNA (nDNA), or to proteins which bind to the nDNA. Certain mutations can lead to cancer. These changes would need to be corrected in order to prevent or cure cancer. SENS focuses on a strategy called "whole-body interdiction of lengthening telomeres" (WILT), which would be made possible by periodic regenerative medicine treatments.

Mitochondrial mutations—MitoSENS

Mitochondria are components in our cells that are important for energy production. Because of the highly oxidative environment in mitochondria and their lack of sophisticated repair systems, mitochondrial mutations are believed to be a major cause of progressive cellular degeneration. This would be corrected by allotopic expression, that is, copying the mitochondrial DNA into the cell nucleus, where it is better protected. De Grey argues that experimental evidence demonstrates that the operation is feasible; however, a 2003 study showed that some mitochondrial proteins are too hydrophobic to survive the transport from the cytoplasm to the mitochondria.[24]

Intracellular junk—LysoSENS

Our cells are constantly breaking down proteins and other molecules that are no longer useful or which can be harmful. Those molecules which cannot be digested accumulate as junk inside our cells, which is detected in the form of lipofuscin granules. Atherosclerosis, macular degeneration, liver spots on the skin and all kinds of neurodegenerative diseases (such as Alzheimer's disease) are associated with this problem. Junk inside cells might be removed by adding new enzymes to the cell's natural digestion organ, the lysosome. These enzymes would be taken from bacteria, molds and other organisms that are known to completely digest animal bodies.

Extracellular junk—AmyloSENS

Harmful junk protein can accumulate outside of our cells. Junk here means useless material that accumulates in the body but cannot be digested or removed by its processes, such as the amyloid plaques characteristic of Alzheimer's disease and other amyloidoses. Junk outside cells might be removed by enhanced phagocytosis (the normal process used by the immune system), and small drugs able to break chemical beta-bonds. The large junk in this class can be removed surgically.

Cell loss and atrophy—RepleniSENS

Some of the cells in our bodies cannot be replaced, or can be replaced only very slowly, more slowly than they die. This decrease in cell number affects some of the most important tissues of the body. Muscle cells are lost in skeletal muscles and the heart, causing them to become frailer with age. Loss of neurons in the substantia nigra causes Parkinson's disease, while loss of immune cells impairs the immune system. This can be partly corrected by therapies involving exercise and growth factors, but stem cell therapy, regenerative medicine and tissue engineering are almost certainly required for any more than just partial replacement of lost cells.

Cell senescence—ApoptoSENS

Senescence is a phenomenon where cells are no longer able to divide, but also do not die and let others divide. They may also do other harmful things, like secreting proteins. Degeneration of joints, immune senescence, accumulation of visceral fat and type 2 diabetes are caused by this. Cells sometimes enter a state of resistance to the signals that, as part of a process called apoptosis, instruct cells to destroy themselves. Cells in this state could be eliminated by forcing them to apoptose (via suicide genes, vaccines, or recently discovered senolytic agents), and healthy cells would multiply to replace them.

Extracellular crosslinks—GlycoSENS

Cells are held together by special linking proteins. When too many cross-links form between cells in a tissue, the tissue can lose its elasticity and cause problems including arteriosclerosis, presbyopia and weakened skin texture. These are chemical bonds between structures that are part of the body, but not within a cell. In senescent people many of these become brittle and weak. SENS proposes to further develop small-molecule drugs and enzymes to break links caused by sugar-bonding, known as advanced glycation endproducts, and other common forms of chemical linking.

Scientific controversy

While some fields mentioned as branches of SENS are broadly supported by the medical research community, e.g., stem cell research (RepleniSENS), anti-Alzheimer's research (AmyloSENS) and oncogenomics (OncoSENS), the SENS programme as a whole has been a highly controversial proposal. Many critics argue that the SENS agenda is fanciful and that the highly complicated biomedical phenomena involved in the aging process contain too many unknowns for SENS to be fully implementable in the foreseeable future. Cancer may well deserve special attention as an aging-associated disease (OncoSENS), but the SENS claim that nuclear DNA damage only matters for aging because of cancer has been challenged in the literature[25] as well as by material in the article DNA damage theory of aging.

In November 2005, 28 biogerontologists published a statement of criticism in EMBO Reports, "Science fact and the SENS agenda: what can we reasonably expect from ageing research?,"[26] arguing "each one of the specific proposals that comprise the SENS agenda is, at our present stage of ignorance, exceptionally optimistic,"[26] and that some of the specific proposals "will take decades of hard work [to be medically integrated], if [they] ever prove to be useful."[26] The researchers argue that while there is "a rationale for thinking that we might eventually learn how to postpone human illnesses to an important degree,"[26] increased basic research, rather than the goal-directed approach of SENS, is presently the scientifically appropriate goal. This article was written in response to a July 2005 EMBO Reports article previously published by de Grey,[27] and a response from de Grey was published in the same November issue.[28] De Grey summarizes these events in "The biogerontology research community's evolving view of SENS," published on the Methuselah Foundation website.[29]

In 2012, Colin Blakemore criticised Aubrey de Grey, but not SENS specifically, in a debate hosted at the Oxford University Scientific Society.[citation needed]

More recently, biogerontologist Marios Kyriazis has sharply criticised the clinical applicability of SENS,[30][31] claiming that such therapies, even if developed in the laboratory, would be practically unusable by the general public.[32] De Grey responded to one such criticism.[33]

Technology Review controversy

In February 2005, Technology Review, which is owned by the Massachusetts Institute of Technology, published an article by Sherwin Nuland, a Clinical Professor of Surgery at Yale University and the author of "How We Die",[34] that drew a skeptical portrait of SENS. At the time, de Grey was a computer associate in the Flybase Facility of the Department of Genetics at the University of Cambridge. The April 2005 issue of Technology Review contained a reply by Aubrey de Grey[35] and numerous comments from readers.[36]

In June 2005, David Gobel, CEO and co-founder of the Methuselah Foundation, offered Technology Review $20,000 to fund a prize competition to publicly clarify the viability of the SENS approach. In July 2005, Jason Pontin, the magazine's editor, announced a $20,000 prize, funded 50/50 by the Methuselah Foundation and MIT Technology Review, open to any molecular biologist with a record of publication in biogerontology who could prove that the alleged benefits of SENS were "so wrong that it is unworthy of learned debate."[37] Technology Review received five submissions to its Challenge. In March 2006, Technology Review announced that it had chosen a panel of judges for the Challenge: Rodney Brooks, Anita Goel, Nathan Myhrvold, Vikram Sheel Kumar, and Craig Venter.[38] Three of the five submissions met the terms of the prize competition. They were published by Technology Review on June 9, 2006. Accompanying the three submissions were rebuttals by de Grey, and counter-responses to de Grey's rebuttals. On July 11, 2006, Technology Review published the results of the SENS Challenge.[7][39]

In the end, no one won the $20,000 prize. The judges felt that no submission met the criterion of the challenge and discredited SENS, although they unanimously agreed that one submission, by Preston Estep and his colleagues, was the most eloquent. Craig Venter succinctly expressed the prevailing opinion: "Estep et al. ... have not demonstrated that SENS is unworthy of discussion, but the proponents of SENS have not made a compelling case for it."[7] Summarizing the judges' deliberations, Pontin wrote that SENS is "highly speculative" and that many of its proposals could not be reproduced with the scientific technology of that period.[clarification needed] Myhrvold described SENS as belonging to a kind of "antechamber of science", where ideas wait until technology and scientific knowledge advance to the point where they can be tested.[7][8] In a letter of dissent dated July 11, 2006 in Technology Review, Estep et al. criticized the ruling of the judges.

Social and economic implications

Of the roughly 150,000 people who die each day across the globe, about two thirds (100,000 per day) die of age-related causes.[40] In industrialized nations, the proportion is much higher, reaching 90%.[40]

De Grey and other scientists in the general field have argued that the costs of a rapidly growing aging population will increase to the degree that the costs of an accelerated pace of aging research are easy to justify in terms of future costs avoided. Olshansky et al. 2006 argue, for example, that the total economic cost of Alzheimer's disease in the US alone will increase from $80–100 billion today to more than $1 trillion in 2050:[41]

"Consider what is likely to happen if we don't [invest further in aging research]. Take, for instance, the impact of just one age-related disorder, Alzheimer disease (AD). For no other reason than the inevitable shifting demographics, the number of Americans stricken with AD will rise from 4 million today to as many as 16 million by midcentury. This means that more people in the United States will have AD by 2050 than the entire current population of the Netherlands. Globally, AD prevalence is expected to rise to 45 million by 2050, with three of every four patients with AD living in a developing nation. The US economic toll is currently $80–$100 billion, but by 2050 more than $1 trillion will be spent annually on AD and related dementias. The impact of this single disease will be catastrophic, and this is just one example."[41]

SENS meetings

There have been four SENS roundtables and six SENS conferences.[42][43] The first SENS roundtable was held in Oakland, California in October 2000,[44] and the last SENS roundtable was held in Bethesda, Maryland in July 2004.[45] On March 30–31, 2007, a North American SENS symposium was held in Edmonton, Alberta, Canada as the Edmonton Aging Symposium.[46][47] Another SENS-related conference ("Understanding Aging") was held at UCLA in Los Angeles, California on June 27–29, 2008.[48]

Six SENS conferences have been held at Queens' College, Cambridge in England. All the conferences were organized by de Grey and all featured world-class researchers in the field of biogerontology.

- The first SENS conference was held in September 2003 as the 10th Congress of the International Association of Biomedical Gerontology[49] with the proceedings published in the Annals of the New York Academy of Sciences.[50]

- The second SENS conference was held in September 2005 and was simply called Strategies for Engineered Negligible Senescence (SENS), Second Conference[51] with the proceedings published in Rejuvenation Research.

- The third SENS conference was held in September 2007.[52]

- The fourth SENS conference was held September 3–7, 2009.

- The fifth SENS conference was held August 31 – September 4, 2011. Like the first four, it took place at Queens' College, Cambridge in England and was organized by de Grey.[53][54] Videos of the presentations are available.

- The sixth SENS conference (SENS6) was held September 3–7, 2013.

SENS Research Foundation

The SENS Research Foundation is a non-profit organization based in California, United States, co-founded by Michael Kope, Aubrey de Grey, Jeff Hall, Sarah Marr and Kevin Perrott. Its activities include SENS-based research programs and public relations work for the acceptance of and interest in related research.

Before March 2009, the SENS research programme was mainly pursued by the Methuselah Foundation, co-founded by Aubrey de Grey and David Gobel. The Methuselah Foundation is most notable for establishing the Methuselah Mouse Prize, a monetary prize awarded to researchers who extend the lifespan of mice to unprecedented lengths.[55]

As of 2013, the SENS Research Foundation has a research budget of approximately $4 million annually. Half of it is funded by a personal contribution of $13 million from Aubrey de Grey's own wealth,[56] and the other half comes from external donors. The largest external donor is Peter Thiel; another Internet entrepreneur, Jason Hope,[57] has recently begun to contribute comparable sums.