The Moon's cosmic ray shadow, as seen in secondary muons generated by cosmic rays in the atmosphere and detected 700 meters below ground at the Soudan II detector

| Property | Value |
|---|---|
| Composition | Elementary particle |
| Statistics | Fermionic |
| Generation | Second |
| Interactions | Gravity, Electromagnetic, Weak |
| Symbol | μ− |
| Antiparticle | Antimuon (μ+) |
| Discovered | Carl D. Anderson, Seth Neddermeyer (1936) |
| Mass | 105.6583745(24) MeV/c²[1] |
| Mean lifetime | 2.1969811(22)×10⁻⁶ s[2][3] |
| Decays into | e−, ν̄e, νμ[3] (most common) |
| Electric charge | −1 e |
| Color charge | None |
| Spin | 1/2 |
| Weak isospin | LH: −1/2, RH: 0 |
| Weak hypercharge | LH: −1, RH: −2 |

The muon (/ˈmjuːɒn/; from the Greek letter mu (μ) used to represent it) is an elementary particle similar to the electron, with an electric charge of −1 e and a spin of 1/2, but with a much greater mass. It is classified as a lepton. As is the case with other leptons, the muon is not believed to have any sub-structure—that is, it is not thought to be composed of any simpler particles.

The muon is an unstable subatomic particle with a mean lifetime of 2.2 μs, much longer than many other subatomic particles. As with the decay of the non-elementary neutron (with a lifetime around 15 minutes), muon decay is slow (by subatomic standards) because the decay is mediated by the weak interaction exclusively (rather than the more powerful strong interaction or electromagnetic interaction), and because the mass difference between the muon and the set of its decay products is small, providing few kinetic degrees of freedom for decay. Muon decay almost always produces at least three particles, which must include an electron of the same charge as the muon and two neutrinos of different types.

Like all elementary particles, the muon has a corresponding antiparticle of opposite charge (+1 e) but equal mass and spin: the antimuon (also called a positive muon). Muons are denoted by μ− and antimuons by μ+. Muons were previously called mu mesons, but are not classified as mesons by modern particle physicists (see § History), and that name is no longer used by the physics community.

Muons have a mass of 105.7 MeV/c², which is about 207 times that of the electron. Due to their greater mass, muons are not as sharply accelerated when they encounter electromagnetic fields, and do not emit as much bremsstrahlung (deceleration radiation). This allows muons of a given energy to penetrate far more deeply into matter than electrons, since the deceleration of electrons and muons is primarily due to energy loss by the bremsstrahlung mechanism. As an example, so-called "secondary muons", generated by cosmic rays hitting the atmosphere, can penetrate to the Earth's surface, and even into deep mines.
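The mass ratio quoted above can be checked with a few lines of arithmetic. The sketch below takes the muon mass from the infobox; the electron mass (0.51099895 MeV/c²) is an assumed reference value that does not appear in this article, and the 1/m² scaling of bremsstrahlung losses is a textbook approximation used only to illustrate the size of the effect.

```python
# Muon mass as given in the infobox of this article (MeV/c^2).
M_MU_MEV = 105.6583745
# Electron mass: assumed reference value, not stated in the article (MeV/c^2).
M_E_MEV = 0.51099895

# Ratio of muon to electron mass ("about 207" in the text).
mass_ratio = M_MU_MEV / M_E_MEV

# Assumption: radiative (bremsstrahlung) energy loss scales roughly as 1/m^2,
# so a muon radiates less than an electron by about the ratio squared.
brems_suppression = mass_ratio ** 2

print(f"m_mu / m_e = {mass_ratio:.1f}")
print(f"approximate bremsstrahlung suppression = {brems_suppression:.0f}")
```

Under that scaling assumption, a muon loses energy to bremsstrahlung tens of thousands of times more slowly than an electron in the same conditions, which is consistent with the deep penetration described above.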

Because muons have a very large mass and energy compared with the decay energy of radioactivity, they are never produced by radioactive decay. They are, however, produced in copious amounts in high-energy interactions in normal matter, in certain particle accelerator experiments with hadrons, or naturally in cosmic ray interactions with matter. These interactions usually produce pi mesons initially, which most often decay to muons.

As with the case of the other charged leptons, the muon has an associated muon neutrino, denoted by νμ, which is not the same particle as the electron neutrino, and does not participate in the same nuclear reactions.

History

Muons were discovered by Carl D. Anderson and Seth Neddermeyer at Caltech in 1936, while studying cosmic radiation. Anderson noticed particles that curved differently from electrons and other known particles when passed through a magnetic field. They were negatively charged and, for particles of the same velocity, curved less sharply than electrons but more sharply than protons. It was assumed that the magnitude of their negative electric charge was equal to that of the electron, and so to account for the difference in curvature, it was supposed that their mass was greater than that of an electron but smaller than that of a proton. Thus Anderson initially called the new particle a mesotron, adopting the prefix meso- from the Greek word for "mid-". The existence of the muon was confirmed in 1937 by J. C. Street and E. C. Stevenson's cloud chamber experiment.[4]

A particle with a mass in the meson range had been predicted before the discovery of any mesons, by theorist Hideki Yukawa:[5]

It seems natural to modify the theory of Heisenberg and Fermi in the following way. The transition of a heavy particle from neutron state to proton state is not always accompanied by the emission of light particles. The transition is sometimes taken up by another heavy particle.

Because of its mass, the mu meson was initially thought to be Yukawa's particle, but it later proved to have the wrong properties. Yukawa's predicted particle, the pi meson, was finally identified in 1947 (again from cosmic ray interactions), and shown to differ from the earlier-discovered mu meson by having the correct properties to be a particle which mediated the nuclear force.

With two particles now known with the intermediate mass, the more general term meson was adopted to refer to any such particle within the correct mass range between electrons and nucleons. Further, in order to differentiate between the two different types of mesons after the second meson was discovered, the initial mesotron particle was renamed the mu meson (the Greek letter μ (mu) corresponds to m), and the new 1947 meson (Yukawa's particle) was named the pi meson.

As more types of mesons were discovered in accelerator experiments later, it was eventually found that the mu meson significantly differed not only from the pi meson (of about the same mass), but also from all other types of mesons. The difference, in part, was that mu mesons did not interact with the nuclear force, as pi mesons did (and were required to do, in Yukawa's theory). Newer mesons also showed evidence of behaving like the pi meson in nuclear interactions, but not like the mu meson. Also, the mu meson's decay products included both a neutrino and an antineutrino, rather than just one or the other, as was observed in the decay of other charged mesons.

In the eventual Standard Model of particle physics codified in the 1970s, all mesons other than the mu meson were understood to be hadrons (that is, particles made of quarks) and thus subject to the nuclear force. In the quark model, mesons were no longer defined by mass (some had been discovered that were very massive, more so than nucleons), but instead as particles composed of exactly two quarks (a quark and an antiquark), unlike the baryons, which are defined as particles composed of three quarks (protons and neutrons being the lightest baryons). Mu mesons, however, had shown themselves to be fundamental particles (leptons) like electrons, with no quark structure. Thus, mu mesons were not mesons at all in the new sense of the term used with the quark model of particle structure.

With this change in definition, the term mu meson was abandoned, and replaced whenever possible with the modern term muon, making the term mu meson only historical. In the new quark model, other types of mesons sometimes continued to be referred to in shorter terminology (e.g., pion for pi meson), but in the case of the muon, it retained the shorter name and was never again properly referred to by older "mu meson" terminology.

The eventual recognition of the "mu meson" muon as a simple "heavy electron" with no role at all in the nuclear interaction seemed so incongruous and surprising at the time that Nobel laureate I. I. Rabi famously quipped, "Who ordered that?"[6]

In the Rossi–Hall experiment (1941), muons were used to observe the time dilation (or alternatively, length contraction) predicted by special relativity, for the first time.

Muon sources

Muons arriving on the Earth's surface are created indirectly as decay products of collisions of cosmic rays with particles of the Earth's atmosphere.[7]

About 10,000 muons reach every square meter of the Earth's surface each minute; these charged particles form as by-products of cosmic rays colliding with molecules in the upper atmosphere. Traveling at relativistic speeds, muons can penetrate tens of meters into rocks and other matter before attenuating as a result of absorption or deflection by other atoms.[8]

When a cosmic ray proton impacts atomic nuclei in the upper atmosphere, pions are created. These decay within a relatively short distance (meters) into muons (their preferred decay product) and muon neutrinos. The muons from these high energy cosmic rays generally continue in about the same direction as the original proton, at a velocity near the speed of light. Although their lifetime without relativistic effects would allow a half-survival distance of only about 456 m (2.197 µs × ln(2) × 0.9997 × c) at most (as seen from Earth), the time dilation effect of special relativity (from the viewpoint of the Earth) allows cosmic ray secondary muons to survive the flight to the Earth's surface, since in the Earth frame the muons have a longer half-life due to their velocity. From the viewpoint (inertial frame) of the muon, on the other hand, it is the length contraction effect of special relativity which allows this penetration: in the muon frame its lifetime is unaffected, but length contraction causes distances through the atmosphere and Earth to be far shorter than these distances in the Earth rest frame. Both effects are equally valid ways of explaining the fast muon's unusual survival over distances.
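The half-survival distance quoted above, and the effect of time dilation on it, can be reproduced numerically. The following is a minimal sketch that uses the mean lifetime from this article and the speed 0.9997 c from the text; the function name and the choice to expose time dilation as a flag are illustrative assumptions.

```python
import math

C = 299_792_458.0          # speed of light in m/s
TAU_MU = 2.1969811e-6      # muon mean lifetime in s (value from this article)
BETA = 0.9997              # muon speed as a fraction of c (value from the text)


def half_survival_distance(beta: float, dilated: bool, tau: float = TAU_MU) -> float:
    """Distance (Earth frame, in m) after which half of the muons have decayed."""
    # Lorentz factor; set to 1 to mimic a world without relativistic time dilation.
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2) if dilated else 1.0
    half_life = gamma * tau * math.log(2)
    return half_life * beta * C


d_classical = half_survival_distance(BETA, dilated=False)
d_relativistic = half_survival_distance(BETA, dilated=True)

print(f"without time dilation: {d_classical:.0f} m")
print(f"with time dilation:    {d_relativistic / 1000:.1f} km")
```

At this speed the Lorentz factor is about 41, so the half-survival distance grows from a few hundred meters to the order of the height at which the muons are produced. Faster muons survive correspondingly farther.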

Since muons are unusually penetrative of ordinary matter, like neutrinos, they are also detectable deep underground (700 meters at the Soudan 2 detector) and underwater, where they form a major part of the natural background ionizing radiation. Like cosmic rays, as noted, this secondary muon radiation is also directional.

The same nuclear reaction described above (i.e. hadron-hadron impacts to produce pion beams, which then quickly decay to muon beams over short distances) is used by particle physicists to produce muon beams, such as the beam used for the muon g − 2 experiment.[9]

Muon decay

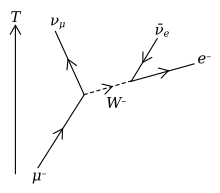

The most common decay of the muon

Muons are unstable elementary particles and are heavier than electrons and neutrinos but lighter than all other matter particles. They decay via the weak interaction. Because leptonic family numbers are conserved in the absence of an extremely unlikely immediate neutrino oscillation, one of the product neutrinos of muon decay must be a muon-type neutrino and the other an electron-type antineutrino (antimuon decay produces the corresponding antiparticles, as detailed below). Because charge must be conserved, one of the products of muon decay is always an electron of the same charge as the muon (a positron if it is a positive muon). Thus all muons decay to at least an electron and two neutrinos. Sometimes, besides these necessary products, additional particles that have no net charge and a spin of zero (e.g., a pair of photons, or an electron-positron pair) are produced.

The dominant muon decay mode (sometimes called the Michel decay after Louis Michel) is the simplest possible: the muon decays to an electron, an electron antineutrino, and a muon neutrino. Antimuons, in mirror fashion, most often decay to the corresponding antiparticles: a positron, an electron neutrino, and a muon antineutrino. In formulaic terms, these two decays are:

μ− → e− + ν̄e + νμ

μ+ → e+ + νe + ν̄μ

The mean lifetime, τ = ħ/Γ, of the (positive) muon is (2.1969811 ± 0.0000022) µs.[2] The equality of the muon and antimuon lifetimes has been established to better than one part in 10⁴.

Prohibited decays

Certain neutrino-less decay modes are kinematically allowed but are, for all practical purposes, forbidden in the Standard Model, even given that neutrinos have mass and oscillate. Examples forbidden by lepton flavour conservation are:

μ− → e− + γ

and

μ− → e− + e+ + e−.

The decay μ− → e− + γ is technically possible, for example by neutrino oscillation of a virtual muon neutrino into an electron neutrino, but such a decay is astronomically unlikely and therefore should be experimentally unobservable: fewer than one in 10⁵⁰ muon decays should produce such a decay.

Observation of such decay modes would constitute clear evidence for theories beyond the Standard Model. Upper limits for the branching fractions of such decay modes were measured in many experiments starting more than 50 years ago. The current upper limit for the μ+ → e+ + γ branching fraction was measured 2009–2013 in the MEG experiment and is 4.2 × 10⁻¹³.[10]

Theoretical decay rate

The muon decay width, which follows from Fermi's golden rule, obeys Sargent's law of fifth-power dependence on mμ:

$$\Gamma = \frac{G_F^2 m_\mu^5}{192\pi^3}\, I\!\left(\frac{m_e^2}{m_\mu^2}\right),$$

where $I(r) = 1 - 8r - 12r^2 \ln r + 8r^3 - r^4$, $G_F$ is the Fermi coupling constant, and $x = 2E_e/(m_\mu c^2)$ is the fraction of the maximum energy transmitted to the electron.
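As a rough numerical illustration of the fifth-power law, the sketch below evaluates the standard tree-level width Γ = G_F² mμ⁵ / (192π³) · I(mₑ²/mμ²) in natural units and converts it to a lifetime with τ = ħ/Γ. The values of G_F, ħ and the electron mass are assumed reference values that do not appear in this article, and radiative corrections are ignored, so only approximate agreement with the measured lifetime should be expected.

```python
import math

# Assumed reference constants (not given in the article), natural units.
G_F = 1.1663787e-5           # Fermi coupling constant in GeV^-2
HBAR_GEV_S = 6.582119569e-25 # reduced Planck constant in GeV*s
M_E = 0.51099895e-3          # electron mass in GeV
# Muon mass from the infobox of this article, converted to GeV.
M_MU = 105.6583745e-3


def phase_space_factor(r: float) -> float:
    """Phase-space correction I(r) for a final-state mass ratio r = m_e^2 / m_mu^2."""
    return 1 - 8 * r - 12 * r ** 2 * math.log(r) + 8 * r ** 3 - r ** 4


def tree_level_width(m_mu: float = M_MU, m_e: float = M_E) -> float:
    """Tree-level muon decay width in GeV (no radiative corrections)."""
    r = (m_e / m_mu) ** 2
    return G_F ** 2 * m_mu ** 5 / (192 * math.pi ** 3) * phase_space_factor(r)


width = tree_level_width()
lifetime = HBAR_GEV_S / width  # tau = hbar / Gamma

print(f"tree-level width    = {width:.4e} GeV")
print(f"tree-level lifetime = {lifetime * 1e6:.3f} microseconds")
```

The result lies within about one percent of the measured 2.197 µs. The fifth-power dependence also means that doubling the mass in `tree_level_width` shortens the lifetime by roughly a factor of 32.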

The decay distributions of the electron in muon decays have been parameterised using the so-called Michel parameters. The values of these four parameters are predicted unambiguously in the Standard Model of particle physics, thus muon decays represent a good test of the space-time structure of the weak interaction. No deviation from the Standard Model predictions has yet been found.

For the decay of the muon, the expected decay distribution for the Standard Model values of Michel parameters is

$$\frac{d^2\Gamma}{dx\,d\cos\theta} \sim x^2\left[(3-2x) + P_\mu \cos\theta\,(1-2x)\right],$$

where $\theta$ is the angle between the muon's polarization vector $\mathbf{P}_\mu$ and the decay-electron momentum vector, and $P_\mu = |\mathbf{P}_\mu|$ is the fraction of muons that are forward-polarized. Integrating this expression over electron energy gives the angular distribution of the daughter electrons:

$$\frac{d\Gamma}{d\cos\theta} \sim 1 - \frac{1}{3} P_\mu \cos\theta.$$
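The integration over electron energy can be checked numerically. The sketch below integrates the Standard Model distribution x²[(3 − 2x) + Pμ cos θ (1 − 2x)] over x from 0 to 1 with a simple midpoint rule and compares it with the closed form ½(1 − ⅓ Pμ cos θ). The function names, the step count and the overall normalization factor ½ are choices made for this illustration.

```python
def double_differential(x: float, cos_theta: float, p_mu: float) -> float:
    """Unnormalized d^2(Gamma)/(dx dcos(theta)) for Standard Model Michel parameters."""
    return x ** 2 * ((3 - 2 * x) + p_mu * cos_theta * (1 - 2 * x))


def integrate_over_energy(cos_theta: float, p_mu: float, steps: int = 10_000) -> float:
    """Midpoint-rule integral of the distribution over x in [0, 1]."""
    h = 1.0 / steps
    total = sum(
        double_differential((i + 0.5) * h, cos_theta, p_mu) for i in range(steps)
    )
    return total * h


def angular_distribution(cos_theta: float, p_mu: float) -> float:
    """Closed form of the energy-integrated distribution: (1/2) * (1 - P cos(theta) / 3)."""
    return 0.5 * (1 - p_mu * cos_theta / 3)


# Compare the numerical integral with the closed form at a few angles.
for cos_theta in (-1.0, 0.0, 0.3, 1.0):
    numeric = integrate_over_energy(cos_theta, p_mu=1.0)
    exact = angular_distribution(cos_theta, p_mu=1.0)
    print(f"cos(theta) = {cos_theta:+.1f}: numeric = {numeric:.6f}, closed form = {exact:.6f}")
```

For fully polarized muons the electron rate is twice as large at cos θ = −1 as at cos θ = +1, which is the asymmetry used to track the muon spin direction.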

The electron energy distribution integrated over the polar angle (valid for x < 1) is

$$\frac{d\Gamma}{dx} \sim 3x^2 - 2x^3.$$

For muons precessing in a uniform magnetic field, the $\cos\theta$ term in the expected decay distribution for the Michel parameters is replaced with a $\cos\omega t$ term, where ω is the Larmor frequency from Larmor precession of the muon in the field, given by:

$$\omega = \frac{egB}{2m},$$

where m is the mass of the muon, e is its charge, g is the muon g-factor and B is the applied field.
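To give a sense of scale for the Larmor frequency ω = egB/(2m), the sketch below evaluates it for a 1 T field. The elementary charge, the muon mass in kilograms and the g-factor are assumed reference values not given in this article, and the field strength is an arbitrary illustrative choice.

```python
import math

# Assumed reference constants (not given in the article), SI units.
E_CHARGE = 1.602176634e-19   # elementary charge in C
M_MU_KG = 1.883531627e-28    # muon mass in kg
G_MU = 2.0023318418          # muon g-factor (magnitude)


def larmor_angular_frequency(b_tesla: float) -> float:
    """Larmor angular frequency omega = e * g * B / (2 * m), in rad/s."""
    return E_CHARGE * G_MU * b_tesla / (2 * M_MU_KG)


# Illustrative field strength; any value can be used here.
omega = larmor_angular_frequency(1.0)
frequency_mhz = omega / (2 * math.pi) / 1e6

print(f"omega = {omega:.4e} rad/s")
print(f"f     = {frequency_mhz:.2f} MHz per tesla")
```

With these inputs the spin precesses at roughly 135.5 MHz per tesla, so even in modest fields a muon completes many precession cycles within its 2.2 µs lifetime.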

A change in the electron distribution computed using the standard, unprecessional, Michel Parameters can be seen displaying a periodicity of π radians. This can be shown to physically correspond to a phase change of π, introduced in the electron distribution as the angular momentum is changed by the action of the charge conjugation operator, which is conserved by the weak interaction.

The observation of parity violation in muon decay can be compared to the concept of violation of parity in weak interactions in general as an extension of the Wu experiment, as well as the change of angular momentum introduced by a phase change of π corresponding to the charge-parity operator being invariant in this interaction. This fact is true for all lepton interactions in the Standard Model.

Muonic atoms

The muon was the first elementary particle discovered that does not appear in ordinary atoms.

Negative muon atoms

Negative muons can, however, form muonic atoms (previously called mu-mesic atoms), by replacing an electron in ordinary atoms. Muonic hydrogen atoms are much smaller than typical hydrogen atoms because the much larger mass of the muon gives it a much more localized ground-state wavefunction than is observed for the electron. In multi-electron atoms, when only one of the electrons is replaced by a muon, the size of the atom continues to be determined by the other electrons, and the atomic size is nearly unchanged. However, in such cases the orbital of the muon continues to be smaller and far closer to the nucleus than the atomic orbitals of the electrons.

Muonic helium is created by substituting a muon for one of the electrons in helium-4. The muon orbits much closer to the nucleus, so muonic helium can be regarded as an isotope of helium whose nucleus consists of two neutrons, two protons and a muon, with a single electron outside. Colloquially, it could be called "helium 4.1", since the mass of the muon is slightly greater than 0.1 amu. Chemically, muonic helium, possessing an unpaired valence electron, can bond with other atoms, and behaves more like a hydrogen atom than an inert helium atom.[11][12][13]
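How much smaller muonic hydrogen is can be estimated from the Bohr model, in which the ground-state radius is inversely proportional to the reduced mass of the orbiting particle and the nucleus. The sketch below is such an estimate; the Bohr radius and the mass ratios are assumed reference values not given in this article, and the simple Bohr picture ignores finite-size and relativistic corrections.

```python
# Assumed reference values (not given in the article).
BOHR_RADIUS_M = 5.29177210903e-11   # Bohr radius for an infinitely heavy nucleus, in m
MU_OVER_E = 206.7682830             # muon mass in units of the electron mass
P_OVER_E = 1836.15267343            # proton mass in units of the electron mass


def reduced_mass(m1: float, m2: float) -> float:
    """Reduced mass of a two-body system, in the same units as the inputs."""
    return m1 * m2 / (m1 + m2)


def ground_state_radius(orbiting_mass: float, nucleus_mass: float = P_OVER_E) -> float:
    """Bohr-model ground-state radius in m; masses are in electron-mass units."""
    return BOHR_RADIUS_M / reduced_mass(orbiting_mass, nucleus_mass)


r_hydrogen = ground_state_radius(1.0)
r_muonic = ground_state_radius(MU_OVER_E)
size_ratio = r_hydrogen / r_muonic

print(f"ordinary hydrogen: {r_hydrogen * 1e12:.2f} pm")
print(f"muonic hydrogen:   {r_muonic * 1e15:.1f} fm")
print(f"size ratio:        {size_ratio:.0f}")
```

The ratio comes out a little below the bare mass ratio of 207 because the proton is only about nine times heavier than the muon, so the reduced mass is noticeably smaller than the muon mass.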

Muonic heavy hydrogen atoms with a negative muon may undergo nuclear fusion in the process of muon-catalyzed fusion, after which the muon may leave the new atom to induce fusion in another hydrogen molecule. This process continues until the negative muon is trapped by a helium atom, which it cannot leave before it decays.

Finally, a possible fate of negative muons bound to conventional atoms is that they are captured through the weak force by protons in nuclei, in a sort of electron-capture-like process. When this happens, the proton becomes a neutron and a muon neutrino is emitted.

Positive muon atoms

A positive muon, when stopped in ordinary matter, cannot be captured by a proton, since the capturing particle would need to be an antiproton. The positive muon is also not attracted to the nucleus of atoms. Instead, it binds a random electron and with this electron forms an exotic atom known as a muonium (Mu) atom. In this atom, the muon acts as the nucleus. The positive muon, in this context, can be considered a pseudo-isotope of hydrogen with one ninth of the mass of the proton. Because the reduced mass of muonium, and hence its Bohr radius, is very close to that of hydrogen, this short-lived "atom" (a muon and an electron) behaves chemically, to a first approximation, like the isotopes of hydrogen (protium, deuterium and tritium).

Use in measurement of the proton charge radius

The experimental technique that is expected to provide the most precise determination of the root-mean-square charge radius of the proton is the measurement of the frequency of photons (precise "color" of light) emitted or absorbed by atomic transitions in muonic hydrogen. This form of hydrogen atom is composed of a negatively charged muon bound to a proton. The muon is particularly well suited for this purpose because its much larger mass results in a much more compact bound state and hence a larger probability for it to be found inside the proton in muonic hydrogen compared to the electron in atomic hydrogen.[14] The Lamb shift in muonic hydrogen was measured by driving the muon from a 2s state up to an excited 2p state using a laser. The frequencies of the photons required to induce two such (slightly different) transitions were reported in 2014 to be 50 and 55 THz which, according to present theories of quantum electrodynamics, yield an appropriately averaged value of 0.84087±0.00039 fm for the charge radius of the proton.[15]

The internationally accepted value of the proton's charge radius is based on a suitable average of results from older measurements of effects caused by the nonzero size of the proton on scattering of electrons by nuclei and the light spectrum (photon energies) from excited atomic hydrogen. The official value updated in 2014 is 0.8751±0.0061 fm (see orders of magnitude for comparison to other sizes).[16] The expected precision of this result is inferior to that from muonic hydrogen by about a factor of fifteen, yet the two values disagree by about 5.6 times the nominal uncertainty in the difference (a 5.6σ discrepancy). A conference of the world experts on this topic led to the decision to exclude the muon result from influencing the official 2014 value, in order to avoid hiding the mysterious discrepancy.[17] This "proton radius puzzle" remained unresolved as of late 2015, and has attracted much attention, in part because of the possibility that both measurements are valid, which would imply the influence of some "new physics".[18]
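The size of the discrepancy can be reproduced from the two quoted radii. The sketch below assumes the two uncertainties are independent and Gaussian, so that the uncertainty of the difference is their sum in quadrature; that treatment is an assumption of this illustration rather than something stated in the sources.

```python
import math

# Proton charge radius values quoted in this section, in fm.
R_MUONIC, SIGMA_MUONIC = 0.84087, 0.00039   # muonic hydrogen Lamb shift
R_OFFICIAL, SIGMA_OFFICIAL = 0.8751, 0.0061 # official 2014 value


def discrepancy_in_sigma(a: float, sigma_a: float, b: float, sigma_b: float) -> float:
    """Difference between two values in units of its combined uncertainty.

    Assumes independent uncertainties that add in quadrature.
    """
    return abs(a - b) / math.hypot(sigma_a, sigma_b)


n_sigma = discrepancy_in_sigma(R_MUONIC, SIGMA_MUONIC, R_OFFICIAL, SIGMA_OFFICIAL)
precision_ratio = SIGMA_OFFICIAL / SIGMA_MUONIC

print(f"discrepancy:     {n_sigma:.1f} sigma")
print(f"precision ratio: {precision_ratio:.1f}")
```

Because the muonic uncertainty is so much smaller, the combined uncertainty is almost entirely that of the official value, and the ratio of the two uncertainties reproduces the "factor of fifteen" mentioned above.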

Anomalous magnetic dipole moment

The anomalous magnetic dipole moment is the difference between the experimentally observed value of the magnetic dipole moment and the theoretical value predicted by the Dirac equation. The measurement and prediction of this value is very important in the precision tests of QED (quantum electrodynamics). The E821 experiment[19] at Brookhaven National Laboratory (BNL) studied the precession of muons and antimuons in a constant external magnetic field as they circulated in a confining storage ring. E821 reported the following average value[20] in 2006:

The prediction for the value of the muon anomalous magnetic moment includes three parts:

- $a_\mu^{\mathrm{SM}} = a_\mu^{\mathrm{QED}} + a_\mu^{\mathrm{EW}} + a_\mu^{\mathrm{had}}$.

Muon radiography and tomography

Since muons are much more deeply penetrating than X-rays or gamma rays, muon imaging can be used with much thicker material or, with cosmic ray sources, larger objects. One example is commercial muon tomography used to image entire cargo containers to detect shielded nuclear material, as well as explosives or other contraband.[23]

The technique of muon transmission radiography based on cosmic ray sources was first used in the 1950s to measure the depth of the overburden of a tunnel in Australia[24] and in the 1960s to search for possible hidden chambers in the Pyramid of Chephren in Giza.[25] In 2017, the discovery of a large void (at least 30 m long) in the Great Pyramid of Giza by observation of cosmic-ray muons was reported.[26]

In 2003, the scientists at Los Alamos National Laboratory developed a new imaging technique: muon scattering tomography. With muon scattering tomography, both incoming and outgoing trajectories for each particle are reconstructed, such as with sealed aluminum drift tubes.[27] Since the development of this technique, several companies have started to use it.

In August 2014, Decision Sciences International Corporation announced it had been awarded a contract by Toshiba for use of its muon tracking detectors in reclaiming the Fukushima nuclear complex.[28] The Fukushima Daiichi Tracker (FDT) was proposed to make a few months of muon measurements to show the distribution of the reactor cores.

In December 2014, Tepco reported that they would be using two different muon imaging techniques at Fukushima, "Muon Scanning Method" on Unit 1 (the most badly damaged, where the fuel may have left the reactor vessel) and "Muon Scattering Method" on Unit 2.[29]

The International Research Institute for Nuclear Decommissioning (IRID) in Japan and the High Energy Accelerator Research Organization (KEK) call the method they developed for Unit 1 the muon permeation method; 1,200 optical fibers for wavelength conversion light up when muons come into contact with them.[30] After a month of data collection, the measurements are hoped to reveal the location and amount of fuel debris still inside the reactor. The measurements began in February 2015.

![{\frac {d^{2}\Gamma }{dx\,d\cos \theta }}\sim x^{2}[(3-2x)+P_{\mu }\cos \theta (1-2x)]](https://wikimedia.org/api/rest_v1/media/math/render/svg/719fb24c59880673db6aa8455befe3e791e4fd02)