Telemedicine is the use of telecommunication and information technology to provide clinical health care from a distance. It has been used to overcome distance barriers and to improve access to medical services that would often not be consistently available in distant rural communities. It is also used to save lives in critical care and emergency situations.

Although there were distant precursors to telemedicine, it is essentially a product of 20th century telecommunication and information technologies. These technologies permit communications between patient and medical staff with both convenience and fidelity, as well as the transmission of medical, imaging and health informatics data from one site to another.

Early forms of telemedicine achieved with telephone and radio have been supplemented with videotelephony, advanced diagnostic methods supported by distributed client/server applications, and additionally with telemedical devices to support in-home care.

Disambiguation

eHealth is another related term, used particularly in the U.K. and Europe, as an umbrella term that includes telehealth, electronic medical records, and other components of health information technology.

Benefits and drawbacks

Telemedicine can be beneficial to patients in isolated communities and remote regions, who can receive care from doctors or specialists far away without the patient having to travel to visit them. Recent developments in mobile collaboration technology can allow healthcare professionals in multiple locations to share information and discuss patient issues as if they were in the same place. Remote patient monitoring through mobile technology can reduce the need for outpatient visits and enable remote prescription verification and drug administration oversight, potentially significantly reducing the overall cost of medical care. Telemedicine can also facilitate medical education by allowing workers to observe experts in their fields and share best practices more easily.

Telemedicine can also eliminate the possible transmission of infectious diseases or parasites between patients and medical staff. This is particularly an issue where MRSA is a concern. Additionally, some patients who feel uncomfortable in a doctor's office may do better remotely. For example, white coat syndrome may be avoided. Patients who are home-bound and would otherwise require an ambulance to move them to a clinic are also a consideration.

The downsides of telemedicine include the cost of telecommunication and data management equipment and of technical training for medical personnel who will employ it. Virtual medical treatment also entails potentially decreased human interaction between medical professionals and patients, an increased risk of error when medical services are delivered in the absence of a registered professional, and an increased risk that protected health information may be compromised through electronic storage and transmission. There is also a concern that telemedicine may actually decrease time efficiency due to the difficulties of assessing and treating patients through virtual interactions; for example, it has been estimated that a teledermatology consultation can take up to thirty minutes, whereas fifteen minutes is typical for a traditional consultation. Additionally, potentially poor quality of transmitted records, such as images or patient progress reports, and decreased access to relevant clinical information are quality assurance risks that can compromise the quality and continuity of patient care for the reporting doctor. Other obstacles to the implementation of telemedicine include unclear legal regulation for some telemedical practices and difficulty claiming reimbursement from insurers or government programs in some fields.

Another disadvantage of telemedicine is the inability to start treatment immediately. For example, a patient suffering from a bacterial infection might be given an antibiotic hypodermic injection in the clinic, and observed for any reaction, before that antibiotic is prescribed in pill form.

History

In the early 1900s, people living in remote areas of Australia used two-way radios, powered by a dynamo driven by a set of bicycle pedals, to communicate with the Royal Flying Doctor Service of Australia.

In 1967, one of the first telemedicine clinics was founded by Kenneth Bird at Massachusetts General Hospital. The clinic addressed the fundamental problem of delivering occupational and emergency health services to employees and travellers at Boston's Logan International Airport, located three congested miles from the hospital. Over 1,000 patients are documented as having received remote treatment from doctors at MGH using the clinic's two-way audiovisual microwave circuit. The timing of Bird's clinic more or less coincided with NASA's foray into telemedicine through the use of physiologic monitors for astronauts. Other pioneering programs in telemedicine were designed to deliver healthcare services to people in rural settings. The first interactive telemedicine system, operating over standard telephone lines, designed to remotely diagnose and treat patients requiring cardiac resuscitation (defibrillation) was developed and launched by an American company, MedPhone Corporation, in 1989. A year later, under the leadership of its President/CEO S Eric Wachtel, MedPhone introduced a mobile cellular version, the MDPhone. Twelve hospitals in the U.S. served as receiving and treatment centers.

Types

Categories

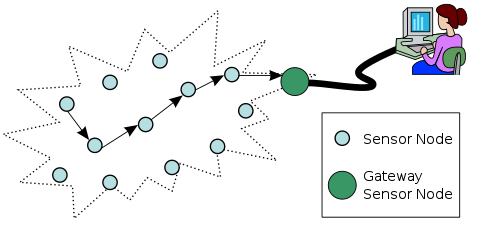

Telemedicine can be broken into three main categories: store-and-forward, remote patient monitoring and (real-time) interactive services.

Store and forward

Store-and-forward telemedicine involves acquiring medical data (such as medical images and biosignals) and then transmitting this data to a doctor or medical specialist at a convenient time for assessment offline. It does not require the presence of both parties at the same time. Dermatology (cf. teledermatology), radiology, and pathology are common specialties that are conducive to asynchronous telemedicine. A properly structured medical record, preferably in electronic form, should be a component of this transfer. A key difference between traditional in-person patient meetings and telemedicine encounters is the omission of an actual physical examination and history. The 'store-and-forward' process requires the clinician to rely on a history report and audio/video information in lieu of a physical examination.

Remote monitoring

Telehealth Blood Pressure Monitor

Remote monitoring, also known as self-monitoring or testing, enables medical professionals to monitor a patient remotely using various technological devices. This method is primarily used for managing chronic diseases or specific conditions, such as heart disease, diabetes mellitus, or asthma. These services can provide comparable health outcomes to traditional in-person patient encounters, supply greater satisfaction to patients, and may be cost-effective. Examples include home-based nocturnal dialysis and improved joint management.
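As a rough illustration of how remote monitoring data might be screened before a clinician looks at it, the following minimal Python sketch flags home blood pressure readings that exceed a limit. The `Reading` type, the function names and the numeric limits are all hypothetical choices made for this example; they are not taken from any telehealth product or clinical guideline.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One home blood pressure measurement (hypothetical record layout)."""
    patient_id: str
    systolic: int   # mmHg
    diastolic: int  # mmHg


# Hypothetical alert limits chosen only for illustration; not clinical guidance.
SYSTOLIC_LIMIT = 160
DIASTOLIC_LIMIT = 100


def needs_review(reading: Reading) -> bool:
    """Return True when either value reaches its illustrative limit."""
    return (reading.systolic >= SYSTOLIC_LIMIT
            or reading.diastolic >= DIASTOLIC_LIMIT)


def triage(readings: list[Reading]) -> list[Reading]:
    """Keep only the readings a clinician should look at first."""
    return [r for r in readings if needs_review(r)]


if __name__ == "__main__":
    day = [Reading("a", 120, 80), Reading("b", 170, 95)]
    for r in triage(day):
        print(r.patient_id, r.systolic, r.diastolic)
```

A real service would also need secure transmission, device calibration and clinician-defined limits per patient, none of which this sketch attempts.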

Real-time interactive

Electronic consultations are possible through interactive telemedicine services which provide real-time interactions between patient and provider. Videoconferencing has been used in a wide range of clinical disciplines and settings for various purposes including management, diagnosis, counselling and monitoring of patients.

Emergency

U.S. Navy medical staff being trained in the use of handheld telemedical devices (2006).

Common daily emergency telemedicine is performed by SAMU Regulator Physicians in France, Spain, Chile and Brazil. Aircraft and maritime emergencies are also handled by SAMU centres in Paris, Lisbon and Toulouse.

A recent study identified three major barriers to adoption of telemedicine in emergency and critical care units. They include:

- Regulatory challenges related to the difficulty and cost of obtaining licensure across multiple states, malpractice protection and privileges at multiple facilities.

- Lack of acceptance and reimbursement by government payers and some commercial insurance carriers creating a major financial barrier, which places the investment burden squarely upon the hospital or healthcare system.

- Cultural barriers occurring from the lack of desire, or unwillingness, of some physicians to adapt clinical paradigms for telemedicine applications.

Telemedicine system. Federal Center of Neurosurgery in Tyumen, 2013

Telenursing

Telenursing refers to the use of telecommunications and information technology to provide nursing services in health care whenever a large physical distance exists between patient and nurse, or between any number of nurses. As a field it is part of telehealth, and has many points of contact with other medical and non-medical applications, such as telediagnosis, teleconsultation and telemonitoring.

Telenursing is achieving significant growth rates in many countries due to several factors: the preoccupation with reducing the costs of health care, an increase in the aging and chronically ill population, and the increase in coverage of health care to distant, rural, small or sparsely populated regions. Among its benefits, telenursing may help solve increasing shortages of nurses, reduce distances and save travel time, and keep patients out of hospital. A greater degree of job satisfaction has been registered among telenurses.

Baby Eve with Georgia for the Breastfeeding Support Project

In Australia, during January 2014, Melbourne tech startup Small World Social collaborated with the Australian Breastfeeding Association to create the first hands-free breastfeeding Google Glass application for new mothers. The application, named Google Glass Breastfeeding app trial, allows mothers to nurse their baby while viewing instructions about common breastfeeding issues (latching on, posture etc.) or call a lactation consultant via a secure Google Hangout, who can view the issue through the mother's Google Glass camera. The trial was successfully concluded in Melbourne in April 2014, and 100% of participants were breastfeeding confidently.

Telepharmacy

Pharmacy personnel deliver medical prescriptions electronically; remote delivery of pharmaceutical care is an example of telemedicine.

Telepharmacy is the delivery of pharmaceutical care via telecommunications to patients in locations where they may not have direct contact with a pharmacist. It is an instance of the wider phenomenon of telemedicine, as implemented in the field of pharmacy. Telepharmacy services include drug therapy monitoring, patient counseling, prior authorization and refill authorization for prescription drugs, and monitoring of formulary compliance with the aid of teleconferencing or videoconferencing. Remote dispensing of medications by automated packaging and labeling systems can also be thought of as an instance of telepharmacy. Telepharmacy services can be delivered at retail pharmacy sites or through hospitals, nursing homes, or other medical care facilities.

The term can also refer to the use of videoconferencing in pharmacy for other purposes, such as providing education, training, and management services to pharmacists and pharmacy staff remotely.

Teleneuropsychology

Teleneuropsychology (Cullum et al., 2014) is the use of telehealth/videoconference technology for the remote administration of neuropsychological tests. Neuropsychological tests are used to evaluate the cognitive status of individuals with known or suspected brain disorders and provide a profile of cognitive strengths and weaknesses. Through a series of studies, there is growing support in the literature showing that remote videoconference-based administration of many standard neuropsychological tests results in test findings that are similar to traditional in-person evaluations, thereby establishing the basis for the reliability and validity of teleneuropsychological assessment.

Telerehabilitation

Telerehabilitation (or e-rehabilitation) is the delivery of rehabilitation services over telecommunication networks and the Internet. Most types of services fall into two categories: clinical assessment (the patient’s functional abilities in his or her environment), and clinical therapy. Some fields of rehabilitation practice that have explored telerehabilitation are: neuropsychology, speech-language pathology, audiology, occupational therapy, and physical therapy. Telerehabilitation can deliver therapy to people who cannot travel to a clinic because of a disability or because of travel time. Telerehabilitation also allows experts in rehabilitation to engage in a clinical consultation at a distance.

Most telerehabilitation is highly visual. As of 2014, the most commonly used media are webcams, videoconferencing, phone lines, videophones and webpages containing rich Internet applications. The visual nature of telerehabilitation technology limits the types of rehabilitation services that can be provided. It is most widely used for neuropsychological rehabilitation; fitting of rehabilitation equipment such as wheelchairs, braces or artificial limbs; and in speech-language pathology. Rich Internet applications for neuropsychological rehabilitation (also known as cognitive rehabilitation) of cognitive impairment (from many etiologies) were first introduced in 2001. This endeavor has expanded as a teletherapy application for cognitive skills enhancement programs for school children. Tele-audiology (hearing assessments) is a growing application. Currently, telerehabilitation in the practice of occupational therapy and physical therapy is limited, perhaps because these two disciplines are more "hands on".

Two important areas of telerehabilitation research are (1) demonstrating equivalence of assessment and therapy to in-person assessment and therapy, and (2) building new data collection systems to digitize information that a therapist can use in practice. Research in telehaptics (the sense of touch) and virtual reality may broaden the scope of telerehabilitation practice in the future.

In the United States, the National Institute on Disability and Rehabilitation Research (NIDRR) supports research and the development of telerehabilitation. NIDRR's grantees include the "Rehabilitation Engineering and Research Center" (RERC) at the University of Pittsburgh, the Rehabilitation Institute of Chicago, the State University of New York at Buffalo, and the National Rehabilitation Hospital in Washington DC. Other federal funders of research are the Veterans Health Administration, the Health Services Research Administration in the US Department of Health and Human Services, and the Department of Defense. Outside the United States, research is also conducted in Australia and Europe.

Only a few health insurers in the United States, and about half of Medicaid programs, reimburse for telerehabilitation services. If the research shows that teleassessments and teletherapy are equivalent to clinical encounters, it is more likely that insurers and Medicare will cover telerehabilitation services.

Teletrauma care

Telemedicine can be utilized to improve the efficiency and effectiveness of the delivery of care in a trauma environment. Examples include:

Telemedicine for trauma triage: using telemedicine, trauma specialists can interact with personnel on the scene of a mass casualty or disaster situation, via the internet using mobile devices, to determine the severity of injuries. They can provide clinical assessments and determine whether those injured must be evacuated for necessary care. Remote trauma specialists can provide the same quality of clinical assessment and plan of care as a trauma specialist located physically with the patient.

Telemedicine for intensive care unit (ICU) rounds: Telemedicine is also being used in some trauma ICUs to reduce the spread of infections. Rounds are usually conducted at hospitals across the country by a team of approximately ten or more people to include attending physicians, fellows, residents and other clinicians. This group usually moves from bed to bed in a unit discussing each patient. This aids in the transition of care for patients from the night shift to the morning shift, but also serves as an educational experience for new residents to the team. A new approach features the team conducting rounds from a conference room using a video-conferencing system. The trauma attending, residents, fellows, nurses, nurse practitioners, and pharmacists are able to watch a live video stream from the patient's bedside. They can see the vital signs on the monitor, view the settings on the respiratory ventilator, and/or view the patient's wounds. Video-conferencing allows the remote viewers two-way communication with clinicians at the bedside.

Telemedicine for trauma education: some trauma centers are delivering trauma education lectures to hospitals and health care providers worldwide using video conferencing technology. Each lecture provides fundamental principles, firsthand knowledge and evidence-based methods for critical analysis of established clinical practice standards, and comparisons to newer advanced alternatives. The various sites collaborate and share their perspective based on location, available staff, and available resources.

Telemedicine in the trauma operating room: trauma surgeons are able to observe and consult on cases from a remote location using video conferencing. This capability allows the attending to view the residents in real time. The remote surgeon has the capability to control the camera (pan, tilt and zoom) to get the best angle of the procedure while at the same time providing expertise in order to provide the best possible care to the patient.

Specialist care delivery

Telemedicine can facilitate specialty care delivered by primary care physicians, according to a controlled study of the treatment of hepatitis C. Various specialties are contributing to telemedicine, in varying degrees.

Telecardiology

Electrocardiograms (ECGs) can be transmitted by telephone and wireless links. Willem Einthoven, the inventor of the ECG, tested the transmission of ECGs over telephone lines because the hospital did not allow him to move patients to his laboratory for testing of his new device. In 1906 Einthoven came up with a way to transmit the data from the hospital directly to his lab.

Teletransmission of ECG using methods indigenous to Asia

One of the oldest known telecardiology systems for teletransmission of ECGs was established in Gwalior, India in 1975 at GR Medical College by Ajai Shanker, S. Makhija and P.K. Mantri, using an indigenous technique for the first time in India.

This system enabled wireless transmission of ECGs from the moving ICU van or the patient's home to the central station in the ICU of the Department of Medicine. Wireless transmission used frequency modulation, which eliminated noise. Transmission was also done through telephone lines. The ECG output was connected to the telephone input using a modulator which converted the ECG into high-frequency sound. At the other end a demodulator reconverted the sound into an ECG with good gain accuracy. The ECG was converted to sound waves with a frequency varying from 500 Hz to 2500 Hz, with 1500 Hz at baseline.
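The frequency mapping described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 500 Hz, 1500 Hz and 2500 Hz figures come from the text, but the linear mapping, the ±1 mV full-scale input range, the 8000 Hz audio sample rate and all function names are hypothetical choices and say nothing about how the original 1975 hardware was built.

```python
import math

# Frequencies reported in the text.
MIN_HZ = 500.0
BASELINE_HZ = 1500.0
MAX_HZ = 2500.0

# Assumption for this sketch: +/-1 mV of ECG signal spans the whole band.
FULL_SCALE_MV = 1.0


def ecg_to_frequency(mv: float) -> float:
    """Map an ECG voltage (mV) linearly to a tone frequency, clamped to the band."""
    deviation = (MAX_HZ - BASELINE_HZ) * (mv / FULL_SCALE_MV)
    return max(MIN_HZ, min(MAX_HZ, BASELINE_HZ + deviation))


def frequency_to_ecg(hz: float) -> float:
    """Inverse of the linear mapping (the ideal job of a demodulator)."""
    return (hz - BASELINE_HZ) / (MAX_HZ - BASELINE_HZ) * FULL_SCALE_MV


def modulate(samples_mv: list[float], sample_rate: int = 8000) -> list[float]:
    """Produce one audio sample per ECG sample by accumulating phase,
    so the tone stays continuous as the frequency changes."""
    phase = 0.0
    audio = []
    for mv in samples_mv:
        phase += 2.0 * math.pi * ecg_to_frequency(mv) / sample_rate
        audio.append(math.sin(phase))
    return audio


if __name__ == "__main__":
    for mv in (-1.0, 0.0, 0.5, 1.0):
        print(mv, "mV ->", ecg_to_frequency(mv), "Hz")
```

Recovering the ECG from real audio would require estimating the instantaneous frequency of the received tone; `frequency_to_ecg` only shows the final mapping step.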

This system was also used to monitor patients with pacemakers in remote areas. The central control unit at the ICU was able to correctly interpret arrhythmia. This technique helped medical aid reach remote areas.

In addition, electronic stethoscopes can be used as recording devices, which is helpful for purposes of telecardiology. There are many examples of successful telecardiology services worldwide.

In Pakistan, three pilot projects in telemedicine were initiated by the Ministry of IT & Telecom, Government of Pakistan (MoIT) through the Electronic Government Directorate in collaboration with Oratier Technologies (a pioneer company within Pakistan dealing with healthcare and HMIS) and PakDataCom (a bandwidth provider). Three hub stations were linked via the Pak Sat-I communications satellite, and four districts were linked with another hub. A 312 Kb link was also established with remote sites and 1 Mbit/s bandwidth was provided at each hub. The three hubs were established at the Mayo Hospital (the largest hospital in Asia), JPMC Karachi and Holy Family Rawalpindi. The 12 remote sites were connected, and an average of 1,500 patients were treated per month per hub. The project was still running smoothly after two years.

Telepsychiatry

Telepsychiatry, another aspect of telemedicine, also utilizes videoconferencing for patients residing in underserved areas to access psychiatric services. It offers a wide range of services to patients and providers, such as consultation between psychiatrists, educational clinical programs, diagnosis and assessment, medication therapy management, and routine follow-up meetings. Most telepsychiatry is undertaken in real time (synchronous), although in recent years research at UC Davis has developed and validated the process of asynchronous telepsychiatry. Recent reviews of the literature by Hilty et al. in 2013, and by Yellowlees et al. in 2015, confirmed that telepsychiatry is as effective as in-person psychiatric consultations for diagnostic assessment, is at least as good for the treatment of disorders such as depression and post-traumatic stress disorder, and may be better than in-person treatment in some groups of patients, notably children, veterans and individuals with agoraphobia.

As of 2011, the following are some of the model programs and projects which are deploying telepsychiatry in rural areas in the United States:

- University of Colorado Health Sciences Center (UCHSC) supports two programs for American Indian and Alaskan Native populations:

  - a. The Center for Native American Telehealth and Tele-education (CNATT) and

  - b. Telemental Health Treatment for American Indian Veterans with Post-traumatic Stress Disorder (PTSD)

- Military Psychiatry, Walter Reed Army Medical Center.

- In 2009, the South Carolina Department of Mental Health established a partnership with the University of South Carolina School of Medicine and the South Carolina Hospital Association to form a statewide telepsychiatry program that provides access to psychiatrists 16 hours a day, 7 days a week, to treat patients with mental health issues who present at rural emergency departments in the network.

- Between 2007 and 2012, the University of Virginia Health System hosted a videoconferencing project that allowed child psychiatry fellows to conduct approximately 12,000 sessions with children and adolescents living in rural parts of the State.

Links for several sites related to telemedicine, telepsychiatry policy, guidelines, and networking are available at the website for the American Psychiatric Association.

There has also been a recent trend towards Video CBT sites with the recent endorsement and support of CBT by the National Health Service (NHS) in the United Kingdom.

In April 2012, a Manchester-based Video CBT pilot project called InstantCBT was launched to provide live video therapy sessions for those with depression, anxiety, and stress-related conditions. At launch the site supported a variety of video platforms (including Skype, GChat, Yahoo, MSN as well as bespoke) and was aimed at lowering the waiting times for mental health patients. It is a commercial, for-profit business.

In the United States, the American Telemedicine Association and the Center of Telehealth and eHealth are the most respectable places to go for information about telemedicine.

The Health Insurance Portability and Accountability Act (HIPAA) is a United States federal law that applies to all modes of electronic information exchange, such as video-conferencing mental health services. In the United States, Skype, Gchat, Yahoo, and MSN are not permitted to conduct video-conferencing services unless these companies sign a Business Associate Agreement stating that their employees are HIPAA trained. For this reason, most companies provide their own specialized videotelephony services. Violating HIPAA in the United States can result in penalties of hundreds of thousands of dollars.

The momentum of telemental health and telepsychiatry is growing. In June 2012 the U.S. Veterans Administration announced expansion of the successful telemental health pilot. Their target was for 200,000 cases in 2012.

A growing number of HIPAA compliant technologies are now available. There is an independent comparison site that provides a criteria-based comparison of telemental health technologies.

The SATHI Telemental Health Support project is another example of successful telemental health support. See also SCARF India.

Teleradiology

A CT exam displayed through teleradiology

Teleradiology is the ability to send radiographic images (x-rays, CT, MR, PET/CT, SPECT/CT, MG, US and others) from one location to another. For this process to be implemented, three essential components are required: an image sending station, a transmission network, and a receiving image review station. The most typical implementation is two computers connected via the Internet. The computer at the receiving end will need to have a high-quality display screen that has been tested and cleared for clinical purposes. Sometimes the receiving computer will have a printer so that images can be printed for convenience.

The teleradiology process begins at the image sending station. The radiographic image and a modem or other connection are required for this first step. The image is scanned and then sent via the network connection to the receiving computer.
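The three-component arrangement described above (sending station, transmission network, receiving review station) can be modelled in a short Python sketch. Everything here is hypothetical: the `ImagePacket` type, the function names and especially the SHA-256 integrity check are assumptions added for illustration, not features the text attributes to any teleradiology system.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class ImagePacket:
    """A scanned image plus a digest computed by the sender (hypothetical layout)."""
    study_id: str
    pixels: bytes
    sha256: str


def send_station(study_id: str, pixels: bytes) -> ImagePacket:
    """Sending station: package the scanned image with its digest."""
    return ImagePacket(study_id, pixels, hashlib.sha256(pixels).hexdigest())


def network(packet: ImagePacket) -> ImagePacket:
    """Stand-in for the transmission network: copies the packet unchanged."""
    return ImagePacket(packet.study_id, bytes(packet.pixels), packet.sha256)


def review_station(packet: ImagePacket) -> bool:
    """Receiving station: accept the image only if the digest still matches."""
    return hashlib.sha256(packet.pixels).hexdigest() == packet.sha256


if __name__ == "__main__":
    received = network(send_station("study-1", b"\x00\x01\x02"))
    print("image intact:", review_station(received))
```

In practice, medical images are usually exchanged using established imaging standards and secured connections rather than an ad hoc format like this one.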

Today's high-speed broadband Internet enables the use of new technologies for teleradiology: the image reviewer can now access distant servers in order to view an exam. Reviewers therefore do not need dedicated workstations to view the images; a standard personal computer (PC) and digital subscriber line (DSL) connection is enough to reach a central server. No particular software is necessary on the PC, and the images can be reached from anywhere in the world.

Teleradiology is the most popular use for telemedicine and accounts for at least 50% of all telemedicine usage.

Telepathology

Telepathology is the practice of pathology at a distance. It uses telecommunications technology to facilitate the transfer of image-rich pathology data between distant locations for the purposes of diagnosis, education, and research. Performance of telepathology requires that a pathologist selects the video images for analysis and the rendering of diagnoses. The use of "television microscopy", the forerunner of telepathology, did not require that a pathologist have physical or virtual "hands-on" involvement in the selection of microscopic fields-of-view for analysis and diagnosis.

A pathologist, Ronald S. Weinstein, M.D., coined the term "telepathology" in 1986. In an editorial in a medical journal, Weinstein outlined the actions that would be needed to create remote pathology diagnostic services. He and his collaborators published the first scientific paper on robotic telepathology. Weinstein was also granted the first U.S. patents for robotic telepathology systems and telepathology diagnostic networks. Weinstein is known to many as the "father of telepathology". In Norway, Eide and Nordrum implemented the first sustainable clinical telepathology service in 1989. This is still in operation, decades later. A number of clinical telepathology services have benefited many thousands of patients in North America, Europe, and Asia.

Telepathology has been successfully used for many applications, including rendering histopathology tissue diagnoses at a distance, education, and research. Although digital pathology imaging, including virtual microscopy, is the mode of choice for telepathology services in developed countries, analog telepathology imaging is still used for patient services in some developing countries.

Teledermatology

Teledermatology allows dermatology consultations over a distance using audio, visual and data communication, and has been found to improve efficiency. Applications comprise health care management such as diagnoses, consultation and treatment as well as (continuing medical) education. The dermatologists Perednia and Brown were the first to coin the term "teledermatology" in 1995. In a scientific publication, they described the value of a teledermatologic service in a rural area underserved by dermatologists.

Teledentistry

Teledentistry is the use of information technology and telecommunications for dental care, consultation, education, and public awareness in the same manner as telehealth and telemedicine.

Teleaudiology

Tele-audiology is the utilization of telehealth to provide audiological services and may include the full scope of audiological practice. This term was first used by Dr Gregg Givens in 1999 in reference to a system being developed at East Carolina University in North Carolina, USA.

Teleophthalmology

Teleophthalmology is a branch of telemedicine that delivers eye care through digital medical equipment and telecommunications technology. Today, applications of teleophthalmology encompass access to eye specialists for patients in remote areas; ophthalmic disease screening, diagnosis and monitoring; and distance learning. Teleophthalmology may help reduce disparities by providing remote, low-cost screening tests such as diabetic retinopathy screening to low-income and uninsured patients. In Mizoram, India, a hilly area with poor roads, tele-ophthalmology provided care to over 10,000 patients between 2011 and 2015. These patients were examined locally by ophthalmic assistants, and surgery was done by appointment after eye surgeons at a hospital 6–12 hours away had viewed the patient images online. Instead of an average of five trips for, say, a cataract procedure, only one was required, for the surgery itself, as even post-operative care such as stitch removal and fitting of glasses was done locally. This produced large savings in travel costs.

Licensure

U.S. licensing and regulatory issues

Restrictive licensure laws in the United States require a practitioner to obtain a full license to deliver telemedicine care across state lines. Typically, states with restrictive licensure laws also have several exceptions (varying from state to state) that may release an out-of-state practitioner from the additional burden of obtaining such a license. A number of states require practitioners who seek compensation to frequently deliver interstate care to acquire a full license.

If a practitioner serves several states, obtaining this license in each state could be an expensive and time-consuming proposition. Even if the practitioner never practices medicine face-to-face with a patient in another state, they still must meet a variety of other individual state requirements, including paying substantial licensure fees, passing additional oral and written examinations, and traveling for interviews.

In 2008, the U.S. passed the Ryan Haight Act, which requires a face-to-face or valid telemedicine consultation before a patient can receive a prescription.

State medical licensing boards have sometimes opposed telemedicine; for example, in 2012 electronic consultations were illegal in Idaho, and an Idaho-licensed general practitioner was punished by the board for prescribing an antibiotic, triggering reviews of her licensure and board certifications across the country. Subsequently, in 2015 the state legislature legalized electronic consultations.

In 2015, Teladoc filed suit against the Texas Medical Board over a rule that required an initial in-person consultation; the judge refused to dismiss the case, noting that antitrust laws apply to state medical boards.

Companies

In the United States, the major companies offering primary care for non-acute illnesses include Teladoc, American Well, and PlushCare. Companies such as Grand Rounds offer remote access to specialty care. Additionally, telemedicine companies are collaborating with health insurers and other telemedicine providers to expand market share and patient access to telemedicine consultations. For example, in 2015 UnitedHealthcare announced that it would cover a range of video visits from Doctor On Demand, American Well’s AmWell, and its own Optum’s NowClinic, which is a white-labeled American Well offering. On November 30, 2017, PlushCare launched Pre-Exposure Prophylaxis (PrEP) therapy for the prevention of HIV in some U.S. states. In this PrEP initiative, PlushCare does not require an initial check-up and provides consistent online doctor visits, regular local laboratory testing, and prescriptions filled at partner pharmacies.

Advanced and experimental services

Telesurgery

Remote surgery (also known as telesurgery) is the ability of a doctor to perform surgery on a patient even though they are not physically in the same location. It is a form of telepresence. Remote surgery combines elements of robotics, cutting-edge communication technology such as high-speed data connections, haptics, and elements of management information systems. While the field of robotic surgery is fairly well established, most of these robots are controlled by surgeons at the location of the surgery. Remote surgery is essentially advanced telecommuting for surgeons, where the physical distance between the surgeon and the patient is immaterial. It promises to make the expertise of specialized surgeons available to patients worldwide, without the need for patients to travel beyond their local hospital.

Remote surgery or telesurgery is the performance of surgical procedures where the surgeon is not physically in the same location as the patient, using a robotic teleoperator system controlled by the surgeon. The teleoperator system may give tactile feedback to the surgeon. Remote surgery combines elements of robotics and high-speed data connections. A critical limiting factor is the speed, latency and reliability of the communication system between the surgeon and the patient, though trans-Atlantic surgeries have been demonstrated.
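
To illustrate why latency is a limiting factor, the following minimal Python sketch estimates a lower bound on round-trip signal delay over an optical-fibre link. It considers propagation delay only; the velocity factor of about two-thirds of the speed of light, the function name round_trip_delay_ms, and the example distances are all assumptions chosen for illustration. Real links add routing, encoding and processing delays on top of this figure.

```python
# Rough lower bound on round-trip propagation delay over optical fibre.
# All figures are illustrative assumptions, not measurements of any real system.

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s
FIBRE_VELOCITY_FACTOR = 2 / 3      # assumed: light in glass travels at roughly 2/3 c


def round_trip_delay_ms(distance_km: float) -> float:
    """Return the minimum round-trip propagation delay in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBRE_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Hypothetical surgeon-to-patient cable distances.
    for km in (100, 1_000, 6_000):
        print(f"{km:>5} km: at least {round_trip_delay_ms(km):.1f} ms round trip")
```

Under these assumptions, a 6,000 km link cannot deliver a command and return feedback in less than about 60 ms, however fast the equipment at each end.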

Enabling technologies

Videotelephony

Videotelephony comprises the technologies for the reception and transmission of audio-video signals by users at different locations, for communication between people in real time. At the dawn of the technology, videotelephony also included image phones, which exchanged still images between units every few seconds over conventional POTS-type telephone lines, essentially the same as slow-scan TV systems.

Currently, videotelephony is particularly useful to the deaf and speech-impaired, who can use it with sign language and also with a video relay service, as well as to those with mobility issues or those who are located in distant places and are in need of telemedical or tele-educational services.

Developing countries

For developing countries, telemedicine and eHealth can be the only means of healthcare provision in remote areas. For example, the difficult financial situation in many African states and the lack of trained health professionals mean that the majority of people in sub-Saharan Africa are badly disadvantaged in medical care, and in remote areas with low population density, direct healthcare provision is often very poor. However, provision of telemedicine and eHealth from urban centres or from other countries is hampered by the lack of communications infrastructure, with no landline phone or broadband internet connection, little or no mobile connectivity, and often not even a reliable electricity supply.

The Satellite African eHEalth vaLidation (SAHEL) demonstration project has shown how satellite broadband technology can be used to establish telemedicine in such areas. SAHEL was started in 2010 in Kenya and Senegal, providing self-contained, solar-powered internet terminals to rural villages for use by community nurses in collaborating with distant health centres for training, diagnosis and advice on local health issues.

In 2014, the government of Luxembourg, along with satellite operator SES and the NGOs Archemed, Fondation Follereau, Friendship Luxembourg, German Doctors and Médecins Sans Frontières, established SATMED, a multilayer eHealth platform to improve public health in remote areas of emerging and developing countries, using the Emergency.lu disaster relief satellite platform and the Astra 2G TV satellite. SATMED was first deployed in response to a 2014 report by German Doctors that poor communications in Sierra Leone were hampering the fight against Ebola, and SATMED equipment arrived at the Serabu clinic in Sierra Leone in December 2014. In June 2015, SATMED was deployed at Maternité Hospital in Ahozonnoude, Benin, to provide remote consultation and monitoring. It is the only effective communication link between Ahozonnoude, the capital, and a third hospital in Allada, since land routes are often inaccessible due to flooding during the rainy season.