Fuel consumption monitor from a 2006 Honda Airwave. The displayed fuel economy is 18.1 km/L (5.5 L/100 km; 43 mpg‑US).

A 1916 experiment in creating a fuel-saving automobile in the United States. The vehicle weighed only 135 pounds (61.2 kg) and was an adaptation of a small gasoline engine originally designed to power a bicycle.

The fuel economy of an automobile relates the distance traveled by a vehicle to the amount of fuel consumed. Consumption can be expressed in terms of the volume of fuel needed to travel a distance, or the distance traveled per unit volume of fuel consumed. Since fuel consumption of vehicles is a significant factor in air pollution, and since importation of motor fuel can be a large part of a nation's foreign trade, many countries impose requirements for fuel economy. Different methods are used to approximate the actual performance of the vehicle. The energy in fuel is required to overcome various losses (wind resistance, tire drag, and others) encountered while propelling the vehicle, and to provide power to vehicle systems such as ignition or air conditioning. Various strategies can be employed to reduce losses at each of the conversions between the chemical energy in the fuel and the kinetic energy of the vehicle. Driver behavior can affect fuel economy; maneuvers such as sudden acceleration and heavy braking waste energy.

Electric cars do not directly burn fuel, and so do not have fuel economy per se, but equivalence measures, such as miles per gallon gasoline equivalent, have been created to attempt to compare them.

Units of measure

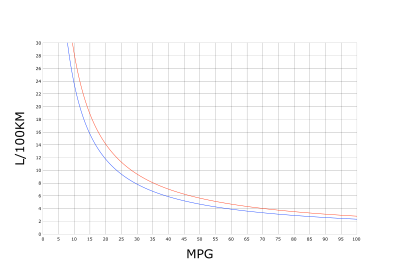

MPG to L/100 km conversion chart: blue, U.S. gallon; red, imperial gallon.

Fuel economy is the relationship between the distance traveled and fuel consumed.

Fuel economy can be expressed in two ways:

- Units of fuel per fixed distance

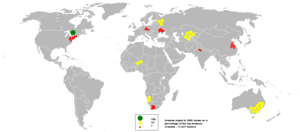

- Generally expressed as liters per 100 kilometers (L/100 km), used in most European countries, China, South Africa, Australia and New Zealand. British, Irish and Canadian law allow for the use of either liters per 100 kilometers or miles per imperial gallon. The window sticker on new US cars displays the vehicle's fuel consumption in US gallons per 100 miles, in addition to the traditional MPG number.

- Units of distance per fixed fuel unit

- Miles per gallon (mpg) is commonly used in the United States, the United Kingdom, and Canada (alongside L/100 km). Kilometers per liter (km/L) is more commonly used elsewhere in the Americas, Asia, parts of Africa and Oceania. In Arab countries km/20 L is used; this is known as kilometers per tanaka (or Tanakeh), a tanaka being a metal container with a volume of twenty liters. Both mpg and km/L are units of distance per fixed fuel amount (a higher value means better fuel economy), whereas L/100 km is a unit of fuel consumption per fixed distance (a higher value means worse fuel economy). When the mpg unit is used, it is necessary to identify the type of gallon used: the imperial gallon is 4.54609 liters, and the U.S. gallon is about 3.785 liters.
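Because mpg and km/L measure distance per fuel while L/100 km measures fuel per distance, converting between them is a reciprocal, not a linear, operation. The following is a minimal sketch of the conversions; the function names are illustrative, and the only constants are the exact definitions of the gallons and the mile.

```python
# Liters per gallon and kilometers per mile; all three values are exact definitions.
LITERS_PER_GALLON = {"us": 3.785411784, "imp": 4.54609}
KM_PER_MILE = 1.609344


def _liters(gallon: str) -> float:
    """Look up the size of the gallon in liters ("us" or "imp")."""
    try:
        return LITERS_PER_GALLON[gallon]
    except KeyError:
        raise ValueError(f"unknown gallon type: {gallon!r}") from None


def mpg_to_l_per_100km(mpg: float, gallon: str = "us") -> float:
    """Distance per fuel (mpg) -> fuel per fixed distance (L/100 km)."""
    km_per_liter = mpg * KM_PER_MILE / _liters(gallon)
    return 100.0 / km_per_liter


def l_per_100km_to_mpg(l_per_100km: float, gallon: str = "us") -> float:
    """Fuel per fixed distance (L/100 km) -> distance per fuel (mpg)."""
    return 100.0 * _liters(gallon) / (l_per_100km * KM_PER_MILE)


def km_per_l_to_l_per_100km(km_per_l: float) -> float:
    """Kilometers per liter -> liters per 100 km (a simple reciprocal)."""
    return 100.0 / km_per_l


print(round(km_per_l_to_l_per_100km(18.1), 1))     # Airwave display: 5.5
print(round(l_per_100km_to_mpg(5.5), 1))           # 42.8 mpg-US
print(round(l_per_100km_to_mpg(4.4, "imp"), 1))    # 64.2 mpg-imp
```

The same gallon-type argument must be passed in both directions; mixing US and imperial gallons is the most common source of mismatched figures.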

Fuel economy statistics

While the thermal efficiency (mechanical output to chemical energy in fuel) of petroleum engines has increased since the beginning of the automotive era to a current maximum of 36.4%, this is not the only factor in fuel economy. The design of the automobile as a whole and its usage pattern affect fuel economy. Published fuel economy figures vary between jurisdictions due to differences in testing protocols.

One of the first studies to determine fuel economy in the United States was the Mobil Economy Run, an event that took place every year from 1936 (except during World War II) to 1968. It was designed to provide real fuel efficiency numbers during a coast-to-coast test on real roads, with regular traffic and weather conditions. The Mobil Oil Corporation sponsored it, and the United States Auto Club (USAC) sanctioned and operated the run. In more recent studies, the average fuel economy for new passenger cars in the United States improved from 17 mpg (13.8 L/100 km) in 1978 to more than 22 mpg (10.7 L/100 km) in 1982.

The average fuel economy in 2008 for new cars, light trucks and SUVs in the United States was 26.4 mpgUS (8.9 L/100 km). 2008 model year cars classified as "midsize" by the US EPA ranged from 11 to 46 mpgUS (21 to 5 L/100 km). Meanwhile, due to environmental concerns over CO2 emissions, new EU regulations are being introduced to reduce the average emissions of cars sold beginning in 2012 to 130 g/km of CO2, equivalent to 4.5 L/100 km (52 mpgUS, 63 mpgimp) for a diesel-fueled car, and 5.0 L/100 km (47 mpgUS, 56 mpgimp) for a gasoline (petrol)-fueled car.

The average consumption across the fleet is not immediately affected by the new vehicle fuel economy: for example, Australia's car fleet average in 2004 was 11.5 L/100 km (20.5 mpgUS), compared with the average new car consumption in the same year of 9.3 L/100 km (25.3 mpgUS).

Speed and fuel economy studies

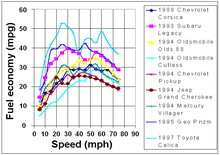

1997 fuel economy statistics for various US models

Fuel economy at steady speeds with selected vehicles was studied in 2010. The most recent study indicates greater fuel efficiency at higher speeds than earlier studies did. For example, some vehicles achieve better fuel economy at 100 km/h (62 mph) than at 70 km/h (43 mph), although 100 km/h is not their most economical speed. The 1994 Oldsmobile Cutlass Ciera with the LN2 2.2L engine has its best economy at 90 km/h (56 mph) (8.1 L/100 km (29 mpg‑US)), and gets better economy at 105 km/h (65 mph) than at 72 km/h (45 mph) (9.4 L/100 km (25 mpg‑US) vs 22 mpg‑US (11 L/100 km)). The proportion of driving on high-speed roadways varies from 4% in Ireland to 41% in the Netherlands.

When the US National Maximum Speed Law's 55 mph (89 km/h) speed limit was mandated, there were complaints that fuel economy could decrease instead of increase. The 1997 Toyota Celica got better fuel efficiency at 105 km/h (65 mph) than it did at 65 km/h (40 mph) (5.41 L/100 km (43.5 mpg‑US) vs 5.53 L/100 km (42.5 mpg‑US)), although it did even better at 60 mph (97 km/h) than at 65 mph (105 km/h) (48.4 mpg‑US (4.86 L/100 km) vs 43.5 mpg‑US (5.41 L/100 km)), and reached its best economy (52.6 mpg‑US (4.47 L/100 km)) at only 25 mph (40 km/h). Other vehicles tested had from 1.4 to 20.2% better fuel efficiency at 90 km/h (56 mph) vs. 105 km/h (65 mph). Their best economy was reached at speeds of 40 to 90 km/h (25 to 56 mph).
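A percentage such as "20.2% better fuel efficiency" depends on whether it is computed on a distance-per-fuel figure (mpg) or on fuel used over a fixed distance (L/100 km); the two are not the same number. The sketch below, with illustrative function names, shows both measures using the Celica figures quoted above.

```python
def percent_mpg_gain(mpg_new: float, mpg_old: float) -> float:
    """Improvement measured in distance-per-fuel terms (mpg or km/L)."""
    return (mpg_new / mpg_old - 1.0) * 100.0


def percent_fuel_saved(mpg_new: float, mpg_old: float) -> float:
    """Fuel saved over the same distance; equals the relative drop in L/100 km."""
    return (1.0 - mpg_old / mpg_new) * 100.0


# 1997 Toyota Celica figures from the text: 48.4 mpg-US at 60 mph vs 43.5 at 65 mph.
gain = percent_mpg_gain(48.4, 43.5)
saved = percent_fuel_saved(48.4, 43.5)
print(f"{gain:.1f}% more mpg, {saved:.1f}% less fuel")  # 11.3% more mpg, 10.1% less fuel
```

The fuel saving is always smaller than the mpg gain: if the 20.2% figure is an mpg gain, it corresponds to about 16.8% less fuel over the same distance.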

Officials hoped that the 55 mph (89 km/h) limit, combined with a ban on ornamental lighting, no gasoline sales on Sunday, and a 15% cut in gasoline production, would reduce total gas consumption by 200,000 barrels a day, representing a 2.2% drop from annualized 1973 gasoline consumption levels.

This was partly based on a belief that cars achieve maximum efficiency between 65 and 80 km/h (40 and 50 mph) and that trucks and buses were most efficient at 55 mph (89 km/h).

In 1998, the U.S. Transportation Research Board footnoted an estimate that the 1974 National Maximum Speed Limit (NMSL) reduced fuel consumption by 0.2 to 1.0 percent. Rural interstates, the roads most visibly affected by the NMSL, accounted for 9.5% of U.S. vehicle-miles traveled in 1973, but such free-flowing roads typically provide more fuel-efficient travel than conventional roads.

Differences in testing standards

Identical vehicles can have varying fuel consumption figures listed depending upon the testing methods of the jurisdiction.

- Australia (L/100 km) – 'combined' 9.1, 'urban' 12.7, 'extra-urban' 7.0

- Canada (L/100 km) – 'combined' 9.6, 'city' 11.1, 'highway' 7.8

- European Union (L/100 km) – 'combined' 8.9, 'urban' 12.5, 'extra-urban' 6.9

- United States (L/100 km) – 'combined' 9.8, 'city' 11.2, 'highway' 8.1
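Assuming the US and Canadian combined figures use the 55% city / 45% highway split that this article gives for the US gas guzzler tax (an assumption for these particular figures), the combination is a distance-weighted average of fuel consumption. That is a plain weighted mean in L/100 km but a weighted harmonic mean in mpg. A minimal sketch with illustrative names:

```python
def combined_l_per_100km(city: float, highway: float, city_share: float = 0.55) -> float:
    """Combine city/highway consumption by share of distance driven (L/100 km)."""
    return city_share * city + (1.0 - city_share) * highway


def combined_mpg(city_mpg: float, highway_mpg: float, city_share: float = 0.55) -> float:
    """The same combination in mpg terms is a weighted *harmonic* mean."""
    return 1.0 / (city_share / city_mpg + (1.0 - city_share) / highway_mpg)


print(round(combined_l_per_100km(11.2, 8.1), 1))  # United States: 9.8
print(round(combined_l_per_100km(11.1, 7.8), 1))  # Canada: 9.6
```

Under this assumption both combined figures in the list are reproduced. Averaging mpg values arithmetically would instead overstate the combined economy, because it gives too much weight to the efficient highway portion.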

Energy considerations

Since the total force opposing the vehicle's motion (at constant speed) multiplied by the distance through which the vehicle travels represents the work that the vehicle's engine must perform, the study of fuel economy (the amount of energy consumed per unit of distance traveled) requires a detailed analysis of the forces that oppose a vehicle's motion. In terms of physics, force is the rate at which the amount of work generated (energy delivered) varies with the distance traveled, or:

$$F = \frac{dW}{ds}$$

where $W$ is the work done and $s$ is the distance traveled.

Note: The amount of work generated by the vehicle's power source (energy delivered by the engine) would be exactly proportional to the amount of fuel energy consumed by the engine if the engine's efficiency were the same regardless of power output, but this is not necessarily the case due to the operating characteristics of the internal combustion engine.

For a vehicle whose source of power is a heat engine (an engine that uses heat to perform useful work), the amount of fuel energy that a vehicle consumes per unit of distance (on a level road) depends upon:

- The thermodynamic efficiency of the heat engine;

- The forces of friction within the mechanical system that delivers engine output to the wheels;

- The forces of friction in the wheels and between the road and the wheels (rolling friction);

- Other internal forces that the engine works against (electrical generator, air conditioner, water pump, engine fan, etc.);

- External forces that resist motion (e.g., wind, rain);

- Non-regenerative braking force (brakes that turn motion energy into heat rather than storing it in a useful form; e.g., electrical energy in hybrid vehicles);

- Fuel consumed while the engine is on standby and not powering the wheels, i.e., while the vehicle is coasting, braking or idling.

Energy dissipation in city and highway driving for a mid-size gasoline-powered car.

Ideally, a car traveling at a constant velocity on level ground in a vacuum with frictionless wheels could travel at any speed without consuming any energy beyond what is needed to get the car up to speed. Less ideally, any vehicle must expend energy on overcoming road load forces, which consist of aerodynamic drag, tire rolling resistance, and inertial energy that is lost when the vehicle is decelerated by friction brakes. With ideal regenerative braking, the inertial energy could be completely recovered, but there are few options for reducing aerodynamic drag or rolling resistance other than optimizing the vehicle's shape and the tire design. Road load energy, or the energy demanded at the wheels, can be calculated by evaluating the vehicle equation of motion over a specific driving cycle.
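Evaluating the equation of motion over a driving cycle can be sketched as follows. This is a minimal level-road model using standard textbook approximations (quadratic aerodynamic drag, a constant rolling-resistance coefficient, no driveline losses); every vehicle parameter is a hypothetical value chosen for illustration, and the function names are not from any standard tool.

```python
# Hypothetical mid-size car; every parameter here is an illustrative assumption.
MASS_KG = 1500.0
CD_A_M2 = 0.65      # drag coefficient x frontal area
C_RR = 0.010        # tire rolling-resistance coefficient
AIR_DENSITY = 1.2   # kg/m^3
G = 9.81            # m/s^2


def tractive_force(v: float, a: float) -> float:
    """Force (N) needed at the wheels on a level road at speed v (m/s), accel a (m/s^2)."""
    drag = 0.5 * AIR_DENSITY * CD_A_M2 * v * v    # grows with the square of speed
    rolling = C_RR * MASS_KG * G if v > 0 else 0.0
    return drag + rolling + MASS_KG * a


def wheel_energy_j(speeds: list[float], dt: float = 1.0) -> float:
    """Energy demanded at the wheels over a speed trace sampled every dt seconds.

    Steps with negative tractive power are counted as zero: that energy is taken
    to be lost in friction brakes, i.e. no regenerative braking.
    """
    energy = 0.0
    for v0, v1 in zip(speeds, speeds[1:]):
        v_mid = 0.5 * (v0 + v1)
        accel = (v1 - v0) / dt
        power_w = tractive_force(v_mid, accel) * v_mid  # drag power grows with speed cubed
        energy += max(power_w, 0.0) * dt
    return energy


# Toy cycle: 0 -> 20 m/s in 10 s, cruise 20 s, brake to a stop in 10 s.
trace = [2.0 * i for i in range(11)] + [20.0] * 20 + [20.0 - 2.0 * i for i in range(1, 11)]
distance_m = sum(0.5 * (a + b) for a, b in zip(trace, trace[1:]))
print(f"{wheel_energy_j(trace) / 1e3:.0f} kJ over {distance_m:.0f} m")
```

Clipping negative power at zero is what models friction braking; replacing `max(power_w, 0.0)` with a partial credit for negative power would be one way to approximate regenerative braking.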

The vehicle power train must then provide this minimum energy in order to move the vehicle, and will lose a large amount of additional energy in the process of converting fuel energy into work and transmitting it to the wheels. Overall, the sources of energy loss in moving a vehicle may be summarized as follows:

- Engine efficiency (20–30%), which varies with engine type, the mass of the automobile and its load, and engine speed (usually measured in RPM).

- Aerodynamic drag force, which increases roughly with the square of the car's speed; note that the power needed to overcome drag increases with the cube of the speed.

- Rolling friction.

- Braking, although regenerative braking captures some of the energy that would otherwise be lost.

- Losses in the transmission. Manual transmissions can be up to 94% efficient, whereas older automatic transmissions may be as low as 70% efficient. Automatically controlled shifting of gearboxes that have the same internals as manual boxes will give the same efficiency as a pure manual gearbox, plus the bonus of added intelligence selecting optimal shifting points.

- Air conditioning. The power required for the engine to turn the compressor decreases the fuel-efficiency, though only when in use. This may be offset by the reduced drag of the vehicle compared with driving with the windows down. The efficiency of AC systems gradually deteriorates due to dirty filters etc.; regular maintenance prevents this. The extra mass of the air conditioning system will cause a slight increase in fuel consumption.

- Power steering. Older hydraulic power steering systems are powered by a hydraulic pump constantly engaged to the engine. Power assistance required for steering is inversely proportional to the vehicle speed so the constant load on the engine from a hydraulic pump reduces fuel efficiency. More modern designs improve fuel efficiency by only activating the power assistance when needed; this is done by using either direct electrical power steering assistance or an electrically powered hydraulic pump.

- Cooling. Older cooling systems used a constantly engaged mechanical fan to draw air through the radiator at a rate directly related to the engine speed. This constant load reduces efficiency. More modern systems use electrical fans to draw additional air through the radiator when extra cooling is required.

- Electrical systems. Headlights, battery charging, active suspension, circulating fans, defrosters, media systems, speakers, and other electronics can also significantly increase fuel consumption, as the energy to power these devices causes increased load on the alternator. Since alternators are commonly only 40–60% efficient, the added load from electronics on the engine can be as high as 3 horsepower (2.2 kW) at any speed including idle. In the FTP 75 cycle test, a 200 watt load on the alternator reduces fuel efficiency by 1.7 MPG. Headlights, for example, consume 110 watts on low and up to 240 watts on high. These electrical loads can cause much of the discrepancy between real world and EPA tests, which only include the electrical loads required to run the engine and basic climate control.

- Standby. The energy needed to keep the engine running while it is not providing power to the wheels, i.e., when stopped, coasting or braking.

Fuel-efficiency decreases from electrical loads are most pronounced at lower speeds because most electrical loads are constant while engine load increases with speed. So at a lower speed a higher proportion of engine horsepower is used by electrical loads. Hybrid cars see the greatest effect on fuel efficiency from electrical loads because of this proportional effect.
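The proportional effect can be illustrated numerically. The sketch below assumes a hypothetical car's steady-speed road load, a constant 200 W electrical load, and a 50% efficient alternator (within the 40–60% range above); it ignores drivetrain losses and the variation of engine efficiency with load, so it shows only the trend, not real figures.

```python
# Illustrative assumptions (not from the text): steady-speed, level-road load for a
# hypothetical car, plus a constant 200 W electrical load and a 50%-efficient alternator.
AIR_DENSITY = 1.2         # kg/m^3
CD_A_M2 = 0.65            # drag coefficient x frontal area, m^2
ROLLING_FORCE_N = 147.0   # rolling resistance, roughly 0.01 x 1500 kg x 9.81 m/s^2


def road_power_w(speed_kmh: float) -> float:
    """Power at the wheels to hold a steady speed: (drag + rolling force) x speed."""
    v = speed_kmh / 3.6
    return (0.5 * AIR_DENSITY * CD_A_M2 * v ** 2 + ROLLING_FORCE_N) * v


def electrical_share(speed_kmh: float, electrical_w: float = 200.0,
                     alternator_eff: float = 0.5) -> float:
    """Fraction of engine output that goes to the alternator at a given steady speed."""
    shaft_w = electrical_w / alternator_eff  # mechanical input the alternator needs
    return shaft_w / (shaft_w + road_power_w(speed_kmh))


for speed in (30, 60, 90, 120):
    print(f"{speed:>3} km/h: {electrical_share(speed):.1%}")
```

With these assumed values the electrical share falls from roughly 22% of engine output at 30 km/h to about 2% at 120 km/h, because the road load grows with speed while the electrical load stays fixed.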

Future technologies

Technologies that may improve fuel efficiency, but are not yet on the market, include:

- HCCI (Homogeneous Charge Compression Ignition) combustion

- Scuderi engine

- Compound engines

- Two-stroke diesel engines

- High-efficiency gas turbine engines

- BMW's Turbosteamer – using the heat from the engine to spin a mini turbine to generate power

- Vehicle electronic control systems that automatically maintain distances between vehicles on motorways/freeways that reduce ripple back braking, and consequent re-acceleration.

- Time-optimized piston path, to capture energy from hot gases in the cylinders when they are at their highest temperatures

- Stirling hybrid battery vehicle

Many aftermarket consumer products exist that are purported to increase fuel economy; many of these claims have been discredited. In the United States, the Environmental Protection Agency maintains a list of devices that have been tested by independent laboratories and makes the test results available to the public.

Fuel economy data reliability

The mandatory publication of the fuel consumption by the manufacturer led some to use dubious practices to reach better values in the past. If the test is on a test stand, the vehicle may detect open doors and adapt the engine control. Also, when driven according to the test regime, the parameters may adapt automatically. Test laboratories use a "golden car" that is tested in each one to check that each lab produces the same set of measurements for a given drive cycle.

Tire pressures and lubricants have to be as recommended by the manufacturer. (Higher tire pressures are required on a particular dynamometer type, but this is to compensate for the different rolling resistance of the dynamometer, not to produce an unrealistic load on the vehicle.)

Normally the quoted figures a manufacturer publishes have to be proved by the relevant authority witnessing vehicle/engine tests. Some jurisdictions independently test emissions of vehicles in service, and as a final measure can force a recall of all of a particular type of vehicle if the customer vehicles do not fulfill manufacturers' claims within reasonable limits. The expense and bad publicity from such a recall encourages manufacturers to publish realistic figures. The US Federal government retests 10–15% of models to make sure that the manufacturer's tests are accurate.

Real-world fuel consumption can vary greatly, as it can be affected by many factors that have little to do with the vehicle. Driving conditions (weather, traffic, temperature), driving style (hard braking, jackrabbit starts, and speeding), road conditions (paved vs gravel, smooth vs potholed), and things like carrying excess weight, roof racks, and fuel quality can all combine to dramatically increase fuel consumption. Expecting a vehicle to perform consistently in the face of so many variables is impossible, as is expecting one set of numbers to encompass every driver and their personal circumstances.

The ratings are meant to provide a comparison, and are not a promise of actual performance.

Concerns over EPA estimates

For many years critics claimed that EPA (U.S. Environmental Protection Agency) estimated fuel economy figures were misleading. The primary arguments of the EPA detractors focused on the lack of real-world testing and the very limited scale (i.e., city or highway).

Partly as a response to these criticisms, the EPA changed its fuel economy rating system in 2008 in an attempt to address these concerns more adequately. Instead of testing simply in two presumed modes, the testing now covers:

- Faster speeds and acceleration

- Air conditioner use

- Colder outside temperatures

While the new EPA standards may represent an improvement, real-world user data may still be the best way to gather accurate fuel economy information. As such, the EPA has also set up a website (http://www.fueleconomy.gov/mpg/MPG.do?action=browseList) where drivers can enter and track their own real-world fuel economy numbers.

There are also a number of websites that attempt to track and report individual user fuel economy data through real-life driving. Sites or publications such as Consumer Reports, Edmunds.com, Consumer Guide and TrueDelta.com offer this service and claim more accurate numbers than those listed by the EPA.

Fuel economy maximizing behaviors

Governments, various environmentalist organizations, and companies like Toyota and Shell Oil Company have historically urged drivers to maintain adequate air pressure in tires and careful acceleration/deceleration habits. Keeping track of fuel efficiency stimulates fuel economy-maximizing behavior.
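A common way for a driver to keep track of fuel efficiency is the full-tank method: fill the tank to full every time and divide the fuel added by the distance since the previous fill-up. The sketch below assumes every entry is a fill to full; the class, the function name and the log values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FillUp:
    odometer_km: float  # odometer reading at the pump
    liters: float       # fuel needed to fill the tank back to full


def interval_consumption(log: list[FillUp]) -> list[float]:
    """L/100 km for each interval between consecutive full-tank fill-ups.

    Assumes every fill-up tops the tank to full, so the fuel added at one stop
    equals the fuel burned since the previous stop; the first entry is a baseline.
    """
    results = []
    for prev, cur in zip(log, log[1:]):
        distance_km = cur.odometer_km - prev.odometer_km
        if distance_km <= 0:
            raise ValueError("odometer readings must increase")
        results.append(100.0 * cur.liters / distance_km)
    return results


# Hypothetical log entries for illustration only.
log = [FillUp(10_000, 40.0), FillUp(10_600, 42.0), FillUp(11_100, 36.0)]
print(interval_consumption(log))  # [7.0, 7.2]
```

The fuel added at the very first entry is ignored because the distance it covered is unknown; partial fills would have to be summed into the next full fill to keep the method valid.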

A five-year partnership between Michelin and Anglian Water shows that 60,000 liters of fuel can be saved through correct tire pressure. The Anglian Water fleet of 4,000 vans and cars is now lasting its full lifetime. This shows the impact that tire pressures have on fuel efficiency.

Fuel economy as part of quality management regimes

Environmental management systems such as EMAS, as well as good fleet management, include record keeping of fleet fuel consumption. Quality management uses those figures to steer the measures acting on the fleets. This is a way to check whether procurement, driving, and maintenance in total have contributed to changes in the fleet's overall consumption.
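When fleet records are aggregated, the overall consumption should be total fuel divided by total distance; simply averaging each vehicle's L/100 km ignores how far each vehicle was driven. A minimal sketch, with hypothetical function names and a hypothetical two-vehicle fleet:

```python
def fleet_l_per_100km(records: list[tuple[float, float]]) -> float:
    """Fleet consumption from (liters, km) per vehicle: total fuel over total distance."""
    total_liters = sum(liters for liters, _ in records)
    total_km = sum(km for _, km in records)
    return 100.0 * total_liters / total_km


def unweighted_mean(records: list[tuple[float, float]]) -> float:
    """Average of per-vehicle L/100 km; ignores how far each vehicle was driven."""
    return sum(100.0 * liters / km for liters, km in records) / len(records)


# Hypothetical fleet: a high-mileage van and a little-used car.
fleet = [(3_000.0, 30_000.0), (300.0, 6_000.0)]
print(round(fleet_l_per_100km(fleet), 2), round(unweighted_mean(fleet), 2))  # 9.17 7.5
```

The unweighted mean here understates the fleet's real consumption because the thirsty van covers most of the distance.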

Fuel economy standards and testing procedures

Australia

From October 2008, all new cars had to be sold with a sticker on the windscreen showing the fuel consumption and the CO2 emissions. Fuel consumption figures are expressed as urban, extra-urban and combined, measured according to ECE Regulations 83 and 101, which are based on the European driving cycle; previously, only the combined number was given.

Australia also uses a star rating system, from one to five stars, that combines greenhouse gases with pollution, rating each from 0 to 10 with ten being best. To get 5 stars a combined score of 16 or better is needed, so a car with a 10 for economy (greenhouse) and a 6 for emissions, or a 6 for economy and a 10 for emissions, or anything in between, would get the highest 5-star rating. The lowest-rated car is the SsangYong Korando with automatic transmission, with one star, while the highest rated was the Toyota Prius hybrid. The Fiat 500, Fiat Punto and Fiat Ritmo as well as the Citroën C3 also received 5 stars.

The greenhouse rating depends on the fuel economy and the type of fuel used. A greenhouse rating of 10 requires 60 g or less of CO2 per km, while a rating of zero corresponds to more than 440 g/km CO2. The highest greenhouse rating of any 2009 car listed is the Toyota Prius, with 106 g/km CO2 and 4.4 L/100 km (64 mpg‑imp; 53 mpg‑US). Several other cars also received the same rating of 8.5 for greenhouse. The lowest rated was the Ferrari 575 at 499 g/km CO2 and 21.8 L/100 km (13.0 mpg‑imp; 10.8 mpg‑US). The Bentley also received a zero rating, at 465 g/km CO2. The best fuel economy of any year belongs to the 2004–2005 Honda Insight, at 3.4 L/100 km (83 mpg‑imp; 69 mpg‑US).

Canada

Vehicle manufacturers follow a controlled laboratory testing procedure to generate the fuel consumption data that they submit to the Government of Canada. This controlled method of fuel consumption testing, including the use of standardized fuels, test cycles and calculations, is used instead of on-road driving to ensure that all vehicles are tested under identical conditions and that the results are consistent and repeatable. Selected test vehicles are “run in” for about 6,000 km before testing. The vehicle is then mounted on a chassis dynamometer programmed to take into account the aerodynamic efficiency, weight and rolling resistance of the vehicle. A trained driver runs the vehicle through standardized driving cycles that simulate trips in the city and on the highway. Fuel consumption ratings are derived from the emissions generated during the driving cycles.

The 5-cycle test:

- The city test simulates urban driving in stop-and-go traffic with an average speed of 34 km/h and a top speed of 90 km/h. The test runs for approximately 31 minutes and includes 23 stops. The test begins from a cold engine start, which is similar to starting a vehicle after it has been parked overnight during the summer. The final phase of the test repeats the first eight minutes of the cycle but with a hot engine start. This simulates restarting a vehicle after it has been warmed up, driven and then stopped for a short time. Over five minutes of test time are spent idling, to represent waiting at traffic lights. The ambient temperature of the test cell starts at 20 °C and ends at 30 °C.

- The highway test simulates a mixture of open highway and rural road driving, with an average speed of 78 km/h and a top speed of 97 km/h. The test runs for approximately 13 minutes and does not include any stops. The test begins from a hot engine start. The ambient temperature of the test cell starts at 20 °C and ends at 30 °C.

- In the cold temperature operation test, the same driving cycle is used as in the standard city test, except that the ambient temperature of the test cell is set to −7 °C.

- In the air conditioning test, the ambient temperature of the test cell is raised to 35 °C. The vehicle's climate control system is then used to lower the internal cabin temperature. Starting with a warm engine, the test averages 35 km/h and reaches a maximum speed of 88 km/h. Five stops are included, with idling occurring 19% of the time.

- The high speed/quick acceleration test averages 78 km/h and reaches a top speed of 129 km/h. Four stops are included and brisk acceleration maximizes at a rate of 13.6 km/h per second. The engine begins warm and air conditioning is not used. The ambient temperature of the test cell is constantly 25 °C.

Tests 2, 4, and 5 are averaged to create the highway driving fuel consumption rate.

Europe

Irish fuel economy label.

In the European Union, passenger vehicles are commonly tested using two drive cycles, and corresponding fuel economies are reported as 'urban' and 'extra-urban', in litres per 100 km and (in the UK) in miles per imperial gallon.

The urban economy is measured using the test cycle known as ECE-15, first introduced in 1970 by EC Directive 70/220/EWG and finalized by EEC Directive 90/C81/01 in 1999. It simulates a 4,052 m (2.518 mile) urban trip at an average speed of 18.7 km/h (11.6 mph) and at a maximum speed of 50 km/h (31 mph).

The extra-urban driving cycle or EUDC lasts 400 seconds (6 minutes 40 seconds) at an average speed 62.6 km/h (39 mph) and a top speed of 120 km/h (74.6 mph).
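The cycle parameters above are related by distance = average speed × duration, and a combined figure can be formed as total fuel over the total distance of both parts. Treating the combined figure this way is an assumption (the text does not state the formula), but with the ECE-15 distance and the EUDC speed and duration quoted above it reproduces the Australian combined figure from the testing-standards list. Function names are illustrative.

```python
def cycle_distance_km(avg_speed_kmh: float, duration_s: float) -> float:
    """Distance implied by holding an average speed for a given duration."""
    return avg_speed_kmh * duration_s / 3600.0


def distance_weighted_consumption(parts: list[tuple[float, float]]) -> float:
    """Total fuel over total distance; each part is (distance_km, l_per_100km)."""
    fuel_l = sum(km * rate / 100.0 for km, rate in parts)
    total_km = sum(km for km, _ in parts)
    return 100.0 * fuel_l / total_km


urban_km = 4.052                                # urban (ECE-15) distance from the text
extra_urban_km = cycle_distance_km(62.6, 400)   # EUDC: about 6.96 km

# Australian urban/extra-urban figures from the testing-standards list.
combined = distance_weighted_consumption([(urban_km, 12.7), (extra_urban_km, 7.0)])
print(f"EUDC ~{extra_urban_km:.2f} km, combined ~{combined:.1f} L/100 km")
```

Because published urban and extra-urban figures are rounded to one decimal place, recomputed combined values can differ from the published ones by about 0.1 L/100 km.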

EU fuel consumption numbers are often considerably lower than corresponding US EPA test results for the same vehicle. For example, the 2011 Honda CR-Z with a six-speed manual transmission is rated 6.1/4.4 L/100 km in Europe and 7.6/6.4 L/100 km (31/37 mpg‑US) in the United States.

In the European Union, advertising has to show carbon dioxide (CO2) emission and fuel consumption data in a clear way, as described in the UK Statutory Instrument 2004 No 1661. Since September 2005 a color-coded "Green Rating" sticker has been available in the UK, which rates fuel economy by CO2 emissions: A: <= 100 g/km, B: 101–120, C: 121–150, D: 151–165, E: 166–185, F: 186–225, and G: 226+. Depending on the type of fuel used, for gasoline A corresponds to about 4.1 L/100 km (69 mpg‑imp; 57 mpg‑US) and G to about 9.5 L/100 km (30 mpg‑imp; 25 mpg‑US). Ireland has a very similar label, but the ranges are slightly different, with A: <= 120 g/km, B: 121–140, C: 141–155, D: 156–170, E: 171–190, F: 191–225, and G: 226+.
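The UK and Irish bands are a simple threshold lookup. The sketch below transcribes the band limits from the text as inclusive upper bounds; assigning fractional values that fall between the published integer ranges (e.g. 100.5 g/km) to the next band up is an interpretation, and the names are illustrative.

```python
# Inclusive upper bounds in g/km, transcribed from the text; anything higher is band G.
UK_BANDS = ((100, "A"), (120, "B"), (150, "C"), (165, "D"), (185, "E"), (225, "F"))
IRELAND_BANDS = ((120, "A"), (140, "B"), (155, "C"), (170, "D"), (190, "E"), (225, "F"))


def co2_band(g_per_km: float, bands: tuple[tuple[int, str], ...] = UK_BANDS) -> str:
    """Return the label band for a CO2 figure, scanning bounds in ascending order."""
    for upper, band in bands:
        if g_per_km <= upper:
            return band
    return "G"


print(co2_band(106), co2_band(106, IRELAND_BANDS))  # B A
```

The same 106 g/km car lands in different bands under the two schemes, which is why labels from different countries should not be compared letter for letter.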

In the UK, the ASA (Advertising Standards Authority) has claimed that fuel consumption figures are misleading. This is often the case with European vehicles, as the MPG (miles per gallon) figures that can be advertised are often not the same as those achieved in 'real world' driving.

The ASA has said that car manufacturers can use ‘cheats’ to prepare their vehicles for the compulsory fuel efficiency and emissions tests in a way designed to make them look as ‘clean’ as possible. This practice is common in petrol and diesel vehicle tests, but hybrid and electric vehicles are not immune, as manufacturers apply these techniques to them as well.

Car experts also assert that the official MPG figures given by manufacturers do not represent the true MPG values from real-world driving. Websites have been set up to show the real-world MPG figures, based on crowd-sourced data from real users, vs the official MPG figures.

The major loopholes in the current EU tests allow car manufacturers a number of ‘cheats’ to improve results. Car manufacturers can:

- Disconnect the alternator, so that no energy is used to recharge the battery;

- Use special lubricants that are not used in production cars, in order to reduce friction;

- Turn off all electrical gadgets, e.g. air conditioning and radio;

- Adjust brakes or even disconnect them to reduce friction;

- Tape up cracks between body panels and windows to reduce air resistance;

- Remove wing mirrors.

In the 2018 update of the ICCT data, the difference between the official and real-world figures was again 38%.

Japan

The evaluation criteria used in Japan reflect commonly found driving conditions, as the typical Japanese driver does not drive as fast as drivers in other regions.

10–15 mode

The 10–15 mode driving cycle test is the official fuel economy and emission certification test for new light duty vehicles in Japan. Fuel economy is expressed in km/L (kilometers per litre) and emissions are expressed in g/km. The test is carried out on a dynamometer and consists of 25 tests which cover idling, acceleration, steady running and deceleration, and simulate typical Japanese urban and/or expressway driving conditions. The running pattern begins with a warm start, lasts for 660 seconds (11 minutes) and runs at speeds up to 70 km/h (43.5 mph). The distance of the cycle is 6.34 km (3.9 mi), with an average speed of 25.6 km/h (15.9 mph) and a duration of 892 seconds (14.9 minutes), including the initial 15 mode segment.

JC08

A new, more demanding test, called the JC08, was established in December 2006 for Japan’s new standard that goes into effect in 2015, but it is already being used by several car manufacturers for new cars. The JC08 test is significantly longer and more rigorous than the 10–15 mode test. The running pattern with JC08 stretches out to 1200 seconds (20 minutes), there are both cold and warm start measurements, and the top speed is 82 km/h (51.0 mph). The economy ratings of the JC08 are lower than those of the 10–15 mode cycle, but they are expected to be closer to real-world results. The Toyota Prius became the first car to meet Japan’s new 2015 Fuel Economy Standards measured under the JC08 test.

New Zealand

Starting on 7 April 2008, all cars of up to 3.5 tonnes GVW sold other than by private sale need to have a fuel economy sticker applied (if available) that shows a rating from one half star to six stars, with the most economical cars having the most stars and the more fuel-hungry cars the fewest, along with the fuel economy in L/100 km and the estimated annual fuel cost for driving 14,000 km (at present fuel prices). The stickers must also appear on vehicles to be leased for more than 4 months. All new cars currently rated range from 6.9 L/100 km (41 mpg‑imp; 34 mpg‑US) to 3.8 L/100 km (74 mpg‑imp; 62 mpg‑US) and received respectively from 4.5 to 5.5 stars.

Saudi Arabia

The Kingdom of Saudi Arabia announced new light-duty vehicle fuel economy standards in November 2014, which became effective January 1, 2016 and will be fully phased in by January 1, 2018. A review of the targets will be carried out by December 2018, at which time targets for 2021–2025 will be set.

United States

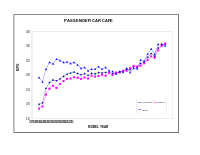

Motor vehicle fuel economy from 1966 to 2008.

US Energy Tax Act

The Energy Tax Act of 1978 in the US established a gas guzzler tax on the sale of new model year vehicles whose fuel economy fails to meet certain statutory levels. The tax applies only to cars (not trucks) and is collected by the IRS. Its purpose is to discourage the production and purchase of fuel-inefficient vehicles. The tax was phased in over ten years with rates increasing over time. It applies only to manufacturers and importers of vehicles, although presumably some or all of the tax is passed along to automobile consumers in the form of higher prices. Only new vehicles are subject to the tax, so no tax is imposed on used car sales. The tax is graduated to apply a higher tax rate for less-fuel-efficient vehicles. To determine the tax rate, manufacturers test all the vehicles at their laboratories for fuel economy. The US Environmental Protection Agency confirms a portion of those tests at an EPA lab.

In some cases, this tax may apply only to certain variants of a given model; for example, the 2004–2006 Pontiac GTO (captive import version of the Holden Monaro) did incur the tax when ordered with the four-speed automatic transmission, but did not incur the tax when ordered with the six-speed manual transmission.

EPA testing procedure through 2007

The "city" or Urban Dynamometer Driving Schedule (UDDS) used in the EPA Federal Test Procedure

The Highway Fuel Economy Driving Cycle (HWFET) used in the EPA Federal Test Procedure

The "highway" program or Highway Fuel Economy Driving Schedule (HWFET) is defined in 40 C.F.R. 600 App I and uses a warmed-up engine and makes no stops, averaging 48 mph (77 km/h) with a top speed of 60 mph (97 km/h) over a 10-mile (16 km) distance. The measurements are then adjusted downward by 10% (city) and 22% (highway) to more accurately reflect real-world results. A weighted average of city (55%) and highway (45%) fuel economies is used to determine the guzzler tax.

The procedure has been updated to FTP-75, adding a "hot start" cycle which repeats the "cold start" cycle after a 10-minute pause.

Because EPA figures had almost always indicated better efficiency than drivers achieved on the road, the EPA modified the method starting with the 2008 model year. Updated estimates are available for vehicles back to the 1985 model year.

EPA testing procedure: 2008 and beyond

2008 Monroney sticker highlights fuel economy.

SFTP US06 is a high-speed, quick-acceleration loop that lasts 10 minutes, covers 8 miles (13 km), averages 48 mph (77 km/h) and reaches a top speed of 80 mph (130 km/h). Four stops are included, and acceleration peaks at a brisk 8.46 mph (13.62 km/h) per second. The engine begins warm and air conditioning is not used. Ambient temperature varies between 68 °F (20 °C) and 86 °F (30 °C).

SFTP SC03 is the air conditioning test, which raises ambient temperatures to 95 °F (35 °C) and puts the vehicle's climate control system to use. Lasting 9.9 minutes, the 3.6-mile (5.8 km) loop averages 22 mph (35 km/h) and reaches a top speed of 54.8 mph (88.2 km/h). Five stops are included, idling occurs 19 percent of the time, and acceleration reaches 5.1 mph (8.2 km/h) per second. The engine begins warm.

Lastly, a cold temperature cycle uses the same parameters as the current city loop, except that ambient temperature is set to 20 °F (−7 °C).
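The quoted averages follow from each loop's distance and duration. The sketch below records the rounded US06 and SC03 figures from the text in a hypothetical `DriveCycle` record and recomputes the average speed; because the inputs are rounded, the results only approximately match the published averages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveCycle:
    """Summary figures for one dynamometer test loop."""
    name: str
    duration_min: float
    distance_mi: float
    top_speed_mph: float
    stops: int

    def average_speed_mph(self) -> float:
        # Average speed is distance divided by elapsed time in hours.
        return self.distance_mi / (self.duration_min / 60.0)


# Rounded figures quoted in the text.
US06 = DriveCycle("SFTP US06", duration_min=10.0, distance_mi=8.0,
                  top_speed_mph=80.0, stops=4)
SC03 = DriveCycle("SFTP SC03", duration_min=9.9, distance_mi=3.6,
                  top_speed_mph=54.8, stops=5)

for cycle in (US06, SC03):
    print(f"{cycle.name}: {cycle.average_speed_mph():.1f} mph average")
```

This prints 48.0 mph for US06 and 21.8 mph for SC03, consistent with the rounded 48 mph and 22 mph figures above.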

EPA tests for fuel economy do not include electrical load tests beyond climate control, which may account for some of the discrepancy between EPA and real-world fuel efficiency. A 200 W electrical load can produce a 0.4 km/L (0.94 mpg) reduction in fuel economy on the FTP-75 cycle.
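The two figures quoted for the 200 W penalty are the same quantity in different units. Because km/L and mpg both measure distance per volume, converting between them is a single constant factor (about 2.352), so a reduction expressed in one unit converts directly into the other. A minimal sketch, with a hypothetical helper name:

```python
KM_PER_MILE = 1.609344              # exact by definition
LITERS_PER_US_GALLON = 3.785411784  # exact by definition


def km_per_l_to_mpg_us(km_per_l: float) -> float:
    """Convert a distance-per-volume figure from km/L to miles per US gallon."""
    return km_per_l * LITERS_PER_US_GALLON / KM_PER_MILE


print(round(km_per_l_to_mpg_us(0.4), 2))  # 0.94
```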

Electric vehicles and hybrids

2010 Monroney sticker for a plug-in hybrid showing fuel economy in all-electric mode and gas-only mode.

For the fuel economy label of the Chevy Volt plug-in hybrid, the EPA rated the car separately for all-electric mode, expressed in miles per gallon gasoline equivalent (MPG-e), and for gasoline-only mode, expressed in conventional miles per gallon. The EPA also estimated an overall combined city/highway gas-electricity fuel economy rating expressed in MPG-e. The label also includes a table showing fuel economy and electricity consumed for five different scenarios: 30 miles (48 km), 45 miles (72 km), 60 miles (97 km) and 75 miles (121 km) driven between full charges, plus a gasoline-only scenario in which the car is never charged. This information was included to make consumers aware that the fuel economy outcome varies with the distance driven between charges. For all-electric mode, the estimated energy consumption in kWh per 100 miles (160 km) is also shown.
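The scenario table reflects a simple trade-off: the longer the stretch between charges, the larger the share of miles driven on gasoline. A minimal sketch of that arithmetic follows. The vehicle parameters are hypothetical, `phev_trip` is an illustrative helper, and the "all electric, then all gasoline" model is a simplifying assumption rather than the EPA's labeling method.

```python
import math


def phev_trip(miles: float, ev_range_mi: float, kwh_per_100mi: float,
              gasoline_mpg: float) -> tuple[float, float, float]:
    """Return (kWh used, gallons used, miles per gallon of gasoline) for one
    stretch of driving that starts on a full charge.

    Simplifying assumption: the car runs purely on electricity until the
    all-electric range is used up, then purely on gasoline.
    """
    electric_miles = min(miles, ev_range_mi)
    gasoline_miles = miles - electric_miles
    kwh = electric_miles * kwh_per_100mi / 100.0
    gallons = gasoline_miles / gasoline_mpg
    # No gasoline burned means the per-gallon figure is unbounded.
    mpg = miles / gallons if gallons > 0 else math.inf
    return kwh, gallons, mpg


# Hypothetical vehicle: 40-mile electric range, 30 kWh/100 mi, 40 mpg on gasoline.
for distance in (30, 45, 60, 75):
    kwh, gal, mpg = phev_trip(distance, 40, 30, 40)
    print(f"{distance} mi between charges: {kwh:.1f} kWh, {gal:.2f} gal, {mpg:.0f} mpg")

# "Never charge": no electric range available.
print(phev_trip(100, 0, 30, 40))  # (0.0, 2.5, 40.0)
```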

2010 Monroney label showing the EPA's combined city/highway fuel economy equivalent for an all-electric car

In May 2011, the National Highway Traffic Safety Administration (NHTSA) and EPA issued a joint final rule establishing new requirements for a fuel economy and environment label that is mandatory for all new passenger cars and trucks starting with model year 2013, and voluntary for 2012 models. The ruling includes new labels for alternative fuel and alternative propulsion vehicles available in the US market, such as plug-in hybrids, electric vehicles, flexible-fuel vehicles, hydrogen fuel cell vehicles, and natural gas vehicles. The common fuel economy metric adopted to allow the comparison of alternative fuel and advanced technology vehicles with conventional internal combustion engine vehicles is miles per gallon of gasoline equivalent (MPGe). A gallon of gasoline equivalent means the number of kilowatt-hours of electricity, cubic feet of compressed natural gas (CNG), or kilograms of hydrogen that is equal to the energy in a gallon of gasoline.
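Under this definition, converting an electric vehicle's consumption into MPGe is a single division. The sketch assumes the EPA's figure of 33.7 kWh per gallon of gasoline equivalent, which is not stated in the text above; the function names are illustrative.

```python
# Assumption: 33.7 kWh of electricity is treated as equal to the energy in one
# US gallon of gasoline (the figure the EPA uses for MPGe).
KWH_PER_GALLON_EQUIVALENT = 33.7


def mpge_from_kwh_per_100mi(kwh_per_100mi: float) -> float:
    """Miles per gallon of gasoline equivalent for an electric vehicle."""
    miles_per_kwh = 100.0 / kwh_per_100mi
    return miles_per_kwh * KWH_PER_GALLON_EQUIVALENT


def kwh_per_100mi_from_mpge(mpge: float) -> float:
    """Inverse conversion: electricity used to drive 100 miles."""
    return 100.0 * KWH_PER_GALLON_EQUIVALENT / mpge


print(round(mpge_from_kwh_per_100mi(30.0), 1))  # 112.3
```

A vehicle that uses exactly 33.7 kWh per 100 miles therefore rates 100 MPGe.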

The new labels also include, for the first time, an estimate of how much fuel or electricity it takes to drive 100 miles (160 km), providing US consumers with fuel consumption per distance traveled, the metric commonly used in many other countries. The EPA explained that the objective is to avoid the traditional miles-per-gallon metric, which can be misleading when consumers compare fuel economy improvements, an effect known as the "MPG illusion". The illusion arises because fuel consumed per unit distance (and therefore fuel cost) is the reciprocal of the MPG value, a non-linear relationship, so differences between MPG values are not directly meaningful; only ratios are. In mathematical terms, a difference of reciprocals is not, in general, equal to the reciprocal of the difference. It has been claimed that many consumers are unaware of this and compare MPG values by subtracting them, which can give a misleading picture of the relative differences in fuel economy between pairs of vehicles. For instance, an increase from 10 to 20 MPG is a 100% improvement in fuel economy, whereas an increase from 50 to 60 MPG is only a 20% improvement, although in both cases the difference is 10 MPG. The EPA explained that the new gallons-per-100-miles metric provides a more accurate measure of fuel efficiency; notably, it is the same kind of measure as the usual metric figure for fuel economy, liters per 100 kilometers (L/100 km).
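The worked numbers in the paragraph can be reproduced by converting each MPG value to gallons per 100 miles before comparing. A minimal sketch with illustrative helper names: both upgrades add 10 MPG, but the fuel actually saved over 100 miles differs by a factor of 15.

```python
def gallons_per_100mi(mpg: float) -> float:
    """Fuel consumption per distance, the reciprocal of fuel economy."""
    return 100.0 / mpg


def fuel_saved_per_100mi(old_mpg: float, new_mpg: float) -> float:
    return gallons_per_100mi(old_mpg) - gallons_per_100mi(new_mpg)


def relative_improvement(old_mpg: float, new_mpg: float) -> float:
    # Ratios of MPG values are meaningful; differences are not.
    return new_mpg / old_mpg - 1.0


for old, new in [(10, 20), (50, 60)]:
    print(f"{old} -> {new} mpg: +{new - old} mpg, "
          f"{relative_improvement(old, new):.0%} better, "
          f"saves {fuel_saved_per_100mi(old, new):.2f} gal per 100 mi")
# 10 -> 20 mpg: +10 mpg, 100% better, saves 5.00 gal per 100 mi
# 50 -> 60 mpg: +10 mpg, 20% better, saves 0.33 gal per 100 mi
```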

CAFE standards

Curve of average car mileage for model years between 1978 and 2014

The Corporate Average Fuel Economy (CAFE) regulations in the United States, first enacted by Congress in 1975 in the wake of the 1973 Arab Oil Embargo, are federal regulations intended to improve the average fuel economy of cars and light trucks (trucks, vans and sport utility vehicles) sold in the US. Historically, CAFE has been the sales-weighted average fuel economy of a manufacturer's fleet of current model year passenger cars or light trucks manufactured for sale in the United States. Under the 2008–2011 light truck CAFE standards, this changed to a "footprint" model in which larger trucks are allowed to consume more fuel. The standards were limited to vehicles under a certain weight, but those weight classes were expanded in 2011.
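The sales weighting is applied to fuel consumed rather than to MPG values directly: the CAFE formula divides the total number of vehicles sold by the sum of each model's sales divided by its fuel economy, a sales-weighted harmonic mean. A minimal sketch of that calculation under this assumption, with a hypothetical two-model fleet; footprint-based targets are not modeled.

```python
def cafe(fleet: list[tuple[int, float]]) -> float:
    """Sales-weighted harmonic mean fuel economy of a fleet.

    `fleet` holds (units sold, model mpg) pairs. Summing units / mpg gives
    the gallons the whole fleet would burn if every vehicle drove one mile.
    """
    total_units = sum(units for units, _ in fleet)
    fleet_gallons_per_mile = sum(units / mpg for units, mpg in fleet)
    return total_units / fleet_gallons_per_mile


# Hypothetical fleet: equal sales of a 20 mpg model and a 40 mpg model.
fleet = [(100_000, 20.0), (100_000, 40.0)]
print(round(cafe(fleet), 2))  # 26.67, not the arithmetic mean of 30
```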

State regulations

The Clean Air Act of 1970 prohibited states from establishing their own air pollution standards. However, the legislation authorized the EPA to grant a waiver to California, allowing the state to set higher standards. The law provides a “piggybacking” provision that allows other states to adopt vehicle emission limits that are the same as California’s. California’s waivers were routinely granted until 2007, when the Bush administration rejected the state’s bid to adopt global warming pollution limits for cars and light trucks. California and 15 other states that were trying to put in place the same emissions standards sued in response. The case was tied up in court until the administration of Barack Obama, which in 2009 reversed the Bush administration’s decision by granting the waiver. In April 2018, EPA Administrator Scott Pruitt announced that the Trump administration planned to roll back the federal fuel economy standards put in place in 2012 and that it would also seek to curb California’s authority to set its own standards. However, the Trump administration was reportedly also in talks with state officials to develop a compromise that would allow the state and national standards to stay in place.