SN 1994D (bright spot on the lower left), a Type Ia supernova within its host galaxy, NGC 4526

A supernova (/ˌsuːpərnoʊvə/ plural: supernovae /ˌsuːpərnoʊviː/ or supernovas, abbreviations: SN and SNe) is an event that occurs upon the death of certain types of stars.

Supernovae are more energetic than novae. In Latin, nova means "new", referring astronomically to what appears to be a temporary new bright star. Adding the prefix "super-" distinguishes supernovae from ordinary novae, which are far less luminous. The word supernova was coined by Walter Baade and Fritz Zwicky in 1931.

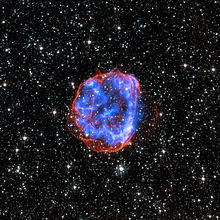

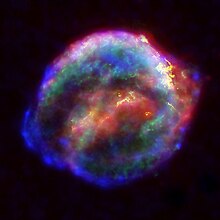

Only three naked-eye supernova events in the Milky Way have been observed during the last thousand years, though many have been seen in other galaxies. The most recent directly observed supernova in the Milky Way was Kepler's Supernova in 1604, but two more recent supernova remnants have also been found. Statistical observations of supernovae in other galaxies suggest they occur on average about three times every century in the Milky Way, and that any galactic supernova would almost certainly be observable with modern astronomical telescopes.

Supernovae may expel much, if not all, of the material of a star at velocities up to 30,000 km/s, or 10% of the speed of light. This drives an expanding, fast-moving shock wave into the surrounding interstellar medium, which in turn sweeps up an expanding shell of gas and dust that is observed as a supernova remnant. Supernovae create, fuse and eject the bulk of the chemical elements produced by nucleosynthesis, and they play a significant role in enriching the interstellar medium with the heavier chemical elements. Furthermore, the expanding shock waves from supernovae can trigger the formation of new stars. Supernova remnants are expected to accelerate a large fraction of galactic primary cosmic rays, but direct evidence for cosmic ray production has so far been found in only a few of them. They are also potentially strong galactic sources of gravitational waves.

Theoretical studies indicate that most supernovae are triggered by one of two basic mechanisms: the sudden re-ignition of nuclear fusion in a degenerate star or the sudden gravitational collapse of a massive star's core. In the first instance, a degenerate white dwarf may accumulate sufficient material from a binary companion, either through accretion or via a merger, to raise its core temperature enough to trigger runaway nuclear fusion, completely disrupting the star. In the second case, the core of a massive star may undergo sudden gravitational collapse, releasing gravitational potential energy as a supernova. While some observed supernovae are more complex than these two simplified theories, the astrophysical collapse mechanics have been established and accepted by most astronomers for some time.

Due to the wide range of astrophysical consequences of these events, astronomers now deem supernova research, across the fields of stellar and galactic evolution, as an especially important area for investigation.

Observation history

The earliest recorded supernova, HB9, was viewed by observers in India roughly 5,000 years ago and recorded in the oldest star chart. Later, SN 185 was viewed by Chinese astronomers in 185 AD. The brightest recorded supernova was SN 1006, which occurred in 1006 AD and was described by observers across China, Japan, Iraq, Egypt, and Europe. The widely observed supernova SN 1054 produced the Crab Nebula. Supernovae SN 1572 and SN 1604, the latest to be observed with the naked eye in the Milky Way galaxy, had notable effects on the development of astronomy in Europe because they were used to argue against the Aristotelian idea that the universe beyond the Moon and planets was static and unchanging. Johannes Kepler began observing SN 1604 at its peak on October 17, 1604, and continued to make estimates of its brightness until it faded from naked-eye view a year later. It was the second supernova to be observed in a generation (after SN 1572, seen by Tycho Brahe in Cassiopeia).

There is some evidence that the youngest galactic supernova, G1.9+0.3, occurred in the late 19th century, considerably more recently than Cassiopeia A from around 1680. Neither supernova was noted at the time. In the case of G1.9+0.3, high extinction along the plane of the galaxy could have dimmed the event sufficiently for it to go unnoticed. The situation for Cassiopeia A is less clear. Infrared light echoes have been detected showing that it was a Type IIb supernova and was not in a region of especially high extinction.

Before the development of the telescope, only five supernovae were seen in the last millennium.

Compared to a star's entire history, the visual appearance of a galactic supernova is very brief, perhaps spanning several months, so that the chance of observing one is roughly once in a lifetime. Only a tiny fraction of the 100 billion stars in a typical galaxy have the capacity to become a supernova, restricted to either those having large mass or extraordinarily rare kinds of binary stars containing white dwarfs.

However, observation and discovery of extragalactic supernovae are now far more common. The first such observation was of SN 1885A in the Andromeda galaxy. Today, amateur and professional astronomers are finding several hundred every year, some when near maximum brightness, others on old astronomical photographs or plates. Astronomers Rudolph Minkowski and Fritz Zwicky developed the modern supernova classification scheme beginning in 1941. During the 1960s, astronomers found that the maximum intensities of supernovae could be used as standard candles, hence indicators of astronomical distances. Some of the most distant supernovae observed in 2003 appeared dimmer than expected. This supports the view that the expansion of the universe is accelerating. Techniques were developed for reconstructing supernova events that have no written records of being observed. The date of the Cassiopeia A supernova event was determined from light echoes off nebulae, while the age of supernova remnant RX J0852.0-4622 was estimated from temperature measurements and the gamma ray emissions from the radioactive decay of titanium-44.

SN Antikythera, SN Eleanor and SN Alexander at galaxy cluster RXC J0949.8+1707.

The most luminous supernova ever recorded is ASASSN-15lh. It was first detected in June 2015 and peaked at 570 billion L☉, which is twice the bolometric luminosity of any other known supernova. However, the nature of this supernova continues to be debated and several alternative explanations have been suggested, e.g. tidal disruption of a star by a black hole.

Among the earliest detected since time of detonation, and for which the earliest spectra have been obtained (beginning at 6 hours after the actual explosion), is the Type II SN 2013fs (iPTF13dqy), which was recorded 3 hours after the supernova event on 6 October 2013 by the Intermediate Palomar Transient Factory (iPTF). The star is located in a spiral galaxy named NGC 7610, 160 million light years away in the constellation of Pegasus.

On 20 September 2016, amateur astronomer Victor Buso from Rosario, Argentina was testing out his new 16-inch telescope. While taking several 20-second exposures of galaxy NGC 613, Buso chanced upon a supernova that had just become visible from Earth. After examining the images he contacted the Instituto de Astrofísica de La Plata. "It was the first time anyone had ever captured the initial moments of the “shock breakout” from an optical supernova, one not associated with a gamma-ray or X-ray burst." The odds of capturing such an event were put at between one in ten million and one in a hundred million, according to astronomer Melina Bersten from the Instituto de Astrofísica.

The supernova Buso observed was a Type IIb produced by a star twenty times the mass of the Sun. Astronomer Alex Filippenko, from the University of California, remarked that professional astronomers had been searching for such an event for a long time. He stated: "Observations of stars in the first moments they begin exploding provide information that cannot be directly obtained in any other way."

Discovery

Early work on what was originally believed to be simply a new category of novae was performed during the 1930s by two astronomers named Walter Baade and Fritz Zwicky at Mount Wilson Observatory. The name super-novae was first used during 1931 lectures held at Caltech by Baade and Zwicky, then used publicly in 1933 at a meeting of the American Physical Society. By 1938, the hyphen had been lost and the modern name was in use. Because supernovae are relatively rare events within a galaxy, occurring about three times a century in the Milky Way, obtaining a good sample of supernovae to study requires regular monitoring of many galaxies.

Supernovae in other galaxies cannot be predicted with any meaningful accuracy. Normally, when they are discovered, they are already in progress. Most scientific interest in supernovae, as standard candles for measuring distance, for example, requires an observation of their peak luminosity. It is therefore important to discover them well before they reach their maximum. Amateur astronomers, who greatly outnumber professional astronomers, have played an important role in finding supernovae, typically by looking at some of the closer galaxies through an optical telescope and comparing them to earlier photographs.

Toward the end of the 20th century astronomers increasingly turned to computer-controlled telescopes and CCDs for hunting supernovae. While such systems are popular with amateurs, there are also professional installations such as the Katzman Automatic Imaging Telescope. The Supernova Early Warning System (SNEWS) project uses a network of neutrino detectors to give early warning of a supernova in the Milky Way galaxy. Neutrinos are particles that are produced in great quantities by a supernova, and they are not significantly absorbed by the interstellar gas and dust of the galactic disk.

"A star set to explode", the SBW1 nebula surrounds a massive blue supergiant in the Carina Nebula.

Supernova searches fall into two classes: those focused on relatively nearby events and those looking farther away. Because of the expansion of the universe, the distance to a remote object with a known emission spectrum can be estimated by measuring its Doppler shift (or redshift); on average, more-distant objects recede with greater velocity than those nearby, and so have a higher redshift. Thus the search is split between high redshift and low redshift, with the boundary falling around a redshift range of z=0.1–0.3, where z is a dimensionless measure of the spectrum's frequency shift.
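
As a minimal sketch of the redshift measurement described above, the snippet below computes z from one spectral line using the standard wavelength form z = (λ_observed − λ_rest) / λ_rest. The function names and the single cut-off of z = 0.2 are assumptions made for illustration; the text only places the boundary somewhere in the range 0.1–0.3.

```python
def redshift(observed_nm: float, rest_nm: float) -> float:
    """Return the dimensionless redshift z of a single spectral line."""
    if rest_nm <= 0:
        raise ValueError("rest wavelength must be positive")
    return (observed_nm - rest_nm) / rest_nm


def search_class(z: float, boundary: float = 0.2) -> str:
    """Label a supernova search as low or high redshift.

    The default boundary of 0.2 is an illustrative assumption; the
    article only gives an approximate range of z = 0.1-0.3.
    """
    return "high redshift" if z >= boundary else "low redshift"


# Example: the Si II line (rest wavelength 615.0 nm) observed at 676.5 nm.
z = redshift(676.5, 615.0)
print(round(z, 3), search_class(z))
```

A real survey would fit many lines at once and correct for the motion of the observer; this sketch handles a single line only.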

High redshift searches for supernovae usually involve the observation of supernova light curves. These are useful for standard or calibrated candles to generate Hubble diagrams and make cosmological predictions. Supernova spectroscopy, used to study the physics and environments of supernovae, is more practical at low than at high redshift. Low redshift observations also anchor the low-distance end of the Hubble curve, which is a plot of distance versus redshift for visible galaxies.

Naming convention

Supernova discoveries are reported to the International Astronomical Union's Central Bureau for Astronomical Telegrams, which sends out a circular with the name it assigns to that supernova. The name is the marker SN followed by the year of discovery, suffixed with a one- or two-letter designation. The first 26 supernovae of the year are designated with a capital letter from A to Z. Afterward, pairs of lower-case letters are used: aa, ab, and so on. Hence, for example, SN 2003C designates the third supernova reported in the year 2003. The last supernova of 2005 was SN 2005nc, indicating that it was the 367th supernova found in 2005. Since 2000, professional and amateur astronomers have been finding several hundred supernovae each year (572 in 2007, 261 in 2008, 390 in 2009; 231 in 2013).
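
The lettering rule above can be sketched in a few lines of Python. The function names are hypothetical, and the sketch covers only the one- and two-letter designations described in the text (up to 26 + 26 × 26 = 702 supernovae in a year).

```python
import string

UPPER = string.ascii_uppercase
LOWER = string.ascii_lowercase


def designation_to_ordinal(suffix: str) -> int:
    """Convert a letter suffix such as 'C' or 'nc' to its order within the year."""
    if len(suffix) == 1 and suffix in UPPER:
        return UPPER.index(suffix) + 1
    if len(suffix) == 2 and all(ch in LOWER for ch in suffix):
        first = LOWER.index(suffix[0])
        second = LOWER.index(suffix[1])
        # 26 single-letter names come first, then 26 names per leading letter.
        return 26 + first * 26 + second + 1
    raise ValueError(f"unsupported designation: {suffix!r}")


def ordinal_to_designation(n: int) -> str:
    """Convert an order within the year back to its letter suffix."""
    if 1 <= n <= 26:
        return UPPER[n - 1]
    if 27 <= n <= 26 + 26 * 26:
        first, second = divmod(n - 27, 26)
        return LOWER[first] + LOWER[second]
    raise ValueError(f"ordinal out of range for this sketch: {n}")


print(designation_to_ordinal("C"))    # third supernova of the year
print(designation_to_ordinal("nc"))   # SN 2005nc
print(ordinal_to_designation(27))     # first two-letter name
```

Applied to the examples in the text, the suffix "C" maps to 3 and "nc" maps to 367.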

Historical supernovae are known simply by the year they occurred: SN 185, SN 1006, SN 1054, SN 1572 (called Tycho's Nova) and SN 1604 (Kepler's Star). Since 1885 the additional letter notation has been used, even if there was only one supernova discovered that year (e.g. SN 1885A, SN 1907A, etc.); this last happened with SN 1947A. SN, for SuperNova, is a standard prefix. Until 1987, two-letter designations were rarely needed; since 1988, however, they have been needed every year.

Classification

Artist's impression of supernova 1993J.

As part of the attempt to understand supernovae, astronomers have classified them according to their light curves and the absorption lines of different chemical elements that appear in their spectra. The first element for division is the presence or absence of a line caused by hydrogen. If a supernova's spectrum contains lines of hydrogen (known as the Balmer series in the visual portion of the spectrum) it is classified Type II; otherwise it is Type I. In each of these two types there are subdivisions according to the presence of lines from other elements or the shape of the light curve (a graph of the supernova's apparent magnitude as a function of time).

| Type | Subdivision of | Defining feature | Mechanism |
|---|---|---|---|
| Type I | | No hydrogen | |
| Type Ia | Type I | Presents a singly ionized silicon (Si II) line at 615.0 nm (nanometers), near peak light | Thermal runaway |
| Type Ib/c | Type I | Weak or no silicon absorption feature | Core collapse |
| Type Ib | Type Ib/c | Shows a non-ionized helium (He I) line at 587.6 nm | Core collapse |
| Type Ic | Type Ib/c | Weak or no helium | Core collapse |
| Type II | | Shows hydrogen | Core collapse |
| Type II-P/L/N | Type II | Type II spectrum throughout | Core collapse |
| Type II-P/L | Type II-P/L/N | No narrow lines | Core collapse |
| Type II-P | Type II-P/L | Reaches a "plateau" in its light curve | Core collapse |
| Type II-L | Type II-P/L | Displays a "linear" decrease in its light curve (linear in magnitude versus time) | Core collapse |
| Type IIn | Type II-P/L/N | Some narrow lines | Core collapse |
| Type IIb | Type II | Spectrum changes to become like Type Ib | Core collapse |
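
The taxonomy above is essentially a decision tree, which can be sketched as a small function. This is an illustrative simplification rather than an operational classifier: the function name and the boolean inputs are assumptions, and real classification works from measured spectra and light curves, not from ready-made flags.

```python
def classify_supernova(
    hydrogen: bool,
    silicon: bool = False,
    helium: bool = False,
    narrow_lines: bool = False,
    becomes_ib_like: bool = False,
    plateau: bool = False,
) -> str:
    """Return a type label from simplified yes/no spectral and light-curve features."""
    if not hydrogen:
        # Type I: split on the Si II line first, then on He I.
        if silicon:
            return "Ia"
        return "Ib" if helium else "Ic"
    # Type II: a spectrum that evolves toward Type Ib takes priority.
    if becomes_ib_like:
        return "IIb"
    if narrow_lines:
        return "IIn"
    # Remaining Type II events are separated by light-curve shape.
    return "II-P" if plateau else "II-L"


print(classify_supernova(hydrogen=False, silicon=True))
print(classify_supernova(hydrogen=True, plateau=True))
```

Events that fit none of these branches cleanly would be labelled peculiar, which this sketch does not attempt to detect.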

Type I

Type I supernovae are subdivided on the basis of their spectra, with Type Ia showing a strong ionised silicon absorption line. Type I supernovae without this strong line are classified as Type Ib and Ic, with Type Ib showing strong neutral helium lines and Type Ic lacking them. The light curves are all similar, although Type Ia are generally brighter at peak luminosity; the light curve is not important for classification of Type I supernovae.

A small number of Type Ia supernovae exhibit unusual features such as non-standard luminosity or broadened light curves, and these are typically classified by referring to the earliest example showing similar features. For example, the sub-luminous SN 2008ha is often referred to as SN 2002cx-like or class Ia-2002cx.

A small proportion of Type Ic supernovae show highly broadened and blended emission lines which are taken to indicate very high expansion velocities for the ejecta. These have been classified as Type Ic-BL or Ic-bl.[54]

Type II

Light curves are used to classify Type II-P and Type II-L supernovae

The supernovae of Type II can also be sub-divided based on their spectra. While most Type II supernovae show very broad emission lines which indicate expansion velocities of many thousands of kilometres per second, some, such as SN 2005gl, have relatively narrow features in their spectra. These are called Type IIn, where the 'n' stands for 'narrow'.

A few supernovae, such as SN 1987K and SN 1993J, appear to change types: they show lines of hydrogen at early times, but, over a period of weeks to months, become dominated by lines of helium. The term "Type IIb" is used to describe the combination of features normally associated with Types II and Ib.

Type II supernovae with normal spectra dominated by broad hydrogen lines that remain for the life of the decline are classified on the basis of their light curves. The most common type shows a distinctive "plateau" in the light curve shortly after peak brightness where the visual luminosity stays relatively constant for several months before the decline resumes. These are called Type II-P, referring to the plateau. Less common are Type II-L supernovae that lack a distinct plateau. The "L" signifies "linear" although the light curve is not actually a straight line.

Supernovae that do not fit into the normal classifications are designated peculiar, or 'pec'.

Types III, IV, and V

Fritz Zwicky defined additional supernova types, although based on very few examples that did not cleanly fit the parameters for a Type I or Type II supernova. SN 1961I in NGC 4303 was the prototype and only member of the Type III supernova class, noted for its broad light curve maximum and broad hydrogen Balmer lines that were slow to develop in the spectrum. SN 1961F in NGC 3003 was the prototype and only member of the Type IV class, with a light curve similar to a Type II-P supernova, with hydrogen absorption lines but weak hydrogen emission lines. The Type V class was coined for SN 1961V in NGC 1058, an unusual faint supernova or supernova impostor with a slow rise to brightness, a maximum lasting many months, and an unusual emission spectrum. The similarity of SN 1961V to the Eta Carinae Great Outburst was noted. Supernovae in M101 (1909) and M83 (1923 and 1957) were also suggested as possible Type IV or Type V supernovae.

These types would now all be treated as peculiar Type II supernovae, of which many more examples have been discovered, although it is still debated whether SN 1961V was a true supernova following an LBV outburst or an impostor.

Current models

Sequence shows the rapid brightening and slower fading of a supernova in the galaxy NGC 1365 (the bright dot close to the upper part of the galactic center)

The type codes given to supernovae, described above, are taxonomic in nature: the type number describes the light observed from the supernova, not necessarily its cause. For example, Type Ia supernovae are produced by runaway fusion ignited on degenerate white dwarf progenitors, while the spectrally similar Type Ib/c are produced from massive Wolf–Rayet progenitors by core collapse. The following summarizes what are currently believed to be the most plausible explanations for supernovae.

Thermal runaway

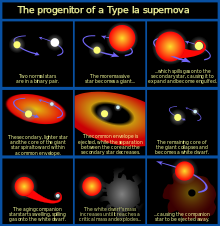

Formation of a Type Ia supernova

A white dwarf star may accumulate sufficient material from a stellar companion to raise its core temperature enough to ignite carbon fusion, at which point it undergoes runaway nuclear fusion, completely disrupting it. There are three avenues by which this detonation is theorized to happen: stable accretion of material from a companion, the collision of two white dwarfs, or accretion that causes ignition in a shell that then ignites the core. The dominant mechanism by which Type Ia supernovae are produced remains unclear. Despite this uncertainty in how Type Ia supernovae are produced, Type

Ia supernovae have very uniform properties, and are useful standard candles over intergalactic distances. Some calibrations are required to compensate for the gradual change in properties or different frequencies of abnormal luminosity supernovae at high redshift, and for small variations in brightness identified by light curve shape or spectrum.

Normal Type Ia

There are several means by which a supernova of this type can form, but they share a common underlying mechanism. If a carbon-oxygen white dwarf accreted enough matter to reach the Chandrasekhar limit of about 1.44 solar masses (M☉) (for a non-rotating star), it would no longer be able to support the bulk of its mass through electron degeneracy pressure and would begin to collapse. However, the current view is that this limit is not normally attained; increasing temperature and density inside the core ignite carbon fusion as the star approaches the limit (to within about 1%), before collapse is initiated.

Within a few seconds, a substantial fraction of the matter in the white dwarf undergoes nuclear fusion, releasing enough energy (1–2×10⁴⁴ J) to unbind the star in a supernova. An outwardly expanding shock wave is generated, with matter reaching velocities on the order of 5,000–20,000 km/s, or roughly 3% of the speed of light. There is also a significant increase in luminosity, reaching an absolute magnitude of −19.3 (or 5 billion times brighter than the Sun), with little variation.
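
The "5 billion times brighter than the Sun" figure follows from the magnitude scale, in which a difference of 5 magnitudes corresponds to a factor of 100 in luminosity. The sketch below reproduces the estimate; the Sun's absolute visual magnitude of 4.83 is an assumed reference value that does not appear in the text, and the function name is hypothetical.

```python
SUN_ABSOLUTE_MAGNITUDE = 4.83  # assumed reference value, not taken from the article


def luminosity_ratio(absolute_mag: float,
                     reference_mag: float = SUN_ABSOLUTE_MAGNITUDE) -> float:
    """Return how many times more luminous an object is than the reference.

    Uses L1 / L2 = 10 ** (0.4 * (M2 - M1)), the definition of the magnitude scale.
    """
    return 10 ** (0.4 * (reference_mag - absolute_mag))


ratio = luminosity_ratio(-19.3)
print(f"{ratio:.2e}")  # a few billion, consistent with the rounded figure in the text
```

The result depends on the passband and the adopted solar magnitude, so it should be read as an order-of-magnitude check only.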

The model for the formation of this category of supernova is a close binary star system. The larger of the two stars is the first to evolve off the main sequence, and it expands to form a red giant. The two stars now share a common envelope, causing their mutual orbit to shrink. The giant star then sheds most of its envelope, losing mass until it can no longer continue nuclear fusion. At this point it becomes a white dwarf star, composed primarily of carbon and oxygen. Eventually the secondary star also evolves off the main sequence to form a red giant. Matter from the giant is accreted by the white dwarf, causing the latter to increase in mass. Despite widespread acceptance of the basic model, the exact details of initiation and of the heavy elements produced in the catastrophic event are still unclear.

Type Ia supernovae follow a characteristic light curve—the graph of luminosity as a function of time—after the event. This luminosity is generated by the radioactive decay of nickel-56 through cobalt-56 to iron-56. The peak luminosity of the light curve is extremely consistent across normal Type Ia supernovae, having a maximum absolute magnitude of about −19.3. This allows them to be used as a secondary standard candle to measure the distance to their host galaxies.
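
As a hedged illustration of the standard-candle idea, the sketch below applies the distance modulus m − M = 5 log₁₀(d / 10 pc) with the peak absolute magnitude of −19.3 quoted above. It ignores dust extinction, light-curve shape corrections and cosmological effects, all of which matter in real measurements, and the function name is hypothetical.

```python
def distance_parsecs(apparent_mag: float, absolute_mag: float = -19.3) -> float:
    """Estimate distance in parsecs from the distance modulus.

    Assumes no extinction and no cosmological corrections.
    """
    return 10 ** ((apparent_mag - absolute_mag + 5) / 5)


# Example: a Type Ia supernova peaking at apparent magnitude 15.7.
d_pc = distance_parsecs(15.7)
print(f"{d_pc / 1e6:.0f} Mpc")
```

With these inputs the distance modulus is 35, which corresponds to 10⁸ parsecs, or about 100 megaparsecs.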

Non-standard Type Ia

Another model for the formation of Type Ia supernovae involves the merger of two white dwarf stars, with the combined mass momentarily exceeding the Chandrasekhar limit. There is much variation in this type of event, and in many cases there may be no supernova at all, but it is expected that they will have a broader and less luminous light curve than the more normal SN Type Ia.

Abnormally bright Type Ia supernovae are expected when the white dwarf already has a mass higher than the Chandrasekhar limit, possibly enhanced further by asymmetry, but the ejected material will have less than normal kinetic energy.

There is no formal sub-classification for the non-standard Type Ia supernovae. It has been proposed that a group of sub-luminous supernovae that occur when helium accretes onto a white dwarf should be classified as Type Iax. This type of supernova may not always completely destroy the white dwarf progenitor and could leave behind a zombie star.

One specific type of non-standard Type Ia supernova develops hydrogen and other emission lines, and gives the appearance of a mixture between a normal Type Ia and a Type IIn supernova. Examples are SN 2002ic and SN 2005gj. These supernovae have been dubbed Type Ia/IIn, Type Ian, Type IIa and Type IIan.

Core collapse

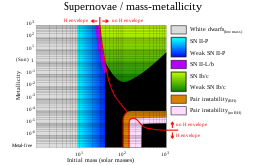

Supernova types by initial mass-metallicity

The layers of a massive, evolved star just prior to core collapse (Not to scale)

Very massive stars can undergo core collapse when nuclear fusion becomes unable to sustain the core against its own gravity; passing this threshold is the cause of all types of supernova except Type Ia. The collapse may cause violent expulsion of the outer layers of the star resulting in a supernova, or the release of gravitational potential energy may be insufficient and the star may collapse into a black hole or neutron star with little radiated energy.

Core collapse can be caused by several different mechanisms: electron capture; exceeding the Chandrasekhar limit; pair-instability; or photodisintegration. When a massive star develops an iron core larger than the Chandrasekhar mass it will no longer be able to support itself by electron degeneracy pressure and will collapse further to a neutron star or black hole. Electron capture by magnesium in a degenerate O/Ne/Mg core causes gravitational collapse followed by explosive oxygen fusion, with very similar results. Electron-positron pair production in a large post-helium burning core removes thermodynamic support and causes initial collapse followed by runaway fusion, resulting in a pair-instability supernova. A sufficiently large and hot stellar core may generate gamma-rays energetic enough to initiate photodisintegration directly, which will cause a complete collapse of the core.

The table below lists the known reasons for core collapse in massive stars, the types of star that they occur in, their associated supernova type, and the remnant produced. The metallicity is the proportion of elements other than hydrogen or helium, as compared to the Sun. The initial mass is the mass of the star prior to the supernova event, given in multiples of the Sun's mass, although the mass at the time of the supernova may be much lower.

Type IIn supernovae are not listed in the table. They can potentially be produced by various types of core collapse in different progenitor stars, possibly even by Type Ia white dwarf ignitions, although it seems that most will be from iron core collapse in luminous supergiants or hypergiants (including LBVs). The narrow spectral lines for which they are named occur because the supernova is expanding into a small dense cloud of circumstellar material. It appears that a significant proportion of supposed Type IIn supernovae are actually supernova impostors, massive eruptions of LBV-like stars similar to the Great Eruption of Eta Carinae. In these events, material previously ejected from the star creates the narrow absorption lines and causes a shock wave through interaction with the newly ejected material.

| Cause of collapse | Progenitor star approximate initial mass (solar masses) | Supernova type | Remnant |
|---|---|---|---|
| Electron capture in a degenerate O+Ne+Mg core | 8–10 | Faint II-P | Neutron star |
| Iron core collapse | 10–25 | Faint II-P | Neutron star |
| Iron core collapse | 25–40 with low or solar metallicity | Normal II-P | Black hole after fallback of material onto an initial neutron star |
| Iron core collapse | 25–40 with very high metallicity | II-L or IIb | Neutron star |
| Iron core collapse | 40–90 with low metallicity | None | Black hole |
| Iron core collapse | ≥40 with near-solar metallicity | Faint Ib/c, or hypernova with gamma-ray burst (GRB) | Black hole after fallback of material onto an initial neutron star |
| Iron core collapse | ≥40 with very high metallicity | Ib/c | Neutron star |
| Iron core collapse | ≥90 with low metallicity | None, possible GRB | Black hole |
| Pair instability | 140–250 with low metallicity | II-P, sometimes a hypernova, possible GRB | No remnant |
| Photodisintegration | ≥250 with low metallicity | None (or luminous supernova?), possible GRB | Massive black hole |

Remnants of single massive stars

Within a massive, evolved star (a) the onion-layered shells of elements undergo fusion, forming an iron core (b) that reaches Chandrasekhar mass and starts to collapse. The inner part of the core is compressed into neutrons (c), causing infalling material to bounce (d) and form an outward-propagating shock front (red). The shock starts to stall (e), but it is re-invigorated by a process that may include neutrino interaction. The surrounding material is blasted away (f), leaving only a degenerate remnant.

When a stellar core is no longer supported against gravity, it collapses in on itself with velocities reaching 70,000 km/s (0.23c), resulting in a rapid increase in temperature and density. What follows next depends on the mass and structure of the collapsing core, with low mass degenerate cores forming neutron stars, higher mass degenerate cores mostly collapsing completely to black holes, and non-degenerate cores undergoing runaway fusion.

The initial collapse of degenerate cores is accelerated by beta decay, photodisintegration and electron capture, which causes a burst of electron neutrinos. As the density increases, neutrino emission is cut off as they become trapped in the core. The inner core eventually reaches typically 30 km diameter and a density comparable to that of an atomic nucleus, and neutron degeneracy pressure tries to halt the collapse. If the core mass is more than about 15 M☉ then neutron degeneracy is insufficient to stop the collapse and a black hole forms directly with no supernova.

In lower mass cores the collapse is stopped and the newly formed neutron core has an initial temperature of about 100 billion kelvin, 6000 times the temperature of the Sun's core. At this temperature, neutrino-antineutrino pairs of all flavors are efficiently formed by thermal emission. These thermal neutrinos are several times more abundant than the electron-capture neutrinos. About 10⁴⁶ joules, approximately 10% of the star's rest mass, is converted into a ten-second burst of neutrinos, which is the main output of the event. The suddenly halted core collapse rebounds and produces a shock wave that stalls within milliseconds in the outer core as energy is lost through the dissociation of heavy elements. A process that is not clearly understood is necessary to allow the outer layers of the core to reabsorb around 10⁴⁴ joules (1 foe) from the neutrino pulse, producing the visible brightness, although there are also other theories on how to power the explosion.
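
The energy scales quoted above can be checked with E = mc². The sketch below assumes, purely for illustration, that the "rest mass" in question is that of a collapsed core of about 1.4 M☉; that mass, the rounded physical constants and the function names are assumptions not given in the text.

```python
SOLAR_MASS_KG = 1.989e30      # approximate mass of the Sun
SPEED_OF_LIGHT_M_S = 2.998e8  # approximate speed of light
JOULES_PER_FOE = 1e44         # 1 foe = 10^51 erg = 10^44 J


def rest_energy_joules(mass_solar: float) -> float:
    """Return the rest-mass energy E = m * c**2 in joules."""
    return mass_solar * SOLAR_MASS_KG * SPEED_OF_LIGHT_M_S ** 2


def joules_to_foe(energy_joules: float) -> float:
    """Convert an energy in joules to foe."""
    return energy_joules / JOULES_PER_FOE


# Assumption: a 1.4 solar-mass core radiating 10% of its rest energy as neutrinos.
neutrino_energy = 0.10 * rest_energy_joules(1.4)
print(f"{neutrino_energy:.1e} J")
print(f"{joules_to_foe(neutrino_energy):.0f} foe")
```

Under these assumptions the neutrino burst comes out at a few times 10⁴⁶ J, the same order of magnitude as the figure in the text and roughly a hundred times the 1 foe that is reabsorbed.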

Some material from the outer envelope falls back onto the neutron star, and for cores beyond about 8 M☉ there is sufficient fallback to form a black hole. This fallback will reduce the kinetic energy created and the mass of expelled radioactive material, but in some situations it may also generate relativistic jets that result in a gamma-ray burst or an exceptionally luminous supernova.

Collapse of massive non-degenerate cores will ignite further fusion. When the core collapse is initiated by pair instability, oxygen fusion begins and the collapse may be halted. For core masses of 40–60 M☉, the collapse halts and the star remains intact, but core collapse will occur again when a larger core has formed. For cores of around 60–130 M☉, the fusion of oxygen and heavier elements is so energetic that the entire star is disrupted, causing a supernova. At the upper end of the mass range, the supernova is unusually luminous and extremely long-lived due to many solar masses of ejected ⁵⁶Ni. For even larger core masses, the core temperature becomes high enough to allow photodisintegration and the core collapses completely into a black hole.

Type II

The atypical subluminous Type II SN 1997D

Stars with initial masses less than about eight times that of the Sun never develop a core large enough to collapse and they eventually lose their atmospheres to become white dwarfs. Stars with at least 9 M☉ (possibly as much as 12 M☉) evolve in a complex fashion, progressively burning heavier elements at hotter temperatures in their cores. The star becomes layered like an onion, with the burning of more easily fused elements occurring in larger shells. Although popularly described as an onion with an iron core, the least massive supernova progenitors only have oxygen-neon(-magnesium) cores. These super AGB stars may form the majority of core collapse supernovae, although they are less luminous and so less commonly observed than those from more massive progenitors.

If core collapse occurs during a supergiant phase when the star still has a hydrogen envelope, the result is a Type II supernova. The rate of mass loss for luminous stars depends on the metallicity and luminosity. Extremely luminous stars at near solar metallicity will lose all their hydrogen before they reach core collapse and so will not form a Type II supernova. At low metallicity, all stars will reach core collapse with a hydrogen envelope but sufficiently massive stars collapse directly to a black hole without producing a visible supernova.

Stars with an initial mass up to about 90 times that of the Sun, or a little less at high metallicity, are expected to result in a Type II-P supernova, which is the most commonly observed type. At moderate to high metallicity, stars near the upper end of that mass range will have lost most of their hydrogen when core collapse occurs and the result will be a Type II-L supernova. At very low metallicity, stars of around 140–250 M☉ will reach core collapse by pair instability while they still have a hydrogen atmosphere and an oxygen core, and the result will be a supernova with Type II characteristics but a very large mass of ejected 56Ni and high luminosity.

Type Ib and Ic

SN 2008D, a Type Ib supernova, shown in X-ray (left) and visible light (right) at the far upper end of the galaxy

These supernovae, like those of Type II, arise from massive stars that undergo core collapse. However, the stars which become Types Ib and Ic supernovae have lost most of their outer (hydrogen) envelopes due to strong stellar winds or else from interaction with a companion. These stars are known as Wolf–Rayet stars, and they occur at moderate to high metallicity where continuum-driven winds cause sufficiently high mass loss rates. Observations of Type Ib/c supernovae do not match the observed or expected occurrence of Wolf–Rayet stars, and alternative explanations for this type of core collapse supernova involve stars stripped of their hydrogen by binary interactions. Binary models provide a better match for the observed supernovae, with the proviso that no suitable binary helium stars have ever been observed.

Since a supernova can occur whenever the mass of the star at the time

of core collapse is low enough not to cause complete fallback to a black

hole, any massive star may result in a supernova if it loses enough

mass before core collapse occurs.

Type Ib supernovae are the more common and result from Wolf–Rayet

stars of Type WC which still have helium in their atmospheres. For a

narrow range of masses, stars evolve further before reaching core

collapse to become WO stars with very little helium remaining and these

are the progenitors of Type Ic supernovae.

A few percent of the Type Ic supernovae are associated with gamma-ray bursts

(GRB), though it is also believed that any hydrogen-stripped Type Ib or

Ic supernova could produce a GRB, depending on the circumstances of the

geometry. The mechanism for producing this type of GRB is the jets produced by the magnetic field of the rapidly spinning magnetar formed at the collapsing core of the star. The jets would also transfer energy into the expanding outer shell, producing a super-luminous supernova.

Ultra-stripped supernovae occur when the exploding star has been

stripped (almost) all the way to the metal core, via mass transfer in a

close binary. As a result, very little material is ejected from the exploding star (c. 0.1 M☉).

In the most extreme cases, ultra-stripped supernovae can occur in naked

metal cores, barely above the Chandrasekhar mass limit. SN 2005ek

might be an observational example of an ultra-stripped supernova,

giving rise to a relatively dim and fast decaying light curve. The

nature of ultra-stripped supernovae can be both iron core-collapse and

electron capture supernovae, depending on the mass of the collapsing

core.

Failed

The core collapse of some massive stars may not result in a visible supernova. The main model for this is a core so massive that the kinetic energy is insufficient to reverse the infall of the outer layers onto a black hole. These events are difficult to detect, but large surveys have detected possible candidates. The red supergiant N6946-BH1 in NGC 6946 underwent a modest outburst in March 2009, before fading from view. Only a faint infrared source remains at the star's location.

Light curves

Comparative supernova type light curves

A historic puzzle concerned the source of energy that can maintain the optical supernova glow for months. Although the energy that disrupts each type of supernova is delivered promptly, the light curves are mostly dominated by subsequent radioactive heating of the rapidly expanding ejecta. Some have considered rotational energy from the central pulsar. The ejecta gases would dim quickly without some energy input to keep them hot. The intensely radioactive nature of the ejecta gases, which is now known to be correct for most supernovae, was first calculated on sound nucleosynthesis grounds in the late 1960s. It was not until SN 1987A that direct observation of gamma-ray lines unambiguously identified the major radioactive nuclei.

It is now known by direct observation that much of the light curve (the graph of luminosity as a function of time) after the occurrence of a Type II supernova, such as SN 1987A, is explained by those predicted radioactive decays.

Although the luminous emission consists of optical photons, it is the radioactive power absorbed by the ejected gases that keeps the remnant hot enough to radiate light. The radioactive decay of 56Ni through its daughter 56Co to 56Fe produces gamma-ray photons, primarily of 847 keV and 1238 keV, that are absorbed and dominate the heating and thus the luminosity of the ejecta at intermediate times (several weeks) to late times (several months). Energy for the peak of the light curve of SN 1987A was provided by the decay of 56Ni to 56Co (half life 6 days), while energy for the later light curve in particular fit very closely with the 77.3 day half-life of 56Co decaying to 56Fe. Later measurements by space gamma-ray telescopes of the small fraction of the 56Co and 57Co gamma rays that escaped the SN 1987A remnant without absorption confirmed earlier predictions that those two radioactive nuclei were the power sources.
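The two-step decay chain described above can be followed numerically with the standard Bateman solution for a parent and daughter nuclide. The sketch below uses only the half-lives quoted in the text (6 days and 77.3 days). The function names are hypothetical, and because the energy released per decay is not given here, the code tracks relative abundances rather than heating power.

```python
import math

HALF_LIFE_NI56_DAYS = 6.0    # value quoted in the text
HALF_LIFE_CO56_DAYS = 77.3   # value quoted in the text

LAMBDA_NI = math.log(2) / HALF_LIFE_NI56_DAYS  # decay constant, per day
LAMBDA_CO = math.log(2) / HALF_LIFE_CO56_DAYS  # decay constant, per day


def abundances(t_days, n0=1.0):
    """Return (N_Ni, N_Co, N_Fe) at time t, starting from n0 nuclei of pure 56Ni."""
    n_ni = n0 * math.exp(-LAMBDA_NI * t_days)
    # Bateman solution for the daughter in a two-step chain.
    n_co = (n0 * LAMBDA_NI / (LAMBDA_CO - LAMBDA_NI)
            * (math.exp(-LAMBDA_NI * t_days) - math.exp(-LAMBDA_CO * t_days)))
    n_fe = n0 - n_ni - n_co  # whatever has decayed twice is stable 56Fe
    return n_ni, n_co, n_fe


if __name__ == "__main__":
    for day in (0, 6, 30, 100, 300):
        ni, co, fe = abundances(day)
        print(f"day {day:3d}: Ni={ni:.4f}  Co={co:.4f}  Fe={fe:.4f}")
```

After a few nickel half-lives almost no 56Ni is left, so the remaining activity falls with the cobalt half-life, consistent with the later light curve tracking the 56Co decay.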

The visual light curves of the different supernova types all

depend at late times on radioactive heating, but they vary in shape and

amplitude because of the underlying mechanisms, the way that visible

radiation is produced, the epoch of its observation, and the

transparency of the ejected material. The light curves can be

significantly different at other wavelengths. For example, at

ultraviolet wavelengths there is an early extremely luminous peak

lasting only a few hours corresponding to the breakout of the shock

launched by the initial event, but that breakout is hardly detectable

optically.

The light curves for Type Ia are mostly very uniform, with a consistent maximum absolute magnitude and a relatively steep decline in luminosity. Their optical energy output is driven by radioactive decay of ejected nickel-56 (half life 6 days), which then decays to radioactive cobalt-56 (half life 77 days). These radioisotopes excite the surrounding material to incandescence. Studies of cosmology today rely on 56Ni radioactivity providing the energy for the optical brightness of supernovae of Type Ia, which are the "standard candles" of cosmology but whose diagnostic 847 keV and 1238 keV gamma rays were first detected only in 2014.

The initial phases of the light curve decline steeply as the effective

size of the photosphere decreases and trapped electromagnetic radiation

is depleted. The light curve continues to decline in the B band while it

may show a small shoulder in the visual at about 40 days, but this is

only a hint of a secondary maximum that occurs in the infra-red as

certain ionised heavy elements recombine to produce infra-red radiation

and the ejecta become transparent to it. The visual light curve

continues to decline at a rate slightly greater than the decay rate of

the radioactive cobalt (which has the longer half life and controls the

later curve), because the ejected material becomes more diffuse and less

able to convert the high energy radiation into visual radiation. After

several months, the light curve changes its decline rate again as positron emission becomes dominant from the remaining cobalt-56, although this portion of the light curve has been little-studied.
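To compare a decline in magnitudes with a radioactive decay rate, an exponential decay can be expressed in magnitudes per day: each half-life halves the luminosity, which is a dimming of 2.5 log10(2), or about 0.75 magnitudes. The sketch below applies this to the cobalt-56 half-life of 77 days quoted above. The function name is hypothetical, and the calculation assumes the light output tracks the decay rate exactly, which the text notes is only approximately true.

```python
import math


def decline_mag_per_day(half_life_days):
    """Dimming rate, in magnitudes per day, of a source whose luminosity
    decays exponentially with the given half-life."""
    return 2.5 * math.log10(2) / half_life_days


if __name__ == "__main__":
    rate = decline_mag_per_day(77.0)
    print(f"Pure cobalt-56 decay: {rate:.4f} mag/day")
```

A visual light curve declining "slightly faster than cobalt" would therefore fall by a little more than about 0.01 magnitudes per day under this assumption.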

Type Ib and Ic light curves are basically similar to Type Ia

although with a lower average peak luminosity. The visual light output

is again due to radioactive decay being converted into visual radiation,

but there is a much lower mass of the created nickel-56. The peak

luminosity varies considerably and there are even occasional Type Ib/c

supernovae orders of magnitude more and less luminous than the norm. The

most luminous Type Ic supernovae are referred to as hypernovae

and tend to have broadened light curves in addition to the increased

peak luminosity. The source of the extra energy is thought to be

relativistic jets driven by the formation of a rotating black hole,

which also produce gamma-ray bursts.

The light curves for Type II supernovae are characterised by a much slower decline than Type I, on the order of 0.05 magnitudes per day,

excluding the plateau phase. The visual light output is dominated by

kinetic energy rather than radioactive decay for several months, due

primarily to the existence of hydrogen in the ejecta from the atmosphere

of the supergiant progenitor star. In the initial destruction this

hydrogen becomes heated and ionised. The majority of Type II supernovae

show a prolonged plateau in their light curves as this hydrogen

recombines, emitting visible light and becoming more transparent. This

is then followed by a declining light curve driven by radioactive decay

although slower than in Type I supernovae, due to the efficiency of

conversion into light by all the hydrogen.

In Type II-L the plateau is absent because the progenitor had

relatively little hydrogen left in its atmosphere, sufficient to appear

in the spectrum but insufficient to produce a noticeable plateau in the

light output. In Type IIb supernovae the hydrogen atmosphere of the

progenitor is so depleted (thought to be due to tidal stripping by a

companion star) that the light curve is closer to a Type I supernova and

the hydrogen even disappears from the spectrum after several weeks.

Type IIn supernovae are characterised by additional narrow

spectral lines produced in a dense shell of circumstellar material.

Their light curves are generally very broad and extended, occasionally

also extremely luminous and referred to as a superluminous supernova.

These light curves are produced by the highly efficient conversion of

kinetic energy of the ejecta into electromagnetic radiation by

interaction with the dense shell of material. This only occurs when the

material is sufficiently dense and compact, indicating that it has been

produced by the progenitor star itself only shortly before the supernova

occurs.

Large numbers of supernovae have been catalogued and classified

to provide distance candles and test models. Average characteristics

vary somewhat with distance and type of host galaxy, but can broadly be

specified for each supernova type.

| Type | Average peak absolute magnitude | Approximate energy (foe) | Days to peak luminosity | Days from peak to 10% luminosity |

|---|---|---|---|---|

| Ia | −19 | 1 | approx. 19 | around 60 |

| Ib/c (faint) | around −15 | 0.1 | 15–25 | unknown |

| Ib | around −17 | 1 | 15–25 | 40–100 |

| Ic | around −16 | 1 | 15–25 | 40–100 |

| Ic (bright) | to −22 | above 5 | roughly 25 | roughly 100 |

| II-b | around −17 | 1 | around 20 | around 100 |

| II-L | around −17 | 1 | around 13 | around 150 |

| II-P (faint) | around −14 | 0.1 | roughly 15 | unknown |

| II-P | around −16 | 1 | around 15 | Plateau then around 50 |

| IIn | around −17 | 1 | 12–30 or more | 50–150 |

| IIn (bright) | to −22 | above 5 | above 50 | above 100 |
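The magnitudes in the table are logarithmic, so differences between rows correspond to luminosity ratios. The sketch below uses the standard relation that 5 magnitudes equal a factor of 100 in luminosity. The function names are hypothetical, and the table values are averages, so the results are only indicative.

```python
import math


def luminosity_ratio(mag_bright, mag_faint):
    """How many times more luminous the brighter object is,
    given two absolute magnitudes (smaller means brighter)."""
    return 10 ** (0.4 * (mag_faint - mag_bright))


def magnitude_drop(fraction_of_peak):
    """Magnitudes of dimming needed to fall to a given fraction of peak luminosity."""
    return -2.5 * math.log10(fraction_of_peak)


if __name__ == "__main__":
    # Type Ia (about -19) versus a normal Type II-P (about -16).
    print(f"Ia vs II-P: {luminosity_ratio(-19, -16):.1f}x")
    # The last column of the table corresponds to this many magnitudes below peak.
    print(f"10% of peak: {magnitude_drop(0.10):.1f} mag")
```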

Asymmetry

The pulsar in the Crab Nebula is travelling at 375 km/s relative to the nebula.

A long-standing puzzle surrounding Type II supernovae is why the

remaining compact object receives a large velocity away from the

epicentre; pulsars,

and thus neutron stars, are observed to have high velocities, and black

holes presumably do as well, although they are far harder to observe in

isolation. The initial impetus can be substantial, propelling an object

of more than a solar mass at a velocity of 500 km/s or greater. This

indicates an expansion asymmetry, but the mechanism by which momentum is

transferred to the compact object remains a puzzle. Proposed explanations for this kick include convection in the collapsing star and jet production during neutron star formation.
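The scale of such a kick can be estimated with elementary mechanics. The sketch below computes the kinetic energy and momentum of a one-solar-mass object moving at 500 km/s, the figures quoted above. The solar mass value is a standard constant rather than something given in the text, and the foe is taken as 10^44 joules.

```python
M_SUN_KG = 1.989e30       # standard solar mass, not quoted in the text
FOE_IN_JOULES = 1e44      # one foe


def kick_energy_joules(mass_msun, speed_km_s):
    """Non-relativistic kinetic energy, in joules, of the kicked compact object."""
    speed_m_s = speed_km_s * 1e3
    return 0.5 * mass_msun * M_SUN_KG * speed_m_s ** 2


def kick_momentum(mass_msun, speed_km_s):
    """Momentum, in kg m/s, that the explosion must impart to the object."""
    return mass_msun * M_SUN_KG * speed_km_s * 1e3


if __name__ == "__main__":
    energy = kick_energy_joules(1.0, 500.0)
    print(f"Kinetic energy: {energy:.3e} J = {energy / FOE_IN_JOULES:.2e} foe")
    print(f"Momentum: {kick_momentum(1.0, 500.0):.3e} kg m/s")
```

On these assumptions the kick carries only a fraction of a percent of a foe, so the difficulty lies in explaining the asymmetry rather than in finding the energy.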

One possible explanation for this asymmetry is large-scale convection above the core. The convection can create variations in the local abundances of elements, resulting in uneven nuclear burning during the collapse, bounce and resulting expansion.

Another possible explanation is that accretion of gas onto the central neutron star can create a disk

that drives highly directional jets, propelling matter at a high

velocity out of the star, and driving transverse shocks that completely

disrupt the star. These jets might play a crucial role in the resulting

supernova. (A similar model is now favoured for explaining long gamma-ray bursts.)

Initial asymmetries have also been confirmed in Type Ia

supernovae through observation. This result may mean that the initial

luminosity of this type of supernova depends on the viewing angle.

However, the expansion becomes more symmetrical with the passage of

time. Early asymmetries are detectable by measuring the polarization of

the emitted light.

Energy output

The radioactive decays of nickel-56 and cobalt-56 that produce a supernova visible light curve

Although supernovae are primarily known as luminous visible events, the electromagnetic radiation

they release is almost a minor side-effect. Particularly in the case of

core collapse supernovae, the emitted electromagnetic radiation is a

tiny fraction of the total energy released during the event.

There is a fundamental difference between the balance of energy

production in the different types of supernova. In Type Ia white dwarf

detonations, most of the energy is directed into heavy element synthesis and the kinetic energy of the ejecta. In core collapse supernovae, the vast majority of the energy is directed into neutrino

emission, and while some of this apparently powers the observed

destruction, 99%+ of the neutrinos escape the star in the first few

minutes following the start of the collapse.

Type Ia supernovae derive their energy from a runaway nuclear

fusion of a carbon-oxygen white dwarf. The details of the energetics are

still not fully understood, but the end result is the ejection of the

entire mass of the original star at high kinetic energy. Around half a

solar mass of that mass is 56Ni generated from silicon burning. 56Ni is radioactive and decays into 56Co by beta plus decay (with a half life of six days) and gamma rays. 56Co itself decays by the beta plus (positron) path with a half life of 77 days into stable 56Fe.

These two processes are responsible for the electromagnetic radiation

from Type Ia supernovae. In combination with the changing transparency

of the ejected material, they produce the rapidly declining light curve.

Core collapse supernovae are on average visually fainter than Type Ia supernovae, but the total energy released is far higher. In this type of supernova, the gravitational potential energy is converted into kinetic energy that compresses and collapses the core, initially producing electron neutrinos from disintegrating nucleons, followed by all flavours of thermal neutrinos from the super-heated neutron star core. Around 1% of these neutrinos are thought to deposit sufficient energy into the outer layers of the star to drive the resulting catastrophe, but again the details cannot be reproduced exactly in current models. Kinetic energies and nickel yields are somewhat lower than those of Type Ia supernovae, hence the lower peak visual luminosity of Type II supernovae, but energy from the de-ionisation of the many solar masses of remaining hydrogen can contribute to a much slower decline in luminosity and produce the plateau phase seen in the majority of core collapse supernovae.

| Supernova | Approximate total energy, 10^44 joules (foe) | Ejected Ni (solar masses) | Neutrino energy (foe) | Kinetic energy (foe) | Electromagnetic radiation (foe) |

|---|---|---|---|---|---|

| Type Ia | 1.5 | 0.4 – 0.8 | 0.1 | 1.3 – 1.4 | ~0.01 |

| Core collapse | 100 | (0.01) – 1 | 100 | 1 | 0.001 – 0.01 |

| Hypernova | 100 | ~1 | 1–100 | 1–100 | ~0.1 |

| Pair instability | 5–100 | 0.5 – 50 | low? | 1–100 | 0.01 – 0.1 |
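The table makes the point that light is a minor part of the energy budget. The sketch below turns the first two rows into fractions of the total. The dictionary layout and function name are hypothetical, the values are copied from the table as (low, high) ranges in foe, and the table figures are themselves approximate.

```python
FOE_IN_JOULES = 1e44  # unit given in the table header

# (low, high) ranges in foe, copied from the table; single values use low == high.
ENERGY_BUDGET = {
    "Type Ia": {
        "total": (1.5, 1.5),
        "neutrino": (0.1, 0.1),
        "kinetic": (1.3, 1.4),
        "em": (0.01, 0.01),
    },
    "Core collapse": {
        "total": (100.0, 100.0),
        "neutrino": (100.0, 100.0),
        "kinetic": (1.0, 1.0),
        "em": (0.001, 0.01),
    },
}


def fraction_of_total(kind, channel):
    """Return the (low, high) share of the total energy carried by one channel."""
    total = ENERGY_BUDGET[kind]["total"][0]
    low, high = ENERGY_BUDGET[kind][channel]
    return low / total, high / total


if __name__ == "__main__":
    for kind in ENERGY_BUDGET:
        low, high = fraction_of_total(kind, "em")
        print(f"{kind}: light carries {low:.4%} to {high:.4%} of the total")
```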

In some core collapse supernovae, fallback onto a black hole drives relativistic jets which may produce a brief energetic and directional burst of gamma rays

and also transfers substantial further energy into the ejected

material. This is one scenario for producing high luminosity supernovae

and is thought to be the cause of Type Ic hypernovae and long duration gamma-ray bursts.

If the relativistic jets are too brief and fail to penetrate the

stellar envelope then a low luminosity gamma-ray burst may be produced

and the supernova may be sub-luminous.

When a supernova occurs inside a small dense cloud of

circumstellar material, it will produce a shock wave that can

efficiently convert a high fraction of the kinetic energy into

electromagnetic radiation. Even though the initial energy was entirely

normal the resulting supernova will have high luminosity and extended

duration since it does not rely on exponential radioactive decay. This

type of event may cause Type IIn hypernovae.

Although pair-instability supernovae are core collapse supernovae

with spectra and light curves similar to Type II-P, the nature after

core collapse is more like that of a giant Type Ia with runaway fusion

of carbon, oxygen, and silicon. The total energy released by the highest

mass events is comparable to other core collapse supernovae but

neutrino production is thought to be very low, hence the kinetic and

electromagnetic energy released is very high. The cores of these stars

are much larger than any white dwarf and the amount of radioactive

nickel and other heavy elements ejected from their cores can be orders

of magnitude higher, with consequently high visual luminosity.

Progenitor

The supernova classification type is closely tied to the type of star at the time of the collapse. The occurrence of each type of supernova depends dramatically on the metallicity, and hence the age of the host galaxy.

Type Ia supernovae are produced from white dwarf stars in binary systems and occur in all galaxy types.

Core collapse supernovae are only found in galaxies undergoing current

or very recent star formation, since they result from short-lived

massive stars. They are most commonly found in Type Sc spirals, but also in the arms of other spiral galaxies and in irregular galaxies, especially starburst galaxies.

Type Ib/c and II-L, and possibly most Type IIn, supernovae are

only thought to be produced from stars having near-solar metallicity

levels that result in high mass loss from massive stars, hence they are

less common in older, more-distant galaxies. The table shows the

expected progenitor for the main types of core collapse supernova, and

the approximate proportions that have been observed in the local

neighbourhood.

There are a number of difficulties reconciling modelled and observed

stellar evolution leading up to core collapse supernovae. Red

supergiants are the expected progenitors for the vast majority of core

collapse supernovae, and these have been observed but only at relatively

low masses and luminosities, below about 18 M☉ and 100,000 L☉

respectively. Most progenitors of Type II supernovae are not detected

and must be considerably fainter, and presumably less massive. It is now

proposed that higher mass red supergiants do not explode as supernovae,

but instead evolve back towards hotter temperatures. Several

progenitors of Type IIb supernovae have been confirmed, and these were K

and G supergiants, plus one A supergiant.

Yellow hypergiants or LBVs are proposed progenitors for Type IIb

supernovae, and almost all Type IIb supernovae near enough to observe

have shown such progenitors.

Until just a few decades ago, hot supergiants were not considered

likely to explode, but observations have shown otherwise. Blue

supergiants form an unexpectedly high proportion of confirmed supernova

progenitors, partly due to their high luminosity and easy detection,

while not a single Wolf–Rayet progenitor has yet been clearly

identified.

Models have had difficulty showing how blue supergiants lose enough

mass to reach supernova without progressing to a different evolutionary

stage. One study has shown a possible route for low-luminosity post-red

supergiant luminous blue variables to collapse, most likely as a Type

IIn supernova. Several examples of hot luminous progenitors of Type IIn supernovae have been detected: SN 2005gy and SN 2010jl were both apparently massive luminous stars, but are very distant; and SN 2009ip had a highly luminous progenitor likely to have been an LBV, but is a peculiar supernova whose exact nature is disputed.

The progenitors of Type Ib/c supernovae are not observed at all,

and constraints on their possible luminosity are often lower than those

of known WC stars.

WO stars are extremely rare and visually relatively faint, so it is

difficult to say whether such progenitors are missing or just yet to be

observed. Very luminous progenitors have not been securely identified,

despite numerous supernovae being observed near enough that such

progenitors would have been clearly imaged.

Population modelling shows that the observed Type Ib/c supernovae could

be reproduced by a mixture of single massive stars and

stripped-envelope stars from interacting binary systems.

The continued lack of unambiguous detection of progenitors for normal

Type Ib and Ic supernovae may be due to most massive stars collapsing

directly to a black hole without a supernova outburst.

Most of these supernovae are then produced from lower-mass

low-luminosity helium stars in binary systems. A small number would be

from rapidly-rotating massive stars, likely corresponding to the

highly-energetic Type Ic-BL events that are associated with

long-duration gamma-ray bursts.

Interstellar impact

Source of heavy elements

Isolated neutron star in the Small Magellanic Cloud.

Supernovae are the major source of elements heavier than nitrogen. These elements are produced by nuclear fusion for nuclei up to 34S, by silicon photodisintegration rearrangement and quasiequilibrium (see Supernova nucleosynthesis) during silicon burning for nuclei between 36Ar and 56Ni,

and by rapid captures of neutrons during the supernova's collapse for

elements heavier than iron. Nucleosynthesis during silicon burning

yields nuclei roughly 1,000–100,000 times more abundant than the

r-process isotopes heavier than iron. Supernovae are the most likely, although not undisputed, candidate sites for the r-process,

which is the rapid capture of neutrons that occurs at high temperature

and high density of neutrons. Those reactions produce highly unstable nuclei that are rich in neutrons and that rapidly beta decay into more stable forms. The r-process produces about half of all the heavier isotopes of the elements beyond iron, including plutonium and uranium. The only other major competing process for producing elements heavier than iron is the s-process

in large, old, red-giant AGB stars, which produces these elements

slowly over longer epochs and which cannot produce elements heavier than

lead.

Role in stellar evolution

Remnants of many supernovae consist of a compact object and a rapidly expanding shock wave of material. This cloud of material sweeps up the surrounding interstellar medium during a free expansion phase, which can last for up to two centuries. The wave then gradually undergoes a period of adiabatic expansion, and will slowly cool and mix with the surrounding interstellar medium over a period of about 10,000 years.

Supernova remnant N 63A lies within a clumpy region of gas and dust in the Large Magellanic Cloud.

The Big Bang produced hydrogen, helium, and traces of lithium, while all heavier elements are synthesized in stars and supernovae. Supernovae tend to enrich the surrounding interstellar medium with elements other than hydrogen and helium, which astronomers usually refer to as "metals".

These injected elements ultimately enrich the molecular clouds that are the sites of star formation.

Thus, each stellar generation has a slightly different composition,

going from an almost pure mixture of hydrogen and helium to a more

metal-rich composition. Supernovae are the dominant mechanism for

distributing these heavier elements, which are formed in a star during

its period of nuclear fusion. The different abundances of elements in

the material that forms a star have important influences on the star's

life, and may decisively influence the possibility of having planets orbiting it.

The kinetic energy of an expanding supernova remnant can trigger star formation by compressing nearby, dense molecular clouds in space. The increase in turbulent pressure can also prevent star formation if the cloud is unable to lose the excess energy.

Evidence from daughter products of short-lived radioactive isotopes shows that a nearby supernova helped determine the composition of the Solar System 4.5 billion years ago, and may even have triggered the formation of this system. Supernova production of heavy elements over astronomic periods of time ultimately made the chemistry of life on Earth possible.

Effect on Earth

A near-Earth supernova is a supernova close enough to the Earth to have noticeable effects on its biosphere. Depending upon the type and energy of the supernova, it could be as far as 3000 light-years away. Gamma rays from a supernova would induce a chemical reaction in the upper atmosphere converting molecular nitrogen into nitrogen oxides, depleting the ozone layer enough to expose the surface to harmful ultraviolet solar radiation. This has been proposed as the cause of the Ordovician–Silurian extinction, which resulted in the death of nearly 60% of the oceanic life on Earth.

In 1996 it was theorized that traces of past supernovae might be detectable on Earth in the form of metal isotope signatures in rock strata. Iron-60 enrichment was later reported in deep-sea rock of the Pacific Ocean.

In 2009, elevated levels of nitrate ions were found in Antarctic ice,

which coincided with the 1006 and 1054 supernovae. Gamma rays from these

supernovae could have boosted levels of nitrogen oxides, which became

trapped in the ice.

Type Ia supernovae are thought to be potentially the most

dangerous if they occur close enough to the Earth. Because these

supernovae arise from dim, common white dwarf stars in binary systems,

it is likely that a supernova that can affect the Earth will occur

unpredictably and in a star system that is not well studied. The closest

known candidate is IK Pegasi (see below). Recent estimates predict that a Type II supernova would have to be closer than eight parsecs

(26 light-years) to destroy half of the Earth's ozone layer, and there

are no such candidates closer than about 500 light years.
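The distances in this paragraph can be checked and compared with a short calculation. The sketch below converts parsecs to light-years and applies the inverse-square law to compare the radiation received at two distances. The conversion factor is a standard value not given in the text, the function names are hypothetical, and the flux comparison ignores any absorption along the line of sight.

```python
LIGHT_YEARS_PER_PARSEC = 3.2616  # standard conversion factor, not from the text


def parsecs_to_light_years(parsecs):
    """Convert a distance in parsecs to light-years."""
    return parsecs * LIGHT_YEARS_PER_PARSEC


def relative_flux(distance, reference_distance):
    """Flux at `distance` as a fraction of the flux at `reference_distance`,
    assuming simple inverse-square dilution (same units for both)."""
    return (reference_distance / distance) ** 2


if __name__ == "__main__":
    print(f"8 pc = {parsecs_to_light_years(8):.1f} light-years")
    fraction = relative_flux(500, 26)
    print(f"Flux at 500 ly relative to 26 ly: {fraction:.4f}")
```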

Milky Way candidates

The nebula around Wolf–Rayet star WR124, which is located at a distance of about 21,000 light years.

The next supernova in the Milky Way will likely be detectable even if

it occurs on the far side of the galaxy. It is likely to be produced by

the collapse of an unremarkable red supergiant and it is very probable

that it will already have been catalogued in infrared surveys such as 2MASS.

There is a smaller chance that the next core collapse supernova will be

produced by a different type of massive star such as a yellow

hypergiant, luminous blue variable, or Wolf–Rayet. The chances of the

next supernova being a Type Ia produced by a white dwarf are calculated

to be about a third of those for a core collapse supernova. Again it

should be observable wherever it occurs, but it is less likely that the

progenitor will ever have been observed. It is not even known exactly what a Type Ia progenitor system looks like, and it is difficult to detect them beyond a few parsecs. The total supernova rate in our galaxy is estimated to be between 2 and 12 per century, although none has actually been observed for several centuries.
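The quoted rate range can be turned into rough waiting-time figures. The sketch below assumes supernovae occur independently at a constant average rate (a Poisson process), which is a modelling assumption not made in the text. The function names are hypothetical, and the result says nothing about whether a given event would actually be seen from Earth.

```python
import math


def mean_interval_years(rate_per_century):
    """Average time between events for a given rate per century."""
    return 100.0 / rate_per_century


def prob_at_least_one(rate_per_century, years):
    """Poisson probability of one or more events within the given number of years."""
    expected_events = rate_per_century * years / 100.0
    return 1.0 - math.exp(-expected_events)


if __name__ == "__main__":
    for rate in (2, 12):  # the range quoted in the text
        print(f"rate {rate}/century: mean interval {mean_interval_years(rate):.1f} yr, "
              f"P(at least one in 10 yr) = {prob_at_least_one(rate, 10):.2f}")
```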

Statistically, the next supernova is likely to be produced from

an otherwise unremarkable red supergiant, but it is difficult to

identify which of those supergiants are in the final stages of heavy

element fusion in their cores and which have millions of years left. The

most-massive red supergiants are expected to shed their atmospheres and

evolve to Wolf–Rayet stars before their cores collapse. All Wolf–Rayet

stars are expected to end their lives from the Wolf–Rayet phase within a

million years or so, but again it is difficult to identify those that

are closest to core collapse. One class that is expected to have no more

than a few thousand years before exploding are the WO Wolf–Rayet stars,

which are known to have exhausted their core helium. Only eight of them are known, and only four of those are in the Milky Way.

A number of close or well-known stars have been identified as possible core collapse supernova candidates: the red supergiants Antares and Betelgeuse; the yellow hypergiant Rho Cassiopeiae; the luminous blue variable Eta Carinae that has already produced a supernova impostor; and the brightest component, a Wolf–Rayet star, in the Regor or Gamma Velorum system. Others have gained notoriety as possible, although not very likely, progenitors for a gamma-ray burst; for example WR 104.

Identification of candidates for a Type Ia supernova is much more

speculative. Any binary with an accreting white dwarf might produce a

supernova although the exact mechanism and timescale is still debated.

These systems are faint and difficult to identify, but the novae and

recurrent novae are such systems that conveniently advertise themselves.

One example is U Scorpii. The nearest known Type Ia supernova candidate is IK Pegasi (HR 8210), located at a distance of 150 light-years,

but observations suggest it will be several million years before the

white dwarf can accrete the critical mass required to become a Type Ia

supernova.