The spontaneous parametric down-conversion process can split photons into type II photon pairs with mutually perpendicular polarization.

Quantum entanglement is a physical phenomenon that occurs when pairs or groups of particles are generated, interact, or share spatial proximity in ways such that the quantum state of each particle cannot be described independently of the state of the others, even when the particles are separated by a large distance.

Measurements of physical properties such as position, momentum, spin, and polarization performed on entangled particles can, in some cases, be found to be perfectly correlated.

For example, if a pair of particles is generated in such a way that their total spin is known to be zero, and one particle is found to have clockwise spin on a certain axis, the spin of the other particle, measured on the same axis, will be found to be counterclockwise, as is to be expected due to their entanglement. However, this behavior gives rise to seemingly paradoxical effects: any measurement of a property of a particle results in an irreversible collapse of that particle's state and changes the original quantum state. In the case of entangled particles, such a measurement acts on the entangled system as a whole.

Such phenomena were the subject of a 1935 paper by Albert Einstein, Boris Podolsky, and Nathan Rosen, and several papers by Erwin Schrödinger shortly thereafter, describing what came to be known as the EPR paradox. Einstein and others considered such behavior to be impossible, as it violated the local realism view of causality (Einstein referring to it as "spooky action at a distance") and argued that the accepted formulation of quantum mechanics must therefore be incomplete.

Later, however, the counterintuitive predictions of quantum mechanics were verified experimentally in tests where the polarization or spin of entangled particles was measured at separate locations, statistically violating Bell's inequality. In earlier tests, it could not be completely ruled out that the result at one point had been subtly transmitted to the remote point, affecting the outcome at the second location.

However, so-called "loophole-free" Bell tests have been performed in which the locations were separated such that communications at the speed of light would have taken longer (in one case 10,000 times longer) than the interval between the measurements.

According to some interpretations of quantum mechanics, the effect of one measurement occurs instantly. Other interpretations, which do not recognize wavefunction collapse, dispute that there is any "effect" at all. However, all interpretations agree that entanglement produces correlation between the measurements and that the mutual information between the entangled particles can be exploited, but that any transmission of information at faster-than-light speeds is impossible.

Quantum entanglement has been demonstrated experimentally with photons, neutrinos, electrons, molecules as large as buckyballs, and even small diamonds. On 13 July 2019, scientists from the University of Glasgow reported taking the first ever photo of a strong form of quantum entanglement known as Bell entanglement. The utilization of entanglement in communication and computation is a very active area of research.

History

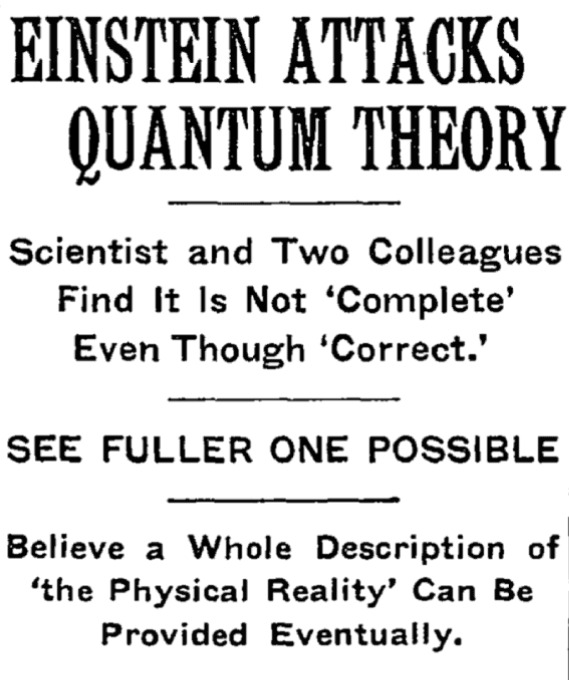

Article headline regarding the Einstein–Podolsky–Rosen paradox (EPR paradox) paper, in the May 4, 1935 issue of The New York Times.

The counterintuitive predictions of quantum mechanics about strongly correlated systems were first discussed by Albert Einstein in 1935, in a joint paper with Boris Podolsky and Nathan Rosen.

In this study, the three formulated the Einstein–Podolsky–Rosen paradox (EPR paradox), a thought experiment that attempted to show that quantum mechanical theory was incomplete.

They wrote: "We are thus forced to conclude that the quantum-mechanical description of physical reality given by wave functions is not complete."

However, the three scientists did not coin the word entanglement, nor did they generalize the special properties of the state they considered. Following the EPR paper, Erwin Schrödinger wrote a letter to Einstein in German in which he used the word Verschränkung (translated by himself as entanglement) "to describe the correlations between two particles that interact and then separate, as in the EPR experiment."

Schrödinger shortly thereafter published a seminal paper defining and discussing the notion of "entanglement." In the paper he recognized the importance of the concept, and stated: "I would not call [entanglement] one but rather the characteristic trait of quantum mechanics, the one that enforces its entire departure from classical lines of thought."

Like Einstein, Schrödinger was dissatisfied with the concept of entanglement, because it seemed to violate the speed limit on the transmission of information implicit in the theory of relativity. Einstein later famously derided entanglement as "spukhafte Fernwirkung" or "spooky action at a distance."

The EPR paper generated significant interest among physicists and inspired much discussion about the foundations of quantum mechanics (perhaps most famously Bohm's interpretation of quantum mechanics), but produced relatively little other published work. Despite the interest, the weak point in EPR's argument was not discovered until 1964, when John Stewart Bell proved that one of their key assumptions, the principle of locality, as applied to the kind of hidden variables interpretation hoped for by EPR, was mathematically inconsistent with the predictions of quantum theory.

Specifically, Bell demonstrated an upper limit, seen in Bell's inequality, regarding the strength of correlations that can be produced in any theory obeying local realism, and showed that quantum theory predicts violations of this limit for certain entangled systems. His inequality is experimentally testable, and there have been numerous relevant experiments, starting with the pioneering work of Stuart Freedman and John Clauser in 1972 and Alain Aspect's experiments in 1982, all of which have shown agreement with quantum mechanics rather than the principle of local realism.
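The kind of upper limit Bell derived can be made concrete with the CHSH form of the inequality (a later reformulation by Clauser, Horne, Shimony and Holt, chosen here as the illustration rather than Bell's original 1964 form). The minimal sketch below enumerates every deterministic local assignment of ±1 outcomes to two settings per side, which yields the local-realist limit |S| ≤ 2, and compares it with the textbook singlet correlation E(a, b) = -cos(a - b) at the standard angles. All function names are hypothetical.

```python
import itertools
import math


def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


def local_bound():
    """Largest |S| reachable by deterministic local outcome assignments.

    Each side fixes a +/-1 answer for each of its two settings in advance.
    A local hidden-variable model is a probabilistic mixture of such
    assignments and S is linear in the mixture weights, so no local model
    can exceed this value.
    """
    best = 0
    for A0, A1, B0, B1 in itertools.product((-1, 1), repeat=4):
        best = max(best, abs(A0 * B0 - A0 * B1 + A1 * B0 + A1 * B1))
    return best


def singlet_correlation(a, b):
    """Quantum prediction for spin-singlet measurements along in-plane axes a, b (radians)."""
    return -math.cos(a - b)


S_quantum = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print("local-realist bound:", local_bound())   # 2
print("quantum |S|:", abs(S_quantum))          # about 2.828, i.e. 2*sqrt(2)
```

The quantum value exceeds the local bound by a factor of √2, which is the size of the violation that experiments set out to detect.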

For decades, each experiment had left open at least one loophole by which it was possible to question the validity of the results. However, in 2015 an experiment was performed that simultaneously closed both the detection and locality loopholes, and was heralded as "loophole-free"; this experiment ruled out a large class of local realism theories with certainty. Alain Aspect notes that the "setting-independence loophole", which he refers to as "far-fetched" yet a "residual loophole" that "cannot be ignored", has yet to be closed, and that the free-will / superdeterminism loophole is unclosable, saying "no experiment, as ideal as it is, can be said to be totally loophole-free."

A minority opinion holds that although quantum mechanics is correct, there is no superluminal instantaneous action-at-a-distance between entangled particles once the particles are separated.

Bell's work raised the possibility of using these super-strong correlations as a resource for communication. It led to the discovery of quantum key distribution protocols, most famously BB84 by Charles H. Bennett and Gilles Brassard (1984) and E91 by Artur Ekert (1991). Although BB84 does not use entanglement, Ekert's protocol uses the violation of a Bell inequality as a proof of security.

In October 2018, physicists reported that quantum behavior can be explained with classical physics for a single particle, but not for multiple particles as in quantum entanglement and related nonlocality phenomena.

In July 2019 physicists reported, for the first time, capturing an image of quantum entanglement.

Concept

Meaning of entanglement

An entangled system is defined to be one whose quantum state cannot be factored as a product of states of its local constituents; that is to say, they are not individual particles but are an inseparable whole. In entanglement, one constituent cannot be fully described without considering the other(s). The state of a composite system is always expressible as a sum, or superposition, of products of states of local constituents; it is entangled if this sum necessarily has more than one term.
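For the simplest case of two qubits, the "more than one term" condition can be tested directly. Writing a pure state as c00|00⟩ + c01|01⟩ + c10|10⟩ + c11|11⟩, it factors into a single product exactly when the 2×2 amplitude matrix has rank 1, i.e. when c00·c11 - c01·c10 = 0. The sketch below uses the concurrence 2|c00·c11 - c01·c10|, a standard entanglement measure for two-qubit pure states; the helper names and the real-valued example amplitudes are assumptions for illustration, and the determinant test does not carry over as-is to larger systems.

```python
import math


def concurrence(c00, c01, c10, c11):
    """Concurrence 2|c00*c11 - c01*c10| of a normalized two-qubit pure state.

    The amplitude matrix [[c00, c01], [c10, c11]] has rank 1 exactly when the
    state factors as (alpha|0> + beta|1>) x (gamma|0> + delta|1>); rank 1 means
    a zero determinant, so the concurrence is 0 for product states and
    positive (at most 1) for entangled ones.
    """
    return 2 * abs(c00 * c11 - c01 * c10)


def is_entangled(c00, c01, c10, c11, tol=1e-12):
    return concurrence(c00, c01, c10, c11) > tol


s = 1 / math.sqrt(2)
# |+>|0> = (|00> + |10>)/sqrt(2): a single product term.
product = (s, 0.0, s, 0.0)
# Singlet (|01> - |10>)/sqrt(2): no single product reproduces it.
singlet = (0.0, s, -s, 0.0)

print(is_entangled(*product), concurrence(*product))   # False 0.0
print(is_entangled(*singlet), concurrence(*singlet))   # True, concurrence close to 1
```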

Quantum systems can become entangled through various types of interactions. For some ways in which entanglement may be achieved for experimental purposes, see the section below on methods. Entanglement is broken when the entangled particles decohere through interaction with the environment; for example, when a measurement is made.

As an example of entanglement: a subatomic particle decays into an entangled pair of other particles. The decay events obey the various conservation laws, and as a result, the measurement outcomes of one daughter particle must be highly correlated with the measurement outcomes of the other daughter particle (so that the total momenta, angular momenta, energy, and so forth remain roughly the same before and after this process).

For instance, a spin-zero particle could decay into a pair of spin-½ particles. Since the total spin before and after this decay must be zero (conservation of angular momentum), whenever the first particle is measured to be spin up on some axis, the other, when measured on the same axis, is always found to be spin down.

(This is called the spin anti-correlated case; and if the prior probabilities for measuring each spin are equal, the pair is said to be in the singlet state.)
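The anti-correlation can be checked numerically with the Born rule. The sketch below assumes the pair is in the singlet state (|01⟩ - |10⟩)/√2 and, as a simplification, restricts measurement axes to the x-z plane so that every amplitude is real; the helper names are hypothetical. Whatever common axis is chosen, the probability that both particles give the same result comes out as zero, and "A up, B down" occurs half the time.

```python
import math

S = 1 / math.sqrt(2)
SINGLET = [[0.0, S], [-S, 0.0]]  # (|01> - |10>)/sqrt(2) as amplitudes psi[i][j]


def spin_state(theta, up):
    """Spin-up/down eigenstate along the axis at angle theta (radians) from z
    in the x-z plane, as real amplitudes on (|0>, |1>)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, s) if up else (-s, c)


def joint_prob(theta_a, out_a, theta_b, out_b):
    """Born-rule probability that A gets out_a along theta_a and B gets out_b along theta_b."""
    u, v = spin_state(theta_a, out_a), spin_state(theta_b, out_b)
    amp = sum(u[i] * v[j] * SINGLET[i][j] for i in range(2) for j in range(2))
    return amp ** 2


for theta in (0.0, math.pi / 3, 2.0):   # arbitrary example axes
    same = joint_prob(theta, True, theta, True) + joint_prob(theta, False, theta, False)
    up_down = joint_prob(theta, True, theta, False)
    print(f"axis {theta:.3f} rad: P(same) = {same:.3f}, P(A up, B down) = {up_down:.3f}")
```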

The special property of entanglement can be better observed if the two particles are separated. Suppose one of them is placed in the White House in Washington and the other in Buckingham Palace (as a thought experiment, not an actual one). If we measure a particular characteristic of one of these particles (say, spin), get a result, and then measure the other particle using the same criterion (spin along the same axis), we find that the result of the measurement of the second particle matches the result of the first in a complementary sense: the two values are opposite.

The above result may or may not be perceived as surprising. A classical system would display the same property, and a hidden variable theory (see below) would certainly be required to do so, based on conservation of angular momentum in classical and quantum mechanics alike. The difference is that a classical system has definite values for all the observables all along, while the quantum system does not. In a sense to be discussed below, the quantum system considered here seems to acquire a probability distribution for the outcome of a measurement of the spin along any axis of the other particle upon measurement of the first particle. This probability distribution is in general different from what it would be without measurement of the first particle. This may certainly be perceived as surprising in the case of spatially separated entangled particles.
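The change in B's probability distribution can be made explicit for the singlet. In the sketch below (axes again assumed to lie in the x-z plane; names hypothetical), B's probability of "up" along an axis at angle θ is 1/2 when A's result is not consulted, but becomes sin²(θ/2) once A is known to have found "up" along z. Summing over A's outcomes always returns 1/2, whichever axis A uses, which is why the effect cannot be used to send a signal.

```python
import math

S = 1 / math.sqrt(2)
SINGLET = [[0.0, S], [-S, 0.0]]  # (|01> - |10>)/sqrt(2) as amplitudes psi[i][j]


def spin_state(theta, up):
    """Spin-up/down eigenstate along the x-z-plane axis at angle theta from z."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, s) if up else (-s, c)


def joint_prob(theta_a, out_a, theta_b, out_b):
    u, v = spin_state(theta_a, out_a), spin_state(theta_b, out_b)
    amp = sum(u[i] * v[j] * SINGLET[i][j] for i in range(2) for j in range(2))
    return amp ** 2


def b_up_without_looking(theta_b):
    """P(B up along theta_b), summed over A's outcomes (A measured along z).
    The sum would come out the same for any axis A used."""
    return sum(joint_prob(0.0, a, theta_b, True) for a in (True, False))


def b_up_given_a_up(theta_b):
    """P(B up along theta_b | A found up along z) = sin^2(theta_b / 2)."""
    p_a_up = sum(joint_prob(0.0, True, theta_b, b) for b in (True, False))
    return joint_prob(0.0, True, theta_b, True) / p_a_up


for deg in (0, 60, 90, 180):
    th = math.radians(deg)
    print(f"B axis {deg:>3} deg: P(up) = {b_up_without_looking(th):.3f}, "
          f"P(up | A up along z) = {b_up_given_a_up(th):.3f}")
```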

Paradox

The paradox is that a measurement made on either of the particles apparently collapses the state of the entire entangled system, and does so instantaneously, before any information about the measurement result could have been communicated to the other particle (assuming that information cannot travel faster than light) and hence assured the "proper" outcome of the measurement of the other part of the entangled pair. In the Copenhagen interpretation, the result of a spin measurement on one of the particles is a collapse into a state in which each particle has a definite spin (either up or down) along the axis of measurement. The outcome is taken to be random, with each possibility having a probability of 50%. However, if both spins are measured along the same axis, they are found to be anti-correlated. This means that the random outcome of the measurement made on one particle seems to have been transmitted to the other, so that it can make the "right choice" when it too is measured.

The distance and timing of the measurements can be chosen so as to make the interval between the two measurements spacelike; hence, any causal effect connecting the events would have to travel faster than light. According to the principles of special relativity, it is not possible for any information to travel between two such measuring events. It is not even possible to say which of the measurements came first. For two spacelike separated events x1 and x2 there are inertial frames in which x1 is first and others in which x2 is first. Therefore, the correlation between the two measurements cannot be explained as one measurement determining the other: different observers would disagree about the role of cause and effect.
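The frame dependence of the order of spacelike-separated events follows directly from the Lorentz transformation Δt' = γ(Δt - vΔx/c²). The sketch below works in units with c = 1 and uses two hypothetical event pairs, given as (Δt, Δx) = (t2 - t1, x2 - x1). For the spacelike pair, the sign of Δt' flips once the frame velocity exceeds Δt/Δx = 1/3, so some observers see x2 first; for the timelike pair, no velocity below c reverses the order.

```python
import math

C = 1.0  # units in which the speed of light is 1


def boosted_dt(dt, dx, v):
    """Time separation t2' - t1' seen from a frame moving at velocity v along x (|v| < C)."""
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return gamma * (dt - v * dx / C ** 2)


def interval_type(dt, dx):
    s2 = (C * dt) ** 2 - dx ** 2
    if s2 > 0:
        return "timelike"
    return "spacelike" if s2 < 0 else "lightlike"


velocities = [-0.9, -0.5, 0.0, 0.5, 0.9]
# Hypothetical event pairs: (dt, dx) = (t2 - t1, x2 - x1).
pairs = {"spacelike pair": (1.0, 3.0), "timelike pair": (3.0, 1.0)}

for label, (dt, dx) in pairs.items():
    order = ["x1 first" if boosted_dt(dt, dx, v) > 0 else "x2 first" for v in velocities]
    print(label, interval_type(dt, dx), order)
```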

(In fact, similar paradoxes can arise even without entanglement: the position of a single particle is spread out over space, and two widely separated detectors attempting to detect the particle in two different places must instantaneously attain appropriate correlation, so that they do not both detect the particle.)

Hidden variables theory

A possible resolution to the paradox is to assume that quantum theory is incomplete, and that the result of measurements depends on predetermined "hidden variables". The state of the particles being measured contains some hidden variables, whose values effectively determine, right from the moment of separation, what the outcomes of the spin measurements are going to be. This would mean that each particle carries all the required information with it, and nothing needs to be transmitted from one particle to the other at the time of measurement. Einstein and others (see the previous section) originally believed this was the only way out of the paradox, and that the accepted quantum mechanical description (with a random measurement outcome) must be incomplete.
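A concrete local hidden-variable model of this kind is easy to write down. In the sketch below (a standard toy model chosen for illustration; all names are hypothetical), each pair shares a random hidden angle φ fixed at the moment of separation, and each particle answers ±1 from its own measurement axis and φ alone. The model reproduces the perfect anti-correlation for equal axes exactly, but at other angles it gives the linear correlation -1 + 2θ/π rather than the quantum -cos θ, for example about -0.333 instead of -0.5 at 60 degrees.

```python
import math
import random


def sign(x):
    return 1 if x >= 0 else -1


def local_outcomes(a, b, phi):
    """Both particles carry the same hidden angle phi, fixed at separation.
    A's result depends only on (a, phi) and B's only on (b, phi)."""
    A = sign(math.cos(a - phi))
    B = -sign(math.cos(b - phi))  # anti-correlation built in at the source
    return A, B


def lhv_correlation(a, b, n=100_000, seed=1):
    """Monte Carlo estimate of the average product A*B under this local model."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n):
        A, B = local_outcomes(a, b, rng.uniform(0.0, 2 * math.pi))
        total += A * B
    return total / n


for deg in (0, 60, 90):
    theta = math.radians(deg)
    print(f"{deg:>3} deg: local model {lhv_correlation(0.0, theta):+.3f}, "
          f"quantum {-math.cos(theta):+.3f}")
```

Because each outcome is fixed locally, nothing travels between the particles; the disagreement with quantum mechanics at intermediate angles is what Bell's inequality turns into a testable bound.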

Violations of Bell's inequality

The hidden variables theory fails, however, when measurements of the spin of entangled particles along different axes are considered (e.g., along any of three axes that make angles of 120 degrees). If a large number of pairs of such measurements are made (on a large number of pairs of entangled particles), then statistically, if the local realist or hidden variables view were correct, the results would always satisfy Bell's inequality. A number of experiments have shown in practice that Bell's inequality is not satisfied. However, prior to 2015, all of these had loophole problems that were considered the most important by the community of physicists. When measurements of the entangled particles are made in moving relativistic reference frames, in which each measurement (in its own relativistic time frame) occurs before the other, the measurement results remain correlated.
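The three-axis, 120-degree case can be made quantitative in the style of N. David Mermin's well-known version of Bell's argument; the particular quantity used here, the probability of opposite outcomes when each side picks one of the three axes at random, is this sketch's choice. Assuming, as in the hidden-variables picture, that perfect same-axis anti-correlation means each particle carries predetermined answers for all three axes, every local "instruction set" gives opposite outcomes with probability at least 5/9, while the singlet prediction cos²(angle/2), averaged over the nine setting pairs, gives 1/2. Names are hypothetical.

```python
import itertools
import math
from fractions import Fraction

AXES = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]  # three axes 120 degrees apart


def local_opposite_probability(answers):
    """P(opposite outcomes) for one local instruction set, with each side
    picking one of the three axes uniformly and independently.

    answers[i] is A's predetermined +/-1 result on axis i. Perfect same-axis
    anti-correlation forces B's answers to be -answers, so the outcomes are
    opposite exactly when answers[i] == answers[j].
    """
    hits = sum(1 for i, j in itertools.product(range(3), repeat=2) if answers[i] == answers[j])
    return Fraction(hits, 9)


def quantum_opposite_probability():
    """Singlet prediction P(opposite) = cos^2(angle / 2), averaged over the 9 setting pairs."""
    return sum(math.cos((a - b) / 2) ** 2 for a in AXES for b in AXES) / 9


local_min = min(local_opposite_probability(s) for s in itertools.product((1, -1), repeat=3))
print("every local model:", local_min, "or more")   # 5/9 or more
print("quantum mechanics:", quantum_opposite_probability())
```

Any mixture of instruction sets averages values that are each at least 5/9, so the quantum value of 1/2 lies outside everything such a model can produce.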

The fundamental issue about measuring spin along different axes is that these measurements cannot have definite values at the same time; they are incompatible in the sense that their maximum simultaneous precision is constrained by the uncertainty principle. This is contrary to what is found in classical physics, where any number of properties can be measured simultaneously with arbitrary accuracy. It has been proven mathematically that compatible measurements cannot show Bell-inequality-violating correlations, and thus entanglement is a fundamentally non-classical phenomenon.
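The incompatibility shows up algebraically as non-commuting operators, and the commutator is what enters the uncertainty relation. The sketch below, restricted as an assumption to axes in the x-z plane and using hypothetical helper names, builds the Pauli operator cos θ σz + sin θ σx for each axis and computes the largest entry of the commutator. It equals 2|sin(β - α)|, so it vanishes only when the axes coincide or are opposite; classical observables, being ordinary numbers, always commute.

```python
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def spin_op(theta):
    """Pauli operator for the axis at angle theta from z in the x-z plane:
    cos(theta)*sigma_z + sin(theta)*sigma_x (a real 2x2 matrix)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]


def commutator_norm(alpha, beta):
    """Largest absolute entry of [S(alpha), S(beta)]; zero means the two spin
    measurements are compatible."""
    P, Q = spin_op(alpha), spin_op(beta)
    PQ, QP = matmul(P, Q), matmul(Q, P)
    return max(abs(PQ[i][j] - QP[i][j]) for i in range(2) for j in range(2))


for deg in (0, 60, 90, 120, 180):
    print(f"axes {deg:>3} deg apart: max |[S_a, S_b]| entry = "
          f"{commutator_norm(0.0, math.radians(deg)):.3f}")
```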

Other types of experiments

In experiments in 2012 and 2013, polarization correlation was created between photons that never coexisted in time. The authors claimed that this result was achieved by entanglement swapping between two pairs of entangled photons after measuring the polarization of one photon of the early pair, and that it proves that quantum non-locality applies not only to space but also to time.
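The core algebra of entanglement swapping can be sketched with ideal state vectors. Assuming two independent singlet pairs, photons (1, 2) and (3, 4), and an idealized Bell-state measurement on photons 2 and 3, each of the four Bell outcomes occurs with probability 1/4 and leaves photons 1 and 4, which never interacted, in a maximally entangled state (concurrence 1). Timing is not modelled here, and the dictionary representation and helper names are illustrative assumptions.

```python
import math

S = 1 / math.sqrt(2)

# The four Bell states as {(bit1, bit2): amplitude}; all amplitudes are real.
BELL = {
    "phi+": {(0, 0): S, (1, 1): S},
    "phi-": {(0, 0): S, (1, 1): -S},
    "psi+": {(0, 1): S, (1, 0): S},
    "psi-": {(0, 1): S, (1, 0): -S},
}


def swap(outcome):
    """Photons (1,2) and (3,4) start as two independent psi- pairs. Project
    photons 2 and 3 onto the Bell state `outcome` (an idealized Bell-state
    measurement) and return (probability, normalized state of photons 1 and 4)."""
    out = {}
    for (q1, q2), a in BELL["psi-"].items():
        for (q3, q4), b in BELL["psi-"].items():
            c = BELL[outcome].get((q2, q3), 0.0)  # real, so no complex conjugate needed
            out[(q1, q4)] = out.get((q1, q4), 0.0) + c * a * b
    prob = sum(v * v for v in out.values())
    return prob, {k: v / math.sqrt(prob) for k, v in out.items()}


def concurrence(state):
    """2|c00*c11 - c01*c10| for a two-qubit pure state: 0 = product, 1 = maximal."""
    def amp(i, j):
        return state.get((i, j), 0.0)
    return 2 * abs(amp(0, 0) * amp(1, 1) - amp(0, 1) * amp(1, 0))


for name in BELL:
    p, state14 = swap(name)
    print(f"outcome {name}: probability {p:.2f}, "
          f"concurrence of photons 1 and 4 = {concurrence(state14):.2f}")
```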

In three independent experiments in 2013 it was shown that classically communicated separable quantum states can be used to carry entangled states. The first loophole-free Bell test was performed at TU Delft in 2015, confirming the violation of Bell's inequality.

In August 2014, Brazilian researcher Gabriela Barreto Lemos and team were able to "take pictures" of objects using photons that had not interacted with the subjects, but were entangled with photons that did interact with such objects. Lemos, from the University of Vienna, is confident that this new quantum imaging technique could find application where low-light imaging is imperative, in fields like biological or medical imaging.

In 2015, Markus Greiner's group at Harvard performed a direct measurement of Rényi entanglement entropy in a system of ultracold bosonic atoms.
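One member of the Rényi family, the order-2 Rényi entanglement entropy S₂ = -ln Tr(ρ_A²), depends only on the purity of a subsystem's reduced state. The sketch below computes it for a simple two-qubit family, cos a|00⟩ + sin a|11⟩, assumed purely for illustration (it is not a model of the many-atom system in the experiment): S₂ is zero for a product state and rises to ln 2 for a Bell state. Function names are hypothetical.

```python
import math


def reduced_density_matrix(psi):
    """rho_A = Tr_B |psi><psi| for a real two-qubit amplitude table psi[i][j]."""
    return [[sum(psi[i][k] * psi[j][k] for k in range(2)) for j in range(2)] for i in range(2)]


def renyi2(psi):
    """Order-2 Renyi entanglement entropy S_2 = -ln Tr(rho_A^2), in nats."""
    rho = reduced_density_matrix(psi)
    purity = sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))
    return math.log(1.0 / purity)


# Family cos(a)|00> + sin(a)|11>: a = 0 is a product state, a = pi/4 a Bell state.
for a in (0.0, math.pi / 8, math.pi / 4):
    psi = [[math.cos(a), 0.0], [0.0, math.sin(a)]]
    print(f"a = {a:.3f}: S_2 = {renyi2(psi):.4f} nats (ln 2 = {math.log(2):.4f})")
```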

Since 2016, companies such as IBM and Microsoft have made quantum computing platforms available, allowing developers and technology enthusiasts to experiment openly with concepts of quantum mechanics, including quantum entanglement.

Mystery of time

There have been suggestions to look at the concept of time as an emergent phenomenon that is a side effect of quantum entanglement. In other words, time is an entanglement phenomenon, which places all equal clock readings (of correctly prepared clocks, or of any objects usable as clocks) into the same history. This was first fully theorized by Don Page and William Wootters in 1983.

The Wheeler–DeWitt equation, which combines general relativity and quantum mechanics by leaving out time altogether, was introduced in the 1960s and was taken up again in 1983, when Page and Wootters proposed a solution based on quantum entanglement. Page and Wootters argued that entanglement can be used to measure time.
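The idea can be caricatured with a discrete toy model. The sketch below assumes a hypothetical four-reading clock entangled with a qubit whose state is rotated by a fixed angle per tick; the original construction instead starts from a stationary global state of clock plus system governed by a total Hamiltonian. The single global state contains no external time parameter, yet conditioning on the clock reading t recovers the evolved system state, and ignoring the clock leaves the system mixed (purity 1/2 here), showing that clock and system are entangled.

```python
import math

T = 4                  # hypothetical clock with four readings 0..3
OMEGA = math.pi / 4    # hypothetical rotation of the system qubit per tick


def evolve(t):
    """System qubit after t ticks: |0> rotated by t*OMEGA (real amplitudes on |0>, |1>)."""
    return (math.cos(t * OMEGA), math.sin(t * OMEGA))


# One global, 'timeless' state: (1/sqrt(T)) * sum_t |t>_clock (x) |psi(t)>_system,
# stored as {(clock_reading, system_bit): amplitude}.
GLOBAL = {(t, s): evolve(t)[s] / math.sqrt(T) for t in range(T) for s in range(2)}


def conditional_state(t):
    """System state given that the clock reads t: project the clock onto |t>, renormalize."""
    amps = [GLOBAL[(t, s)] for s in range(2)]
    norm = math.sqrt(sum(x * x for x in amps))
    return tuple(x / norm for x in amps)


def system_purity():
    """Tr(rho_S^2) after ignoring the clock; below 1 means clock and system are entangled."""
    rho = [[sum(GLOBAL[(t, i)] * GLOBAL[(t, j)] for t in range(T)) for j in range(2)]
           for i in range(2)]
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))


for t in range(T):
    print(t, [round(x, 3) for x in conditional_state(t)])
print("system purity without the clock:", round(system_purity(), 3))
```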

In 2013, at the Istituto Nazionale di Ricerca Metrologica (INRIM) in Turin, Italy, researchers performed the first experimental test of Page and Wootters' ideas. Their result has been interpreted to confirm that time is an emergent phenomenon for internal observers but absent for external observers of the universe, just as the Wheeler–DeWitt equation predicts.

Source for the arrow of time

Physicist Seth Lloyd says that quantum uncertainty gives rise to entanglement, the putative source of the arrow of time. According to Lloyd, "The arrow of time is an arrow of increasing correlations."

This approach views entanglement from the perspective of the causal arrow of time, assuming that the measurement of one particle (the cause) determines the result of the other particle's measurement (the effect).

Emergent gravity

Based on the AdS/CFT correspondence, Mark Van Raamsdonk suggested that spacetime arises as an emergent phenomenon of the quantum degrees of freedom that are entangled and live on the boundary of the spacetime. Induced gravity can emerge from the entanglement first law.

Non-locality and entanglement

In the media and popular science, quantum non-locality is often portrayed as being equivalent to entanglement. While this is true for pure bipartite quantum states, in general entanglement is only necessary for non-local correlations, but there exist mixed entangled states that do not produce such correlations. A well-known example is provided by the Werner states: for certain values of their mixing parameter they are entangled, yet their correlations can always be described using local hidden variables.
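The gap between entanglement and non-locality can be seen numerically for the two-qubit Werner family ρ(p) = p|ψ⁻⟩⟨ψ⁻| + (1 - p)I/4, where the symbol p is this sketch's choice of parameterization. The code detects entanglement with the Peres partial-transpose criterion, evaluating ⟨Φ⁺|ρ^{T_B}|Φ⁺⟩ = (1 - 3p)/4, which is negative for p > 1/3, and computes the CHSH value 2√2·p at the standard angles (the best achievable for this state), which exceeds 2 only for p > 1/√2. In between, for example at p = 0.5, the state is entangled yet violates no CHSH inequality. Not violating one Bell inequality is weaker than having a local hidden-variable model, so this illustrates the gap rather than proving the stronger statement above. Helper names are hypothetical.

```python
import math

S = 1 / math.sqrt(2)


def kron(A, B):
    """Kronecker product of two 2x2 matrices, indexed in the basis |00>, |01>, |10>, |11>."""
    return [[A[r // 2][c // 2] * B[r % 2][c % 2] for c in range(4)] for r in range(4)]


def werner(p):
    """rho(p) = p |psi-><psi-| + (1 - p) I/4 with |psi-> = (|01> - |10>)/sqrt(2)."""
    psi = [0.0, S, -S, 0.0]
    return [[p * psi[r] * psi[c] + (1 - p) * (0.25 if r == c else 0.0)
             for c in range(4)] for r in range(4)]


def partial_transpose_b(rho):
    """Transpose the second qubit: entry ((i,j),(k,l)) becomes rho[(i,l)][(k,j)]."""
    return [[rho[2 * (r // 2) + c % 2][2 * (c // 2) + r % 2] for c in range(4)]
            for r in range(4)]


def ppt_witness(p):
    """<phi+| rho^{T_B} |phi+> = (1 - 3p)/4. A negative value shows rho^{T_B} has a
    negative eigenvalue, so by the Peres criterion rho(p) is entangled; a
    non-negative value alone proves nothing."""
    X = partial_transpose_b(werner(p))
    phi = [S, 0.0, 0.0, S]
    return sum(phi[r] * X[r][c] * phi[c] for r in range(4) for c in range(4))


def spin_op(theta):
    """cos(theta)*sigma_z + sin(theta)*sigma_x: spin along an axis in the x-z plane."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]


def correlation(rho, a, b):
    """E(a, b) = Tr[rho (S_a x S_b)]."""
    M = kron(spin_op(a), spin_op(b))
    return sum(rho[r][c] * M[c][r] for r in range(4) for c in range(4))


def chsh(p):
    """|S| at the standard CHSH angles; equals 2*sqrt(2)*p for the Werner state."""
    rho = werner(p)
    a, a2, b, b2 = 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4
    return abs(correlation(rho, a, b) - correlation(rho, a, b2)
               + correlation(rho, a2, b) + correlation(rho, a2, b2))


for p in (0.2, 0.5, 0.8):
    print(f"p = {p}: entangled (witness < 0): {ppt_witness(p) < 0}, "
          f"CHSH |S| = {chsh(p):.3f} (local bound 2)")
```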

Moreover, it was shown that, for arbitrary numbers of parties, there exist states that are genuinely entangled but admit a local model.

The mentioned proofs about the existence of local models assume that there is only one copy of the quantum state available at a time. If the parties are allowed to perform local measurements on many copies of such states, then many apparently local states (e.g., the qubit Werner states) can no longer be described by a local model. This is, in particular, true for all distillable states. However, it remains an open question whether all entangled states become non-local given sufficiently many copies.

In short, entanglement of a state shared by two parties is necessary but not sufficient for that state to be non-local. It is important to recognize that entanglement is more commonly viewed as an algebraic concept, noted for being a prerequisite to non-locality as well as to quantum teleportation and to superdense coding, whereas non-locality is defined according to experimental statistics and is much more involved with the foundations and interpretations of quantum mechanics.