https://en.wikipedia.org/wiki/Root-finding_algorithm

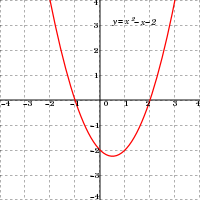

In mathematics and computing, a root-finding algorithm is an algorithm for finding zeroes, also called "roots", of continuous functions. A zero of a function f, from the real numbers to real numbers or from the complex numbers to the complex numbers, is a number x such that f(x) = 0. As, generally, the zeroes of a function cannot be computed exactly nor expressed in closed form, root-finding algorithms provide approximations to zeroes, expressed either as floating point numbers or as small isolating intervals, or disks for complex roots (an interval or disk output being equivalent to an approximate output together with an error bound).

Solving an equation f(x) = g(x) is the same as finding the roots of the function h(x) = f(x) – g(x). Thus root-finding algorithms allow solving any equation defined by continuous functions. However, most root-finding algorithms do not guarantee that they will find all the roots; in particular, if such an algorithm does not find any root, that does not mean that no root exists.

Most numerical root-finding methods use iteration, producing a sequence of numbers that hopefully converges towards the root as a limit. They require one or more initial guesses of the root as starting values, then each iteration of the algorithm produces a successively more accurate approximation to the root. Since the iteration must be stopped at some point, these methods produce an approximation to the root, not an exact solution. Many methods compute subsequent values by evaluating an auxiliary function on the preceding values. The limit is thus a fixed point of the auxiliary function, which is chosen for having the roots of the original equation as fixed points, and for converging rapidly to these fixed points.

The behaviour of general root-finding algorithms is studied in numerical analysis. However, for polynomials, root-finding study belongs generally to computer algebra, since algebraic properties of polynomials are fundamental for the most efficient algorithms. The efficiency of an algorithm may depend dramatically on the characteristics of the given functions. For example, many algorithms use the derivative of the input function, while others work on every continuous function. In general, numerical algorithms are not guaranteed to find all the roots of a function, so failing to find a root does not prove that there is no root. However, for polynomials, there are specific algorithms that use algebraic properties for certifying that no root is missed, and locating the roots in separate intervals (or disks for complex roots) that are small enough to ensure the convergence of numerical methods (typically Newton's method) to the unique root so located.

Bracketing methods

Bracketing methods determine successively smaller intervals (brackets) that contain a root. When the interval is small enough, a root has been found to within the desired accuracy. They generally use the intermediate value theorem, which asserts that if a continuous function has values of opposite signs at the end points of an interval, then the function has at least one root in the interval. Therefore, they must start with an interval such that the function takes opposite signs at its end points. However, in the case of polynomials there are other methods (Descartes' rule of signs, Budan's theorem and Sturm's theorem) for getting information on the number of roots in an interval. They lead to efficient algorithms for real-root isolation of polynomials, which ensure finding all real roots with a guaranteed accuracy.

Bisection method

The simplest root-finding algorithm is the bisection method. Let f be a continuous function, for which one knows an interval [a, b] such that f(a) and f(b) have opposite signs (a bracket). Let c = (a + b)/2 be the middle of the interval (the midpoint or the point that bisects the interval). Then, unless f(c) = 0, either f(a) and f(c), or f(c) and f(b) have opposite signs, and one has divided by two the size of the interval. Although the bisection method is robust, it gains one and only one bit of accuracy with each iteration. Other methods, under appropriate conditions, can gain accuracy faster.
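The halving step described above can be sketched in a few lines of Python. This is only an illustration: the function name `bisect`, the tolerance `tol` and the iteration cap `max_iter` are assumptions introduced here, not part of any particular library.

```python
def bisect(f, a, b, tol=1e-12, max_iter=200):
    """Approximate a root of a continuous f on a bracket [a, b]."""
    fa, fb = f(a), f(b)
    if fa == 0:
        return a
    if fb == 0:
        return b
    if (fa < 0) == (fb < 0):
        raise ValueError("f(a) and f(b) must have opposite signs")
    for _ in range(max_iter):
        c = a + (b - a) / 2          # midpoint of the current bracket
        fc = f(c)
        if fc == 0 or abs(b - a) / 2 < tol:
            return c
        if (fa < 0) != (fc < 0):     # sign change in [a, c]
            b, fb = c, fc
        else:                        # sign change in [c, b]
            a, fa = c, fc
    return a + (b - a) / 2
```

For instance, `bisect(lambda x: x * x - 2, 1.0, 2.0)` narrows the bracket around the square root of 2, halving its width at every pass.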

False position (regula falsi)

The false position method, also called the regula falsi method, is similar to the bisection method, but instead of using the middle of the interval it uses the x-intercept of the line that connects the plotted function values at the endpoints of the interval, that is

$$c = \frac{a\,f(b) - b\,f(a)}{f(b) - f(a)}.$$

False position is similar to the secant method, except that, instead of retaining the last two points, it makes sure to keep one point on either side of the root. The false position method can be faster than the bisection method and will never diverge like the secant method; however, it may fail to converge in some naive implementations due to roundoff errors that may lead to a wrong sign for f(c); typically, this may occur if the rate of variation of f is large in the neighborhood of the root.
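As a hedged illustration, the following Python sketch replaces the midpoint of bisection by the x-intercept of the chord through the two endpoints. The name `false_position` and the residual-based stopping rule (`tol` on |f(c)|) are choices made for this example only.

```python
def false_position(f, a, b, tol=1e-12, max_iter=200):
    """Regula falsi on a bracket [a, b] where f changes sign."""
    fa, fb = f(a), f(b)
    if fa == 0:
        return a
    if fb == 0:
        return b
    if (fa < 0) == (fb < 0):
        raise ValueError("f(a) and f(b) must have opposite signs")
    c = a
    for _ in range(max_iter):
        # x-intercept of the line through (a, f(a)) and (b, f(b))
        c = (a * fb - b * fa) / (fb - fa)
        fc = f(c)
        if abs(fc) < tol:
            return c
        if (fa < 0) != (fc < 0):     # keep one point on each side of the root
            b, fb = c, fc
        else:
            a, fa = c, fc
    return c
```

Because one endpoint often stays fixed for a convex or concave function, this plain variant typically converges only linearly; the sketch does not include the usual modifications that address this.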

Ridders' method is a variant of the false position method that uses the value of the function at the midpoint of the interval, for getting a function with the same root, to which the false position method is applied. This gives a faster convergence with a similar robustness.

Interpolation

Many root-finding processes work by interpolation. This consists in using the last computed approximate values of the root for approximating the function by a polynomial of low degree, which takes the same values at these approximate roots. Then the root of the polynomial is computed and used as a new approximate value of the root of the function, and the process is iterated.

Two values allow interpolating a function by a polynomial of degree one (that is, approximating the graph of the function by a line). This is the basis of the secant method. Three values define a quadratic function, which approximates the graph of the function by a parabola. This is Muller's method.

Regula falsi is also an interpolation method, which differs from the secant method by using, for interpolating by a line, two points that are not necessarily the last two computed points.

Iterative methods

Although all root-finding algorithms proceed by iteration, an iterative root-finding method generally uses a specific type of iteration, consisting of defining an auxiliary function, which is applied to the last computed approximations of a root for getting a new approximation. The iteration stops when a fixed point (up to the desired precision) of the auxiliary function is reached, that is, when the new computed value is sufficiently close to the preceding ones.

Newton's method (and similar derivative-based methods)

Newton's method assumes the function f to have a continuous derivative. Newton's method may not converge if started too far away from a root. However, when it does converge, it is faster than the bisection method, and its convergence is usually quadratic. Newton's method is also important because it readily generalizes to higher-dimensional problems. Newton-like methods with higher orders of convergence are the Householder's methods. The first one after Newton's method is Halley's method with cubic order of convergence.
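A minimal sketch of the Newton iteration, assuming the caller supplies both the function `f` and its derivative `df`. The function name, the relative stopping test and the iteration cap are illustrative choices rather than a prescribed implementation.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton iteration x <- x - f(x)/f'(x) from the starting guess x0."""
    x = x0
    for _ in range(max_iter):
        dfx = df(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        x_new = x - f(x) / dfx
        # stop when successive iterates agree to the requested tolerance
        if abs(x_new - x) <= tol * max(1.0, abs(x_new)):
            return x_new
        x = x_new
    raise RuntimeError("Newton's method did not converge")
```

Raising an error after `max_iter` steps reflects the caveat above: started too far from a root, the iteration may cycle or wander instead of converging.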

Secant method

Replacing the derivative in Newton's method with a finite difference, we get the secant method. This method does not require the computation (nor the existence) of a derivative, but the price is slower convergence (the order is approximately 1.6, the golden ratio). A generalization of the secant method in higher dimensions is Broyden's method.
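The substitution of a finite difference for the derivative can be sketched as follows. The name `secant` and the stopping parameters are assumptions of this example; the two starting values need not bracket the root.

```python
def secant(f, x0, x1, tol=1e-12, max_iter=100):
    """Secant iteration using the last two iterates in place of a derivative."""
    f0, f1 = f(x0), f(x1)
    for _ in range(max_iter):
        if f1 == f0:
            raise ZeroDivisionError("flat secant line; step undefined")
        # Newton step with f'(x1) replaced by (f1 - f0) / (x1 - x0)
        x2 = x1 - f1 * (x1 - x0) / (f1 - f0)
        if abs(x2 - x1) <= tol * max(1.0, abs(x2)):
            return x2
        x0, f0 = x1, f1
        x1, f1 = x2, f(x2)
    raise RuntimeError("secant method did not converge")
```

Each pass costs a single new evaluation of `f`, which partly compensates for the lower order of convergence compared with Newton's method.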

Steffensen's method

If we use a polynomial fit to remove the quadratic part of the finite difference used in the secant method, so that it better approximates the derivative, we obtain Steffensen's method, which has quadratic convergence, and whose behavior (both good and bad) is essentially the same as that of Newton's method, but which does not require a derivative.
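A common way of writing Steffensen's iteration is x_{n+1} = x_n − f(x_n)² / (f(x_n + f(x_n)) − f(x_n)). The sketch below follows that form; the function name and the choice of a residual-based stopping test are assumptions made for this illustration.

```python
def steffensen(f, x0, tol=1e-12, max_iter=100):
    """Derivative-free iteration with a step size of f(x) in the difference quotient."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) <= tol:           # residual small enough: accept x
            return x
        denom = f(x + fx) - fx       # approximates f'(x) * f(x)
        if denom == 0:
            raise ZeroDivisionError("difference quotient vanished")
        x = x - fx * fx / denom
    raise RuntimeError("Steffensen's method did not converge")
```

Like Newton's method, this needs a starting value reasonably close to the root; it uses two evaluations of `f` per step instead of one evaluation of `f` and one of its derivative.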

Inverse interpolation

The appearance of complex values in interpolation methods can be avoided by interpolating the inverse of f, resulting in the inverse quadratic interpolation method. Again, convergence is asymptotically faster than the secant method, but inverse quadratic interpolation often behaves poorly when the iterates are not close to the root.

Combinations of methods

Brent's method

Brent's method is a combination of the bisection method, the secant method and inverse quadratic interpolation. At every iteration, Brent's method decides which method out of these three is likely to do best, and proceeds by doing a step according to that method. This gives a robust and fast method, which therefore enjoys considerable popularity.

Roots of polynomials

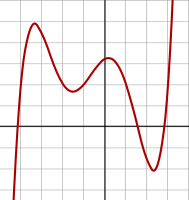

Finding roots of polynomials is a long-standing problem that has been the object of much research throughout history. A testament to this is that up until the 19th century algebra meant essentially the theory of polynomial equations.

Finding the root of a linear polynomial (degree one) is easy and needs only one division. For quadratic polynomials (degree two), the quadratic formula produces a solution, but its numerical evaluation may require some care for ensuring numerical stability. For degrees three and four, there are closed-form solutions in terms of radicals, which are generally not convenient for numerical evaluation, as they are too complicated and involve the computation of several nth roots whose computation is not easier than the direct computation of the roots of the polynomial (for example, the expression of the real roots of a cubic polynomial may involve non-real cube roots). For polynomials of degree five or higher, the Abel–Ruffini theorem asserts that there is, in general, no radical expression of the roots.
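The care needed with the quadratic formula comes from cancellation when b² is much larger than 4ac. One widely used remedy, sketched here under the assumption of real coefficients with a ≠ 0, computes the larger-magnitude root first and obtains the other from the product of the roots, c/a. The function name is hypothetical.

```python
import math


def real_quadratic_roots(a, b, c):
    """Real roots of a*x**2 + b*x + c (a != 0), avoiding subtractive cancellation."""
    disc = b * b - 4 * a * c
    if disc < 0:
        return ()                    # no real roots
    # b and the square root get the same sign, so they add rather than cancel
    q = -0.5 * (b + math.copysign(math.sqrt(disc), b))
    if q == 0:                       # happens only when b == 0 and c == 0
        return (0.0, 0.0)
    return tuple(sorted((q / a, c / q)))
```

For `x**2 - 1e8*x + 1`, the textbook formula loses most digits of the small root near 1e-8, whereas this form recovers it from the well-determined large root.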

So, except for very low degrees, root finding of polynomials consists of finding approximations of the roots. By the fundamental theorem of algebra, one knows that a polynomial of degree n has at most n real or complex roots, and this number is reached for almost all polynomials.

It follows that the problem of root finding for polynomials may be split into three different subproblems:

- Finding one root

- Finding all roots

- Finding roots in a specific region of the complex plane, typically the real roots or the real roots in a given interval (for example, when the roots represent a physical quantity, only the real positive ones are of interest).

For finding one root, Newton's method and other general iterative methods generally work well.

For finding all the roots, the oldest method is, when a root r has been found, to divide the polynomial by x – r, and restart iteratively the search for a root of the quotient polynomial. However, except for low degrees, this does not work well because of numerical instability: Wilkinson's polynomial shows that a very small modification of one coefficient may change dramatically not only the value of the roots, but also their nature (real or complex). Also, even with a good approximation, when one evaluates a polynomial at an approximate root, one may get a result that is far from zero. For example, if a polynomial of degree 20 (the degree of Wilkinson's polynomial) has a root close to 10, the derivative of the polynomial at the root may be of the order of $10^{20}$; this implies that an error of $10^{-10}$ on the value of the root may produce a value of the polynomial at the approximate root that is of the order of $10^{10}$.

To avoid these problems, methods have been developed that compute all roots simultaneously, to any desired accuracy. Presently the most efficient method is the Aberth method. A free implementation is available under the name of MPSolve. This is a reference implementation, which can routinely find the roots of polynomials of degree larger than 1,000, with more than 1,000 significant decimal digits.

The methods for computing all roots may be used for computing real roots. However, it may be difficult to decide whether a root with a small imaginary part is real or not. Moreover, as the number of real roots is, on average, of the order of the logarithm of the degree, it is a waste of computer resources to compute the non-real roots when one is interested only in real roots.

The oldest method for computing the number of real roots, and the number of roots in an interval, results from Sturm's theorem, but the methods based on Descartes' rule of signs and its extensions (Budan's and Vincent's theorems) are generally more efficient. For root finding, all proceed by reducing the size of the intervals in which roots are searched until getting intervals containing zero or one root. Then the intervals containing one root may be further reduced to obtain quadratic convergence of Newton's method to the isolated roots. The main computer algebra systems (Maple, Mathematica, SageMath) each have a variant of this method as the default algorithm for the real roots of a polynomial.

Finding one root

The most widely used method for computing a root is Newton's method, which consists of iterating the computation of

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)},$$

starting from a well-chosen value $x_0$. If f is a polynomial, the computation is faster when using Horner's rule for computing the polynomial and its derivative.

Although the convergence is generally quadratic, it may be much slower, or the iteration may not converge at all. In particular, if the polynomial has no real root, and $x_0$ is real, then Newton's method cannot converge. However, if the polynomial has a real root that is larger than the largest real root of its derivative, then Newton's method converges quadratically to this largest root if $x_0$ is larger than this largest root. This is the starting point of Horner's method for computing the roots.
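Horner's rule evaluates a polynomial and its derivative in a single pass over the coefficients, which is what makes each Newton step cheap. The sketch below assumes the coefficients are listed from the leading term down; the names `horner` and `newton_poly` are illustrative.

```python
def horner(coeffs, x):
    """Return (p(x), p'(x)) for coefficients given from the leading term down."""
    p, dp = 0.0, 0.0
    for c in coeffs:
        dp = dp * x + p              # derivative accumulates the previous p
        p = p * x + c
    return p, dp


def newton_poly(coeffs, x0, tol=1e-12, max_iter=100):
    """Newton's method on a polynomial, using Horner's rule at every step."""
    x = x0
    for _ in range(max_iter):
        p, dp = horner(coeffs, x)
        if dp == 0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        x_new = x - p / dp
        if abs(x_new - x) <= tol * max(1.0, abs(x_new)):
            return x_new
        x = x_new
    raise RuntimeError("Newton's method did not converge")
```

With `coeffs = [1, -6, 11, -6]`, that is (x − 1)(x − 2)(x − 3), a start at `x0 = 10.0` lies to the right of every root, so the iterates decrease towards the largest root, as in the situation described above.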

When one root r has been found, one may use Euclidean division for removing the factor x – r from the polynomial. Computing a root of the resulting quotient, and repeating the process provides, in principle, a way for computing all roots. However, this iterative scheme is numerically unstable; the approximation errors accumulate during the successive factorizations, so that the last roots are determined with a polynomial that deviates widely from a factor of the original polynomial. To reduce this error, one may, for each root that is found, restart Newton's method with the original polynomial, and this approximate root as starting value.
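The deflate-and-polish scheme can be sketched as follows, under the simplifying assumption that all roots are real and simple, so that Newton's method started to the right of every root always reaches the largest remaining one. All function names, and the crude root bound used as starting point, are choices made for this example.

```python
def eval_with_derivative(coeffs, x):
    """Horner evaluation of p(x) and p'(x); coefficients from the leading term down."""
    p, dp = 0.0, 0.0
    for c in coeffs:
        dp = dp * x + p
        p = p * x + c
    return p, dp


def newton(coeffs, x, tol=1e-13, max_iter=500):
    for _ in range(max_iter):
        p, dp = eval_with_derivative(coeffs, x)
        if dp == 0:
            raise ZeroDivisionError("derivative vanished")
        step = p / dp
        x -= step
        if abs(step) <= tol * max(1.0, abs(x)):
            return x
    raise RuntimeError("no convergence")


def deflate(coeffs, r):
    """Quotient of the polynomial by (x - r), by synthetic division."""
    quotient, acc = [], 0.0
    for c in coeffs[:-1]:
        acc = acc * r + c
        quotient.append(acc)
    return quotient                  # the remainder is discarded


def roots_by_deflation(coeffs):
    """All roots of a polynomial assumed to have only real, simple roots."""
    bound = 1 + max(abs(c / coeffs[0]) for c in coeffs[1:])  # Cauchy bound
    work, roots = list(coeffs), []
    while len(work) > 2:
        r = newton(work, bound)      # root of the deflated polynomial
        r = newton(coeffs, r)        # polish against the original polynomial
        roots.append(r)
        work = deflate(work, r)
    roots.append(newton(coeffs, -work[1] / work[0]))
    return roots
```

The polishing step limits the accumulation of errors in each reported root, but the deflated polynomial `work` still drifts away from a true factor, so this approach remains fragile for high degrees.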

However, there is no guarantee that this will allow finding all roots. In fact, the problem of finding the roots of a polynomial from its coefficients is in general highly ill-conditioned. This is illustrated by Wilkinson's polynomial:

$$\prod_{i=1}^{20} (x - i) = (x - 1)(x - 2) \cdots (x - 20).$$

The roots of this polynomial of degree 20 are the first 20 positive integers; changing the last bit of the 32-bit representation of one of its coefficients (equal to –210) produces a polynomial with only 10 real roots and 10 complex roots with imaginary parts larger than 0.6.

Closely related to Newton's method are Halley's method and Laguerre's method. Both use the polynomial and its first two derivatives for an iterative process that has a cubic convergence. Combining two consecutive steps of these methods into a single test, one gets a rate of convergence of 9, at the cost of 6 polynomial evaluations (with Horner's rule). On the other hand, combining three steps of Newton's method gives a rate of convergence of 8 at the cost of the same number of polynomial evaluations. This gives a slight advantage to these methods (less clear for Laguerre's method, as a square root has to be computed at each step).

When applying these methods to polynomials with real coefficients and real starting points, Newton's and Halley's methods stay on the real number line. One has to choose complex starting points to find complex roots. In contrast, Laguerre's method, with a square root in its evaluation, will leave the real axis of its own accord.

Another class of methods is based on converting the problem of finding polynomial roots to the problem of finding eigenvalues of the companion matrix of the polynomial. In principle, one can use any eigenvalue algorithm to find the roots of the polynomial. However, for efficiency reasons one prefers methods that exploit the structure of the matrix, that is, methods that can be implemented in matrix-free form. Among these methods is the power method, whose application to the transpose of the companion matrix is the classical Bernoulli's method to find the root of greatest modulus. The inverse power method with shifts, which finds some smallest root first, is what drives the complex (cpoly) variant of the Jenkins–Traub algorithm and gives it its numerical stability. Additionally, it is insensitive to multiple roots and has fast convergence with order $1 + \varphi \approx 2.6$ (where $\varphi$ is the golden ratio) even in the presence of clustered roots. This fast convergence comes with a cost of three polynomial evaluations per step, resulting in a residual of $O(|f(x)|^{2+3\varphi})$, that is, a slower convergence than with three steps of Newton's method.

Finding roots in pairs

If the given polynomial has only real coefficients, one may wish to avoid computations with complex numbers. To that effect, one has to find quadratic factors for pairs of conjugate complex roots. The application of the multidimensional Newton's method to this task results in Bairstow's method.

The real variant of the Jenkins–Traub algorithm is an improvement of this method.

Finding all roots at once

The simple Durand–Kerner method and the slightly more complicated Aberth method simultaneously find all of the roots using only simple complex number arithmetic. Accelerated algorithms for multi-point evaluation and interpolation similar to the fast Fourier transform can help speed them up for large degrees of the polynomial. It is advisable to choose an asymmetric, but evenly distributed set of initial points. The implementation of the Aberth method in the free software MPSolve is a reference for its efficiency and its accuracy.
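A compact sketch of the Durand–Kerner iteration follows. It is not how MPSolve works; it only illustrates the simultaneous update. The starting points are spread evenly on a circle and rotated by an arbitrary offset (0.4 radians, chosen for this example) so that the set is not symmetric about the real axis. The function name, the circle radius and the in-place update order are likewise assumptions of this illustration.

```python
import cmath
import math


def durand_kerner(coeffs, tol=1e-12, max_iter=1000):
    """Approximate all complex roots; coefficients from the leading term down."""
    n = len(coeffs) - 1
    monic = [c / coeffs[0] for c in coeffs]

    def p(z):
        acc = 0j
        for c in monic:              # Horner evaluation of the monic polynomial
            acc = acc * z + c
        return acc

    radius = 1 + max(abs(c) for c in monic[1:])   # encloses every root
    roots = [cmath.rect(radius, 2 * math.pi * k / n + 0.4) for k in range(n)]
    for _ in range(max_iter):
        max_step = 0.0
        for i in range(n):
            denom = 1 + 0j
            for j in range(n):
                if j != i:
                    denom *= roots[i] - roots[j]
            step = p(roots[i]) / denom
            roots[i] -= step         # uses the freshest values of the other roots
            max_step = max(max_step, abs(step))
        if max_step < tol:
            break
    return roots
```

Each sweep costs on the order of n² operations, which is where the accelerated multi-point evaluation techniques mentioned above become relevant for large degrees.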

Another method with this style is the Dandelin–Gräffe method (sometimes also ascribed to Lobachevsky), which uses polynomial transformations to repeatedly and implicitly square the roots. This greatly magnifies variances in the roots. Applying Viète's formulas, one obtains easy approximations for the modulus of the roots, and with some more effort, for the roots themselves.

Exclusion and enclosure methods

Several fast tests exist that tell if a segment of the real line or a region of the complex plane contains no roots. By bounding the modulus of the roots and recursively subdividing the initial region indicated by these bounds, one can isolate small regions that may contain roots and then apply other methods to locate them exactly.

All these methods involve finding the coefficients of shifted and scaled versions of the polynomial. For large degrees, FFT-based accelerated methods become viable.

The Lehmer–Schur algorithm uses the Schur–Cohn test for circles; a variant, Wilf's global bisection algorithm, uses a winding number computation for rectangular regions in the complex plane.

The splitting circle method uses FFT-based polynomial transformations to find large-degree factors corresponding to clusters of roots. The precision of the factorization is maximized using a Newton-type iteration. This method is useful for finding the roots of polynomials of high degree to arbitrary precision; it has almost optimal complexity in this setting.

Real-root isolation

Finding the real roots of a polynomial with real coefficients is a problem that has received much attention since the beginning of the 19th century, and is still an active domain of research. Most root-finding algorithms can find some real roots, but cannot certify having found all the roots. Methods for finding all complex roots, such as the Aberth method, can provide the real roots. However, because of the numerical instability of polynomials, they may need arbitrary-precision arithmetic for deciding which roots are real. Moreover, they compute all complex roots when only a few are real.

It follows that the standard way of computing real roots is to compute first disjoint intervals, called isolating intervals, such that each one contains exactly one real root, and together they contain all the real roots. This computation is called real-root isolation. Given an isolating interval, one may use fast numerical methods, such as Newton's method, for improving the precision of the result.

The oldest complete algorithm for real-root isolation results from Sturm's theorem. However, it appears to be much less efficient than the methods based on Descartes' rule of signs and Vincent's theorem. These methods divide into two main classes, one using continued fractions and the other using bisection. Both methods have been dramatically improved since the beginning of the 21st century. With these improvements they reach a computational complexity that is similar to that of the best algorithms for computing all the roots (even when all roots are real).

These algorithms have been implemented and are available in Mathematica (continued fraction method) and Maple (bisection method). Both implementations can routinely find the real roots of polynomials of degree higher than 1,000.
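To make the idea of certified root counting concrete, here is a small sketch based on Sturm's theorem, the oldest of the approaches mentioned above (not the Descartes-based methods used by Maple or Mathematica). It assumes a square-free polynomial of degree at least one with integer or rational coefficients, listed from the leading term down, and uses exact `Fraction` arithmetic; all function names are hypothetical.

```python
from fractions import Fraction


def poly_rem(a, b):
    """Remainder of the Euclidean division of a by b (empty list means zero)."""
    a = list(a)
    while len(a) >= len(b):
        factor = a[0] / b[0]
        for i in range(len(b)):
            a[i] -= factor * b[i]
        a.pop(0)                     # leading coefficient is now zero
    while a and a[0] == 0:
        a.pop(0)
    return a


def sturm_sequence(p):
    p = [Fraction(c) for c in p]
    n = len(p) - 1
    dp = [c * (n - i) for i, c in enumerate(p[:-1])]
    seq = [p, dp]
    while True:
        r = poly_rem(seq[-2], seq[-1])
        if not r:
            return seq
        seq.append([-c for c in r])  # negated remainder


def evaluate(p, x):
    acc = Fraction(0)
    for c in p:
        acc = acc * x + c
    return acc


def sign_changes(seq, x):
    values = [v for v in (evaluate(q, x) for q in seq) if v != 0]
    return sum(1 for u, v in zip(values, values[1:]) if (u < 0) != (v < 0))


def count_real_roots(p, a, b):
    """Number of distinct real roots of p in the half-open interval (a, b]."""
    seq = sturm_sequence(p)
    return sign_changes(seq, Fraction(a)) - sign_changes(seq, Fraction(b))
```

Repeatedly bisecting an interval and keeping the halves for which `count_real_roots` is nonzero, until every kept interval has count one, yields isolating intervals in the sense described above.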

Finding multiple roots of polynomials

Most root-finding algorithms behave badly when there are multiple roots or very close roots. However, for polynomials whose coefficients are exactly given as integers or rational numbers, there is an efficient method to factorize them into factors that have only simple roots and whose coefficients are also exactly given. This method, called square-free factorization, is based on the multiple roots of a polynomial being the roots of the greatest common divisor of the polynomial and its derivative.

The square-free factorization of a polynomial p is a factorization $p = p_1 p_2^2 \cdots p_k^k$ where each $p_i$ is either 1 or a polynomial without multiple roots, and two different $p_i$ do not have any common root.

An efficient method to compute this factorization is Yun's algorithm.
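The sketch below does not implement Yun's algorithm. It shows only the simpler underlying step: dividing p by the greatest common divisor of p and its derivative, which gives the square-free part $p_1 p_2 \cdots p_k$, a polynomial with the same roots as p, all of them simple. Exact rational arithmetic via `Fraction` is assumed, with coefficients listed from the leading term down; the function names are illustrative.

```python
from fractions import Fraction


def poly_divmod(a, b):
    """Quotient and remainder of a divided by b (empty list means zero)."""
    a, q = list(a), []
    while len(a) >= len(b):
        factor = a[0] / b[0]
        q.append(factor)
        for i in range(len(b)):
            a[i] -= factor * b[i]
        a.pop(0)
    while a and a[0] == 0:
        a.pop(0)
    return q, a


def poly_gcd(a, b):
    """Monic greatest common divisor, by the Euclidean algorithm."""
    while b:
        _, r = poly_divmod(a, b)
        a, b = b, r
    return [c / a[0] for c in a]


def square_free_part(p):
    """p divided by gcd(p, p'); assumes degree at least one."""
    p = [Fraction(c) for c in p]
    n = len(p) - 1
    dp = [c * (n - i) for i, c in enumerate(p[:-1])]
    quotient, _ = poly_divmod(p, poly_gcd(p, dp))
    return quotient
```

For p = (x − 1)²(x − 2), given as `[1, -4, 5, -2]`, the greatest common divisor with the derivative is x − 1, and the square-free part is (x − 1)(x − 2).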
