Stable nuclides are nuclides that are not radioactive and so (unlike radionuclides) do not spontaneously undergo radioactive decay. When such nuclides are referred to in relation to specific elements, they are usually termed stable isotopes.

The 80 elements with one or more stable isotopes comprise a total of 252 nuclides that have not been observed to decay with current equipment (see list at the end of this article). Of these elements, 26 have only one stable isotope and are thus termed monoisotopic. The rest have more than one stable isotope. Tin has ten stable isotopes, the largest number known for any element.

Definition of stability, and naturally occurring nuclides

Most naturally occurring nuclides are stable (about 252; see list at the end of this article), and about 34 more (for a total of 286) are known to be radioactive with measured half-lives long enough for them to occur primordially. If the half-life of a nuclide is comparable to, or greater than, the Earth's age (4.5 billion years), a significant amount will have survived since the formation of the Solar System, and the nuclide is then said to be primordial. It then contributes to the natural isotopic composition of a chemical element. Primordial radioisotopes are easily detected with half-lives as short as 700 million years (e.g., uranium-235). This is the present limit of detection, as shorter-lived nuclides have not yet been detected undisputedly in nature.
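
The survival argument follows from the standard exponential decay law, in which the fraction remaining after a time t is 2^(−t/T), with T the half-life. The following is a minimal sketch under that assumption; the function name `surviving_fraction` is illustrative, and the inputs are the rounded figures quoted above.

```python
def surviving_fraction(half_life_years: float, elapsed_years: float) -> float:
    """Fraction of an initial population that has not yet decayed."""
    return 0.5 ** (elapsed_years / half_life_years)


EARTH_AGE = 4.5e9  # years, the rounded figure used in the text

# Uranium-235, using the rounded 700-million-year half-life from the text
u235 = surviving_fraction(7.0e8, EARTH_AGE)

# A hypothetical nuclide whose half-life equals the Earth's age
equal_to_earth_age = surviving_fraction(EARTH_AGE, EARTH_AGE)

print(f"U-235 remaining: {u235:.2%}")
print(f"Half-life equal to Earth's age: {equal_to_earth_age:.0%}")
```

With these rounded inputs, roughly one percent of the original uranium-235 survives, which is still easily detectable, while a nuclide whose half-life matches the Earth's age retains half of its original amount.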

Many naturally occurring radioisotopes (another 53 or so, for a total of about 339) have half-lives shorter than 700 million years, but they are continually replenished, either as daughter products of the decay of primordial nuclides (for example, radium from uranium) or by ongoing energetic reactions, such as the production of cosmogenic nuclides by present bombardment of Earth by cosmic rays (for example, carbon-14 made from nitrogen).

Some isotopes that are classed as stable (i.e. no radioactivity has been observed for them) are predicted to have extremely long half-lives (sometimes as high as 10¹⁸ years or more). If the predicted half-life falls into an experimentally accessible range, such isotopes have a chance to move from the list of stable nuclides to the radioactive category, once their activity is observed. For example, bismuth-209 and tungsten-180 were formerly classed as stable, but were found to be alpha-active in 2003. However, such nuclides do not change their status as primordial when they are found to be radioactive.

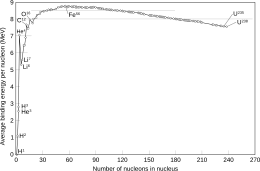

Most stable isotopes on Earth are believed to have been formed in processes of nucleosynthesis, either in the Big Bang or in generations of stars that preceded the formation of the Solar System. However, some stable isotopes also show abundance variations on Earth as a result of decay from long-lived radioactive nuclides. These decay products are termed radiogenic isotopes, in order to distinguish them from the much larger group of 'non-radiogenic' isotopes.

Isotopes per element

Of the known chemical elements, 80 have at least one stable nuclide. These comprise the first 82 elements from hydrogen to lead, with two exceptions, technetium (element 43) and promethium (element 61), which have no stable nuclides. As of December 2016, there were a total of 252 known "stable" nuclides. In this definition, "stable" means a nuclide that has never been observed to decay against the natural background. Thus, these nuclides have half-lives too long to be measured by any means, direct or indirect.

Stable isotopes:

- 1 element (tin) has 10 stable isotopes

- 5 elements have 7 stable isotopes apiece

- 8 elements have 6 stable isotopes apiece

- 10 elements have 5 stable isotopes apiece

- 9 elements have 4 stable isotopes apiece

- 5 elements have 3 stable isotopes apiece

- 16 elements have 2 stable isotopes apiece

- 26 elements have a single stable isotope.

These last 26 are thus called monoisotopic elements. The mean number of stable isotopes for elements which have at least one stable isotope is 252/80 = 3.15.
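
The totals quoted here can be cross-checked against the list of stable nuclides at the end of this article. The sketch below is one way to do so; the per-element counts in `STABLE_COUNTS` were read off that list by hand, and the variable names are illustrative.

```python
from collections import Counter

# Number of stable isotopes per element, tallied from the list of stable
# nuclides at the end of this article (tantalum-180m counted as stable).
STABLE_COUNTS = {
    "H": 2, "He": 2, "Li": 2, "Be": 1, "B": 2, "C": 2, "N": 2, "O": 3, "F": 1, "Ne": 3,
    "Na": 1, "Mg": 3, "Al": 1, "Si": 3, "P": 1, "S": 4, "Cl": 2, "Ar": 3, "K": 2, "Ca": 5,
    "Sc": 1, "Ti": 5, "V": 1, "Cr": 4, "Mn": 1, "Fe": 4, "Co": 1, "Ni": 5, "Cu": 2, "Zn": 5,
    "Ga": 2, "Ge": 4, "As": 1, "Se": 5, "Br": 2, "Kr": 5, "Rb": 1, "Sr": 4, "Y": 1, "Zr": 4,
    "Nb": 1, "Mo": 6, "Ru": 7, "Rh": 1, "Pd": 6, "Ag": 2, "Cd": 6, "In": 1, "Sn": 10, "Sb": 2,
    "Te": 6, "I": 1, "Xe": 7, "Cs": 1, "Ba": 6, "La": 1, "Ce": 4, "Pr": 1, "Nd": 5, "Sm": 5,
    "Eu": 1, "Gd": 6, "Tb": 1, "Dy": 7, "Ho": 1, "Er": 6, "Tm": 1, "Yb": 7, "Lu": 1, "Hf": 5,
    "Ta": 2, "W": 4, "Re": 1, "Os": 6, "Ir": 2, "Pt": 5, "Au": 1, "Hg": 7, "Tl": 2, "Pb": 4,
}

n_elements = len(STABLE_COUNTS)
n_nuclides = sum(STABLE_COUNTS.values())
mean_per_element = n_nuclides / n_elements

# Map "number of stable isotopes" -> "how many elements have that many"
histogram = Counter(STABLE_COUNTS.values())
monoisotopic = [el for el, n in STABLE_COUNTS.items() if n == 1]
richest = max(STABLE_COUNTS, key=STABLE_COUNTS.get)

print(n_elements, n_nuclides, round(mean_per_element, 2))
print(len(monoisotopic), "monoisotopic elements; most isotopes:", richest)
for k in sorted(histogram, reverse=True):
    print(f"{histogram[k]:2d} element(s) with {k} stable isotope(s)")
```

Tallied this way, the list gives 80 elements, 252 nuclides and a mean of 3.15, with 26 monoisotopic elements and tin as the element with the most stable isotopes.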

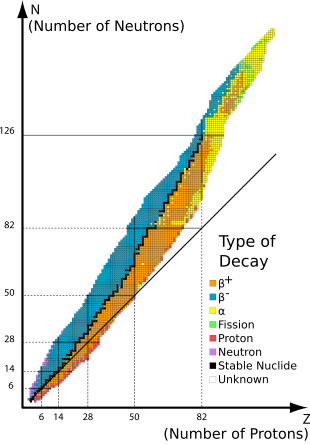

Physical magic numbers and odd and even proton and neutron count

Stability of isotopes is affected by the ratio of protons to neutrons, and also by the presence of certain magic numbers of neutrons or protons, which represent closed and filled quantum shells. These quantum shells correspond to a set of energy levels within the shell model of the nucleus; filled shells, such as the filled shell of 50 protons for tin, confer unusual stability on the nuclide. As in the case of tin, a magic number for Z, the atomic number, tends to increase the number of stable isotopes for the element.

Just as in the case of electrons, which have the lowest energy state when they occur in pairs in a given orbital, nucleons (both protons and neutrons) exhibit a lower energy state when their number is even, rather than odd. This stability tends to prevent beta decay (in two steps) of many even–even nuclides into another even–even nuclide of the same mass number but lower energy (and of course with two more protons and two fewer neutrons), because decay proceeding one step at a time would have to pass through an odd–odd nuclide of higher energy. Such nuclei thus instead undergo double beta decay (or are theorized to do so) with half-lives several orders of magnitude larger than the age of the universe. This makes for a larger number of stable even–even nuclides, which account for 151 of the 252 total. Stable even–even nuclides number as many as three isobars for some mass numbers, and up to seven isotopes for some atomic numbers.

Conversely, of the 252 known stable nuclides, only five have both an odd number of protons and odd number of neutrons: hydrogen-2 (deuterium), lithium-6, boron-10, nitrogen-14, and tantalum-180m. Also, only four naturally occurring, radioactive odd–odd nuclides have a half-life over a billion years: potassium-40, vanadium-50, lanthanum-138, and lutetium-176. Odd–odd primordial nuclides are rare because most odd–odd nuclei are unstable with respect to beta decay, because the decay products are even–even, and are therefore more strongly bound, due to nuclear pairing effects.

Yet another effect of the instability of an odd number of either type of nucleon is that odd-numbered elements tend to have fewer stable isotopes. Of the 26 monoisotopic elements (those with only a single stable isotope), all but one have an odd atomic number, and all but one have an even number of neutrons, the single exception to both rules being beryllium.
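
The even and odd classes discussed in this section depend only on the proton number Z and the neutron number N = A − Z. As a small illustration (the helper `parity_class` and the example table are hypothetical names, not part of any library), the nuclides named above can be classified as follows:

```python
def parity_class(z: int, a: int) -> str:
    """Return the parity class of a nuclide as 'Z parity-N parity'."""
    n = a - z  # neutron number

    def word(k: int) -> str:
        return "even" if k % 2 == 0 else "odd"

    return f"{word(z)}-{word(n)}"


# (atomic number Z, mass number A) for nuclides mentioned in the text
EXAMPLES = {
    "hydrogen-2": (1, 2),
    "lithium-6": (3, 6),
    "boron-10": (5, 10),
    "nitrogen-14": (7, 14),
    "tantalum-180m": (73, 180),
    "potassium-40": (19, 40),
    "beryllium-9": (4, 9),
    "tin-120": (50, 120),
}

for name, (z, a) in EXAMPLES.items():
    print(f"{name:14s} Z={z:<3d} N={a - z:<3d} {parity_class(z, a)}")
```

The five stable odd–odd nuclides and potassium-40 all come out as odd-odd, tin-120 as even-even, and beryllium-9 as even-odd, which is why beryllium is the exception to both rules for monoisotopic elements.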

The end of the stable elements in the periodic table occurs after lead, largely because nuclei with 128 neutrons are extraordinarily unstable and almost immediately shed alpha particles. This also contributes to the very short half-lives of astatine, radon, and francium relative to heavier elements. A similar but much weaker effect appears at 84 neutrons, where a number of isotopes in the lanthanide series undergo alpha decay.

Nuclear isomers, including a "stable" one

The count of 252 known stable nuclides includes tantalum-180m: although its designation as "metastable" implies that it decays, no decay has yet been observed. All "stable" isotopes (stable by observation, not theory) are the ground states of nuclei, with the exception of tantalum-180m, which is a nuclear isomer or excited state. The ground state of this particular nucleus, tantalum-180, is radioactive with a comparatively short half-life of 8 hours; in contrast, the decay of the excited nuclear isomer is extremely strongly forbidden by spin-parity selection rules. Direct observation has shown that the half-life of tantalum-180m against gamma decay must be more than 10¹⁵ years. Other possible modes of tantalum-180m decay (beta decay, electron capture and alpha decay) have also never been observed.

Still-unobserved decay

It is expected that some continual improvement of experimental sensitivity will allow discovery of very mild radioactivity (instability) of some isotopes that are considered to be stable today. For an example of a recent discovery, it was not until 2003 that bismuth-209 (the only primordial isotope of bismuth) was shown to be very mildly radioactive, confirming theoretical predictions from nuclear physics that bismuth-209 would decay very slowly by alpha emission.

Isotopes that are theoretically believed to be unstable but have not been observed to decay are termed observationally stable.

Summary table for numbers of each class of nuclides

This is a summary table from List of nuclides. Note that numbers are not exact and may change slightly in the future, as nuclides are observed to be radioactive, or new half-lives are determined to some precision.

| Type of nuclide by stability class | Number of nuclides in class | Running total of nuclides in all classes to this point | Notes |
|---|---|---|---|
| Theoretically stable to all but proton decay | 90 | 90 | Includes first 40 elements. If protons decay, then there are no stable nuclides. |
| Theoretically stable to alpha decay, beta decay, isomeric transition, and double beta decay but not spontaneous fission, which is possible for "stable" nuclides ≥ niobium-93 | 56 | 146 | (Note that spontaneous fission has never been observed for nuclides with mass number < 230). |
| Energetically unstable to one or more known decay modes, but no decay yet seen. Considered stable until radioactivity confirmed. | 106 | 252 | Total is the observationally stable nuclides. |
| Radioactive primordial nuclides. | 34 | 286 | Includes Bi, Th, U. |
| Radioactive nonprimordial, but naturally occurring on Earth. | ~61 significant | ~347 significant | Cosmogenic nuclides from cosmic rays; daughters of radioactive primordials such as francium, etc. |
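
The running-total column is simply the cumulative sum of the class sizes. A minimal sketch, using abbreviated class labels of our own choosing and treating the approximate last entry (~61) as exact for the purpose of the arithmetic:

```python
from itertools import accumulate

# (abbreviated class label, number of nuclides) in table order
CLASSES = [
    ("stable to all but proton decay", 90),
    ("stable except to spontaneous fission", 56),
    ("energetically unstable, no decay seen", 106),
    ("radioactive primordial", 34),
    ("radioactive nonprimordial, natural", 61),  # approximate in the table
]

running_totals = list(accumulate(count for _, count in CLASSES))

for (label, count), total in zip(CLASSES, running_totals):
    print(f"{label:40s} {count:4d} {total:4d}")
# running_totals == [90, 146, 252, 286, 347]
```

The third running total, 252, is the number of observationally stable nuclides used throughout this article.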

List of stable nuclides

- Hydrogen-1

- Hydrogen-2

- Helium-3

- Helium-4

- no mass number 5

- Lithium-6

- Lithium-7

- no mass number 8

- Beryllium-9

- Boron-10

- Boron-11

- Carbon-12

- Carbon-13

- Nitrogen-14

- Nitrogen-15

- Oxygen-16

- Oxygen-17

- Oxygen-18

- Fluorine-19

- Neon-20

- Neon-21

- Neon-22

- Sodium-23

- Magnesium-24

- Magnesium-25

- Magnesium-26

- Aluminium-27

- Silicon-28

- Silicon-29

- Silicon-30

- Phosphorus-31

- Sulfur-32

- Sulfur-33

- Sulfur-34

- Sulfur-36

- Chlorine-35

- Chlorine-37

- Argon-36 (2E)

- Argon-38

- Argon-40

- Potassium-39

- Potassium-41

- Calcium-40 (2E)*

- Calcium-42

- Calcium-43

- Calcium-44

- Calcium-46 (2B)*

- Scandium-45

- Titanium-46

- Titanium-47

- Titanium-48

- Titanium-49

- Titanium-50

- Vanadium-51

- Chromium-50 (2E)*

- Chromium-52

- Chromium-53

- Chromium-54

- Manganese-55

- Iron-54 (2E)*

- Iron-56

- Iron-57

- Iron-58

- Cobalt-59

- Nickel-58 (2E)*

- Nickel-60

- Nickel-61

- Nickel-62

- Nickel-64

- Copper-63

- Copper-65

- Zinc-64 (2E)*

- Zinc-66

- Zinc-67

- Zinc-68

- Zinc-70 (2B)*

- Gallium-69

- Gallium-71

- Germanium-70

- Germanium-72

- Germanium-73

- Germanium-74

- Arsenic-75

- Selenium-74 (2E)

- Selenium-76

- Selenium-77

- Selenium-78

- Selenium-80 (2B)

- Bromine-79

- Bromine-81

- Krypton-80

- Krypton-82

- Krypton-83

- Krypton-84

- Krypton-86 (2B)

- Rubidium-85

- Strontium-84 (2E)

- Strontium-86

- Strontium-87

- Strontium-88

- Yttrium-89

- Zirconium-90

- Zirconium-91

- Zirconium-92

- Zirconium-94 (2B)*

- Niobium-93

- Molybdenum-92 (2E)*

- Molybdenum-94

- Molybdenum-95

- Molybdenum-96

- Molybdenum-97

- Molybdenum-98 (2B)*

- Technetium - No stable isotopes

- Ruthenium-96 (2E)*

- Ruthenium-98

- Ruthenium-99

- Ruthenium-100

- Ruthenium-101

- Ruthenium-102

- Ruthenium-104 (2B)

- Rhodium-103

- Palladium-102 (2E)

- Palladium-104

- Palladium-105

- Palladium-106

- Palladium-108

- Palladium-110 (2B)*

- Silver-107

- Silver-109

- Cadmium-106 (2E)*

- Cadmium-108 (2E)*

- Cadmium-110

- Cadmium-111

- Cadmium-112

- Cadmium-114 (2B)*

- Indium-113

- Tin-112 (2E)

- Tin-114

- Tin-115

- Tin-116

- Tin-117

- Tin-118

- Tin-119

- Tin-120

- Tin-122 (2B)

- Tin-124 (2B)*

- Antimony-121

- Antimony-123

- Tellurium-120 (2E)*

- Tellurium-122

- Tellurium-123 (E)*

- Tellurium-124

- Tellurium-125

- Tellurium-126

- Iodine-127

- Xenon-126 (2E)

- Xenon-128

- Xenon-129

- Xenon-130

- Xenon-131

- Xenon-132

- Xenon-134 (2B)*

- Caesium-133

- Barium-132 (2E)*

- Barium-134

- Barium-135

- Barium-136

- Barium-137

- Barium-138

- Lanthanum-139

- Cerium-136 (2E)*

- Cerium-138 (2E)*

- Cerium-140

- Cerium-142 (A, 2B)*

- Praseodymium-141

- Neodymium-142

- Neodymium-143

- Neodymium-145 (A)*

- Neodymium-146 (2B)

- no mass number 147

- Neodymium-148 (A, 2B)*

- Promethium - No stable isotopes

- Samarium-144 (2E)

- Samarium-149 (A)*

- Samarium-150

- no mass number 151

- Samarium-152

- Samarium-154 (2B)*

- Europium-153

- Gadolinium-154

- Gadolinium-155

- Gadolinium-156

- Gadolinium-157

- Gadolinium-158

- Gadolinium-160 (2B)*

- Terbium-159

- Dysprosium-156 (A, 2E)*

- Dysprosium-158

- Dysprosium-160

- Dysprosium-161

- Dysprosium-162

- Dysprosium-163

- Dysprosium-164

- Holmium-165

- Erbium-162 (A, 2E)*

- Erbium-164

- Erbium-166

- Erbium-167

- Erbium-168

- Erbium-170 (A, 2B)*

- Thulium-169

- Ytterbium-168 (A, 2E)*

- Ytterbium-170

- Ytterbium-171

- Ytterbium-172

- Ytterbium-173

- Ytterbium-174

- Ytterbium-176 (A, 2B)*

- Lutetium-175

- Hafnium-176

- Hafnium-177

- Hafnium-178

- Hafnium-179

- Hafnium-180

- Tantalum-180m (A, B, E, IT)* ^

- Tantalum-181

- Tungsten-182 (A)*

- Tungsten-183 (A)*

- Tungsten-184 (A)*

- Tungsten-186 (A, 2B)*

- Rhenium-185

- Osmium-184 (A, 2E)*

- Osmium-187

- Osmium-188

- Osmium-189

- Osmium-190

- Osmium-192 (A, 2B)*

- Iridium-191

- Iridium-193

- Platinum-192 (A)*

- Platinum-194

- Platinum-195

- Platinum-196

- Platinum-198 (A, 2B)*

- Gold-197

- Mercury-196 (A, 2E)*

- Mercury-198

- Mercury-199

- Mercury-200

- Mercury-201

- Mercury-202

- Mercury-204 (2B)

- Thallium-203

- Thallium-205

- Lead-204 (A)*

- Lead-206 (A)

- Lead-207 (A)

- Lead-208 (A)*

- Bismuth ^^ and above – No stable isotopes

- no mass number 209 and above

Abbreviations for predicted unobserved decay:

A for alpha decay, B for beta decay, 2B for double beta decay, E for electron capture, 2E for double electron capture, IT for isomeric transition, SF for spontaneous fission, * for nuclides whose half-lives have a measured lower bound.

^ Tantalum-180m is a "metastable isotope" meaning that it is an excited nuclear isomer of tantalum-180. See isotopes of tantalum. However, the half-life of this nuclear isomer is so long that it has never been observed to decay, and it thus occurs as an "observationally nonradioactive" primordial nuclide, as a minor isotope of tantalum. This is the only case of a nuclear isomer which has a half-life so long that it has never been observed to decay. It is thus included in this list.

^^ Bismuth-209 had long been believed to be stable, due to its unusually long half-life of 2.01×10¹⁹ years, which is more than a billion (1000 million) times the age of the universe.
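
The comparison with the age of the universe is a simple ratio. The sketch below assumes an age of about 1.38×10¹⁰ years, a commonly quoted round value that is not stated in this article; the constant names are illustrative.

```python
BI209_HALF_LIFE = 2.01e19  # years, value quoted above
UNIVERSE_AGE = 1.38e10     # years; assumed round value, not from this article

ratio = BI209_HALF_LIFE / UNIVERSE_AGE
print(f"half-life / age of universe = {ratio:.2e}")
```

Under that assumption the ratio is roughly 1.5 billion, consistent with the statement that the half-life exceeds the age of the universe by more than a factor of a billion.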