From Wikipedia, the free encyclopedia

Hemodialysis, also spelled haemodialysis, or simply dialysis, is a process of purifying the blood of a person whose kidneys are not working normally. This type of dialysis achieves the extracorporeal removal of waste products such as creatinine and urea and free water from the blood when the kidneys are in a state of kidney failure. Hemodialysis is one of three renal replacement therapies (the other two being kidney transplant and peritoneal dialysis). An alternative method for extracorporeal separation of blood components such as plasma or cells is apheresis.

Hemodialysis can be an outpatient or inpatient therapy. Routine hemodialysis is conducted in a dialysis outpatient facility, either a purpose-built room in a hospital or a dedicated, stand-alone clinic. Less frequently, hemodialysis is done at home.

Dialysis treatments in a clinic are initiated and managed by specialized staff made up of nurses and technicians; dialysis treatments at home can be self-initiated and managed or done jointly with the assistance of a trained helper, who is usually a family member.

Medical uses

Hemodialysis is the choice of renal replacement therapy for patients who need dialysis acutely, and for many patients as maintenance therapy. It provides excellent, rapid clearance of solutes.

A nephrologist (a medical kidney specialist) decides when hemodialysis is needed and sets the various parameters for a dialysis treatment. These include frequency (how many treatments per week), length of each treatment, and the blood and dialysis solution flow rates, as well as the size of the dialyzer. The composition of the dialysis solution is also sometimes adjusted in terms of its sodium, potassium, and bicarbonate levels. In general, the larger the body size of an individual, the more dialysis they will need. In North America and the UK, 3–4 hour treatments (sometimes up to 5 hours for larger patients) given 3 times a week are typical. Twice-a-week sessions are limited to patients who have substantial residual kidney function. Four sessions per week are often prescribed for larger patients, as well as patients who have trouble with fluid overload. Finally, there is growing interest in short daily home hemodialysis, which consists of 1.5–4 hour sessions given 5–7 times per week, usually at home. There is also interest in nocturnal dialysis, which involves dialyzing a patient, usually at home, for 8–10 hours per night, 3–6 nights per week. Nocturnal in-center dialysis, 3–4 times per week, is also offered at a handful of dialysis units in the United States.
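The schedules above differ mainly in total weekly treatment time. The following is a minimal sketch of that arithmetic, using only the session lengths and frequencies quoted in this section; the dictionary and function names are illustrative, and the ranges are simple products of the quoted bounds rather than clinical prescriptions.

```python
# Weekly treatment time implied by the schedules quoted in this section.
# Each entry: ((min hours, max hours) per session, (min, max) sessions per week).
SCHEDULES = {
    "conventional": ((3.0, 4.0), (3, 3)),
    "short daily": ((1.5, 4.0), (5, 7)),
    "nocturnal": ((8.0, 10.0), (3, 6)),
}


def weekly_hours(hours_range, sessions_range):
    """Return the (minimum, maximum) total treatment hours per week."""
    low = hours_range[0] * sessions_range[0]
    high = hours_range[1] * sessions_range[1]
    return (low, high)


for name, (hours, sessions) in SCHEDULES.items():
    low, high = weekly_hours(hours, sessions)
    print(f"{name}: {low:g} to {high:g} hours per week")
```

Even at the low end of its range, the nocturnal schedule provides at least twice the weekly treatment time of the upper end of the conventional schedule.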

Adverse effects

Disadvantages

- Restricts independence, as people undergoing this procedure cannot travel freely because supplies may not be available

- Requires more resources, such as high-quality water and electricity

- Requires reliable technology, such as dialysis machines

- The procedure is complicated and requires caregivers to have more knowledge

- Requires time to set up and clean dialysis machines, and incurs the expense of machines and associated staff

Complications

Fluid shifts

Hemodialysis often involves fluid removal (through ultrafiltration), because most patients with renal failure pass little or no urine. Side effects caused by removing too much fluid or removing fluid too rapidly include low blood pressure, fatigue, chest pains, leg cramps, nausea, and headaches. These symptoms can occur during the treatment and can persist after treatment; they are sometimes collectively referred to as the dialysis hangover or dialysis washout. The severity of these symptoms is usually proportionate to the amount and speed of fluid removal. However, the impact of a given amount or rate of fluid removal can vary greatly from person to person and day to day. These side effects can be avoided, or their severity lessened, by limiting fluid intake between treatments or by increasing the dose of dialysis, for example by dialyzing more often or longer per treatment than the standard schedule of three times a week, 3–4 hours per treatment.

Access-related

Since hemodialysis requires access to the circulatory system, patients undergoing hemodialysis may expose their circulatory system to microbes, which can lead to bacteremia, an infection affecting the heart valves (endocarditis), or an infection affecting the bones (osteomyelitis). The risk of infection varies depending on the type of access used (see below). Bleeding may also occur; again, the risk varies depending on the type of access used. Infections can be minimized by strictly adhering to infection control best practices.

Venous needle dislodgement

Venous needle dislodgement (VND) is a potentially fatal complication of hemodialysis in which the patient suffers rapid blood loss because the needle comes loose from the venous access point.

Anticoagulation-related

Unfractionated heparin (UFH) is the most commonly used anticoagulant in hemodialysis, as it is generally well tolerated and can be quickly reversed with protamine sulfate. Low-molecular-weight heparin (LMWH) is, however, becoming increasingly popular and is now the norm in western Europe. Compared to UFH, LMWH has the advantage of an easier mode of administration and reduced bleeding, but its effect cannot be easily reversed. Heparin can infrequently cause a low platelet count due to a reaction called heparin-induced thrombocytopenia (HIT). In such patients, alternative anticoagulants may be used. The risk of HIT is lower with LMWH than with UFH. Even though HIT causes a low platelet count, it can paradoxically predispose to thrombosis. In patients at high risk of bleeding, dialysis can be done without anticoagulation.

First-use syndrome

First-use syndrome is a rare but severe anaphylactic reaction to the artificial kidney. Its symptoms include sneezing, wheezing, shortness of breath, back pain, chest pain, or sudden death. It can be caused by residual sterilant in the artificial kidney or by the material of the membrane itself. In recent years, the incidence of first-use syndrome has decreased, due to an increased use of gamma irradiation, steam sterilization, or electron-beam radiation instead of chemical sterilants, and the development of new semipermeable membranes of higher biocompatibility. New methods of processing previously acceptable components of dialysis must always be considered. For example, in 2008, a series of first-use-type reactions, including deaths, occurred due to heparin contaminated during the manufacturing process with oversulfated chondroitin sulfate.

Cardiovascular

Long-term complications of hemodialysis include hemodialysis-associated amyloidosis, neuropathy, and various forms of heart disease. Increasing the frequency and length of treatments has been shown to improve the fluid overload and enlargement of the heart that are commonly seen in such patients. Due to these complications, the prevalence of complementary and alternative medicine use is high among patients undergoing hemodialysis.

Vitamin deficiency

Folate deficiency can occur in some patients having hemodialysis.

Electrolyte imbalances

Although a dialysate fluid, which is a solution containing diluted electrolytes, is employed for the filtration of blood, hemodialysis can cause an electrolyte imbalance. These imbalances can derive from abnormal concentrations of potassium (hypokalemia, hyperkalemia) and sodium (hyponatremia, hypernatremia). These electrolyte imbalances are associated with increased cardiovascular mortality.

Mechanism and technique

The principle of hemodialysis is the same as other methods of dialysis; it involves diffusion of solutes across a semipermeable membrane. Hemodialysis utilizes countercurrent flow, where the dialysate flows in the opposite direction to blood flow in the extracorporeal circuit. Countercurrent flow maintains the concentration gradient across the membrane at a maximum and increases the efficiency of the dialysis.
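The efficiency gain from countercurrent flow can be illustrated with the standard closed-form expressions for an idealized exchanger (purely diffusive transport, no ultrafiltration). This is a sketch under those assumptions, not a model of any particular dialyzer; the function names and the example values of `k0a`, `qb`, and `qd` are illustrative choices, not figures from a cited source.

```python
import math


def clearance_countercurrent(k0a, qb, qd):
    """Diffusive clearance (mL/min) when blood and dialysate flow in opposite directions.

    k0a: mass transfer-area coefficient of the dialyzer (mL/min)
    qb:  blood flow rate (mL/min)
    qd:  dialysate flow rate (mL/min)
    """
    if math.isclose(qb, qd):
        # Limiting form of the expression below when the two flows are equal.
        n = k0a / qb
        return qb * n / (1.0 + n)
    e = math.exp(k0a * (qd - qb) / (qb * qd))
    return qb * (e - 1.0) / (e - qb / qd)


def clearance_cocurrent(k0a, qb, qd):
    """Diffusive clearance (mL/min) when blood and dialysate flow in the same direction."""
    z = qb / qd
    n = k0a / qb
    return qb * (1.0 - math.exp(-n * (1.0 + z))) / (1.0 + z)


# Illustrative values only: the same dialyzer and flows, two flow arrangements.
k0a, qb, qd = 800.0, 300.0, 500.0
print("countercurrent:", round(clearance_countercurrent(k0a, qb, qd), 1), "mL/min")
print("co-current:    ", round(clearance_cocurrent(k0a, qb, qd), 1), "mL/min")
```

In this idealized model the countercurrent arrangement always yields the higher clearance for the same dialyzer and flow rates, because the concentration difference between blood and dialysate is sustained along the whole length of the fibers.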

Fluid removal (ultrafiltration) is achieved by altering the hydrostatic pressure of the dialysate compartment, causing free water and some dissolved solutes to move across the membrane along a created pressure gradient.

The dialysis solution that is used may be a sterilized solution of mineral ions and is called dialysate. Urea and other waste products, including potassium and phosphate, diffuse into the dialysis solution. However, concentrations of sodium and chloride are similar to those of normal plasma to prevent loss. Sodium bicarbonate is added in a higher concentration than plasma to correct blood acidity. A small amount of glucose is also commonly used. The concentration of electrolytes in the dialysate is adjusted depending on the patient's status before the dialysis. If a high concentration of sodium is added to the dialysate, the patient can become thirsty and end up accumulating body fluids, which can lead to heart damage. Conversely, low concentrations of sodium in the dialysate solution have been associated with lower blood pressure and lower intradialytic weight gain, which are markers of improved outcomes. However, the benefits of using a low concentration of sodium have not yet been demonstrated, since these patients can also suffer from cramps, intradialytic hypotension, and low serum sodium, which are associated with a high mortality risk.

Note that this is a different process to the related technique of hemofiltration.

Access

Three primary methods are used to gain access to the blood for hemodialysis: an intravenous catheter, an arteriovenous (AV) fistula, and a synthetic graft. The type of access is influenced by factors such as the expected time course of a patient's renal failure and the condition of their vasculature. Patients may have multiple access procedures, usually because an AV fistula or graft is maturing and a catheter is still being used. The placement of a catheter is usually done under light sedation, while fistulas and grafts require an operation.

Types

There are three types of hemodialysis: conventional hemodialysis, daily hemodialysis, and nocturnal hemodialysis. Below is an adaptation and summary from a brochure of The Ottawa Hospital.

Conventional hemodialysis

Conventional hemodialysis is usually done three times per week, for about three to four hours for each treatment (sometimes five hours for larger patients), during which the patient's blood is drawn out through a tube at a rate of 200–400 mL/min. The tube is connected to a 15, 16, or 17 gauge needle inserted in the dialysis fistula or graft, or connected to one port of a dialysis catheter. The blood is then pumped through the dialyzer, and the processed blood is pumped back into the patient's bloodstream through another tube (connected to a second needle or port). During the procedure, the patient's blood pressure is closely monitored, and if it becomes low, or the patient develops any other signs of low blood volume such as nausea, the dialysis attendant can administer extra fluid through the machine. During the treatment, the patient's entire blood volume (about 5000 cc) circulates through the machine every 15 minutes. During this process, the dialysis patient is exposed to a week's worth of water for the average person.
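The circulation figure above follows from the quoted blood flow rates. A minimal sketch of the arithmetic, using only the 5000 cc blood volume and the 200–400 mL/min flow range stated in this section (the function names are illustrative):

```python
BLOOD_VOLUME_ML = 5000.0  # approximate adult blood volume quoted in the text


def minutes_per_pass(blood_flow_ml_min, blood_volume_ml=BLOOD_VOLUME_ML):
    """Minutes needed to pump one full blood volume through the machine."""
    return blood_volume_ml / blood_flow_ml_min


def passes_per_session(session_hours, blood_flow_ml_min, blood_volume_ml=BLOOD_VOLUME_ML):
    """Number of blood-volume equivalents processed during one treatment."""
    return session_hours * 60.0 * blood_flow_ml_min / blood_volume_ml


for flow in (200.0, 400.0):
    print(f"{flow:g} mL/min: one pass every {minutes_per_pass(flow):g} minutes")

# Flow rate at which one pass takes exactly 15 minutes.
flow_for_15_min = BLOOD_VOLUME_ML / 15.0
print(f"15-minute pass corresponds to about {flow_for_15_min:.0f} mL/min")
```

A 15-minute circulation time therefore corresponds to a flow in the middle of the quoted range, and a four-hour session at that rate processes roughly sixteen blood volumes.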

Daily hemodialysis

Daily hemodialysis is typically used by those patients who do their own dialysis at home. It is less stressful (more gentle) but does require more frequent access. This is simple with catheters, but more problematic with fistulas or grafts. The "buttonhole technique" can be used for fistulas requiring frequent access. Daily hemodialysis is usually done for 2 hours six days a week.

Nocturnal hemodialysis

The procedure of nocturnal hemodialysis is similar to conventional hemodialysis, except that it is performed three to six nights a week and lasts between six and ten hours per session while the patient sleeps.

Equipment

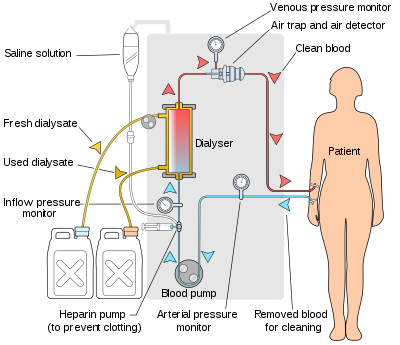

Schematic of a hemodialysis circuit

The hemodialysis machine pumps the patient's blood and the dialysate through the dialyzer. The newest dialysis machines on the market are highly computerized and continuously monitor an array of safety-critical parameters, including blood and dialysate flow rates; dialysis solution conductivity, temperature, and pH; and analysis of the dialysate for evidence of blood leakage or presence of air. Any reading that is out of normal range triggers an audible alarm to alert the patient-care technician who is monitoring the patient. Manufacturers of dialysis machines include companies such as Nipro, Fresenius, Gambro, Baxter, B. Braun, NxStage and Bellco.
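The monitoring behavior described above amounts to comparing each reading against an allowed range. The following is a minimal sketch of that idea only; it is not how any manufacturer's machine is implemented. Apart from the 200–400 mL/min blood flow range quoted elsewhere in this article, every limit below is a hypothetical placeholder chosen for illustration, not a clinical setting, and all names are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Placeholder limits for illustration only (NOT clinical values),
# except blood flow, which uses the range quoted in this article.
LIMITS = {
    "blood_flow_ml_min": Limit(200.0, 400.0),
    "dialysate_temp_c": Limit(35.0, 38.0),              # hypothetical
    "dialysate_conductivity_ms_cm": Limit(13.0, 15.0),  # hypothetical
    "dialysate_ph": Limit(6.9, 7.6),                    # hypothetical
}


def out_of_range(readings, limits=LIMITS):
    """Return the names of monitored readings that fall outside their limits."""
    alarms = []
    for name, value in readings.items():
        limit = limits.get(name)
        if limit is None:
            continue  # parameter is not monitored in this sketch
        if not (limit.low <= value <= limit.high):
            alarms.append(name)
    return alarms


sample = {
    "blood_flow_ml_min": 320.0,
    "dialysate_temp_c": 36.8,
    "dialysate_conductivity_ms_cm": 15.9,
    "dialysate_ph": 7.3,
}
for name in out_of_range(sample):
    print(f"ALARM: {name} out of range ({sample[name]})")
```

A real machine would also handle sensor faults, alarm priorities, and automatic responses, none of which are modeled here.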

Water system

An extensive water purification system is absolutely critical for hemodialysis. Since dialysis patients are exposed to vast quantities of water, which is mixed with dialysate concentrate to form the dialysate, even trace mineral contaminants or bacterial endotoxins can filter into the patient's blood. Because the damaged kidneys cannot perform their intended function of removing impurities, ions introduced into the bloodstream via water can build up to hazardous levels, causing numerous symptoms or death. Aluminum, chloramine, fluoride, copper, and zinc, as well as bacterial fragments and endotoxins, have all caused problems in this regard.

For this reason, water used in hemodialysis is carefully purified before use. Initially, it is filtered and temperature-adjusted, and its pH is corrected by adding an acid or base. Chemical buffers such as bicarbonate and lactate can alternatively be added to regulate the pH of the dialysate. Both buffers can stabilise the pH of the solution at a physiological level with no negative impacts on the patient. There is some evidence of a reduction in the incidence of heart and blood problems and high blood pressure events when using bicarbonate as the pH buffer compared to lactate. However, mortality rates with the two buffers do not show a significant difference.

The water is then softened. Next, the water is run through a tank containing activated charcoal to adsorb organic contaminants. Primary purification is then done by forcing water through a membrane with very tiny pores, a so-called reverse osmosis membrane. This lets the water pass, but holds back even very small solutes such as electrolytes. Final removal of leftover electrolytes is done by passing the water through a tank with ion-exchange resins, which remove any leftover anions or cations and replace them with hydroxide and hydrogen ions, respectively, leaving ultrapure water.

Even this degree of water purification may be insufficient. The trend lately is to pass this final purified water (after mixing with dialysate concentrate) through a dialyzer membrane. This provides another layer of protection by removing impurities, especially those of bacterial origin, that may have accumulated in the water after its passage through the original water purification system.

Once purified water is mixed with dialysate concentrate, its conductivity increases, since water that contains charged ions conducts electricity. During dialysis, the conductivity of dialysis solution is continuously monitored to ensure that the water and dialysate concentrate are being mixed in the proper proportions. Both excessively concentrated dialysis solution and excessively dilute solution can cause severe clinical problems.

Dialyzer

The dialyzer is the piece of equipment that actually filters the blood. Almost all dialyzers in use today are of the hollow-fiber variety. A cylindrical bundle of hollow fibers, whose walls are composed of semi-permeable membrane, is anchored at each end into potting compound (a sort of glue). This assembly is then put into a clear plastic cylindrical shell with four openings. One opening or blood port at each end of the cylinder communicates with each end of the bundle of hollow fibers. This forms the "blood compartment" of the dialyzer. Two other ports are cut into the side of the cylinder. These communicate with the space around the hollow fibers, the "dialysate compartment." Blood is pumped via the blood ports through this bundle of very thin capillary-like tubes, and the dialysate is pumped through the space surrounding the fibers. Pressure gradients are applied when necessary to move fluid from the blood to the dialysate compartment.

Membrane and flux

Dialyzer membranes come with different pore sizes. Those with smaller pore sizes are called "low-flux" and those with larger pore sizes are called "high-flux." Some larger molecules, such as beta-2-microglobulin, are not removed at all with low-flux dialyzers; lately, the trend has been to use high-flux dialyzers. However, such dialyzers require newer dialysis machines and high-quality dialysis solution to control the rate of fluid removal properly and to prevent backflow of dialysis solution impurities into the patient through the membrane.

Dialyzer membranes used to be made primarily of cellulose (derived from cotton linter). The surface of such membranes was not very biocompatible, because exposed hydroxyl groups would activate complement in the blood passing by the membrane. Therefore, the basic, "unsubstituted" cellulose membrane was modified. One change was to cover these hydroxyl groups with acetate groups (cellulose acetate); another was to mix in some compounds that would inhibit complement activation at the membrane surface (modified cellulose). The original "unsubstituted cellulose" membranes are no longer in wide use, whereas cellulose acetate and modified cellulose dialyzers are still used. Cellulosic membranes can be made in either low-flux or high-flux configuration, depending on their pore size.

Another group of membranes is made from synthetic materials, using polymers such as polyarylethersulfone, polyamide, polyvinylpyrrolidone, polycarbonate, and polyacrylonitrile. These synthetic membranes activate complement to a lesser degree than unsubstituted cellulose membranes. However, they are in general more hydrophobic, which leads to increased adsorption of proteins to the membrane surface, which in turn can lead to complement system activation. Synthetic membranes can be made in either low- or high-flux configuration, but most are high-flux.

Nanotechnology is being used in some of the most recent high-flux membranes to create a uniform pore size. The goal of high-flux membranes is to pass relatively large molecules such as beta-2-microglobulin (MW 11,600 daltons), but not to pass albumin (MW ~66,400 daltons). Every membrane has pores in a range of sizes. As pore size increases, some high-flux dialyzers begin to let albumin pass out of the blood into the dialysate. This is thought to be undesirable, although one school of thought holds that removing some albumin may be beneficial in terms of removing protein-bound uremic toxins.

Membrane flux and outcome

Whether using a high-flux dialyzer improves patient outcomes is somewhat controversial, but several important studies have suggested that it has clinical benefits. The NIH-funded HEMO trial compared survival and hospitalizations in patients randomized to dialysis with either low-flux or high-flux membranes. Although the difference in the primary outcome (all-cause mortality) did not reach statistical significance in the group randomized to use high-flux membranes, several secondary outcomes were better in the high-flux group. A recent Cochrane analysis concluded that a benefit of membrane choice on outcomes has not yet been demonstrated. A collaborative randomized trial from Europe, the MPO (Membrane Permeabilities Outcomes) study, comparing mortality in patients just starting dialysis using either high-flux or low-flux membranes, found a nonsignificant trend to improved survival in those using high-flux membranes, and a survival benefit in patients with lower serum albumin levels or in diabetics.

Membrane flux and beta-2-microglobulin amyloidosis

High-flux dialysis membranes and/or intermittent on-line hemodiafiltration (IHDF) may also be beneficial in reducing complications of beta-2-microglobulin accumulation. Because beta-2-microglobulin is a large molecule, with a molecular weight of about 11,600 daltons, it does not pass at all through low-flux dialysis membranes. Beta-2-M is removed with high-flux dialysis, but is removed even more efficiently with IHDF. After several years (usually at least 5–7), patients on hemodialysis begin to develop complications from beta-2-M accumulation, including carpal tunnel syndrome, bone cysts, and deposits of this amyloid in joints and other tissues. Beta-2-M amyloidosis can cause very serious complications, including spondyloarthropathy, and often is associated with shoulder joint problems. Observational studies from Europe and Japan have suggested that using high-flux membranes in dialysis mode, or IHDF, reduces beta-2-M complications in comparison to regular dialysis using a low-flux membrane.

Dialyzer size and efficiency

Dialyzers come in many different sizes. A larger dialyzer with a larger membrane area (A) will usually remove more solutes than a smaller dialyzer, especially at high blood flow rates. This also depends on the membrane permeability coefficient K0 for the solute in question. Dialyzer efficiency is therefore usually expressed as the K0A, the product of permeability coefficient and area. Most dialyzers have membrane surface areas of 0.8 to 2.2 square meters, and values of K0A ranging from about 500 to 1500 mL/min. K0A, expressed in mL/min, can be thought of as the maximum clearance of a dialyzer at very high blood and dialysate flow rates.
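Both claims in this paragraph, that a larger K0A matters most at high blood flow and that K0A is the limiting clearance at very high flows, can be checked numerically. The sketch below assumes the standard idealized relation between K0A and diffusive clearance for a countercurrent dialyzer with no ultrafiltration; the 500 mL/min dialysate flow and the function name are assumptions made for illustration, while the K0A values are the ends of the range quoted above.

```python
import math


def clearance(k0a, qb, qd):
    """Idealized countercurrent diffusive clearance (mL/min).

    k0a: mass transfer-area coefficient (mL/min)
    qb:  blood flow rate (mL/min)
    qd:  dialysate flow rate (mL/min)
    """
    if math.isclose(qb, qd):
        n = k0a / qb
        return qb * n / (1.0 + n)
    e = math.exp(k0a * (qd - qb) / (qb * qd))
    return qb * (e - 1.0) / (e - qb / qd)


QD = 500.0  # assumed dialysate flow, mL/min (not stated in the text)

# Extra clearance gained by going from K0A = 500 to K0A = 1500 mL/min.
gain_low_flow = clearance(1500.0, 200.0, QD) - clearance(500.0, 200.0, QD)
gain_high_flow = clearance(1500.0, 400.0, QD) - clearance(500.0, 400.0, QD)
print(f"gain at 200 mL/min blood flow: {gain_low_flow:.0f} mL/min")
print(f"gain at 400 mL/min blood flow: {gain_high_flow:.0f} mL/min")

# With very large (non-physiological) flows, clearance approaches K0A itself.
limit = clearance(1000.0, 1.0e6, 2.0e6)
print(f"clearance at very high flows for K0A = 1000: {limit:.1f} mL/min")
```

At low blood flow the clearance is capped by the blood flow itself, so the larger dialyzer adds little; at high blood flow the membrane becomes the bottleneck and the larger K0A pays off.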

Reuse of dialyzers

The dialyzer may either be discarded after each treatment or be reused. Reuse requires an extensive procedure of high-level disinfection. Reused dialyzers are not shared between patients. There was an initial controversy about whether reusing dialyzers worsened patient outcomes. The consensus today is that reuse of dialyzers, if done carefully and properly, produces similar outcomes to single use of dialyzers.

Dialyzer reuse is a practice that has been around since the invention of the product. It involves cleaning a used dialyzer so that it can be reused multiple times for the same patient. Dialysis clinics reuse dialyzers to reduce the high cost of "single-use" dialysis, which can be extremely expensive and wasteful. Single-use dialyzers are used just once and then thrown out, creating a large amount of biomedical waste and forgoing any cost savings. If done right, dialyzer reuse can be very safe for dialysis patients.

There are two ways of reusing dialyzers: manual and automated. Manual reuse involves cleaning a dialyzer by hand. The dialyzer is semi-disassembled, then flushed repeatedly before being rinsed with water. It is then stored with a liquid disinfectant (PAA) for 18 or more hours until its next use. Although many clinics outside the USA use this method, some clinics are switching toward a more automated, streamlined process as dialysis practice advances. The newer method of automated reuse relies on medical devices that were first introduced in the early 1980s. These devices are beneficial to dialysis clinics that practice reuse, especially large dialysis providers, because they allow for several back-to-back cycles per day. The dialyzer is first pre-cleaned by a technician, then automatically cleaned by machine through a multi-step cycle until it is eventually filled with liquid disinfectant for storage. Although automated reuse is more effective than manual reuse, newer technology has advanced the process further. When reused more than 15 times with current methodology, the dialyzer can lose beta-2-microglobulin (B2m) clearance, middle-molecule clearance, and fiber pore structure integrity, which has the potential to reduce the effectiveness of the patient's dialysis session. As of 2010, more advanced reprocessing technology has been reported to eliminate the manual pre-cleaning step altogether and to regenerate (fully restore) all functions of a dialyzer to levels approximately equivalent to single use for more than 40 cycles.

As medical reimbursement rates continue to fall, many dialysis clinics are continuing to operate effectively with reuse programs, especially since the process is easier and more streamlined than before.

Epidemiology

Hemodialysis was one of the most common procedures performed in U.S. hospitals in 2011, occurring in 909,000 stays (a rate of 29 stays per 10,000 population). This was an increase of 68 percent from 1997, when there were 473,000 stays. It was the fifth most common procedure for patients aged 45–64 years.

History

Many have played a role in developing dialysis as a practical treatment for renal failure, starting with Thomas Graham of Glasgow, who first presented the principles of solute transport across a semipermeable membrane in 1854. The artificial kidney was first developed by Abel, Rowntree, and Turner in 1913; the first hemodialysis in a human being was performed by Haas on February 28, 1924; and the artificial kidney was developed into a clinically useful apparatus by Kolff in 1943–1945. This research showed that life could be prolonged in patients dying of kidney failure.

Willem Kolff was the first to construct a working dialyzer in 1943. The first successfully treated patient was a 67-year-old woman in uremic coma who regained consciousness after 11 hours of hemodialysis with Kolff's dialyzer in 1945. At the time of its creation, Kolff's goal was to provide life support during recovery from acute renal failure. After World War II ended, Kolff donated the five dialyzers he had made to hospitals around the world, including Mount Sinai Hospital, New York. Kolff gave a set of blueprints for his hemodialysis machine to George Thorn at the Peter Bent Brigham Hospital in Boston. This led to the manufacture of the next generation of Kolff's dialyzer, a stainless steel Kolff-Brigham dialysis machine.

According to McKellar (1999), a significant contribution to renal therapies was made by Canadian surgeon Gordon Murray with the assistance of two doctors, an undergraduate chemistry student, and research staff. Murray's work was conducted simultaneously with and independently from that of Kolff. It led to the first successful artificial kidney built in North America in 1945–46, which was successfully used to treat a 26-year-old woman in a uraemic coma in Toronto. The less crude, more compact, second-generation "Murray-Roschlau" dialyser was invented in 1952–53; its designs were stolen by German immigrant Erwin Halstrup and passed off as his own (the "Halstrup–Baumann artificial kidney").

By the 1950s, Willem Kolff's invention of the dialyzer was used for acute renal failure, but it was not seen as a viable treatment for patients with stage 5 chronic kidney disease (CKD). At the time, doctors believed it was impossible for patients to have dialysis indefinitely for two reasons. First, they thought no man-made device could replace the function of kidneys over the long term. In addition, a patient undergoing dialysis suffered from damaged veins and arteries, so that after several treatments, it became difficult to find a vessel to access the patient's blood.

The original Kolff kidney was not very useful clinically, because it did not allow for removal of excess fluid. Swedish professor Nils Alwall encased a modified version of this kidney inside a stainless steel canister, to which a negative pressure could be applied, in this way effecting the first truly practical application of hemodialysis, which was done in 1946 at the University of Lund.

Alwall was also arguably the inventor of the arteriovenous shunt for dialysis. He first reported this in 1948, when he used such an arteriovenous shunt in rabbits. Subsequently, he used such shunts, made of glass, as well as his canister-enclosed dialyzer, to treat 1500 patients in renal failure between 1946 and 1960, as reported to the First International Congress of Nephrology held in Evian in September 1960. Alwall was appointed to a newly created Chair of Nephrology at the University of Lund in 1957. Subsequently, he collaborated with Swedish businessman Holger Crafoord to found Gambro, one of the key companies that would manufacture dialysis equipment in the past 50 years. The early history of dialysis has been reviewed by Stanley Shaldon.

Belding H. Scribner, working with the biomechanical engineer Wayne Quinton, modified the glass shunts used by Alwall by making them from Teflon. Another key improvement was to connect them to a short piece of silicone elastomer tubing. This formed the basis of the so-called Scribner shunt, perhaps more properly called the Quinton-Scribner shunt. After treatment, the circulatory access would be kept open by connecting the two tubes outside the body using a small U-shaped Teflon tube, which would shunt the blood from the tube in the artery back to the tube in the vein.

In 1962, Scribner started the world's first outpatient dialysis facility, the Seattle Artificial Kidney Center, later renamed the Northwest Kidney Centers. Immediately the problem arose of who should be given dialysis, since demand far exceeded the capacity of the six dialysis machines at the center. Scribner decided that he would not make the decision about who would receive dialysis and who would not. Instead, the choices would be made by an anonymous committee, which could be viewed as one of the first bioethics committees.

For a detailed history of successful and unsuccessful attempts at dialysis, including pioneers such as Abel and Rowntree, Haas, and Necheles, see the review by Kjellstrand.