From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Digital_cinema

Digital cinema refers to the adoption of digital technology within the film industry to distribute or project motion pictures, as opposed to the historical use of reels of motion picture film, such as 35 mm film. Whereas film reels have to be shipped to movie theaters, a digital movie can be distributed to cinemas in a number of ways: over the Internet or dedicated satellite links, or by sending hard drives or optical discs such as Blu-ray discs.

Digital movies are projected using a digital video projector instead of a film projector, are shot using digital movie cameras, and are edited using a non-linear editing system (NLE). The NLE is often a video editing application installed on one or more computers, which may be networked to access the original footage from a remote server, to share or gain access to computing resources for rendering the final video, and to allow several editors to work on the same timeline or project.

Alternatively, a digital movie could be a film reel that has been digitized using a motion picture film scanner and then restored, or it could be recorded onto film stock using a film recorder for projection with a traditional film projector.

Digital cinema is distinct from high-definition television and does not necessarily use traditional television or other traditional high-definition video standards, aspect ratios, or frame rates. In digital cinema, resolutions are represented by the horizontal pixel count, usually 2K (2048×1080 or 2.2 megapixels) or 4K (4096×2160 or 8.8 megapixels). The 2K and 4K resolutions used in digital cinema projection are often referred to as DCI 2K and DCI 4K. DCI stands for Digital Cinema Initiatives.
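
The megapixel figures quoted for the two resolutions follow directly from the pixel dimensions. The short Python sketch below reproduces that arithmetic; the function and dictionary names are illustrative only and are not part of any DCI document.

```python
# Pixel dimensions quoted in the text above (width, height).
DCI_CONTAINERS = {
    "DCI 2K": (2048, 1080),
    "DCI 4K": (4096, 2160),
}


def megapixels(width: int, height: int) -> float:
    """Return the pixel count in millions of pixels."""
    return width * height / 1_000_000


for name, (w, h) in DCI_CONTAINERS.items():
    print(f"{name}: {w}x{h} = {megapixels(w, h):.2f} MP, aspect {w / h:.2f}:1")
```

The 4K result is about 8.85 MP, which is why the figure appears both as 8.8 and as 8.85 megapixels depending on rounding.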

As digital-cinema technology improved in the early 2010s, most theaters across the world converted to digital video projection.

History

The transition from film to digital video was preceded by cinema's transition from analog to digital audio, with the release of the Dolby Digital (AC-3) audio coding standard in 1991. Its main basis is the modified discrete cosine transform (MDCT), a lossy audio compression algorithm. It is a modification of the discrete cosine transform (DCT) algorithm, which was first proposed by Nasir Ahmed in 1972 and was originally intended for image compression. The DCT was adapted into the MDCT by J.P. Princen, A.W. Johnson and Alan B. Bradley at the University of Surrey in 1987, and then Dolby Laboratories adapted the MDCT algorithm along with perceptual coding principles to develop the AC-3 audio format for cinema needs. Cinema in the 1990s typically combined analog video with digital audio.

Digital media playback of high-resolution 2K files has at least a 20-year history. Early video data storage units (RAIDs) fed custom frame buffer systems with large memories. In early digital video units, content was usually restricted to several minutes of material. Transfer of content between remote locations was slow and had limited capacity. It was not until the late 1990s that feature-length films could be sent over the "wire" (Internet or dedicated fiber links).

On October 23, 1998, Digital Light Processing (DLP) projector technology was publicly demonstrated with the release of The Last Broadcast, the first feature-length movie shot, edited and distributed digitally. In conjunction with Texas Instruments, the movie was shown in five theaters across the United States (Philadelphia; Portland, Oregon; Minneapolis; Providence; and Orlando).

Foundations

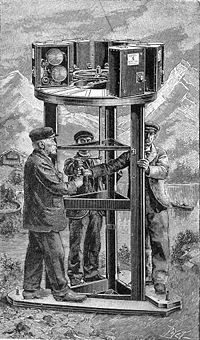

Texas Instruments, DLP Cinema Prototype Projector, Mark V, 2000

In the United States, on June 18, 1999, Texas Instruments' DLP Cinema projector technology was publicly demonstrated on two screens in Los Angeles and New York for the release of Lucasfilm's Star Wars Episode I: The Phantom Menace. In Europe, on February 2, 2000, Texas Instruments' DLP Cinema projector technology was publicly demonstrated, by Philippe Binant, on one screen in Paris for the release of Toy Story 2.

From 1997 to 2000, the JPEG 2000 image compression standard was developed by a Joint Photographic Experts Group (JPEG) committee chaired by Touradj Ebrahimi (later the JPEG president). In contrast to the original 1992 JPEG standard, which is a DCT-based lossy compression format for static digital images, JPEG 2000 is a discrete wavelet transform (DWT) based compression standard that could be adapted for motion imaging video compression with the Motion JPEG 2000 extension. JPEG 2000 technology was later selected as the video coding standard for digital cinema in 2004.

Initiatives

On January 19, 2000, the Society of Motion Picture and Television Engineers, in the United States, initiated the first standards group dedicated to developing digital cinema.

By December 2000, there were 15 digital cinema screens in the United States and Canada, 11 in Western Europe, 4 in Asia, and 1 in South America. Digital Cinema Initiatives (DCI) was formed in March 2002 as a joint project of many motion picture studios (Disney, Fox, MGM, Paramount, Sony Pictures, Universal and Warner Bros.) to develop a system specification for digital cinema.

In April 2004, in cooperation with the American Society of Cinematographers, DCI created standard evaluation material (the ASC/DCI StEM material) for testing of 2K and 4K playback and compression technologies. DCI selected JPEG 2000 as the basis for the compression in the system the same year. Initial tests with JPEG 2000 produced bit rates of around 75–125 Mbit/s for 2K resolution and 100–200 Mbit/s for 4K resolution.

Worldwide deployment

In China, in June 2005, an e-cinema system called "dMs" was established and was used in over 15,000 screens spread across China's 30 provinces. dMs estimated that the system would expand to 40,000 screens in 2009. In 2005 the UK Film Council Digital Screen Network was launched in the UK by Arts Alliance Media, creating a chain of 250 2K digital cinema systems. The roll-out was completed in 2006. This was the first mass roll-out in Europe. AccessIT/Christie Digital also started a roll-out in the United States and Canada. By mid-2006, about 400 theaters were equipped with 2K digital projectors, with the number increasing every month.

In August 2006, the Malayalam digital movie Moonnamathoral, produced by Benzy Martin, was distributed via satellite to cinemas, thus becoming the first Indian digital cinema release. This was done by Emil and Eric Digital Films, a company based in Thrissur, using the end-to-end digital cinema system developed by Singapore-based DG2L Technologies. In January 2007, Guru became the first Indian film mastered in the DCI-compliant JPEG 2000 Interop format and also the first Indian film to be previewed digitally, internationally, at the Elgin Winter Garden in Toronto. This film was digitally mastered at Real Image Media Technologies in India.

In 2007, the UK became home to Europe's first DCI-compliant fully digital multiplex cinemas; Odeon Hatfield and Odeon Surrey Quays (in London), with a total of 18 digital screens, were launched on 9 February 2007. By March 2007, with the release of Disney's Meet the Robinsons, about 600 screens had been equipped with digital projectors. In June 2007, Arts Alliance Media announced the first European commercial digital cinema Virtual Print Fee (VPF) agreements (with 20th Century Fox and Universal Pictures). In March 2009 AMC Theatres announced that it had closed a $315 million deal with Sony to replace all of its movie projectors with 4K digital projectors starting in the second quarter of 2009; it was anticipated that this replacement would be finished by 2012.

AMC Theatres' former corporate headquarters in Kansas City, prior to the company's 2013 move to Leawood, Kansas.

In January 2011, the total number of digital screens worldwide was 36,242, up from 16,339 at the end of 2009, a growth rate of 121.8 percent during the year.

There were 10,083 d-screens in Europe as a whole (28.2 percent of the global figure), 16,522 in the United States and Canada (46.2 percent of the global figure) and 7,703 in Asia (21.6 percent of the global figure). Progress was slower in some territories, particularly Latin America and Africa.

As of 31 March 2015, 38,719 screens (out of a total of 39,789 screens) in the United States had been converted to digital, 3,007 screens in Canada had been converted, and 93,147 screens internationally had been converted. At the end of 2017, virtually all of the world's cinema screens were digital (98%).

Although virtually all movie theaters worldwide have converted their screens to digital, some major motion pictures were still being shot on film as of 2019. For example, Quentin Tarantino released Once Upon a Time in Hollywood in 70 mm and 35 mm in selected theaters across the United States and Canada.

Elements

In addition to the equipment already found in a film-based movie theatre (e.g., a sound reinforcement system, screen, etc.), a DCI-compliant digital cinema requires a digital projector and a powerful computer known as a server. Movies are supplied to the theatre as a digital file called a Digital Cinema Package (DCP). For a typical feature film, this file will be anywhere between 90 GB and 300 GB of data (roughly two to six times the information of a Blu-ray disc) and may arrive as a physical delivery on a conventional computer hard drive or via satellite or fibre-optic broadband Internet. As of 2013, physical deliveries of hard drives were most common in the industry. Promotional trailers arrive on a separate hard drive and range between 200 GB and 400 GB in size. The contents of the hard drive(s) may be encrypted.

Regardless of how the DCP arrives, it first needs to be copied onto the internal hard drives of the server, usually via a USB port, a process known as "ingesting". DCPs can be, and in the case of feature films almost always are, encrypted to prevent illegal copying and piracy. The necessary decryption keys are supplied separately, usually as email attachments, and are then "ingested" via USB. Keys are time-limited and will expire after the end of the period for which the title has been booked. They are also locked to the hardware (server and projector) that is to screen the film, so if the theatre wishes to move the title to another screen or extend the run, a new key must be obtained from the distributor.
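
The two restrictions described above, a limited validity period and a lock to specific hardware, can be illustrated with a small Python sketch. It is only a model of the checks, not the actual key format or any server's implementation; the class, field names and device identifiers are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PlaybackKey:
    """Hypothetical stand-in for a time-limited, hardware-locked key."""
    title: str
    device_id: str          # hypothetical identifier of the licensed equipment
    valid_from: datetime
    valid_until: datetime


def can_play(key: PlaybackKey, device_id: str, now: datetime) -> bool:
    """Allow playback only inside the booking window and on the licensed device."""
    in_window = key.valid_from <= now <= key.valid_until
    return in_window and key.device_id == device_id


key = PlaybackKey(
    title="Example Feature",
    device_id="server-screen-1",
    valid_from=datetime(2024, 3, 1, tzinfo=timezone.utc),
    valid_until=datetime(2024, 3, 15, tzinfo=timezone.utc),
)

during_run = datetime(2024, 3, 7, tzinfo=timezone.utc)
after_run = datetime(2024, 3, 20, tzinfo=timezone.utc)

print(can_play(key, "server-screen-1", during_run))  # licensed screen, in window
print(can_play(key, "server-screen-2", during_run))  # moved to another screen
print(can_play(key, "server-screen-1", after_run))   # run extended without a new key
```

In this model, moving the title to another screen or extending the run both fail the check, which corresponds to the need to request a new key from the distributor.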

Several versions of the same feature can be sent together. The original version (OV) is used as the basis of all the other playback options. Version files (VF) may have a different sound format (e.g. 7.1 as opposed to 5.1 surround sound) or subtitles. 2D and 3D versions are often distributed on the same hard drive.

The playback of the content is controlled by the server using a "playlist". As the name implies, this is a list of all the content that is to be played as part of the performance. The playlist will be created by a member of the theatre's staff using proprietary software that runs on the server. In addition to listing the content to be played, the playlist also includes automation cues that allow the playlist to control the projector, the sound system, auditorium lighting, tab curtains and screen masking (if present), etc. The playlist can be started manually, by clicking the "play" button on the server's monitor screen, or automatically at pre-set times.
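
As a rough illustration of the idea, a playlist can be thought of as an ordered list of content items, each carrying automation cues at offsets from its start. The Python sketch below is an assumption-laden model: server software is proprietary, and the class names, cue names and durations here are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Cue:
    offset_s: float  # seconds after the item starts
    action: str      # hypothetical cue name, e.g. "lights_down"


@dataclass
class PlaylistItem:
    name: str
    duration_s: float
    cues: list[Cue] = field(default_factory=list)


def build_timeline(items: list[PlaylistItem]) -> list[tuple[float, str]]:
    """Flatten a playlist into (time in seconds, event) pairs in playback order."""
    timeline: list[tuple[float, str]] = []
    start = 0.0
    for item in items:
        timeline.append((start, f"play:{item.name}"))
        for cue in item.cues:
            timeline.append((start + cue.offset_s, cue.action))
        start += item.duration_s
    # Stable sort: events at the same time keep their listed order.
    return sorted(timeline, key=lambda event: event[0])


show = [
    PlaylistItem("trailer", 150.0, [Cue(0.0, "lights_half")]),
    PlaylistItem("feature", 7200.0, [
        Cue(0.0, "lights_down"),
        Cue(0.0, "masking_scope"),
        Cue(7000.0, "lights_half"),
    ]),
]

for time_s, event in build_timeline(show):
    print(f"{time_s:8.1f} s  {event}")
```

Starting the show manually or at a pre-set time would then amount to choosing when time zero of this timeline begins.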

Technology and standards

Digital Cinema Initiatives

Digital Cinema Initiatives (DCI), a joint venture of the six major studios, published the first version (V1.0) of a system specification for digital cinema in July 2005. The main declared objectives of the specification were to define a digital cinema system that would "present a theatrical experience that is better than what one could achieve now with a traditional 35mm Answer Print", to provide global standards for interoperability such that any DCI-compliant content could play on any DCI-compliant hardware anywhere in the world, and to provide robust protection for the intellectual property of the content providers.

The DCI specification calls for picture encoding using the ISO/IEC 15444-1 "JPEG2000" (.j2c) standard and use of the CIE XYZ color space at 12 bits per component, encoded with a 2.6 gamma applied at projection. Two levels of resolution for both content and projectors are supported: 2K (2048×1080), or 2.2 MP, at 24 or 48 frames per second, and 4K (4096×2160), or 8.85 MP, at 24 frames per second. The specification ensures that 2K content can play on 4K projectors and vice versa. Smaller resolutions in one direction are also supported (the image is automatically centered). Later versions of the standard added additional playback rates (like 25 fps in SMPTE mode). For the sound component of the content, the specification provides for up to 16 channels of uncompressed audio using the "Broadcast Wave" (.wav) format at 24 bits and 48 kHz or 96 kHz sampling.
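
For a sense of scale, the data rate of the uncompressed audio described above can be worked out from the channel count, bit depth and sampling rate. The Python sketch below does that arithmetic; it ignores any file or wrapper overhead, and the function name is illustrative.

```python
def pcm_bit_rate_mbit_s(channels: int, bit_depth: int, sample_rate_hz: int) -> float:
    """Raw bit rate of uncompressed PCM audio in Mbit/s (no container overhead)."""
    return channels * bit_depth * sample_rate_hz / 1_000_000


# Full 16-channel case at the two sampling rates named in the text.
for rate_hz in (48_000, 96_000):
    mbit_s = pcm_bit_rate_mbit_s(16, 24, rate_hz)
    print(f"16 ch x 24 bit x {rate_hz} Hz = {mbit_s:.3f} Mbit/s")
```

Even with all 16 channels in use, the audio comes to roughly 18 Mbit/s at 48 kHz and 37 Mbit/s at 96 kHz.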

Playback is controlled by an XML-format Composition Playlist, and the picture and sound are wrapped in MXF-compliant files at a maximum data rate of 250 Mbit/s. Details about encryption, key management, and logging are all discussed in the specification, as are the minimum specifications for the projectors employed, including the color gamut, the contrast ratio and the brightness of the image. While much of the specification codifies work that had already been ongoing in the Society of Motion Picture and Television Engineers (SMPTE), the specification is important in establishing a content owner framework for the distribution and security of first-release motion-picture content.

National Association of Theatre Owners

In addition to DCI's work, the National Association of Theatre Owners (NATO) released its Digital Cinema System Requirements. The document addresses the requirements of digital cinema systems from the operational needs of the exhibitor, focusing on areas not addressed by DCI, including access for the visually impaired and hearing impaired, workflow inside the cinema, and equipment interoperability. In particular, NATO's document details requirements for the Theatre Management System (TMS), the governing software for digital cinema systems within a theatre complex, and provides direction for the development of security key management systems. As with DCI's document, NATO's document is also important to the SMPTE standards effort.

E-Cinema

The Society of Motion Picture and Television Engineers (SMPTE) began work on standards for digital cinema in 2000. It was clear by that point in time that HDTV did not provide a sufficient technological basis for the foundation of digital cinema playback. In Europe, India and Japan, however, there is still a significant presence of HDTV for theatrical presentations. Agreements within the ISO standards body have led to these non-compliant systems being referred to as Electronic Cinema Systems (E-Cinema).

Projectors for digital cinema

Only three manufacturers make DCI-approved digital cinema projectors: Barco, Christie and NEC. All of them use the Digital Light Processing (DLP) technology developed by Texas Instruments (TI); Sony, which formerly made cinema projectors, used its own SXRD technology instead. D-Cinema projectors are similar in principle to digital projectors used in industry, education, and domestic home cinemas, but differ in two important respects. First, projectors must conform to the strict performance requirements of the DCI specification. Second, projectors must incorporate anti-piracy devices intended to enforce copyright compliance such as licensing limits. For these reasons all projectors intended to be sold to theaters for screening current release movies must be approved by the DCI before being put on sale. They now pass through a process called CTP (compliance test plan). Because feature films in digital form are encrypted and the decryption keys (Key Delivery Messages, or KDMs) are locked to the serial number of the server used (linking to both the projector serial number and server is planned in the future), a system will allow playback of a protected feature only with the required KDM.

DLP Cinema

Three manufacturers have licensed the DLP Cinema technology developed by Texas Instruments (TI): Christie Digital Systems, Barco, and NEC. While NEC is a relative newcomer to Digital Cinema, Christie is the main player in the U.S. and Barco takes the lead in Europe and Asia. Initially DCI-compliant DLP projectors were available in 2K only, but from early 2012, when TI's 4K DLP chip went into full production, DLP projectors have been available in both 2K and 4K versions. Manufacturers of DLP-based cinema projectors can now also offer 4K upgrades to some of the more recent 2K models. Early DLP Cinema projectors, which were deployed primarily in the United States, used limited 1280×1024 resolution or the equivalent of 1.3 MP (megapixels). Digital Projection Incorporated (DPI) designed and sold a few DLP Cinema units (is8-2K) when TI's 2K technology debuted but then abandoned the D-Cinema market while continuing to offer DLP-based projectors for non-cinema purposes. Although based on the same 2K TI "light engine" as those of the major players, they are so rare as to be virtually unknown in the industry. They are still widely used for pre-show advertising but not usually for feature presentations.

TI's technology is based on the use of digital micromirror devices (DMDs). These are MEMS devices that are manufactured from silicon using similar technology to that of computer chips. The surface of these devices is covered by a very large number of microscopic mirrors, one for each pixel, so a 2K device has about 2.2 million mirrors and a 4K device about 8.8 million. Each mirror vibrates several thousand times a second between two positions: in one, light from the projector's lamp is reflected towards the screen; in the other, away from it. The proportion of the time the mirror is in each position varies according to the required brightness of each pixel. Three DMD devices are used, one for each of the primary colors. Light from the lamp, usually a xenon arc lamp similar to those used in film projectors with a power between 1 kW and 7 kW, is split by colored filters into red, green and blue beams which are directed at the appropriate DMD. The 'forward' reflected beam from the three DMDs is then re-combined and focused by the lens onto the cinema screen.
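
The relationship between pixel brightness and the share of time a mirror spends reflecting light towards the screen can be sketched as a simple duty-cycle calculation. The Python example below is a simplified model under stated assumptions: it treats brightness as a linear light level at 12 bits and assumes the on-time is directly proportional to it. Real projectors use their own mirror-switching sequences, which are not described in the text.

```python
def mirror_on_fraction(level: int, bit_depth: int = 12) -> float:
    """Fraction of the time a mirror faces the screen for a linear light level.

    Assumes on-time is directly proportional to the level (a simplification).
    """
    max_level = (1 << bit_depth) - 1
    if not 0 <= level <= max_level:
        raise ValueError(f"level must be between 0 and {max_level}")
    return level / max_level


def on_time_per_frame_ms(level: int, frame_rate: float = 24.0, bit_depth: int = 12) -> float:
    """Total time per frame, in milliseconds, spent in the 'on' position."""
    frame_ms = 1000.0 / frame_rate
    return mirror_on_fraction(level, bit_depth) * frame_ms


for level in (0, 1024, 2048, 4095):
    print(f"level {level:4d}: on {mirror_on_fraction(level):6.1%} of the time, "
          f"{on_time_per_frame_ms(level):5.2f} ms per 24 fps frame")
```

Because the on-time within each frame is split across many short flips, the eye integrates the flashes into a steady brightness rather than seeing flicker.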

Sony SXRD

Alone amongst the manufacturers of DCI-compliant cinema projectors, Sony decided to develop its own technology rather than use TI's DLP technology. SXRD (Silicon X-tal (Crystal) Reflective Display) projectors have only ever been manufactured in 4K form and, until the launch of the 4K DLP chip by TI, Sony SXRD projectors were the only 4K DCI-compatible projectors on the market. Unlike DLP projectors, however, SXRD projectors do not present the left and right eye images of stereoscopic movies sequentially; instead they use half the available area on the SXRD chip for each eye image. Thus during stereoscopic presentations the SXRD projector functions as a sub-2K projector; the same applies to HFR 3D content.

However, Sony decided in late April 2020 that it would no longer manufacture digital cinema projectors.

Stereo 3D images

In late 2005, interest in digital 3-D stereoscopic projection led to a new willingness on the part of theaters to co-operate in installing 2K stereo installations to show Disney's Chicken Little in 3-D. Six more digital 3-D movies were released in 2006 and 2007 (including Beowulf, Monster House and Meet the Robinsons). The technology uses a single digital projector fitted with either a polarizing filter (for use with polarized glasses and silver screens), a filter wheel or an emitter for LCD glasses. RealD uses a "ZScreen" for polarisation and MasterImage uses a filter wheel that changes the polarization of the projector's light output several times per second to alternate quickly between the left-eye and right-eye views. Another system that uses a filter wheel is Dolby 3D. The wheel changes the wavelengths of the colours being displayed, and tinted glasses filter these changes so that the wrong wavelengths cannot enter each eye. XpanD makes use of an external emitter that sends a signal to the 3D glasses to block each eye from seeing the image intended for the other.

Laser

RGB laser projection produces the purest BT.2020 colors and the brightest images.

LED screen for digital cinema

In Asia, on July 13, 2017, an LED screen for digital cinema developed by Samsung Electronics was publicly demonstrated on one screen at Lotte Cinema World Tower in Seoul. The first installation in Europe was at the Arena Sihlcity Cinema in Zürich. These displays do not use a projector; instead they use a MicroLED video wall, and can offer higher contrast ratios, higher resolutions, and overall improvements in image quality. MicroLED allows for the elimination of display bezels, creating the illusion of a single large screen. This is possible due to the large amount of spacing in between pixels in MicroLED displays. Sony already sells MicroLED displays as a replacement for conventional cinema screens.

Effect on distribution

Digital distribution of movies has the potential to save money for film distributors. To print an 80-minute feature film can cost US$1,500 to $2,500, so making thousands of prints for a wide-release movie can cost millions of dollars. In contrast, at the maximum 250 megabit-per-second data rate (as defined by DCI for digital cinema), a feature-length movie can be stored on an off-the-shelf 300 GB hard drive for $50, and a broad release of 4,000 'digital prints' might cost $200,000. In addition, hard drives can be returned to distributors for reuse. With several hundred movies distributed every year, the industry saves billions of dollars. The digital-cinema roll-out was stalled by the slow pace at which exhibitors acquired digital projectors, since the savings would be seen not by themselves but by distribution companies. The Virtual Print Fee model was created to address this by passing some of the saving on to the cinemas.
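
The storage and cost figures above can be checked with a few lines of arithmetic. The Python sketch below uses only the numbers quoted in the paragraph; it counts the picture at the maximum data rate and ignores audio, packaging overhead and shipping, so it is an upper-bound illustration rather than a costing model. The function names are illustrative.

```python
MAX_RATE_MBIT_S = 250  # maximum data rate quoted in the text


def size_gb(duration_min: float, rate_mbit_s: float = MAX_RATE_MBIT_S) -> float:
    """Storage needed, in decimal gigabytes, at a constant data rate."""
    bits = rate_mbit_s * 1_000_000 * duration_min * 60
    return bits / 8 / 1_000_000_000


def release_cost(copies: int, unit_cost: float) -> float:
    """Total cost of producing a given number of copies."""
    return copies * unit_cost


print(f"120-minute feature at 250 Mbit/s: {size_gb(120):.0f} GB")
print(f"Running time that fills 300 GB:   {300 / size_gb(1):.0f} minutes")

copies = 4000
print(f"Hard drives at $50 each:      ${release_cost(copies, 50):,.0f}")
print(f"Film prints at $1,500 each:   ${release_cost(copies, 1500):,.0f}")
print(f"Film prints at $2,500 each:   ${release_cost(copies, 2500):,.0f}")
```

On these figures a two-hour feature needs about 225 GB at the maximum rate, and a 4,000-copy release costs $200,000 on hard drives against $6 million to $10 million on film.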

As a consequence of the rapid conversion to digital projection, the number of theatrical releases exhibited on film is dwindling. As of 4 May 2014, 37,711 screens (out of a total of 40,048 screens) in the United States had been converted to digital, 3,013 screens in Canada had been converted, and 79,043 screens internationally had been converted.

Telecommunication

On October 29, 2001, Bernard Pauchon, Alain Lorentz, Raymond Melwig and Philippe Binant carried out and demonstrated the first digital cinema transmission of a feature film by satellite in Europe.

Live broadcasting to cinemas

Digital cinemas can deliver live broadcasts from performances or events. This began with the New York Metropolitan Opera delivering regular live broadcasts into cinemas and has been widely imitated ever since. Leading territories providing the content are the UK, the US, France and Germany. The Royal Opera House, Sydney Opera House, English National Opera and others have found new and returning audiences captivated by the detail offered by a live digital broadcast featuring handheld cameras and cameras on cranes positioned throughout the venue to capture emotion that might be missed in a live venue situation. In addition, these providers all offer additional value during the intervals, e.g. interviews with choreographers and cast members or a backstage tour, which would not be on offer at the live event itself. Other live events in this field include live theatre from NT Live, Branagh Live, Royal Shakespeare Company and Shakespeare's Globe, as well as the Royal Ballet, Mariinsky Ballet, the Bolshoi Ballet and the Berlin Philharmoniker.

In the last ten years this initial arts offering has also expanded to include live and recorded music events such as Take That Live, One Direction Live and Andre Rieu, and live musicals such as Miss Saigon and a record-breaking Billy Elliot Live In Cinemas. Live sport, documentaries with a live question-and-answer element such as the Oasis documentary, lectures, faith broadcasts, stand-up comedy, museum and gallery exhibitions, and TV specials such as the record-breaking Doctor Who fiftieth anniversary special The Day Of The Doctor have all contributed to creating a valuable revenue stream for cinemas large and small all over the world. Subsequently, live broadcasting, formerly known as Alternative Content, has become known as Event Cinema, and a trade association now exists to that end. Ten years on, the sector has become a sizeable revenue stream in its own right, earning a loyal following amongst fans of the arts, with the content seemingly limited only by the imagination of the producers. Theatre, ballet, sport, exhibitions, TV specials and documentaries are now established forms of Event Cinema. Worldwide estimates put the likely value of the Event Cinema industry at $1bn by 2019.

Event Cinema currently accounts for on average between 1% and 3% of overall box office for cinemas worldwide, but anecdotally it has been reported that some cinemas attribute as much as 25%, 48% and even 51% (the Rio Bio cinema in Stockholm) of their overall box office to it. It is envisaged that Event Cinema will ultimately account for around 5% of the overall box office globally. Event Cinema saw six worldwide records set and broken between 2013 and 2015, with notable successes including Doctor Who ($10.2m in three days at the box office, although the event was also broadcast on terrestrial TV simultaneously), Pompeii Live by the British Museum, Billy Elliot, Andre Rieu, One Direction, and Richard III by the Royal Shakespeare Company.

Event Cinema is defined more by the frequency of events than by the content itself. Event Cinema events typically appear in cinemas during traditionally quieter times in the cinema week, such as the Monday-Thursday daytime/evening slot, and are characterised by the One Night Only release, followed by one or possibly more 'Encore' releases a few days or weeks later if the event is successful and sold out. On occasion more successful events have returned to cinemas some months or even years later, as in the case of NT Live, where the audience loyalty and company branding are so strong that the content owner can be assured of a good showing at the box office.

Pros and cons

Pros

One advantage of creating sets and locations digitally, especially at a time of growing film series and sequels, is that virtual sets, once computer generated and stored, can be easily revived for future films.

Because digital film images are stored as data files on hard disk or flash memory, different versions of an edit can be tried by altering a few settings on the editing console, with the structure being composed virtually in the computer's memory. A broad choice of effects can be sampled simply and rapidly, without the physical constraints posed by traditional cut-and-stick editing.

Digital cinema allows national cinemas to construct films specific to their cultures in ways that the more constricting configurations and economics of customary film-making prevented. Low-cost cameras and computer-based editing software have gradually enabled films to be produced for minimal cost. The ability of digital cameras to allow film-makers to shoot limitless footage without wasting pricey celluloid has transformed film production in some Third World countries.

From the consumer's perspective, digital prints don't deteriorate with the number of showings. Unlike celluloid film, there is no projection mechanism or manual handling to add scratches or other physically generated artefacts. Provincial cinemas that would have received old prints can give consumers the same cinematographic experience (all other things being equal) as those attending the premiere.

The use of NLEs in movies allows for edits and cuts to be made non-destructively, without actually discarding any footage.

Cons

A number of high-profile film directors, including Christopher Nolan, Paul Thomas Anderson, David O. Russell and Quentin Tarantino, have publicly criticized digital cinema and advocated the use of film and film prints. Most famously, Tarantino has suggested he may retire because, though he can still shoot on film, the rapid conversion to digital means he cannot project from 35 mm prints in the majority of American cinemas. Steven Spielberg has stated that though digital projection produces a much better image than film if originally shot in digital, it is "inferior" when it has been converted to digital. He attempted at one stage to release Indiana Jones and the Kingdom of the Crystal Skull solely on film. Paul Thomas Anderson was able to create 70 mm film prints for his film The Master.

Film critic Roger Ebert criticized the use of DCPs after a film festival screening of Brian De Palma's film Passion at the New York Film Festival was cancelled because of a lockup caused by the coding system.

The theoretical resolution of 35 mm film is greater than that of 2K digital cinema. 2K resolution (2048×1080) is also only slightly greater than that of consumer 1080p HD (1920×1080).

However, since digital post-production techniques became the standard in the early 2000s, the majority of movies, whether photographed digitally or on 35 mm film, have been mastered and edited at 2K resolution. Moreover, 4K post-production was becoming more common as of 2013. As projectors are replaced with 4K models, the difference in resolution between digital and 35 mm film is somewhat reduced.

Digital cinema servers use far greater bandwidth than domestic "HD", allowing for a difference in quality (e.g., Blu-ray uses 4:2:0 colour encoding at a maximum data rate of 48 Mbit/s, whereas DCI D-Cinema uses 4:4:4 at 250 Mbit/s for 2D/3D and 500 Mbit/s for HFR 3D). Each frame has greater detail.
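
To make the bandwidth comparison concrete, the maximum data rates can be converted into an average budget per frame. The Python sketch below assumes 24 frames per second for both formats and uses the maximum rates as if they were sustained, so the results are upper bounds rather than typical values; the names are illustrative.

```python
FRAME_RATE = 24  # frames per second; assumed for both formats in this sketch

# Maximum data rates quoted in the text, in Mbit/s.
MAX_RATE_MBIT_S = {"Blu-ray": 48, "DCI D-Cinema": 250}

# Samples stored per pixel: 4:4:4 keeps full-resolution chroma (1 luma + 2 chroma),
# while 4:2:0 keeps chroma at quarter resolution (1 luma + 2 x 0.25 chroma).
SAMPLES_PER_PIXEL = {"4:2:0": 1.5, "4:4:4": 3.0}


def megabytes_per_frame(rate_mbit_s: float, fps: float = FRAME_RATE) -> float:
    """Average data budget per frame, in megabytes, at a constant bit rate."""
    return rate_mbit_s / fps / 8


for name, rate in MAX_RATE_MBIT_S.items():
    print(f"{name}: up to {megabytes_per_frame(rate):.2f} MB per frame")

ratio = MAX_RATE_MBIT_S["DCI D-Cinema"] / MAX_RATE_MBIT_S["Blu-ray"]
print(f"Data-rate ratio: {ratio:.1f}x")
print(f"Colour samples per pixel, 4:4:4 vs 4:2:0: "
      f"{SAMPLES_PER_PIXEL['4:4:4'] / SAMPLES_PER_PIXEL['4:2:0']:.0f}x")
```

Under these assumptions a digital cinema frame can use about five times the data of a Blu-ray frame while also carrying twice as many colour samples per pixel.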

Owing to the smaller dynamic range of digital cameras, correcting poor digital exposures is more difficult than correcting poor film exposures during post-production. A partial solution to this problem is to add complex video-assist technology during the shooting process. However, such technologies are typically available only to high-budget production companies.

Digital cinema's efficiency in storing images has a downside. The speed and ease of modern digital editing processes threaten to give editors and their directors, if not an embarrassment of choice, then at least a confusion of options, potentially making the editing process, with this 'try it and see' philosophy, lengthier rather than shorter.

Because the equipment needed to produce digital feature films can be obtained more easily than celluloid, producers could inundate the market with cheap productions and potentially overshadow the efforts of serious directors. Because of the speed with which they are filmed, these stories sometimes lack essential narrative structure.

The projectors used for celluloid film were largely the same technology as when film was invented over 100 years ago. The evolutions of adding sound and wide screen could largely be accommodated by bolting on sound decoders and changing lenses. This well-proven and well-understood technology had several advantages: (1) a mechanical projector had a life of around 35 years, (2) a mean time between failures (MTBF) of 15 years, and (3) an average repair time of 15 minutes (often done by the projectionist). On the other hand, digital projectors are around 10 times more expensive and have a much shorter life expectancy because the technology is still developing (it has already moved from 2K to 4K), so the pace of obsolescence is higher. The MTBF has not yet been established, but the ability for the projectionist to effect a quick repair is gone.

Costs

Pros

The electronic transfer of digital film, from central servers to servers in cinema projection booths, is an inexpensive way of supplying copies of the newest releases to the vast number of cinema screens demanded by prevailing saturation-release strategies. There is a significant saving on print expenses in such cases: at a minimum cost per print of $1,200–2,000, the cost of celluloid print production is $5–8 million per film. With several thousand releases a year, the probable savings offered by digital distribution and projection are over $1 billion.

The cost savings and ease, together with the ability to store film rather than having to send a print on to the next cinema, allow a larger range of films to be screened and watched by the public, including minority and small-budget films that would not otherwise get such a chance.

Cons

The initial costs for converting theaters to digital are high: $100,000 per screen, on average. Theaters have been reluctant to switch without a cost-sharing arrangement with film distributors. A solution is a temporary Virtual Print Fee system, where the distributor (who saves the money of producing and transporting a film print) pays a fee per copy to help finance the digital systems of the theaters. A theater can purchase a film projector for as little as $10,000, though projectors intended for commercial cinemas cost two to three times that. To this must be added the cost of a long-play system, which also costs around $10,000, making a total of around $30,000–$40,000, from which the theater could expect an average life of 30–40 years. By contrast, a digital cinema playback system (including server, media block, and projector) can cost two to three times as much, and would have a greater risk of component failure and obsolescence. (In Britain the cost of an entry-level projector including server, installation, etc., would be £31,000 [$50,000].)

Archiving digital masters has also turned out to be both tricky and costly. In a 2007 study, the Academy of Motion Picture Arts and Sciences

found the cost of long-term storage of 4K digital masters to be

"enormously higher—up to 11 times that of the cost of storing film

masters." This is because of the limited or uncertain lifespan of

digital storage: No current digital medium—be it optical disc, magnetic hard drive

or digital tape—can reliably store a motion picture for as long as a

hundred years or more (something that film—properly stored and

handled—does very well).

The short history of digital storage media has been one of innovation and, therefore, of obsolescence. Archived digital content must be periodically migrated from obsolete physical media to up-to-date media.

The expense of digital image capture is not necessarily less than the capture of images onto film; indeed, it is sometimes greater.