| Names | |||

|---|---|---|---|

| Preferred IUPAC name

Formic acid | |||

| Systematic IUPAC name

Methanoic acid | |||

| Other names

Carbonous acid; Formylic acid; Hydrogen carboxylic acid; Hydroxy(oxo)methane; Metacarbonoic acid; Oxocarbinic acid; Oxomethanol

| |||

| Identifiers | |||

3D model (JSmol)

|

|||

| 1209246 | |||

| ChEBI | |||

| ChEMBL | |||

| ChemSpider | |||

| DrugBank | |||

| ECHA InfoCard | 100.000.527 | ||

| EC Number |

| ||

| E number | E236 (preservatives) | ||

| 1008 | |||

| KEGG | |||

PubChem CID

|

|||

| RTECS number |

| ||

| UNII | |||

CompTox Dashboard (EPA)

|

|||

| Properties | |

|---|---|

| Chemical formula | CH2O2 |

| Molar mass | 46.025 g·mol−1 |

| Appearance | Colorless fuming liquid |

| Odor | Pungent, penetrating |

| Density | 1.220 g/mL |

| Melting point | 8.4 °C (47.1 °F; 281.5 K) |

| Boiling point | 100.8 °C (213.4 °F; 373.9 K) |

| Solubility in water | Miscible |

| Solubility | Miscible with ether, acetone, ethyl acetate, glycerol, methanol, ethanol; partially soluble in benzene, toluene, xylenes |

| log P | −0.54 |

| Vapor pressure | 35 mmHg (20 °C) |

| Acidity (pKa) | 3.745 |

| Conjugate base | Formate |

| −19.90·10−6 cm3/mol | |

| Refractive index (nD) | 1.3714 (20 °C) |

| Viscosity | 1.57 cP at 268 °C |

| Structure | |

| Planar | |

| Dipole moment | 1.41 D (gas) |

| Thermochemistry | |

| Std molar entropy (S⦵298) | 131.8 J/(mol·K) |

| Std enthalpy of formation (ΔfH⦵298) | −425.0 kJ/mol |

| Std enthalpy of combustion (ΔcH⦵298) | −254.6 kJ/mol |

| Pharmacology | |

| QP53AG01 (WHO) | |

| Hazards | |

| Occupational safety and health (OHS/OSH): | |

| Main hazards | Corrosive; irritant; sensitizer |

| GHS labelling: | |

| |

| Danger | |

| H314 | |

| P260, P264, P280, P301+P330+P331, P303+P361+P353, P304+P340, P305+P351+P338, P310, P321, P363, P405, P501 | |

| NFPA 704 (fire diamond) | |

| Flash point | 69 °C (156 °F; 342 K) |

| 601 °C (1,114 °F; 874 K) | |

| Explosive limits | 14–34%; 18–57% (90% solution) |

| Lethal dose or concentration (LD, LC): | |

| LD50 (median dose) | 700 mg/kg (mouse, oral), 1100 mg/kg (rat, oral), 4000 mg/kg (dog, oral) |

| LC50 (median concentration) | 7853 ppm (rat, 15 min), 3246 ppm (mouse, 15 min) |

| NIOSH (US health exposure limits): | |

| PEL (Permissible) | TWA 5 ppm (9 mg/m3) |

| REL (Recommended) | TWA 5 ppm (9 mg/m3) |

| IDLH (Immediate danger) | 30 ppm |

| Safety data sheet (SDS) | MSDS from JT Baker |

| Related compounds | |

| Related carboxylic acids | Acetic acid; Propionic acid |

| Related compounds | Formaldehyde; Methanol |

| Supplementary data page | |

| Formic acid (data page) | |

Except where otherwise noted, data are given for materials in their standard state (at 25 °C [77 °F], 100 kPa). | |

Formic acid, systematically named methanoic acid, is the simplest carboxylic acid, and has the chemical formula HCOOH. It is an important intermediate in chemical synthesis and occurs naturally, most notably in some ants. The word "formic" comes from the Latin word for ant, formica, referring to its early isolation by the distillation of ant bodies. Esters, salts, and the anion derived from formic acid are called formates. Industrially, formic acid is produced from methanol.

Properties

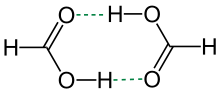

Formic acid is a colorless liquid having a pungent, penetrating odor at room temperature, comparable to the related acetic acid. It is miscible with water and most polar organic solvents, and is somewhat soluble in hydrocarbons. In hydrocarbons and in the vapor phase, it consists of hydrogen-bonded dimers rather than individual molecules. Owing to its tendency to hydrogen-bond, gaseous formic acid does not obey the ideal gas law. Solid formic acid, which can exist in either of two polymorphs, consists of an effectively endless network of hydrogen-bonded formic acid molecules. Formic acid forms a high-boiling azeotrope with water (22.4%). Liquid formic acid tends to supercool.

Natural occurrence

In nature, formic acid is found in most ants and in stingless bees of the genus Oxytrigona. The wood ants from the genus Formica can spray formic acid on their prey or to defend the nest. The puss moth caterpillar (Cerura vinula) will spray it as well when threatened by predators. It is also found in the trichomes of stinging nettle (Urtica dioica). Formic acid is a naturally occurring component of the atmosphere primarily due to forest emissions.

Production

In 2009, the worldwide capacity for producing formic acid was 720 thousand tonnes (1.6 billion pounds) per year, roughly equally divided between Europe (350 thousand tonnes or 770 million pounds, mainly in Germany) and Asia (370 thousand tonnes or 820 million pounds, mainly in China), while production was below 1 thousand tonnes or 2.2 million pounds per year in all other continents. It is commercially available in solutions of various concentrations between 85 and 99% (w/w). As of 2009, the largest producers were BASF, Eastman Chemical Company, LC Industrial, and Feicheng Acid Chemicals, with the largest production facilities in Ludwigshafen (200 thousand tonnes or 440 million pounds per year, BASF, Germany), Oulu (105 thousand tonnes or 230 million pounds, Eastman, Finland), Nakhon Pathom (n/a, LC Industrial), and Feicheng (100 thousand tonnes or 220 million pounds, Feicheng, China). In 2010, prices ranged from around €650/tonne (equivalent to around $800/tonne) in Western Europe to $1250/tonne in the United States.

From methyl formate and formamide

When methanol and carbon monoxide are combined in the presence of a strong base, the result is methyl formate, according to the chemical equation:

- CH3OH + CO → HCO2CH3

In industry, this reaction is performed in the liquid phase at elevated pressure. Typical reaction conditions are 80 °C and 40 atm. The most widely used base is sodium methoxide. Hydrolysis of the methyl formate produces formic acid:

- HCO2CH3 + H2O → HCOOH + CH3OH

Efficient hydrolysis of methyl formate requires a large excess of water. Some routes proceed indirectly by first treating the methyl formate with ammonia to give formamide, which is then hydrolyzed with sulfuric acid:

- HCO2CH3 + NH3 → HC(O)NH2 + CH3OH

- 2 HC(O)NH2 + 2H2O + H2SO4 → 2HCO2H + (NH4)2SO4

A disadvantage of this approach is the need to dispose of the ammonium sulfate byproduct. This problem has led some manufacturers to develop energy-efficient methods of separating formic acid from the excess water used in direct hydrolysis. In one of these processes, used by BASF, the formic acid is removed from the water by liquid-liquid extraction with an organic base.
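The scale of the ammonium sulfate problem follows from the stoichiometry of the formamide hydrolysis above, in which one mole of (NH4)2SO4 accompanies every two moles of formic acid. The following is a minimal sketch of that mass balance, assuming complete conversion and using standard atomic masses that are not taken from this article; the function names are illustrative only.

```python
# Standard atomic masses in g/mol (assumed values, not from the article).
ATOMIC_MASS = {"H": 1.008, "C": 12.011, "N": 14.007, "O": 15.999, "S": 32.06}


def molar_mass(composition):
    """Return the molar mass (g/mol) for a dict of element -> atom count."""
    return sum(ATOMIC_MASS[element] * count for element, count in composition.items())


FORMIC_ACID = {"C": 1, "H": 2, "O": 2}                # HCO2H
AMMONIUM_SULFATE = {"N": 2, "H": 8, "S": 1, "O": 4}   # (NH4)2SO4


def sulfate_per_kg_formic_acid():
    """kg of (NH4)2SO4 co-produced per kg of HCO2H.

    Based on 2 HC(O)NH2 + 2 H2O + H2SO4 -> 2 HCO2H + (NH4)2SO4,
    assuming complete conversion (an idealization).
    """
    return molar_mass(AMMONIUM_SULFATE) / (2 * molar_mass(FORMIC_ACID))


if __name__ == "__main__":
    ratio = sulfate_per_kg_formic_acid()
    print(f"{ratio:.2f} kg (NH4)2SO4 per kg HCO2H")
```

Under these assumptions the byproduct outweighs the product, which is consistent with the move toward direct hydrolysis described above.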

Niche and obsolete chemical routes

By-product of acetic acid production

A significant amount of formic acid is produced as a byproduct in the manufacture of other chemicals. At one time, acetic acid was produced on a large scale by oxidation of alkanes, by a process that cogenerates significant formic acid. This oxidative route to acetic acid has declined in importance so that the aforementioned dedicated routes to formic acid have become more important.

Hydrogenation of carbon dioxide

The catalytic hydrogenation of CO2 to formic acid has long been studied. This reaction can be conducted with homogeneous catalysts.

Oxidation of biomass

Formic acid can also be obtained by aqueous catalytic partial oxidation of wet biomass by the OxFA process. A Keggin-type polyoxometalate (H5PV2Mo10O40) is used as the homogeneous catalyst to convert sugars, wood, waste paper, or cyanobacteria to formic acid, with CO2 as the sole byproduct. Yields of up to 53% formic acid can be achieved.

Laboratory methods

In the laboratory, formic acid can be obtained by heating oxalic acid in glycerol, followed by steam distillation. Glycerol acts as a catalyst, as the reaction proceeds through a glyceryl oxalate intermediate; if the reaction mixture is heated to higher temperatures, allyl alcohol results instead. The net reaction for the formation of formic acid is thus:

- C2O4H2 → HCO2H + CO2

Another illustrative method involves the reaction between lead formate and hydrogen sulfide, driven by the formation of lead sulfide.

- Pb(HCOO)2 + H2S → 2HCOOH + PbS

Electrochemical production

It has been reported that formate can be formed by the electrochemical reduction of CO2 (in the form of bicarbonate) at a lead cathode at pH 8.6:

- HCO3− + H2O + 2e− → HCO2− + 2OH−

or

- CO2 + H2O + 2e− → HCO2− + OH−

If the feed is CO2 and oxygen is evolved at the anode, the total reaction is:

- CO2 + OH− → HCO2− + 1/2 O2
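The total reaction for a CO2 feed can be checked by adding the cathode half-reaction to an oxygen-evolving anode half-reaction. The anode reaction written below is an assumption (the usual oxygen evolution reaction in alkaline solution) and is not stated in the text; with it, water, the two electrons, and one hydroxide ion cancel:

```latex
\begin{align*}
\text{cathode:}\quad & \mathrm{CO_2 + H_2O + 2\,e^- \longrightarrow HCO_2^- + OH^-} \\
\text{anode (assumed):}\quad & \mathrm{2\,OH^- \longrightarrow \tfrac{1}{2}\,O_2 + H_2O + 2\,e^-} \\
\text{sum:}\quad & \mathrm{CO_2 + OH^- \longrightarrow HCO_2^- + \tfrac{1}{2}\,O_2}
\end{align*}
```

Both sides of the sum carry a net charge of −1 and contain one carbon, one hydrogen, and three oxygen atoms.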

This has been proposed as a large-scale source of formate by various groups. The formate could be used as feed to modified E. coli bacteria for producing biomass. Natural microbes do exist that can feed on formic acid or formate (see Methylotroph).

Biosynthesis

Formic acid is named after ants, which have high concentrations of the compound in their venom. In ants, formic acid is derived from serine through a 5,10-methenyltetrahydrofolate intermediate. The conjugate base of formic acid, formate, also occurs widely in nature. An assay for formic acid in body fluids, designed for determination of formate after methanol poisoning, is based on the reaction of formate with bacterial formate dehydrogenase.

Artificial photosynthesis

In August 2020 researchers at Cambridge University announced a stand-alone advanced 'photosheet' technology that converts sunlight, carbon dioxide and water into oxygen and formic acid with no other inputs.

Uses

A major use of formic acid is as a preservative and antibacterial agent in livestock feed. In Europe, it is applied on silage, including fresh hay, to promote the fermentation of lactic acid and to suppress the formation of butyric acid; it also allows fermentation to occur quickly, and at a lower temperature, reducing the loss of nutritional value. Formic acid arrests certain decay processes and causes the feed to retain its nutritive value longer, and so it is widely used to preserve winter feed for cattle. In the poultry industry, it is sometimes added to feed to kill E. coli bacteria. Use as a preservative for silage and other animal feed constituted 30% of the global consumption in 2009.

Formic acid is also significantly used in the production of leather, including tanning (23% of the global consumption in 2009), and in dyeing and finishing textiles (9% of the global consumption in 2009) because of its acidic nature. Use as a coagulant in the production of rubber consumed 6% of the global production in 2009.

Formic acid is also used in place of mineral acids for various cleaning products, such as limescale remover and toilet bowl cleaner. Some formate esters are artificial flavorings and perfumes.

Beekeepers use formic acid as a miticide against the tracheal mite (Acarapis woodi) and the Varroa destructor and Varroa jacobsoni mites.

Formic acid application has been reported to be an effective treatment for warts.

Formic acid can be used as a fuel, either directly in formic acid fuel cells or indirectly in hydrogen fuel cells.

It is possible to use formic acid as an intermediary to produce isobutanol from CO2 using microbes.

Formic acid has a potential application in soldering. Because it can reduce oxide layers, formic acid gas can be blasted at an oxide surface to increase solder wettability.

Formic acid is often used as a component of mobile phase in reversed-phase high-performance liquid chromatography (RP-HPLC) analysis and separation techniques for the separation of hydrophobic macromolecules, such as peptides, proteins and more complex structures including intact viruses. Especially when paired with mass spectrometry detection, formic acid offers several advantages over the more traditionally used phosphoric acid.

Chemical reactions

Formic acid is about ten times stronger than acetic acid. It is used as a volatile pH modifier in HPLC and capillary electrophoresis.
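The "about ten times" figure can be reproduced from the pKa values. The sketch below uses the pKa of 3.745 listed in the properties table and an assumed literature value of 4.756 for acetic acid, which is not given in this article. It also estimates the pH of a dilute solution, treating the acid as an ideal monoprotic weak acid and ignoring activity effects and the autoionization of water.

```python
import math

PKA_FORMIC = 3.745  # from the properties table of this article
PKA_ACETIC = 4.756  # assumed literature value for acetic acid at 25 °C


def ka_ratio(pka_stronger, pka_weaker):
    """Ratio Ka(stronger) / Ka(weaker), computed from the two pKa values."""
    return 10 ** (pka_weaker - pka_stronger)


def weak_acid_ph(pka, concentration):
    """pH of a monoprotic weak acid at the given molar concentration.

    Solves Ka = x^2 / (c - x) for x = [H+] with the quadratic formula.
    Idealized: activity coefficients and water autoionization are ignored.
    """
    ka = 10 ** (-pka)
    x = (-ka + math.sqrt(ka * ka + 4 * ka * concentration)) / 2
    return -math.log10(x)


if __name__ == "__main__":
    print(f"Ka ratio: {ka_ratio(PKA_FORMIC, PKA_ACETIC):.1f}")
    print(f"pH of 0.1 M formic acid: {weak_acid_ph(PKA_FORMIC, 0.1):.2f}")
    print(f"pH of 0.1 M acetic acid: {weak_acid_ph(PKA_ACETIC, 0.1):.2f}")
```

With these inputs the ratio of acid dissociation constants comes out slightly above ten, and at equal concentration the formic acid solution is about half a pH unit more acidic.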

Formic acid is a source of the formyl group, for example in the formylation of methylaniline to N-methylformanilide in toluene.

In synthetic organic chemistry, formic acid is often used as a source of hydride ion. The Eschweiler-Clarke reaction and the Leuckart-Wallach reaction are examples of this application. It, or more commonly its azeotrope with triethylamine, is also used as a source of hydrogen in transfer hydrogenation.

As mentioned below, formic acid readily decomposes with concentrated sulfuric acid to form carbon monoxide.

- HCO2H + H2SO4 → H2SO4 + H2O + CO

Reactions

Formic acid shares most of the chemical properties of other carboxylic acids. Because of its high acidity, solutions in alcohols form esters spontaneously. Formic acid shares some of the reducing properties of aldehydes, reducing solutions of metal oxides to their respective metal.

Decomposition

Heat and especially acids cause formic acid to decompose to carbon monoxide (CO) and water (dehydration). Treatment of formic acid with sulfuric acid is a convenient laboratory source of CO.

In the presence of platinum, it decomposes with a release of hydrogen and carbon dioxide.

- HCO2H → H2 + CO2

Soluble ruthenium catalysts are also effective. Carbon monoxide free hydrogen has been generated in a very wide pressure range (1–600 bar). Formic acid has been considered as a means of hydrogen storage. The co-product of this decomposition, carbon dioxide, can be rehydrogenated back to formic acid in a second step. Formic acid contains 53 g/L hydrogen at room temperature and atmospheric pressure, which is three and a half times as much as compressed hydrogen gas can attain at 350 bar pressure (14.7 g/L). Pure formic acid is a liquid with a flash point of +69 °C, much higher than that of gasoline (−40 °C) or ethanol (+13 °C).
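The hydrogen content quoted above can be cross-checked from the density and molar mass in the properties table, since the decomposition HCO2H → H2 + CO2 releases one mole of H2 per mole of acid. This is a rough sketch that assumes pure liquid formic acid and complete decomposition; the molar mass of H2 is a standard value not taken from this article.

```python
DENSITY_G_PER_ML = 1.220      # liquid formic acid, from the properties table
MOLAR_MASS_HCOOH = 46.025     # g/mol, from the properties table
MOLAR_MASS_H2 = 2.016         # g/mol, assumed standard value
COMPRESSED_H2_G_PER_L = 14.7  # at 350 bar, figure quoted in the text


def releasable_h2_g_per_l():
    """Grams of H2 obtainable per litre of liquid HCO2H (1 mol H2 per mol acid)."""
    mol_acid_per_l = DENSITY_G_PER_ML * 1000 / MOLAR_MASS_HCOOH
    return mol_acid_per_l * MOLAR_MASS_H2


if __name__ == "__main__":
    h2 = releasable_h2_g_per_l()
    print(f"{h2:.1f} g H2 per litre of formic acid")
    print(f"{h2 / COMPRESSED_H2_G_PER_L:.1f} times compressed H2 at 350 bar")
```

The result agrees with the 53 g/L figure, and the ratio to compressed hydrogen is close to three and a half.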

Addition to alkenes

Formic acid is unique among the carboxylic acids in its ability to participate in addition reactions with alkenes. Formic acid and alkenes readily react to form formate esters. In the presence of certain acids, including sulfuric and hydrofluoric acids, however, a variant of the Koch reaction occurs instead, and formic acid adds to the alkene to produce a larger carboxylic acid.

Formic acid anhydride

An unstable formic anhydride, H(C=O)−O−(C=O)H, can be obtained by dehydration of formic acid with N,N′-dicyclohexylcarbodiimide in ether at low temperature.

History

Some alchemists and naturalists were aware that ant hills give off an acidic vapor as early as the 15th century. The first person to describe the isolation of this substance (by the distillation of large numbers of ants) was the English naturalist John Ray, in 1671. Ants secrete the formic acid for attack and defense purposes. Formic acid was first synthesized from hydrocyanic acid by the French chemist Joseph Gay-Lussac. In 1855, another French chemist, Marcellin Berthelot, developed a synthesis from carbon monoxide similar to the process used today.

Formic acid was long considered a chemical compound of only minor interest in the chemical industry. In the late 1960s, however, significant quantities became available as a byproduct of acetic acid production. It now finds increasing use as a preservative and antibacterial in livestock feed.

Safety

Formic acid has low toxicity (hence its use as a food additive), with an LD50 of 1.8 g/kg (tested orally on mice). The concentrated acid is corrosive to the skin.

Formic acid is readily metabolized and eliminated by the body. Nonetheless, it has specific toxic effects; the formic acid and formaldehyde produced as metabolites of methanol are responsible for the optic nerve damage, causing blindness, seen in methanol poisoning. Some chronic effects of formic acid exposure have been documented. Some experiments on bacterial species have demonstrated it to be a mutagen. Chronic exposure in humans may cause kidney damage. Another possible effect of chronic exposure is development of a skin allergy that manifests upon re-exposure to the chemical.

Concentrated formic acid slowly decomposes to carbon monoxide and water, leading to pressure buildup in the containing vessel. For this reason, 98% formic acid is shipped in plastic bottles with self-venting caps.

The hazards of solutions of formic acid depend on the concentration. The following table lists the Globally Harmonized System of Classification and Labelling of Chemicals for formic acid solutions:

| Concentration (weight percent) | Pictogram | H-Phrases |
|---|---|---|
| 2–10% | | H315 |
| 10–90% | | H313 |
| >90% | | H314 |

Formic acid in 85% concentration is flammable, and diluted formic acid is on the U.S. Food and Drug Administration list of food additives. The principal danger from formic acid is from skin or eye contact with the concentrated liquid or vapors. The U.S. OSHA Permissible Exposure Limit (PEL) of formic acid vapor in the work environment is 5 parts per million (ppm) of air.
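The exposure limit of 5 ppm corresponds to the 9 mg/m3 listed in the hazards table. A minimal sketch of the conversion follows, assuming the common convention of an ideal-gas molar volume of 24.45 L/mol at 25 °C and 1 atm; this convention is an assumption and is not stated in this article.

```python
MOLAR_VOLUME_L = 24.45     # L/mol, ideal gas at 25 °C and 1 atm (assumed convention)
MOLAR_MASS_HCOOH = 46.025  # g/mol, from the properties table


def ppm_to_mg_per_m3(ppm, molar_mass=MOLAR_MASS_HCOOH):
    """Convert a vapor concentration in ppm (by volume) to mg/m3."""
    return ppm * molar_mass / MOLAR_VOLUME_L


if __name__ == "__main__":
    print(f"PEL  5 ppm = {ppm_to_mg_per_m3(5):.1f} mg/m3")
    print(f"IDLH 30 ppm = {ppm_to_mg_per_m3(30):.1f} mg/m3")
```

The 5 ppm limit works out to roughly 9.4 mg/m3, which rounds to the tabulated 9 mg/m3.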