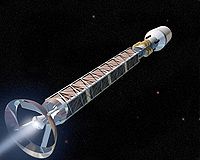

Illustration of an emission of a gamma ray (γ) from an atomic nucleus

NASA guide to electromagnetic spectrum showing overlap of frequency between X-rays and gamma rays

A gamma ray, also known as gamma radiation (symbol γ), is a penetrating form of electromagnetic radiation arising from the radioactive decay of atomic nuclei. It consists of the shortest wavelength electromagnetic waves, typically shorter than those of X-rays. With frequencies above 30 exahertz (3×10¹⁹ Hz), it imparts the highest photon energy. Paul Villard, a French chemist and physicist, discovered gamma radiation in 1900 while studying radiation emitted by radium. In 1903, Ernest Rutherford named this radiation gamma rays based on their relatively strong penetration of matter; in 1900 he had already named two less penetrating types of decay radiation (discovered by Henri Becquerel) alpha rays and beta rays in ascending order of penetrating power.


Gamma rays from radioactive decay are in the energy range from a few kiloelectronvolts (keV) to approximately 8 megaelectronvolts (MeV), corresponding to the typical energy levels in nuclei with reasonably long lifetimes. The energy spectrum of gamma rays can be used to identify the decaying radionuclides using gamma spectroscopy. Very-high-energy gamma rays in the 100–1000 teraelectronvolt (TeV) range have been observed from sources such as the Cygnus X-3 microquasar.

Natural sources of gamma rays originating on Earth are mostly a result of radioactive decay and secondary radiation from atmospheric interactions with cosmic ray particles. However, there are other rare natural sources, such as terrestrial gamma-ray flashes, which produce gamma rays from electron action upon the nucleus. Notable artificial sources of gamma rays include fission, such as that which occurs in nuclear reactors, and high energy physics experiments, such as neutral pion decay and nuclear fusion.

Gamma rays and X-rays are both electromagnetic radiation, and since they overlap in the electromagnetic spectrum, the terminology varies between scientific disciplines. In some fields of physics, they are distinguished by their origin: gamma rays are created by nuclear decay while X-rays originate outside the nucleus. In astrophysics, gamma rays are conventionally defined as having photon energies above 100 keV and are the subject of gamma ray astronomy, while radiation below 100 keV is classified as X-rays and is the subject of X-ray astronomy.

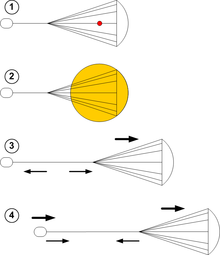

Gamma rays are ionizing radiation and are thus hazardous to life. Due to their high penetration power, they can damage bone marrow and internal organs. Unlike alpha and beta rays, they easily pass through the body and thus pose a formidable radiation protection challenge, requiring shielding made from dense materials such as lead or concrete. On Earth, the magnetosphere protects life from most types of lethal cosmic radiation other than gamma rays.

History of discovery

The first gamma ray source to be discovered was the radioactive decay process called gamma decay. In this type of decay, an excited nucleus emits a gamma ray almost immediately upon formation. Paul Villard, a French chemist and physicist, discovered gamma radiation in 1900, while studying radiation emitted from radium.

Villard knew that his described radiation was more powerful than previously described types of rays from radium, which included beta rays, first noted as "radioactivity" by Henri Becquerel in 1896, and alpha rays, discovered as a less penetrating form of radiation by Rutherford, in 1899. However, Villard did not consider naming them as a different fundamental type. Later, in 1903, Villard's radiation was recognized as being of a type fundamentally different from previously named rays by Ernest Rutherford, who named Villard's rays "gamma rays" by analogy with the beta and alpha rays that Rutherford had differentiated in 1899.

The "rays" emitted by radioactive elements were named in order of their power to penetrate various materials, using the first three letters of the Greek alphabet: alpha rays as the least penetrating, followed by beta rays, followed by gamma rays as the most penetrating. Rutherford also noted that gamma rays were not deflected (or at least, not easily deflected) by a magnetic field, another property making them unlike alpha and beta rays.

Gamma rays were first thought to be particles with mass, like alpha and beta rays. Rutherford initially believed that they might be extremely fast beta particles, but their failure to be deflected by a magnetic field indicated that they had no charge. In 1914, gamma rays were observed to be reflected from crystal surfaces, proving that they were electromagnetic radiation. Rutherford and his co-worker Edward Andrade measured the wavelengths of gamma rays from radium, and found they were similar to X-rays, but with shorter wavelengths and thus, higher frequency. This was eventually recognized as giving them more energy per photon, as soon as the latter term became generally accepted. A gamma decay was then understood to usually emit a gamma photon.

Sources

Natural sources of gamma rays on Earth include gamma decay from naturally occurring radioisotopes such as potassium-40, and also secondary radiation from various atmospheric interactions with cosmic ray particles. Some rare terrestrial natural sources that produce gamma rays that are not of nuclear origin are lightning strikes and terrestrial gamma-ray flashes, which produce high energy emissions from natural high-energy voltages.

Gamma rays are produced by a number of astronomical processes in which very high-energy electrons are produced. Such electrons produce secondary gamma rays by the mechanisms of bremsstrahlung, inverse Compton scattering and synchrotron radiation. A large fraction of such astronomical gamma rays are screened by Earth's atmosphere. Notable artificial sources of gamma rays include fission, such as occurs in nuclear reactors, as well as high energy physics experiments, such as neutral pion decay and nuclear fusion.

A sample of gamma ray-emitting material that is used for irradiating or imaging is known as a gamma source. It is also called a radioactive source, isotope source, or radiation source, though these more general terms also apply to alpha and beta-emitting devices. Gamma sources are usually sealed to prevent radioactive contamination, and transported in heavy shielding.

Radioactive decay (gamma decay)

Gamma rays are produced during gamma decay, which normally occurs after other forms of decay occur, such as alpha or beta decay. A radioactive nucleus can decay by the emission of an α or β particle. The daughter nucleus that results is usually left in an excited state. It can then decay to a lower energy state by emitting a gamma ray photon, in a process called gamma decay.

The emission of a gamma ray from an excited nucleus typically requires only 10⁻¹² seconds. Gamma decay may also follow nuclear reactions such as neutron capture, nuclear fission, or nuclear fusion. Gamma decay is also a mode of relaxation of many excited states of atomic nuclei following other types of radioactive decay, such as beta decay, so long as these states possess the necessary component of nuclear spin.

When high-energy gamma rays, electrons, or protons bombard materials, the excited atoms emit characteristic "secondary" gamma rays, which are products of the creation of excited nuclear states in the bombarded atoms. Such transitions, a form of nuclear gamma fluorescence, form a topic in nuclear physics called gamma spectroscopy. Formation of fluorescent gamma rays is a rapid subtype of radioactive gamma decay.

In certain cases, the excited nuclear state that follows the emission of a beta particle or other type of excitation may be more stable than average, and is termed a metastable excited state if its decay takes (at least) 100 to 1000 times longer than the average 10⁻¹² seconds. Such relatively long-lived excited nuclei are termed nuclear isomers, and their decays are termed isomeric transitions. Such nuclei have half-lives that are more easily measurable, and rare nuclear isomers are able to stay in their excited state for minutes, hours, days, or occasionally far longer, before emitting a gamma ray. The process of isomeric transition is therefore similar to any gamma emission, but differs in that it involves the intermediate metastable excited state(s) of the nuclei. Metastable states are often characterized by high nuclear spin, requiring a change in spin of several units or more with gamma decay, instead of a single unit transition that occurs in only 10⁻¹² seconds. The rate of gamma decay is also slowed when the energy of excitation of the nucleus is small.

An emitted gamma ray from any type of excited state may transfer its energy directly to any electrons, but most probably to one of the K shell electrons of the atom, causing it to be ejected from that atom, in a process generally termed the photoelectric effect (external gamma rays and ultraviolet rays may also cause this effect). The photoelectric effect should not be confused with the internal conversion process, in which a gamma ray photon is not produced as an intermediate particle (rather, a "virtual gamma ray" may be thought to mediate the process).

Decay schemes

Radioactive decay scheme of ⁶⁰Co

Gamma emission spectrum of cobalt-60

One example of gamma ray production due to radionuclide decay is the decay scheme for cobalt-60, as illustrated in the accompanying diagram. First, ⁶⁰Co decays to excited ⁶⁰Ni by beta decay emission of an electron of 0.31 MeV. Then the excited ⁶⁰Ni decays to the ground state (see nuclear shell model) by emitting gamma rays in succession of 1.17 MeV followed by 1.33 MeV. This path is followed 99.88% of the time.
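Written out as reactions, the cobalt-60 path described above would look roughly as follows. This is a reconstruction from the energies quoted in the text; the atomic numbers (27 for cobalt, 28 for nickel) and the electron antineutrino that accompanies beta-minus decay are standard facts supplied here rather than taken from the passage.

$$^{60}_{27}\mathrm{Co} \;\rightarrow\; {}^{60}_{28}\mathrm{Ni}^{*} + e^{-} + \bar{\nu}_{e}$$

$$^{60}_{28}\mathrm{Ni}^{*} \;\rightarrow\; {}^{60}_{28}\mathrm{Ni} + \gamma\,(1.17\ \mathrm{MeV}) + \gamma\,(1.33\ \mathrm{MeV})$$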

Another example is the alpha decay of ²⁴¹Am to form ²³⁷Np, which is followed by gamma emission. In some cases, the gamma emission spectrum of the daughter nucleus is quite simple (e.g. ⁶⁰Co/⁶⁰Ni), while in other cases, such as with ²⁴¹Am/²³⁷Np and ¹⁹²Ir/¹⁹²Pt, the gamma emission spectrum is complex, revealing that a series of nuclear energy levels exist.

Particle physics

Gamma rays are produced in many processes of particle physics. Typically, gamma rays are the products of neutral systems which decay through electromagnetic interactions (rather than a weak or strong interaction). For example, in an electron–positron annihilation, the usual products are two gamma ray photons. If the annihilating electron and positron are at rest, each of the resulting gamma rays has an energy of ~511 keV and frequency of ~1.24×10²⁰ Hz. Similarly, a neutral pion most often decays into two photons. Many other hadrons and massive bosons also decay electromagnetically. High energy physics experiments, such as the Large Hadron Collider, accordingly employ substantial radiation shielding. Because subatomic particles mostly have far shorter wavelengths than atomic nuclei, particle physics gamma rays are generally several orders of magnitude more energetic than nuclear decay gamma rays. Since gamma rays are at the top of the electromagnetic spectrum in terms of energy, all extremely high-energy photons are gamma rays; for example, a photon having the Planck energy would be a gamma ray.
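The link between photon energy and frequency quoted for annihilation photons follows from the Planck relation E = hν. The short Python sketch below is illustrative only; the function names are made up for this example, and the constants are the exact SI-defined values of the Planck constant and the electronvolt.

```python
# Planck relation E = h * nu, with energies expressed in electronvolts.
PLANCK_H_J_S = 6.62607015e-34      # Planck constant in J*s (exact SI value)
JOULES_PER_EV = 1.602176634e-19    # one electronvolt in joules (exact SI value)


def frequency_from_energy_ev(energy_ev: float) -> float:
    """Return the photon frequency in Hz for a photon energy given in eV."""
    return energy_ev * JOULES_PER_EV / PLANCK_H_J_S


def energy_ev_from_frequency(frequency_hz: float) -> float:
    """Return the photon energy in eV for a frequency given in Hz."""
    return frequency_hz * PLANCK_H_J_S / JOULES_PER_EV


if __name__ == "__main__":
    # Annihilation photon at 511 keV
    print(f"511 keV photon: {frequency_from_energy_ev(511e3):.3e} Hz")
    # Conventional lower frequency bound for gamma rays, 30 EHz
    print(f"30 EHz photon: {energy_ev_from_frequency(3e19) / 1e3:.0f} keV")
```

Run as a script, this gives a frequency close to the ~1.24×10²⁰ Hz stated above for a 511 keV photon, and shows that a 30 EHz photon carries roughly 124 keV.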

Other sources

A few gamma rays in astronomy are known to arise from gamma decay (see discussion of SN1987A), but most do not. Photons from astrophysical sources that carry energy in the gamma radiation range are often explicitly called gamma-radiation. In addition to nuclear emissions, they are often produced by sub-atomic particle and particle-photon interactions. Those include electron-positron annihilation, neutral pion decay, bremsstrahlung, inverse Compton scattering, and synchrotron radiation.

Laboratory sources

In October 2017, scientists from various European universities proposed a means for sources of GeV photons using lasers as exciters through a controlled interplay between the cascade and anomalous radiative trapping.

Terrestrial thunderstorms

Thunderstorms can produce a brief pulse of gamma radiation called a terrestrial gamma-ray flash. These gamma rays are thought to be produced by high intensity static electric fields accelerating electrons, which then produce gamma rays by bremsstrahlung as they collide with and are slowed by atoms in the atmosphere. Gamma rays up to 100 MeV can be emitted by terrestrial thunderstorms, and were discovered by space-borne observatories. This raises the possibility of health risks to passengers and crew on aircraft flying in or near thunderclouds.

Solar flares

The most effusive solar flares emit across the entire EM spectrum, including γ-rays. The first confident observation occurred in 1972.

Cosmic rays

Extraterrestrial, high energy gamma rays include the gamma ray background produced when cosmic rays (either high speed electrons or protons) collide with ordinary matter, producing pair-production gamma rays at 511 keV. Alternatively, bremsstrahlung gamma rays are produced at energies of tens of MeV or more when cosmic ray electrons interact with nuclei of sufficiently high atomic number (see gamma ray image of the Moon near the end of this article, for illustration).

Image of entire sky in 100 MeV or greater gamma rays as seen by the EGRET instrument aboard the CGRO spacecraft. Bright spots within the galactic plane are pulsars while those above and below the plane are thought to be quasars.

Pulsars and magnetars

The gamma ray sky (see illustration at right) is dominated by the more common and longer-term production of gamma rays that emanate from pulsars within the Milky Way. Sources from the rest of the sky are mostly quasars.

Pulsars are thought to be neutron stars with magnetic fields that produce focused beams of radiation, and are far less energetic, more common, and much nearer sources (typically seen only in our own galaxy) than are quasars or the rarer gamma-ray burst sources of gamma rays. Pulsars have relatively long-lived magnetic fields that produce focused beams of relativistic speed charged particles, which emit gamma rays (bremsstrahlung) when those strike gas or dust in their nearby medium, and are decelerated. This is a similar mechanism to the production of high-energy photons in megavoltage radiation therapy machines (see bremsstrahlung). Inverse Compton scattering, in which charged particles (usually electrons) impart energy to low-energy photons, boosting them to higher energies, is another possible mechanism of gamma ray production. Neutron stars with a very high magnetic field (magnetars), thought to produce astronomical soft gamma repeaters, are another relatively long-lived star-powered source of gamma radiation.

Quasars and active galaxies

More powerful gamma rays from very distant quasars and closer active galaxies are thought to have a gamma ray production source similar to a particle accelerator. High energy electrons produced by the quasar, and subjected to inverse Compton scattering, synchrotron radiation, or bremsstrahlung, are the likely source of the gamma rays from those objects. It is thought that a supermassive black hole at the center of such galaxies provides the power source that intermittently destroys stars and focuses the resulting charged particles into beams that emerge from their rotational poles. When those beams interact with gas, dust, and lower energy photons they produce X-rays and gamma rays. These sources are known to fluctuate with durations of a few weeks, suggesting their relatively small size (less than a few light-weeks across). Such sources of gamma and X-rays are the most commonly visible high intensity sources outside the Milky Way galaxy. They shine not in bursts (see illustration), but relatively continuously when viewed with gamma ray telescopes. The power of a typical quasar is about 10⁴⁰ watts, a small fraction of which is gamma radiation. Much of the rest is emitted as electromagnetic waves of all frequencies, including radio waves.

A hypernova. Artist's illustration showing the life of a massive star as nuclear fusion converts lighter elements into heavier ones. When fusion no longer generates enough pressure to counteract gravity, the star rapidly collapses to form a black hole. Theoretically, energy may be released during the collapse along the axis of rotation to form a long duration gamma-ray burst.

Gamma-ray bursts

The most intense sources of gamma rays are also the most intense sources of any type of electromagnetic radiation presently known. They are the "long duration burst" sources of gamma rays in astronomy ("long" in this context meaning a few tens of seconds), and they are rare compared with the sources discussed above. By contrast, "short" gamma-ray bursts of two seconds or less, which are not associated with supernovae, are thought to produce gamma rays during the collision of pairs of neutron stars, or a neutron star and a black hole.

The so-called long-duration gamma-ray bursts produce a total energy output of about 10⁴⁴ joules (as much energy as the Sun will produce in its entire lifetime) but in a period of only 20 to 40 seconds. Gamma rays are approximately 50% of the total energy output.
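The comparison with the Sun's lifetime output can be checked with a few lines of arithmetic. The sketch below is only an order-of-magnitude check: the solar luminosity is the IAU nominal value, while the 10-billion-year solar lifetime is an assumed round figure that does not come from the text.

```python
# Order-of-magnitude check of the burst energy quoted in the text.
SOLAR_LUMINOSITY_W = 3.828e26        # IAU nominal solar luminosity
SECONDS_PER_YEAR = 3.156e7           # approximate length of a year in seconds
ASSUMED_SOLAR_LIFETIME_YR = 1.0e10   # assumption: round figure for the Sun's lifetime

BURST_ENERGY_J = 1.0e44              # total burst output, from the text
GAMMA_FRACTION = 0.5                 # share emitted as gamma rays, from the text


def total_energy_j(power_w: float, duration_s: float) -> float:
    """Energy released by a source of constant power over a duration."""
    return power_w * duration_s


def mean_power_w(energy_j: float, duration_s: float) -> float:
    """Average power needed to release an energy within a duration."""
    return energy_j / duration_s


sun_lifetime_energy_j = total_energy_j(
    SOLAR_LUMINOSITY_W, ASSUMED_SOLAR_LIFETIME_YR * SECONDS_PER_YEAR
)

if __name__ == "__main__":
    print(f"Sun over assumed lifetime: {sun_lifetime_energy_j:.2e} J")
    for duration_s in (20.0, 40.0):
        power = mean_power_w(BURST_ENERGY_J, duration_s)
        print(f"Burst lasting {duration_s:.0f} s: mean power {power:.1e} W, "
              f"of which gamma rays {GAMMA_FRACTION * power:.1e} W")
```

Under these assumptions the Sun's lifetime output comes out slightly above 10⁴⁴ J, consistent with the comparison in the text, and the mean burst power is of order 10⁴² W.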

The leading hypotheses for the mechanism of production of these highest-known intensity beams of radiation are inverse Compton scattering and synchrotron radiation from high-energy charged particles. These processes occur as relativistic charged particles leave the region of the event horizon of a newly formed black hole created during a supernova explosion. The beam of particles moving at relativistic speeds is focused for a few tens of seconds by the magnetic field of the exploding hypernova.

The fusion explosion of the hypernova drives the energetics of the process. If the narrowly directed beam happens to be pointed toward the Earth, it shines at gamma ray frequencies with such intensity that it can be detected even at distances of up to 10 billion light years, which is close to the edge of the visible universe.

Properties

Penetration of matter

Due to their penetrating nature, gamma rays require large amounts of shielding mass to reduce them to levels which are not harmful to living cells, in contrast to alpha particles, which can be stopped by paper or skin, and beta particles, which can be shielded by thin aluminium. Gamma rays are best absorbed by materials with high atomic numbers (Z) and high density, which contribute to the total stopping power. Because of this, a lead (high Z) shield is 20–30% better as a gamma shield than an equal mass of a low-Z shielding material, such as aluminium, concrete, water, or soil; lead's major advantage is not in lower weight, but rather its compactness due to its higher density. Protective clothing, goggles and respirators can protect from internal contact with or ingestion of alpha or beta emitting particles, but provide no protection from gamma radiation from external sources.

The higher the energy of the gamma rays, the thicker the shielding required for a given shielding material. Materials for shielding gamma rays are typically measured by the thickness required to reduce the intensity of the gamma rays by one half (the half value layer or HVL). For example, gamma rays that require 1 cm (0.4 inch) of lead to reduce their intensity by 50% will also have their intensity reduced in half by 4.1 cm of granite rock, 6 cm (2.5 inches) of concrete, or 9 cm (3.5 inches) of packed soil. However, the mass of this much concrete or soil is only 20–30% greater than that of lead with the same absorption capability. Depleted uranium is used for shielding in portable gamma ray sources, but here the savings in weight over lead are larger, as a portable source is very small relative to the required shielding, so the shielding resembles a sphere to some extent. The volume of a sphere is dependent on the cube of the radius; so a source with its radius cut in half will have its volume (and weight) reduced by a factor of eight, which will more than compensate for uranium's greater density (as well as reducing bulk).
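The half value layer figures above lend themselves to a quick calculation. The following Python sketch uses the HVL thicknesses quoted in the text; the densities of lead and concrete are typical handbook values assumed for illustration, not figures from the text, and the function names are hypothetical.

```python
import math

# Half value layers from the text, in cm, for the same gamma-ray energy.
HVL_CM = {"lead": 1.0, "granite": 4.1, "concrete": 6.0, "packed soil": 9.0}

# Assumption: typical densities in g/cm^3 (not given in the text).
ASSUMED_DENSITY_G_CM3 = {"lead": 11.34, "concrete": 2.35}


def transmitted_fraction(thickness_cm: float, hvl_cm: float) -> float:
    """Fraction of intensity left after a shield; each HVL halves it."""
    return 0.5 ** (thickness_cm / hvl_cm)


def thickness_for_fraction(fraction: float, hvl_cm: float) -> float:
    """Shield thickness in cm needed to reduce intensity to `fraction`."""
    return hvl_cm * math.log2(1.0 / fraction)


def areal_mass_per_hvl(material: str) -> float:
    """Mass per unit shield area (g/cm^2) of one half value layer."""
    return HVL_CM[material] * ASSUMED_DENSITY_G_CM3[material]


if __name__ == "__main__":
    for material, hvl in HVL_CM.items():
        needed = thickness_for_fraction(0.001, hvl)
        print(f"{material}: {needed:.1f} cm for a thousandfold reduction")
    ratio = areal_mass_per_hvl("concrete") / areal_mass_per_hvl("lead")
    print(f"concrete/lead mass ratio per HVL: {ratio:.2f}")
```

With the assumed densities, one half value layer of concrete weighs roughly a quarter more per unit area than one of lead, in line with the 20–30% figure quoted above.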

In a nuclear power plant, shielding can be provided by steel and concrete in the pressure and particle containment vessel, while water provides a radiation shielding of fuel rods during storage or transport into the reactor core. The loss of water or removal of a "hot" fuel assembly into the air would result in much higher radiation levels than when kept under water.

Matter interaction

The total absorption coefficient of aluminium (atomic number 13) for gamma rays, plotted versus gamma energy, and the contributions by the three effects. As is usual, the photoelectric effect is largest at low energies, Compton scattering dominates at intermediate energies, and pair production dominates at high energies.

The total absorption coefficient of lead (atomic number 82) for gamma rays, plotted versus gamma energy, and the contributions by the three effects. Here, the photoelectric effect dominates at low energy. Above 5 MeV, pair production starts to dominate.

When a gamma ray passes through matter, the probability for absorption is proportional to the thickness of the layer, the density of the material, and the absorption cross section of the material. The total absorption shows an exponential decrease of intensity with distance from the incident surface:

$$I(x) = I_0 \, e^{-\mu x}$$

where I₀ is the intensity at the incident surface, x is the thickness of the material from the incident surface, μ = nσ is the absorption coefficient, measured in cm⁻¹, n the number of atoms per cm³ of the material (atomic density) and σ the absorption cross section in cm².
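As a rough numerical illustration of the exponential attenuation law with μ = nσ, the sketch below computes the atomic density of lead from its density and molar mass and then applies an absorption cross section. The cross section used is a hypothetical value picked only so that the half value layer comes out near 1 cm; it is not a measured figure, and the function names are invented for this example.

```python
import math

AVOGADRO_PER_MOL = 6.02214076e23   # Avogadro constant (exact SI value)


def atomic_density_per_cm3(density_g_cm3: float, molar_mass_g_mol: float) -> float:
    """Number of atoms per cm^3 (n) from mass density and molar mass."""
    return density_g_cm3 * AVOGADRO_PER_MOL / molar_mass_g_mol


def absorption_coefficient_per_cm(n_per_cm3: float, sigma_cm2: float) -> float:
    """Absorption coefficient mu = n * sigma, in cm^-1."""
    return n_per_cm3 * sigma_cm2


def intensity(i0: float, mu_per_cm: float, x_cm: float) -> float:
    """Intensity after thickness x: I(x) = I0 * exp(-mu * x)."""
    return i0 * math.exp(-mu_per_cm * x_cm)


def half_value_layer_cm(mu_per_cm: float) -> float:
    """Thickness that halves the intensity: ln(2) / mu."""
    return math.log(2.0) / mu_per_cm


# Lead: density 11.34 g/cm^3 and molar mass 207.2 g/mol.
n_lead = atomic_density_per_cm3(11.34, 207.2)
# Hypothetical cross section (about 21 barns), chosen for illustration only.
SIGMA_ILLUSTRATIVE_CM2 = 2.1e-23
mu_lead = absorption_coefficient_per_cm(n_lead, SIGMA_ILLUSTRATIVE_CM2)

if __name__ == "__main__":
    print(f"n = {n_lead:.3e} atoms/cm^3, mu = {mu_lead:.3f} cm^-1")
    print(f"half value layer = {half_value_layer_cm(mu_lead):.2f} cm")
    print(f"I(5 cm) / I0 = {intensity(1.0, mu_lead, 5.0):.4f}")
```

The half value layer follows directly from the attenuation law by setting I(x)/I₀ = 1/2, which gives x = ln 2 / μ.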

As it passes through matter, gamma radiation ionizes via three processes:

- The photoelectric effect: This describes the case in which a gamma photon interacts with and transfers its energy to an atomic electron, causing the ejection of that electron from the atom. The kinetic energy of the resulting photoelectron is equal to the energy of the incident gamma photon minus the energy that originally bound the electron to the atom (binding energy). The photoelectric effect is the dominant energy transfer mechanism for X-ray and gamma ray photons with energies below 50 keV (thousand electronvolts), but it is much less important at higher energies.

- Compton scattering: This is an interaction in which an incident gamma photon loses enough energy to an atomic electron to cause its ejection, with the remainder of the original photon's energy emitted as a new, lower energy gamma photon whose emission direction is different from that of the incident gamma photon, hence the term "scattering". The probability of Compton scattering decreases with increasing photon energy. It is thought to be the principal absorption mechanism for gamma rays in the intermediate energy range 100 keV to 10 MeV. It is relatively independent of the atomic number of the absorbing material, which is why very dense materials like lead are only modestly better shields, on a per weight basis, than are less dense materials.

- Pair production: This becomes possible with gamma energies exceeding 1.02 MeV, and becomes important as an absorption mechanism at energies over 5 MeV (see illustration at right, for lead). By interaction with the electric field of a nucleus, the energy of the incident photon is converted into the mass of an electron-positron pair. Any gamma energy in excess of the equivalent rest mass of the two particles (totaling at least 1.02 MeV) appears as the kinetic energy of the pair and in the recoil of the emitting nucleus. At the end of the positron's range, it combines with a free electron, and the two annihilate, and the entire mass of these two is then converted into two gamma photons of at least 0.51 MeV energy each (or higher according to the kinetic energy of the annihilated particles).
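The energy bookkeeping of the three processes listed above can be sketched in a few functions. This is a minimal illustration, not a transport model: the Compton formula used is the standard free-electron expression, which the text does not state explicitly, the small nuclear recoil in pair production is neglected, and the function names are hypothetical.

```python
import math

ELECTRON_REST_ENERGY_MEV = 0.51099895   # electron rest energy, m_e * c^2


def photoelectron_kinetic_energy_mev(photon_mev: float, binding_mev: float) -> float:
    """Photoelectric effect: photon energy minus the electron's binding energy."""
    if photon_mev < binding_mev:
        raise ValueError("photon energy is below the binding energy")
    return photon_mev - binding_mev


def compton_scattered_energy_mev(photon_mev: float, angle_rad: float) -> float:
    """Compton scattering off a free electron at rest (standard formula):
    E' = E / (1 + (E / m_e c^2) * (1 - cos(theta)))."""
    ratio = photon_mev / ELECTRON_REST_ENERGY_MEV
    return photon_mev / (1.0 + ratio * (1.0 - math.cos(angle_rad)))


def pair_production_excess_mev(photon_mev: float):
    """Pair production: energy left over above the 2 * m_e c^2 threshold,
    or None when the photon is below threshold. Nuclear recoil is ignored."""
    threshold = 2.0 * ELECTRON_REST_ENERGY_MEV
    if photon_mev < threshold:
        return None
    return photon_mev - threshold


if __name__ == "__main__":
    # Illustrative case: a 1.33 MeV photon scattered at several angles
    for degrees in (0, 90, 180):
        scattered = compton_scattered_energy_mev(1.33, math.radians(degrees))
        print(f"Compton, {degrees:3d} deg: {scattered:.3f} MeV")
    print("Pair production excess at 5 MeV:", pair_production_excess_mev(5.0))
    print("Pair production excess at 1 MeV:", pair_production_excess_mev(1.0))
```

The threshold of twice the electron rest energy is the 1.02 MeV figure given in the pair production item, and the same rest energy sets the 0.51 MeV minimum for each annihilation photon.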

The secondary electrons (and/or positrons) produced in any of these three processes frequently have enough energy to produce much ionization themselves.

Additionally, gamma rays, particularly high energy ones, can interact with atomic nuclei resulting in ejection of particles in photodisintegration, or in some cases, even nuclear fission (photofission).

Light interaction

High-energy (from 80 GeV to ~10 TeV) gamma rays arriving from far-distant quasars are used to estimate the extragalactic background light in the universe: the highest-energy rays interact more readily with the background light photons and thus the density of the background light may be estimated by analyzing the incoming gamma ray spectra.

Gamma spectroscopy

Gamma spectroscopy is the study of the energetic transitions in atomic nuclei, which are generally associated with the absorption or emission of gamma rays. As in optical spectroscopy (see Franck–Condon effect) the absorption of gamma rays by a nucleus is especially likely (i.e., peaks in a "resonance") when the energy of the gamma ray is the same as that of an energy transition in the nucleus. In the case of gamma rays, such a resonance is seen in the technique of Mössbauer spectroscopy. In the Mössbauer effect the narrow resonance absorption for nuclear gamma absorption can be successfully attained by physically immobilizing atomic nuclei in a crystal. The immobilization of nuclei at both ends of a gamma resonance interaction is required so that no gamma energy is lost to the kinetic energy of recoiling nuclei at either the emitting or absorbing end of a gamma transition. Such loss of energy causes gamma ray resonance absorption to fail. However, when emitted gamma rays carry essentially all of the energy of the atomic nuclear de-excitation that produces them, this energy is also sufficient to excite the same energy state in a second immobilized nucleus of the same type.

Applications

Gamma-ray image of a truck with two stowaways taken with a VACIS (vehicle and container imaging system)

Gamma rays provide information about some of the most energetic phenomena in the universe; however, they are largely absorbed by the Earth's atmosphere. Instruments aboard high-altitude balloons and satellite missions, such as the Fermi Gamma-ray Space Telescope, provide our only view of the universe in gamma rays.

Gamma-induced molecular changes can also be used to alter the properties of semi-precious stones, and are often used to change white topaz into blue topaz.

Non-contact industrial sensors commonly use sources of gamma radiation in refining, mining, chemicals, food, soaps and detergents, and pulp and paper industries, for the measurement of levels, density, and thicknesses. Gamma-ray sensors are also used for measuring the fluid levels in water and oil industries. Typically, these use Co-60 or Cs-137 isotopes as the radiation source.

In the US, gamma ray detectors are beginning to be used as part of the Container Security Initiative (CSI). These machines are advertised to be able to scan 30 containers per hour.

Gamma radiation is often used to kill living organisms, in a process called irradiation. Applications of this include the sterilization of medical equipment (as an alternative to autoclaves or chemical means), the removal of decay-causing bacteria from many foods and the prevention of the sprouting of fruit and vegetables to maintain freshness and flavor.

Despite their cancer-causing properties, gamma rays are also used to treat some types of cancer, since the rays also kill cancer cells. In the procedure called gamma-knife surgery, multiple concentrated beams of gamma rays are directed to the growth in order to kill the cancerous cells. The beams are aimed from different angles to concentrate the radiation on the growth while minimizing damage to surrounding tissues.

Gamma rays are also used for diagnostic purposes in nuclear medicine in imaging techniques. A number of different gamma-emitting radioisotopes are used. For example, in a PET scan a radiolabeled sugar called fluorodeoxyglucose emits positrons that are annihilated by electrons, producing pairs of gamma rays that highlight cancer, as the cancer often has a higher metabolic rate than the surrounding tissues. The most common gamma emitter used in medical applications is the nuclear isomer technetium-99m, which emits gamma rays in the same energy range as diagnostic X-rays. When this radionuclide tracer is administered to a patient, a gamma camera can be used to form an image of the radioisotope's distribution by detecting the gamma radiation emitted (see also SPECT). Depending on which molecule has been labeled with the tracer, such techniques can be employed to diagnose a wide range of conditions (for example, the spread of cancer to the bones via bone scan).

Health effects

Gamma rays cause damage at a cellular level and are penetrating, causing diffuse damage throughout the body. However, they are less ionising than alpha or beta particles, which are less penetrating.

Low levels of gamma rays cause a stochastic health risk, which for radiation dose assessment is defined as the probability of cancer induction and genetic damage. High doses produce deterministic effects, which is the severity of acute tissue damage that is certain to happen. These effects are compared to the physical quantity absorbed dose measured by the unit gray (Gy).

Body response

When gamma radiation breaks DNA molecules, a cell may be able to repair the damaged genetic material, within limits. However, a study by Rothkamm and Löbrich has shown that this repair process works well after high-dose exposure but is much slower in the case of a low-dose exposure.

Risk assessment

The natural outdoor exposure in the United Kingdom ranges from 0.1 to 0.5 µSv/h with significant increase around known nuclear and contaminated sites.

Natural exposure to gamma rays is about 1 to 2 mSv per year, and the average total amount of radiation received in one year per inhabitant in the USA is 3.6 mSv.

There is a small increase in the dose, due to naturally occurring gamma radiation, around small particles of high atomic number materials in the human body caused by the photoelectric effect.

By comparison, the radiation dose from chest radiography (about 0.06 mSv) is a fraction of the annual naturally occurring background radiation dose.[21] A chest CT delivers 5 to 8 mSv. A whole-body PET/CT scan can deliver 14 to 32 mSv depending on the protocol. The dose from fluoroscopy of the stomach is much higher, approximately 50 mSv (14 times the annual background).
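The multiples of background quoted here are simple ratios. As a small check, the sketch below divides each procedure dose from the text by the 3.6 mSv average annual dose quoted earlier for a US inhabitant; the dictionary keys and the function name are illustrative labels only.

```python
# Doses in mSv, taken from the text; ranges are entered as (low, high).
ANNUAL_BACKGROUND_MSV = 3.6   # average yearly total per US inhabitant, from the text

PROCEDURE_DOSE_MSV = {
    "chest radiograph": (0.06, 0.06),
    "chest CT": (5.0, 8.0),
    "whole-body PET/CT": (14.0, 32.0),
    "stomach fluoroscopy": (50.0, 50.0),
}


def multiples_of_background(dose_msv: float) -> float:
    """Express a dose as a multiple of the annual background dose."""
    return dose_msv / ANNUAL_BACKGROUND_MSV


if __name__ == "__main__":
    for name, (low, high) in PROCEDURE_DOSE_MSV.items():
        lo_mult = multiples_of_background(low)
        hi_mult = multiples_of_background(high)
        if low == high:
            print(f"{name}: {lo_mult:.2f} x annual background")
        else:
            print(f"{name}: {lo_mult:.1f} to {hi_mult:.1f} x annual background")
```

For stomach fluoroscopy the ratio is 50 / 3.6, which rounds to the factor of 14 given in the text.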

An acute full-body equivalent single exposure dose of 1 Sv (1000 mSv), or 1 Gy, will cause mild symptoms of acute radiation sickness, such as nausea and vomiting; and a dose of 2.0–3.5 Sv (2.0–3.5 Gy) causes more severe symptoms (i.e. nausea, diarrhea, hair loss, hemorrhaging, and inability to fight infections), and will cause death in a sizable number of cases, about 10% to 35% without medical treatment. A dose of 5 Sv (5 Gy) is considered approximately the LD50 (lethal dose for 50% of exposed population) for an acute exposure to radiation even with standard medical treatment. A dose higher than 5 Sv (5 Gy) brings an increasing chance of death above 50%. Above 7.5–10 Sv (7.5–10 Gy) to the entire body, even extraordinary treatment, such as bone-marrow transplants, will not prevent the death of the individual exposed (see radiation poisoning). (Doses much larger than this may, however, be delivered to selected parts of the body in the course of radiation therapy.)

For low-dose exposure, for example among nuclear workers, who receive an average yearly radiation dose of 19 mSv, the risk of dying from cancer (excluding leukemia) increases by 2 percent. For a dose of 100 mSv, the risk increase is 10 percent. By comparison, the risk of dying from cancer was increased by 32 percent for the survivors of the atomic bombings of Hiroshima and Nagasaki.
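The two low-dose figures above are roughly consistent with a risk increase proportional to dose. The sketch below is only an arithmetic illustration of that proportionality, not an epidemiological model; the function name and the choice to take the slope from the 100 mSv figure are assumptions made for this example.

```python
# Assumption: the risk increase scales in proportion to dose.
# Slope taken from the text's figure of a 10 percent increase at 100 mSv.
SLOPE_PERCENT_PER_MSV = 10.0 / 100.0

def excess_cancer_mortality_percent(dose_msv: float) -> float:
    """Percent increase in cancer mortality risk under the assumed proportional scaling."""
    return dose_msv * SLOPE_PERCENT_PER_MSV

worker_estimate = excess_cancer_mortality_percent(19.0)
print(f"19 mSv -> {worker_estimate:.1f} percent")
```

The proportional estimate for 19 mSv is 1.9 percent, close to the 2 percent quoted for nuclear workers.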

Units of measurement and exposure

The following table shows radiation quantities in SI and non-SI units:

The measure of the ionizing effect of gamma and X-rays in dry air is called the exposure, for which a legacy unit, the röntgen, was used from 1928. This has been replaced by kerma, now mainly used for instrument calibration purposes but not for received dose effect. The effect of gamma and other ionizing radiation on living tissue is more closely related to the amount of energy deposited in tissue than to the ionisation of air, and replacement radiometric units and quantities for radiation protection have been defined and developed from 1953 onwards. These are:

- The gray (Gy) is the SI unit of absorbed dose, which is the amount of radiation energy deposited in the irradiated material. For gamma radiation this is numerically equivalent to equivalent dose measured by the sievert, which indicates the stochastic biological effect of low levels of radiation on human tissue. The radiation weighting conversion factor from absorbed dose to equivalent dose is 1 for gamma, whereas alpha particles have a factor of 20, reflecting their greater ionising effect on tissue.

- The rad is the deprecated CGS unit for absorbed dose and the rem is the deprecated CGS unit of equivalent dose, used mainly in the USA.
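The relationship between these units can be sketched in a few lines. The weighting factors are the two values given above; the conversions 1 Gy = 100 rad and 1 Sv = 100 rem are the standard definitions. Function and variable names are chosen for this illustration only.

```python
# Radiation weighting factors quoted in the text (dimensionless).
RADIATION_WEIGHTING = {"gamma": 1.0, "alpha": 20.0}

def equivalent_dose_sv(absorbed_dose_gy: float, radiation: str) -> float:
    """Equivalent dose in Sv: absorbed dose in Gy times the weighting factor."""
    return absorbed_dose_gy * RADIATION_WEIGHTING[radiation]

def gray_to_rad(dose_gy: float) -> float:
    """Convert absorbed dose from gray to rad (1 Gy = 100 rad)."""
    return dose_gy * 100.0

def sievert_to_rem(dose_sv: float) -> float:
    """Convert equivalent dose from sievert to rem (1 Sv = 100 rem)."""
    return dose_sv * 100.0

gamma_sv = equivalent_dose_sv(0.5, "gamma")  # same number as the absorbed dose
alpha_sv = equivalent_dose_sv(0.5, "alpha")  # 20 times the absorbed dose
print(gamma_sv, alpha_sv, gray_to_rad(0.5), sievert_to_rem(gamma_sv))
```

For the same 0.5 Gy absorbed dose, the equivalent dose is 0.5 Sv for gamma radiation but 10 Sv for alpha particles, which is why the gray and sievert values coincide numerically only for radiation with a weighting factor of 1.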

Distinction from X-rays

In practice, gamma ray energies overlap with the range of X-rays, especially in the higher-frequency region referred to as "hard" X-rays. This depiction follows the older convention of distinguishing by wavelength.

The conventional distinction between X-rays and gamma rays has changed over time. Originally, the electromagnetic radiation emitted by X-ray tubes almost invariably had a longer wavelength than the radiation (gamma rays) emitted by radioactive nuclei. Older literature distinguished between X- and gamma radiation on the basis of wavelength, with radiation shorter than some arbitrary wavelength, such as 10⁻¹¹ m, defined as gamma rays. Since the energy of photons is proportional to their frequency and inversely proportional to wavelength, this past distinction between X-rays and gamma rays can also be thought of in terms of energy, with gamma rays considered to be higher energy electromagnetic radiation than are X-rays.
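The proportionality mentioned above is the Planck relation E = hν = hc/λ. As a minimal sketch, the following converts the example boundary wavelength of 10⁻¹¹ m into a photon energy and frequency, using the exact SI values of the constants; the function names are illustrative.

```python
# Exact SI-defined constants.
PLANCK_J_S = 6.62607015e-34        # Planck constant h, in J·s
LIGHT_SPEED_M_S = 299_792_458.0    # speed of light c, in m/s
JOULE_PER_EV = 1.602176634e-19     # energy of one electronvolt, in J

def photon_energy_kev(wavelength_m: float) -> float:
    """Photon energy in keV from wavelength, using E = h*c/lambda."""
    energy_j = PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m
    return energy_j / JOULE_PER_EV / 1000.0

def photon_frequency_hz(wavelength_m: float) -> float:
    """Photon frequency in Hz from wavelength, using nu = c/lambda."""
    return LIGHT_SPEED_M_S / wavelength_m

boundary_kev = photon_energy_kev(1e-11)
boundary_hz = photon_frequency_hz(1e-11)
print(f"{boundary_kev:.1f} keV, {boundary_hz:.3e} Hz")
```

A wavelength of 10⁻¹¹ m corresponds to a photon energy of about 124 keV and a frequency of about 3×10¹⁹ Hz (30 exahertz), so the older wavelength-based boundary is equivalent to an energy or frequency threshold.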

However, since current artificial sources are now able to duplicate any electromagnetic radiation that originates in the nucleus, as well as far higher energies, the wavelengths characteristic of radioactive gamma ray sources and those of other types now completely overlap. Thus, gamma rays are now usually distinguished by their origin: X-rays are, by definition, emitted by electrons outside the nucleus, while gamma rays are emitted by the nucleus. Exceptions to this convention occur in astronomy, where gamma decay is seen in the afterglow of certain supernovas, but radiation from high-energy processes known to involve radiation sources other than radioactive decay is still classed as gamma radiation.

The Moon as seen by the Compton Gamma Ray Observatory, in gamma rays of greater than 20 MeV. These are produced by cosmic ray bombardment of its surface. The Sun, which has no similar surface of high atomic number to act as target for cosmic rays, cannot usually be seen at all at these energies, which are too high to emerge from primary nuclear reactions, such as solar nuclear fusion (though occasionally the Sun produces gamma rays by cyclotron-type mechanisms, during solar flares). Gamma rays typically have higher energy than X-rays.

The overlap is evident in medicine. Modern high-energy X-rays produced by linear accelerators for megavoltage cancer treatment often have higher energy (4 to 25 MeV) than do most classical gamma rays produced by nuclear gamma decay. One of the most common gamma ray emitting isotopes used in diagnostic nuclear medicine, technetium-99m, produces gamma radiation of the same energy (140 keV) as that produced by diagnostic X-ray machines, but of significantly lower energy than therapeutic photons from linear particle accelerators. In the medical community today, the convention that radiation produced by nuclear decay is the only type referred to as "gamma" radiation is still respected.

Due to this broad overlap in energy ranges, in physics the two types of electromagnetic radiation are now often defined by their origin: X-rays are emitted by electrons (either in orbitals outside of the nucleus, or while being accelerated to produce bremsstrahlung-type radiation), while gamma rays are emitted by the nucleus or by means of other particle decays or annihilation events. There is no lower limit to the energy of photons produced by nuclear reactions, and thus ultraviolet or lower energy photons produced by these processes would also be defined as "gamma rays".

The only naming convention that is still universally respected is the rule that electromagnetic radiation that is known to be of atomic nuclear origin is always referred to as "gamma rays", and never as X-rays. However, in physics and astronomy, the converse convention (that all gamma rays are considered to be of nuclear origin) is frequently violated.

In astronomy, higher energy gamma and X-rays are defined by energy, since the processes that produce them may be uncertain and photon energy, not origin, determines which astronomical detectors are needed.

High-energy photons occur in nature that are known to be produced by processes other than nuclear decay but are still referred to as gamma radiation. An example is "gamma rays" from lightning discharges at 10 to 20 MeV, known to be produced by the bremsstrahlung mechanism. Another example is gamma-ray bursts, now known to be produced by processes too powerful to involve simple collections of atoms undergoing radioactive decay. This is part of the general realization that many gamma rays produced in astronomical processes result not from radioactive decay or particle annihilation, but rather from non-radioactive processes similar to those that produce X-rays.

Although the gamma rays of astronomy often come from non-radioactive events, a few gamma rays in astronomy are specifically known to originate from gamma decay of nuclei (as demonstrated by their spectra and emission half-life). A classic example is that of supernova SN 1987A, which emitted an "afterglow" of gamma-ray photons from the decay of newly made radioactive nickel-56 and cobalt-56. Most gamma rays in astronomy, however, arise by other mechanisms.