| Developer | Microsoft |

|---|---|

| Written in | C, Assembly language (core) C++, C# (user mode applications) (C++ is used in the kernel graphical subsystem) |

| Working state | Current |

| Source model | |

| Initial release | July 27, 1993 (as Windows NT 3.1) |

| Latest release | 23H2 (10.0.22631.2715) (November 14, 2023) [±] |

| Latest preview |

23H2 (10.0.22631.2787) (November 16, 2023) [±]

23H2 (10.0.22635.2771) (November 16, 2023) [±]

10.0.23590.1000 (November 15, 2023) [±]

|

| Update method | Windows Update, Windows Server Update Services |

| Platforms | IA-32, x86-64, ARM and ARM64 (and historically Intel i860, DEC Alpha, Itanium, MIPS, and PowerPC) |

| Kernel type | Hybrid (NT) |

| Influenced by | RSX-11, VAXELN, OpenVMS, MICA, Mach (kernel) MS-DOS, OS/2, Windows 3.1x (userland) |

| Default user interface | Graphical (Windows shell) |

| License | Depending on version, edition or customer choice: Trialware, commercial software, volume licensing, OEM-only, SaaS, S+S |

| Official website | www |

Windows NT is a proprietary graphical operating system produced by Microsoft, the first version of which was released on July 27, 1993. It is a processor-independent, multiprocessing and multi-user operating system.

The first version of Windows NT was Windows NT 3.1 and was produced for workstations and server computers. It was a commercially focused operating system intended to complement consumer versions of Windows that were based on MS-DOS (including Windows 1.0 through Windows 3.1x). In 1996, Windows NT 4.0 was released, including the new shell from Windows 95. "NT" was formerly expanded to "New Technology" but no longer carries any specific meaning.

Gradually, the Windows NT family was expanded into Microsoft's general-purpose operating system product line for all personal computers, deprecating the Windows 9x family. Starting with Windows 2000, "NT" was removed from the product name and is only included in the product version string along with several low-level places within the system. In fact, NT was a trademark of Northern Telecom (later Nortel) at the time, which Microsoft was forced to acknowledge on the product packaging.

NT was the first purely 32-bit version of Windows, whereas its consumer-oriented counterparts, Windows 3.1x and Windows 9x, were 16-bit/32-bit hybrids. It is a multi-architecture operating system. Initially, it supported several instruction set architectures, including IA-32, MIPS, and DEC Alpha; support for PowerPC, Itanium, x64, and ARM were added later. The latest versions support x86 (including IA-32 and x64) and ARM. Major features of the Windows NT family include the Windows shell, Windows API, Native API, Active Directory, Group Policy, Hardware Abstraction Layer, NTFS, BitLocker, Windows Store, Windows Update, and Hyper-V.

Versions of Windows NT are installed using Windows Setup, which, starting with Windows Vista, uses the Windows Preinstallation Environment, which is a lightweight version of Windows NT made for deployment of the operating system.

Naming

It has been suggested that Dave Cutler intended the initialism "WNT" as a play on VMS, incrementing each letter by one. However, the project was originally intended as a follow-on to OS/2 and was referred to as "NT OS/2" before receiving the Windows brand. One of the original NT developers, Mark Lucovsky, states that the name was taken from the original target processor—the Intel i860, code-named N10 ("N-Ten"). A 1998 question-and-answer (Q&A) session with Bill Gates revealed that the letters were previously expanded to "New Technology" but no longer carry any specific meaning. The letters were dropped from the names of releases from Windows 2000 and later, though Microsoft described that product as being "Built on NT Technology".

Major features

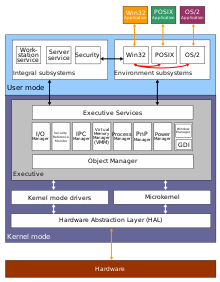

One of the main purposes of NT is hardware and software portability. Various versions of NT family operating systems have been released for a variety of processor architectures, initially IA-32, MIPS, and DEC Alpha, with PowerPC, Itanium, x86-64 and ARM supported in later releases. An initial idea was to have a common code base with a custom Hardware Abstraction Layer (HAL) for each platform. However, support for MIPS, Alpha, and PowerPC was later dropped in Windows 2000. Broad software compatibility was initially achieved with support for several API "personalities", including Windows API, POSIX, and OS/2 APIs – the latter two were phased out starting with Windows XP. Partial MS-DOS and Windows 16-bit compatibility is achieved on IA-32 via an integrated DOS Virtual Machine – although this feature is not available on other architectures.

NT has supported per-object (file, function, and role) access control lists allowing a rich set of security permissions to be applied to systems and services. NT has also supported Windows network protocols, inheriting the previous OS/2 LAN Manager networking, as well as TCP/IP networking (for which Microsoft used to implement a TCP/IP stack derived at first from a STREAMS-based stack from Spider Systems, then later rewritten in-house).

Windows NT 3.1 was the first version of Windows to use 32-bit flat virtual memory addressing on 32-bit processors. Its companion product, Windows 3.1, used segmented addressing and switches from 16-bit to 32-bit addressing in pages.

Windows NT 3.1 featured a core kernel providing a system API, running in supervisor mode (ring 0 in x86; referred to in Windows NT as "kernel mode" on all platforms), and a set of user-space environments with their own APIs which included the new Win32 environment, an OS/2 1.3 text-mode environment and a POSIX environment. The full preemptive multitasking kernel could interrupt running tasks to schedule other tasks, without relying on user programs to voluntarily give up control of the CPU, as in Windows 3.1 Windows applications (although MS-DOS applications were preemptively multitasked in Windows starting with Windows/386).

Notably, in Windows NT 3.x, several I/O driver subsystems, such as video and printing, were user-mode subsystems. In Windows NT 4, the video, server, and printer spooler subsystems were moved into kernel mode. Windows NT's first GUI was strongly influenced by (and programmatically compatible with) that from Windows 3.1; Windows NT 4's interface was redesigned to match that of the brand new Windows 95, moving from the Program Manager to the Windows shell design.

NTFS, a journaled, secure file system, is a major feature for NT. Windows NT also allows for other installable file systems; since versions 3.1, NT may be installed on FAT or HPFS file systems.

Windows NT introduced its own driver model, the Windows NT driver model, and is incompatible with older driver frameworks. With Windows 2000, the Windows NT driver model was enhanced to become the Windows Driver Model, which was first introduced with Windows 98, but was based on the NT driver model. Windows Vista added native support for the Windows Driver Foundation, which is also available for Windows XP, Windows Server 2003 and to an extent, Windows 2000.

Development

Microsoft decided to create a portable operating system, compatible with OS/2 and POSIX and supporting multiprocessing, in October 1988. When development started in November 1989, Windows NT was to be known as OS/2 3.0, the third version of the operating system developed jointly by Microsoft and IBM. To ensure portability, initial development was targeted at the Intel i860XR RISC processor, switching to the MIPS R3000 in late 1989, and then the Intel i386 in 1990. Microsoft also continued parallel development of the DOS-based and less resource-demanding Windows environment, resulting in the release of Windows 3.0 in May 1990.

Windows 3.0 was eventually so successful that Microsoft decided to change the primary application programming interface for the still unreleased NT OS/2 (as it was then known) from an extended OS/2 API to an extended Windows API. This decision caused tension between Microsoft and IBM and the collaboration ultimately fell apart.

IBM continued OS/2 development alone while Microsoft continued work on the newly renamed Windows NT. Though neither operating system would immediately be as popular as Microsoft's MS-DOS or Windows products, Windows NT would eventually be far more successful than OS/2.

Microsoft hired a group of developers from Digital Equipment Corporation led by Dave Cutler to build Windows NT, and many elements of the design reflect earlier DEC experience with Cutler's VMS, VAXELN and RSX-11, but also an unreleased object-based operating system developed by Cutler at Digital codenamed MICA. The team was joined by selected members of the disbanded OS/2 team, including Moshe Dunie.

The VMS kernel was primarily written in VAX MACRO, but Windows NT was designed to run on multiple instruction set architectures and multiple hardware platforms within each architecture. The platform dependencies are hidden from the rest of the system by the HAL (Hardware Abstraction Layer).

Mark Russinovich wrote in 1998 that while creating Windows NT, Microsoft developers rewrote VMS in C. Although they added the Win32 API, NTFS file system, GUI, and backwards compatibility with DOS, OS/2, and Win16, DEC engineers almost immediately noticed the two operating systems' internal similarities; parts of VAX/VMS Internals and Data Structures, published by Digital Press, accurately describe Windows NT internals using VMS terms. Instead of a lawsuit, Microsoft agreed to pay DEC $65–100 million, help market VMS, train Digital personnel on Windows NT, and continue Windows NT support for the DEC Alpha.

Windows NT and VMS memory management, processes, and scheduling are very similar. Windows NT's process management differs by implementing threading, which DEC did not implement until VMS 7.0 in 1995, likely to compete with Microsoft.

Like VMS, Windows NT's kernel mode code distinguishes between the "kernel", whose primary purpose is to implement processor- and architecture-dependent functions, and the "executive". This was designed as a modified microkernel, as the Windows NT kernel was influenced by the Mach microkernel developed by Richard Rashid at Carnegie Mellon University, but does not meet all of the criteria of a pure microkernel. Both the kernel and the executive are linked together into the single loaded module ntoskrnl.exe; from outside this module, there is little distinction between the kernel and the executive. Routines from each are directly accessible, as for example from kernel-mode device drivers.

API sets in the Windows NT family are implemented as subsystems atop the publicly undocumented "native" API; this allowed the late adoption of the Windows API (into the Win32 subsystem). Windows NT was one of the earliest operating systems to use Unicode internally.

Architecture

Windows NT uses a layered design architecture that consists of two main components, user mode and kernel mode. Programs and subsystems in user mode are limited in terms of what system resources they have access to, while the kernel mode has unrestricted access to the system memory and external devices. Kernel mode in Windows NT has full access to the hardware and system resources of the computer. The Windows NT kernel is a hybrid kernel; the architecture comprises a simple kernel, hardware abstraction layer (HAL), drivers, and a range of services (collectively named Executive), which all exist in kernel mode.

The booting process of Windows NT begins with NTLDR in versions before Vista and the Windows Boot Manager in Vista and later. The boot loader is responsible for accessing the file system on the boot drive, starting the kernel, and loading boot-time device drivers into memory. Once all the boot and system drivers have been loaded, the kernel starts the Session Manager Subsystem. This process launches winlogon, which allows the user to login. Once the user is logged in File Explorer is started, loading the graphical user interface of Windows NT.

Programming language

Windows NT is written in C and C++, with a very small amount written in assembly language. C is mostly used for the kernel code while C++ is mostly used for user-mode code. Assembly language is avoided where possible because it would impede portability.

Releases

| Version | Marketing name | Editions | Release date | Build number |

|---|---|---|---|---|

| 3.1 | Windows NT 3.1 | Workstation (named just Windows NT), Advanced Server | July 27, 1993 | 528 |

| 3.5 | Windows NT 3.5 | Workstation, Server | September 21, 1994 | 807 |

| 3.51 | Windows NT 3.51 | May 30, 1995 | 1057 | |

| 4.0 | Windows NT 4.0 | Workstation, Server, Enterprise Server, Terminal Server, Embedded | August 24, 1996 | 1381 |

| 5.0 | Windows 2000 | Professional, Server, Advanced Server | February 17, 2000 | 2195 |

| Datacenter Server | September 26, 2000 | |||

| 5.1 | Windows XP | Home, Professional, Media Center (original, 2004 & 2005), Tablet PC (original and 2005), Starter, Embedded, Home N, Professional N | October 25, 2001 | 2600 |

| Windows Fundamentals for Legacy PCs | — | July 8, 2006 | ||

| 5.2 | Windows XP | 64-bit Edition Version 2003 (IA-64) | March 28, 2003 | 3790 |

| Windows Server 2003 | Standard, Enterprise, Datacenter, Web, Storage, Small Business Server, Compute Cluster | April 24, 2003 | ||

| Windows XP | Professional x64 Edition (x86-64) | April 25, 2005 | ||

| Windows Server 2003 R2 | Standard, Enterprise, Datacenter, Web, Storage, Small Business Server, Compute Cluster | December 6, 2005 | ||

| Windows Home Server | — | November 4, 2007 | ||

| 6.0 | Windows Vista | Starter, Home Basic, Home Premium, Business, Enterprise, Ultimate | Business: November 30, 2006 Consumer: January 30, 2007 |

|

| Windows Server 2008 | Foundation, Standard, Enterprise, Datacenter, Web Server, HPC Server, Itanium-Based Systems | February 27, 2008 | 6001 (RTM) 6002 (SP2) 6003 (SP2 Update) | |

| 6.1 | Windows 7 | Starter, Home Basic, Home Premium, Professional, Enterprise, Ultimate | October 22, 2009 | 7600 (RTM) 7601 (SP1) |

| Windows Server 2008 R2 | Foundation, Standard, Enterprise, Datacenter, Web Server, HPC Server, Itanium-Based Systems | |||

| Windows Home Server 2011 | Home Server Premium | April 6, 2011 | 7601 (SP1) | |

| Windows Thin PC | — | June 6, 2011 | ||

| 6.2 | Windows 8 | Windows 8, Windows 8 Pro, Windows 8 Enterprise, Windows RT | October 26, 2012 | 9200 |

| Windows Server 2012 | Foundation, Essentials, Standard, Datacenter | September 4, 2012 | ||

| 6.3 | Windows 8.1 | Windows 8.1, Windows 8.1 Pro, Windows 8.1 Enterprise, Windows RT 8.1, Windows Embedded 8.1 Industry Pro/Enterprise | October 18, 2013 | 9600 |

| Windows Server 2012 R2 | Foundation, Essentials, Standard, Datacenter | |||

| 10.0 | Windows 10 | Home Single Language, Home China, Home, Pro, Pro Education, Pro for Workstations, Enterprise, Education, Windows 10 S, IoT Core, Mobile, Mobile Enterprise | July 29, 2015 | 10240 (1507/RTM) 10586 (1511) 14393 (1607) 15063 (1703) 16299 (1709) 17134 (1803) 17763 (1809) 18362 (1903) 18363 (1909) 19041 (2004) 19042 (20H2) 19043 (21H1) 19044 (21H2) 19045 (22H2) |

| Windows Server 2016 | Essentials, Standard, Datacenter, Multipoint Premium Server, Storage Server, Hyper-V Server | September 26, 2016 | 14393 | |

| Windows Server 2019 | Essentials, Standard, Datacenter, Multipoint Premium Server, Hyper-V Server | October 2, 2018 | 17763 | |

| Windows Server 2022 | August 18, 2021 | 20348 | ||

| Windows 11 | Home Single Language, Home China, Home, Pro, Education, Pro Education, Pro for Workstations, Enterprise, IoT Enterprise, Windows 11 SE | October 5, 2021 | 22000 (21H2/RTM) 22621 (22H2) 22631 (23H2) |

Windows NT 3.1 to 3.51 incorporated the Program Manager and File Manager from the Windows 3.1x series. Windows NT 4.0 onwards replaced those programs with Windows Explorer (including a taskbar and Start menu), which originally appeared in Windows 95.

The first release was given version number 3.1 to match the contemporary 16-bit Windows; magazines of that era claimed the number was also used to make that version seem more reliable than a ".0" release. Also the Novell IPX protocol was apparently licensed only to 3.1 versions of Windows software.

The NT version number is not now generally used for marketing purposes, but is still used internally, and said to reflect the degree of changes to the core of the operating system. However, for application compatibility reasons, Microsoft kept the major version number as 6 in releases following Vista, but changed it later to 10 in Windows 10. The build number is an internal identifier used by Microsoft's developers and beta testers.

Starting with Windows 8.1, Microsoft changed the Version API Helper functions' behavior. If an application is not manifested for Windows 8.1 or later, the API will always return version 6.2, which is the version number of Windows 8. This is because the manifest feature was introduced with Windows 8.1, to replace GetVersion and related functions.

Supported platforms

32-bit platforms

In order to prevent Intel x86-specific code from slipping into the operating system, due to developers being used to developing on x86 chips, Windows NT 3.1 was initially developed using non-x86 development systems and then ported to the x86 architecture. This work was initially based on the Intel i860-based Dazzle system and, later, the MIPS R4000-based Jazz platform. Both systems were designed internally at Microsoft.

Windows NT 3.1 was released for Intel x86 PC compatible, PC-98, DEC Alpha, and ARC-compliant MIPS platforms. Windows NT 3.51 added support for the PowerPC processor in 1995, specifically PReP-compliant systems such as the IBM ThinkPad Power Series laptops and Motorola PowerStack series; but despite meetings between Michael Spindler and Bill Gates, not on the Power Macintosh as the PReP compliant Power Macintosh project failed to ship.

Intergraph Corporation ported Windows NT to its Clipper architecture and later announced an intention to port Windows NT 3.51 to Sun Microsystems' SPARC architecture, in conjunction with the company's planned introduction of UltraSPARC models in 1995, but neither version was sold to the public as a retail product.

Only two of the Windows NT 4.0 variants (IA-32 and Alpha) have a full set of service packs available. All of the other ports done by third parties (Motorola, Intergraph, etc.) have few, if any, publicly available updates.

Windows NT 4.0 was the last major release to support Alpha, MIPS, or PowerPC, though development of Windows 2000 for Alpha continued until August 1999, when Compaq stopped support for Windows NT on that architecture; and then three days later Microsoft also canceled their AlphaNT program, even though the Alpha NT 5 (Windows 2000) release had reached RC1 status.

On January 5, 2011, Microsoft announced that the next major version of the Windows NT family will include support for the ARM architecture. Microsoft demonstrated a preliminary version of Windows (version 6.2.7867) running on an ARM-based computer at the 2011 Consumer Electronics Show. This eventually led to the commercial release of the Windows 8-derived Windows RT on October 26, 2012, and the implementation of NT over CE on Windows Phone 8.

According to Microsoft, it is a common misconception that the Xbox and Xbox 360 use a modified Windows 2000 kernel. In reality, the Xbox operating system was built from scratch but implements a subset of Windows APIs. The Xbox One, and Xbox Series X/S, however, do use a modified version of Windows 10.

Windows 11 is the first non-server version of Windows NT to not support 32-bit platforms.

64-bit platforms

The 64-bit versions of Windows NT were originally intended to run on Itanium and DEC Alpha; the latter was used internally at Microsoft during early development of 64-bit Windows. This continued for some time after Microsoft publicly announced that it was cancelling plans to ship 64-bit Windows for Alpha. Because of this, Alpha versions of Windows NT are 32-bit only.

While Windows 2000 only supports Intel IA-32 (32-bit), Windows XP, Server 2003, Server 2008 and Server 2008 R2 each have one edition dedicated to Itanium-based systems. In comparison with Itanium, Microsoft adopted x64 on a greater scale: every version of Windows since Windows XP (which has a dedicated x64 edition) has x64 editions.

The first version of Windows NT which began supporting ARM64 devices with Qualcomm processors was Windows 10, version 1709. This is a full version of Windows, rather than the cut-down Windows RT.

Hardware requirements

The minimum hardware specification required to run each release of the professional workstation version of Windows NT has been fairly slow-moving until the 6.0 Vista release, which requires a minimum of 15 GB of free disk space, a 10-fold increase in free disk space alone over the previous version.

| Windows version | CPU | RAM | Free disk space |

|---|---|---|---|

| NT 3.1 | i386, 25 MHz | 12 MB | 90 MB |

| NT 3.1 Advanced Server | 16 MB | ||

| NT 3.5 Workstation | 12 MB | ||

| NT 3.5 Server | 16 MB | ||

| NT 3.51 Workstation | 12 MB | ||

| NT 3.51 Server | 16 MB | ||

| NT 4.0 Workstation | i486, 25 MHz | 12 MB | 124 MB |

| NT 4.0 Server | 16 MB | ||

| 2000 Professional | Pentium, 133 MHz | 32 MB | 650 MB |

| 2000 Server | 128 MB | ||

| XP | Pentium, 233 MHz | 64 MB | 1.5 GB |

| Server 2003 | 133 MHz | 128 MB | |

| Vista Home Basic | 800 MHz | 512 MB | 20 GB |

| Vista (other editions) | 1 GHz | 1 GB | 40 GB |

| 7 for IA-32 | 16 GB | ||

| 7 for x64 | 2 GB | 20 GB | |

| 8 for IA-32 | 1 GHz with NX bit, SSE2, PAE | 1 GB | 16 GB |

| 8 for x64 | 2 GB | 20 GB | |

| 8.1 for IA-32 | 1 GB | 16 GB | |

| 8.1 for x64 | 1 GHz with NX bit, SSE2, PAE, CMPXCHG16b, PrefetchW and LAHF/SAHF | 2 GB | 20 GB |

| 10 for IA-32 (v1507-1809) | 1 GHz with NX bit, SSE2, PAE | 1 GB | 16 GB |

| 10 for x64 (v1507-1809) | 1 GHz with NX bit, SSE2, PAE, CMPXCHG16b, PrefetchW and LAHF/SAHF | 2 GB | 20 GB |

| 10 for IA-32 (v1903 and later) | 1 GHz with NX bit, SSE2, PAE | 1 GB | 32 GB |

| 10 for x64 (v1903 and later) | 1 GHz with NX bit, SSE2, PAE, CMPXCHG16b, PrefetchW and LAHF/SAHF | 2 GB | |

| 11 for x64 | Intel 8th-Gen CPU or AMD Zen+-based CPU; Trusted Platform Module (TPM) 2.0 or equivalent crypto-processor | 4 GB | 64 GB |

| 11 for ARM64 | Qualcomm Snapdragon 850, 7c, 8c, 8cx; Microsoft SQ1, SQ2 |

![\mu : \mathbb{R}^{n} \times [0, T] \to \mathbb{R}^{n};](https://wikimedia.org/api/rest_v1/media/math/render/svg/00920f011519de701fabbdb1568c5846778c8d75)

![\sigma : \mathbb{R}^{n} \times [0, T] \to \mathbb{R}^{n \times m};](https://wikimedia.org/api/rest_v1/media/math/render/svg/380a01815f7f8e4f8ee5e890c6b5296a8a5316f4)

![\mathbb{E} \big[ | Z |^{2} \big] < + \infty.](https://wikimedia.org/api/rest_v1/media/math/render/svg/5ec4b8cbf28f33b70d987a31cc379385c4c60ddf)

![\mathrm{d} X_{t} = \mu (X_{t}, t) \, \mathrm{d} t + \sigma (X_{t}, t) \, \mathrm{d} B_{t} \mbox{ for } t \in [0, T];](https://wikimedia.org/api/rest_v1/media/math/render/svg/390ff0714bfb7a22132f6da141bbf84c6489cd92)

![\mathbb{E} \left[ \int_{0}^{T} | X_{t} |^{2} \, \mathrm{d} t \right] < + \infty.](https://wikimedia.org/api/rest_v1/media/math/render/svg/3844b497bd22d7f1759dd396e589c15da1c8f44d)