https://en.wikipedia.org/wiki/Nature

Nature, in the broadest sense, is the physical world or universe. "Nature" can refer to the phenomena of the physical world, and also to life in general. The study of nature is a large, if not the only, part of science. Although humans are part of nature, human activity is often understood as a separate category from other natural phenomena.

The word nature is borrowed from the Old French nature and is derived from the Latin word natura, or "essential qualities, innate disposition", and in ancient times, literally meant "birth". In ancient philosophy, natura is mostly used as the Latin translation of the Greek word physis (φύσις), which originally related to the intrinsic characteristics of plants, animals, and other features of the world to develop of their own accord. The concept of nature as a whole, the physical universe, is one of several expansions of the original notion; it began with certain core applications of the word φύσις by pre-Socratic philosophers (though this word had a dynamic dimension then, especially for Heraclitus), and has steadily gained currency ever since.

With the advent of the modern scientific method in the last several centuries, nature came to be seen as a passive reality, organized and moved by divine laws. With the Industrial Revolution, nature was increasingly seen as the part of reality devoid of intentional intervention: it was hence considered sacred by some traditions (Rousseau, American transcendentalism) or a mere decor for divine providence or human history (Hegel, Marx). However, a vitalist vision of nature, closer to the pre-Socratic one, was reborn at the same time, especially after Charles Darwin.

Within the various uses of the word today, "nature" often refers to geology and wildlife. Nature can refer to the general realm of living plants and animals, and in some cases to the processes associated with inanimate objects—the way that particular types of things exist and change of their own accord, such as the weather and geology of the Earth. It is often taken to mean the "natural environment" or wilderness—wild animals, rocks, forest, and in general those things that have not been substantially altered by human intervention, or which persist despite human intervention. For example, manufactured objects and human interaction generally are not considered part of nature, unless qualified as, for example, "human nature" or "the whole of nature". This more traditional concept of natural things that can still be found today implies a distinction between the natural and the artificial, with the artificial being understood as that which has been brought into being by a human consciousness or a human mind. Depending on the particular context, the term "natural" might also be distinguished from the unnatural or the supernatural.

Earth

Earth is the only planet known to support life, and its natural features are the subject of many fields of scientific research. Within the Solar System, it is third closest to the Sun; it is the largest terrestrial planet and the fifth largest overall. Its most prominent climatic features are its two large polar regions, two relatively narrow temperate zones, and a wide equatorial tropical to subtropical region. Precipitation varies widely with location, from several metres of water per year to less than a millimetre. 71 percent of the Earth's surface is covered by salt-water oceans. The remainder consists of continents and islands, with most of the inhabited land in the Northern Hemisphere.

Earth has evolved through geological and biological processes that have left traces of the original conditions. The outer surface is divided into several gradually migrating tectonic plates. The interior remains active, with a thick layer of plastic mantle and an iron-filled core that generates a magnetic field. This iron core is composed of a solid inner phase, and a fluid outer phase. Convective motion in the core generates electric currents through dynamo action, and these, in turn, generate the geomagnetic field.

The atmospheric conditions have been significantly altered from the original conditions by the presence of life-forms, which create an ecological balance that stabilizes the surface conditions. Despite the wide regional variations in climate by latitude and other geographic factors, the long-term average global climate is quite stable during interglacial periods, and variations of a degree or two of average global temperature have historically had major effects on the ecological balance, and on the actual geography of the Earth.

Geology

Geology is the science and study of the solid and liquid matter that constitutes the Earth. The field of geology encompasses the study of the composition, structure, physical properties, dynamics, and history of Earth materials, and the processes by which they are formed, moved, and changed. The field is a major academic discipline, and is also important for mineral and hydrocarbon extraction, knowledge about and mitigation of natural hazards, some geotechnical engineering fields, and understanding past climates and environments.

Geological evolution

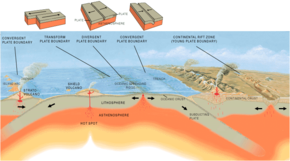

The geology of an area evolves through time as rock units are deposited and inserted and deformational processes change their shapes and locations.

Rock units are first emplaced either by deposition onto the surface or by intrusion into the overlying rock. Deposition can occur when sediments settle onto the surface of the Earth and later lithify into sedimentary rock, or when volcanic material, such as volcanic ash or lava flows, blankets the surface. Igneous intrusions such as batholiths, laccoliths, dikes, and sills push upwards into the overlying rock and crystallize as they intrude.

After the initial sequence of rocks has been deposited, the rock units can be deformed and/or metamorphosed. Deformation typically occurs as a result of horizontal shortening, horizontal extension, or side-to-side (strike-slip) motion. These structural regimes broadly relate to convergent boundaries, divergent boundaries, and transform boundaries, respectively, between tectonic plates.

Historical perspective

Earth is estimated to have formed 4.54 billion years ago from the solar nebula, along with the Sun and other planets. The Moon formed roughly 20 million years later. Initially molten, the outer layer of the Earth cooled, resulting in the solid crust. Outgassing and volcanic activity produced the primordial atmosphere. Condensing water vapor, most or all of which came from ice delivered by comets, produced the oceans and other water sources. The highly energetic chemistry is believed to have produced a self-replicating molecule around 4 billion years ago.

Continents formed, then broke up and reformed as the surface of Earth reshaped over hundreds of millions of years, occasionally combining to make a supercontinent. Roughly 750 million years ago, the earliest known supercontinent, Rodinia, began to break apart. The continents later recombined to form Pannotia, which broke apart about 540 million years ago, then finally Pangaea, which broke apart about 180 million years ago.

During the Neoproterozoic era, freezing temperatures covered much of the Earth in glaciers and ice sheets. This hypothesis has been termed the "Snowball Earth", and it is of particular interest as it precedes the Cambrian explosion in which multicellular life forms began to proliferate about 530–540 million years ago.

Since the Cambrian explosion there have been five distinctly identifiable mass extinctions. The last mass extinction occurred some 66 million years ago, when a meteorite collision probably triggered the extinction of the non-avian dinosaurs and other large reptiles, but spared small animals such as mammals. Over the past 66 million years, mammalian life diversified.

Several million years ago, a species of small African ape gained the ability to stand upright. The subsequent advent of human life, and the development of agriculture and further civilization allowed humans to affect the Earth more rapidly than any previous life form, affecting both the nature and quantity of other organisms as well as global climate. By comparison, the Great Oxygenation Event, produced by the proliferation of algae during the Siderian period, required about 300 million years to culminate.

The present era is classified as part of a mass extinction event, the Holocene extinction event, the fastest ever to have occurred. Some, such as E. O. Wilson of Harvard University, predict that human destruction of the biosphere could cause the extinction of one-half of all species in the next 100 years. The extent of the current extinction event is still being researched, debated and calculated by biologists.

Atmosphere, climate, and weather

The Earth's atmosphere is a key factor in sustaining the ecosystem. The thin layer of gases that envelops the Earth is held in place by gravity. Air is mostly nitrogen, oxygen, and water vapor, with much smaller amounts of carbon dioxide, argon, etc. The atmospheric pressure declines steadily with altitude. The ozone layer plays an important role in reducing the amount of ultraviolet (UV) radiation that reaches the surface. As DNA is readily damaged by UV light, this serves to protect life at the surface. The atmosphere also retains heat during the night, thereby reducing the daily temperature extremes.

Terrestrial weather occurs almost exclusively in the lower part of the atmosphere, and serves as a convective system for redistributing heat. Ocean currents are another important factor in determining climate, particularly the major underwater thermohaline circulation which distributes heat energy from the equatorial oceans to the polar regions. These currents help to moderate the differences in temperature between winter and summer in the temperate zones. Also, without the redistributions of heat energy by the ocean currents and atmosphere, the tropics would be much hotter, and the polar regions much colder.

Weather can have both beneficial and harmful effects. Extremes in weather, such as tornadoes or hurricanes and cyclones, can expend large amounts of energy along their paths, and produce devastation. Surface vegetation has evolved a dependence on the seasonal variation of the weather, and sudden changes lasting only a few years can have a dramatic effect, both on the vegetation and on the animals which depend on its growth for their food.

Climate is a measure of the long-term trends in the weather. Various factors are known to influence the climate, including ocean currents, surface albedo, greenhouse gases, variations in the solar luminosity, and changes to the Earth's orbit. Based on historical and geological records, the Earth is known to have undergone drastic climate changes in the past, including ice ages.

The climate of a region depends on a number of factors, especially latitude. A latitudinal band of the surface with similar climatic attributes forms a climate region. There are a number of such regions, ranging from the tropical climate at the equator to the polar climate in the northern and southern extremes. Weather is also influenced by the seasons, which result from the Earth's axis being tilted relative to its orbital plane. Thus, at any given time during the summer or winter, one part of the Earth is more directly exposed to the rays of the sun. This exposure alternates as the Earth revolves in its orbit. At any given time, regardless of season, the Northern and Southern Hemispheres experience opposite seasons.

Weather is a chaotic system that is readily modified by small changes to the environment, so accurate weather forecasting is limited to only a few days. Overall, two things are happening worldwide: (1) temperature is increasing on the average; and (2) regional climates have been undergoing noticeable changes.

Water on the Earth

Water is a chemical substance that is composed of hydrogen and oxygen (H₂O) and is vital for all known forms of life. In typical usage, water refers only to its liquid form or state, but the substance also has a solid state, ice, and a gaseous state, water vapor, or steam. Water covers 71% of the Earth's surface. On Earth, it is found mostly in oceans and other large bodies of water, with 1.6% of water below ground in aquifers and 0.001% in the air as vapor, clouds, and precipitation. Oceans hold 97% of surface water, glaciers and polar ice caps 2.4%, and other land surface water such as rivers, lakes, and ponds 0.6%. Additionally, a minute amount of the Earth's water is contained within biological bodies and manufactured products.

Oceans

An ocean is a major body of saline water, and a principal component of the hydrosphere. Approximately 71% of the Earth's surface (an area of some 361 million square kilometers) is covered by ocean, a continuous body of water that is customarily divided into several principal oceans and smaller seas. More than half of this area is over 3,000 meters (9,800 feet) deep. Average oceanic salinity is around 35 parts per thousand (ppt) (3.5%), and nearly all seawater has a salinity in the range of 30 to 38 ppt. Though generally recognized as several 'separate' oceans, these waters comprise one global, interconnected body of salt water often referred to as the World Ocean or global ocean. This concept of a global ocean as a continuous body of water with relatively free interchange among its parts is of fundamental importance to oceanography.
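
As a rough check on the figures above, the following Python sketch recomputes the ocean-covered area from the 71% coverage figure and converts salinity from parts per thousand to percent. The total surface area of about 510 million square kilometers is an assumption that is not stated in the text, and the function names are illustrative only.

```python
# Rough arithmetic check of the ocean figures quoted above.
# EARTH_SURFACE_KM2 is an assumed value (about 510 million km^2), not from the text.
EARTH_SURFACE_KM2 = 510e6
OCEAN_FRACTION = 0.71  # share of the surface covered by ocean, from the text


def ocean_area_km2(surface_km2=EARTH_SURFACE_KM2, fraction=OCEAN_FRACTION):
    """Return the approximate ocean-covered area in square kilometers."""
    return surface_km2 * fraction


def ppt_to_percent(ppt):
    """Convert a salinity in parts per thousand to percent."""
    return ppt / 10.0


if __name__ == "__main__":
    print(f"Ocean area: about {ocean_area_km2() / 1e6:.0f} million km^2")
    print(f"35 ppt = {ppt_to_percent(35)}%")
```

Under these assumptions the computed area lands within about one million square kilometers of the quoted 361 million; the small gap comes from rounding in the 71% and 510 million inputs.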

The major oceanic divisions are defined in part by the continents, various archipelagos, and other criteria: these divisions are (in descending order of size) the Pacific Ocean, the Atlantic Ocean, the Indian Ocean, the Southern Ocean, and the Arctic Ocean. Smaller regions of the oceans are called seas, gulfs, bays and other names. There are also salt lakes, which are smaller bodies of landlocked saltwater that are not interconnected with the World Ocean. Two notable examples of salt lakes are the Aral Sea and the Great Salt Lake.

Lakes

A lake (from the Latin word lacus) is a terrain feature (or physical feature), a body of liquid on the surface of a world that is localized to the bottom of a basin (another type of landform or terrain feature; that is, it is not global) and moves slowly if it moves at all. On Earth, a body of water is considered a lake when it is inland, not part of the ocean, is larger and deeper than a pond, and is fed by a river. The only world other than Earth known to harbor lakes is Titan, Saturn's largest moon, which has lakes of ethane, most likely mixed with methane. It is not known if Titan's lakes are fed by rivers, though Titan's surface is carved by numerous river beds. Natural lakes on Earth are generally found in mountainous areas, rift zones, and areas with ongoing or recent glaciation. Other lakes are found in endorheic basins or along the courses of mature rivers. In some parts of the world, there are many lakes because of chaotic drainage patterns left over from the last ice age. All lakes are temporary over geologic time scales, as they will slowly fill in with sediments or spill out of the basin containing them.

Ponds

A pond is a body of standing water, either natural or human-made, that is usually smaller than a lake. A wide variety of human-made bodies of water are classified as ponds, including water gardens designed for aesthetic ornamentation, fish ponds designed for commercial fish breeding, and solar ponds designed to store thermal energy. Ponds and lakes are distinguished from streams via current speed. While currents in streams are easily observed, ponds and lakes possess thermally driven micro-currents and moderate wind driven currents. These features distinguish a pond from many other aquatic terrain features, such as stream pools and tide pools.

Rivers

A river is a natural watercourse, usually freshwater, flowing towards an ocean, a lake, a sea or another river. In a few cases, a river simply flows into the ground or dries up completely before reaching another body of water. Small rivers may also be called by several other names, including stream, creek, brook, rivulet, and rill; there is no general rule that defines what can be called a river. Many names for small rivers are specific to geographic location; one example is Burn in Scotland and North-east England. Sometimes a river is said to be larger than a creek, but this is not always the case, due to vagueness in the language. A river is part of the hydrological cycle. Water within a river is generally collected from precipitation through surface runoff, groundwater recharge, springs, and the release of stored water in natural ice and snowpacks (i.e., from glaciers).

Streams

A stream is a flowing body of water with a current, confined within a bed and stream banks. In the United States, a stream is classified as a watercourse less than 60 feet (18 metres) wide. Streams are important as conduits in the water cycle, instruments in groundwater recharge, and they serve as corridors for fish and wildlife migration. The biological habitat in the immediate vicinity of a stream is called a riparian zone. Given the status of the ongoing Holocene extinction, streams play an important corridor role in connecting fragmented habitats and thus in conserving biodiversity. The study of streams and waterways in general involves many branches of inter-disciplinary natural science and engineering, including hydrology, fluvial geomorphology, aquatic ecology, fish biology, riparian ecology, and others.

Ecosystems

Ecosystems are composed of a variety of biotic and abiotic components that function in an interrelated way. The structure and composition is determined by various environmental factors that are interrelated. Variations of these factors will initiate dynamic modifications to the ecosystem. Some of the more important components are soil, atmosphere, radiation from the sun, water, and living organisms.

Central to the ecosystem concept is the idea that living organisms interact with every other element in their local environment. Eugene Odum, a founder of ecology, stated: "Any unit that includes all of the organisms (ie: the "community") in a given area interacting with the physical environment so that a flow of energy leads to clearly defined trophic structure, biotic diversity, and material cycles (i.e.: exchange of materials between living and nonliving parts) within the system is an ecosystem." Within the ecosystem, species are connected and dependent upon one another in the food chain, and exchange energy and matter between themselves as well as with their environment. The human ecosystem concept is based on the human/nature dichotomy and the idea that all species are ecologically dependent on each other, as well as with the abiotic constituents of their biotope.

A smaller unit of size is called a microecosystem. For example, a microecosystem can be a stone and all the life under it. A macroecosystem might involve a whole ecoregion, with its drainage basin.

Wilderness

Wilderness is generally defined as areas that have not been significantly modified by human activity. Wilderness areas can be found in preserves, estates, farms, conservation preserves, ranches, national forests, national parks, and even in urban areas along rivers, gulches, or otherwise undeveloped areas. Wilderness areas and protected parks are considered important for the survival of certain species, ecological studies, conservation, and solitude. Some nature writers believe wilderness areas are vital for the human spirit and creativity, and some ecologists consider wilderness areas to be an integral part of the Earth's self-sustaining natural ecosystem (the biosphere). They may also preserve historic genetic traits and provide habitat for wild flora and fauna that may be difficult or impossible to recreate in zoos, arboretums, or laboratories.

Life

Although there is no universal agreement on the definition of life, scientists generally accept that the biological manifestation of life is characterized by organization, metabolism, growth, adaptation, response to stimuli, and reproduction. Life may also be said to be simply the characteristic state of organisms.

Properties common to terrestrial organisms (plants, animals, fungi, protists, archaea, and bacteria) are that they are cellular, carbon-and-water-based with complex organization, having a metabolism, a capacity to grow, respond to stimuli, and reproduce. An entity with these properties is generally considered life. However, not every definition of life considers all of these properties to be essential. Human-made analogs of life may also be considered to be life.

The biosphere is the part of Earth's outer shell (including land, surface rocks, water, air and the atmosphere) within which life occurs, and which biotic processes in turn alter or transform. From the broadest geophysiological point of view, the biosphere is the global ecological system integrating all living beings and their relationships, including their interaction with the elements of the lithosphere (rocks), hydrosphere (water), and atmosphere (air). The entire Earth contains over 75 billion tons (150 trillion pounds or about 6.8×10¹³ kilograms) of biomass (life), which lives within various environments within the biosphere.
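
The three biomass figures above are the same quantity in different units. The Python sketch below reproduces the conversion, assuming that "tons" means short tons of 2,000 pounds each (an assumption; the text does not say which ton is meant). The helper names are illustrative.

```python
# Unit conversion check for the biomass figure quoted above.
# Assumption: the text's "tons" are short tons (2,000 lb each).
LB_PER_SHORT_TON = 2000
KG_PER_LB = 0.45359237  # exact definition of the international pound


def short_tons_to_pounds(tons):
    """Convert short tons to pounds."""
    return tons * LB_PER_SHORT_TON


def pounds_to_kilograms(pounds):
    """Convert pounds to kilograms."""
    return pounds * KG_PER_LB


if __name__ == "__main__":
    biomass_tons = 75e9  # 75 billion tons, from the text
    pounds = short_tons_to_pounds(biomass_tons)
    kilograms = pounds_to_kilograms(pounds)
    print(f"{pounds:.2e} lb, {kilograms:.2e} kg")
```

With the short-ton assumption, 75 billion tons comes to 150 trillion pounds and roughly 6.8×10¹³ kilograms, matching the parenthetical in the text.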

Over nine-tenths of the total biomass on Earth is plant life, on which animal life depends very heavily for its existence. More than 2 million species of plant and animal life have been identified to date, and estimates of the actual number of existing species range from several million to well over 50 million. The number of individual species of life is constantly in some degree of flux, with new species appearing and others ceasing to exist on a continual basis. The total number of species is in rapid decline.

Evolution

The origin of life on Earth is not well understood, but it is known to have occurred at least 3.5 billion years ago, during the Hadean or Archean eons, on a primordial Earth that had a substantially different environment than is found at present. These life forms possessed the basic traits of self-replication and inheritable traits. Once life had appeared, the process of evolution by natural selection resulted in the development of ever-more diverse life forms.

Species that were unable to adapt to the changing environment and competition from other life forms became extinct. However, the fossil record retains evidence of many of these older species. Current fossil and DNA evidence shows that all existing species can trace a continual ancestry back to the first primitive life forms.

When basic forms of plant life developed the process of photosynthesis, the sun's energy could be harvested to create conditions which allowed for more complex life forms. The resultant oxygen accumulated in the atmosphere and gave rise to the ozone layer. The incorporation of smaller cells within larger ones resulted in the development of yet more complex cells called eukaryotes. Cells within colonies became increasingly specialized, resulting in true multicellular organisms. With the ozone layer absorbing harmful ultraviolet radiation, life colonized the surface of Earth.

Microbes

The first forms of life to develop on the Earth were microbes, and they remained the only form of life until about a billion years ago, when multicellular organisms began to appear. Microorganisms are single-celled organisms that are generally microscopic, and smaller than the human eye can see. They include Bacteria, Fungi, Archaea, and Protista.

These life forms are found in almost every location on the Earth where there is liquid water, including in the Earth's interior. Their reproduction is both rapid and profuse. The combination of a high mutation rate and a horizontal gene transfer ability makes them highly adaptable, and able to survive in new environments, including outer space. They form an essential part of the planetary ecosystem. However, some microorganisms are pathogenic and can pose a health risk to other organisms.

Plants and animals

Originally Aristotle divided all living things between plants, which generally do not move fast enough for humans to notice, and animals. In Linnaeus' system, these became the kingdoms Vegetabilia (later Plantae) and Animalia. Since then, it has become clear that the Plantae as originally defined included several unrelated groups, and the fungi and several groups of algae were removed to new kingdoms. However, these are still often considered plants in many contexts. Bacterial life is sometimes included in flora, and some classifications use the term bacterial flora separately from plant flora.

Among the many ways of classifying plants are by regional floras, which, depending on the purpose of study, can also include fossil flora, remnants of plant life from a previous era. People in many regions and countries take great pride in their individual arrays of characteristic flora, which can vary widely across the globe due to differences in climate and terrain.

Regional floras are commonly divided into categories such as native flora and agricultural and garden flora, the last of which are intentionally grown and cultivated. Some types of "native flora" were actually introduced centuries ago by people migrating from one region or continent to another, and have become an integral part of the native, or natural, flora of the place to which they were introduced. This is an example of how human interaction with nature can blur the boundary of what is considered nature.

Another category of plant has historically been carved out for weeds. Though the term has fallen into disfavor among botanists as a formal way to categorize "useless" plants, the informal use of the word "weeds" to describe those plants that are deemed worthy of elimination is illustrative of the general tendency of people and societies to seek to alter or shape the course of nature. Similarly, animals are often categorized in ways such as domestic, farm animals, wild animals, pests, etc. according to their relationship to human life.

Animals as a category have several characteristics that generally set them apart from other living things. Animals are eukaryotic and usually multicellular (although see Myxozoa), which separates them from bacteria, archaea, and most protists. They are heterotrophic, generally digesting food in an internal chamber, which separates them from plants and algae. They are also distinguished from plants, algae, and fungi by lacking cell walls.

With a few exceptions—most notably the two phyla consisting of sponges and placozoans—animals have bodies that are differentiated into tissues. These include muscles, which are able to contract and control locomotion, and a nervous system, which sends and processes signals. There is also typically an internal digestive chamber. The eukaryotic cells possessed by all animals are surrounded by a characteristic extracellular matrix composed of collagen and elastic glycoproteins. This may be calcified to form structures like shells, bones, and spicules, a framework upon which cells can move about and be reorganized during development and maturation, and which supports the complex anatomy required for mobility.

Human interrelationship

Human impact

Although humans comprise only a minuscule proportion of the total living biomass on Earth, the human effect on nature is disproportionately large. Because of the extent of human influence, the boundaries between what humans regard as nature and "made environments" are not clear-cut except at the extremes. Even at the extremes, the amount of natural environment that is free of discernible human influence is diminishing at an increasingly rapid pace. A 2020 study published in Nature found that anthropogenic mass (human-made materials) outweighs all living biomass on Earth, with plastic alone exceeding the mass of all land and marine animals combined. And according to a 2021 study published in Frontiers in Forests and Global Change, only about 3% of the planet's terrestrial surface is ecologically and faunally intact, with a low human footprint and healthy populations of native animal species. Philip Cafaro, professor of philosophy at the School of Global Environmental Sustainability at Colorado State University, wrote in 2022 that "the cause of global biodiversity loss is clear: other species are being displaced by a rapidly growing human economy."

The development of technology by the human race has allowed the greater exploitation of natural resources and has helped to alleviate some of the risk from natural hazards. In spite of this progress, however, the fate of human civilization remains closely linked to changes in the environment. There exists a highly complex feedback loop between the use of advanced technology and changes to the environment that is only slowly becoming understood. Human-made threats to the Earth's natural environment include pollution, deforestation, and disasters such as oil spills. Humans have contributed to the extinction of many plants and animals, with roughly 1 million species threatened with extinction within decades. The loss of biodiversity and ecosystem functions over the last half century has reduced the extent to which nature can contribute to human quality of life, and continued declines could pose a major threat to the continued existence of human civilization, unless a rapid course correction is made. The value of natural resources to human society is not reflected in market prices because natural resources are mostly available free of charge. This distorts market pricing of natural resources and at the same time leads to underinvestment in our natural assets. The annual global cost of public subsidies that damage nature is conservatively estimated at $4–6 trillion (million million). Institutional protections of these natural goods, such as the oceans and rainforests, are lacking. Governments have not prevented these economic externalities.

Humans employ nature for both leisure and economic activities. The acquisition of natural resources for industrial use remains a sizable component of the world's economic system. Some activities, such as hunting and fishing, are used for both sustenance and leisure, often by different people. Agriculture was first adopted around the 9th millennium BCE. Ranging from food production to energy, nature influences economic wealth.

Although early humans gathered uncultivated plant materials for food and employed the medicinal properties of vegetation for healing, most modern human use of plants is through agriculture. The clearance of large tracts of land for crop growth has led to a significant reduction in the area of forest and wetland, resulting in the loss of habitat for many plant and animal species as well as increased erosion.

Aesthetics and beauty

Beauty in nature has historically been a prevalent theme in art and books, filling large sections of libraries and bookstores. That nature has been depicted and celebrated by so much art, photography, poetry, and other literature shows the strength with which many people associate nature and beauty. Reasons why this association exists, and what the association consists of, are studied by the branch of philosophy called aesthetics. Beyond certain basic characteristics that many philosophers agree about to explain what is seen as beautiful, the opinions are virtually endless. Nature and wildness have been important subjects in various eras of world history. An early tradition of landscape art began in China during the Tang Dynasty (618–907). The tradition of representing nature as it is became one of the aims of Chinese painting and was a significant influence in Asian art.

Although natural wonders are celebrated in the Psalms and the Book of Job, wilderness portrayals in art became more prevalent in the 1800s, especially in the works of the Romantic movement. British artists John Constable and J. M. W. Turner turned their attention to capturing the beauty of the natural world in their paintings. Before that, paintings had been primarily of religious scenes or of human beings. William Wordsworth's poetry described the wonder of the natural world, which had formerly been viewed as a threatening place. Increasingly the valuing of nature became an aspect of Western culture. This artistic movement also coincided with the Transcendentalist movement in the Western world. A common classical idea of beautiful art involves the word mimesis, the imitation of nature. Another idea about beauty in nature is that the perfect is implied through perfect mathematical forms and, more generally, by patterns in nature. As David Rothenberg writes, "The beautiful is the root of science and the goal of art, the highest possibility that humanity can ever hope to see".

Matter and energy

Some fields of science see nature as matter in motion, obeying certain laws of nature which science seeks to understand. For this reason the most fundamental science is generally understood to be "physics"—the name for which is still recognizable as meaning that it is the "study of nature".

Matter is commonly defined as the substance of which physical objects are composed. It constitutes the observable universe. The visible components of the universe are now believed to compose only 4.9 percent of the total mass-energy. The remainder is believed to consist of 26.8 percent cold dark matter and 68.3 percent dark energy. The exact arrangement of these components is still unknown and is under intensive investigation by physicists.

The behaviour of matter and energy throughout the observable universe appears to follow well-defined physical laws. These laws have been employed to produce cosmological models that successfully explain the structure and the evolution of the universe we can observe. The mathematical expressions of the laws of physics employ a set of about twenty physical constants that appear to be static across the observable universe. The values of these constants have been carefully measured, but the reason for their specific values remains a mystery.

Beyond Earth

Outer space, also simply called space, refers to the relatively empty regions of the Universe outside the atmospheres of celestial bodies. The term "outer space" is used to distinguish it from airspace (and terrestrial locations). There is no discrete boundary between Earth's atmosphere and space, as the atmosphere gradually attenuates with increasing altitude. Outer space within the Solar System is called interplanetary space, which passes over into interstellar space at what is known as the heliopause.

Outer space is sparsely filled with several dozen types of organic molecules discovered to date by microwave spectroscopy, blackbody radiation left over from the Big Bang, and cosmic rays, which include ionized atomic nuclei and various subatomic particles. There is also some gas, plasma and dust, and small meteoroids. Additionally, there are signs of human activity in outer space today, such as material left over from previous crewed and uncrewed launches, which is a potential hazard to spacecraft. Some of this debris re-enters the atmosphere periodically.

Although Earth is the only body within the Solar System known to support life, evidence suggests that in the distant past the planet Mars possessed bodies of liquid water on the surface. For a brief period in Mars' history, it may have also been capable of forming life. At present though, most of the water remaining on Mars is frozen. If life exists at all on Mars, it is most likely to be located underground where liquid water can still exist.

Conditions on the other terrestrial planets, Mercury and Venus, appear to be too harsh to support life as we know it. But it has been conjectured that Europa, the fourth-largest moon of Jupiter, may possess a sub-surface ocean of liquid water and could potentially host life.

Astronomers have started to discover extrasolar Earth analogs – planets that lie in the habitable zone of space surrounding a star, and therefore could possibly host life as we know it.