Analogy is a comparison or correspondence between two things (or two groups of things) because of a third element that they are considered to share.

In logic, it is an inference or an argument from one particular to another particular, as opposed to deduction, induction, and abduction. The term is also used where at least one of the premises, or the conclusion, is general rather than particular in nature. It has the general form A is to B as C is to D.

In a broader sense, analogical reasoning is a cognitive process of transferring some information or meaning of a particular subject (the analog, or source) onto another (the target); and also the linguistic expression corresponding to such a process. The term analogy can also refer to the relation between the source and the target themselves, which is often (though not always) a similarity, as in the biological notion of analogy.

Analogy plays a significant role in human thought processes. It has been argued that analogy lies at "the core of cognition".

Etymology

The English word analogy derives from the Latin analogia, itself derived from the Greek ἀναλογία, "proportion", from ana- "upon, according to" [also "again", "anew"] + logos "ratio" [also "word, speech, reckoning"].

Models and theories

Analogy plays a significant role in problem solving, as well as decision making, argumentation, perception, generalization, memory, creativity, invention, prediction, emotion, explanation, conceptualization and communication. It lies behind basic tasks such as the identification of places, objects and people, for example, in face perception and facial recognition systems. Hofstadter has argued that analogy is "the core of cognition".

An analogy is not a figure of speech but a kind of thought. Specific analogical language uses exemplification, comparisons, metaphors, similes, allegories, and parables, but not metonymy. Phrases like "and so on", "and the like", "as if", and the very word "like" also rely on an analogical understanding by the receiver of a message that includes them. Analogy is important not only in ordinary language and common sense (where proverbs and idioms give many examples of its application) but also in science, philosophy, law and the humanities.

The concepts of association, comparison, correspondence, mathematical and morphological homology, homomorphism, iconicity, isomorphism, metaphor, resemblance, and similarity are closely related to analogy. In cognitive linguistics, the notion of conceptual metaphor may be equivalent to that of analogy. Analogy is also a basis for any comparative argument, as well as for experiments whose results are transferred to objects that were not under examination (e.g., experiments on rats whose results are applied to humans).

Analogy has been studied and discussed since classical antiquity by philosophers, scientists, theologians and lawyers. The last few decades have shown a renewed interest in analogy, most notably in cognitive science.

Development

- Aristotle identified analogy in works such as Metaphysics and Nicomachean Ethics.

- Roman lawyers used analogical reasoning and the Greek word analogia.

- In Islamic logic, analogical reasoning was used for the process of qiyas in Islamic sharia law and fiqh jurisprudence.

- Medieval lawyers distinguished analogia legis and analogia iuris (see below).

- The Middle Ages saw an increased use and theorization of analogy.

- In Christian scholastic theology, analogical arguments were accepted in order to explain the attributes of God.

- Aquinas made a distinction between equivocal, univocal and analogical terms, the last being those like healthy that have different but related meanings. Not only can a person be "healthy", but so can the food that is good for health (see the contemporary distinction between polysemy and homonymy).

- Thomas Cajetan wrote an influential treatise on analogy. In all of these cases, the wide Platonic and Aristotelian notion of analogy was preserved.

Cajetan named several kinds of analogy that had been used but previously unnamed, particularly:

- Analogy of attribution (analogia attributionis) or improper proportionality, e.g., "This food is healthy."

- Analogy of proportionality (analogia proportionalitatis) or proper proportionality, e.g., "2 is to 1 as 4 is to 2", or "the goodness of humans is relative to their essence as the goodness of God is relative to God's essence."

- Metaphor, e.g., steely determination.

Identity of relation

In ancient Greek the word αναλογια (analogia) originally meant proportionality, in the mathematical sense, and it was indeed sometimes translated to Latin as proportio. Analogy was understood as identity of relation between any two ordered pairs, whether of mathematical nature or not.

Analogy and abstraction are different cognitive processes, and analogy is often the easier one. The analogy "hand is to palm as foot is to sole" does not compare all the properties of a hand and a foot, but rather compares the relationship between a hand and its palm to that between a foot and its sole. While a hand and a foot have many dissimilarities, the analogy focuses on their similarity in having an inner surface.

The same notion of analogy was used in the US-based SAT college admission tests, which included "analogy questions" in the form "A is to B as C is to what?" For example, "Hand is to palm as foot is to ____?" These questions were usually given in the Aristotelian format: HAND : PALM : : FOOT : ____ While most competent English speakers will immediately give the right answer to the analogy question (sole), it is more difficult to identify and describe the exact relation that holds both between pairs such as hand and palm, and between foot and sole. This relation is not apparent in some lexical definitions of palm and sole, where the former is defined as the inner surface of the hand, and the latter as the underside of the foot.

Kant's Critique of Judgment held to this notion of analogy, arguing that there can be exactly the same relation between two completely different objects.

Greek philosophers such as Plato and Aristotle used a wider notion of analogy. They saw analogy as a shared abstraction: analogous objects did not necessarily share a relation, but could also share an idea, a pattern, a regularity, an attribute, an effect or a philosophy. These authors also accepted that comparisons, metaphors and "images" (allegories) could be used as arguments, and sometimes they called them analogies. Analogies should also make those abstractions easier to understand and give confidence to those who use them.

James Francis Ross in Portraying Analogy (1982), the first substantive examination of the topic since Cajetan's De Nominum Analogia, demonstrated that analogy is a systematic and universal feature of natural languages, with identifiable and law-like characteristics which explain how the meanings of words in a sentence are interdependent.

Special case of induction

By contrast, Ibn Taymiyya, Francis Bacon and later John Stuart Mill argued that analogy is simply a special case of induction. In their view, analogy is an inductive inference from common known attributes to another probable common attribute, which is known only of the source of the analogy, in the following form:

- Premises

- a is C, D, E, F, G

- b is C, D, E, F

- Conclusion

- b is probably G.
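The schema above can be mimicked mechanically. The following Python sketch is purely illustrative: it assumes (hypothetically) that each object is described by a set of attribute labels, and it attaches to each transferred attribute a crude support score, namely the fraction of the source's other attributes that the target is known to share. That score is an assumption made for illustration, not a probability taken from the inductive accounts of Bacon or Mill.

```python
def infer_by_analogy(source: set[str], target: set[str]) -> dict[str, float]:
    """Argument from analogy: attributes known only of the source are
    conjectured of the target, each with a crude (hypothetical) support score."""
    shared = source & target  # common known attributes, e.g. C, D, E, F
    conclusions = {}
    for attribute in sorted(source - target):  # known only of the source, e.g. G
        others = source - {attribute}
        # Share of the source's other attributes that the target also has.
        conclusions[attribute] = len(shared) / len(others) if others else 0.0
    return conclusions


a = {"C", "D", "E", "F", "G"}
b = {"C", "D", "E", "F"}
print(infer_by_analogy(a, b))  # {'G': 1.0}
```

A score of 1.0 only records that b matches a on every other known attribute; on the inductive reading, the conclusion "b is G" remains merely probable.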

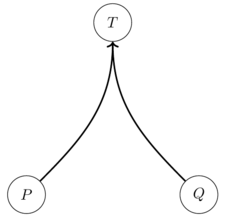

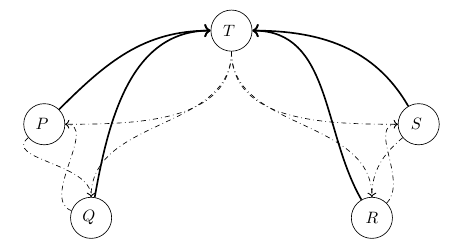

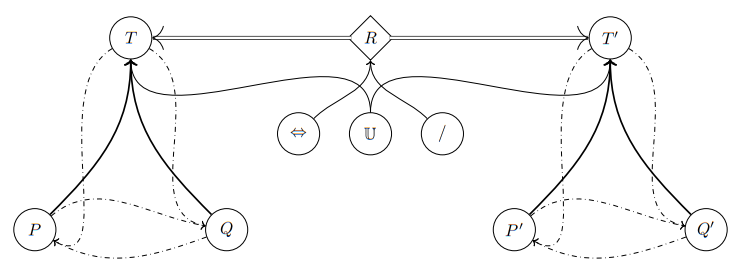

Contemporary cognitive scientists use a wide notion of analogy, extensionally close to that of Plato and Aristotle, but framed by Gentner's (1983) structure mapping theory. The same idea of mapping between source and target is used by conceptual metaphor and conceptual blending theorists. Structure mapping theory concerns both psychology and computer science. According to this view, analogy depends on the mapping or alignment of the elements of source and target. The mapping takes place not only between objects, but also between relations of objects and between relations of relations. The whole mapping yields the assignment of a predicate or a relation to the target. Structure mapping theory has been applied and has found considerable confirmation in psychology. It has had reasonable success in computer science and artificial intelligence (see below). Some studies extended the approach to specific subjects, such as metaphor and similarity.

Applications and types

Logic

Logicians analyze how analogical reasoning is used in arguments from analogy.

An analogy can be stated using is to and as when representing the analogous relationship between two pairs of expressions, for example, "Smile is to mouth, as wink is to eye." In the field of mathematics and logic, this can be formalized with colon notation to represent the relationships, using single colon for ratio, and double colon for equality.

In the field of testing, the colon notation of ratios and equality is often borrowed, so that the example above might be rendered, "Smile : mouth :: wink : eye" and pronounced the same way.
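As a small illustration of the notation, the Python sketch below (a hypothetical helper, not a standard tool) splits a statement of the form "A : B :: C : D" into its two ordered pairs and, when all four terms are numbers, checks that the two ratios are equal, as in the example "2 is to 1 as 4 is to 2". It also accepts the spaced ": :" used in the SAT-style format.

```python
import re
from fractions import Fraction


def parse_analogy(text: str) -> tuple[tuple[str, str], tuple[str, str]]:
    """Split 'A : B :: C : D' into the ordered pairs (A, B) and (C, D)."""
    text = re.sub(r":\s+:", "::", text)  # normalise the spaced form ': :' to '::'
    left, right = text.split("::")       # double colon: equality of the two relations
    a, b = (term.strip() for term in left.split(":"))   # single colon: ratio/relation
    c, d = (term.strip() for term in right.split(":"))
    return (a, b), (c, d)


def is_numeric_proportion(text: str) -> bool:
    """True if a numeric analogy A : B :: C : D satisfies A/B == C/D."""
    (a, b), (c, d) = parse_analogy(text)
    return Fraction(a) / Fraction(b) == Fraction(c) / Fraction(d)


print(parse_analogy("Smile : mouth :: wink : eye"))  # (('Smile', 'mouth'), ('wink', 'eye'))
print(is_numeric_proportion("2 : 1 :: 4 : 2"))       # True
```

Exact fractions are used so that the equality test is not disturbed by floating-point rounding.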

Linguistics

- An analogy can be the linguistic process that reduces word forms thought to break rules to more common forms that follow these rules. For example, the English verb help once had the preterite (simple past tense) holp and the past participle holpen. These old-fashioned forms have been discarded and replaced by helped through the power of analogy (or by applying the more frequently used Verb-ed rule). This is called morphological leveling. Analogies can sometimes create rule-breaking forms; one example is the American English past tense form of dive: dove, formed on analogy with words such as drive: drove.

- Neologisms can also be formed by analogy with existing words. A good example is software, formed by analogy with hardware; other analogous neologisms such as firmware and vapourware have followed. Another example is the humorous term underwhelm, formed by analogy with overwhelm.

- Some people present analogy as an alternative to generative rules for explaining the productive formation of structures such as words. Others argue that they are in fact the same and that rules are analogies that have essentially become standard parts of the linguistic system, whereas clearer cases of analogy have simply not (yet) done so (e.g. Langacker 1987.445–447). This view agrees with the current views of analogy in cognitive science which are discussed above.
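The proportional pattern behind morphological leveling and forms like dove can be imitated on spellings. The Python sketch below solves "a : b :: c : ?" by finding the part of a that differs from b (assumed, for simplicity, to be a suffix) and making the same substitution in c. It is a toy illustration of analogical formation under that assumption, not a model of how English morphology actually changed.

```python
import os
from typing import Optional


def solve_proportion(a: str, b: str, c: str) -> Optional[str]:
    """Solve a : b :: c : ? by copying the suffix change from a -> b onto c."""
    stem = os.path.commonprefix([a, b])       # shared beginning, e.g. 'dr' in drive/drove
    old, new = a[len(stem):], b[len(stem):]   # e.g. 'ive' -> 'ove'
    if not c.endswith(old):
        return None                           # the pattern does not apply to c
    return c[: len(c) - len(old)] + new


print(solve_proportion("drive", "drove", "dive"))  # dove
print(solve_proportion("walk", "walked", "help"))  # helped (the regular -ed pattern)
print(solve_proportion("sing", "sang", "bring"))   # brang (a non-standard, rule-breaking form)
```

The last line shows the same mechanism producing a form that standard English does not use, which is how analogy can create rule-breaking forms as well as remove them.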

Analogy is also a term used in the Neogrammarian school of thought as a catch-all to describe any morphological change in a language that cannot be explained merely by sound change or borrowing.

Science

Analogies are mainly used as a means of creating new ideas and hypotheses, or testing them; this is called the heuristic function of analogical reasoning.

Analogical arguments can also be probative, meaning that they serve as a means of proving the rightness of particular theses and theories. This application of analogical reasoning in science is debatable. Analogy can help prove important theories, especially in those kinds of science in which logical or empirical proof is not possible, such as theology, philosophy, or cosmology insofar as it concerns those areas of the universe that lie beyond any data-based observation, where knowledge stems from human insight and thinking beyond the senses.

Analogy can be used in theoretical and applied sciences in the form of models or simulations which can be considered as strong indications of probable correctness. Other, much weaker, analogies may also assist in understanding and describing nuanced or key functional behaviours of systems that are otherwise difficult to grasp or prove. For instance, an analogy used in physics textbooks compares electrical circuits to hydraulic circuits. Another example is the analogue ear based on electrical, electronic or mechanical devices.

Mathematics

Some types of analogies can have a precise mathematical formulation through the concept of isomorphism. In detail, this means that if two mathematical structures are of the same type, an analogy between them can be thought of as a bijection which preserves some or all of the relevant structure. For example, $\mathbb{R}^2$ and $\mathbb{C}$ are isomorphic as vector spaces, but the complex numbers, $\mathbb{C}$, have more structure than $\mathbb{R}^2$ does: $\mathbb{C}$ is a field as well as a vector space.
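The example can be checked concretely. In the Python sketch below, pairs of floats stand in for $\mathbb{R}^2$ and Python's built-in complex type stands in for $\mathbb{C}$ (both modelling choices, and the sample values, are assumptions of the sketch). The map sending (a, b) to a + bi is a bijection that preserves addition and scalar multiplication; the complex numbers additionally have a multiplication with inverses, whereas the obvious componentwise product of pairs has zero divisors and therefore does not make pairs into a field.

```python
def to_complex(v: tuple[float, float]) -> complex:
    """The bijection R^2 -> C sending (a, b) to a + bi."""
    return complex(v[0], v[1])


def from_complex(z: complex) -> tuple[float, float]:
    """Its inverse, C -> R^2."""
    return (z.real, z.imag)


def add(v, w):
    """Vector addition in R^2."""
    return (v[0] + w[0], v[1] + w[1])


def scale(k, v):
    """Scalar multiplication in R^2."""
    return (k * v[0], k * v[1])


def componentwise(v, w):
    """Componentwise product of pairs (not part of the vector-space structure)."""
    return (v[0] * w[0], v[1] * w[1])


v, w, k = (1.0, 2.0), (3.0, -1.0), 2.5

# The vector-space structure is preserved by the bijection.
assert from_complex(to_complex(v)) == v
assert to_complex(add(v, w)) == to_complex(v) + to_complex(w)
assert to_complex(scale(k, v)) == k * to_complex(v)

# Extra structure of C: a nonzero element has a multiplicative inverse.
z = to_complex(v)
assert abs(z * (1 / z) - 1) < 1e-12

# The componentwise product is not a field operation: two nonzero pairs give zero.
assert componentwise((1.0, 0.0), (0.0, 1.0)) == (0.0, 0.0)
```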

Category theory takes the idea of mathematical analogy much further with the concept of functors. Given two categories C and D, a functor f from C to D can be thought of as an analogy between C and D, because f has to map objects of C to objects of D and arrows of C to arrows of D in such a way that the structure of their respective parts is preserved. This is similar to the structure mapping theory of analogy of Dedre Gentner, because it formalises the idea of analogy as a function which makes certain conditions true.
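To make the functor conditions concrete, the Python sketch below encodes small finite categories by hand (a hypothetical encoding: named arrows with their domain and codomain, an identity arrow for each object, and a table of composites) and checks whether a proposed pair of object and arrow maps is a functor, that is, whether it sends arrows to arrows with matching endpoints and preserves identities and composition. It assumes, without checking, that the input tables already satisfy the category axioms.

```python
from dataclasses import dataclass


@dataclass
class FiniteCategory:
    arrows: dict[str, tuple[str, str]]   # arrow name -> (domain, codomain)
    identity: dict[str, str]             # object -> name of its identity arrow
    compose: dict[tuple[str, str], str]  # (g, f) -> name of g ∘ f, for composable pairs


def is_functor(C: FiniteCategory, D: FiniteCategory,
               on_objects: dict[str, str], on_arrows: dict[str, str]) -> bool:
    # An arrow x -> y must be sent to an arrow F(x) -> F(y).
    for f, (dom, cod) in C.arrows.items():
        if D.arrows[on_arrows[f]] != (on_objects[dom], on_objects[cod]):
            return False
    # Identity arrows must be sent to identity arrows.
    for obj, id_arrow in C.identity.items():
        if on_arrows[id_arrow] != D.identity[on_objects[obj]]:
            return False
    # Composition must be preserved: F(g ∘ f) == F(g) ∘ F(f).
    for (g, f), g_after_f in C.compose.items():
        if D.compose.get((on_arrows[g], on_arrows[f])) != on_arrows[g_after_f]:
            return False
    return True


# C: two objects and one non-identity arrow f: 0 -> 1.
C = FiniteCategory(
    arrows={"id0": ("0", "0"), "id1": ("1", "1"), "f": ("0", "1")},
    identity={"0": "id0", "1": "id1"},
    compose={("id0", "id0"): "id0", ("id1", "id1"): "id1",
             ("f", "id0"): "f", ("id1", "f"): "f"},
)

# D: a -> b -> c, with w the composite of u followed by v.
D = FiniteCategory(
    arrows={"ida": ("a", "a"), "idb": ("b", "b"), "idc": ("c", "c"),
            "u": ("a", "b"), "v": ("b", "c"), "w": ("a", "c")},
    identity={"a": "ida", "b": "idb", "c": "idc"},
    compose={("ida", "ida"): "ida", ("idb", "idb"): "idb", ("idc", "idc"): "idc",
             ("u", "ida"): "u", ("idb", "u"): "u",
             ("v", "idb"): "v", ("idc", "v"): "v",
             ("w", "ida"): "w", ("idc", "w"): "w",
             ("v", "u"): "w"},
)

objects = {"0": "a", "1": "c"}
print(is_functor(C, D, objects, {"id0": "ida", "id1": "idc", "f": "w"}))  # True
print(is_functor(C, D, objects, {"id0": "ida", "id1": "idc", "f": "u"}))  # False
```

The second map fails because u ends at b, while the image of f must end at the image of object 1, which is c.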

Artificial intelligence

A computer algorithm has achieved human-level performance on multiple-choice analogy questions from the SAT test. The algorithm measures the similarity of relations between pairs of words (e.g., the similarity between the pairs HAND:PALM and FOOT:SOLE) by statistically analysing a large collection of text. It answers SAT questions by selecting the choice with the highest relational similarity.
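The Python sketch below is a toy version of the idea of relational similarity, not the published algorithm. It describes each word pair by how often a few hand-picked joining phrases (hypothetical patterns such as "Y of the X") occur in a tiny, made-up corpus, compares pairs by the cosine of these count vectors, and picks the answer choice most similar to the question pair. The real system derived its statistics from a very large collection of text.

```python
import math

# Tiny made-up corpus; a real system would analyse a very large collection of text.
CORPUS = (
    "the palm of the hand was warm. she rubbed the palm of the hand. "
    "a hand has a palm. the sole of the foot was sore. "
    "the sole of the foot touched sand. a foot has a sole. "
    "the lace of the shoe snapped. a shoe for the foot."
)

# Hypothetical joining patterns; {x} and {y} are the first and second word of a pair.
PATTERNS = ["{y} of the {x}", "{x} has a {y}", "{x} for the {y}"]


def relation_vector(x: str, y: str) -> list[int]:
    """Count how often each joining pattern links x and y in the corpus."""
    return [CORPUS.count(p.format(x=x, y=y)) for p in PATTERNS]


def cosine(u: list[int], v: list[int]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def answer(stem: tuple[str, str], choices: list[tuple[str, str]]) -> tuple[str, str]:
    """Pick the choice whose relation vector is most similar to the stem's."""
    target = relation_vector(*stem)
    return max(choices, key=lambda pair: cosine(target, relation_vector(*pair)))


# HAND : PALM :: ? with three candidate pairs.
print(answer(("hand", "palm"), [("shoe", "lace"), ("foot", "sole"), ("shoe", "foot")]))
# ('foot', 'sole')
```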

Analogical reasoning in the human mind is free of the false inferences that plague conventional artificial intelligence models (a property called systematicity). Steven Phillips and William H. Wilson use category theory to demonstrate mathematically how such reasoning could arise naturally from relationships between the internal arrows that preserve the internal structures of the categories, rather than from mere relationships between the objects (called "representational states"). Thus the mind, and more intelligent AIs, may use analogies between domains whose internal structures transform naturally and reject those that do not.

Keith Holyoak and Paul Thagard (1997) developed their multiconstraint theory within structure mapping theory. They argue that the "coherence" of an analogy depends on structural consistency, semantic similarity and purpose. Structural consistency is highest when the analogy is an isomorphism, although lower levels can be used as well. Similarity demands that the mapping connect similar elements and relationships between source and target, at any level of abstraction. It is highest when there are identical relations and when connected elements have many identical attributes. An analogy achieves its purpose if it helps solve the problem at hand. The multiconstraint theory faces some difficulties when there are multiple sources, but these can be overcome. Hummel and Holyoak (2005) recast the multiconstraint theory within a neural network architecture. A problem for the multiconstraint theory arises from its concept of similarity, which, in this respect, is not obviously different from analogy itself. Computer applications demand that there be some identical attributes or relations at some level of abstraction. The model was extended (Doumas, Hummel, and Sandhofer, 2008) to learn relations from unstructured examples (providing the only current account of how symbolic representations can be learned from examples).

Mark Keane and Brayshaw (1988) developed their Incremental Analogy Machine (IAM) to include working memory constraints as well as structural, semantic and pragmatic constraints, so that a subset of the base analogue is selected and mapping from base to target occurs in series. Empirical evidence shows that humans are better at using and creating analogies when the information is presented in an order where an item and its analogue are placed together.

Douglas Hofstadter and his team challenged the shared structure theory, and especially its applications in computer science. They argue that there is no clear line between perception, including high-level perception, and analogical thinking. In fact, analogy occurs not only after, but also before and at the same time as high-level perception. In high-level perception, humans make representations by selecting relevant information from low-level stimuli. Perception is necessary for analogy, but analogy is also necessary for high-level perception. Chalmers et al. conclude that analogy actually is high-level perception. Forbus et al. (1998) claim that this is only a metaphor. It has been argued (Morrison and Dietrich 1995) that Hofstadter's and Gentner's groups do not defend opposite views, but are instead dealing with different aspects of analogy.

Anatomy

In anatomy, two anatomical structures are considered to be analogous when they serve similar functions but are not evolutionarily related, such as the legs of vertebrates and the legs of insects. Analogous structures are the result of independent evolution and should be contrasted with homologous structures, which share a common evolutionary origin.

Engineering

Often a physical prototype is built to model and represent some other physical object. For example, wind tunnels are used to test scale models of wings and aircraft which are analogous to (correspond to) full-size wings and aircraft.

Similarly, the MONIAC (an analogue computer) used the flow of water in its pipes as an analogue to the flow of money in an economy.

Cybernetics

Where two or more biological or physical participants meet, they communicate, and the stresses produced describe internal models of the participants. In his conversation theory, Pask asserts that there exists an analogy describing both the similarities and the differences between any pair of the participants' internal models or concepts.

History

In historical science, comparative historical analysis often uses the concept of analogy and analogical reasoning. Recent computational methods operate on large document archives, allowing analogical or corresponding terms from the past (e.g., Myanmar and Burma) to be found and explained in response to arbitrary user queries.

Morality

Analogical reasoning plays a very important part in morality. This may be because morality is supposed to be impartial and fair: if it is wrong to do something in situation A, and situation B corresponds to A in all relevant features, then it is also wrong to perform that action in situation B. Moral particularism accepts such analogical reasoning, rather than deduction and induction, since only analogy can be used independently of any moral principles.

Psychology

Structure mapping theory

Structure mapping, originally proposed by Dedre Gentner, is a theory in psychology that describes the psychological processes involved in reasoning through, and learning from, analogies. More specifically, this theory aims to describe how familiar knowledge, or knowledge about a base domain, can be used to inform an individual's understanding of a less familiar idea, or a target domain. According to this theory, individuals view their knowledge of ideas, or domains, as interconnected structures. In other words, a domain is viewed as consisting of objects, their properties, and the relationships that characterise their interactions. The process of analogy then involves:

- Recognising similar structures between the base and target domains.

- Finding deeper similarities by mapping other relationships of a base domain to the target domain.

- Cross-checking those findings against existing knowledge of the target domain.

In general, it has been found that people prefer analogies where the two systems correspond highly to each other (e.g. have similar relationships across the domains as opposed to just having similar objects across domains) when these people try to compare and contrast the systems. This is also known as the systematicity principle.

An example that has been used to illustrate structure mapping theory comes from Gentner and Gentner (1983) and uses the base domain of flowing water and the target domain of electricity. In a system of flowing water, the water is carried through pipes and the rate of water flow is determined by the pressure of the water towers or hills. This relationship corresponds to that of electricity flowing through a circuit. In a circuit, the electricity is carried through wires and the current, or rate of flow of electricity, is determined by the voltage, or electrical pressure. Given the similarity in structure, or structural alignment, between these domains, structure mapping theory would predict that relationships from one of these domains would be inferred in the other using analogy.
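The water and electricity example can be run through a deliberately simplified version of the three steps listed above. In the Python sketch below, each domain is a set of (predicate, object, object) facts with hypothetical predicate names, and the target is described only partially, without the relation between voltage and current. The sketch tries every one-to-one assignment of base objects to target objects, keeps the one under which the most base relations also hold in the target, and then carries the remaining base relations over as candidate inferences. Unlike fuller treatments of structure mapping, it ignores relations between relations and simply searches exhaustively.

```python
from itertools import permutations

Fact = tuple[str, str, str]  # (predicate, first object, second object)


def objects_in(facts: set[Fact]) -> list[str]:
    return sorted({obj for _, a, b in facts for obj in (a, b)})


def translate(fact: Fact, mapping: dict[str, str]) -> Fact:
    pred, a, b = fact
    return (pred, mapping[a], mapping[b])


def map_structure(base: set[Fact], target: set[Fact], target_objects: list[str]):
    base_objects = objects_in(base)
    if len(base_objects) > len(target_objects):
        raise ValueError("need at least as many target objects as base objects")
    best, best_score = {}, -1
    # Align: try every one-to-one assignment and keep the one preserving most relations.
    for image in permutations(target_objects, len(base_objects)):
        mapping = dict(zip(base_objects, image))
        score = sum(translate(f, mapping) in target for f in base)
        if score > best_score:
            best, best_score = mapping, score
    # Project: base relations with no counterpart yet become candidate inferences,
    # which would still need checking against what is known of the target.
    inferences = {translate(f, best) for f in base} - target
    return best, best_score, inferences


water = {
    ("carried_through", "water", "pipe"),
    ("rate_of", "flow_rate", "water"),
    ("determines", "pressure", "flow_rate"),
}
electricity = {
    ("carried_through", "electricity", "wire"),
    ("rate_of", "current", "electricity"),
}
mapping, score, inferred = map_structure(
    water, electricity, ["electricity", "wire", "current", "voltage"]
)
print(mapping)   # {'flow_rate': 'current', 'pipe': 'wire', 'pressure': 'voltage', 'water': 'electricity'}
print(score)     # 2
print(inferred)  # {('determines', 'voltage', 'current')}
```

Brute force is only practical for a handful of objects, since the number of assignments grows factorially; it is used here because it makes the alignment criterion easy to see.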

Children

Children do not always need prompting to make comparisons in order to learn abstract relationships. Eventually, children undergo a relational shift, after which they begin seeing similar relations across different situations instead of merely looking at matching objects. This is critical in their cognitive development, as continuing to focus on specific objects would reduce children's ability to learn abstract patterns and reason analogically. Interestingly, some researchers have proposed that children's basic brain functions (i.e., working memory and inhibitory control) do not drive this relational shift. Instead, it is driven by their relational knowledge, such as having labels for the objects that make the relationships clearer. However, there is not enough evidence to determine whether the relational shift occurs because basic brain functions improve or because relational knowledge deepens.

Additionally, research has identified several factors that may increase the likelihood that a child will spontaneously engage in comparison and learn an abstract relationship without the need for prompts. Comparison is more likely when the objects to be compared are close together in space and/or time, are highly similar (although not so similar that they match exactly, which interferes with identifying relationships), or share common labels.

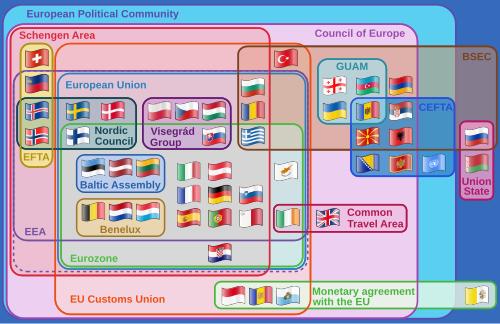

Law

In law, analogy is primarily used to resolve issues on which there is no previous authority. A distinction can be made between analogical reasoning employed in statutory law and analogical reasoning present in precedential law (case law).

Statutory

In statutory law, analogy is used to fill so-called lacunae, gaps or loopholes.

- A gap arises when a specific case or legal issue is not clearly dealt with in written law. One may then identify a statutory provision that covers cases similar to the case at hand and apply this provision to the case by analogy. In civil law countries, such a gap is referred to as a gap extra legem (outside of the law), while the analogy that closes it is termed analogy extra legem (outside of the law). The case at hand is called an unprovided case.

- A second gap arises when there is a statutory provision which applies to the case at hand but leads, in this case, to an unwanted outcome. One may then try to find another statutory provision that covers cases similar to the case at hand and, by analogy, apply it instead of the provision that applies directly. This kind of gap is called a gap contra legem (against the law), while the analogy which fills it is referred to as analogy contra legem (against the law).

- A third gap occurs where a statutory provision regulates the case at hand but is unclear or ambiguous. In such circumstances, to decide the case, one may try to determine what the provision means by relying on statutory provisions that address cases similar to the case at hand, or on other cases regulated by the same unclear or ambiguous provision. A gap of this type is named gap intra legem (within the law), and the analogy which deals with it is referred to as analogy intra legem (within the law). In Equity, the expression infra legem (below the law) is used.

The similarity on which statutory analogy rests may depend on the resemblance of the raw facts of the cases being compared, on the purpose (the so-called ratio legis, which is generally the will of the legislature) of the statutory provision applied by analogy, or on some other source.

Statutory analogy may also be based on more than one statutory provision, or even on the spirit of the law. In the latter case, it is called analogia iuris (from the law in general) as opposed to analogia legis (from a specific legal provision or provisions).

Case

In case law (precedential law), analogies can be drawn from precedent cases. The judge who decides the case at hand may find that its facts are similar to the facts of a prior case to such an extent that the outcomes of both cases are treated as the same or similar (stare decisis). Such use of analogy in precedential law is related to so-called cases of first impression, i.e., cases which have not been regulated by any binding judicial precedent (are not covered by the precedential rule of such a precedent).

Reasoning from (dis)analogy is also amply employed when a judge distinguishes a precedent. That is, on the basis of the discerned differences between the case at hand and the precedential case, a judge refuses to decide the case according to the precedent, even though its precedential rule embraces the case at hand.

There is also much room for other uses of analogy in precedential law. One of them is resorting to analogical reasoning when resolving a conflict between two or more precedents that all apply to the case at hand but dictate different legal outcomes for it. Analogy can also take part in verifying the contents of the ratio decidendi, deciding upon precedents that have become irrelevant, or quoting precedents from other jurisdictions. It is also visible in legal education, notably in the US (the so-called 'case method').

Restrictions and Civil Law

The law of every jurisdiction is different. In legal matters, sometimes the use of analogy is forbidden (by the very law or common agreement between judges and scholars): the most common instances concern criminal, international, administrative and tax law, especially in jurisdictions which do not have a common law system. For example:

- Analogy should not be resorted to in criminal matters whenever its outcome would be unfavorable to the accused or suspect. Such a ban is grounded in the principle "nullum crimen, nulla poena sine lege", which is understood to mean that there is no crime (or punishment) unless it is plainly provided for in a statutory provision or an already existing judicial precedent.

- Analogy should be applied with caution in the domain of tax law. Here, the principle: "nullum tributum sine lege" justifies a general ban on the usage of analogy that would lead to an increase in taxation or whose results would – for some other reason – be harmful to the interests of taxpayers.

- Extending by analogy those provisions of administrative law that restrict human rights and the rights of the citizens (particularly the category of the so-called "individual rights" or "basic rights") is prohibited in many jurisdictions. Analogy generally should also not be resorted to in order to make the citizen's burdens and obligations larger.

- Among many others, further limitations on the use of analogy in law apply to:

- the analogical extension of statutory provisions that involve exceptions to more general statutory regulation or provisions (this restriction flows from the Latin maxims, well known especially in continental civil law systems, "exceptiones non sunt extendendae", "exceptio est strictissimae interpretationis" and "singularia non sunt extendenda")

- the use of an analogical argument with regard to statutory provisions which comprise lists (enumerations)

- extending by analogy statutory provisions that give the impression that the legislator intended to regulate some issues in an exclusive (exhaustive) manner (such a manner is especially implied when the wording of a given statutory provision involves such pointers as "only", "exclusively", "solely", "always", "never"), or which have a plain, precise meaning.

In civil (private) law, analogy may be permitted or even required by law. Even in this branch of law, however, there are restrictions confining the possible scope of an analogical argument. Examples include the prohibition on using analogy in relation to provisions regarding time limits, and a general ban on analogical arguments that would extend statutory provisions which impose obligations or burdens or which order (mandate) something. Other examples concern the use of analogy in property law, especially when it would create new property rights or extend statutory provisions whose terms are unambiguous (unequivocal) and plain (clear), e.g., being of or under a certain age.

Teaching strategies

Analogies as defined in rhetoric are a comparison between words, but an analogy more generally can also be used to illustrate and teach. To enlighten pupils on the relations between or within certain concepts, items or phenomena, a teacher may refer to other concepts, items or phenomena that pupils are more familiar with. It may help to create or clarify one theory (or theoretical model) via the workings of another theory (or theoretical model). Thus an analogy, as used in teaching, would be comparing a topic that students are already familiar with, with a new topic that is being introduced, so that students can get a better understanding of the new topic by relating back to existing knowledge. This can be particularly helpful when the analogy serves across different disciplines: indeed, there are various teaching innovations now emerging that use sight-based analogies for teaching and research across subjects such as science and the humanities.

Shawn Glynn, a professor in the department of educational psychology and instructional technology at the University of Georgia, developed a theory on teaching with analogies and developed steps to explain the process of teaching with this method. The steps for teaching with analogies are as follows: Step one is introducing the new topic that is about to be taught and giving some general knowledge on the subject. Step two is reviewing the concept that the students already know to ensure they have the proper knowledge to assess the similarities between the two concepts. Step three is finding relevant features within the analogy of the two concepts. Step four is finding similarities between the two concepts so students are able to compare and contrast them in order to understand. Step five is indicating where the analogy breaks down between the two concepts. And finally, step six is drawing a conclusion about the analogy and comparing the new material with the already learned material. Typically this method is used to learn topics in science.

In 1989, teacher Kerry Ruef began a program titled The Private Eye Project, a method of teaching that revolves around using analogies in the classroom to better explain topics. She conceived the idea of making analogies part of the curriculum while observing objects, when, as she put it, "my mind was noting what else each object reminded me of..." This led her to teach with the question, "what does [the subject or topic] remind you of?" The idea of comparing subjects and concepts led to the development of The Private Eye Project as a method of teaching. The program is designed to build critical thinking skills, with analogies as one of its main themes. While Glynn focuses on using analogies to teach science, The Private Eye Project can be used for any subject, including writing, math, art, social studies, and invention. It is now used by thousands of schools around the country.

Religion

Catholicism

The Fourth Lateran Council of 1215 taught: "For between creator and creature there can be noted no similarity so great that a greater dissimilarity cannot be seen between them."

The theological exploration of this subject is called the analogia entis. The consequence of this theory is that all true statements concerning God (excluding the concrete details of Jesus' earthly life) are rough analogies, without implying any falsehood. Such analogical and true statements would include "God is", "God is Love", "God is a consuming fire", "God is near to all who call him", or God as Trinity, where being, love, fire, distance and number must be classed as analogies that allow human cognition of what is infinitely beyond positive or negative language.

The use of theological statements in syllogisms must take into account their analogical essence, in that every analogy breaks down when stretched beyond its intended meaning.

Islam

Islamic jurisprudence makes ample use of analogy as a means of drawing conclusions from outside sources of law. The bounds and rules employed to make analogical deductions vary greatly between madhhabs and, to a lesser extent, between individual scholars. It is nonetheless a generally accepted source of law within jurisprudential epistemology, with the chief opposition to it coming from the dhahiri (ostensiblist) school.