Teleology (from τέλος, telos, 'end', 'aim', or 'goal', and λόγος, logos, 'explanation' or 'reason') or finality is a reason or explanation for something as a function of its end, purpose, or goal. A purpose that is imposed by a human use, such as that of a fork, is called extrinsic.

Natural teleology, common in classical philosophy, though controversial today, contends that natural entities also have intrinsic purposes, irrespective of human use or opinion. For instance, Aristotle claimed that an acorn's intrinsic telos is to become a fully grown oak tree. Though ancient atomists rejected the notion of natural teleology, teleological accounts of non-personal or non-human nature were explored and often endorsed in ancient and medieval philosophies, but fell into disfavor during the modern era (1600–1900).

In the late 18th century, Immanuel Kant used the concept of telos as a regulative principle in his Critique of Judgment (1790). Teleology was also fundamental to the philosophy of Karl Marx and G. W. F. Hegel.

Contemporary philosophers and scientists still debate whether teleological axioms are useful or accurate in proposing modern philosophies and scientific theories. An example of the reintroduction of teleology into modern language is the notion of an attractor. Another instance is when Thomas Nagel (2012), though not a biologist, proposed a non-Darwinian account of evolution that incorporates impersonal and natural teleological laws to explain the existence of life, consciousness, rationality, and objective value. Regardless, accuracy can be considered independently of usefulness: it is a common experience in pedagogy that a minimum of apparent teleology can be useful in thinking about and explaining Darwinian evolution even if there is no true teleology driving evolution. Thus it is easier to say that evolution "gave" wolves sharp canine teeth because those teeth "serve the purpose of" predation, regardless of whether there is an underlying non-teleological reality in which evolution is not an actor with intentions. In other words, because human cognition and learning often rely on the narrative structure of stories (with actors, goals, and immediate (proximal) rather than ultimate (distal) causation; see also proximate and ultimate causation), some minimal level of teleology might be recognized as useful or at least tolerable for practical purposes even by people who reject its cosmological accuracy. Its accuracy is upheld by Barrow and Tipler (1986), whose citations of such teleologists as Max Planck and Norbert Wiener are significant for scientific endeavor.

History

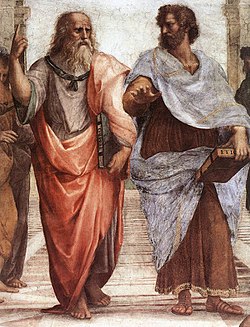

In western philosophy, the term and concept of teleology originated in the writings of Plato and Aristotle. Aristotle's 'four causes' give special place to the telos or "final cause" of each thing. In this, he followed Plato in seeing purpose in both human and subhuman nature.

Etymology

The word teleology combines Greek telos (τέλος, from τελε-, 'end' or 'purpose') and logia (-λογία, 'speak of', 'study of', or 'a branch of learning'). German philosopher Christian Wolff coined the term, as teleologia (Latin), in his work Philosophia rationalis, sive logica (1728).

Platonic

In the Phaedo, Plato through Socrates argues that true explanations for any given physical phenomenon must be teleological. He bemoans those who fail to distinguish between a thing's necessary and sufficient causes, which he identifies respectively as material and final causes:

Imagine not being able to distinguish the real cause, from that without which the cause would not be able to act, as a cause. It is what the majority appear to do, like people groping in the dark; they call it a cause, thus giving it a name that does not belong to it. That is why one man surrounds the earth with a vortex to make the heavens keep it in place, another makes the air support it like a wide lid. As for their capacity of being in the best place they could be at this very time, this they do not look for, nor do they believe it to have any divine force, but they believe that they will some time discover a stronger and more immortal Atlas to hold everything together more, and they do not believe that the truly good and 'binding' binds and holds them together.

— Plato, Phaedo, 99

Plato here argues that while the materials that compose a body are necessary conditions for its moving or acting in a certain way, they nevertheless cannot be the sufficient condition for its moving or acting as it does. For example, if Socrates is sitting in an Athenian prison, the elasticity of his tendons is what allows him to be sitting, and so a physical description of his tendons can be listed as necessary conditions or auxiliary causes of his act of sitting. However, these are only necessary conditions of Socrates' sitting. To give a physical description of Socrates' body is to say that Socrates is sitting, but it does not give us any idea why it came to be that he was sitting in the first place. To say why he was sitting and not not sitting, we have to explain what it is about his sitting that is good, for all things brought about (i.e., all products of actions) are brought about because the actor saw some good in them. Thus, to give an explanation of something is to determine what about it is good. Its goodness is its actual cause—its purpose, telos or "reason for which."

Aristotelian

Aristotle argued that Democritus was wrong to attempt to reduce all things to mere necessity, because doing so neglects the aim, order, and "final cause", which brings about these necessary conditions:

Democritus, however, neglecting the final cause, reduces to necessity all the operations of nature. Now, they are necessary, it is true, but yet they are for a final cause and for the sake of what is best in each case. Thus nothing prevents the teeth from being formed and being shed in this way; but it is not on account of these causes but on account of the end.…

— Aristotle, Generation of Animals 5.8, 789a8–b15

In Physics, using eternal forms as his model, Aristotle rejects Plato's assumption that the universe was created by an intelligent designer. For Aristotle, natural ends are produced by "natures" (principles of change internal to living things), and natures, Aristotle argued, do not deliberate:

It is absurd to suppose that ends are not present [in nature] because we do not see an agent deliberating.

— Aristotle, Physics, 2.8, 199b27-9

These Platonic and Aristotelian arguments ran counter to those presented earlier by Democritus and later by Lucretius, both of whom were supporters of what is now often called accidentalism:

Nothing in the body is made in order that we may use it. What happens to exist is the cause of its use.

— Lucretius, De rerum natura [On the Nature of Things] 4, 833

Economics

A teleology of human aims played a crucial role in the work of economist Ludwig von Mises, especially in the development of his science of praxeology. More specifically, Mises believed that human action (i.e. purposeful behavior) is teleological, based on the presupposition that an individual's action is governed or caused by the existence of their chosen ends. In other words, individuals select what they believe to be the most appropriate means to achieve a sought-after goal or end. Mises also stressed that, with respect to human action, teleology is not independent of causality: "No action can be devised and ventured upon without definite ideas about the relation of cause and effect, teleology presupposes causality."

Assuming reason and action to be predominantly influenced by ideological credence, Mises derived his portrayal of human motivation from Epicurean teachings, insofar as he assumes "atomistic individualism, teleology, and libertarianism, and defines man as an egoist who seeks a maximum of happiness" (i.e. the ultimate pursuit of pleasure over pain). "Man strives for," Mises remarks, "but never attains the perfect state of happiness described by Epicurus." Moreover, expanding upon the Epicurean groundwork, Mises formalized his conception of pleasure and pain by assigning each a specific meaning, allowing him to extrapolate his conception of attainable happiness to a critique of liberal versus socialist ideological societies. It is there, in his application of Epicurean belief to political theory, that Mises flouts Marxist theory, considering labor to be one of many of man's 'pains', a consideration which positioned labor as a violation of his original Epicurean assumption of man's manifest hedonistic pursuit. From here he postulates a critical distinction between introversive labor and extroversive labor, diverging further from basic Marxist theory, in which Marx hails labor as man's "species-essence", or his "species-activity".

Postmodern philosophy

Teleological-based "grand narratives" are renounced by the postmodern tradition, where teleology may be viewed as reductive, exclusionary, and harmful to those whose stories are diminished or overlooked.

Against this postmodern position, Alasdair MacIntyre has argued that a narrative understanding of oneself, of one's capacity as an independent reasoner, one's dependence on others and on the social practices and traditions in which one participates, all tend towards an ultimate good of liberation. Social practices may themselves be understood as teleologically oriented to internal goods, for example practices of philosophical and scientific inquiry are teleologically ordered to the elaboration of a true understanding of their objects. MacIntyre's After Virtue (1981) famously dismissed the naturalistic teleology of Aristotle's 'metaphysical biology', but he has cautiously moved from that book's account of a sociological teleology toward an exploration of what remains valid in a more traditional teleological naturalism.

Hegel

Historically, teleology may be identified with the philosophical tradition of Aristotelianism. The rationale of teleology was explored by Immanuel Kant (1790) in his Critique of Judgement and made central to speculative philosophy by G. W. F. Hegel (as well as various neo-Hegelian schools). Hegel proposed a history of our species which some consider to be at variance with Darwin, as well as with the dialectical materialism of Karl Marx and Friedrich Engels, employing what is now called analytic philosophy—the point of departure being not formal logic and scientific fact but 'identity', or "objective spirit" in Hegel's terminology.

Individual human consciousness, in the process of reaching for autonomy and freedom, has no choice but to deal with an obvious reality: the collective identities (e.g. the multiplicity of world views, ethnic, cultural, and national identities) that divide the human race and set different groups in violent conflict with each other. Hegel conceived of the 'totality' of mutually antagonistic world-views and life-forms in history as being 'goal-driven', i.e. oriented towards an end-point in history. The 'objective contradiction' of 'subject' and 'object' would eventually 'sublate' into a form of life that leaves violent conflict behind. This goal-oriented, teleological notion of the "historical process as a whole" is present in a variety of 20th-century authors, although its prominence declined drastically after the Second World War.

Ethics

Teleology significantly informs the study of ethics, such as in:

- Business ethics: People in business commonly think in terms of purposeful action, as in, for example, management by objectives. Teleological analysis of business ethics leads to consideration of the full range of stakeholders in any business decision, including the management, the staff, the customers, the shareholders, the country, humanity and the environment.

- Medical ethics: Teleology provides a moral basis for the professional ethics of medicine, as physicians are generally concerned with outcomes and must therefore know the telos of a given treatment paradigm.

Consequentialism

The broad spectrum of consequentialist ethics—of which utilitarianism is a well-known example—focuses on the end result or consequences, with such principles as John Stuart Mill's 'principle of utility': "the greatest good for the greatest number." This principle is thus teleological, though in a broader sense than is elsewhere understood in philosophy.

In the classical notion, teleology is grounded in the inherent nature of things themselves, whereas in consequentialism, teleology is imposed on nature from outside by the human will. Consequentialist theories justify what most people would call inherently evil acts by their desirable outcomes, if the good of the outcome outweighs the bad of the act. So, for example, a consequentialist theory would say it was acceptable to kill one person in order to save two or more other people. These theories may be summarized by the maxim "the end justifies the means."

Deontologicalism

Consequentialism stands in contrast to the more classical notions of deontological ethics, of which examples include Immanuel Kant's categorical imperative, and Aristotle's virtue ethics—although formulations of virtue ethics are also often consequentialist in derivation.

In deontological ethics, the goodness or badness of individual acts is primary and a larger, more desirable goal is insufficient to justify bad acts committed on the way to that goal, even if the bad acts are relatively minor and the goal is major (like telling a small lie to prevent a war and save millions of lives). In requiring all constituent acts to be good, deontological ethics is much more rigid than consequentialism, which varies by circumstances.

Practical ethics are usually a mix of the two. For example, Mill also relies on deontic maxims to guide practical behavior, but they must be justifiable by the principle of utility.

Science

In modern science, explanations that rely on teleology are often, but not always, avoided, either because they are unnecessary or because whether they are true or false is thought to be beyond the ability of human perception and understanding to judge. But using teleology as an explanatory style, in particular within evolutionary biology, is still controversial.

Since the Novum Organum of Francis Bacon, teleological explanations in physical science tend to be deliberately avoided in favor of a focus on material and efficient explanations. Final and formal causation came to be viewed as false or too subjective. Nonetheless, some disciplines, in particular within evolutionary biology, continue to use language that appears teleological in describing natural tendencies towards certain end conditions. Some suggest, however, that these arguments ought to be, and practicably can be, rephrased in non-teleological forms; others hold that teleological language cannot always be easily expunged from descriptions in the life sciences, at least within the bounds of practical pedagogy.

Biology

Apparent teleology is a recurring issue in evolutionary biology, much to the consternation of some writers.

Statements implying that nature has goals, for example where a species is said to do something "in order to" achieve survival, appear teleological, and therefore invalid. Usually, it is possible to rewrite such sentences to avoid the apparent teleology. Some biology courses have incorporated exercises requiring students to rephrase such sentences so that they do not read teleologically. Nevertheless, biologists still frequently write in a way which can be read as implying teleology even if that is not the intention. John Reiss (2009) argues that evolutionary biology can be purged of such teleology by rejecting the analogy of natural selection as a watchmaker. Other arguments against this analogy have also been promoted by writers such as Richard Dawkins (1987).

Some authors, like James Lennox (1993), have argued that Darwin was a teleologist, while others, such as Michael Ghiselin (1994), describe this claim as a myth promoted by misinterpretations of his discussions and emphasize the distinction between using teleological metaphors and being teleological.

Biologist philosopher Francisco Ayala (1998) has argued that all statements about processes can be trivially translated into teleological statements, and vice versa, but that teleological statements are more explanatory and cannot be disposed of. Karen Neander (1998) has argued that the modern concept of biological 'function' is dependent upon selection. So, for example, it is not possible to say that anything that simply winks into existence without going through a process of selection has functions. We decide whether an appendage has a function by analysing the process of selection that led to it. Therefore, any talk of functions must be posterior to natural selection and function cannot be defined in the manner advocated by Reiss and Dawkins.

Ernst Mayr (1992) states that "adaptedness…is an a posteriori result rather than an a priori goal-seeking." Various commentators view the teleological phrases used in modern evolutionary biology as a type of shorthand. For example, S. H. P. Maddrell (1998) writes that "the proper but cumbersome way of describing change by evolutionary adaptation [may be] substituted by shorter overtly teleological statements" for the sake of saving space, but that this "should not be taken to imply that evolution proceeds by anything other than from mutations arising by chance, with those that impart an advantage being retained by natural selection." Likewise, J. B. S. Haldane says, "Teleology is like a mistress to a biologist: he cannot live without her but he's unwilling to be seen with her in public."
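The shorthand point can be illustrated with a toy simulation. The sketch below is not a biological model and is not drawn from any of the authors cited above. It assumes a single numeric trait (a hypothetical "sharpness" score), undirected Gaussian mutation, and a simple rule that the higher-scoring half of parents and offspring survives. The names `evolve`, `mutation_sd`, and `mean_trait` are illustrative only.

```python
import random


def evolve(generations=50, pop_size=20, mutation_sd=0.1, seed=0):
    """Return the mean trait value per generation under chance mutation plus selection."""
    rng = random.Random(seed)
    population = [0.0] * pop_size  # hypothetical "sharpness" scores, all equal at the start
    mean_trait = [sum(population) / pop_size]
    for _ in range(generations):
        # Chance variation: mutations have mean zero and carry no reference to any goal.
        offspring = [parent + rng.gauss(0.0, mutation_sd) for parent in population]
        # Selection (an assumed rule): of parents and offspring, the higher-scoring half survives.
        pool = population + offspring
        population = sorted(pool, reverse=True)[:pop_size]
        mean_trait.append(sum(population) / pop_size)
    return mean_trait


mean_trait = evolve()
print(mean_trait[0], mean_trait[-1])
```

Nothing in the loop aims at sharper teeth, yet the mean trait rises over the generations. Saying that the population evolved sharper teeth "in order to" survive is a compact description of this a posteriori result, in the sense Mayr describes.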

Selected-effects accounts, such as the one suggested by Neander (1998), face objections due to their reliance on etiological accounts, which some fields lack the resources to accommodate. Many such sciences, which study the same traits and behaviors regarded by evolutionary biology, still correctly attribute teleological functions without appeal to selection history. Corey J. Maley and Gualtiero Piccinini (2018/2017) are proponents of one such account, which focuses instead on goal-contribution. With the objective goals of organisms being survival and inclusive fitness, Piccinini and Maley define teleological functions to be “a stable contribution by a trait (or component, activity, property) of organisms belonging to a biological population to an objective goal of those organisms.”

Cybernetics

Cybernetics is the study of the communication and control of regulatory feedback both in living beings and machines, and in combinations of the two.

Arturo Rosenblueth, Norbert Wiener, and Julian Bigelow (1943) conceived of feedback mechanisms as lending a teleology to machinery. Wiener (1948) coined the term cybernetics to denote the study of "teleological mechanisms." In the cybernetic classification presented by Rosenblueth, Wiener, and Bigelow (1943), teleology is feedback-controlled purpose.
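The idea of feedback-controlled purpose can be made concrete with a minimal sketch of a negative feedback loop. This is an illustration only, not the formalism of Rosenblueth, Wiener, and Bigelow. The function name `run_feedback_loop`, the proportional correction rule, and the numeric values are all assumptions chosen for simplicity.

```python
def run_feedback_loop(goal, state, gain=0.5, steps=20):
    """Drive `state` toward `goal` by repeatedly correcting the observed error."""
    history = [state]
    for _ in range(steps):
        error = goal - state          # feedback: compare the current output with the goal
        state = state + gain * error  # correction proportional to the error (assumed rule)
        history.append(state)
    return history


# Hypothetical example: a room at 5.0 degrees with a target of 20.0 degrees.
trajectory = run_feedback_loop(goal=20.0, state=5.0)
print(trajectory[-1])
```

The mechanism contains no intention, only a comparison and a correction, yet its behavior is naturally described as "seeking" the goal value. That is the sense in which a feedback device may be said to have a purpose.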

The classification system underlying cybernetics has been criticized by Frank Honywill George and Les Johnson (1985), who cite the need for an external observability to the purposeful behavior in order to establish and validate the goal-seeking behavior. In this view, the purpose of observing and observed systems is respectively distinguished by the system's subjective autonomy and objective control.
