From Wikipedia, the free encyclopedia

The liquid drop model

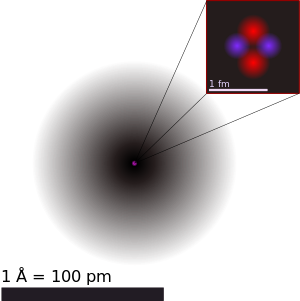

The liquid drop model is one of the first models of nuclear structure, proposed by Carl Friedrich von Weizsäcker in 1935. It describes the nucleus as a semiclassical fluid made up of neutrons and protons, with an internal repulsive electrostatic force proportional to the number of protons. The quantum mechanical nature of these particles appears via the Pauli exclusion principle, which states that no two nucleons of the same kind can be in the same state. Thus the fluid is actually what is known as a Fermi liquid.

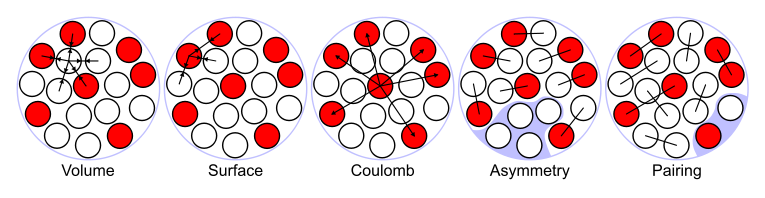

In this model, the binding energy of a nucleus with $Z$ protons and $N$ neutrons is given by

$$E_B = a_V A - a_S A^{2/3} - a_C \frac{Z(Z-1)}{A^{1/3}} - a_A \frac{(N-Z)^2}{A} + \delta(A,Z)$$

where $A = Z + N$ is the total number of nucleons (mass number). The terms proportional to $A$ and $A^{2/3}$ represent the volume and surface energy of the liquid drop. The term proportional to $Z(Z-1)/A^{1/3}$ represents the electrostatic energy, and the term proportional to $(N-Z)^2/A$ represents the Pauli exclusion principle. The last term, $\delta(A,Z)$, is the pairing term, which lowers the energy (increases the binding) for even numbers of protons or neutrons.

The coefficients $a_V$, $a_S$, $a_C$, $a_A$ and the strength of the pairing term may be estimated theoretically, or fit to data.

This simple model reproduces the main features of the binding energy of nuclei.
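The liquid drop binding energy $E_B = a_V A - a_S A^{2/3} - a_C Z(Z-1)/A^{1/3} - a_A (N-Z)^2/A + \delta(A,Z)$ is easy to evaluate numerically. Below is a minimal sketch that assumes one commonly quoted set of fitted coefficients (in MeV). It also assumes the common pairing convention: $\delta = +a_P/\sqrt{A}$ for even-even nuclei, $-a_P/\sqrt{A}$ for odd-odd nuclei and $0$ for odd $A$. Other fits use different values and pairing forms. The names `binding_energy` and `pairing` and the constant names are hypothetical.

```python
import math

# Assumed coefficients in MeV (one commonly quoted least-squares fit; other fits differ).
A_V = 15.75   # volume
A_S = 17.8    # surface
A_C = 0.711   # Coulomb (electrostatic)
A_A = 23.7    # asymmetry (Pauli) term
A_P = 11.18   # pairing strength


def pairing(Z: int, N: int) -> float:
    """Assumed pairing term delta(A, Z) = +/- a_P / sqrt(A), zero for odd A."""
    A = Z + N
    if Z % 2 == 0 and N % 2 == 0:
        return A_P / math.sqrt(A)    # even-even: extra binding
    if Z % 2 == 1 and N % 2 == 1:
        return -A_P / math.sqrt(A)   # odd-odd: reduced binding
    return 0.0                       # odd A: no pairing correction


def binding_energy(Z: int, N: int) -> float:
    """Liquid drop (semi-empirical) binding energy in MeV for Z protons and N neutrons."""
    A = Z + N
    return (A_V * A
            - A_S * A ** (2 / 3)
            - A_C * Z * (Z - 1) / A ** (1 / 3)
            - A_A * (N - Z) ** 2 / A
            + pairing(Z, N))


if __name__ == "__main__":
    b = binding_energy(26, 30)  # iron-56
    print(f"B = {b:.1f} MeV, B/A = {b / 56:.2f} MeV")
```

With these assumed coefficients, iron-56 comes out at roughly 8.8 to 8.9 MeV per nucleon, in the same range as the measured value of about 8.8 MeV.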

The picture of the nucleus as a drop of Fermi liquid is still widely used in the form of the Finite Range Droplet Model (FRDM). It can reproduce nuclear binding energies well across the whole chart of nuclides, with the accuracy needed to predict unknown nuclei.

The shell model

The expression "shell model" is ambiguous in that it refers to two different eras in the state of the art. It was previously used to describe the existence of nucleon shells in the nucleus according to an approach closer to what is now called mean field theory. Nowadays, it refers to a formalism analogous to the configuration interaction formalism used in quantum chemistry. We shall introduce the latter here.

Introduction to the shell concept

Figure: Difference between experimental binding energies and the liquid drop model prediction as a function of neutron number for $Z > 7$.

Measurements of the binding energy of atomic nuclei show systematic deviations from the values estimated by the liquid drop model. In particular, nuclei with certain numbers of protons and/or neutrons are bound more tightly than the liquid drop model predicts. Such nuclei are called singly magic (a magic number of protons or of neutrons) or doubly magic (both). This observation led scientists to assume the existence of a shell structure of nucleons (protons and neutrons) within the nucleus, like that of electrons within atoms.

Indeed, nucleons are quantum objects.

Strictly speaking, one should not speak of energies of individual nucleons, because they are all correlated with each other. However, as an approximation one may envision an average nucleus, within which nucleons propagate individually. Owing to their quantum character, they may only occupy discrete energy levels.

These levels are by no means uniformly distributed. Some intervals of energy are crowded and some are empty, generating a gap in possible energies. A shell is such a set of levels separated from the other ones by a wide empty gap.

The energy levels are found by solving the Schrödinger equation for a single nucleon moving in the average potential generated by all other nucleons. Each level may be occupied by a nucleon, or empty. Some levels accommodate several different quantum states with the same energy; they are said to be degenerate. This occurs in particular if the average nucleus has some symmetry.

The concept of shells allows one to understand why some nuclei are bound more tightly than others. Because two nucleons of the same kind cannot be in the same state (Pauli exclusion principle), the lowest-energy state of the nucleus is one where nucleons fill all energy levels from the bottom up to some level. A nucleus with full shells is exceptionally stable, as will be explained.

As with electrons in the electron shell model, protons in the outermost shell are relatively loosely bound to the nucleus if there are only a few protons in that shell, because they are farthest from the center of the nucleus. Therefore, nuclei which have a full outer proton shell are more tightly bound and have a higher binding energy than other nuclei with a similar total number of protons. All this is also true for neutrons.

Furthermore, the energy needed to excite the nucleus (i.e. to move a nucleon to a higher, previously unoccupied level) is exceptionally high in such nuclei. When the lowest unoccupied level lies just above a full shell, the only way to excite the nucleus is to raise one nucleon across the gap, which costs a large amount of energy. Otherwise, if the highest occupied energy level lies in a partly filled shell, much less energy is required to raise a nucleon to a higher state in the same shell.
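Below is a minimal sketch of shell filling and of the excitation cost. It assumes the simplest average potential, a spherical harmonic oscillator. In that potential, the shell with oscillator quantum number $N$ lies $N\hbar\omega$ above the lowest one and holds $(N+1)(N+2)$ nucleons of one kind (spin included). The function names are hypothetical. This idealized potential reproduces only the first observed magic numbers (2, 8, 20). The higher ones (28, 50, 82, 126) require a strong spin–orbit term, which is not included here.

```python
def oscillator_shells(n_shells):
    """(energy in units of hbar*omega, capacity) of each shell of a 3D isotropic oscillator.

    Shell N contains (N + 1)(N + 2) / 2 spatial states, each holding two spin states.
    """
    return [(N, (N + 1) * (N + 2)) for N in range(n_shells)]


def shell_closures(n_shells):
    """Cumulative nucleon numbers at which shells become full."""
    closures, total = [], 0
    for _, capacity in oscillator_shells(n_shells):
        total += capacity
        closures.append(total)
    return closures


def lowest_excitation(n_nucleons, n_shells=10):
    """Cheapest one-nucleon excitation (units of hbar*omega) after filling from the bottom up."""
    # One entry per single-particle state, already in increasing energy order.
    slots = [energy for energy, capacity in oscillator_shells(n_shells)
             for _ in range(capacity)]
    if not 0 < n_nucleons < len(slots):
        raise ValueError("n_nucleons must leave at least one empty state")
    # Move the highest occupied nucleon into the lowest empty state.
    return slots[n_nucleons] - slots[n_nucleons - 1]


if __name__ == "__main__":
    print(shell_closures(6))
    for n in (8, 9, 20, 21):
        print(n, lowest_excitation(n))
```

A full shell (8 or 20 nucleons here) costs one whole $\hbar\omega$ to excite. A partly filled shell costs nothing in this idealized potential, because its states are exactly degenerate. In a realistic potential that degeneracy is partly lifted, so the cost is small but not zero.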

Some evolution of the shell structure observed in stable nuclei is expected away from the valley of stability. For example, observations of unstable isotopes have shown shifting and even a reordering of the single-particle levels of which the shell structure is composed. This is sometimes observed as the creation of an island of inversion or as a reduction of the excitation energy gaps above the traditional magic numbers.

Basic hypotheses

Some basic hypotheses are made in order to give a precise conceptual framework to the shell model:

- The atomic nucleus is a quantum n-body system.

- The internal motion of nucleons within the nucleus is non-relativistic, and their behavior is governed by the Schrödinger equation.

- Nucleons are considered to be pointlike, without any internal structure.

Brief description of the formalism

The general process used in shell model calculations is the following. First, a Hamiltonian for the nucleus is defined. Usually, for computational practicality, only one- and two-body terms are taken into account in this definition. The interaction is an effective theory: it contains free parameters which have to be fitted to experimental data.

The next step is to define a basis of single-particle states, i.e. a set of wavefunctions describing all possible nucleon states. Most of the time, this basis is obtained via a Hartree–Fock computation. With this set of one-particle states, Slater determinants are built. These are wavefunctions for $Z$ proton variables or $N$ neutron variables, which are antisymmetrized products of single-particle wavefunctions. Antisymmetrized means that under exchange of variables for any pair of nucleons, the wavefunction only changes sign.
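The antisymmetry property can be checked numerically. This sketch builds a Slater determinant $\Psi(x_1,\dots,x_n) = \det[\varphi_k(x_i)]/\sqrt{n!}$ for a few spinless particles in one dimension. It uses hypothetical, unnormalized orbitals $\varphi_k(x) = x^k e^{-x^2/2}$, chosen only for illustration. The function names are also hypothetical.

```python
import math

import numpy as np


def orbital(k, x):
    """Hypothetical single-particle wavefunction x^k exp(-x^2/2) (unnormalized)."""
    return x**k * np.exp(-x**2 / 2)


def slater_determinant(positions):
    """Antisymmetrized product of the lowest len(positions) orbitals.

    Rows correspond to particles, columns to occupied orbitals.
    """
    positions = np.asarray(positions, dtype=float)
    n = len(positions)
    matrix = np.array([[orbital(k, x) for k in range(n)] for x in positions])
    return np.linalg.det(matrix) / math.sqrt(math.factorial(n))


if __name__ == "__main__":
    psi = slater_determinant([0.3, -0.7, 1.1])
    swapped = slater_determinant([-0.7, 0.3, 1.1])   # exchange particles 1 and 2
    print(psi, swapped)                              # equal magnitude, opposite sign
    print(slater_determinant([0.5, 0.5, 1.0]))       # two particles at the same point: (numerically) zero
```

Exchanging two particles swaps two rows of the matrix, which flips the sign of the determinant. Two identical rows make it vanish, which is how the Pauli exclusion principle appears in this language.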

In principle, the number of quantum states available for a single nucleon at a finite energy is finite, say $n$. The number of nucleons in the nucleus cannot exceed the number of available states, otherwise the nucleus cannot hold all of its nucleons. There are thus several ways to choose $Z$ (or $N$) states among the $n$ possible. In combinatorial mathematics, the number of choices of $Z$ objects among $n$ is the binomial coefficient $\binom{n}{Z}$. If $n$ is much larger than $Z$ (or $N$), this increases roughly like $n^Z/Z!$. In practice, this number becomes so large that a complete computation is impossible for $A = N + Z$ larger than 8.
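The growth of the basis can be made concrete with Python's standard `math.comb`. The numbers of single-particle states and nucleons below are arbitrary illustrative choices. Counting proton and neutron determinants independently and multiplying them is a simplification that ignores symmetry restrictions. The function name is hypothetical.

```python
from math import comb, factorial


def basis_dimension(n_states, n_protons, n_neutrons):
    """Number of product states (proton determinant) x (neutron determinant).

    Assumes n_states single-particle states available to each kind of nucleon.
    """
    return comb(n_states, n_protons) * comb(n_states, n_neutrons)


if __name__ == "__main__":
    # Arbitrary example: 4 protons and 4 neutrons (A = 8) in 40 states of each kind.
    print(comb(40, 4))                # proton determinants alone
    print(basis_dimension(40, 4, 4))  # full product basis
    # For n >> Z the binomial coefficient approaches n**Z / Z!.
    n, Z = 1000, 3
    print(comb(n, Z) / (n**Z / factorial(Z)))
```

Already for $A = 8$ in this toy setting, the product basis has about $8 \times 10^9$ states.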

To obviate this difficulty, the space of possible single-particle states is divided into a core and a valence shell, by analogy with chemistry. The core is a set of single-particle states which are assumed to be inactive: they are the well-bound, lowest-energy states, and there is no need to reexamine their situation. They do not appear in the Slater determinants. This is in contrast to the states in the valence space, which is the space of all single-particle states not in the core that may be used to build the $Z$- or $N$-body wavefunction. The set of all possible Slater determinants in the valence space defines a basis for $Z$- or $N$-body states.
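To illustrate how much the core helps, the sketch below assumes the standard picture of the $sd$ shell. An inert $^{16}$O core fills the $0s_{1/2}$, $0p_{3/2}$ and $0p_{1/2}$ orbitals (8 states for each kind of nucleon). The valence orbitals $0d_{5/2}$, $1s_{1/2}$ and $0d_{3/2}$ offer 6 + 2 + 4 = 12 states per kind. For $^{28}$Si ($Z = N = 14$), the sketch compares the count of Slater-determinant products in these 20 states with and without the core. The function name is hypothetical. The counts ignore symmetry restrictions; real calculations usually fix the angular-momentum projection, which reduces the dimension further.

```python
from math import comb


def product_dimension(n_states, n_protons, n_neutrons):
    """Count (proton determinant) x (neutron determinant) in n_states states per kind."""
    return comb(n_states, n_protons) * comb(n_states, n_neutrons)


CORE_STATES = 2 + 4 + 2      # 0s1/2 + 0p3/2 + 0p1/2 states per kind, filled in the 16O core
VALENCE_STATES = 6 + 2 + 4   # 0d5/2 + 1s1/2 + 0d3/2 states per kind
Z = N = 14                   # silicon-28
CORE_Z = CORE_N = 8          # nucleons frozen in the 16O core

without_core = product_dimension(CORE_STATES + VALENCE_STATES, Z, N)
with_core = product_dimension(VALENCE_STATES, Z - CORE_Z, N - CORE_N)

if __name__ == "__main__":
    print(f"all 20 states active: {without_core:,}")
    print(f"16O core frozen:      {with_core:,}")
```

In this toy comparison, freezing the core cuts the count from about $1.5 \times 10^9$ to under $10^6$.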

The last step is to compute the matrix of the Hamiltonian within this basis and to diagonalize it. Despite the reduction of the basis dimension obtained by fixing the core, the matrices to be diagonalized easily reach dimensions of the order of $10^9$, and demand specific diagonalization techniques.
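Shell-model codes commonly rely on the Lanczos algorithm. It needs only matrix-vector products and converges quickly for the lowest eigenvalues, so the full matrix never has to be diagonalized. The sketch below is a minimal dense-matrix version with full reorthogonalization, which is simple but memory-hungry. It is applied to a made-up symmetric matrix with a well-separated ground state. It is not taken from any particular shell-model code, and the function name is hypothetical.

```python
import numpy as np


def lanczos_lowest(H, k=30, seed=0):
    """Estimate the lowest eigenvalue of a real symmetric matrix H with k Lanczos steps."""
    n = H.shape[0]
    k = min(k, n)
    rng = np.random.default_rng(seed)
    Q = np.zeros((n, k))        # Lanczos vectors (orthonormal columns)
    alpha = np.zeros(k)         # diagonal of the tridiagonal matrix T
    beta = np.zeros(k)          # off-diagonal: beta[j] couples steps j and j + 1
    q = rng.standard_normal(n)
    q /= np.linalg.norm(q)
    m = 0
    for j in range(k):
        Q[:, j] = q
        w = H @ q               # the only operation that touches H
        alpha[j] = q @ w
        # Full reorthogonalization (done twice for numerical safety): it replaces the
        # three-term recurrence and keeps the basis orthogonal in floating point.
        for _ in range(2):
            w -= Q[:, :j + 1] @ (Q[:, :j + 1].T @ w)
        m = j + 1
        b = np.linalg.norm(w)
        if j == k - 1 or b < 1e-10:
            break               # step budget used, or an invariant subspace was found
        beta[j] = b
        q = w / b
    T = np.diag(alpha[:m]) + np.diag(beta[:m - 1], 1) + np.diag(beta[:m - 1], -1)
    return np.linalg.eigvalsh(T)[0]


if __name__ == "__main__":
    n = 500
    rng = np.random.default_rng(1)
    V = 0.01 * rng.standard_normal((n, n))
    diagonal = np.concatenate([[0.0], np.linspace(5.0, 10.0, n - 1)])
    H = np.diag(diagonal) + (V + V.T)          # toy Hamiltonian matrix
    print(lanczos_lowest(H), np.linalg.eigvalsh(H)[0])
```

Only the product `H @ q` touches the Hamiltonian. This is why the method can be used on matrices that are too large to diagonalize directly and are only available through matrix-vector products.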

Shell model calculations generally give an excellent fit to experimental data. However, they depend strongly on two main factors:

- The way to divide the single-particle space into core and valence.

- The effective nucleon–nucleon interaction.

Mean field theories

The independent-particle model

The interaction between nucleons, which is a consequence of strong interactions and binds the nucleons within the nucleus, has the peculiar behaviour of a finite range. It vanishes when the distance between two nucleons becomes too large, is attractive at medium range, and is repulsive at very small range. This last property correlates with the Pauli exclusion principle, according to which two fermions (nucleons are fermions) cannot be in the same quantum state. This results in a very large mean free path predicted for a nucleon within the nucleus.

The main idea of the independent-particle approach is that a nucleon moves inside a certain potential well (which keeps it bound to the nucleus) independently of the other nucleons. This amounts to replacing an $N$-body problem ($N$ particles interacting) by $N$ single-body problems. This essential simplification of the problem is the cornerstone of mean field theories. These are also widely used in atomic physics, where electrons move in a mean field due to the central nucleus and the electron cloud itself.
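The simplification can be checked in a toy setting. The sketch assumes particles in one dimension in a harmonic potential (units $\hbar = m = \omega = 1$, with an arbitrarily chosen finite-difference grid). It first shows that, without any interaction, the spectrum of the two-particle Hamiltonian $h \otimes 1 + 1 \otimes h$ is exactly the set of sums of one-particle energies. It then fills single-particle levels from the bottom, two spin states per level, to obtain an $N$-particle ground-state energy. All names are hypothetical.

```python
import numpy as np


def one_body_hamiltonian(n_points=30, box=10.0):
    """Finite-difference h = -1/2 d^2/dx^2 + x^2/2 on a grid (hbar = m = omega = 1)."""
    x = np.linspace(-box / 2, box / 2, n_points)
    dx = x[1] - x[0]
    off = np.ones(n_points - 1)
    kinetic = (np.eye(n_points) - 0.5 * np.diag(off, 1) - 0.5 * np.diag(off, -1)) / dx**2
    return kinetic + np.diag(0.5 * x**2)


def fill_levels(eps, n_particles):
    """Ground-state energy of independent spin-1/2 fermions: two per level (Pauli)."""
    return sum(eps[i // 2] for i in range(n_particles))


h = one_body_hamiltonian()
eps = np.linalg.eigvalsh(h)                          # single-particle energies
one = np.eye(len(eps))
two_body = np.kron(h, one) + np.kron(one, h)         # two particles, no interaction
two_body_levels = np.linalg.eigvalsh(two_body)
pair_sums = np.sort(np.add.outer(eps, eps).ravel())  # every eps_i + eps_j

if __name__ == "__main__":
    print(np.allclose(two_body_levels, pair_sums))
    print(fill_levels(eps, 4), 2 * eps[0] + 2 * eps[1])
```

For distinguishable particles (or opposite spins), every sum $\varepsilon_i + \varepsilon_j$ appears. For two identical fermions with the same spin, only the $i \neq j$ combinations survive antisymmetrization.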

The independent particle model and mean field theories (we shall see that there exist several variants) are very successful in describing the properties of the nucleus starting from an effective interaction or an effective potential, and thus are a basic part of atomic nucleus theory. They are also modular: it is quite easy to extend the model by adding the corresponding energy terms to the formalism. This introduces effects such as nuclear pairing, or collective motions of the nucleus like rotation or vibration. As a result, in many representations the mean field is only a starting point for a more complete description. That description introduces correlations reproducing properties like collective excitations and nucleon transfer.

Nuclear potential and effective interaction

A large part of the practical difficulties met in mean field theories is the definition (or calculation) of the potential of the mean field itself. One can very roughly distinguish between two approaches:

- The phenomenological approach is a parameterization of the nuclear potential by an appropriate mathematical function. Historically, this procedure was applied with the greatest success by Sven Gösta Nilsson, who used a (deformed) harmonic oscillator potential. The most recent parameterizations are based on more realistic functions, which account more accurately for scattering experiments, for example. In particular, the form known as the Woods–Saxon potential can be mentioned.

- The self-consistent or Hartree–Fock approach aims to deduce the nuclear potential mathematically from an effective nucleon–nucleon interaction. This technique requires solving the Schrödinger equation iteratively, since the potential depends on the wavefunctions to be determined. One starts from an ansatz wavefunction and improves it variationally. The wavefunctions are written as Slater determinants.
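For the phenomenological approach, here is a minimal sketch. It assumes a spherical Woods–Saxon well $V(r) = -V_0/[1 + e^{(r-R)/a}]$ with typical textbook magnitudes ($V_0 = 50$ MeV, $R = 1.25\,A^{1/3}$ fm, $a = 0.65$ fm) and no spin–orbit or Coulomb terms. It solves the radial Schrödinger equation for $u(r) = r\,R(r)$ on a grid by finite differences. It returns the bound single-nucleon energies for a given orbital angular momentum $l$. The parameter values and function names are assumptions for illustration.

```python
import numpy as np

HBAR2_OVER_2M = 20.736  # hbar^2 / (2 m_nucleon) in MeV fm^2 (approximate)


def woods_saxon(r, v0, radius, diffuseness):
    """Woods-Saxon potential in MeV (r, radius, diffuseness in fm)."""
    return -v0 / (1.0 + np.exp((r - radius) / diffuseness))


def bound_levels(l=0, mass_number=208, v0=50.0, diffuseness=0.65,
                 r_max=20.0, n_points=1000):
    """Bound energies (MeV) of -hbar^2/2m u'' + [V(r) + hbar^2 l(l+1)/(2m r^2)] u = E u,
    with u(0) = u(r_max) = 0, by finite differences."""
    radius = 1.25 * mass_number ** (1 / 3)
    h = r_max / (n_points + 1)
    r = h * np.arange(1, n_points + 1)               # interior grid points
    v_eff = (woods_saxon(r, v0, radius, diffuseness)
             + HBAR2_OVER_2M * l * (l + 1) / r**2)   # centrifugal barrier
    off = -HBAR2_OVER_2M / h**2 * np.ones(n_points - 1)
    H = np.diag(2 * HBAR2_OVER_2M / h**2 + v_eff) + np.diag(off, 1) + np.diag(off, -1)
    energies = np.linalg.eigvalsh(H)
    return energies[energies < 0]                    # keep bound states only


if __name__ == "__main__":
    for l in range(3):
        print(l, np.round(bound_levels(l), 2))
```

Each level found for a given $l$ can hold $2(2l+1)$ nucleons of one kind. Filling the levels from the bottom reproduces, qualitatively, the shell picture, although the observed magic numbers beyond 20 again need a spin–orbit term.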

In the case of the Hartree–Fock approaches, the difficulty is not to find the mathematical function that best describes the nuclear potential, but the one that best describes the nucleon–nucleon interaction. Indeed, in contrast with atomic physics, where the interaction is known (it is the Coulomb interaction), the nucleon–nucleon interaction within the nucleus is not known analytically.

There are two main reasons for this. First, the strong interaction acts essentially among the quarks forming the nucleons, and the nucleon–nucleon interaction in vacuum is a mere consequence of the quark–quark interaction. The latter is well understood in the framework of the Standard Model at high energies, where asymptotic freedom makes the coupling weak. It is much more complicated at low energies, where the coupling is strong and color confinement binds quarks into hadrons. Thus there is as yet no fundamental theory allowing one to deduce the nucleon–nucleon interaction from the quark–quark interaction.

Furthermore, even if this problem were solved, there would remain a large difference between the ideal (and conceptually simpler) case of two nucleons interacting in vacuum and that of these nucleons interacting in nuclear matter. To go further, it was necessary to invent the concept of effective interaction. The latter is basically a mathematical function with several arbitrary parameters, which are adjusted to agree with experimental data. Most modern interactions are zero-range, so they act only when the two nucleons are in contact, as introduced by Tony Skyrme.

The self-consistent approaches of the Hartree–Fock type

In the Hartree–Fock approach to the $n$-body problem, the starting point is a Hamiltonian containing $n$ kinetic energy terms and potential terms. As mentioned before, one of the mean field theory hypotheses is that only the two-body interaction is to be taken into account. The potential term of the Hamiltonian represents all possible two-body interactions in the set of $n$ fermions. This is the first hypothesis.

The second step is to assume that the wavefunction of the system can be written as a Slater determinant of one-particle spin-orbitals. This statement is the mathematical translation of the independent-particle model. This is the second hypothesis.

It now remains to determine the components of this Slater determinant, that is, the individual wavefunctions of the nucleons. To this end, it is assumed that the total wavefunction (the Slater determinant) is the one for which the energy is minimal. This is the third hypothesis.

Technically, this means that one must compute the mean value of the (known) two-body Hamiltonian in the (unknown) Slater determinant, and require that its first-order variation vanish. This leads to a set of equations whose unknowns are the individual wavefunctions: the Hartree–Fock equations. Solving these equations gives the wavefunctions and individual energy levels of the nucleons, and hence the total energy of the nucleus and its wavefunction.
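In formulas, the three hypotheses lead to the equations below. This is a standard textbook form, written here for a Hamiltonian with one-body kinetic energy $t$ and a two-body interaction $v$, with orbitals $\varphi_i$ occupied for $i = 1,\dots,n$:

```latex
\begin{align*}
H &= \sum_{i=1}^{n} t_i + \frac{1}{2}\sum_{i \neq j} v_{ij},
\qquad
\Phi = \frac{1}{\sqrt{n!}}\,\det\big[\varphi_k(x_i)\big]_{i,k=1}^{n},\\
E[\Phi] &= \langle \Phi | H | \Phi \rangle
  = \sum_{i=1}^{n} \langle \varphi_i | t | \varphi_i \rangle
  + \frac{1}{2}\sum_{i,j=1}^{n}
    \Big( \langle \varphi_i \varphi_j | v | \varphi_i \varphi_j \rangle
        - \langle \varphi_i \varphi_j | v | \varphi_j \varphi_i \rangle \Big),\\
0 &= \delta\Big( E[\Phi] - \sum_{i=1}^{n} \varepsilon_i \langle \varphi_i | \varphi_i \rangle \Big)
  \quad\Longrightarrow\quad
  \big( t + U_{\mathrm{HF}} \big)\,\varphi_i = \varepsilon_i\,\varphi_i .
\end{align*}
```

Here $U_{\mathrm{HF}}$ is the direct (Hartree) minus exchange (Fock) potential generated by the occupied orbitals. The $\varepsilon_i$ are the Lagrange multipliers enforcing normalization; after a unitary transformation among the occupied orbitals, they play the role of the single-particle energies.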

This short account of the Hartree–Fock method explains why it is also called the variational approach. At the beginning of the calculation, the total energy is a "function of the individual wavefunctions" (a so-called functional). Everything is then done to optimize the choice of these wavefunctions so that the functional reaches a minimum, hopefully absolute and not only local. To be more precise, the energy is a functional of the density, defined as the sum of the squared moduli of the individual wavefunctions. The Hartree–Fock method is also used in atomic physics and condensed matter physics, where this density-based viewpoint is closely related to Density Functional Theory (DFT).

The process of solving the Hartree–Fock equations can only be iterative. These equations are in fact a Schrödinger equation in which the potential depends on the density, that is, precisely on the wavefunctions to be determined. In practice, the algorithm starts from a set of roughly reasonable individual wavefunctions (in general the eigenfunctions of a harmonic oscillator). These are used to compute the density, and from it the Hartree–Fock potential. Once this is done, the Schrödinger equation is solved anew, and so on. The calculation stops (convergence is reached) when the difference between the wavefunctions, or energy levels, of two successive iterations is less than a fixed value. The mean field potential is then completely determined, and the Hartree–Fock equations become standard Schrödinger equations. The corresponding Hamiltonian is called the Hartree–Fock Hamiltonian.
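The iteration can be sketched in a toy model. The sketch assumes one-dimensional spin-1/2 fermions in an external harmonic potential with a hypothetical zero-range repulsion of strength $g$. The mean field is then simply $V_{\mathrm{ext}}(x) + g\,\rho(x)$. This is a Hartree-like simplification: for a contact force in a spin-saturated system, the exchange term only rescales $g$. The loop starts from harmonic-oscillator eigenfunctions, recomputes the density and the potential, and stops when the single-particle energies change by less than a tolerance. Linear mixing of the old and new potentials is an added assumption, commonly used to stabilize such loops. Units are $\hbar = m = \omega = 1$; all names and parameter values are hypothetical.

```python
import numpy as np


def single_particle_levels(potential, dx, n_orbitals):
    """Lowest eigenpairs of -1/2 d^2/dx^2 + potential(x) by finite differences."""
    n = len(potential)
    off = -0.5 / dx**2 * np.ones(n - 1)
    H = np.diag(1.0 / dx**2 + potential) + np.diag(off, 1) + np.diag(off, -1)
    energies, vectors = np.linalg.eigh(H)
    # Normalize so that sum(|phi|^2) * dx == 1 on the grid.
    return energies[:n_orbitals], vectors[:, :n_orbitals] / np.sqrt(dx)


def self_consistent_field(n_orbitals=3, g=1.0, mix=0.5, tol=1e-9, max_iter=500):
    x = np.linspace(-8.0, 8.0, 400)
    dx = x[1] - x[0]
    v_ext = 0.5 * x**2                       # external confinement (assumption)
    # Starting guess: harmonic-oscillator orbitals (potential without interaction).
    energies, phi = single_particle_levels(v_ext, dx, n_orbitals)
    v_mf = v_ext.copy()
    for iteration in range(1, max_iter + 1):
        rho = 2.0 * np.sum(phi**2, axis=1)   # density: two spin states per orbital
        v_mf = mix * (v_ext + g * rho) + (1.0 - mix) * v_mf   # new mean field, mixed
        new_energies, phi = single_particle_levels(v_mf, dx, n_orbitals)
        if np.max(np.abs(new_energies - energies)) < tol:     # convergence test
            rho = 2.0 * np.sum(phi**2, axis=1)
            return x, new_energies, rho, iteration
        energies = new_energies
    raise RuntimeError("self-consistency not reached")


if __name__ == "__main__":
    x, energies, rho, iterations = self_consistent_field()
    print(iterations, energies, np.sum(rho) * (x[1] - x[0]))
```

Because the assumed interaction is repulsive, every converged level lies above the corresponding oscillator level. The density still integrates to the six particles placed in the three orbitals.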

The relativistic mean field approaches

Relativistic models of the nucleus were first developed in the 1970s, with the work of John Dirk Walecka on quantum hadrodynamics. They were refined towards the end of the 1980s by P. Ring and coworkers. The starting point of these approaches is relativistic quantum field theory. In this context, the nucleon interactions occur via the exchange of virtual particles called mesons. The first step is to build a Lagrangian containing these interaction terms. Second, applying the principle of least action yields a set of equations of motion. The real particles (here the nucleons) obey the Dirac equation, whilst the virtual ones (here the mesons) obey the Klein–Gordon equations.

The strong interaction is non-perturbative, and the exact potential form of this interaction between groups of nucleons is relatively poorly known. For both reasons, applying such an approach to atomic nuclei requires drastic approximations. The main simplification consists in replacing all field terms in the equations (which are operators in the mathematical sense) by their mean values (which are functions). In this way, one gets a system of coupled integro-differential equations, which can be solved numerically, if not analytically.

The interacting boson model

The interacting boson model (IBM) is a model in nuclear physics in which nucleons are represented as pairs, each of them acting as a boson particle with integral spin of 0, 2 or 4. This makes calculations feasible for larger nuclei. There are several branches of this model. In one of them (IBM-1), all types of nucleons are grouped in pairs; in others (for instance IBM-2), protons and neutrons are paired separately.

Spontaneous breaking of symmetry in nuclear physics

One of the focal points of all physics is symmetry. The nucleon–nucleon interaction and all effective interactions used in practice have certain symmetries. The interaction does not change under translation (changing the frame of reference so that directions are not altered), rotation (turning the frame of reference around some axis) or parity (changing the sense of the axes). Nevertheless, in the Hartree–Fock approach, solutions which are not invariant under such a symmetry can appear. One then speaks of spontaneous symmetry breaking.

Qualitatively, these spontaneous symmetry breakings can be explained as follows. In mean field theory, the nucleus is described as a set of independent particles, and most additional correlations among nucleons which do not enter the mean field are neglected. These correlations can, however, appear through a breaking of the symmetry of the mean field Hamiltonian, which is only approximate. Suppose the density used to start the Hartree–Fock iterations breaks certain symmetries. The final Hartree–Fock Hamiltonian may then break them too, if keeping them broken lowers the total energy.

The iteration may also converge towards a symmetric solution. Consider instead a final solution that breaks a symmetry, for example rotational symmetry, so that the nucleus appears not spherical but ellipsoidal. All configurations obtained from this deformed nucleus by a rotation are then equally good solutions of the Hartree–Fock problem. The ground state of the nucleus is then degenerate.

A similar phenomenon happens with nuclear pairing, which violates the conservation of particle number (see below).

Extensions of the mean field theories

Nuclear pairing phenomenon

The most common extension to mean field theory is nuclear pairing. Nuclei with an even number of nucleons are systematically more bound than those with an odd number. This implies that each nucleon binds with another one to form a pair. Consequently, the system cannot be described as independent particles subjected to a common mean field. When the nucleus has an even number of protons and neutrons, each one of them finds a partner. To excite such a system, one must supply at least enough energy to break a pair. Conversely, with an odd number of protons or neutrons there is an unpaired nucleon, which needs less energy to be excited.

This phenomenon is closely analogous to that of Type 1 superconductivity in solid state physics. The first theoretical description of nuclear pairing was proposed at the end of the 1950s by Aage Bohr, Ben Mottelson and David Pines. This work contributed to Bohr and Mottelson receiving the Nobel Prize in Physics in 1975. It was close to the BCS theory of Bardeen, Cooper and Schrieffer, which accounts for superconductivity in metals. Theoretically, the pairing phenomenon as described by BCS theory combines with mean field theory: nucleons are subject both to the mean field potential and to the pairing interaction.
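The BCS side of this picture can be illustrated with the textbook constant-strength pairing model. The sketch assumes equally spaced levels, each holding one time-reversed pair, and a constant pairing strength $G$. It fixes the chemical potential $\lambda$ halfway through the spectrum, so that the particle number is automatically half-filling. It then solves the gap equation $1 = \tfrac{G}{2}\sum_k 1/E_k$ for the gap $\Delta$ by bisection, with quasiparticle energies $E_k = \sqrt{(\varepsilon_k - \lambda)^2 + \Delta^2}$. The level spacing, the values of $G$ and the function names are hypothetical.

```python
import numpy as np


def gap_equation_residual(delta, levels, G, lam):
    """(G/2) * sum_k 1/E_k - 1; zero at the BCS gap."""
    quasiparticle = np.sqrt((levels - lam) ** 2 + delta ** 2)
    return 0.5 * G * np.sum(1.0 / quasiparticle) - 1.0


def bcs_gap(levels, G, lam, iterations=200):
    """Solve the BCS gap equation for Delta at fixed chemical potential (lam not on a level)."""
    levels = np.asarray(levels, dtype=float)
    low, high = 1e-12, G * len(levels)      # residual < 0 at high, since E_k >= delta
    if gap_equation_residual(low, levels, G, lam) <= 0:
        return 0.0                          # pairing too weak: only the trivial solution
    for _ in range(iterations):             # residual decreases monotonically in delta
        mid = 0.5 * (low + high)
        if gap_equation_residual(mid, levels, G, lam) > 0:
            low = mid
        else:
            high = mid
    return 0.5 * (low + high)


def particle_number(delta, levels, lam):
    """N = sum_k 2 v_k^2 = sum_k [1 - (e_k - lam) / E_k]."""
    quasiparticle = np.sqrt((levels - lam) ** 2 + delta ** 2)
    return np.sum(1.0 - (levels - lam) / quasiparticle)


if __name__ == "__main__":
    levels = np.arange(10.0)       # hypothetical equally spaced levels (unit spacing)
    lam = 4.5                      # halfway: 10 particles in 20 available states
    for G in (0.2, 0.5):
        delta = bcs_gap(levels, G, lam)
        print(G, delta, particle_number(delta, levels, lam))
```

Every quasiparticle energy satisfies $E_k \ge \Delta$, so breaking a pair (creating two quasiparticles) costs at least $2\Delta$. This is the BCS counterpart of the statement that exciting an even system requires breaking a pair. Below a critical strength, only the trivial solution $\Delta = 0$ survives.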

The Hartree–Fock–Bogolyubov (HFB) method is a more sophisticated approach, enabling one to treat the pairing and mean field interactions consistently and on an equal footing. HFB is now the de facto standard in the mean field treatment of nuclear systems.

Symmetry restoration

A peculiarity of mean field methods is that nuclear properties are calculated through explicit symmetry breaking. The calculation of the mean field with self-consistent methods (e.g. Hartree–Fock) breaks rotational symmetry, and the calculation of pairing properties breaks particle-number conservation. Several techniques for symmetry restoration by projecting on good quantum numbers have been developed.

Particle vibration coupling

Mean field methods (possibly including symmetry restoration) are a good approximation for the ground state of the system, even though they postulate a system of independent particles. Higher-order corrections account for the fact that the particles interact with each other through correlations. These correlations can be introduced through the coupling of independent-particle degrees of freedom to low-energy collective excitations of systems with even numbers of protons and neutrons. In this way, excited states can be reproduced by means of the random phase approximation (RPA). Corrections to the ground state can also be calculated consistently (e.g. by means of nuclear field theory).
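In matrix form, RPA excitation energies $\omega$ are the positive eigenvalues of the non-Hermitian problem $\begin{pmatrix} A & B \\ -B & -A \end{pmatrix}\begin{pmatrix} X \\ Y \end{pmatrix} = \omega \begin{pmatrix} X \\ Y \end{pmatrix}$. Here $A$ and $B$ are particle-hole matrices built from the mean field and the residual interaction. The sketch below assumes small, real, symmetric $A$ and $B$ with made-up entries. It checks the known identity that the $\omega^2$ are the eigenvalues of $(A-B)(A+B)$. Setting $B = 0$ recovers the Tamm–Dancoff approximation. The function name is hypothetical.

```python
import numpy as np


def rpa_energies(A, B):
    """Positive eigenvalues of the RPA matrix [[A, B], [-B, -A]] (real symmetric A, B)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    M = np.block([[A, B], [-B, -A]])
    w = np.linalg.eigvals(M)               # eigenvalues come in +/- omega pairs
    return np.sort(w.real[w.real > 0])


# Made-up particle-hole matrices (energies in arbitrary units).
A = np.array([[2.0, 0.3], [0.3, 3.0]])
B = np.array([[0.5, 0.1], [0.1, 0.4]])

omega = rpa_energies(A, B)
omega_check = np.sort(np.sqrt(np.linalg.eigvals((A - B) @ (A + B)).real))
tda = np.linalg.eigvalsh(A)                # B = 0: Tamm-Dancoff approximation

if __name__ == "__main__":
    print(omega, omega_check, tda)
```

With these particular made-up matrices, the RPA energies lie slightly below the Tamm–Dancoff ones. The difference comes from the $B$ terms, which carry the ground-state correlations.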