A social network diagram displaying friendship ties among a set of Facebook users.

Social network analysis (SNA) is the process of investigating social structures through the use of networks and graph theory. It characterizes networked structures in terms of nodes (individual actors, people, or things within the network) and the ties, edges, or links (relationships or interactions) that connect them. Examples of social structures commonly visualized through social network analysis include social media networks, meme spread, information circulation, friendship and acquaintance networks, business networks, social networks, collaboration graphs, kinship, disease transmission, and sexual relationships. These networks are often visualized through sociograms in which nodes are represented as points and ties are represented as lines.

Social network analysis has emerged as a key technique in modern sociology. It has also gained a significant following in anthropology, biology, demography, communication studies, economics, geography, history, information science, organizational studies, political science, social psychology, development studies, sociolinguistics, and computer science, and it is now commonly available as a consumer tool.

History

Social network analysis has its theoretical roots in the work of early sociologists such as Georg Simmel and Émile Durkheim, who wrote about the importance of studying patterns of relationships that connect social actors. Social scientists have used the concept of "social networks" since early in the 20th century to connote complex sets of relationships between members of social systems at all scales, from interpersonal to international. In the 1930s Jacob Moreno and Helen Jennings introduced basic analytical methods. In 1954, John Arundel Barnes started using the term systematically to denote patterns of ties, encompassing concepts traditionally used by the public and those used by social scientists: bounded groups (e.g., tribes, families) and social categories (e.g., gender, ethnicity). Scholars such as Ronald Burt, Kathleen Carley, Mark Granovetter, David Krackhardt, Edward Laumann, Anatol Rapoport, Barry Wellman, Douglas R. White, and Harrison White expanded the use of systematic social network analysis. Even in the study of literature, network analysis has been applied by Anheier, Gerhards and Romo, Wouter De Nooy, and Burgert Senekal.

Indeed, social network analysis has found applications in various academic disciplines, as well as practical applications such as countering money laundering and terrorism.

Metrics

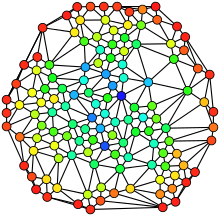

Hue (from red=0 to blue=max) indicates each node's betweenness centrality.

Connections

- Homophily: The extent to which actors form ties with similar versus dissimilar others. Similarity can be defined by gender, race, age, occupation, educational achievement, status, values or any other salient characteristic. Homophily is also referred to as assortativity.

- Multiplexity: The number of content-forms contained in a tie. For example, two people who are friends and also work together would have a multiplexity of 2. Multiplexity has been associated with relationship strength.

- Mutuality/Reciprocity: The extent to which two actors reciprocate each other's friendship or other interaction.

- Network Closure: A measure of the completeness of relational triads. An individual's assumption of network closure (i.e. that their friends are also friends) is called transitivity. Transitivity is an outcome of the individual or situational trait of Need for Cognitive Closure.

- Propinquity: The tendency for actors to have more ties with geographically close others.
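Several of the connection measures above reduce to simple counts over ties. The sketch below is a minimal, standard-library-only illustration, assuming ties are given as `(u, v)` pairs; the function names are hypothetical. `homophily_share` is a plain same-attribute proportion, not the formal assortativity coefficient, and `transitivity` computes network closure as closed triads divided by connected triads.

```python
from itertools import combinations


def reciprocity(arcs):
    """Share of directed ties (u, v) whose reverse tie (v, u) is also present."""
    arc_set = set(arcs)
    if not arc_set:
        return 0.0
    mutual = sum(1 for u, v in arc_set if (v, u) in arc_set)
    return mutual / len(arc_set)


def transitivity(edges):
    """Closed triads / connected triads, counting each triad at its centre node."""
    nbrs = {}
    for u, v in edges:
        nbrs.setdefault(u, set()).add(v)
        nbrs.setdefault(v, set()).add(u)
    closed = triads = 0
    for friends in nbrs.values():
        for a, b in combinations(friends, 2):
            triads += 1
            if b in nbrs[a]:
                closed += 1  # the two friends are also tied: the triad is closed
    return closed / triads if triads else 0.0


def homophily_share(edges, attribute):
    """Share of undirected ties joining two actors with the same attribute value."""
    if not edges:
        return 0.0
    same = sum(1 for u, v in edges if attribute[u] == attribute[v])
    return same / len(edges)


def multiplexity(layers, u, v):
    """Number of relation types (layers) in which u and v are tied, in either direction."""
    return sum(1 for ties in layers.values() if (u, v) in ties or (v, u) in ties)
```

For the multiplexity example in the text, two people who are tied in both a hypothetical `"friend"` layer and a `"coworker"` layer get a multiplexity of 2.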

Distributions

- Bridge: An individual whose weak ties fill a structural hole, providing the only link between two individuals or clusters. The term also covers the shortest route when a longer one is unfeasible due to a high risk of message distortion or delivery failure.

- Centrality: Centrality refers to a group of metrics that aim to quantify the "importance" or "influence" (in a variety of senses) of a particular node (or group) within a network. Examples of common methods of measuring "centrality" include betweenness centrality, closeness centrality, eigenvector centrality, alpha centrality, and degree centrality.

- Density: The proportion of direct ties in a network relative to the total number possible.

- Distance: The minimum number of ties required to connect two particular actors, as popularized by Stanley Milgram's small world experiment and the idea of 'six degrees of separation'.

- Structural holes: The absence of ties between two parts of a network. Finding and exploiting a structural hole can give an entrepreneur a competitive advantage. This concept was developed by sociologist Ronald Burt, and is sometimes referred to as an alternate conception of social capital.

- Tie Strength: Defined by a linear combination of time, emotional intensity, intimacy and reciprocity (i.e. mutuality). Strong ties are associated with homophily, propinquity and transitivity, while weak ties are associated with bridges.
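Density, distance, and two of the simpler centrality measures can be sketched in a few lines. This is a minimal illustration, assuming an undirected, unweighted network given as `(u, v)` pairs and using only the Python standard library; the function names are hypothetical. Distance is found by breadth-first search, and the closeness variant here simply skips unreachable actors, which is only one of several conventions in use.

```python
from collections import deque


def neighbour_sets(edges):
    """Adjacency sets for an undirected network given as (u, v) pairs."""
    nbrs = {}
    for u, v in edges:
        nbrs.setdefault(u, set()).add(v)
        nbrs.setdefault(v, set()).add(u)
    return nbrs


def density(nodes, edges):
    """Ties present divided by ties possible, n(n - 1) / 2 for an undirected network."""
    n = len(nodes)
    possible = n * (n - 1) / 2
    present = len({frozenset(e) for e in edges})  # (u, v) and (v, u) count once
    return present / possible if possible else 0.0


def distances_from(source, nbrs):
    """Breadth-first search: minimum number of ties from source to each reachable actor."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in nbrs.get(u, ()):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def degree_centrality(nodes, nbrs):
    """Number of direct ties, normalised by the n - 1 ties an actor could have."""
    n = len(nodes)
    if n < 2:
        return {x: 0.0 for x in nodes}
    return {x: len(nbrs.get(x, ())) / (n - 1) for x in nodes}


def closeness_centrality(nodes, nbrs):
    """(reachable actors) / (sum of distances to them); unreachable actors are skipped."""
    result = {}
    for x in nodes:
        dist = distances_from(x, nbrs)
        total = sum(dist.values())
        result[x] = (len(dist) - 1) / total if total else 0.0
    return result
```

On a four-person chain A–B–C–D, for instance, the density is 3 of 6 possible ties, and the middle actors score higher than the ends on both centrality measures.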

Segmentation

Groups are identified as 'cliques' if every individual is directly tied to every other individual, as 'social circles' if the requirement of direct contact is relaxed (a looser, imprecise notion), or as 'structurally cohesive blocks' if precision is wanted.

- Clustering coefficient: A measure of the likelihood that two associates of a node are themselves associates. A higher clustering coefficient indicates a greater 'cliquishness'.

- Cohesion: The degree to which actors are connected directly to each other by cohesive bonds. Structural cohesion refers to the minimum number of members who, if removed from a group, would disconnect the group.
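The segmentation measures can be checked directly on small groups. The sketch below is a minimal illustration, assuming an undirected network given as `(u, v)` pairs; all function names are hypothetical. `structural_cohesion` tries every removal set by brute force, so it is only meant for groups of a handful of members; dedicated graph libraries use flow-based algorithms instead.

```python
from itertools import combinations


def neighbour_sets(edges):
    """Adjacency sets for an undirected network given as (u, v) pairs."""
    nbrs = {}
    for u, v in edges:
        nbrs.setdefault(u, set()).add(v)
        nbrs.setdefault(v, set()).add(u)
    return nbrs


def local_clustering(node, nbrs):
    """Fraction of pairs of the node's associates who are themselves associates."""
    friends = list(nbrs.get(node, ()))
    k = len(friends)
    if k < 2:
        return 0.0
    linked = sum(1 for a, b in combinations(friends, 2) if b in nbrs[a])
    return linked / (k * (k - 1) / 2)


def is_clique(group, nbrs):
    """True if every member of the group is directly tied to every other member."""
    return all(b in nbrs.get(a, ()) for a, b in combinations(group, 2))


def is_connected(members, nbrs):
    """Depth-first search that only walks through the given members."""
    members = set(members)
    if not members:
        return True
    start = next(iter(members))
    seen, stack = {start}, [start]
    while stack:
        u = stack.pop()
        for v in nbrs.get(u, ()):
            if v in members and v not in seen:
                seen.add(v)
                stack.append(v)
    return seen == members


def structural_cohesion(group, nbrs):
    """Smallest number of members whose removal disconnects the group.

    Brute force over removal sets (small groups only). If no removal can
    disconnect the rest, as in a clique, return len(group) - 1 by convention.
    """
    group = list(group)
    for k in range(len(group) - 1):
        for removed in combinations(group, k):
            rest = [x for x in group if x not in removed]
            if not is_connected(rest, nbrs):
                return k
    return max(len(group) - 1, 0)
```

Two triangles that share a single member have a structural cohesion of 1, because removing the shared member splits the group, whereas a four-person clique needs three removals.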

Modelling and visualization of networks

Visual representation of social networks is important for understanding the network data and conveying the results of the analysis. Numerous methods of visualization for data produced by social network analysis have been presented. Many analytic software packages have modules for network visualization. The data are explored by displaying nodes and ties in various layouts and by attributing colors, sizes and other advanced properties to nodes. Visual representations of networks may be a powerful method for conveying complex information, but care should be taken in interpreting node and graph properties from visual displays alone, as they may misrepresent structural properties better captured through quantitative analyses.

Signed graphs can be used to illustrate good and bad relationships between humans. A positive edge between two nodes denotes a positive relationship (friendship, alliance, dating) and a negative edge between two nodes denotes a negative relationship (hatred, anger). Signed social network graphs can be used to predict the future evolution of the graph. In signed social networks, cycles are classified as "balanced" or "unbalanced". A balanced cycle is defined as a cycle where the product of all the signs is positive. According to balance theory, balanced graphs represent a group of people who are unlikely to change their opinions of the other people in the group. Unbalanced graphs represent a group of people who are very likely to change their opinions of the people in their group. For example, a group of three people (A, B, and C) where A and B have a positive relationship, B and C have a positive relationship, but C and A have a negative relationship is an unbalanced cycle, because the product of its signs (positive, positive, negative) is negative. This group is very likely to morph into a balanced cycle, such as one where B only has a good relationship with A, and both A and B have a negative relationship with C. By using the concept of balanced and unbalanced cycles, the evolution of signed social network graphs can be predicted.
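The balance rule can be applied mechanically to every triangle in a signed network. The sketch below is a minimal illustration, assuming each relationship is coded as `+1` or `-1` and listed once per pair as `(u, v, sign)`; the function names are hypothetical, and only three-person cycles are examined, not longer ones.

```python
from itertools import combinations


def is_balanced_cycle(signs):
    """A cycle is balanced when the product of its edge signs (+1 / -1) is positive."""
    product = 1
    for s in signs:
        product *= s
    return product > 0


def unbalanced_triads(signed_edges):
    """Return every fully tied triangle whose three signs multiply to a negative number."""
    sign = {frozenset((u, v)): s for u, v, s in signed_edges}
    nodes = sorted({x for u, v, _ in signed_edges for x in (u, v)})
    unbalanced = []
    for a, b, c in combinations(nodes, 3):
        sides = [frozenset((a, b)), frozenset((b, c)), frozenset((a, c))]
        if all(side in sign for side in sides) and not is_balanced_cycle(sign[side] for side in sides):
            unbalanced.append((a, b, c))
    return unbalanced
```

Run on the example above, the original A, B, C group is reported as unbalanced, while the version in which both A and B dislike C is not.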

Especially when using social network analysis as a tool for facilitating change, different approaches to participatory network mapping have proven useful. Here participants or interviewers provide network data by actually mapping out the network (with pen and paper or digitally) during the data collection session. An example of a pen-and-paper network mapping approach, which also includes the collection of some actor attributes (perceived influence and goals of actors), is the Net-map toolbox.

One benefit of this approach is that it allows researchers to collect qualitative data and ask clarifying questions while the network data is collected.

Social networking potential

Social Networking Potential (SNP) is a numeric coefficient, derived through algorithms to represent both the size of an individual's social network and their ability to influence that network. SNP coefficients were first defined and used by Bob Gerstley in 2002. A closely related term is Alpha User, defined as a person with a high SNP.

SNP coefficients have two primary functions:

- The classification of individuals based on their social networking potential, and

- The weighting of respondents in quantitative marketing research studies.

By calculating the SNP of respondents and by targeting high-SNP respondents, the strength and relevance of quantitative marketing research used to drive viral marketing strategies is enhanced.

Variables used to calculate an individual's SNP include but are not limited to: participation in social networking activities, group memberships, leadership roles, recognition, publication/editing/contributing to non-electronic media, publication/editing/contributing to electronic media (websites, blogs), and frequency of past distribution of information within their network. The acronym "SNP" and some of the first algorithms developed to quantify an individual's social networking potential were described in the white paper "Advertising Research is Changing" (Gerstley, 2003); see also viral marketing.
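The published SNP algorithms are not given here, so the following is only a hypothetical sketch of how a coefficient built from the listed variables could serve the two functions named earlier: classification and respondent weighting. It assumes a simple weighted sum; the variable keys, the weights, and the alpha-user threshold are all invented for illustration and are not Gerstley's method.

```python
# HYPOTHETICAL weights, chosen only for illustration; they do NOT reproduce
# the published SNP algorithms (Gerstley, 2003).
SNP_WEIGHTS = {
    "social_networking_activities": 1.0,
    "group_memberships": 0.5,
    "leadership_roles": 2.0,
    "recognition": 1.0,
    "non_electronic_media_contributions": 1.5,
    "electronic_media_contributions": 1.5,
    "past_distribution_frequency": 2.0,
}


def snp_score(profile, weights=SNP_WEIGHTS):
    """Weighted sum of a respondent's variable counts; unreported variables count as 0."""
    return sum(weight * profile.get(name, 0) for name, weight in weights.items())


def is_alpha_user(profile, threshold=10.0):
    """Classification use: flag respondents at or above a hypothetical SNP threshold."""
    return snp_score(profile) >= threshold


def snp_weighted_mean(answers, profiles):
    """Weighting use: average survey answers, giving high-SNP respondents more weight."""
    scores = [snp_score(p) for p in profiles]
    total = sum(scores)
    if total == 0:
        raise ValueError("every respondent has an SNP score of zero")
    return sum(a * s for a, s in zip(answers, scores)) / total
```

A weighted sum keeps each variable's contribution visible; any real scheme would need weights validated against observed influence.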

The first book to discuss the commercial use of Alpha Users among mobile telecoms audiences was 3G Marketing by Ahonen, Kasper and Melkko in 2004. The first book to discuss Alpha Users more generally in the context of social marketing intelligence was Communities Dominate Brands by Ahonen & Moore in 2005. In 2012, Nicola Greco (UCL) presented Social Networking Potential at TEDx as analogous to the potential energy that users generate and that companies should use, stating that "SNP is the new asset that every company should aim to have".

Practical applications

Social network analysis is used extensively in a wide range of applications and disciplines. Some common network analysis applications include data aggregation and mining, network propagation modeling, network modeling and sampling, user attribute and behavior analysis, community-maintained resource support, location-based interaction analysis, social sharing and filtering, recommender systems development, and link prediction and entity resolution. In the private sector, businesses use social network analysis to support activities such as customer interaction and analysis, information system development analysis, marketing, and business intelligence needs.

Some public sector uses include development of leader engagement strategies, analysis of individual and group engagement and media use, and community-based problem solving.

Security applications

Social network analysis is also used in intelligence, counter-intelligence and law enforcement activities. This technique allows analysts to map covert organizations such as an espionage ring, an organized crime family or a street gang. The National Security Agency (NSA) uses its clandestine mass electronic surveillance programs to generate the data needed to perform this type of analysis on terrorist cells and other networks deemed relevant to national security. The NSA looks up to three nodes deep during this network analysis.

After the initial mapping of the social network is complete, analysis is performed to determine the structure of the network and to identify, for example, the leaders within the network. This allows military or law enforcement assets to launch capture-or-kill decapitation attacks on the high-value targets in leadership positions to disrupt the functioning of the network.

The NSA has been performing social network analysis on call detail records (CDRs), also known as metadata, since shortly after the September 11 attacks.

Textual analysis applications

Large textual corpora can be turned into networks and then analyzed with the method of social network analysis. In these networks, the nodes are social actors, and the links are actions. The extraction of these networks can be automated by using parsers. The resulting networks, which can contain thousands of nodes, are then analyzed by using tools from network theory to identify the key actors, the key communities or parties, and general properties such as robustness or structural stability of the overall network, or centrality of certain nodes. This automates the approach introduced by Quantitative Narrative Analysis, whereby subject-verb-object triplets are identified with pairs of actors linked by an action, or pairs formed by actor-object.
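Once subject-verb-object triplets have been extracted, turning them into an actor network is a counting exercise. The sketch below is a minimal illustration that assumes the parsing step has already produced `(subject, verb, object)` tuples; the actor names in the usage note are placeholders, not real data, and ranking by link count is only the simplest of the centrality measures mentioned above. Actor-object pairs are treated the same way as actor-actor pairs here.

```python
from collections import Counter


def narrative_network(triplets):
    """Count each (subject, verb, object) triplet as a directed, action-labelled link."""
    return Counter(triplets)


def key_actors(links, top=3):
    """Rank actors by how many action links they take part in (a simple degree count)."""
    degree = Counter()
    for (subject, _verb, obj), count in links.items():
        degree[subject] += count
        degree[obj] += count
    return degree.most_common(top)
```

For example, triplets such as `("CandidateA", "attacks", "CandidateB")` and `("RunningMate", "supports", "CandidateB")` would make `CandidateB` the most connected actor if it appears in the most links.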

Narrative network of US Elections 2012

Internet applications

Social network analysis has also been applied to understanding online behavior by individuals and organizations, and between websites. Hyperlink analysis can be used to analyze the connections between websites or webpages to examine how information flows as individuals navigate the web. Connections between organizations have been analyzed via hyperlink analysis to examine which organizations within an issue community are linked to one another.

Social Media Internet Applications

Social network analysis has been applied to social media as a tool to understand behavior between individuals or organizations through their linkages on social media websites such as Twitter and Facebook.

In computer-supported collaborative learning

One of the most recent applications of SNA is the study of computer-supported collaborative learning (CSCL). When applied to CSCL, SNA is used to help understand how learners collaborate in terms of amount, frequency, and length, as well as the quality, topic, and strategies of communication.

Additionally, SNA can focus on specific aspects of the network connection, or the entire network as a whole. It uses graphical representations, written representations, and data representations to help examine the connections within a CSCL network.

When applying SNA to a CSCL environment, the interactions of the participants are treated as a social network. The focus of the analysis is on the "connections" made among the participants – how they interact and communicate – as opposed to how each participant behaved on his or her own.

Key terms

There are several key terms associated with social network analysis research in computer-supported collaborative learning, such as density, centrality, in-degree, out-degree, and sociogram.

- Density refers to the "connections" between participants. In this usage, a participant's density is the number of connections the participant has, divided by the total possible connections the participant could have. For example, if there are 20 people participating, each person could potentially connect to 19 other people. A density of 100% (19/19) is the greatest density in the system. A density of about 5% (1/19) indicates there is only 1 of 19 possible connections.

- Centrality focuses on the behavior of individual participants within a network. It measures the extent to which an individual interacts with other individuals in the network. The more an individual connects to others in a network, the greater their centrality in the network.

In-degree and out-degree variables are related to centrality.

- In-degree centrality concentrates on a specific individual as the point of focus and is concerned with the incoming interactions of that individual, that is, how often all other individuals direct interactions toward the focal "in-degree" individual.

- Out-degree is a measure of centrality that still focuses on a single individual, but the analytic is concerned with the out-going interactions of the individual; the measure of out-degree centrality is how many times the focus point individual interacts with others.

- A sociogram is a visualization with defined boundaries of connections in the network. For example, a sociogram which shows out-degree centrality points for Participant A would illustrate all outgoing connections Participant A made in the studied network.
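The key terms above can be computed from a simple message log. The sketch below is a minimal illustration, assuming each forum post or reply is recorded as a `(sender, recipient)` pair; the log format and function names are hypothetical. Density follows the per-participant definition given above (distinct partners divided by n − 1), while in-degree and out-degree count individual interactions received and sent.

```python
from collections import defaultdict


def cscl_measures(messages, participants):
    """Per-participant density, in-degree and out-degree from (sender, recipient) records.

    density    = distinct partners / (n - 1), as defined in the key terms
    in-degree  = interactions received; out-degree = interactions sent
    """
    n = len(participants)
    partners = defaultdict(set)
    in_degree = dict.fromkeys(participants, 0)
    out_degree = dict.fromkeys(participants, 0)
    for sender, recipient in messages:
        if sender == recipient:
            continue  # ignore self-addressed posts
        out_degree[sender] += 1
        in_degree[recipient] += 1
        partners[sender].add(recipient)
        partners[recipient].add(sender)
    density = {p: len(partners[p]) / (n - 1) for p in participants}
    return density, in_degree, out_degree


def sociogram_out_ties(messages, participant):
    """Outgoing ties of one participant, i.e. what an out-degree sociogram would draw."""
    return sorted({r for s, r in messages if s == participant and r != participant})
```

With 20 participants and a single message from one person to another, the sender's density comes out as 1/19 (about 5%), matching the example in the key terms.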

Unique capabilities

Researchers employ social network analysis in the study of computer-supported collaborative learning in part due to the unique capabilities it offers. This particular method allows the study of interaction patterns within a networked learning community and can help illustrate the extent of the participants' interactions with the other members of the group. The graphics created using SNA tools provide visualizations of the connections among participants and the strategies used to communicate within the group. Some authors also suggest that SNA provides a method of easily analyzing changes in participatory patterns of members over time.

A number of research studies have applied SNA to CSCL across a variety of contexts. The findings include the correlation between a network's density and the teacher's presence, a greater regard for the recommendations of "central" participants, infrequency of cross-gender interaction in a network, and the relatively small role played by an instructor in an asynchronous learning network.

Other methods used alongside SNA

Although many studies have demonstrated the value of social network analysis within the computer-supported collaborative learning field, researchers have suggested that SNA by itself is not enough for achieving a full understanding of CSCL. The complexity of the interaction processes and the myriad sources of data make it difficult for SNA to provide an in-depth analysis of CSCL.

Researchers indicate that SNA needs to be complemented with other methods of analysis to form a more accurate picture of collaborative learning experiences.

A number of research studies have combined other types of analysis with SNA in the study of CSCL. This can be referred to as a multi-method approach or data triangulation, which can increase the reliability of evaluation in CSCL studies.

- Qualitative method – The principles of qualitative case study research constitute a solid framework for the integration of SNA methods in the study of CSCL experiences.

  - Ethnographic data such as student questionnaires and interviews and classroom non-participant observations

  - Case studies: comprehensively study particular CSCL situations and relate findings to general schemes

  - Content analysis: offers information about the content of the communication among members

- Quantitative method – This includes simple descriptive statistical analyses of occurrences, used to identify particular attitudes of group members that could not be tracked via SNA and to detect general tendencies.

  - Computer log files: provide automatic data on how collaborative tools are used by learners

  - Multidimensional scaling (MDS): charts similarities among actors, so that more similar input data is closer together

  - Software tools: QUEST, SAMSA (System for Adjacency Matrix and Sociogram-based Analysis), and Nud*IST