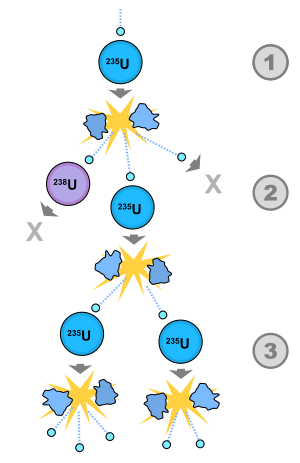

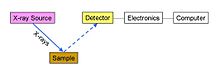

A possible nuclear fission chain reaction. 1. A uranium-235 atom absorbs a neutron, and fissions into two new atoms (fission fragments), releasing three new neutrons and a large amount of binding energy. 2. One of those neutrons is absorbed by an atom of uranium-238, and does not continue the reaction. Another neutron leaves the system without being absorbed. However, one neutron does collide with an atom of uranium-235, which then fissions and releases two neutrons and more binding energy. 3. Both of those neutrons collide with uranium-235 atoms, each of which fissions and releases a few neutrons, which can then continue the reaction.

A nuclear chain reaction occurs when one single nuclear reaction causes an average of one or more subsequent nuclear reactions, thus leading to the possibility of a self-propagating series of these reactions. The specific nuclear reaction may be the fission of heavy isotopes (e.g., uranium-235, ²³⁵U). The nuclear chain reaction releases several million times more energy per reaction than any chemical reaction.

History

Chemical chain reactions were first proposed by German chemist Max Bodenstein in 1913, and were reasonably well understood before nuclear chain reactions were proposed. It was understood that chemical chain reactions were responsible for exponentially increasing rates in reactions, such as those produced in chemical explosions.

The concept of a nuclear chain reaction was reportedly first hypothesized by Hungarian scientist Leó Szilárd on September 12, 1933.

Szilárd that morning had been reading in a London paper of an experiment in which protons from an accelerator had been used to split lithium-7 into alpha particles, and the fact that much greater amounts of energy were produced by the reaction than the proton supplied. Ernest Rutherford commented in the article that inefficiencies in the process precluded use of it for power generation. However, the neutron had been discovered in 1932, shortly before, as the product of a nuclear reaction. Szilárd, who had been trained as an engineer and physicist, put the two nuclear experimental results together in his mind and realized that if a nuclear reaction produced neutrons, which then caused further similar nuclear reactions, the process might be a self-perpetuating nuclear chain-reaction, spontaneously producing new isotopes and power without the need for protons or an accelerator. Szilárd, however, did not propose fission as the mechanism for his chain reaction, since the fission reaction was not yet discovered, or even suspected. Instead, Szilárd proposed using mixtures of lighter known isotopes which produced neutrons in copious amounts. He filed a patent for his idea of a simple nuclear reactor the following year.

In 1936, Szilárd attempted to create a chain reaction using beryllium and indium, but was unsuccessful. Nuclear fission was discovered and proved by Otto Hahn and Fritz Strassmann in December 1938. A few months later, Frédéric Joliot, H. von Halban and L. Kowarski in Paris searched for, and discovered, neutron multiplication in uranium, proving that a nuclear chain reaction by this mechanism was indeed possible.

On May 4, 1939, Joliot, Halban and Kowarski filed three patents. The first two described power production from a nuclear chain reaction; the last one, called "Perfectionnement aux charges explosives", was the first patent for the atomic bomb and was filed as patent n°445686 by the Caisse nationale de Recherche Scientifique.

In parallel, Szilárd and Enrico Fermi in New York made the same analysis. This discovery prompted the letter written by Szilárd and signed by Albert Einstein to President Franklin D. Roosevelt, warning of the possibility that Nazi Germany might be attempting to build an atomic bomb.

On December 2, 1942, a team led by Enrico Fermi (and including Szilárd) produced the first artificial self-sustaining nuclear chain reaction with the Chicago Pile-1 (CP-1) experimental reactor in a racquets court below the bleachers of Stagg Field at the University of Chicago. Fermi's experiments at the University of Chicago were part of Arthur H. Compton's Metallurgical Laboratory of the Manhattan Project; the lab was later renamed Argonne National Laboratory, and tasked with conducting research in harnessing fission for nuclear energy.

In 1956, Paul Kuroda of the University of Arkansas postulated that a natural fission reactor may have once existed. Since nuclear chain reactions may only require natural materials (such as water and uranium, if the uranium has sufficient amounts of U-235), it was possible to have these chain reactions occur in the distant past when uranium-235 concentrations were higher than today, and where there was the right combination of materials within the Earth's crust.

Kuroda's prediction was verified with the discovery of evidence of natural self-sustaining nuclear chain reactions in the past at Oklo in Gabon, Africa, in September 1972.
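The claim that uranium-235 concentrations were higher in the distant past follows from the two isotopes decaying at different rates. The sketch below runs the decay law backwards in time. The half-lives, the present-day abundance, and the two-billion-year look-back are assumed round figures chosen for illustration, not values taken from this article, and the trace isotope U-234 is ignored.

```python
# Assumed inputs (commonly quoted round values, not taken from the article).
HALF_LIFE_U235_YEARS = 7.04e8
HALF_LIFE_U238_YEARS = 4.468e9
U235_FRACTION_TODAY = 0.0072  # present-day atom fraction of U-235


def u235_fraction_in_past(years_ago: float) -> float:
    """Estimate the U-235 atom fraction of natural uranium in the past.

    Going back in time, the amount of each isotope grows by a factor of
    2 ** (years_ago / half_life), so the shorter-lived U-235 grows faster.
    """
    n235 = U235_FRACTION_TODAY * 2.0 ** (years_ago / HALF_LIFE_U235_YEARS)
    n238 = (1.0 - U235_FRACTION_TODAY) * 2.0 ** (years_ago / HALF_LIFE_U238_YEARS)
    return n235 / (n235 + n238)


for years_ago in (0.0, 1.0e9, 2.0e9):
    percent = 100.0 * u235_fraction_in_past(years_ago)
    print(f"{years_ago:.1e} years ago: about {percent:.2f}% U-235")
```

Under these assumptions the fraction two billion years ago comes out at several percent, comparable to the enrichment of fuel used in many present-day power reactors.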

Fission chain reaction

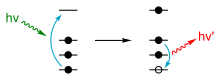

Fission chain reactions occur because of interactions between neutrons and fissile isotopes (such as ²³⁵U). The chain reaction requires both the release of neutrons from fissile isotopes undergoing nuclear fission and the subsequent absorption of some of these neutrons in fissile isotopes. When an atom undergoes nuclear fission, a few neutrons are ejected from the reaction (the exact number in any single fission cannot be controlled or predicted; the average number depends on several factors and is usually between 2.5 and 3.0). These free neutrons will then interact with the surrounding medium, and if more fissile fuel is present, some may be absorbed and cause more fissions. Thus, the cycle repeats to give a reaction that is self-sustaining.

Nuclear power plants operate by precisely controlling the rate at which nuclear reactions occur, and that control is maintained through the use of several redundant layers of safety measures. Moreover, the materials in a nuclear reactor core and the uranium enrichment level make a nuclear explosion impossible, even if all safety measures failed. On the other hand, nuclear weapons are specifically engineered to produce a reaction that is so fast and intense it cannot be controlled after it has started. When properly designed, this uncontrolled reaction can lead to an explosive energy release.

Nuclear fission fuel

Nuclear weapons employ high-quality, highly enriched fuel exceeding the critical size and geometry (critical mass) needed to obtain an explosive chain reaction. The fuel for energy purposes, such as in a nuclear fission reactor, is very different, usually consisting of a low-enriched oxide material (e.g. UO₂).

Fission reaction products

When a fissile atom undergoes nuclear fission, it breaks into two or more fission fragments. Also, several free neutrons, gamma rays, and neutrinos are emitted, and a large amount of energy is released. The sum of the rest masses of the fission fragments and ejected neutrons is less than the sum of the rest masses of the original atom and incident neutron (of course the fission fragments are not at rest). The mass difference is accounted for in the release of energy according to the equation $E = \Delta m c^2$:

$$\text{mass of released energy} = \frac{E}{c^2} = m_\text{original} - m_\text{final}$$

Due to the extremely large value of the speed of light, c, a small decrease in mass is associated with a tremendous release of active energy (for example, the kinetic energy of the fission fragments). This energy (in the form of radiation and heat) carries the missing mass when it leaves the reaction system (total mass, like total energy, is always conserved). While typical chemical reactions release energies on the order of a few eV (e.g. the binding energy of the electron to hydrogen is 13.6 eV), nuclear fission reactions typically release energies on the order of hundreds of millions of eV.
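To give a sense of scale for the mass-energy relation above, the following sketch converts a fission energy into the equivalent mass decrease. The figure of 200 MeV per fission is an assumed round value consistent with "hundreds of millions of eV"; the function names are illustrative only.

```python
# Physical constants (SI). The first two are exact by definition.
SPEED_OF_LIGHT = 299_792_458.0   # m/s
EV_TO_JOULE = 1.602176634e-19    # J per eV
AMU_TO_KG = 1.66053906660e-27    # kg per atomic mass unit (CODATA value)


def mass_decrease_kg(energy_ev: float) -> float:
    """Mass equivalent (kg) of an energy release, from E = (delta m) c^2."""
    return energy_ev * EV_TO_JOULE / SPEED_OF_LIGHT**2


def mass_decrease_u(energy_ev: float) -> float:
    """Same quantity expressed in atomic mass units."""
    return mass_decrease_kg(energy_ev) / AMU_TO_KG


FISSION_ENERGY_EV = 200e6     # assumed round figure for one fission
IONIZATION_ENERGY_EV = 13.6   # hydrogen electron binding energy, from the text

print(f"mass decrease per fission: {mass_decrease_u(FISSION_ENERGY_EV):.3f} u")
print(f"fission / chemical energy ratio: {FISSION_ENERGY_EV / IONIZATION_ENERGY_EV:.2e}")
```

With these inputs the mass decrease is roughly a fifth of an atomic mass unit, which is small next to the roughly 236 u of the uranium-235 nucleus plus neutron, while the energy ratio to the chemical example is on the order of ten million.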

Two typical fission reactions are shown below with average values of energy released and number of neutrons ejected:

Note that these equations are for fissions caused by slow-moving (thermal) neutrons. The average energy released and the number of neutrons ejected are functions of the incident neutron speed. Also, note that these equations exclude energy from neutrinos since these subatomic particles are extremely non-reactive and, therefore, rarely deposit their energy in the system.

Timescales of nuclear chain reactions

Prompt neutron lifetime

The prompt neutron lifetime, l, is the average time between the emission of neutrons and either their absorption in the system or their escape from the system. The neutrons that occur directly from fission are called "prompt neutrons," and the ones that are a result of radioactive decay of fission fragments are called "delayed neutrons".

The term lifetime is used because the emission of a neutron is often considered its "birth," and the subsequent absorption is considered its "death". For thermal (slow-neutron) fission reactors, the typical prompt neutron lifetime is on the order of $10^{-4}$ seconds, and for fast fission reactors, the prompt neutron lifetime is on the order of $10^{-7}$ seconds. These extremely short lifetimes mean that in 1 second, 10,000 to 10,000,000 neutron lifetimes can pass. The average (also referred to as the adjoint unweighted) prompt neutron lifetime takes into account all prompt neutrons regardless of their importance in the reactor core; the effective prompt neutron lifetime (referred to as the adjoint weighted over space, energy, and angle) refers to a neutron with average importance.

Mean generation time

The mean generation time, Λ, is the average time from a neutron emission to a capture that results in fission. The mean generation time is different from the prompt neutron lifetime because the mean generation time only includes neutron absorptions that lead to fission reactions (not other absorption reactions). The two times are related by the following formula:

$$\Lambda = \frac{l}{k}$$

In this formula, k is the effective neutron multiplication factor, described below.
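A short numerical sketch of the two timescales follows. It assumes the usual relation Λ = l / k between mean generation time and prompt neutron lifetime, and uses the order-of-magnitude lifetimes quoted above for thermal and fast reactors; the function names are illustrative.

```python
def lifetimes_per_second(prompt_lifetime_s: float) -> float:
    """Number of successive prompt neutron lifetimes that fit into one second."""
    if prompt_lifetime_s <= 0.0:
        raise ValueError("prompt neutron lifetime must be positive")
    return 1.0 / prompt_lifetime_s


def mean_generation_time(prompt_lifetime_s: float, k: float) -> float:
    """Mean generation time, assuming the relation Lambda = l / k."""
    if k <= 0.0:
        raise ValueError("multiplication factor must be positive")
    return prompt_lifetime_s / k


THERMAL_LIFETIME_S = 1e-4  # order of magnitude quoted for thermal reactors
FAST_LIFETIME_S = 1e-7     # order of magnitude quoted for fast reactors

for label, lifetime in (("thermal", THERMAL_LIFETIME_S), ("fast", FAST_LIFETIME_S)):
    print(f"{label}: {lifetimes_per_second(lifetime):.0e} lifetimes per second, "
          f"generation time at k = 1 is {mean_generation_time(lifetime, 1.0):.0e} s")
```

At k = 1 the two times coincide; for k greater than 1 the mean generation time is slightly shorter than the prompt neutron lifetime, and for k less than 1 it is longer.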

Effective neutron multiplication factor

The effective neutron multiplication factor, k, is the average number of neutrons from one fission that cause another fission. The remaining neutrons either are absorbed in non-fission reactions or leave the system without being absorbed. The value of k determines how a nuclear chain reaction proceeds:

- k < 1 (subcriticality): The system cannot sustain a chain reaction, and any beginning of a chain reaction dies out over time. For every fission that is induced in the system, an average total of 1/(1 − k) fissions occur.

- k = 1 (criticality): Every fission causes an average of one more fission, leading to a fission (and power) level that is constant. Nuclear power plants operate with k = 1 unless the power level is being increased or decreased.

- k > 1 (supercriticality): For every fission in the material, it is likely that there will be "k" fissions after the next mean generation time (Λ). The result is that the number of fission reactions increases exponentially, according to the equation $e^{(k-1)t/\Lambda}$, where t is the elapsed time. Nuclear weapons are designed to operate under this state. There are two subdivisions of supercriticality: prompt and delayed.
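The three regimes can be illustrated numerically. The sketch below assumes the simplest picture, in which each generation has k times as many fissions as the previous one, giving a geometric series that sums to 1/(1 − k) when k < 1 and growth of the form e^((k − 1)t/Λ) when k > 1. The function names and sample values are illustrative.

```python
import math


def total_fissions_subcritical(k: float) -> float:
    """Average total number of fissions triggered by one initial fission (k < 1)."""
    if not 0.0 <= k < 1.0:
        raise ValueError("the closed form 1 / (1 - k) only holds for 0 <= k < 1")
    return 1.0 / (1.0 - k)


def fissions_by_generation(k: float, generations: int) -> list:
    """Expected fissions in each generation, starting from a single fission."""
    return [k**n for n in range(generations)]


def relative_fission_rate(k: float, elapsed_s: float, generation_time_s: float) -> float:
    """Fission rate relative to t = 0, assuming exp((k - 1) * t / Lambda)."""
    return math.exp((k - 1.0) * elapsed_s / generation_time_s)


# Subcritical: the generation-by-generation sum approaches 1 / (1 - k).
print(sum(fissions_by_generation(0.9, 500)), total_fissions_subcritical(0.9))

# Critical: the rate stays constant. Supercritical: it grows exponentially.
print(relative_fission_rate(1.0, 1.0, 1e-4))
print(relative_fission_rate(1.001, 0.1, 1e-4))
```

The last line uses k = 1.001 with a generation time of 10⁻⁴ s, so the rate grows by a factor of about e in a tenth of a second. This ignores delayed neutrons, which is why the separate treatment of prompt and delayed supercriticality matters.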

When describing the kinetics and dynamics of nuclear reactors, and also in the practice of reactor operation, the concept of reactivity is used, which characterizes the deviation of the reactor from the critical state: ρ = (k − 1)/k. The inhour is a unit of reactivity of a nuclear reactor.
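As a small worked example of the definition ρ = (k − 1)/k, the sketch below converts between the multiplication factor and reactivity. The inverse relation k = 1/(1 − ρ) follows by rearranging the definition; the function names are illustrative.

```python
def reactivity(k: float) -> float:
    """Reactivity rho = (k - 1) / k; zero at criticality, negative when subcritical."""
    if k <= 0.0:
        raise ValueError("multiplication factor must be positive")
    return (k - 1.0) / k


def k_from_reactivity(rho: float) -> float:
    """Inverse relation k = 1 / (1 - rho), obtained by solving the definition for k."""
    if rho >= 1.0:
        raise ValueError("reactivity must be less than 1")
    return 1.0 / (1.0 - rho)


for k in (0.98, 1.0, 1.003):
    print(f"k = {k}: rho = {reactivity(k):+.5f}")
```

Near criticality ρ is approximately k − 1, which is why the two quantities are often used interchangeably for small deviations.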

In a nuclear reactor, k will actually oscillate from slightly less than 1 to slightly more than 1, due primarily to thermal effects (as more power is produced, the fuel rods warm and thus expand, lowering their capture ratio, and thus driving k lower). This leaves the average value of k at exactly 1. Delayed neutrons play an important role in the timing of these oscillations.

In an infinite medium, the multiplication factor may be described by the four factor formula; in a non-infinite medium, the multiplication factor may be described by the six factor formula.
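The two formulas named above can be sketched as plain products. In the usual textbook notation (an assumption here, since the article does not spell the factors out), the four factor formula is k∞ = η f p ε, with reproduction factor η, thermal utilization factor f, resonance escape probability p, and fast fission factor ε; the six factor formula multiplies this by the fast and thermal non-leakage probabilities. The numerical values below are illustrative placeholders for a thermal reactor, not data from this article.

```python
def k_infinity(eta: float, f: float, p: float, epsilon: float) -> float:
    """Four factor formula for an infinite medium: k_inf = eta * f * p * epsilon."""
    return eta * f * p * epsilon


def k_effective(eta: float, f: float, p: float, epsilon: float,
                fast_non_leakage: float, thermal_non_leakage: float) -> float:
    """Six factor formula: k_inf times the two non-leakage probabilities."""
    return k_infinity(eta, f, p, epsilon) * fast_non_leakage * thermal_non_leakage


# Illustrative placeholder values (assumed, not measured data).
factors = dict(eta=1.65, f=0.71, p=0.87, epsilon=1.02)

print(f"k_infinity  = {k_infinity(**factors):.4f}")
print(f"k_effective = {k_effective(**factors, fast_non_leakage=0.97, thermal_non_leakage=0.99):.4f}")
```

With these placeholder numbers the infinite medium would be slightly supercritical, while leakage from the finite system brings k just below 1, showing how geometry alone can decide whether a given material sustains a chain reaction.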

Prompt and delayed supercriticality

Not all neutrons are emitted as a direct product of fission; some are instead due to the radioactive decay of some of the fission fragments. The neutrons that occur directly from fission are called "prompt neutrons," and the ones that are a result of radioactive decay of fission fragments are called "delayed neutrons". The fraction of neutrons that are delayed is called β, and this fraction is typically less than 1% of all the neutrons in the chain reaction.

The delayed neutrons allow a nuclear reactor to respond several orders of magnitude more slowly than prompt neutrons alone would. Without delayed neutrons, changes in reaction rates in nuclear reactors would occur at speeds that are too fast for humans to control.
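A rough estimate shows where the "several orders of magnitude" come from. The sketch below uses a crude one-group approximation in which a fraction β of neutrons waits an extra mean delay before appearing, so the effective neutron lifetime is about l + β·τ. The values of β, the mean delay τ, and k are assumed illustrative numbers, and the estimate only applies for small reactivity, well below β.

```python
def effective_neutron_lifetime(prompt_lifetime_s: float, beta: float,
                               mean_delay_s: float) -> float:
    """Lifetime averaged over prompt and delayed neutrons (one-group estimate)."""
    prompt_part = (1.0 - beta) * prompt_lifetime_s
    delayed_part = beta * (prompt_lifetime_s + mean_delay_s)
    return prompt_part + delayed_part


def e_folding_time(k: float, lifetime_s: float) -> float:
    """Time for the fission rate to change by a factor of e, roughly l / (k - 1)."""
    if k == 1.0:
        return float("inf")
    return lifetime_s / (k - 1.0)


PROMPT_LIFETIME_S = 1e-4  # thermal-reactor order of magnitude from the text
BETA = 0.0065             # assumed delayed neutron fraction (below 1%)
MEAN_DELAY_S = 13.0       # assumed mean delay of delayed neutrons
K = 1.001                 # assumed slightly supercritical state

prompt_only = e_folding_time(K, PROMPT_LIFETIME_S)
with_delayed = e_folding_time(
    K, effective_neutron_lifetime(PROMPT_LIFETIME_S, BETA, MEAN_DELAY_S))
print(f"prompt neutrons only: {prompt_only:.2f} s")
print(f"with delayed neutrons: {with_delayed:.0f} s")
```

Under these assumptions the e-folding time stretches from about a tenth of a second to more than a minute, which is slow enough for control rods and thermal feedback to act.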

The region of supercriticality between k = 1 and k = 1/(1 − β) is known as delayed supercriticality (or delayed criticality). It is in this region that all nuclear power reactors operate. The region of supercriticality for k > 1/(1 − β) is known as prompt supercriticality (or prompt criticality), which is the region in which nuclear weapons operate.

The change in k needed to go from critical to prompt critical is defined as a dollar.
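The thresholds above translate directly into a small classifier. The sketch below labels a state from k and β, and expresses reactivity in dollars using the common convention of dividing ρ = (k − 1)/k by β; that convention and the sample value of β are assumptions, chosen because they give exactly one dollar at k = 1/(1 − β).

```python
def classify_state(k: float, beta: float) -> str:
    """Label the chain-reaction regime for multiplication factor k."""
    prompt_threshold = 1.0 / (1.0 - beta)
    if k < 1.0:
        return "subcritical"
    if k == 1.0:
        return "critical"
    if k < prompt_threshold:
        return "delayed supercritical"
    # The boundary k = 1 / (1 - beta) itself is grouped with the prompt regime.
    return "prompt supercritical"


def reactivity_in_dollars(k: float, beta: float) -> float:
    """Reactivity (k - 1) / k in units of beta (assumed convention for the dollar)."""
    return ((k - 1.0) / k) / beta


BETA = 0.0065  # assumed delayed neutron fraction, below 1% as stated in the text

for k in (0.99, 1.0, 1.003, 1.01):
    print(f"k = {k}: {classify_state(k, BETA)}, "
          f"{reactivity_in_dollars(k, BETA):+.2f} dollars")
```

At k = 1/(1 − β) the reactivity equals β, so the prompt-critical boundary sits at one dollar under this convention.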

Nuclear weapons application of neutron multiplication

Nuclear fission weapons require a mass of fissile fuel that is prompt supercritical.

For a given mass of fissile material the value of k can be increased by increasing the density. Since the probability per distance traveled for a neutron to collide with a nucleus is proportional to the material density, increasing the density of a fissile material can increase k. This concept is utilized in the implosion method for nuclear weapons. In these devices, the nuclear chain reaction begins after increasing the density of the fissile material with a conventional explosive.

In the gun-type fission weapon, two subcritical pieces of fuel are rapidly brought together. The value of k for a combination of two masses is always greater than that of its components. The magnitude of the difference depends on distance, as well as the physical orientation.

The value of k can also be increased by using a neutron reflector surrounding the fissile material.

Once the mass of fuel is prompt supercritical, the power increases exponentially. However, the exponential power increase cannot continue for long since k decreases when the amount of fissile material that is left decreases (i.e. it is consumed by fissions). Also, the geometry and density are expected to change during detonation since the remaining fissile material is torn apart by the explosion.

Predetonation

If two pieces of subcritical material are not brought together fast enough, nuclear predetonation can occur, whereby a smaller explosion than expected will blow the bulk of the material apart.

Detonation of a nuclear weapon involves bringing fissile material into its optimal supercritical state very rapidly. During part of this process, the assembly is supercritical, but not yet in an optimal state for a chain reaction. Free neutrons, in particular from spontaneous fissions, can cause the device to undergo a preliminary chain reaction that destroys the fissile material before it is ready to produce a large explosion, which is known as predetonation.

To keep the probability of predetonation low, the duration of the non-optimal assembly period is minimized and fissile and other materials are used which have low spontaneous fission rates. In fact, the combination of materials has to be such that it is unlikely that there is even a single spontaneous fission during the period of supercritical assembly. In particular, the gun method cannot be used with plutonium.

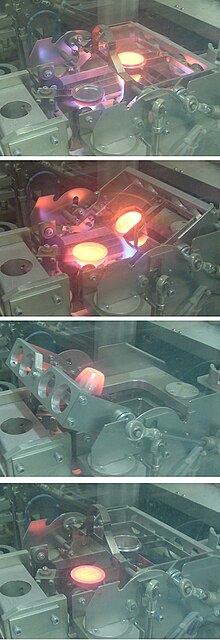

Nuclear power plants and control of chain reactions

Chain reactions naturally give rise to reaction rates that grow (or shrink) exponentially, whereas a nuclear power reactor needs to be able to hold the reaction rate reasonably constant. To maintain this control, the chain reaction criticality must have a slow enough time-scale to permit intervention by additional effects (e.g., mechanical control rods or thermal expansion). Consequently, all nuclear power reactors (even fast-neutron reactors) rely on delayed neutrons for their criticality. An operating nuclear power reactor fluctuates between being slightly subcritical and slightly delayed-supercritical, but must always remain below prompt-critical.

It is impossible for a nuclear power plant to undergo a nuclear chain reaction that results in an explosion comparable in power to that of a nuclear weapon, but even low-powered explosions due to uncontrolled chain reactions, which would be considered "fizzles" in a bomb, may still cause considerable damage and meltdown in a reactor. For example, the Chernobyl disaster involved a runaway chain reaction, but the result was a low-powered steam explosion from the relatively small release of heat, as compared with a bomb. However, the reactor complex was destroyed by the heat, as well as by ordinary burning of the graphite exposed to air. Such steam explosions would be typical of the very diffuse assembly of materials in a nuclear reactor, even under the worst conditions.

In addition, other steps can be taken for safety. For example, power plants licensed in the United States require a negative void coefficient of reactivity (this means that if water is removed from the reactor core, the nuclear reaction will tend to shut down, not increase). This eliminates the possibility of the type of accident that occurred at Chernobyl (which was due to a positive void coefficient). However, nuclear reactors are still capable of causing smaller explosions even after complete shutdown, as was the case in the Fukushima Daiichi nuclear disaster. In such cases, residual decay heat from the core may cause high temperatures if there is loss of coolant flow, even a day after the chain reaction has been shut down (see SCRAM). This may cause a chemical reaction between water and fuel that produces hydrogen gas, which can explode after mixing with air, with severe contamination consequences, since fuel rod material may still be exposed to the atmosphere from this process. However, such explosions do not happen during a chain reaction, but rather as a result of energy from radioactive beta decay, after the fission chain reaction has been stopped.