Soap is a salt of a fatty acid used in a variety of cleansing and lubricating products. In a domestic setting, soaps are surfactants usually used for washing, bathing, and other types of housekeeping. In industrial settings, soaps are used as thickeners, components of some lubricants, and precursors to catalysts.

When used for cleaning, soap solubilizes particles and grime, which can then be separated from the article being cleaned. In hand washing, soap acts as a surfactant: when lathered with a little water, it kills microorganisms by disorganizing their membrane lipid bilayer and denaturing their proteins. It also emulsifies oils, enabling them to be carried away by running water.

Soap is created by mixing fats and oils with a base. Humans have used soap for millennia; evidence exists for the production of soap-like materials in ancient Babylon around 2800 BC.

History

Ancient Middle East

It is uncertain who first invented soap. The earliest recorded evidence of the production of soap-like materials dates back to around 2800 BC in ancient Babylon. A formula for making soap was written on a Sumerian clay tablet around 2500 BC; the soap was produced by heating a mixture of oil and wood ash, the earliest recorded chemical reaction, and used for washing woolen clothing.

The Ebers papyrus (Egypt, 1550 BC) indicates that the ancient Egyptians used soap as a medicine and combined animal fats or vegetable oils with a soda ash substance called trona to create their soaps. Egyptian documents mention that a similar substance was used in the preparation of wool for weaving.

In the reign of Nabonidus (556–539 BC), a recipe for soap consisted of uhulu [ashes], cypress [oil] and sesame [seed oil] "for washing the stones for the servant girls".

In the Southern Levant, the ashes of barilla plants, such as species of Salsola, saltwort (Seidlitzia rosmarinus) and Anabasis, were used in soap production; these ashes were known as potash. Traditionally, olive oil rather than animal lard was used throughout the Levant, and it was boiled in a copper cauldron for several days. As the boiling progressed, alkali ashes and smaller quantities of quicklime were added and the mixture was stirred constantly. Lard, when used, required constant stirring while kept lukewarm until it began to trace. Once the brew began to thicken, it was poured into a mold and left to cool and harden for two weeks. After hardening, it was cut into smaller cakes. Aromatic herbs such as yarrow leaves, lavender, and germander were often added to the rendered soap to impart their fragrance.

Roman Empire

Pliny the Elder, whose writings chronicle life in the first century AD, describes soap as "an invention of the Gauls". The word sapo, Latin for soap, was likely borrowed from an early Germanic language and is cognate with Latin sebum, "tallow". It first appears in Pliny the Elder's account, Historia Naturalis, which discusses the manufacture of soap from tallow and ashes. There he mentions its use in the treatment of scrofulous sores, as well as among the Gauls as a dye to redden hair, which the men in Germania were more likely to use than women. The Romans avoided washing with harsh soaps before encountering the milder soaps used by the Gauls around 58 BC. Aretaeus of Cappadocia, writing in the 2nd century AD, observes among "Celts, which are men called Gauls, those alkaline substances that are made into balls [...] called soap". The Romans' preferred method of cleaning the body was to massage oil into the skin and then scrape away both the oil and any dirt with a strigil. The standard strigil design is a curved blade with a handle, all made of metal.

The 2nd-century AD physician Galen describes soap-making using lye and prescribes washing to carry away impurities from the body and clothes. The use of soap for personal cleanliness became increasingly common in this period. According to Galen, the best soaps were Germanic, and soaps from Gaul were second best. Zosimos of Panopolis, circa 300 AD, describes soap and soapmaking.

Ancient China

A detergent similar to soap was manufactured in ancient China from the seeds of Gleditsia sinensis. Another traditional detergent is a mixture of pig pancreas and plant ash called zhuyizi (simplified Chinese: 猪胰子; traditional Chinese: 豬胰子; pinyin: zhūyízǐ). Soap made of animal fat did not appear in China until the modern era. Soap-like detergents were not as popular as ointments and creams.

Islamic Golden Age

Hard toilet soap with a pleasant smell was produced in the Middle East during the Islamic Golden Age, when soap-making became an established industry. Recipes for soap-making are described by Muhammad ibn Zakariya al-Razi (c. 865–925), who also gave a recipe for producing glycerine from olive oil. In the Middle East, soap was produced from the interaction of fatty oils and fats with alkali. In Syria, soap was produced using olive oil together with alkali and lime. Soap was exported from Syria to other parts of the Muslim world and to Europe.

A 12th-century document describes the process of soap production. It mentions the key ingredient, alkali, a substance that later became crucial to modern chemistry and whose name derives from al-qaly, or "ashes".

By the 13th century, the manufacture of soap in the Middle East had become a major cottage industry, with sources in Nablus, Fes, Damascus, and Aleppo.

Medieval Europe

Soapmakers in Naples were members of a guild in the late sixth century (then under the control of the Eastern Roman Empire), and in the eighth century, soap-making was well known in Italy and Spain. The Carolingian capitulary De Villis, dating to around 800, representing the royal will of Charlemagne, mentions soap as being one of the products the stewards of royal estates are to tally. The lands of Medieval Spain were a leading soapmaker by 800, and soapmaking began in the Kingdom of England about 1200. Soapmaking is mentioned both as "women's work" and as the produce of "good workmen" alongside other necessities, such as the produce of carpenters, blacksmiths, and bakers.

In Europe, soap in the 9th century was produced from animal fats and had an unpleasant smell. This changed when olive oil began to be used in soap formulas instead, after which much of Europe's soap production moved to the Mediterranean olive-growing regions. Hard toilet soap was introduced to Europe by Arabs and gradually spread as a luxury item. It was often perfumed. By the 15th century, the manufacture of soap in Christendom had become virtually industrialized, with sources in Antwerp, Castile, Marseille, Naples and Venice.

16th–17th century

In France, by the second half of the 16th century, the semi-industrialized professional manufacture of soap was concentrated in a few centers of Provence (Toulon, Hyères, and Marseille), which supplied the rest of France. In Marseille, by 1525, production was concentrated in at least two factories, and soap production at Marseille tended to eclipse the other Provençal centers. English manufacture tended to concentrate in London.

Finer soaps were later produced in Europe from the 17th century, using vegetable oils (such as olive oil) as opposed to animal fats. Many of these soaps are still produced, both industrially and by small-scale artisans. Castile soap is a popular example of the vegetable-only soaps derived from the oldest "white soap" of Italy. In 1634 Charles I granted the newly formed Society of Soapmakers a monopoly in soap production; the Society produced certificates from 'foure Countesses, and five Viscountesses, and divers other Ladies and Gentlewomen of great credite and quality, besides common Laundresses and others', testifying that 'the New White Soap washeth whiter and sweeter than the Old Soap'.

During the Restoration era (February 1665 – August 1714) a soap tax was introduced in England, which meant that until the mid-1800s, soap was a luxury, used regularly only by the well-to-do. The soap manufacturing process was closely supervised by revenue officials who made sure that soapmakers' equipment was kept under lock and key when not being supervised. Moreover, soap could not be produced by small makers because of a law that stipulated that soap boilers must manufacture a minimum quantity of one imperial ton at each boiling, which placed the process beyond the reach of the average person. The soap trade was boosted and deregulated when the tax was repealed in 1853.

Modern period

Industrially manufactured bar soaps became available in the late 18th century, as advertising campaigns in Europe and America promoted popular awareness of the relationship between cleanliness and health. In modern times, the use of soap has become commonplace in industrialized nations due to a better understanding of the role of hygiene in reducing the population size of pathogenic microorganisms.

[Images: advertising for Dobbins' medicated toilet soap; a 1922 magazine advertisement for Palmolive Soap; liquid soap]

Until the Industrial Revolution, soapmaking was conducted on a small scale and the product was rough. In 1780, James Keir established a chemical works at Tipton, for the manufacture of alkali from the sulfates of potash and soda, to which he afterwards added a soap manufactory. The method of extraction proceeded on a discovery of Keir's. In 1790, Nicolas Leblanc discovered how to make alkali from common salt. Andrew Pears started making a high-quality, transparent soap, Pears soap, in 1807 in London. His son-in-law, Thomas J. Barratt, became the brand manager (the first of its kind) for Pears in 1865. In 1882, Barratt recruited English actress and socialite Lillie Langtry to become the poster-girl for Pears soap, making her the first celebrity to endorse a commercial product.

William Gossage produced low-priced, good-quality soap from the 1850s. Robert Spear Hudson began manufacturing a soap powder in 1837, initially by grinding the soap with a mortar and pestle. American manufacturer Benjamin T. Babbitt introduced marketing innovations that included the sale of bar soap and distribution of product samples. William Hesketh Lever and his brother, James, bought a small soap works in Warrington in 1886 and founded what is still one of the largest soap businesses, formerly called Lever Brothers and now called Unilever. These soap businesses were among the first to employ large-scale advertising campaigns.

Liquid soap

Liquid soap was not invented until the nineteenth century; in 1865, William Sheppard patented a liquid version of soap. In 1898, B.J. Johnson developed a soap derived from palm and olive oils; his company, the B.J. Johnson Soap Company, introduced "Palmolive" brand soap that same year. The new brand became popular so rapidly that the B.J. Johnson Soap Company changed its name to Palmolive.

In the early 1900s, other companies began to develop their own liquid soaps. Such products as Pine-Sol and Tide appeared on the market, making the process of cleaning things other than skin, such as clothing, floors, and bathrooms, much easier.

Liquid soap also works better for more traditional or non-machine washing methods, such as using a washboard.

Types

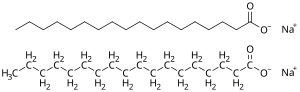

Since they are salts of fatty acids, soaps have the general formula $(\mathrm{RCO_2^-})_n\mathrm{M}^{n+}$, where R is an alkyl, M is a metal and n is the charge of the cation. The major classification of soaps is determined by the identity of $\mathrm{M}^{n+}$. When M is Na (sodium) or K (potassium), the soaps are called toilet soaps, used for handwashing. Many metal dications ($\mathrm{Mg^{2+}}$, $\mathrm{Ca^{2+}}$, and others) give metallic soap. When M is Li, the result is lithium soap (e.g., lithium stearate), which is used in high-performance greases. A cation from an organic base such as ammonium can be used instead of a metal; ammonium nonanoate is an ammonium-based soap that is used as an herbicide.

When used in hard water, soap does not lather well and a scum of stearate, a common ingredient in soap, forms as an insoluble precipitate.
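The scum formation can be written as a precipitation reaction. The equation below is a sketch under two assumptions not stated in the text: the soap is sodium stearate, and the hardness ion is calcium (magnesium behaves analogously).

$$
2\,\mathrm{C_{17}H_{35}CO_2Na} + \mathrm{Ca^{2+}} \longrightarrow (\mathrm{C_{17}H_{35}CO_2})_2\mathrm{Ca}\downarrow + 2\,\mathrm{Na^{+}}
$$

Each calcium ion removes two stearate anions from solution, which is why lathering is poor until the hardness ions have been consumed.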

Non-toilet soaps

Soaps are key components of most lubricating greases and thickeners. Greases are usually emulsions of calcium soap or lithium soap and mineral oil. Many other metallic soaps are also useful, including those of aluminium, sodium, and mixtures thereof. Such soaps are also used as thickeners to increase the viscosity of oils. In ancient times, lubricating greases were made by the addition of lime to olive oil.

Metal soaps are also included in modern artists' oil paint formulations as rheology modifiers.

Production of metallic soaps

Most metal soaps are prepared by hydrolysis:
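As a hedged illustration of such a hydrolysis, assume a generic triglyceride and calcium hydroxide as the base (both are assumptions; the text does not name the reagents). The balanced reaction would then read:

$$
2\,(\mathrm{RCO_2})_3\mathrm{C_3H_5} + 3\,\mathrm{Ca(OH)_2} \longrightarrow 3\,(\mathrm{RCO_2})_2\mathrm{Ca} + 2\,\mathrm{C_3H_5(OH)_3}
$$

Metallic soaps can also be obtained by neutralizing a free fatty acid with a metal oxide, for example $2\,\mathrm{RCO_2H} + \mathrm{CaO} \longrightarrow (\mathrm{RCO_2})_2\mathrm{Ca} + \mathrm{H_2O}$.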

Toilet soaps

In a domestic setting, "soap" usually refers to what is technically called a toilet soap, used for household and personal cleaning. When used for cleaning, soap solubilizes particles and fats/oils, which can then be separated from the article being cleaned. The insoluble oil/fat molecules become associated inside micelles, tiny spheres formed from soap molecules with polar hydrophilic (water-attracting) groups on the outside that encase a lipophilic (fat-attracting) pocket. This pocket shields the oil/fat molecules from the water, making them soluble. Anything that is soluble will be washed away with the water.

Production of toilet soaps

The production of toilet soaps usually entails saponification of triglycerides, which are vegetable or animal oils and fats. An alkaline solution (often lye or sodium hydroxide) induces saponification whereby the triglyceride fats first hydrolyze into salts of fatty acids. Glycerol (glycerin) is liberated. The glycerin can remain in the soap product as a softening agent, although it is sometimes separated.
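The overall saponification described above can be summarized in one equation. This is a sketch assuming sodium hydroxide as the alkali and a triglyceride whose three fatty acid chains are all the same group R (real fats carry a mixture of chains):

$$
(\mathrm{RCO_2})_3\mathrm{C_3H_5} + 3\,\mathrm{NaOH} \longrightarrow 3\,\mathrm{RCO_2Na} + \mathrm{C_3H_5(OH)_3}
$$

One mole of triglyceride consumes three moles of alkali and liberates one mole of glycerol, $\mathrm{C_3H_5(OH)_3}$.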

The type of alkali metal used determines the kind of soap product. Sodium soaps, prepared from sodium hydroxide, are firm, whereas potassium soaps, derived from potassium hydroxide, are softer or often liquid. Historically, potassium hydroxide was extracted from the ashes of bracken or other plants. Lithium soaps also tend to be hard. These are used exclusively in greases.

For making toilet soaps, triglycerides (oils and fats) are derived from coconut, olive, or palm oils, as well as tallow. Triglyceride is the chemical name for the triesters of fatty acids and glycerin. Tallow, i.e., rendered fat, is the most available triglyceride from animals. Each species offers quite different fatty acid content, resulting in soaps of distinct feel. The seed oils give softer but milder soaps. Soap made from pure olive oil, sometimes called Castile soap or Marseille soap, is reputed for its particular mildness. The term "Castile" is also sometimes applied to soaps from a mixture of oils with a high percentage of olive oil.

| Fat or oil | Lauric acid (C12 saturated) | Myristic acid (C14 saturated) | Palmitic acid (C16 saturated) | Stearic acid (C18 saturated) | Oleic acid (C18 monounsaturated) | Linoleic acid (C18 diunsaturated) | Linolenic acid (C18 triunsaturated) |
|---|---|---|---|---|---|---|---|
| Tallow | 0 | 4 | 28 | 23 | 35 | 2 | 1 |
| Coconut oil | 48 | 18 | 9 | 3 | 7 | 2 | 0 |
| Palm kernel oil | 46 | 16 | 8 | 3 | 12 | 2 | 0 |
| Palm oil | 0 | 1 | 44 | 4 | 37 | 9 | 0 |
| Laurel oil | 54 | 0 | 0 | 0 | 15 | 17 | 0 |
| Olive oil | 0 | 0 | 11 | 2 | 78 | 10 | 0 |
| Canola oil | 0 | 1 | 3 | 2 | 58 | 9 | 23 |
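One way to compare the fats in the table is by the share of saturated fatty acids among those listed. The Python sketch below copies the table values and assumes they are relative amounts (most likely percentages) on a common scale; the names `COMPOSITION`, `saturated_share`, and `rank_by_saturation` are hypothetical helpers introduced only for this illustration.

```python
# Fatty acid values copied from the table above.
# Column order: lauric, myristic, palmitic, stearic, oleic, linoleic, linolenic.
FATTY_ACIDS = ("lauric", "myristic", "palmitic", "stearic",
               "oleic", "linoleic", "linolenic")
SATURATED = {"lauric", "myristic", "palmitic", "stearic"}

COMPOSITION = {
    "Tallow": (0, 4, 28, 23, 35, 2, 1),
    "Coconut oil": (48, 18, 9, 3, 7, 2, 0),
    "Palm kernel oil": (46, 16, 8, 3, 12, 2, 0),
    "Palm oil": (0, 1, 44, 4, 37, 9, 0),
    "Laurel oil": (54, 0, 0, 0, 15, 17, 0),
    "Olive oil": (0, 0, 11, 2, 78, 10, 0),
    "Canola oil": (0, 1, 3, 2, 58, 9, 23),
}


def saturated_share(fat):
    """Return the saturated fraction of the fatty acids listed for one fat.

    Rows do not sum to exactly 100, so the share is normalized by the
    row total rather than by 100.
    """
    values = COMPOSITION[fat]
    saturated = sum(v for name, v in zip(FATTY_ACIDS, values)
                    if name in SATURATED)
    return saturated / sum(values)


def rank_by_saturation():
    """Return fat names ordered from most to least saturated."""
    return sorted(COMPOSITION, key=saturated_share, reverse=True)


if __name__ == "__main__":
    for fat in rank_by_saturation():
        print(f"{fat}: {saturated_share(fat):.2f}")
```

With these values, coconut oil ranks as the most saturated and canola oil as the least, with olive oil close to the bottom.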

Soap-making for hobbyists

A variety of methods are available for hobbyists to make soap. Most soapmakers use processes in which the glycerol remains in the product and saponification continues for many days after the soap is poured into molds. The glycerol also remains in the hot process method, but at the high temperature employed the reaction is practically complete in the kettle, before the soap is poured into molds. This simple and quick process is employed in small factories all over the world.

Handmade soap from the cold process also differs from industrially made soap in that an excess of fat or oil (coconut oil, Cazumbal process) is used, beyond that needed to consume the alkali (in a cold-pour process, this excess fat is called "superfatting"), and the glycerol left in acts as a moisturizing agent. However, the glycerine also makes the soap softer. The addition of glycerol and processing of this soap produces glycerin soap. Superfatted soap is more skin-friendly than soap without extra fat, although it can leave a "greasy" feel. Sometimes an emollient, such as jojoba oil or shea butter, is added. Sand or pumice may be added to produce a scouring soap. The scouring agents serve to remove dead cells from the skin surface being cleaned, a process called exfoliation.
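The arithmetic behind superfatting can be sketched in a few lines of Python: compute the alkali needed to saponify all of the fat, then reduce it by the chosen superfat fraction so that some fat is left unreacted. The saponification values in `SAP_NAOH` are approximate, illustrative assumptions (grams of NaOH per gram of fat) and do not come from the text, and `lye_for_recipe` is a hypothetical helper. This is not a recipe; real formulations should rely on supplier data.

```python
# ASSUMPTION: approximate NaOH saponification values in g NaOH per g of fat.
# These are illustrative round numbers, not taken from the article.
SAP_NAOH = {
    "olive oil": 0.135,
    "coconut oil": 0.183,
    "tallow": 0.141,
}


def lye_for_recipe(oils_g, superfat=0.05):
    """Return grams of NaOH for a recipe, reduced by the superfat fraction.

    oils_g   -- mapping of fat name to mass in grams
    superfat -- fraction of fat intended to remain unsaponified (0 <= x < 1)
    """
    if not 0 <= superfat < 1:
        raise ValueError("superfat must be in the range [0, 1)")
    # Alkali needed to saponify every gram of fat in the recipe.
    full_amount = sum(SAP_NAOH[name] * grams for name, grams in oils_g.items())
    # Using less alkali than this leaves the excess fat in the finished soap.
    return full_amount * (1 - superfat)


if __name__ == "__main__":
    recipe = {"olive oil": 700, "coconut oil": 300}
    print(f"NaOH needed: {lye_for_recipe(recipe, superfat=0.05):.1f} g")
```

Under these assumed values, a recipe of 700 g olive oil and 300 g coconut oil at 5% superfat calls for about 141.9 g of NaOH, compared with 149.4 g for complete saponification.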

To make antibacterial soap, compounds such as triclosan or triclocarban can be added. There is some concern that use of antibacterial soaps and other products might encourage antimicrobial resistance in microorganisms.